Introduction to Statistics and Error Analysis...


Physics116C, 4/14/06

D. Pellett

Introduction to Statistics and Error Analysis II

References: Data Reduction and Error Analysis for the Physical Sciences by Bevington and Robinson; Particle Data Group notes on probability and statistics, etc., online at http://pdg.lbl.gov/2004/reviews/contents_sports.html (Reference is S. Eidelman et al., Phys. Lett. B 592, 1 (2004))

Any original presentations copyright D. Pellett 2006

1

Topics

• Propagation of errors examples

• Background subtraction

• Error for x = a ln(bu)

• Estimates of mean and error

• method of maximum likelihood and error of the mean

• Weighted mean, error of weighted mean

• Confidence intervals

• Chi-square (χ2) distribution and degrees of freedom (ν)

• Histogram χ2

• Format for lab writeup

• Next - least squares fit to a straight line and errors of parameters

2

Overview: Propagation of Errors

• Brief overview

• Suppose we have x = f(u,v) and u and v are uncorrelated random variables with Gaussian distributions.

• Expand f in a Taylor’s series around x0 = f(u0,v0), where u0, v0 are the mean values of u and v, keeping the lowest order terms:

$$\Delta x \equiv x - x_0 = \left(\frac{\partial f}{\partial u}\right)\Delta u + \left(\frac{\partial f}{\partial v}\right)\Delta v$$

• The distribution of ∆x is a bivariate distribution in ∆u and ∆v. Under suitable conditions (see Bevington Ch. 3) we can approximate σx (the standard deviation of ∆x) by

$$\sigma_x^2 \approx \left(\frac{\partial f}{\partial u}\right)^2 \sigma_u^2 + \left(\frac{\partial f}{\partial v}\right)^2 \sigma_v^2$$
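As a rough illustration of the propagation formula, the sketch below evaluates the partial derivatives numerically and applies it to the two examples named in the topic list, x = a ln(bu) and a background subtraction x = u − v. The helper name `propagate`, the step size, and all numerical values are assumptions made up for this example; they are not taken from the lecture.

```python
import math

def propagate(f, means, sigmas, rel_step=1e-6):
    """First-order sigma of f for uncorrelated inputs.

    Partial derivatives are estimated with central differences
    (an illustrative choice; analytic derivatives work equally well).
    """
    variance = 0.0
    for i, (m, s) in enumerate(zip(means, sigmas)):
        h = rel_step * (abs(m) if m != 0 else 1.0)
        up = list(means)
        dn = list(means)
        up[i] = m + h
        dn[i] = m - h
        dfdx = (f(*up) - f(*dn)) / (2.0 * h)
        variance += (dfdx * s) ** 2
    return math.sqrt(variance)

# Hypothetical values for x = a ln(bu); a and b are treated as exact constants.
a, b = 2.0, 3.0
u0, sigma_u = 5.0, 0.1
sigma_x_numeric = propagate(lambda u: a * math.log(b * u), [u0], [sigma_u])
sigma_x_analytic = abs(a) * sigma_u / abs(u0)  # from dx/du = a/u

# Hypothetical background subtraction x = u - v with counting errors sqrt(N).
signal_plus_bkg, bkg = 100.0, 36.0
sigma_diff = propagate(lambda u, v: u - v,
                       [signal_plus_bkg, bkg],
                       [math.sqrt(signal_plus_bkg), math.sqrt(bkg)])

print(sigma_x_numeric, sigma_x_analytic, sigma_diff)
```

For x = a ln(bu) the formula reduces to σx = |a| σu/|u|, and for a difference it reduces to σx² = σu² + σv², which is what the numerical version should reproduce.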

3

Bevington Problem 2.8 Solution

• Cars at fork in road: two choices, right or left; p=0.75 for right “on a typical day.”

• Binomial distribution, n = 1035 cars

• Mean μ = np = 1035 × 0.75 = 776.25, σ = √(npq) = 13.9

• x = 752 cars turned right on a particular day

• Is this consistent with being a typical day?

• Find probability for a deviation from the mean this large or larger, either positive or negative

• Could sum PB from 0 to 752 and from 800 to 1035

• Can approximate with Gaussian since n, x large, and x is still many σ less than n (about 19σ).

2.8) There are two discrete choices with a fixed probability for each choice, so this is an example of a binomial distribution. For a total of 1035 cars, we know that on a typical day 75% (776.25) of people go right, 25% left. On this particular day 752 went right, so let’s call a car going to the right a success. Let’s assume the 75% probability is very well established. Thus,

$$P_B(x; 1035, 0.75) = \frac{1035!}{x!\,(1035-x)!}\left(\frac{3}{4}\right)^{x}\left(\frac{1}{4}\right)^{1035-x}$$

where x is the number of cars going to the right.

We are supposed to find the probability of getting a result as far or farther from the mean (assume the deviation can be less than or more than the mean). This would require summing this ugly function from 0 to 752 and 800 to 1035 (or one minus the sum from 753 to 799). Since n ≫ 1 and x ≫ 1, we can approximate the binomial with a Gaussian if we are not too near x = n. The standard deviation is given by σ = √(npq) = 13.9, so the mean is (1035 − 776.25)σ/13.9 ≈ 19σ from n = 1035. We can approximate this with a Gaussian distribution.

It is convenient to transform this to a unit normal distribution in the variable z = (x − μ)/σ. This has μ = 0 and σ = 1. Since we allow deviations in either direction from the mean, our limit is |z| = |752 − 776.25|/13.9 = 1.745. The probability for falling inside the interval −1.745 < z < 1.745 for a unit normal distribution is given in Table C.2 of Bevington as 0.9190 (using interpolation). Thus the probability of exceeding 1.745σ from the mean is 1 − 0.9190 = 0.081, so a deviation this large or larger would be expected on 8.1% of typical days.

You could also use the Gaussian cumulative distribution function in LabVIEW (called “Normal Dist” in the accompanying figure). This calculates

$$P(z \le -1.745) = \int_{-\infty}^{-1.745} \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{1}{2}z^2\right) dz = 0.0405$$

so the probability of a deviation this large or larger in either direction is 2(0.0405) = 0.081, as above.
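The direct binomial sum mentioned in the solution is easy to do numerically, so here is a minimal sketch of it for comparison with the Gaussian estimate. The function name `binom_pmf` is an assumption for this example; the probabilities are computed through logarithms (`math.lgamma`) to avoid very large intermediate factorials.

```python
import math

def binom_pmf(x, n, p):
    """Binomial probability P_B(x; n, p), evaluated via log-gamma."""
    log_pmf = (math.lgamma(n + 1) - math.lgamma(x + 1) - math.lgamma(n - x + 1)
               + x * math.log(p) + (n - x) * math.log(1.0 - p))
    return math.exp(log_pmf)

n, p = 1035, 0.75

# Two tails as stated in the solution: 0..752 and 800..1035.
exact_tail = (sum(binom_pmf(x, n, p) for x in range(0, 753))
              + sum(binom_pmf(x, n, p) for x in range(800, n + 1)))

# Central part 753..799, used here only as a consistency check.
central = sum(binom_pmf(x, n, p) for x in range(753, 800))

print("exact two-sided tail probability:", exact_tail)
print("tails + central (should be 1):   ", exact_tail + central)
```

Because the binomial is discrete and slightly asymmetric, the exact sum need not agree with the Gaussian value to all digits; the comparison mainly shows how good the approximation is for these n and p.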


4

Bevington 2.8 Solution (continued)

• Use Gaussian approximation with same μ, σ.

• Transform interval to unit normal distribution in z = (x − μ)/σ

• Limits of interval ±z, with |z| = |752 − 776.25|/13.9 = 1.745

• Probability for z within this interval given in Table C.2 of Bevington (probability of being within ±1.745σ of μ): P(−1.745 < z ≤ 1.745) = 0.9190

• Probability of a deviation this large or larger on a typical day = 1 − P = 0.081 (or 8.1%)

• Confidence interval: the above interval is a 91.9% confidence interval for z

• z should lie within this interval 91.9% of the time.

• Larger probability interval required to exclude hypothesis

• ±3σ would correspond to a 99.73% confidence interval

• Be careful: distribution might not be truly Gaussian


5

Bevington 2.8 (concluded)

• You could also use the cumulative distribution function to find P

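• This is available in LabVIEW (the “Normal Dist” function), which gives P(z ≤ −1.745) = 0.0405, so 1 − P = 2(0.0405) = 0.081, as above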
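Outside LabVIEW, the same cumulative distribution function can be sketched with the error function from Python's standard library. The function names `normal_cdf` and `coverage` are assumptions for this example; the numbers are the ones used in the problem.

```python
import math

def normal_cdf(z):
    """Unit normal cumulative distribution function, P(Z <= z)."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))

def coverage(k):
    """Probability that a unit normal variable lies within +-k."""
    return math.erf(k / math.sqrt(2.0))

z = abs(752 - 776.25) / 13.9      # limit used in the problem, about 1.745
lower_tail = normal_cdf(-z)       # close to the 0.0405 quoted above
two_sided = 2.0 * lower_tail      # close to 0.081

print("z =", z)
print("P(Z <= -z) =", lower_tail)
print("two-sided tail =", two_sided)
print("coverage of +-3 sigma =", coverage(3.0))
```

The last line gives the coverage of a ±3σ interval, which can be compared with the 99.73% quoted for that interval.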


6

Chi-Square Test of a Distribution

• Define

$$\chi^2 \equiv \sum_{i=1}^{N} \left(\frac{x_i - \mu_i}{\sigma_i}\right)^2$$

• For Gaussian random variables xi, it can be shown that this follows the chi-square distribution with ν degrees of freedom.

• The expectation value (i.e., mean value) for χ2 is ν

• If this is based on a fit where some parameters are determined from the fit, the number of degrees of freedom is reduced by the number of fitted parameters

• One can set confidence intervals in χ2 to evaluate the goodness of fit

• χ2 distributions can be found from tables, graphs or LabVIEW VI’s as was done for Gaussian probability. (See Table C.4 in Bevington)

• Can be approximated by Gaussian under suitable conditions
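As an alternative to tables, the χ2 sum and the probability of exceeding a given χ2 can be sketched in a few lines. The names `chi_square` and `chi2_sf` and the three data points are assumptions for illustration; the probability uses a standard series for the incomplete gamma function, which is adequate for moderate χ2 but is not meant as a general-purpose routine.

```python
import math

def chi_square(x, mu, sigma):
    """Sum of squared, sigma-normalized deviations."""
    return sum(((xi - mi) / si) ** 2 for xi, mi, si in zip(x, mu, sigma))

def chi2_sf(chi2, nu):
    """Probability of exceeding chi2 for nu degrees of freedom.

    Uses the power series for the regularized lower incomplete gamma
    function P(nu/2, chi2/2); the result is 1 - P. Precision degrades
    when chi2 is much larger than nu.
    """
    a, x = nu / 2.0, chi2 / 2.0
    if x <= 0.0:
        return 1.0
    term = 1.0 / a
    total = term
    n = 0
    while abs(term) > 1e-16 * abs(total):
        n += 1
        term *= x / (a + n)
        total += term
    lower = total * math.exp(-x + a * math.log(x) - math.lgamma(a))
    return 1.0 - lower

# Hypothetical measurements with known means and errors.
chi2_example = chi_square([1.2, 1.9, 3.4], [1.0, 2.0, 3.0], [0.2, 0.2, 0.2])

# Values quoted for Prob. 4.13 in these notes: chi2 = 8.28, nu = 12.
p_exceed = chi2_sf(8.28, 12)

print(chi2_example, p_exceed)
```

For χ2 = 8.28 with ν = 12 this should land near the 0.76 quoted in the Prob. 4.13 solution.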

7

Comments on χ2 of Histogram

$$\chi^2 \equiv \sum_{i=1}^{N} \frac{(N_i - n_i)^2}{n_i}$$

• Can model this with N individual mutually independent binomials, so long as a fixed total is not required. Then normalize to the actual Ntotal, using up 1 degree of freedom in the fit (N = number of bins) (see discussion in Bevington, Ch. 3 and Prob. 4.13 solution on next page)

• For small ni, large Ntotal, large number of bins, ni is approx. Poisson

• χ2 approximately follows the χ2 distribution if all ni >> 1 (ni ≳ 5 OK) or N >> 1

• For fixed Ntotal, model with multinomial distribution (see p. 12)

• But ni are not mutually independent with multinomial

8

Histogram Chi-Square: Bevington Prob. 4.13

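$$\sum_{i=1}^{10} \frac{x_i}{\sigma_i^2} = 153.08, \quad \text{and} \quad \sum_{i=1}^{10} \frac{1}{\sigma_i^2} = 4.665, \quad \text{so}$$

$$\mu' = \frac{153.08}{4.665} \approx 32.8 \quad \text{and} \quad \sigma_\mu = \sqrt{1/4.665} \approx 0.46$$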
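The two sums above are the ingredients of a weighted mean and its error. A minimal sketch follows, assuming weights 1/σi²; the function name `weighted_mean` and the two-point data set are hypothetical, while the quoted sums 153.08 and 4.665 are the ones given above.

```python
import math

def weighted_mean(x, sigma):
    """Weighted mean with weights 1/sigma_i^2, and its standard error."""
    weights = [1.0 / s ** 2 for s in sigma]
    sum_w = sum(weights)
    mean = sum(w * xi for w, xi in zip(weights, x)) / sum_w
    return mean, math.sqrt(1.0 / sum_w)

# Reproduce the arithmetic from the quoted sums.
mu_quoted = 153.08 / 4.665
sigma_quoted = math.sqrt(1.0 / 4.665)

# Hypothetical two-measurement example: 10.0 +- 1.0 and 12.0 +- 2.0.
mean, err = weighted_mean([10.0, 12.0], [1.0, 2.0])

print(mu_quoted, sigma_quoted)
print(mean, err)
```

In the two-point example the more precise measurement carries four times the weight, so the weighted mean sits closer to 10.0 than to 12.0.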


4.13) I made a LabVIEW VI (see figures on next page) to plot the histogram, calculate the Gaussian comparison values and find χ2 according to Eq. 4.33 of Bevington:

$$\chi^2 = \sum_{j=1}^{n} \frac{[h(x_j) - NP(x_j)]^2}{NP(x_j)}$$

where n is the number of bins, N is the total number of trials, h(xj) is the contents of the jth bin and NP(xj) is the expected contents from the Gaussian distribution (see the text for details).

Assume the bins are small enough so we can approximate the integral of the p.d.f. over the bin with the central value of the p.d.f. times the bin width. Then

$$NP(x_j) = N \int_{\Delta x_j} p(x)\, dx \approx N p(x_j)\, \Delta x$$

where p(xj) is the Gaussian p.d.f. evaluated at xj, the center of the jth bin, and Δx = 2 is the bin width:

$$p(x_j) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left[-\frac{1}{2}\left(\frac{x_j - \mu}{\sigma}\right)^2\right].$$
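The same recipe can be sketched outside LabVIEW. The block below is only an illustration: the bin centers, counts, μ and σ are made-up values (the actual data of Prob. 4.13 are not reproduced in these notes), chosen to give 13 bins of width 2 and 200 trials.

```python
import math

def gaussian_pdf(x, mu, sigma):
    """Gaussian probability density evaluated at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

# Hypothetical histogram: 13 bins of width 2, 200 trials in total.
dx = 2.0
centers = [14.0 + dx * j for j in range(13)]
counts = [1, 2, 4, 15, 22, 37, 41, 33, 26, 11, 6, 2, 0]
mu, sigma = 26.0, 4.0             # assumed "given" parameters

n_trials = sum(counts)

# Expected contents N p(x_j) dx, using the p.d.f. at the bin center.
expected = [n_trials * gaussian_pdf(xj, mu, sigma) * dx for xj in centers]

# Histogram chi-square in the form of Eq. 4.33.
chi2 = sum((h - e) ** 2 / e for h, e in zip(counts, expected))

# One constraint (total number of trials) removes one degree of freedom.
nu = len(counts) - 1
reduced_chi2 = chi2 / nu

print("chi2 =", chi2, "nu =", nu, "reduced chi2 =", reduced_chi2)
```

With real data, the bins with very low expected contents deserve the same caution as in the lecture, since the χ2 distribution assumes approximately Gaussian fluctuations in each bin.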

9

Histogram Chi-Square Result

The VI front panel with the results and the diagram which produced them are shown in the following figures.

10

Histogram Chi-Square: Comments

This analysis assumes the contents of each histogram bin hj is an independent measurement. μ and σ are given but the total number of trials is taken to agree with the experiment (200 trials). This represents one constraint and reduces the number of degrees of freedom by 1. We have 13 bins to compare with the Gaussian and ν = 12 degrees of freedom.

The expectation value for χ2 equals the number of degrees of freedom, ⟨χ2⟩ = 12. The resulting χ2 = 8.28 (disagrees with the answer in the book but was checked independently). The probability for exceeding this value of χ2 is 0.76 (calculated by LabVIEW but agrees with interpolated value from Table C.4). The reduced χ2, defined as χ2/ν, is 0.69 (expectation value of 1). This is not a bad fit. Of course, the χ2 distribution is only valid for underlying Gaussians and this is not a good assumption for the bins with low occupancy.

11

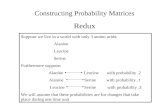

Multinomial Distribution

Histogram with n bins, N total counts (partition N events into n bins), xi counts in ith bin, with probability pi to get a count in ith bin

$$\sum_{i=1}^{n} x_i = N, \qquad \sum_{i=1}^{n} p_i = 1$$

Then

$$P(x_1, x_2, \ldots, x_n) = \frac{N!}{x_1!\, x_2! \cdots x_n!}\; p_1^{x_1} p_2^{x_2} \cdots p_n^{x_n}$$

with

$$\mu_i = N p_i, \qquad \sigma_i^2 = N p_i (1 - p_i), \qquad \sigma_{ij}^2 = -N p_i p_j \quad (i \neq j).$$

Reference: http://mathworld.wolfram.com/MultinomialDistribution.html
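The mean, variance and covariance formulas can be checked by brute force for a small case. The sketch below enumerates every outcome of a hypothetical 3-bin histogram with N = 4 counts; the bin probabilities and the function name `multinomial_pmf` are assumptions for this example.

```python
import math
from itertools import product

def multinomial_pmf(counts, probs):
    """Multinomial probability of the given bin counts."""
    coeff = math.factorial(sum(counts))
    for x in counts:
        coeff //= math.factorial(x)   # exact integer division at each step
    prob = float(coeff)
    for x, p in zip(counts, probs):
        prob *= p ** x
    return prob

N = 4
probs = (0.5, 0.3, 0.2)               # hypothetical bin probabilities

# All ways to partition N counts into 3 bins.
outcomes = [c for c in product(range(N + 1), repeat=3) if sum(c) == N]
weights = [multinomial_pmf(c, probs) for c in outcomes]

total = sum(weights)
mean_0 = sum(c[0] * w for c, w in zip(outcomes, weights))
mean_1 = sum(c[1] * w for c, w in zip(outcomes, weights))
var_0 = sum((c[0] - mean_0) ** 2 * w for c, w in zip(outcomes, weights))
cov_01 = sum((c[0] - mean_0) * (c[1] - mean_1) * w
             for c, w in zip(outcomes, weights))

print(total, mean_0, var_0, cov_01)
```

The negative covariance reflects the fixed total: an extra count in one bin must come at the expense of the others, which is why the bin contents are not mutually independent.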

12

“Complete” Lab Writeup

• Similar to research report. Outline as follows:

• Abstract (very brief overview stating results)

• Introduction (Overview and theory related to experiment)

• Experimental setup and procedure

• Analysis of data and results with errors

• Graphs should have axes labeled with units, points should usually have error bars, and each graph should have a caption briefly explaining what is plotted

• Comparison with accepted values, discussion of results and errors; conclusions, if any.

• References

• Have draft/outline of paper and preliminary calculations at lab time Wednesday

13