Generalized Linear Models - Universitetet i Oslo

Transcript of Generalized Linear Models - Universitetet i oslo · The linear regression model is a GLM •...

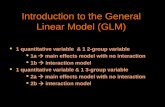

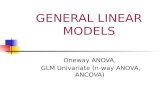

Generalized Linear Models

STK3100 - 4 September 2011

Plan for lecture 3:

1. Definition of GLM

2. Link functions and canonical link

3. Estimation of GLM - Maximum Likelihood

4. Large sample results

5. Tests in GLM - Likelihood Ratio

Generalized Linear Models – p. 1

Definition of GLM

A GLM is defined by

• Independent $Y_1, Y_2, \ldots, Y_n$ from the same distribution in the exponential family

• with pdf/pmf $f(y_i; \theta_i) = c(y_i; \phi) \exp((\theta_i y_i - a(\theta_i))/\phi)$

• and expectations $\mu_i = a'(\theta_i)$

• Linear predictors $\eta_i = \beta_0 + \beta_1 x_{i1} + \cdots + \beta_p x_{ip} = \beta' x_i$

• Link function $g()$: the expectation $\mu_i = E[Y_i]$ is coupled to the linear predictor through $g(\mu_i) = \eta_i$

Note that $\mu_i$ depends on the vector $\beta$ through $g(\mu_i) = \eta_i$, i.e. $\mu_i = g^{-1}(\eta_i)$.

Therefore $\theta_i$ also depends on $\beta$ through $\mu_i = a'(\theta_i)$.

The linear regression model is a GLM

• Responses ($Y_i$-s) from normal distributions

• Linear predictors $\eta_i = \beta_0 + \beta_1 x_{i1} + \cdots + \beta_p x_{ip}$

• $E[Y_i] = \mu_i = \eta_i$, i.e. the link function $g(\mu_i) = \mu_i$ is the identity function

The R commands lm (for linear regression) and glm do essentially the same thing here, but with slightly different output.

Linear regression is the default specification in glm.
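The equivalence of lm and glm can be checked on a small example. The sketch below uses simulated data (the variables x1, x2 and y are hypothetical and are not the birth-weight data of the next example) and compares the coefficient estimates from the two commands.

```r
# Simulated data (hypothetical; not the birth-weight data from the lecture)
set.seed(1)
n  <- 50
x1 <- rnorm(n)
x2 <- rbinom(n, 1, 0.5)
y  <- 2 + 1.5 * x1 - 0.7 * x2 + rnorm(n)

fit_lm  <- lm(y ~ x1 + x2)
fit_glm <- glm(y ~ x1 + x2)   # default family: gaussian with identity link

# Both fits give the same coefficient estimates
print(cbind(lm = coef(fit_lm), glm = coef(fit_glm)))

# For the gaussian family the residual deviance is the residual sum of squares
rss <- sum(residuals(fit_lm)^2)
print(c(rss = rss, deviance = deviance(fit_glm)))
```

The only differences are in what is reported: glm prints deviances and AIC, while lm reports quantities such as R-squared in its summary.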


Ex. 1: Birth weights

> lm(vekt ~ sex + svlengde)

Call:

lm(formula = vekt ~ sex + svlengde)

Coefficients:

(Intercept) sex svlengde

-1447.2 -163.0 120.9

> glm(vekt ~ sex + svlengde)

Call: glm(formula = vekt ~ sex + svlengde)

Coefficients:

(Intercept) sex svlengde

-1447.2 -163.0 120.9

Degrees of Freedom: 23 Total (i.e. Null); 21 Residual

Null Deviance: 1830000

Residual Deviance: 658800 AIC: 321.4

The logistic regression model is a GLM

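• Responses ($Y_i$-s) from binomial distributions $\text{Bin}(n_i, \pi_i)$, with expectation $\mu_i = n_i \pi_i$

• Linear predictors $\eta_i = \beta_0 + \beta_1 x_{i1} + \cdots + \beta_p x_{ip}$

• $E[Y_i] = \mu_i = n_i \dfrac{\exp(\eta_i)}{1 + \exp(\eta_i)}$

• Gives link function $g(\mu_i) = \log\left(\dfrac{\mu_i}{n_i - \mu_i}\right)$

• Usually expressed as a function of $\pi = \mu/n$, i.e. $g(\pi_i) = \log\left(\dfrac{\pi_i}{1 - \pi_i}\right)$ (which is also the link function for the binomial proportions $Y_i/n_i$)

• $\log\left(\dfrac{\pi}{1 - \pi}\right) = \text{logit}(\pi)$ is called the logit function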


Link functions for binomial data

The logit link function gives

$$\pi = g^{-1}(\eta) = \frac{\exp(\eta)}{1 + \exp(\eta)},$$

i.e. $g^{-1}(\eta)$ is a cumulative distribution function (CDF) for a continuous distribution (the standard logistic distribution):

• Continuous and strictly increasing

• $g^{-1}(-\infty) = 0$ and $g^{-1}(\infty) = 1$

In general, link functions can be defined by $g(\pi) = F^{-1}(\pi)$ where $F()$ is a continuous CDF


Other link functions for binomial data

The most common alternative to the logit is the probit link,

$$g_2(\pi) = \Phi^{-1}(\pi)$$

where $\Phi(\eta) = \int_{-\infty}^{\eta} \frac{\exp(-x^2/2)}{\sqrt{2\pi}}\,dx$ is the CDF for N(0,1) (the $\pi$ inside the integral is the constant 3.14...)

Another alternative is the complementary log-log link,

$$g_3(\pi) = \log(-\log(1 - \pi))$$

which is the inverse of $F(\eta) = 1 - \exp(-\exp(\eta))$ (the CDF of the so-called Gumbel distribution)
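The three inverse links can be evaluated directly in R. The following is a small sketch on an arbitrary grid of $\eta$ values (the grid and variable names are illustrative choices): it computes $g^{-1}(\eta)$ as a CDF for each link and checks that applying the link $g = F^{-1}$ recovers $\eta$.

```r
eta <- seq(-3, 2, by = 0.5)

inv_logit   <- plogis(eta)           # exp(eta) / (1 + exp(eta))
inv_probit  <- pnorm(eta)            # Phi(eta)
inv_cloglog <- 1 - exp(-exp(eta))    # F(eta) = 1 - exp(-exp(eta))

# Applying the link g = F^{-1} recovers eta
g_logit   <- qlogis(inv_logit)               # log(pi / (1 - pi))
g_probit  <- qnorm(inv_probit)               # Phi^{-1}(pi)
g_cloglog <- log(-log(1 - inv_cloglog))      # log(-log(1 - pi))

print(round(cbind(eta, inv_logit, inv_probit, inv_cloglog), 3))
```

All three columns increase from near 0 to near 1, but the complementary log-log curve is asymmetric around $\pi = 0.5$, unlike the logit and probit curves.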


Ex: Link functions logit and probit

> glm(cbind(Dode,Ant-Dode)~Dose,family=binomial)

Call: glm(formula = cbind(Dode, Ant - Dode) ~ Dose, family = binomial)

Coefficients:

(Intercept) Dose

-60.72 34.27

Degrees of Freedom: 7 Total (i.e. Null); 6 Residual

Null Deviance: 284.2

Residual Deviance: 11.23 AIC: 41.43

> glm(cbind(Dode,Ant-Dode)~dose,family=binomial(link=probit))

Coefficients:

(Intercept) dose

-34.94 19.73

Degrees of Freedom: 7 Total (i.e. Null); 6 Residual

Null Deviance: 284.2

Residual Deviance: 10.12 AIC: 40.32


Ex: Link function complementary log-log

> glm(cbind(Dode,Ant-Dode)~dose,family=binomial(link=cloglog))

Coefficients:

(Intercept) dose

-39.57 22.04

Degrees of Freedom: 7 Total (i.e. Null); 6 Residual

Null Deviance: 284.2

Residual Deviance: 3.446 AIC: 33.64
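The three fits can be compared side by side. The data set itself is not shown on the slides; the sketch below assumes it is the classic beetle mortality data of Bliss (1935) as tabulated in standard GLM textbooks, which is an assumption on my part. If that assumption holds, the output should be close to the figures reported above.

```r
# ASSUMPTION: Bliss (1935) beetle mortality data; the slides do not list the data
beetles <- data.frame(
  Dose = c(1.6907, 1.7242, 1.7552, 1.7842, 1.8113, 1.8369, 1.8610, 1.8839),
  Ant  = c(59, 60, 62, 56, 63, 59, 62, 60),   # number of beetles treated
  Dode = c(6, 13, 18, 28, 52, 53, 61, 60)     # number of dead beetles
)

links <- c("logit", "probit", "cloglog")
fits <- lapply(links, function(l)
  glm(cbind(Dode, Ant - Dode) ~ Dose, family = binomial(link = l), data = beetles))
names(fits) <- links

# Residual deviance and AIC for each link function
comparison <- data.frame(
  link     = links,
  deviance = sapply(fits, deviance),
  AIC      = sapply(fits, AIC)
)
print(comparison)
```

All three models have the same number of parameters, so the residual deviances can be compared directly; a smaller value indicates a better fit to these data.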


Link functions for Poisson regression

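• Responses $Y_i \sim \text{Po}(\mu_i)$

• Linear predictor $\eta_i = \beta_0 + \beta_1 x_{i1} + \cdots + \beta_p x_{ip}$

Usual link functions

• $\eta_i = g_0(\mu_i) = \log(\mu_i)$, which gives $\mu_i = \exp(\eta_i)$

• $\eta_i = g_{1/2}(\mu_i) = \sqrt{\mu_i}$

• $\eta_i = g_p(\mu_i) = \mu_i^p$ (power link; here $p$ is the exponent, not the number of covariates)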


Ex: Number of children among pregnant women

> glm(children~age,family=poisson)

Coefficients:

(Intercept) age

-4.0895 0.1129

Degrees of Freedom: 140 Total (i.e. Null); 139 Residual

Null Deviance: 194.4

Residual Deviance: 165 AIC: 290

> glm(children~age,data=births,family=poisson(link=sqrt))

Coefficients:

(Intercept) age

-0.61109 0.04477

Degrees of Freedom: 140 Total (i.e. Null); 139 Residual

Null Deviance: 194.4

Residual Deviance: 164.4 AIC: 289.3


Canonical link function

• $\mu_i$ depends on $\beta$ through $g(\mu_i) = \eta_i$

• Therefore $\theta_i$ depends on $\beta$ through $\mu_i = a'(\theta_i)$

• A GLM becomes mathematically simpler if we assume that the canonical parameter $\theta_i$ equals the linear predictor $\eta_i$

• The link function $g(\mu_i)$ is then called canonical.

• Then $\mu_i = g^{-1}(\eta_i) = g^{-1}(\theta_i)$

• Since $\mu_i = a'(\theta_i)$, the canonical link function is found from $g^{-1}(\theta_i) = a'(\theta_i)$


Examples of canonical link functions

• Normal distribution: ordinary linear-normal model. $a'(\theta) = \theta = g^{-1}(\theta)$, which gives $g(\mu) = \mu$

• Poisson distribution: log-linear model. $a'(\theta) = \exp(\theta) = g^{-1}(\theta)$, which gives $g(\mu) = \log(\mu)$

• Binomial distribution with $n = 1$, i.e. $\mu = \pi$: $a'(\theta) = \dfrac{\exp(\theta)}{1 + \exp(\theta)} = g^{-1}(\theta)$, which gives $g(\pi) = \log(\pi/(1 - \pi)) = \text{logit}(\pi)$

• The canonical link makes computations simpler, but this is less important with modern computers
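For reference, the three examples can be summarised by writing out $a(\theta)$ and its first two derivatives. This is a worked summary using the standard exponential-family forms of the three distributions; the expressions for $a(\theta)$ are not stated on the slides. The second derivative $a''(\theta)$, written as a function of $\mu$, is the variance function $V(\mu)$ used in the score equations.

```latex
\begin{array}{llll}
\text{Distribution} & a(\theta) & \mu = a'(\theta) & V(\mu) = a''(\theta) \\ \hline
\text{Normal } (\phi = \sigma^2) & \theta^2/2 & \theta & 1 \\
\text{Poisson } (\phi = 1) & \exp(\theta) & \exp(\theta) & \mu \\
\text{Binomial, } n = 1 \ (\phi = 1) & \log(1 + \exp(\theta)) &
  \dfrac{\exp(\theta)}{1 + \exp(\theta)} & \mu(1 - \mu)
\end{array}
```

In each row, solving $\mu = a'(\theta)$ for $\theta$ gives the canonical link: $\theta = \mu$, $\theta = \log(\mu)$ and $\theta = \text{logit}(\mu)$ respectively.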


Re-numbering $\beta$

• So far we have numbered the elements of the vector $\beta$ from 0 to $p$, i.e. $p + 1$ parameters $\beta_0, \beta_1, \ldots, \beta_p$

• In the following, we number them from 1 to $p$, i.e. $p$ parameters ($\beta_1$ is now the intercept if included)

• This is done to follow the book


Likelihood for GLM

Since the $Y_i$-s are independent with pdf/pmf $f(y_i; \theta_i)$, the likelihood is

$$L(\beta, \phi) = \prod_{i=1}^{n} f(Y_i; \theta_i)$$

Note that this is a function of the regression coefficients $\beta$, since $\theta_i$ is a function of $\mu_i$, which in turn is a function of $\beta$.

The contribution to the log-likelihood from the $i$-th observation is $l_i(\beta, \phi) = \log(f(Y_i; \theta_i))$ and the log-likelihood is

$$l(\beta, \phi) = \sum_{i=1}^{n} l_i(\beta, \phi) = \sum_{i=1}^{n} \left[ \frac{\theta_i Y_i - a(\theta_i)}{\phi} + \log(c(Y_i; \phi)) \right]$$
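As a numerical check of the exponential-family form, the sketch below evaluates the log-likelihood for a Poisson model with log link in two ways: from the formula above (with $\theta_i = \log \mu_i$, $a(\theta) = \exp(\theta)$, $\phi = 1$ and $c(y; \phi) = 1/y!$) and from R's built-in Poisson density. The data are simulated and the coefficient values are arbitrary illustrative choices.

```r
set.seed(2)
n    <- 30
x    <- runif(n)
beta <- c(0.5, 1.0)              # hypothetical coefficient values
eta  <- beta[1] + beta[2] * x    # linear predictor
mu   <- exp(eta)                 # log link: mu = exp(eta)
y    <- rpois(n, mu)

theta <- log(mu)                 # canonical parameter for the Poisson
phi   <- 1
a     <- function(theta) exp(theta)
log_c <- -lgamma(y + 1)          # log(c(y; phi)) = -log(y!)

loglik_expfam <- sum((theta * y - a(theta)) / phi + log_c)
loglik_dpois  <- sum(dpois(y, lambda = mu, log = TRUE))

print(c(expfam = loglik_expfam, dpois = loglik_dpois))
```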


Estimation of β

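Score function = $\partial l(\beta)/\partial \beta_j$

Component $j$ of the score function $s(\beta) = (s_1(\beta), \ldots, s_p(\beta))'$ is (see next slide)

$$s_j(\beta) = \frac{\partial l(\beta)}{\partial \beta_j} = \sum_{i=1}^{n} s_{ij}(\beta) = \frac{1}{\phi} \sum_{i=1}^{n} x_{ij} \frac{Y_i - \mu_i}{g'(\mu_i) V(\mu_i)}$$

The MLE $\hat{\beta}$ is found by solving

$$s_j(\hat{\beta}) = \frac{1}{\phi} \sum_{i=1}^{n} x_{ij} \frac{Y_i - \hat{\mu}_i}{g'(\hat{\mu}_i) V(\hat{\mu}_i)} = 0$$

for $j = 1, \ldots, p$, where $\hat{\mu}_i$ is the estimated expectation with $\beta = \hat{\beta}$.

The factor $1/\phi$ can be dropped here, so the MLE of $\beta$ does not depend on the value of $\phi$.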
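The score equations can be verified numerically. The sketch below assumes a Poisson model with the non-canonical square-root link, so that $g'(\mu) = 1/(2\sqrt{\mu})$ and $V(\mu) = \mu$, and uses simulated data; the function name score is a hypothetical helper. It evaluates the score at the estimate returned by glm and at a perturbed value.

```r
set.seed(3)
n <- 200
x <- runif(n)
y <- rpois(n, lambda = (1 + 2 * x)^2)      # simulated: sqrt(mu) = 1 + 2x

fit <- glm(y ~ x, family = poisson(link = "sqrt"),
           control = glm.control(epsilon = 1e-12, maxit = 100))

# Hypothetical helper: score vector for the Poisson model with sqrt link
score <- function(beta, X, y) {
  mu      <- drop(X %*% beta)^2            # mu = g^{-1}(eta) = eta^2
  g_prime <- 1 / (2 * sqrt(mu))            # derivative of g(mu) = sqrt(mu)
  V       <- mu                            # Poisson variance function
  drop(crossprod(X, (y - mu) / (g_prime * V)))   # phi = 1
}

X       <- model.matrix(fit)
s_hat   <- score(coef(fit), X, y)               # at the MLE: essentially zero
s_other <- score(coef(fit) + c(0.1, 0), X, y)   # away from the MLE: clearly non-zero

print(rbind(at_mle = s_hat, perturbed = s_other))
```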


Score-contribution

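The score contribution from observation $i$ is found by the chain rule and the rule for the derivative of an inverse function:

$$s_{ij}(\beta) = \frac{\partial l_i(\beta)}{\partial \beta_j} = \frac{\partial \eta_i}{\partial \beta_j} \, \frac{\partial \mu_i}{\partial \eta_i} \, \frac{\partial \theta_i}{\partial \mu_i} \, \frac{\partial l_i}{\partial \theta_i}$$

where

$$\frac{\partial \eta_i}{\partial \beta_j} = x_{ij}$$

$$\frac{\partial \mu_i}{\partial \eta_i} = \frac{1}{\partial \eta_i / \partial \mu_i} = \frac{1}{\partial g(\mu_i) / \partial \mu_i} = \frac{1}{g'(\mu_i)}$$

$$\frac{\partial \theta_i}{\partial \mu_i} = \frac{1}{\partial \mu_i / \partial \theta_i} = \frac{1}{\partial a'(\theta_i) / \partial \theta_i} = \frac{1}{a''(\theta_i)} = \frac{1}{V(\mu_i)}$$

$$\frac{\partial l_i}{\partial \theta_i} = \frac{\partial [(\theta_i Y_i - a(\theta_i))/\phi + \log(c(Y_i; \phi))]}{\partial \theta_i} = \frac{Y_i - a'(\theta_i)}{\phi} = \frac{Y_i - \mu_i}{\phi}$$

This gives

$$s_{ij}(\beta) = \frac{1}{\phi} \, x_{ij} \, \frac{Y_i - \mu_i}{g'(\mu_i) V(\mu_i)}$$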


Score function cont.

$$s_j(\beta) = \frac{\partial l(\beta)}{\partial \beta_j} = \sum_{i=1}^{n} s_{ij}(\beta) = \frac{1}{\phi} \sum_{i=1}^{n} x_{ij} \frac{Y_i - \mu_i}{g'(\mu_i) V(\mu_i)}$$

Note that $E[s_j(\beta)] = 0$ since $E[Y_i - \mu_i] = 0$

These estimating equations cannot be solved analytically except in ordinary linear regression with normal distribution and identity link

They are therefore usually solved by numerical optimisation


Numerical optimisation

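Newton-Raphson:

$$\beta^{(s+1)} = \beta^{(s)} + [J(\beta^{(s)})]^{-1} s(\beta^{(s)})$$

$$s(\beta) = \frac{\partial l(\beta)}{\partial \beta} \quad \text{(score function)}$$

$$J(\beta) = -\frac{\partial^2 l(\beta)}{\partial \beta \, \partial \beta^T} \quad \text{(observed information matrix)}$$

The Fisher scoring algorithm:

$$\beta^{(s+1)} = \beta^{(s)} + [I(\beta^{(s)})]^{-1} s(\beta^{(s)})$$

$$I(\beta) = E[J(\beta)] \quad \text{(expected information matrix)}$$

$I(\beta)$ is also called the Fisher information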
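The Fisher scoring iteration can be written out in a few lines. This is a minimal sketch for logistic regression with binary responses on simulated data, not the implementation used inside glm. With the canonical logit link, $g'(\mu)V(\mu) = 1$, so the score is $X^T(y - \mu)$ and the expected information is $X^T W X$ with $W = \text{diag}(\mu_i(1 - \mu_i))$. The starting value, tolerance and iteration cap are arbitrary choices.

```r
set.seed(42)
n <- 500
x <- rnorm(n)
X <- cbind(1, x)                                   # design matrix with intercept
y <- rbinom(n, size = 1, prob = plogis(-0.5 + 1.2 * x))

beta <- c(0, 0)                                    # starting value beta^(0)
for (s in 1:25) {
  eta   <- drop(X %*% beta)
  mu    <- plogis(eta)                             # mu = g^{-1}(eta)
  score <- crossprod(X, y - mu)                    # s(beta), phi = 1
  info  <- crossprod(X, X * (mu * (1 - mu)))       # I(beta) = X' W X
  step  <- drop(solve(info, score))                # I^{-1} s
  beta  <- beta + step                             # Fisher scoring update
  if (max(abs(step)) < 1e-10) break                # stop when the update is negligible
}

fit <- glm(y ~ x, family = binomial)
print(rbind(fisher_scoring = beta, glm = coef(fit)))
```

For a canonical link the observed and expected information coincide, so in this particular example Newton-Raphson and Fisher scoring give identical iterations.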


Observed information matrix

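$J(\beta) = \{J_{j,k}(\beta)\}$ where $J_{j,k}(\beta) = -\dfrac{\partial^2 l}{\partial \beta_j \partial \beta_k} = -\dfrac{\partial s_j}{\partial \beta_k}$, where

$$\begin{aligned}
-\frac{\partial s_j}{\partial \beta_k}
&= -\frac{1}{\phi} \sum_{i=1}^{n} x_{ij} \frac{\partial [(Y_i - \mu_i)/(g'(\mu_i) V(\mu_i))]}{\partial \beta_k} \\
&= -\frac{1}{\phi} \sum_{i=1}^{n} x_{ij} \frac{\partial \eta_i}{\partial \beta_k} \frac{\partial \mu_i}{\partial \eta_i} \frac{\partial [(Y_i - \mu_i)/(g'(\mu_i) V(\mu_i))]}{\partial \mu_i} \\
&= -\frac{1}{\phi} \sum_{i=1}^{n} x_{ij} x_{ik} \frac{1}{g'(\mu_i)} \frac{\partial [(Y_i - \mu_i)/(g'(\mu_i) V(\mu_i))]}{\partial \mu_i}
\end{aligned}$$

and

$$\begin{aligned}
\frac{\partial [(Y_i - \mu_i)/(g'(\mu_i) V(\mu_i))]}{\partial \mu_i}
&= \frac{1}{g'(\mu_i) V(\mu_i)} \frac{\partial (Y_i - \mu_i)}{\partial \mu_i} + (Y_i - \mu_i) \frac{\partial [1/(g'(\mu_i) V(\mu_i))]}{\partial \mu_i} \\
&= \frac{-1}{g'(\mu_i) V(\mu_i)} + (Y_i - \mu_i) \frac{\partial [1/(g'(\mu_i) V(\mu_i))]}{\partial \mu_i}
\end{aligned}$$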

Expected information matrix

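Since

$$E\left[ (Y_i - \mu_i) \frac{\partial [1/(g'(\mu_i) V(\mu_i))]}{\partial \mu_i} \right] = 0$$

the expected information matrix is

$$I(\beta) = E[J(\beta)] = \frac{1}{\phi} \left[ \sum_{i=1}^{n} \frac{x_{ij} x_{ik}}{g'(\mu_i)^2 V(\mu_i)} \right]_{j,k=1}^{p}$$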
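The formula for $I(\beta)$ can be compared with what R reports. The sketch below assumes a Poisson model with log link on simulated data, for which $g'(\mu) = 1/\mu$ and $V(\mu) = \mu$, so that $1/(g'(\mu)^2 V(\mu)) = \mu$ and $\phi = 1$. It builds $I(\hat{\beta})$ by hand and compares its inverse with the covariance matrix returned by vcov.

```r
set.seed(4)
n <- 300
x <- rnorm(n)
y <- rpois(n, lambda = exp(0.3 + 0.5 * x))

fit <- glm(y ~ x, family = poisson,
           control = glm.control(epsilon = 1e-12, maxit = 100))
X      <- model.matrix(fit)
mu_hat <- fitted(fit)

# Weights 1 / (g'(mu)^2 V(mu)); for the log link this simplifies to mu
w    <- 1 / ((1 / mu_hat)^2 * mu_hat)
info <- crossprod(X, X * w)          # I(beta_hat) with phi = 1

cov_manual <- solve(info)            # estimated covariance matrix of beta_hat
print(cov_manual)
print(vcov(fit))
```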


Estimation of φ

• Maximum likelihood

• The ML estimate of $\beta$ does not depend on the value of $\phi$ and can be found as described above.

• Plugging $\hat{\beta}$ into the likelihood gives a profile likelihood for $\phi$, and the ML estimate of $\phi$ is found by maximising

$$l(\phi) = l(\hat{\beta}, \phi) = \sum_{i=1}^{n} \left[ \frac{\hat{\theta}_i y_i - a(\hat{\theta}_i)}{\phi} + \log(c(y_i; \phi)) \right]$$

• Can be maximised numerically

• φ can also be estimated by the moment method
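The slides do not say which moment estimator is meant. A common choice, assumed here, is the Pearson statistic divided by the residual degrees of freedom, $\hat{\phi} = \frac{1}{n - p} \sum_i (y_i - \hat{\mu}_i)^2 / V(\hat{\mu}_i)$. The sketch below computes it for a gamma regression on simulated data and compares it with the dispersion reported by summary.

```r
set.seed(5)
n  <- 200
x  <- runif(n)
mu <- exp(1 + x)
y  <- rgamma(n, shape = 2, rate = 2 / mu)   # mean mu, dispersion 1/shape = 0.5

fit <- glm(y ~ x, family = Gamma(link = "log"))

# Pearson-type moment estimator: sum of squared Pearson residuals / (n - p)
pearson <- residuals(fit, type = "pearson")
phi_hat <- sum(pearson^2) / df.residual(fit)

print(c(moment = phi_hat, summary = summary(fit)$dispersion))
```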


“Large sample” theory

We want

• properties of estimates, including standard errors and

confidence intervals

• to test hypotheses


Large sample results for MLE of β in a GLM

• When $\beta$ is $p$-dimensional and the number of observations is large, we have

$$\hat{\beta} \approx N_p(\beta, I^{-1}(\beta))$$

• This can be used to construct confidence intervals for each element of $\beta$, for the linear predictor $\eta_i$ for given $x$-values and for $\mu_i = g^{-1}(\eta_i)$.

• In practice, plug the MLE $\hat{\beta}$ into $I^{-1}(\beta)$

• This is also the basis for the Wald test, see later
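The intervals described above can be computed as follows. This is a sketch on simulated Poisson data with log link (all names are illustrative): a 95% Wald interval for one coefficient, and an interval for $\eta$ at a chosen $x$-value that is then mapped to the scale of $\mu$ through $g^{-1}$.

```r
set.seed(6)
n <- 150
x <- rnorm(n)
y <- rpois(n, lambda = exp(0.2 + 0.4 * x))
fit <- glm(y ~ x, family = poisson)

z <- qnorm(0.975)

# 95% Wald interval for the coefficient of x: estimate +/- z * standard error
est     <- coef(fit)["x"]
se      <- sqrt(diag(vcov(fit)))["x"]
ci_beta <- c(est - z * se, est + z * se)

# Interval for eta at x = 1, then mapped to mu = g^{-1}(eta) = exp(eta)
pr     <- predict(fit, newdata = data.frame(x = 1), type = "link", se.fit = TRUE)
ci_eta <- c(pr$fit - z * pr$se.fit, pr$fit + z * pr$se.fit)
ci_mu  <- exp(ci_eta)

print(ci_beta)
print(ci_mu)
```

Building the interval on the $\eta$ scale and transforming it keeps the limits for $\mu$ inside the valid range, here $\mu > 0$.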


Large sample results for the score function in a GLM

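$$s(\beta) \approx N_p(0, I(\beta))$$

The normal distribution comes from the central limit theorem

The $j$-th component of $s$: $s_j(\beta) = \dfrac{1}{\phi} \sum_{i=1}^{n} x_{ij} \dfrac{Y_i - \mu_i}{g'(\mu_i) V(\mu_i)}$

$E[s(\beta)] = 0$ since $E(Y_i - \mu_i) = 0$

Proof for the covariance matrix: since the $Y_i$ are independent (so cross terms vanish) and $\text{Var}(Y_i) = \phi V(\mu_i)$,

$$\begin{aligned}
\text{Cov}(s_j, s_k) = E(s_j \cdot s_k)
&= \frac{1}{\phi^2} \sum_{i=1}^{n} \frac{x_{ij} x_{ik}}{g'(\mu_i)^2 V(\mu_i)^2} \text{Var}(Y_i - \mu_i) \\
&= \frac{1}{\phi} \sum_{i=1}^{n} \frac{x_{ij} x_{ik}}{g'(\mu_i)^2 V(\mu_i)} = I_{jk}
\end{aligned}$$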


Multivariate normal distribution

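A $p$-dimensional vector $\mathbf{Y} = (Y_1, \ldots, Y_p)'$ is multivariate normally distributed if we can write

$$\mathbf{Y} = \mathbf{A}\mathbf{Z} + \boldsymbol{\mu},$$

where $\mathbf{Z}' = (Z_1, \ldots, Z_p)$ is a vector of $p$ independent N(0,1) variables $Z_i$, $\boldsymbol{\mu}' = (\mu_1, \ldots, \mu_p)$ is an arbitrary $p$-dimensional vector of numbers and $\mathbf{A}$ is a non-singular matrix

$$E[\mathbf{Y}] = \mathbf{A} E[\mathbf{Z}] + \boldsymbol{\mu} = \boldsymbol{\mu}$$

$$\text{Var}[\mathbf{Y}] = \mathbf{V} = \text{Var}[\mathbf{A}\mathbf{Z}] = E[(\mathbf{A}\mathbf{Z})(\mathbf{A}\mathbf{Z})^T] = E[\mathbf{A}\mathbf{Z}\mathbf{Z}^T\mathbf{A}^T] = \mathbf{A} E[\mathbf{Z}\mathbf{Z}^T] \mathbf{A}^T = \mathbf{A}\mathbf{A}^T$$

Therefore $\mathbf{Y} \sim N_p(\boldsymbol{\mu}, \mathbf{V})$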


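Distribution of $(\mathbf{Y} - \boldsymbol{\mu})^T \mathbf{V}^{-1} (\mathbf{Y} - \boldsymbol{\mu})$

Since $\mathbf{Y} - \boldsymbol{\mu} = \mathbf{A}\mathbf{Z}$ and $\mathbf{V}^{-1} = (\mathbf{A}\mathbf{A}^T)^{-1} = (\mathbf{A}^T)^{-1}\mathbf{A}^{-1}$,

$$(\mathbf{Y} - \boldsymbol{\mu})^T \mathbf{V}^{-1} (\mathbf{Y} - \boldsymbol{\mu}) = \mathbf{Z}^T\mathbf{Z} = \sum_{i=1}^{p} Z_i^2 \sim \chi^2_p$$

i.e. chi-square distributed with $p$ degrees of freedom, since the $Z_i \sim N(0,1)$ are independent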


Hypothesis testing

Various hypotheses:

• $H_0: \beta_j = \beta_j^*$: one parameter equals a specified value, e.g. 0

• $H_0: \beta = \beta^*$: all parameters equal specified values

• $H_0: \beta_{p-q+1} = \beta_{p-q+2} = \cdots = \beta_p = 0$ where $q < p$: some parameters equal 0

• $H_0: \beta_j = \beta_i$: two parameters are equal

Three possible tests

• Wald test

• Score test

• Likelihood ratio test

All tests are based on statistics that are approximately $\chi^2_q$ distributed, where $q$ is the number of restrictions


Hypothesis testing cont.

The hypotheses can be written in general form as a restriction $C\beta = r$, where $C$ is a known $q \times p$ matrix and $r$ is a $q$-dimensional vector of known values

• $H_0: \beta_j = \beta_j^*$: $C$ is a row vector with 1 in column $j$ and 0 elsewhere, $r = \beta_j^*$

• $H_0: \beta = \beta^*$: $C$ is the $p \times p$ identity matrix, $r = \beta^*$

• $H_0: \beta_{p-q+1} = \beta_{p-q+2} = \cdots = \beta_p = 0$ where $q < p$: $C$ is a $q \times p$ matrix where all elements in the first $p - q$ columns are 0 and the last $q$ columns form the $q \times q$ identity matrix, and $r$ is a $q$-dimensional vector of 0-s

• $H_0: \beta_j = \beta_i$: $C$ is a row vector with 1 at element $j$, $-1$ at element $i$ and 0 elsewhere, $r = 0$


Wald test

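In general, when $\mathbf{Y} \sim N_p(\boldsymbol{\mu}, \mathbf{V})$ then

$$(\mathbf{Y} - \boldsymbol{\mu})' \mathbf{V}^{-1} (\mathbf{Y} - \boldsymbol{\mu}) \sim \chi^2_p$$

Since $\hat{\beta} \approx N_p(\beta, I^{-1}(\beta))$, the Wald test statistic with $I = I(\hat{\beta})$ (and with a consistent estimate of $\phi$ plugged in if needed) is

$$(\hat{\beta} - \beta)^T I (\hat{\beta} - \beta) \approx \chi^2_p$$

and

$$(C\hat{\beta} - r)^T [C I^{-1} C^T]^{-1} (C\hat{\beta} - r) \approx \chi^2_q$$

The hypothesis is rejected if the test statistic is large

The MLE $\hat{\beta}$ of the full model is needed, and also a consistent estimate of $\phi$ if $\phi$ is unknown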
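The general Wald statistic for a restriction $C\beta = r$ can be sketched as a small function. The helper wald_test below is hypothetical (it is not part of R or of the lecture) and takes $I^{-1}$ from vcov of the fitted full model; the data are simulated.

```r
# Hypothetical helper (not from the lecture): Wald test of H0: C beta = r
wald_test <- function(fit, C, r) {
  C <- matrix(C, ncol = length(coef(fit)))          # q x p restriction matrix
  d <- C %*% coef(fit) - r                          # C beta_hat - r
  W <- drop(t(d) %*% solve(C %*% vcov(fit) %*% t(C), d))
  q <- nrow(C)
  list(statistic = W, df = q,
       p.value = pchisq(W, df = q, lower.tail = FALSE))
}

set.seed(7)
n  <- 200
x1 <- rnorm(n)
x2 <- rnorm(n)
y  <- rpois(n, lambda = exp(0.5 + 0.3 * x1))
fit <- glm(y ~ x1 + x2, family = poisson)

# H0: both slope coefficients are 0 (q = 2 restrictions)
C2   <- rbind(c(0, 1, 0),
              c(0, 0, 1))
res2 <- wald_test(fit, C2, c(0, 0))

# H0: coefficient of x2 is 0 (q = 1); the statistic equals the squared z value
res1 <- wald_test(fit, c(0, 0, 1), 0)

print(unlist(res2))
print(unlist(res1))
```

For a single coefficient the statistic reduces to $z^2$, where $z$ is the "z value" printed by summary, which is why the Wald test corresponds to the usual confidence intervals.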


Likelihood ratio test

• Let $\hat{\beta}$ denote the MLE in the full (unrestricted) model and $\tilde{\beta}$ the MLE in the restricted model where $C\beta = r$

• Then the likelihood ratio statistic is

$$2 \log[L(\hat{\beta})/L(\tilde{\beta})] = 2[l(\hat{\beta}) - l(\tilde{\beta})] \approx \chi^2_q$$

• Both $\hat{\beta}$ and $\tilde{\beta}$ are needed

• If $\phi$ is unknown, one has to use the same consistent estimate of $\phi$ in the two log-likelihoods


Score test

Since $s(\beta) \approx N_p(0, I(\beta))$, the score test statistic with $I = I(\tilde{\beta})$ (and with a consistent estimate of $\phi$ plugged in if needed) is

$$s(\tilde{\beta})^T I^{-1} s(\tilde{\beta}) \approx \chi^2_q$$

The MLE $\tilde{\beta}$ of the restricted model is needed, and a consistent estimate of $\phi$ if $\phi$ is unknown
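The score statistic only needs the restricted fit. The sketch below assumes logistic regression with binary responses on simulated data and tests that the slope is 0: the restricted model is intercept-only, and the score and expected information of the full model are evaluated at the restricted MLE.

```r
set.seed(8)
n <- 100
x <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(-0.3 + 0.8 * x))

# Restricted model (H0: slope = 0): intercept only
fit0 <- glm(y ~ 1, family = binomial)
mu0  <- fitted(fit0)                       # the same fitted probability for every i

X  <- cbind(1, x)                          # design matrix of the full model
s0 <- crossprod(X, y - mu0)                # score of the full model at the restricted MLE
I0 <- crossprod(X, X * (mu0 * (1 - mu0)))  # expected information at the restricted MLE

score_stat <- drop(t(s0) %*% solve(I0, s0))
p_value    <- pchisq(score_stat, df = 1, lower.tail = FALSE)

print(c(statistic = score_stat, p.value = p_value))
```

In this special case the statistic simplifies to $n$ times the squared sample correlation between x and y. R's anova method for glm fits also accepts test = "Rao", which should give the same test when comparing the restricted and the full model.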


Comparing test properties

• Wald-, score- and likelihood ratio (LR) tests are

asymptotically equivalent, but can give different results

when there are few observations (small sample)

• The score and LR tests have in general better small sample

properties than the Wald test

• The Wald test corresponds to the usual confidence intervals

for βj

• Since the LRT can be computed directly from the likelihood (without an estimate of the Fisher information), it is simple to use

• The score test may be better for one-sided tests


Ex: Deadly dose of poison for beetles

$Y_i$ = number of dead beetles out of $n_i$ treated with dose $x_i$

Model: $Y_i \sim \text{Bin}(n_i, \pi_i)$ with

$$\pi_i = \frac{\exp(\beta_0 + \beta_1 x_i)}{1 + \exp(\beta_0 + \beta_1 x_i)}$$

We want to test $H_0: \beta_1 = 0$


Beetles-ex cont: - Output from R

> glmfit0biller<-glm(cbind(Dode,Ant-Dode)~Dose,family=binomial)

> summary(glmfit0biller)

Call:

glm(formula = cbind(Dode, Ant - Dode) ~ Dose, family = binomial)

Deviance Residuals:

Min 1Q Median 3Q Max

-1.5941 -0.3944 0.8329 1.2592 1.5940

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) -60.717 5.181 -11.72 <2e-16 ***

Dose 34.270 2.912 11.77 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

Null deviance: 284.202 on 7 degrees of freedom

Residual deviance: 11.232 on 6 degrees of freedom

AIC: 41.43


Beetles-ex cont: Wald test

• $\hat{\beta}_1 = 34.27$

• $se_1 = 2.912$

• $z = \hat{\beta}_1/se_1 = 11.77$

• $z^2 = 138.5$

• $P(Z^2 > 138.5)$ = 1-pchisq(138.5,1) ≈ 0


Beetles-ex cont. Likelihood ratio test

Estimate the two models and extract the log-likelihoods with the logLik function:

> fit0<-glm(cbind(Dode,Ant-Dode)~1,family=binomial)

> logLik(fit0)

'log Lik.' -155.2002 (df=1)

> fit1<-glm(cbind(Dode,Ant-Dode)~Dose,family=binomial)

> logLik(fit1)

'log Lik.' -18.71513 (df=2)

> 2* (logLik(fit1)-logLik(fit0))

[1] 272.9702

attr(,"df")

[1] 2

attr(,"class")

[1] "logLik"

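Note: The test statistic value G = 272.97 is also given as the difference between the "Null Deviance" and the "Residual Deviance" in the R output for the full model (284.202 - 11.232). The df attribute printed above is carried over from logLik(fit1); the test itself has q = 1 degree of freedom.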
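The likelihood ratio test can be completed with a p-value. The data are not shown on the slides; the sketch below assumes they are the classic beetle mortality data of Bliss (1935) as tabulated in standard GLM textbooks, which is an assumption on my part. It computes G from the log-likelihoods and from the deviances, and refers it to a chi-square distribution with q = 1 degree of freedom.

```r
# ASSUMPTION: Bliss (1935) beetle mortality data; the slides do not list the data
beetles <- data.frame(
  Dose = c(1.6907, 1.7242, 1.7552, 1.7842, 1.8113, 1.8369, 1.8610, 1.8839),
  Ant  = c(59, 60, 62, 56, 63, 59, 62, 60),   # number of beetles treated
  Dode = c(6, 13, 18, 28, 52, 53, 61, 60)     # number of dead beetles
)

fit0 <- glm(cbind(Dode, Ant - Dode) ~ 1,    family = binomial, data = beetles)
fit1 <- glm(cbind(Dode, Ant - Dode) ~ Dose, family = binomial, data = beetles)

# as.numeric() drops the logLik attributes, including the inherited df
G <- 2 * (as.numeric(logLik(fit1)) - as.numeric(logLik(fit0)))
q <- attr(logLik(fit1), "df") - attr(logLik(fit0), "df")   # number of restrictions
p_value <- pchisq(G, df = q, lower.tail = FALSE)

# The same statistic from the deviances reported for the full model
G_dev <- fit1$null.deviance - deviance(fit1)

print(c(G = G, G_dev = G_dev, df = q, p.value = p_value))
```

Using lower.tail = FALSE avoids the loss of precision in 1-pchisq(...) when the p-value is extremely small.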
