Cloud-based Facility Management Benchmarking

Sofia Pereira Martins

Thesis to obtain the Master of Science Degree in

Information Systems and Computer Engineering

Supervisor: Prof. Paulo Jorge Fernandes Carreira

Examination Committee

Chairperson: Prof. José Luís Brinquete Borbinha
Supervisor: Prof. Paulo Jorge Fernandes Carreira
Member of the Committee: Prof. Inês Flores-Colen

May 2015


Acknowledgments

First of all, I thank my advisor, Professor Paulo Carreira, who gave me excellent ideas and resources and connected me with the right people. I thank him for his full and expert support during the development of this thesis, which made this work even more interesting and important.

Thank you to Sara Oliveira for all her help on the Facility Management and Benchmarking subjects, and thank you to APFM for helping with the distribution of questionnaires.

A big thank you to Bernardo Simoes, who helped me learn Ruby on Rails from scratch and was patient even when I was being obtuse. Thank you to all my friends for being there in both the fun and the working times, and special thanks to Andreia Santos, who was my great companion throughout the course.

I also thank Guilherme Vale for his help, especially at the end of this thesis.

I am especially thankful to my family, who have supported me through everything all my life. A big special thank you to my brother, Andre Martins, who always guided me and helped me become who I am today.


Resumo

Facilities expenses, such as equipment maintenance or space cleaning, make up a large slice of organizations' base cost. Modern Facility Management uses specific software, such as Computer Aided Facility Management Software, to identify and optimize facilities performance by benchmarking different categories of non-core business indicators.

However, a major problem is that organizations cannot tell whether this software can bring performance optimizations, i.e., it is not clear whether their Facility Management practice is in line with the performance of the best in their industry. Overall, there is a need for a benchmarking solution that brings together the Facility Management indicators of distinct facilities, identifying the contrasts and similarities between them.

This thesis studies the problem of Facilities Management benchmarking (through the existing tools for facilities benchmarking, taking into account both the scientific literature and current industry practice) and proposes a Cloud solution to integrate Key Performance Indicators of distinct facilities. Specifically, this thesis systematizes and prioritizes a list of the most used indicators and then develops a Cloud solution that integrates indicators from distinct facilities, validating the usefulness of that list. The solution is validated through a set of usability and performance tests, with promising results.

Using this solution, each organization will be able to track its ranking relative to other organizations and know whether it can further optimize its Facility Management practice. This is expected to lead to an overall improvement in the operation of buildings.

Keywords: Facilities Management, Key Performance Indicators, Benchmarking, Cloud Computing, Data Analysis, Measurements, Standards


Abstract

Facilities expenditures, such as equipment maintenance or space cleaning, constitute a large slice of organizations' base cost. Modern Facilities Management employs specific software, such as Computer Aided Facility Management Software, to identify and optimize facilities performance by benchmarking different categories of non-core business indicators.

A pervasive problem, however, is that organizations do not know whether they can bring those optimizations to new levels of performance, i.e., they cannot tell whether their Facilities Management practice is in line with the performance of the best in their industry. Overall, there is a need for a benchmarking solution that brings together the Facilities Management indicators of distinct facilities, identifying the contrasts and similarities between them.

This thesis studies the problem of Facilities Management benchmarking (through the existing tools for facilities benchmarking, taking into account both the scientific literature and current industry practice) and proposes a Cloud-based solution to integrate Key Performance Indicators of distinct facilities. Specifically, the thesis systematizes and prioritizes a list of the most commonly used indicators and then develops a Cloud-based solution that integrates indicators from distinct facilities, validating the usefulness of that list.

The solution is validated through a set of usability and performance tests with promising results.

Using this solution, organizations will be able to track their ranking with respect to other organizations and know whether they can further optimize their Facilities Management practice. This is expected to lead to a global improvement in the operation of facilities.

Keywords: Facilities Management, Key Performance Indicator, KPI, Benchmarking, Cloud

Computing, Data Analysis, Measurements, Standards


Contents

Acknowledgments
Resumo
Abstract
List of Tables
List of Figures
Glossary
1 Introduction
1.1 Motivation
1.2 Problem Statement
1.3 Methodology and Contributions
1.4 Document Structure
2 Concepts
2.1 Facilities Management
2.2 FM Software Systems
2.3 Benchmarking
2.4 Key Performance Indicators
2.5 Cloud Computing
2.6 Cloud Computing Concepts
2.7 Cloud Computing Models
2.8 Cloud Computing Benefits
2.9 Cloud Computing for Facility Management (FM)
3 State-of-the-art
3.1 International Standards for FM Benchmarking
3.2 Benchmarking
3.2.1 Benchmarking in Business
3.2.2 Benchmarking in FM
3.3 Existing Software Solutions
3.3.1 ARCHIBUS
3.3.2 PNM Soft Sequence Kinetics
3.3.3 Discussion
3.4 Scientific Literature
3.5 Analysis
3.5.1 Priority Analysis
3.5.2 Normalized FM Indicators
4 Solution Proposal
4.1 Overview
4.2 Requirements
4.2.1 Elicitation Interviews
4.2.2 User Requirements
4.2.3 Functional Requirements and Constraints
4.2.4 Defining a System Domain Model
4.2.5 Creating the interfaces conceptual model
4.3 Solution Architecture
4.3.1 Server Side
4.3.2 Database Layer
4.3.3 Client Interface
4.4 Implementation Process
4.4.1 Languages and Frameworks
4.4.2 Version Control
4.4.3 Test Driven Development
4.4.4 Deployment
4.5 Solution Implementation Issues
4.5.1 Database Schema
4.5.2 VAT ID and ZIP Code Validation
4.5.3 User Authentication
4.5.4 Seed and Fixture for Testing
4.5.5 KPIs Computation
4.5.6 Implementation of Granular Metrics
4.5.7 Excel File Import and Values Verification
4.5.8 Google Places Auto-complete
4.5.9 Metrics List Improvement
4.5.10 Login and Register Modal
5 Evaluation
5.1 Validation of the Indicators
5.2 Usability Tests
5.2.1 Defining Scenarios and Tasks
5.2.2 Usability Tests Results
5.3 Performance Tests
5.3.1 Cache Efficiency Tests
5.4 Discussion
6 Conclusions
6.1 Lessons Learned
6.2 Future Work
Bibliography
A Indicators Tables
A.1 Indicators Table 1
A.2 Indicators Table 2
B Questionnaires
B.1 Routine Cleaning Quality Questionnaire
B.2 Special Cleaning Quality Questionnaire
B.3 Users Questionnaire about KPIs
B.4 Usability Testing: Google Form
B.5 Usability Testing: Scenarios and Tasks
C Prototype
C.1 Measures Screen
C.2 Benchmarking Reports Screen
C.3 User Details Screen
C.4 Home Screen Prototype
C.5 Facility Details Screen
C.6 Sign In Screen


List of Tables

2.1 SMART characteristics for performance indicators.
3.1 List of relevant International Organization for Standardization (ISO) standards for Facilities Management and Maintenance.
3.2 List of European (EN) FM and Maintenance Standards.
3.3 List of distinct FM softwares characteristics.
3.4 List of KPIs covered by the market leading FM softwares.
3.5 Use of the different metrics on UK benchmarking.
3.6 The final list of 23 Key Performance Indicators (KPIs) to support operational requirements of organizations.
3.7 List of KPIs covered by the literature.
3.8 Full list of KPIs covered by literature.
3.9 Final list of Proposed KPIs.
4.1 Functional system requirements.
4.2 System Constraints.
5.1 Usability Testing Tasks.
5.2 Qualitative Testing Questionnaire.
5.3 Usability Testing Users Information.
5.4 Results from performance testing Benchmarking Page.
A.1 Key Performance Indicators for FM.
A.2 List of reports of the ARCHIBUS FM software package.
B.1 Example of Routine Cleaning Quality Questionnaire.
B.2 Example of Special Cleaning Quality Questionnaire.


List of Figures

1.1 Outline of the research methodology followed by this thesis.
2.1 Arrangement of the six classes of software applications for FM.
3.1 Measurement methods organization in categories.
3.2 International Facility Management Association (IFMA) Benchmarking Methodology adapted from Roka-Madarasz.
3.3 Measurement Categories ordered by importance.
3.4 FM KPIs organized according to frequency and importance.
4.1 FM benchmarking system architecture.
4.2 System Domain Model.
4.3 Mockup Figures
4.4 Mockup Figures
4.5 Systems architecture overview.
4.6 Database arrangements overview.
4.7 Top Bar specifics.
4.8 Examples of User details and Facility Details screens rendered on Inner Page.
4.9 (2) Examples of Metrics and Indicators Report screens rendered on Inner Page.
4.10 Database Schema.
4.11 VAT ID Validation Code
4.12 Fixture Code
4.13 Seed Code
4.14 Granular Metric Code
5.1 Results obtained through the KPI questionnaire.
5.2 Results obtained through the Usability Testing.
5.3 Results obtained through the Usability Questionnaire.
B.1 First page of the users questionnaire about KPIs.
B.2 Second page of the users questionnaire about KPIs.
B.3 Google Form from Usability Testing first page.
B.4 Google Form from Usability Testing second page.
B.5 Scenarios and Tasks from Usability Testing.
C.1 Measures prototype Screen.
C.2 Benchmarking Reports prototype Screen.
C.3 User edition details screen.
C.4 Home Screen for users not authenticated.
C.5 Facility Details Screen.
C.6 Sign In Screen.


List of Acronyms

BAS Building Automation Systems.
BCIS Building Cost Information Service.
BIM Building Information Models.
CAD Computer Aided Design.
CAFM Computer Aided Facility Management.
CC Cloud Computing.
CMMS Computerized Maintenance Management Systems.
EMS Energy Management Systems.
ERP Enterprise Resource Planning.
FM Facility Management.
FTE Full Time Equivalent.
GEA Gross External Area.
GIA Gross Internal Area.
IaaS Infrastructure as a Service.
ICS International Classification for Standards.
ICT Information and Communication Technology.
IFMA International Facility Management Association.
IMS Incident Management Systems.
ISO International Organization for Standardization.
IT Information Technology.
IWMS Integrated Workspace Management System.
KPI Key Performance Indicator.
NFA Net Floor Area.
NIA Net Internal Area.
PaaS Platform as a Service.
PI Performance Indicator.
RICS Royal Institution of Chartered Surveyors.
SaaS Software as a Service.
TDD Test Driven Development.

Chapter 1

Introduction

Facility Management (FM) is a non-core activity that supports organizations in the pursuit of their objectives (core business), and is considered “the practice of coordinating the physical of business administration, architecture, behavior and engineering science” [1].

Over the past three decades, FM has developed significantly owing to a number of factors, ranging from rising construction costs to higher performance requirements from users and owners, as well as greater recognition of the effect of space on productivity [2]. Consequently, FM has matured into a professional activity; together with related activities, it is estimated to represent more than 5% of the GDP of both the European countries and the US [3].

Since facilities-related expenditure is a large slice of organizations' base cost, FM has been equipping itself with appropriate tools [4, 5], such as Computer Aided Facility Management (CAFM), Building Information Models (BIM), Computerized Maintenance Management Systems (CMMS), Computer Aided Design (CAD), Building Automation Systems (BAS), Energy Management Systems (EMS), Enterprise Resource Planning (ERP), and Integrated Workspace Management Systems (IWMS).

These software applications rely on large amounts of integrated data, from which measures and indicators can be extracted and, with them, Key Performance Indicators (KPIs) calculated. KPIs give important insight into the functioning of FM activities (keeping track of KPIs is one aspect of quality control [6]) and also enable organizations to compare the performance of their facilities and services with one another. This matters because organizations must perform better than their competitors while operating at lower costs.
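Because KPIs are calculated from the measures these systems capture, a short sketch may make that step concrete. The Ruby below (the language of the thesis prototype) is a minimal illustration, assuming a facility reports its cleaning and energy costs, its Net Floor Area (NFA) in m² and its Full Time Equivalent (FTE) headcount. The measure names and the chosen ratios are hypothetical examples, not the KPI list the thesis proposes later.

```ruby
# Minimal sketch: KPIs derived as ratios of raw facility measures.
# The measure names (cleaning_cost, energy_cost, nfa_m2, fte) are hypothetical.

# Divides two measures. Returns nil when a value is missing or the denominator
# is zero, so that incomplete data never produces a misleading KPI.
def ratio_kpi(numerator, denominator)
  return nil if numerator.nil? || denominator.nil? || denominator.zero?

  numerator.to_f / denominator
end

# Builds area- and occupancy-normalized KPIs from one facility's measures.
def compute_kpis(measures)
  {
    cleaning_cost_per_m2: ratio_kpi(measures[:cleaning_cost], measures[:nfa_m2]),
    energy_cost_per_m2: ratio_kpi(measures[:energy_cost], measures[:nfa_m2]),
    nfa_m2_per_fte: ratio_kpi(measures[:nfa_m2], measures[:fte])
  }
end

facility = { cleaning_cost: 120_000, energy_cost: 200_000, nfa_m2: 8_000, fte: 400 }
kpis = compute_kpis(facility)
puts kpis[:cleaning_cost_per_m2] # prints 15.0
puts kpis[:nfa_m2_per_fte]       # prints 20.0
```

Expressing costs per square metre or per FTE is what makes facilities of different sizes comparable; raw totals would simply favor smaller buildings.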

According to the FM European norm EN 15221-7, benchmarking can be defined as “part of a process which aims to establish the scope for, and benefits of, potential improvements in an organization through systematic comparison of its performance with that of one or more other organizations” [7]. It has also been defined as the search for “industry best practices that lead to superior performance” [8], and it has a key role to play in FM [9]. Benchmarking can be used to compare performance either between distinct organizations or between facilities within the same organization. A facility can also be compared with itself at different points in time. For this reason, many organizations benchmark regularly to reassess their overall costs [9].
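The two uses of benchmarking described above, comparison against other organizations and comparison of a facility with itself over time, reduce to simple arithmetic once a KPI value is available. The sketch below assumes a single numeric KPI and a caller-supplied flag stating whether lower or higher values are better; the function names are illustrative and are not taken from the thesis implementation.

```ruby
# Minimal sketch: two benchmarking comparisons for a single KPI.
# Whether lower or higher is better depends on the KPI (lower for a cost per m2,
# higher for a satisfaction score), so the caller states it explicitly.

# External benchmarking: percentage gap between a facility's value and the best
# value among its peers (the facility included). 0.0 means best-in-class.
def gap_to_best(value, peer_values, lower_is_better: true)
  all = peer_values + [value]
  best = lower_is_better ? all.min : all.max
  return nil if best.zero?

  (value - best).abs / best.to_f * 100.0
end

# Internal benchmarking over time: percentage change between two periods.
def change_over_time(previous, current)
  return nil if previous.zero?

  (current - previous) / previous.to_f * 100.0
end

puts gap_to_best(15.0, [12.0, 18.0, 20.0]) # prints 25.0 (best peer value is 12.0)
puts change_over_time(16.0, 15.0)          # prints -6.25
```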


Benchmarking provides important advantages to FM, such as justifying energy consumption and costs; identifying weaknesses and threats, strengths and opportunities, and best practices; and adding value to facilities by integrating them into CAFM systems and supporting maintenance management. Although some organizations have their own benchmarking software, these tools are not compatible across organizations, and there is no centralized mechanism to integrate their data. Therefore, benchmarking between FM organizations is not yet possible.

Accurate benchmarking requires comparing appropriate measures and indicators. Standards are therefore important because they create specifications that normalize how companies manage their FM data, making analysis results compatible across organizations and ensuring that different organizations do not interpret a given measurement differently.
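To illustrate why an agreed unit matters, the sketch below normalizes floor areas reported either in square metres or in square feet before a cost-per-area KPI is computed. The unit symbols and the choice of square metres as the reference unit are assumptions made for the example; the conversion factor itself is exact (1 ft = 0.3048 m).

```ruby
# Minimal sketch: normalize reported floor areas to square metres before they
# enter a KPI. The unit symbols :m2 and :ft2 are hypothetical conventions.

SQ_FT_IN_M2 = 0.09290304 # exact, since 1 ft = 0.3048 m

# Converts an area to square metres; unknown units are rejected, not guessed.
def area_in_m2(value, unit)
  case unit
  when :m2 then value.to_f
  when :ft2 then value * SQ_FT_IN_M2
  else raise ArgumentError, "unknown area unit: #{unit.inspect}"
  end
end

# Two organizations report the same cleaning cost for about the same floor
# area, one in m2 and one in ft2. Only after normalization do their
# cost-per-m2 values agree (both are about 15 per m2).
org_a = 120_000 / area_in_m2(8_000, :m2)
org_b = 120_000 / area_in_m2(86_111, :ft2)
puts format("%.2f vs %.2f per m2", org_a, org_b)
```

Rejecting an unknown unit, rather than silently treating it as square metres, is exactly the kind of misinterpretation a shared specification is meant to prevent.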

Furthermore, as in other management disciplines, it is still not clear which KPIs are the most important to use in FM. Moreover, the success of a benchmarking strategy depends on a number of organizational factors, such as management support, the personality of the managers, the organizational structure, and the FM contract [9].

Up to now, facilities of distinct organizations cannot be compared, because data is represented differently across organizations and each organization uses its own set of indicators.

Another recent trend is cloud solutions [10], which are being employed successfully in sectors such as energy, maintenance, and space [11, 12, 13]; even churches now use them [14]. Cloud solutions present several benefits, such as savings in Information Technology (IT) costs and maintenance (no equipment or software needs to be installed, or maintained by the organization's IT department), strong integration capabilities, short time-to-benefit, and scalable on-demand computation that keeps up with customer needs [15, 16]. In benchmarking specifically, cloud applications make it possible to integrate data from web data sources and to process it so that the results are accessible to everyone, anywhere [17].

A benchmarking application that lets organizations submit facility-related information in a standardized format, to be processed and presented graphically, is therefore a valuable addition: by providing interfaces that communicate with the software that captures the measurements, it allows the benchmarking results of every organization to be compared with one another. This makes it possible to rank organizations, which would generate healthy competition and spur the improvement of each organization's FM.
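A ranking of this kind can be computed without disclosing who the other participants are. The following is a minimal sketch, assuming each organization submits one value per KPI. The `Submission` structure, the competition-style handling of ties, and the use of the mean position as a global score are hypothetical design choices, not the method implemented in the thesis.

```ruby
# Minimal sketch: anonymous ranking on KPIs. Organization ids stay on the
# server; a caller only learns its own position and the number of participants.
Submission = Struct.new(:org_id, :value)

# Competition ranking: position = 1 + number of strictly better submissions,
# so organizations with equal values share a position.
def rank_of(org_id, submissions, lower_is_better: true)
  own = submissions.find { |s| s.org_id == org_id }
  return nil unless own

  better = submissions.count do |s|
    lower_is_better ? s.value < own.value : s.value > own.value
  end
  { position: better + 1, participants: submissions.size }
end

# Hypothetical global score: mean position over the KPIs an organization
# reported. Each element of kpis is a [submissions, lower_is_better] pair.
def mean_position(org_id, kpis)
  positions = kpis.map do |subs, lower|
    result = rank_of(org_id, subs, lower_is_better: lower)
    result && result[:position]
  end.compact
  return nil if positions.empty?

  positions.sum.to_f / positions.size
end

cost = [Submission.new("A", 15.0), Submission.new("B", 12.0), Submission.new("C", 20.0)]
satisfaction = [Submission.new("A", 4.6), Submission.new("B", 3.9), Submission.new("C", 4.5)]
puts rank_of("A", cost)[:position]                             # prints 2
puts mean_position("A", [[cost, true], [satisfaction, false]]) # prints 1.5
```

Returning only a position and the number of participants, rather than the sorted list of submissions, keeps competitors anonymous while still telling each organization where it stands, both per indicator and overall.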

The central motivation for this work is thus to study the migration of facilities benchmarking to the cloud using current computing technologies, and to design a solution in which a cloud application receives the relevant data from multiple sources. This data corresponds to the various metrics needed to calculate a set of KPIs, identified through an analysis of related work. Based on these KPIs, the solution then performs a benchmark comparison between distinct organizations in the industry.

This thesis analyzes the literature and standard benchmarking surveys to understand which indicators are most commonly used in FM. These indicators were validated against scientific studies, existing standards, and interviews with FM experts, a process that also helped determine which features the solution should implement. The interface mockups of a Cloud-based solution for FM benchmarking were validated by FM and design experts. The solution was implemented in Ruby on Rails and evaluated in terms of usability and performance.

1.1 Motivation

By using a Cloud solution that aggregates benchmarking information from distinct facilities and ranks them according to their performance, facility managers will gain deeper insight into their own FM areas. The following two scenarios illustrate this point.

Scenario 1 Consider an organization that has practiced FM and benchmarking for some time. This organization decides to use the application proposed in this document and, through it, finds that its ranking is well below what it expected. Seeing its ranking, and now perceiving its room for improvement, the organization becomes motivated to improve both globally and at the level of individual indicators.

Scenario 2 Consider two distinct organizations that use the cloud benchmarking application presented in this document. The first organization has been at the top of the ranking for some time. Suppose that the second organization takes its place and that the first organization wants to regain its position. This creates healthy competition among participants (who do not know each other's identity), leading to a scenario of dynamic improvement.

Some existing solutions, detailed in Section 3.3 of Chapter 3, address facility management and facility benchmarking. However, today's solutions are difficult to use, their information is difficult to read and understand, and none of them can show an organization its market position relative to its competitors.

1.2 Problem Statement

As noted above, there is still no agreement about which KPIs should apply in each sector. According to Hinks and McNay [18], the lack of generalized data sets and of industry-wide sets of KPIs results in poor comparability of performance metrics across organizations and industries. Furthermore, there is still a lack of FM solutions that integrate data from different organizations in a way that benefits them. Organizations continue to use distinct software to support their FM and KPI gathering, which hinders the aggregation and analysis of data.

Our hypothesis is that a cloud-based, vendor-independent FM benchmarking solution will enable organizations to know their position and to compare the performance of distinct facilities (in the case of facilities managed by the same entity) through a set of metrics provided by the facility managers, while at the same time engaging those managers in performance improvement behaviors.

This thesis aims to develop a platform for comparing KPIs between organizations. Our solution is a vendor-independent FM benchmarking system that uses international standard indicators to compare distinct organizations and enables them to improve themselves through this comparison.

1.3 Methodology and Contributions

The methodology starts with an analysis of the literature and standard benchmarking surveys, followed by a systematization of the most commonly used indicators in FM. These indicators are then prioritized to identify the most relevant ones. The prioritization and validation draw on scientific studies, existing FM standards, and interviews with FM experts, analyzing the most important indicators at both a theoretical and a practical level and determining which features the proposed solution should implement.
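The thesis organizes FM KPIs according to frequency and importance (Figure 3.4), and its actual procedure is given in Chapter 3. Purely as an illustration of that idea, the sketch below counts how many reviewed sources use each KPI, combines the count with a hypothetical mean expert rating, and places each KPI in one of four groups. The thresholds, the 1 to 5 rating scale, and the KPI names are assumptions.

```ruby
# Illustrative sketch: prioritize candidate KPIs by frequency in the reviewed
# sources and by expert importance. Thresholds, scale and names are hypothetical.

# sources: one array of KPI names per reviewed source (paper, standard, survey).
# importance: KPI name => mean expert rating.
def prioritize(sources, importance, freq_threshold:, importance_threshold:)
  freq = Hash.new(0)
  sources.each { |kpis| kpis.uniq.each { |k| freq[k] += 1 } } # count each source once

  rows = freq.map do |kpi, count|
    imp = importance.fetch(kpi, 0.0)
    frequent = count >= freq_threshold
    important = imp >= importance_threshold
    quadrant =
      if frequent && important then :high_priority
      elsif frequent then :frequent_only
      elsif important then :important_only
      else :low_priority
      end
    { kpi: kpi, frequency: count, importance: imp, quadrant: quadrant }
  end
  # Most frequent first; ties broken by higher importance.
  rows.sort_by { |r| [-r[:frequency], -r[:importance]] }
end

sources = [
  %w[cleaning_cost_per_m2 energy_cost_per_m2 nfa_per_fte],
  %w[cleaning_cost_per_m2 energy_cost_per_m2],
  %w[cleaning_cost_per_m2 maintenance_cost_per_m2]
]
importance = { "cleaning_cost_per_m2" => 4.5, "energy_cost_per_m2" => 4.0,
               "nfa_per_fte" => 2.0, "maintenance_cost_per_m2" => 4.2 }
prioritize(sources, importance, freq_threshold: 2, importance_threshold: 3.5).each do |r|
  puts "#{r[:kpi]}: #{r[:quadrant]}"
end
```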

Then, a set of mockups of the final solution is developed and validated through interviews with FM benchmarking experts and design experts. Finally, a Cloud-based prototype is implemented and validated through performance tests and usability tests with users.

This process is depicted in Figure 1.1. More specifically, the contributions of this document are as

follows:

• A rigorous comparative study between the different Facilities Management standards;

• An evaluation and comparison of the main FM benchmarks and their corresponding indicators;

• The identification, through interviews with experts, of the main indicators of interest for benchmarking distinct facilities;

• A survey of the main software tools for FM benchmarking;

• The design of the architecture of the Cloud benchmarking application and its implementation;

• An evaluation of the developed application in terms of performance and usability.


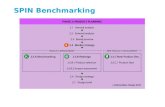

Figure 1.1: Outline of the research methodology followed by this thesis. Each box with a specific color corresponds to a stage of development. The lines indicate the order in which each stage was applied.

1.4 Document Structure

This thesis is organized as follows. Chapter 1 introduces the contents of this document. Chapter 2 explains in detail some important subjects in the area, such as FM, Benchmarking, KPIs and Cloud Computing (CC). Chapter 3 discusses standards organizations and benchmarking standards for FM, gives an overview of existing benchmarking solutions, and presents the scientific literature through a study of several papers related to FM Benchmarking, in order to understand which KPIs are most used and most useful for organizations; it also discusses the normalization and prioritization of KPIs to conclude which indicators should be used by organizations internationally. Chapter 4 describes the process undertaken to achieve the final prototype solution. Chapter 5 describes the set of tests performed to validate the proposed solution. Finally, Chapter 6 summarizes the work performed and points to directions for future work.


Chapter 2

Concepts

This chapter introduces key aspects of FM indicators, benchmarking, and Cloud applications. The discipline of FM and its importance are introduced first, followed by a description of the distinct kinds of software that support FM activities. Software packages gather KPIs that can be used for benchmarking, enabling the comparison of performance aspects such as operating costs, maintenance and cleaning activities, space utilization, energy consumption or administrative costs. Cloud Computing is introduced because it is increasingly being applied to FM.

2.1 Facilities Management

FM can be understood as the result-oriented management of facilities and services that secures best value in the provision of services, making the organization more efficient by creating better conditions at a lower expenditure. Thus, a facility manager deals “with the proper maintenance and management of the facility in a way that expectations of the occupant, owner or portfolio manager are met, and maximum value from the facility is provided and maintained for all stakeholders” [19].

The core purpose of FM is to create and maintain an effective built and equipped environment that supports the successful business operation of an organization, i.e., its core business [20], making the organization more efficient by offering employees better working conditions and rationalizing facility-related expenditures. While core activities are bound to the central business of the organization and its strategy, FM is a non-core activity that supports an organization in the pursuit of its objectives (core business). IFMA describes it as the practice of coordinating the physical workplace with the people and work of the organization, integrating the principles of business administration, architecture, and the behavioral and engineering sciences [1].


2.2 FM Software Systems

FM is supported by specialized software, such as CAFM packages that track space usage, cable pathways, employee locations, security and access control.

CAFM systems are often integrated with CAD, which supports the planning and monitoring of spaces and of the activities in them, and with a database back-end that contains non-graphical data about the spaces. CAFM software also supports managing changes to the space, since changes can be tried and tested on the computer before they are made, which can avoid future problems. CAFM systems can be populated from BIM information containing spatial relationships, geographic information, quantities and component properties [21], and can interface with other systems such as CMMS to manage preventive maintenance activities. They can also help decrease the time from task request to task completion, increase the speed and accuracy of information related to each task, and provide improved cost and trend analysis [22].

These systems bring several benefits, such as: i) efficient completion of operational sequences (entry and analysis of data), ii) increased worker productivity (by determining property improvements), iii) potential cost savings (in areas such as cleaning contracts and energy consumption), iv) analysis of cost information, v) support for management decisions, vi) precise valuation of fixed assets, and vii) optimization of space utilization.

Beyond these, there are other kinds of software, for instance Incident Management Systems (IMS), which are used by operators (who register incidents) and technicians (who handle occurrences and close them) to register, centralize and follow the status of each occurrence. These systems can automatically generate a work backlog for each technician, which improves work efficiency. They also provide reporting services, statistics, incident status, etc. IMS can be integrated with CMMS or with the incident management systems of third-party service suppliers to fill in work order requests.
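The automatic per-technician backlog mentioned above can be illustrated with a minimal sketch. It assumes a hypothetical incident record with a technician assignment, a numeric priority (1 = most urgent) and an "open"/"closed" status; real IMS products use their own data models, which are not shown here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Incident:
    incident_id: str
    technician: str  # hypothetical: technician the incident is assigned to
    priority: int    # hypothetical scale: 1 = most urgent
    status: str      # hypothetical values: "open" or "closed"


def build_backlogs(incidents):
    """Group open incidents by technician, most urgent first."""
    backlogs = defaultdict(list)
    for incident in incidents:
        if incident.status == "open":
            backlogs[incident.technician].append(incident)
    for queue in backlogs.values():
        # Sort by priority, then by id so that ties are ordered deterministically.
        queue.sort(key=lambda inc: (inc.priority, inc.incident_id))
    return dict(backlogs)
```

Closed incidents are simply left out, so each technician's queue only lists work that still has to be done.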

A CMMS creates maintenance plans and associates them with each piece of equipment of an organization, such as air conditioning, escalators, furniture, etc.; these plans can be grouped by type of device. Some CMMS also assist in contract management and equipment management. Some CMMS can be integrated with BAS to obtain equipment usage metrics; however, some information cannot be retrieved directly from the BAS and must instead be obtained using data-gathering devices.
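Maintenance plans grouped by device type can be pictured as a table of preventive maintenance intervals. The sketch below is a hypothetical example: the device types, interval values and function names are assumptions, not taken from any specific CMMS. It derives each item's next due date from its last service date.

```python
from datetime import date, timedelta

# Hypothetical preventive maintenance intervals per device type, in days.
PLAN_INTERVALS = {
    "air_conditioning": 90,
    "escalator": 30,
    "furniture": 365,
}


def next_due_dates(equipment, plans=PLAN_INTERVALS):
    """Map each equipment id to its next due date.

    equipment: iterable of (equipment_id, device_type, last_service_date).
    """
    due = {}
    for equipment_id, device_type, last_service in equipment:
        due[equipment_id] = last_service + timedelta(days=plans[device_type])
    return due


def overdue(equipment, today, plans=PLAN_INTERVALS):
    """Sorted ids of equipment whose next due date is before `today`."""
    return sorted(eid for eid, due in next_due_dates(equipment, plans).items()
                  if due < today)
```

Keeping the interval per device type rather than per item reflects the grouping described above: changing one plan updates every piece of equipment of that type.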

A BAS manages every environmental aspect of a building, automatically controlling the devices installed in it. Facility managers can remotely command and supervise automation sub-systems in real time. A BAS keeps a history log of the status of each device and defines alarm conditions that can be used to detect malfunction symptoms or device failures, and it can be integrated with CMMS or CAFM tools to report space usage metrics obtained from occupancy sensors.
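One possible form of alarm condition is sketched below. It assumes, hypothetically, that each device has an acceptable value range and that an alarm is raised only when the logged value stays outside that range for a number of consecutive samples, which filters out isolated spikes. Real BAS products define alarm conditions in their own configuration languages.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    device_id: str
    timestamp: int  # hypothetical: seconds since the start of logging
    value: float    # e.g. supply-air temperature in °C


# Hypothetical alarm conditions: acceptable (low, high) range per device.
ALARM_LIMITS = {"AHU-1": (12.0, 18.0)}


def detect_alarms(history, limits=ALARM_LIMITS, consecutive=3):
    """Return (device_id, timestamp) pairs where a device has been out of
    range for `consecutive` samples in a row (one alarm per episode)."""
    alarms = []
    streak = {}
    for r in sorted(history, key=lambda r: (r.device_id, r.timestamp)):
        low, high = limits[r.device_id]
        if low <= r.value <= high:
            streak[r.device_id] = 0  # back in range: reset the episode
        else:
            streak[r.device_id] = streak.get(r.device_id, 0) + 1
            if streak[r.device_id] == consecutive:
                alarms.append((r.device_id, r.timestamp))
    return alarms
```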

An EMS gathers energy consumption information, such as electric current consumption, from the energy meter devices installed throughout the building. This information can be analyzed in detail and enables facility managers to analyze consumption variation across comparable periods of time. BAS and EMS can be connected to create consumption profiles and to determine the relative contribution of devices or groups of devices to overall energy consumption.
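The two analyses mentioned above, relative contribution and variation between comparable periods, reduce to simple arithmetic. The following is a minimal sketch. It assumes meter readings already aggregated to kWh per device and a hypothetical device-to-group mapping such as one exported from a BAS inventory.

```python
from collections import defaultdict


def contribution_by_group(meter_readings, device_groups):
    """Share (0..1) of total energy consumed by each device group.

    meter_readings: iterable of (device_id, kwh)
    device_groups:  {device_id: group name}, e.g. from a BAS device inventory
    """
    totals = defaultdict(float)
    for device_id, kwh in meter_readings:
        totals[device_groups[device_id]] += kwh
    overall = sum(totals.values())
    if overall == 0:
        return {group: 0.0 for group in totals}
    return {group: kwh / overall for group, kwh in totals.items()}


def variation(current_kwh, reference_kwh):
    """Relative change between two comparable periods
    (e.g. the same month in consecutive years)."""
    return (current_kwh - reference_kwh) / reference_kwh
```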


Figure 2.1: Arrangement of the six classes of software applications for FM. The interoperability between the classes is represented by a line between them. IWMS is a group composed of three classes: CMMS, ERP and CAFM.

There are also ERP systems. Although they are not considered FM applications, they are very important in the FM environment, where suppliers, logistics, accounting, billing and orders are managed.

IWMS suites integrate the ERP functionalities relevant to FM with CAFM and CMMS in a single application. The arrangement of all the software classes referred to above can be seen in Figure 2.1.

Most of these systems are web-based today, which makes it easier to enter facility data that can then be analyzed and consulted anywhere. The analysis and benchmarking of these data yield a set of KPIs, covering a core of skills aligned with business objectives, that serve to measure current levels of performance and achievement.

2.3 Benchmarking

Camp defines benchmarking as the search for “industry best practices that lead to superior performance” [8]. In facilities, it is an important process for comparing performance aspects such as operating costs, maintenance and cleaning activities, space utilization, energy consumption or administrative costs. It uses previously established metrics, identifies differences and alternative approaches, and assesses opportunities for improvement and change.


Benchmarking of facilities brings many advantages for organizations, such as: increasing awareness of the need for change and restructuring; being an improvement in and of itself, since it forces organizations to examine their present processes; and avoiding wasted time and resources when someone else has already done it better, faster and cheaper. However, the indicators used must all be SMART [23], as presented in Table 2.1. Overall, benchmarking gives organizations instruments to know how they are performing both internally and externally (in relation to customers).

Historically, FM started with a focus on performance measurement, which had three broad purposes: ensuring the achievement of goals and objectives; evaluating, controlling and improving procedures and processes; and comparing and assessing the performance of different organizations, teams and individuals. Over the years, however, these purposes shifted towards quality and customer satisfaction [5]. This shift translated into a set of requirements such as: knowing what clients require of the organization's processes; involving key stakeholders (users, investors, regulators, product manufacturers, designers) in the benchmarking processes [24]; eliminating resistance to change; and, finally, accepting that results are not always available instantaneously.

Benchmarking is not a simple aggregation of a few indicators; it should also include the meaning and purpose of each indicator. Camp lists four fundamental steps for benchmarking [8]:

• Knowing the operation, to evaluate internal operation strengths and weaknesses.

• Knowing the industry leaders or competitors, to know the strengths and weaknesses of the competition.

• Incorporating the best, to emulate the strengths of the leaders in the competition.

• Gaining superiority, to go beyond the installed best practices and be the best of the best.

Benchmarking makes it possible to compare results between organizations, which can potentially improve FM in each of the organizations considered. However, buildings can easily demand more than their occupants and management are prepared to afford, mainly because they have not defined the level of management they regard as reasonable [25]. It is therefore essential to have specific standards of measurement and metrics to ensure a common understanding of performance and to identify performance gaps [26].
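A performance gap can be quantified in several ways. The sketch below is one possible choice. It assumes a single KPI whose values are already normalized across organizations (for instance, cleaning cost per m²) and uses hypothetical output names. It reports the share of peers a facility outperforms and its gaps to the peer median and to the best-in-class value.

```python
from statistics import median


def benchmark(own_value, peer_values, lower_is_better=True):
    """Position a facility's KPI value against (anonymized) peer values.

    Returns the percentage of peers strictly outperformed and the gaps
    own_value - median and own_value - best. The sign of a gap must be read
    according to lower_is_better (for costs, a negative gap is favourable).
    """
    if not peer_values:
        raise ValueError("at least one peer value is required")
    if lower_is_better:
        outperformed = sum(1 for p in peer_values if own_value < p)
        best = min(peer_values)
    else:
        outperformed = sum(1 for p in peer_values if own_value > p)
        best = max(peer_values)
    return {
        "percent_outperformed": 100.0 * outperformed / len(peer_values),
        "gap_to_median": own_value - median(peer_values),
        "gap_to_best": own_value - best,
    }
```

For example, a facility spending 42 per m² among peers at 35, 40, 45, 50 and 60 outperforms 60% of them, sits 3 below the median and is 7 above the best-in-class value.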

To summarize, a number of issues still have to be addressed in order to achieve appropriate and efficient FM benchmarking, such as: i) the role of standards, ii) performance criteria and iii) verification methods within the overall regulatory system [27].

Moreover, the FM benchmarking process requires a planning phase that decides which data to collect [28].

2.4 Key Performance Indicators

The first step of benchmarking is establishing performance objectives and metrics. Measurements are a direct representation of the scale of the organization and its measurable items (for the organization's internal use), while indicators are quantifiable metrics that reflect the achievement of goals by the organization (for external use). The services and deliverables provided by an organization are measured by Performance Indicators (PIs) [29]. It is also important to distinguish between PIs and KPIs. PIs are collected in many complex systems, such as manufacturing, marketing and sales, among others [6].

Indicators are known not to be perfect and may have problems of definition and interpretation. Nevertheless, they are important because they give insight into how well a system is functioning, which is one aspect of quality control [6]. PIs inform about current performance, while KPIs inform how to increase performance. KPIs are measures that provide essential information about the performance of facility services delivery, and they are established to measure performance and monitor progress over time [30]. Therefore, KPIs represent a set of metrics that focus on the most critical aspects of organizational performance [31].

| Characteristic | Description |
|---|---|
| Specific | Well defined and clearly understood |
| Measurable | There is a well-defined process that enables KPI tracking |
| Attainable | Achievable with the data available |
| Realistic | Can be measured at a reasonable cost |
| Time driven | Corresponds to a time interval |

Table 2.1: SMART characteristics for performance indicators.

KPIs should be distinguished according to the distinct roles in an organization. It is also common for associate directors or heads of advisers to have custom KPIs for their specific responsibilities. Furthermore, there are various generic KPIs, based on business measures, for other professionals and for managerial personnel.

Accordingly, FM departments must have their own KPIs, aligned with the core business KPIs [32]. According to Teicholz [32], the main typical FM KPIs (over a unit of time) are: i) Operational Hours, ii) Response Times, iii) Rework, iv) Value Added, v) Number and performance of suppliers, vi) Employee satisfaction, vii) Innovations (new processes), viii) Customer satisfaction, ix) Plan versus actual on contracts, and x) Number of items on task lists.
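Since these KPIs are measured over a unit of time, computing one amounts to bucketing events by period and aggregating. As a hypothetical illustration of Response Times, the sketch below assumes that response time is the interval between a work request and its first response, and that the unit of time is the calendar month. Both choices, and the input format, are assumptions.

```python
from collections import defaultdict
from datetime import datetime


def monthly_response_times(work_orders):
    """Mean response time, in hours, per (year, month) of the request.

    work_orders: iterable of (requested_at, responded_at) datetime pairs.
    """
    buckets = defaultdict(list)
    for requested_at, responded_at in work_orders:
        hours = (responded_at - requested_at).total_seconds() / 3600
        buckets[(requested_at.year, requested_at.month)].append(hours)
    return {month: sum(h) / len(h) for month, h in sorted(buckets.items())}
```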

2.5 Cloud Computing

Cloud Computing (CC) is an emerging paradigm that is increasingly being applied in various fields. CC provides an environment that enables resource sharing in terms of scalable infrastructures, middleware and application development platforms, and value-added business applications [33].

2.6 Cloud Computing Concepts

The Cloud is an aggregation of resources such as networks, servers, applications, data storage and services in a single place, which the end user can access and use “with minimal management effort or service provider interaction” [34]. The main goal of CC is to make better use of distributed resources, combining them to achieve higher throughput and to solve large-scale computation problems [35]. The following characteristics contribute to this goal [36]:

On-demand self-service enables the consumer to acquire computing capabilities—such as server

time and network storage—as necessary without involving human interaction with the service

provider.

Broad network access in the sense that capabilities are available over the network and accessed through standard mechanisms that promote heterogeneous use by thin and thick client platforms (e.g., mobile phones, tablets, laptops, and workstations).

Rapid elasticity means that computing capabilities are provisioned and released in order to

scale rapidly outward and inward proportionally with demand. Therefore, the capabilities available

often appear to be unlimited to the user and can be appropriated in any quantity at any time.

Resource pooling means that computing resources are pooled to serve multiple consumers using a multi-tenant model, with different physical and virtual resources dynamically assigned and reassigned according to consumer demand. The customer generally has no control over or knowledge of the exact location of the provided resources, but may be able to specify location at a higher level of abstraction (country, state or datacenter). Examples of resources include storage, processing, memory, and network bandwidth.

Measured service means that resource use is controlled and optimized by metering it according to the type of service, such as storage, processing, bandwidth, and active user accounts. Thus, resource usage can be monitored, controlled, and reported, providing transparency for both the provider and the consumer of the service.
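Rapid elasticity, as described above, can be illustrated with a proportional scaling rule. The minimal sketch below assumes a hypothetical service whose load is expressed in requests per second, where each instance handles a fixed capacity and the provider enforces minimum and maximum instance counts. Real providers expose their own autoscaling services, and none of their APIs are reproduced here. All names and numbers are illustrative.

```python
import math


def instances_needed(demand_rps, capacity_per_instance_rps,
                     min_instances=1, max_instances=20):
    """Number of instances to provision so that capacity tracks demand.

    Scales out when demand grows and back in when it shrinks, clamped to
    hypothetical minimum and maximum limits.
    """
    if capacity_per_instance_rps <= 0:
        raise ValueError("capacity per instance must be positive")
    wanted = math.ceil(demand_rps / capacity_per_instance_rps)
    return max(min_instances, min(max_instances, wanted))


# Hypothetical demand samples (requests per second) over one day.
demand_curve = [40, 250, 900, 3000, 120]
plan = [instances_needed(d, capacity_per_instance_rps=100) for d in demand_curve]
```

Here `plan` rises from 1 to 3, 9 and then 20 instances (the upper limit, although 30 would be needed), and falls back to 2 when demand drops.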

2.7 Cloud Computing Models

Today, several cloud service models are available: Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS) [37]:

Infrastructure as a Service (IaaS): the consumer can acquire processing, storage, networks, and other fundamental computing resources, and is able to deploy and run arbitrary software, which can include operating systems and applications. An example is Amazon EC2, which provides a web service interface to easily request and configure capacity online [38]. Higher-level IaaS offerings provide computational, storage, and network services; an example is Amazon's Dynamo [39].

Platform as a Service (PaaS): the consumer can deploy onto the cloud infrastructure consumer-created or acquired applications built with programming languages, libraries, services, and tools supported by the provider. Examples are Google's App Engine [40] and Heroku [41].

Software as a Service (SaaS): the applications are accessible from various client devices through either a thin client interface, such as a browser, or a program interface. The consumer does not manage or control the underlying cloud infrastructure, including network, servers, operating systems, etc.

The application developers can either use the PaaS layer to develop and run their applications or

directly use the IaaS infrastructure [37].


2.8 Cloud Computing Benefits

CC relieves organizations of the burden of installing software. Infrastructure requirements are low because the service is sold on demand, and the infrastructure can be rented rather than bought [11]. Also, cloud applications are managed and updated by the provider, who takes care of server maintenance and security issues [11]. This removes the burden of managing IT infrastructure and, consequently, lowers costs. Because the cloud enables access to applications from both mobile and desktop devices, personnel can work flexibly anywhere and at any time. Travel-related restrictions, such as different time zones or access to the software, are no longer an issue. Therefore, maintenance of functionality and availability are the main advantages of CC [16].

In short, cloud solutions present several benefits that keep up with customer needs and that have pushed many residential and commercial solutions to the cloud, such as:

• Savings in IT costs and maintenance, because the cloud avoids the overhead costs of acquiring and maintaining hardware, software, and IT staff

• Easy access and up-to-date data, because applications can be easily accessed from anywhere in the world with an Internet connection and a browser, i.e., without having to download or install anything

• Short time-to-benefit, with quick deployment of IT infrastructures and applications. The software deployment times and resource needs associated with rolling out end-user cloud solutions are significantly lower than with on-premise solutions

• Improved business processes, with better and faster integration of information between different entities and processes

• Scalable computation on demand, to cope with constantly changing environments and usage.

Like any new technology, CC brings some issues that may prove adverse if not addressed. The major concerns about CC are security and privacy, network performance and reliability. However, if the correct service model (IaaS, PaaS, or SaaS) and the right provider are selected, the payback can far outweigh the risks and challenges. The speed of cloud implementation and the ability to scale up or down quickly mean that companies can react much faster to changing requirements.

2.9 Cloud Computing for FM

Today, CC is applied in FM to customize scheduling and reporting, to support health and safety compliance and asset management, and to lower the cost of managing teams [42]. It is also used to book meeting rooms and offices and to handle catering [43]. There is also sector-specific cloud-based software, for example for maintenance management, providing service, asset, and procurement management solutions for facility managers, such as preventive maintenance schedules [44, 45]. Specifically regarding FM benchmarking, CC brings a set of advantages [17] with respect to:


• Comparing information between the system parties

• Comparing the facility with international metrics and standards

• Simplifying data sharing between various stakeholders: without the Cloud, data would be stored on in-house computers and could not easily be shared with others, which makes integration more difficult.

CC is thus already being applied to support FM activities and is likely to bring new features that increase workforce and operational efficiency.


Chapter 3

State-of-the-art

This chapter discusses the benchmarking literature and standards in FM. It also gives an overview of existing software solutions with respect to their benchmarking features. To understand which KPIs are most useful for organizations, the chapter discusses the normalization and prioritization of KPIs and derives a set of indicators to be used by organizations internationally.

3.1 International Standards for FM Benchmarking

ISO is the largest developer of voluntary International Standards, covering all aspects of technology and business. ISO has formed joint committees with other organizations, such as the International Electrotechnical Commission (IEC) and the American Society for Testing and Materials (ASTM), to develop different kinds of standards. The International Classification for Standards (ICS), developed and maintained by ISO, is a structure for catalogues of international, regional and national technical standards and other normative documents. It covers every economic sector and activity in which these technical standards can be used. Its objective is to facilitate the harmonization of information and ordering tools [46].

The need for FM standards has been recognized in multiple sources [47]. In this work, we selected the ISO standards for FM and maintenance that are most important for those working with facilities at various stages of their life cycle. They are shown in Table 3.1, ordered by ICS. Standards with a specifically European scope are listed in Table 3.2.


| ICS group | Standard | Title |
|---|---|---|
| ICS 01.110: Facilities Management | ISO/CD 18480-1 | Part 1: Terms and definitions |
| ICS 01.110: Facilities Management | ISO/CD 18480-2 | Part 2: Guidance on strategic sourcing and the development of agreements |
| ICS 01.110: Document Management | IEC 82045-1:2001 | Part 1: Principles and methods |
| ICS 01.110: Document Management | IEC 82045-2:2004 | Part 2: Metadata elements and information reference model |
| ICS 01.110: Document Management | ISO 82045-5:2005 | Part 5: Application of metadata for the construction and facility management sector |
| ICS 03.100: Risk Management | ISO 31000:2009 | Principles and guidelines |
| ICS 03.100: Risk Management | ISO/TR 31004:2013 | Guidance for the implementation of ISO 31000 |
| ICS 03.100: Risk Management | IEC 31010:2009 | Risk assessment techniques |
| ICS 03.100: Asset Management | ISO 55000:2014 | Overview, principles and terminology |
| ICS 03.100: Asset Management | ISO 55001:2014 | Management systems: Requirements |
| ICS 03.100: Asset Management | ISO 55002:2014 | Management systems: Guidelines for the application of ISO 55001 |
| ICS 03.080: Outsourcing | ISO/DIS 37500 | Guidance on outsourcing |
| ICS 91.010: Building Information Modeling | ISO/TS 12911:2012 | Framework for building information modeling (BIM) guidance |
| ICS 91.010: Building Information Modeling | ISO 29481-1:2010 | Information delivery manual, Part 1: Methodology and format |
| ICS 91.010: Building Information Modeling | ISO/AWI 29481-1 | Information delivery manual, Part 1: Methodology and format |
| ICS 91.010: Building Information Modeling | ISO 29481-2:2012 | Information delivery manual, Part 2: Interaction framework |
| ICS 91.040: Buildings and Building Related Facilities | ISO 11863:2011 | Functional and user requirements and performance: Tools for assessment and comparison |
| ICS 91.040: Buildings and Constructed Assets | ISO 15686-1:2011 | Service life planning, Part 1: General principles and framework |
| ICS 91.040: Buildings and Constructed Assets | ISO 15686-2:2012 | Service life planning, Part 2: Service life prediction procedures |
| ICS 91.040: Buildings and Constructed Assets | ISO 15686-3:2002 | Service life planning, Part 3: Performance audits and reviews |
| ICS 91.040: Buildings and Constructed Assets | ISO 15686-5:2008 | Service life planning, Part 5: Life-cycle costing |
| ICS 91.040: Buildings and Constructed Assets | ISO 15686-7:2006 | Service life planning, Part 7: Performance evaluation for feedback of service life data from practice |
| ICS 91.040: Buildings and Constructed Assets | ISO 15686-8:2008 | Service life planning, Part 8: Reference service life and service-life estimation |
| ICS 91.040: Buildings and Constructed Assets | ISO/TS 15686-9:2008 | Service life planning, Part 9: Guidance on assessment of service-life data |
| ICS 91.040: Buildings and Constructed Assets | ISO 15686-10:2010 | Service life planning, Part 10: When to assess functional performance |
| ICS 91.040: Buildings and Constructed Assets | ISO/DTR 15686-11 | Service life planning, Part 11: Terminology |
| ICS 91.040: Buildings Construction | ISO 15686-4:2014 | Service life planning, Part 4: Service life planning using Building Information Modeling |
| ICS 91.040: Buildings Construction | ISO 6242-1:1992 | Expression of users' requirements, Part 1: Thermal requirements |
| ICS 91.040: Buildings Construction | ISO 6242-2:1992 | Expression of users' requirements, Part 2: Air purity requirements |
| ICS 91.040: Buildings Construction | ISO 6242-3:1992 | Expression of users' requirements, Part 3: Acoustical requirements |
| ICS 91.040: Performance Standards in Building | ISO 6240:1980 | Contents and presentation |
| ICS 91.040: Performance Standards in Building | ISO 6241:1984 | Principles for their preparation and factors to be considered |
| ICS 91.040: Performance Standards in Building | ISO 7162:1992 | Contents and format of standards for evaluation of performance |
| ICS 91.040: Performance Standards in Building | ISO 9699:1994 | Checklist for briefing: Contents of brief for building design |
| ICS 91.040: Performance Standards in Building | ISO 9836:2011 | Definition and calculation of area and space indicators |

Table 3.1: List of relevant ISO standards for Facilities Management and Maintenance applicable to facilities at different stages of their life cycle, organized by ICS.

| Area | Code | Title |
|---|---|---|
| Facilities Management | EN 15221-1:2006 | Part 1: Terms and definitions |
| Facilities Management | EN 15221-2:2006 | Part 2: Guidance on how to prepare facility management agreements |
| Facilities Management | EN 15221-3:2011 | Part 3: Guidance on quality in facility management |
| Facilities Management | EN 15221-4:2011 | Part 4: Taxonomy, classification and structures in facility management |
| Facilities Management | EN 15221-5:2011 | Part 5: Guidance on facility management processes |
| Facilities Management | EN 15221-6:2011 | Part 6: Area and space measurement in facility management |
| Facilities Management | EN 15221-7:2012 | Part 7: Guidelines for performance benchmarking |
| Maintenance Management | NP EN 13269:2007 | Instructions for maintenance contract preparation |
| Maintenance Management | NP EN 13460:2009 | Maintenance documentation |
| Maintenance Management | NP EN 15341:2009 | Maintenance KPI |
| Maintenance Management | NP 4483:2009 | Guide for maintenance management system implementation |
| Maintenance Management | NP 4492:2010 | Requirements for maintenance services |
| Maintenance Management | EN 13306:2010 | Maintenance terminology |
| Maintenance Management | EN 15331:2011 | Criteria for design, management and control of maintenance services for buildings |
| Maintenance Management | CEN/TR 15628:2007 | Qualification of maintenance personnel |
| Maintenance Management | EN 13269:2006 | Guideline on preparation of maintenance contracts |

Table 3.2: List of European (EN) FM and Maintenance Standards that apply to facilities in various stages of their life cycle.


3.2 Benchmarking

Benchmarking was introduced in general terms in Section 2.3 of Chapter 2; however, a more specific characterization is needed to understand the importance of benchmarking in both business and Facility Management.

3.2.1 Benchmarking in Business

Benchmarking is the process that determines who is the best. In business, this is important for understanding who has the best sales organization, how to quantify a standard, or who has the lowest cleaning expenses [48].

Moreover, benchmarking identifies what other businesses do to increase profit and productivity, so that those methods can subsequently be adapted to one's own organization to make it more competitive [49]. More specifically, it is important to understand how the winner achieved the best results and what should be done to get there [48].

3.2.2 Benchmarking in FM

The importance of standards resides in the creation of specifications that normalize how an activity is performed. In the case of benchmarking, standardizing how companies evaluate their FM data enables compatibility between organizations, so that a given measurement is not interpreted differently by different organizations.

Comparing results between organizations then becomes possible, which enables each organization to improve its facilities management. It is therefore essential to have specific standards of measurement and metrics to ensure a common understanding of performance and to identify performance gaps [26].

Many FM software packages tend to use ISO standards, such as those presented in Tables 3.1 and 3.2, and the measurement practices of the Royal Institution of Chartered Surveyors (RICS).

Moreover, we concluded that no cloud-based FM benchmarking system spanning several organizations and facilities exists yet in Portugal. Such a system could have a significant impact on the country's economy, since FM represents about 5% of global Gross Domestic Product [50, 51].

RICS and BCIS Space Measurement Normalization

Collecting and analyzing concrete and reliable space measurements is of utmost importance for guaranteeing the quality of FM performance indicators. “Inaccurate performance prediction may lead to buildings that behave worse than expected” [52]. Many standards specify how to perform measurements so that different organizations perform them in the same way. However, reliable measurements must be performed by accredited specialists, such as members of RICS.

RICS has a Code of Measuring Practice that deals with measurement practice, including valuation techniques (such as the zoning of shops) and special uses. It specifies measurements for i) Gross External Area (GEA): the area of a building measured externally at each floor level; ii) Gross Internal Area (GIA): the area of a building measured to the internal face of the perimeter walls at each floor level; and iii) Net Internal Area (NIA): the usable area within the perimeter walls at each floor level [53]. RICS provides precise definitions that permit the accurate measurement of buildings and land and the calculation of sizes (areas and volumes), as presented in the RICS Code of Measuring Practice [53].

[Figure 3.1 diagram: area categories Level Area (LA), Gross Floor Area (GFA), Internal Floor Area (IFA), Net Floor Area (NFA), Net Room Area (NRA), Technical Area (TA), Circulation Area (CA), Amenity Area (AA), Primary Area (PA), Non-Functional Level Area (NFLA), Exterior Construction Area (ECA), Interior Construction Area (ICA) and Partition Wall Area (PWA), with restricted and unrestricted subdivisions of the primary, amenity, circulation and technical areas.]

Figure 3.1: Measurement methods organized in categories. The different categories into which methods and units of measurement are organized. Rentable Floor Area (RFA) = Net Floor Area (NFA) [54].

Part of RICS, the Building Cost Information Service (BCIS) provides built environment cost information and is the basis of early cost advice in the construction industry, offering services that cover occupancy costs, construction duration, repair costs and construction inflation, among others.

The standard EN15221-6 also regulates area and space measurement. It is a common basis for i) planning and design, ii) area and space measurement, iii) financial assessment, and iv) benchmarking tools, applicable to existing buildings, whether owned or leased, as well as to buildings in the planning or development stage [54]. It includes concepts such as rentable, lettable, leasable, equivalent rent and corresponding terms. Methods and units of measurement are explained and illustrated per category, and the distinct categories can be seen in Figure 3.1.
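The way the main area categories of Figure 3.1 nest inside one another can be made concrete with a small calculation. The sketch below assumes the usual EN 15221-6 decomposition (NFA = PA + AA + CA + TA, IFA = NFA + ICA, GFA = IFA + ECA). These identities, the class `FloorAreas` and the function `derive_areas` are our own illustrative assumptions and are not quoted from the standard. Following the caption of Figure 3.1, Rentable Floor Area is taken to be equal to NFA.

```python
from dataclasses import dataclass


@dataclass
class FloorAreas:
    """Measured component areas of one building, in m² (hypothetical input)."""
    primary: float                # Primary Area (PA)
    amenity: float                # Amenity Area (AA)
    circulation: float            # Circulation Area (CA)
    technical: float              # Technical Area (TA)
    interior_construction: float  # Interior Construction Area (ICA)
    exterior_construction: float  # Exterior Construction Area (ECA)


def derive_areas(a: FloorAreas) -> dict[str, float]:
    """Roll component areas up into NFA, IFA, GFA and RFA.

    Assumed identities (our reading of EN 15221-6, not quoted from it):
      NFA = PA + AA + CA + TA
      IFA = NFA + ICA
      GFA = IFA + ECA
      RFA = NFA  (as stated in the caption of Figure 3.1)
    """
    nfa = a.primary + a.amenity + a.circulation + a.technical
    ifa = nfa + a.interior_construction
    gfa = ifa + a.exterior_construction
    return {"NFA": nfa, "IFA": ifa, "GFA": gfa, "RFA": nfa}


# Hypothetical building.
areas = FloorAreas(primary=600.0, amenity=80.0, circulation=150.0,
                   technical=40.0, interior_construction=50.0,
                   exterior_construction=80.0)
print(derive_areas(areas))
```

Keeping each measured component separate means that every derived area can be recomputed and audited, which matters when the figures are later used for benchmarking ratios such as cost per m².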

Today, the focus is on delivering buildings to a known cost and on being able to track the cost reductions that result from improvements in procurement [55]. This requires information provided by BCIS, such as the cost per m² of Gross Internal Floor Area for buildings and elements.
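As a simple illustration of the cost-per-area metric mentioned above, the sketch below divides a building's total cost by its Gross Internal Floor Area. The function name and the building figures are hypothetical. BCIS publishes its own datasets and rules, and these are not reproduced here.

```python
def cost_per_m2_gia(total_cost: float, gia_m2: float) -> float:
    """Cost per m² of Gross Internal Floor Area (GIA)."""
    if gia_m2 <= 0:
        raise ValueError("GIA must be a positive area in m²")
    return total_cost / gia_m2


# Hypothetical buildings: (total cost in currency units, GIA in m²).
buildings = {
    "Building A": (1_250_000.0, 2_500.0),
    "Building B": (900_000.0, 1_500.0),
}

for name, (cost, gia) in buildings.items():
    print(f"{name}: {cost_per_m2_gia(cost, gia):.2f} per m² GIA")
```

Normalizing by area is what makes buildings of different sizes comparable. Building B costs less in absolute terms but more per m² of GIA.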

The BCIS Elemental Standard Form of Cost Analysis [55] provides built environment cost information, such as occupancy costs, construction duration, repair costs and construction inflation.

As stated in the BCIS Elemental Standard Form of Cost Analysis, there must be detailed information documents about the projects, buildings, procurement, costs, risks and design criteria. For costs, Total Costs should be provided for each element and sub-element, shown separately when required and for different forms of construction.
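The requirement that Total Costs be given for each element and sub-element suggests a simple hierarchical record. In the sketch below, an element's total is the sum of its sub-element totals, or its own cost when it has no sub-elements. The class `CostElement` and the element names are hypothetical and do not represent the BCIS schema.

```python
from dataclasses import dataclass, field


@dataclass
class CostElement:
    """One element or sub-element of a cost analysis (hypothetical structure)."""
    name: str
    own_cost: float = 0.0  # used only when there are no sub-elements
    children: list["CostElement"] = field(default_factory=list)

    def total(self) -> float:
        """Total Cost: sum of sub-element totals, or own cost for a leaf."""
        if self.children:
            return sum(child.total() for child in self.children)
        return self.own_cost

    def report(self, depth: int = 0) -> list[str]:
        """One line per element and sub-element, so each total is shown separately."""
        lines = [f"{'  ' * depth}{self.name}: {self.total():,.2f}"]
        for child in self.children:
            lines.extend(child.report(depth + 1))
        return lines


services = CostElement("Services", children=[
    CostElement("Heating", 120_000.0),
    CostElement("Electrical installations", 180_000.0),
])
building = CostElement("Building", children=[CostElement("Frame", 400_000.0), services])
print("\n".join(building.report()))
```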


Figure 3.2: IFMA Benchmarking Methodology, adapted from Roka-Madarasz [4]. The first step is to identify the KPI, which is then used to measure facility performance. At this point, three different paths can be taken: Best-in-class Facility Performance, Own Performance, or directly to Compare. After this, the benchmark functions have to be chosen, as well as which companies to benchmark against.

IFMA Benchmarking

IFMA has developed a facility benchmarking methodology suited to benchmarking current FM services, shown in Figure 3.2 and described in the report [4].

To measure facility performance, IFMA has established 9 KPIs that must be easily measurable and must be defined both to monitor the actual process and to control it. These Key Performance Indicators, taken from the report [4], are listed in Table A.1 in the Appendix.
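The comparison step of Figure 3.2 can be sketched as computing the gap between a facility's measured KPI and a reference value. The reference is either the best-in-class value or the facility's own earlier value. The function `relative_gap`, the choice of an annual cleaning cost per m² as the example KPI, and all figures are hypothetical. They are not taken from the nine IFMA KPIs in Table A.1.

```python
def relative_gap(measured: float, reference: float, lower_is_better: bool = True) -> float:
    """Relative gap to a reference value; positive means the facility is worse.

    With lower_is_better=True, measured=25 and reference=20 give 0.25 (25 % worse).
    """
    if reference == 0:
        raise ValueError("reference value must be non-zero")
    gap = (measured - reference) / reference
    return gap if lower_is_better else -gap


# Hypothetical annual cleaning cost per m² for one facility.
measured_now = 25.0
best_in_class = 20.0   # path 1: best-in-class facility performance
own_last_year = 27.5   # path 2: the facility's own previous performance

print(f"vs best in class: {relative_gap(measured_now, best_in_class):+.1%}")
print(f"vs own last year: {relative_gap(measured_now, own_last_year):+.1%}")
```

The `lower_is_better` flag matters because some indicators, such as costs, improve as they decrease. Others, such as satisfaction scores, improve as they increase.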

3.3 Existing Software Solutions

As described in Section 2.1, there are many types of FM software, such as CAFM and IWMS. All known FM solutions, such as Maxpanda [56] or IBM Tririga [57], offer a simpler way to manage facilities. They centralize organizational information and make management more efficient through business analytics and critical alerts, increasing visibility and control.

Some of them, for instance ARCHIBUS [58] or FM:Systems [59], integrate with CAD or BIM models. This integration is very important for visualizing departmental occupation and for other space and occupancy management tasks.

Most of these systems promote their ability to reduce organizational costs, since they can cost-justify real changes to preventive maintenance routines and predict the cost effects of such changes before they are made. Some support multiple users, while others restrict each user to the information relevant to his or her position in the organization.

An FM system can focus on different sectors, such as: i) Capital/Financial, ii) Real Estate/Retail: Construction or Project Management, iii) Space and Workplace, iv) Maintenance, v) Sustainability and Energy, vi) Move, vii) Higher Education and Public. Many existing solutions cover only some of these sectors rather than all of them.

Software Solution | Centralization of Organizations Info | Business Analysis | Increased Visibility and Control | Costs Reduction | CAD/BIM Integration | Cloud Application | Benchmarking
Maxpanda | • | • | • | • | | |
IBM Tririga | • | • | • | • | | |
FM:Systems | • | • | • | • | • | |
Indus System | • | • | • | • | | • |
PNMSoft | • | • | • | • | | • | •
ARCHIBUS | • | • | • | • | • | • | •

Table 3.3: Characteristics of distinct FM software solutions.

For Real Estate, common features include incorporation of current lease accounting standards, tracking of dates and contractual commitments, and management of occupancy and facility costs. For Capital/Financial, features are used to identify funding priorities within capital programs, reduce project schedule overruns, or streamline project cost accounting. In Space and Workplace, it is important to have tools for space use agreements and chargeback to increase departmental accountability for space use. Maintenance requires features to automatically route and manage both incoming and planned maintenance while keeping internal customers up to date on the progress of their work tickets, or to streamline facility maintenance, service management and facility condition assessments. The Sustainability and Energy sector is also very important for defining which projects will achieve the right mix of environmental benefits and cost savings, for reducing energy consumption to meet sustainability goals, and for identifying poorly performing facilities and automating corrective actions.
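As an illustration of identifying poorly performing facilities in the Sustainability and Energy sector, the sketch below computes an energy-use intensity (kWh per m² per year) for each facility. It then flags the facilities that exceed a chosen multiple of the portfolio median. The metric, the 1.2 threshold, the function name and the sample figures are all assumptions. The products discussed here may use different criteria.

```python
from statistics import median


def flag_poor_performers(energy_kwh: dict[str, float], area_m2: dict[str, float],
                         factor: float = 1.2) -> list[str]:
    """Return facilities whose kWh/m² exceeds `factor` times the portfolio median."""
    intensity = {name: energy_kwh[name] / area_m2[name] for name in energy_kwh}
    threshold = factor * median(intensity.values())
    return sorted(name for name, value in intensity.items() if value > threshold)


# Hypothetical annual energy use (kWh) and floor area (m²) per facility.
energy = {"Campus A": 1_500_000.0, "Campus B": 900_000.0, "Campus C": 2_600_000.0}
area = {"Campus A": 10_000.0, "Campus B": 7_500.0, "Campus C": 13_000.0}
print(flag_poor_performers(energy, area))
```

Comparing against the median rather than the mean keeps a single extreme facility from shifting the threshold.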

Solutions such as Indus System [60], Manhattan Mobile Apps [61], PNMSoft [62] or ARCHIBUS offer cloud-based software that lets users access FM systems from anywhere, including from a browser on mobile devices. Indus System lets users store, share and view drawings, space, assets, related costs, leases and contracts simply by accessing it through the browser.

On the other hand, ARCHIBUS and PNMSoft can both display an organization's KPIs through their websites. These packages let users work with their daily management software as usual and then, when necessary, view the results in a graphical web application. However, this solution applies only to facilities that have ARCHIBUS or PNMSoft software installed, and only to comparisons with that facility's previous results. In contrast, with our solution any organization could benefit from these features and from one more: comparison with other organizations in the same sector. Table 3.3 summarizes the characteristics of the different FM software solutions.
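The additional feature proposed here, comparing an organization with others in the same sector, can be sketched as a percentile rank over the peers' values for one indicator. The data layout, the sector labels, the example indicator and the function `sector_percentile` are all hypothetical.

```python
def sector_percentile(org: str, values: dict[str, float], sectors: dict[str, str],
                      lower_is_better: bool = True) -> float:
    """Share (0..100) of same-sector peers, excluding `org`, that `org` outperforms."""
    sector = sectors[org]
    peers = [v for name, v in values.items() if sectors[name] == sector and name != org]
    if not peers:
        raise ValueError(f"no peers in sector {sector!r}")
    mine = values[org]
    better = sum(1 for v in peers if (mine < v if lower_is_better else mine > v))
    return 100.0 * better / len(peers)


# Hypothetical maintenance cost per m² reported by five organizations.
values = {"Org1": 12.0, "Org2": 15.0, "Org3": 9.0, "Org4": 20.0, "Org5": 11.0}
sectors = {"Org1": "education", "Org2": "education", "Org3": "education",
           "Org4": "education", "Org5": "health"}
print(sector_percentile("Org1", values, sectors))
```

Org5 is excluded from Org1's comparison because it belongs to a different sector. This restriction is what separates peer benchmarking from a facility's comparison with its own history.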

Moreover, it is worth noting that cloud-based benchmarking solutions have been used not only in FM but also in other business sectors. For example, eBench [63] is a cloud solution for benchmarking digital marketing indicators across different brands.

3.3.1 ARCHIBUS

ARCHIBUS is a Facilities Management software solution that effectively tracks and analyzes not only facility-related information but also real estate information. ARCHIBUS is an integrated solution for organizations of various sizes and sectors. Here we focus on the ARCHIBUS reports for Educational Institutions (Table A.2 in the Appendix).

The system architecture consists of three main modules. The first, named ARCHIBUS Web Central, provides live enterprise access to facilities data and enables easy maintenance and distribution of facilities information across the entire enterprise [64]. A role-based security service ensures that, when users log on, they can access only the information relevant to their roles in the organization.
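The role-based security service described above can be illustrated with a minimal filter that returns only the records a given role may see. The roles, record fields, domains and the `ROLE_DOMAINS` mapping are hypothetical. They do not reflect the actual ARCHIBUS security model or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    domain: str    # data domain, e.g. "space", "maintenance", "leases" (hypothetical)
    building: str
    summary: str


# Hypothetical mapping from role to the data domains it is allowed to read.
ROLE_DOMAINS = {
    "space_planner": {"space"},
    "maintenance_technician": {"maintenance"},
    "facility_manager": {"space", "maintenance", "leases"},
}


def visible_records(role: str, records: list[Record]) -> list[Record]:
    """Return only the records relevant to the given role (unknown roles see nothing)."""
    allowed = ROLE_DOMAINS.get(role, set())
    return [r for r in records if r.domain in allowed]


records = [
    Record("space", "B1", "Room 1.02 occupancy"),
    Record("maintenance", "B1", "HVAC filter replacement"),
    Record("leases", "B2", "Lease renewal due"),
]
for r in visible_records("maintenance_technician", records):
    print(r.summary)
```

Defaulting unknown roles to an empty set follows a deny-by-default design. A user sees nothing unless a role explicitly grants access.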

ARCHIBUS also has a .NET Windows application, named Smart Client, used by back-office personnel for data entry, data transfer, and importing and exporting data from other systems. Integrated with this module is the Smart Client Extension for AutoCAD or DWG Editor, which is very important for organizations that want to include Computer-Aided Drawing or BIM models.

From a technical standpoint, the ARCHIBUS software architecture consists of a database, which can be MS SQL, Oracle or Sybase. This database communicates with the application servers, which can run on Tomcat, Jetty, WebLogic or WebSphere. The Smart Client applications running on the client companies' computers communicate with the application servers through Web Services, and the Web Central applications communicate with those same servers via HTTP [64].
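The tiered arrangement just described can be summarized as data: a database, application servers, and two kinds of clients that use different protocols. The sketch below checks a hypothetical deployment description against the options listed in the text. The dictionary keys and the `check_deployment` function are our own illustration and are not an ARCHIBUS configuration format.

```python
# Options listed in the text for each tier (names as given in the source).
SUPPORTED_DATABASES = {"MS SQL", "Oracle", "Sybase"}
SUPPORTED_APP_SERVERS = {"Tomcat", "Jetty", "WebLogic", "WebSphere"}
# How each client module reaches the application servers.
CLIENT_PROTOCOLS = {"Smart Client": "Web Services", "Web Central": "HTTP"}


def check_deployment(deployment: dict[str, str]) -> list[str]:
    """Return the problems found in a hypothetical deployment description."""
    problems = []
    if deployment.get("database") not in SUPPORTED_DATABASES:
        problems.append(f"unsupported database: {deployment.get('database')}")
    if deployment.get("app_server") not in SUPPORTED_APP_SERVERS:
        problems.append(f"unsupported application server: {deployment.get('app_server')}")
    return problems


deployment = {"database": "Oracle", "app_server": "Tomcat"}
print(check_deployment(deployment) or "deployment matches the listed options")
for client, protocol in CLIENT_PROTOCOLS.items():
    print(f"{client} -> application servers via {protocol}")
```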

As part of the ARCHIBUS platform, the ARCHIBUS Performance Metrics Framework delivers KPIs and other performance data about real estate, infrastructure and facilities through a detailed graphical view of the data. It thus offers analytical measures and productivity tools that enable decision-makers to align their portfolio with organizational strategy, spotlight underperforming business units or assets, and benchmark organizational progress toward targeted goals.
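To make "benchmark organizational progress toward targeted goals" concrete, the sketch below measures each unit's progress from a baseline toward a target. It then spotlights the units that have covered less than a chosen share of that distance. The formula, the 50 % cut-off, the example indicator and the data are assumptions for illustration. They are not the calculation used by the ARCHIBUS Performance Metrics Framework.

```python
def progress_to_target(baseline: float, current: float, target: float) -> float:
    """Fraction of the way from baseline to target (1.0 means the target is reached)."""
    if target == baseline:
        raise ValueError("target must differ from baseline")
    return (current - baseline) / (target - baseline)


def underperformers(units: dict[str, tuple[float, float, float]],
                    cutoff: float = 0.5) -> list[str]:
    """Units whose progress, given as (baseline, current, target), is below `cutoff`."""
    return sorted(name for name, (b, c, t) in units.items()
                  if progress_to_target(b, c, t) < cutoff)


# Hypothetical space utilization (%) per unit: (baseline, current, target).
units = {
    "Library": (60.0, 72.0, 80.0),
    "Labs": (50.0, 52.0, 70.0),
    "Offices": (70.0, 74.0, 75.0),
}
print(underperformers(units))
```

Because progress is measured relative to each unit's own baseline and target, the same function works whether a KPI should rise (utilization) or fall (costs).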

3.3.2 PNM Soft Sequence Kinetics

PNM Soft Sequence Kinetics is an Intelligent Business Process Management Suite that covers process-optimizing KPIs, dynamic process change, KPI analysis, process operation and tracking, communication with external systems, and mobile and cloud KPIs. The system has four main focus areas: Process Optimizing KPI, Process Operation and Visual Tracking, KPI for Process Administrators, and Mobile Process KPI.

The Process Optimizing KPI area comprises two different processes:

Extra-Process Performance Analytics: enables process performance tracking via runtime dashboards that display KPIs such as process status levels or the average execution time of a process. This helps to understand how successful the process is and to highlight areas that require improvement.

Intra-Process Analytics: aggregates and calculates intra-process data through real-time analytics built into the Business Rule editor, which enables routing according to the results via a simple GUI. It is a form of artificial process intelligence through which the business teaches itself how to perform better over time.
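The Extra-Process Performance Analytics KPIs mentioned above, process status levels and average execution time, can be computed from a log of process instances. The log format, the process types and both functions below are hypothetical and are not the PNM Soft API.

```python
from collections import Counter
from datetime import datetime
from statistics import mean

# Hypothetical process-instance log: (process type, status, start, end or None).
LOG = [
    ("work_order", "completed", datetime(2015, 3, 2, 9, 0), datetime(2015, 3, 2, 13, 0)),
    ("work_order", "completed", datetime(2015, 3, 3, 10, 0), datetime(2015, 3, 3, 12, 0)),
    ("work_order", "running", datetime(2015, 3, 4, 8, 0), None),
    ("move_request", "completed", datetime(2015, 3, 2, 9, 0), datetime(2015, 3, 5, 9, 0)),
]


def status_levels(log, process_type: str) -> Counter:
    """Number of instances of a process type in each status."""
    return Counter(status for ptype, status, _, _ in log if ptype == process_type)


def average_execution_hours(log, process_type: str) -> float:
    """Mean duration in hours of the finished instances of a process type.

    Raises statistics.StatisticsError if no instance of that type has finished.
    """
    durations = [(end - start).total_seconds() / 3600
                 for ptype, _, start, end in log
                 if ptype == process_type and end is not None]
    return mean(durations)


print(status_levels(LOG, "work_order"))
print(average_execution_hours(LOG, "work_order"))
```

Unfinished instances are counted in the status levels but excluded from the average execution time, since their duration is not yet known.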

Process Operation and Visual Tracking is provided by Flowtime, an extension of Microsoft SharePoint with a built-in process operation environment. Flowtime enables collaboration on processes in a familiar interface and includes advanced task management, delegation and KPI monitoring capabilities, with tracking views that show where each process stands.

The KPI for Process Administrators provides important indicators on process performance per type

of process [65].

PNM Soft also offers Mobile Portal, available as an application or as an online service, through which users can access the same features provided by Sequence Kinetics Flowtime. Mobile Portal can be configured by the customer to meet their needs. For its cloud platform, PNM Soft uses Windows Azure.


3.3.3 Discussion

The software solutions described above have important characteristics; however, none of them has all the features at once. We asked the providers of Maxpanda, IBM Tririga, ARCHIBUS, FM:Systems and Indus System to collaborate, in order to understand which indicators each of these solutions focuses on. We sent them an email asking them to fill in a form with distinct indicators previously selected from the scientific literature. However, only two of the providers answered. Table 3.4 shows the results of the questionnaire. We concluded that many Financial indicators are not considered by the providers, while Service Quality, Satisfaction and Spatial indicators are widely applied in the software. These differences are related to the software classification. Software B is a CMMS solution, which is why it focuses on maintenance and cleaning indicators, whereas Software A is a CAFM solution and therefore does not focus on those indicators.