Week 3 - Monday

What did we talk about last time?
- Graphics rendering pipeline
- Rasterizer stage


The BasicEffect class

An effect says how things should be rendered on the screen

We can specify this in detail using shader programs.

The BasicEffect class lets you apply effects without writing a shader program: less flexibility, but quick and easy.

The BasicEffect class has properties for:
- World transform
- View transform
- Projection transform
- Texture to be applied
- Lighting
- Fog

Vertices

Vertices can be stored in many different formats, depending on the data you want to keep:
- Position is pretty standard
- Normals are optional
- Color is optional

We will commonly use the VertexPositionColor type to hold vertices with color

Vertex buffers

The GPU holds vertices in a buffer that can be indexed into.

Because the GPU is special-purpose hardware, that buffer has to be accessed in special ways.

It seems cumbersome, but we will often create an array of vertices, create an appropriately sized vertex buffer, and then store the vertices in the buffer.

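// Three vertices of a triangle, each with a position and a color
VertexPositionColor[] vertices = new VertexPositionColor[3]
{
    new VertexPositionColor(new Vector3(0, 1, 0), Color.Red),
    new VertexPositionColor(new Vector3(+0.5f, 0, 0), Color.Green),
    new VertexPositionColor(new Vector3(-0.5f, 0, 0), Color.Blue)
};

// Create a GPU vertex buffer that holds 3 vertices, then copy the array into it
vertexBuffer = Buffer.New<VertexPositionColor>(GraphicsDevice, 3, BufferFlags.VertexBuffer);
vertexBuffer.SetData<VertexPositionColor>(vertices);

// Describe the vertex format (position + color) so the device knows how to read the buffer
inputLayout = VertexInputLayout.New<VertexPositionColor>(0);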
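Under the hood, a vertex buffer is a contiguous array of fixed-size records, and the input layout tells the GPU where each field sits inside one record. The C++ sketch below models that on the CPU, assuming a VertexPositionColor-style layout of three 32-bit floats for the position followed by a 4-byte RGBA color. `Rgba8`, `PackedVertex`, and `vertexByteOffset` are hypothetical names for illustration, not part of the SharpDX Toolkit or XNA API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical 4-byte color: one byte per channel.
struct Rgba8 {
    std::uint8_t r, g, b, a;
};

// Hypothetical CPU-side mirror of a position + color vertex.
struct PackedVertex {
    float x, y, z;  // position: 3 x 4 bytes = 12 bytes
    Rgba8 color;    // color: 4 bytes, right after the position
};

// Stride: the distance in bytes from one vertex to the next.
constexpr std::size_t kStride = sizeof(PackedVertex);
// Field offsets inside one vertex: this is what an input layout describes.
constexpr std::size_t kPositionOffset = offsetof(PackedVertex, x);
constexpr std::size_t kColorOffset = offsetof(PackedVertex, color);

// Where vertex i starts inside the buffer.
std::size_t vertexByteOffset(std::size_t i) {
    return i * kStride;
}

// The same triangle as the C# example: red top, green right, blue left.
std::vector<PackedVertex> makeTriangle() {
    return {
        {0.0f, 1.0f, 0.0f, {255, 0, 0, 255}},
        {+0.5f, 0.0f, 0.0f, {0, 255, 0, 255}},
        {-0.5f, 0.0f, 0.0f, {0, 0, 255, 255}},
    };
}
```

With this assumed layout the stride is 16 bytes, so vertex 2 starts 32 bytes into the buffer and its color starts 12 bytes after that.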

Drawing a vertex buffer

In order to draw a vertex buffer, you have to:
1. Set the basic effect to have the appropriate transformations
2. Set the vertex buffer on the device as the current one being drawn
3. Set the vertex input layout so that the device knows what's in the vertex buffer
4. Loop over the passes in the basic effect, applying them
5. Draw the appropriate kind of primitives

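// Step 1: give the effect its transformations
basicEffect.World = world;
basicEffect.View = view;
basicEffect.Projection = projection;

// Steps 2 and 3: tell the device which buffer to draw and what its vertices look like
GraphicsDevice.SetVertexBuffer<VertexPositionColor>(vertexBuffer);
GraphicsDevice.SetVertexInputLayout(inputLayout);

// Steps 4 and 5: apply each pass, then draw 3 vertices as a triangle list (one triangle)
foreach (EffectPass pass in basicEffect.CurrentTechnique.Passes)
{
    pass.Apply();
    GraphicsDevice.Draw(PrimitiveType.TriangleList, 3);
}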

Index buffer

Sometimes a mesh will repeat many vertices

Instead of repeating those vertices, we can give a list of indices into the vertex list.

short[] indices = new short[3] { 0, 1, 2 };

indexBuffer = Buffer.New<short>(GraphicsDevice, 3, BufferFlags.IndexBuffer);
indexBuffer.SetData<short>(indices);
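To see what an index buffer saves, the C++ sketch below does the opposite of indexing: it expands an indexed mesh back into the flat vertex stream a plain Draw() call would need. The quad data and the names `Vertex`, `expandIndexed`, `kQuadVertices`, and `kQuadIndices` are hypothetical; the 16-bit indices mirror the short values used above.

```cpp
#include <cassert>
#include <cstdint>
#include <stdexcept>
#include <vector>

struct Vertex {
    float x, y, z;
};

// Look up every index in the vertex list, producing the vertex stream that a
// non-indexed draw call would have to store explicitly.
std::vector<Vertex> expandIndexed(const std::vector<Vertex>& vertices,
                                  const std::vector<std::uint16_t>& indices) {
    std::vector<Vertex> out;
    out.reserve(indices.size());
    for (std::uint16_t index : indices) {
        if (index >= vertices.size()) throw std::out_of_range("bad index");
        out.push_back(vertices[index]);
    }
    return out;
}

// A unit quad: 4 shared corners drawn as two triangles (0,1,2) and (0,2,3).
const std::vector<Vertex> kQuadVertices = {
    {0.f, 0.f, 0.f}, {1.f, 0.f, 0.f}, {1.f, 1.f, 0.f}, {0.f, 1.f, 0.f}};
const std::vector<std::uint16_t> kQuadIndices = {0, 1, 2, 0, 2, 3};
```

For the quad, 4 stored vertices plus 6 small indices replace 6 full vertices; the savings grow as vertices are shared by more triangles, as in the icosahedron, where each vertex is shared by 5 triangles.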

Drawing with an index buffer

Once you have the index buffer, drawing with it is very similar to drawing without it:
- You simply have to set it on the device
- The false means that the indices are short values instead of int values
- Then call DrawIndexed() instead of Draw() on the device

basicEffect.World = world;
basicEffect.View = view;
basicEffect.Projection = projection;

GraphicsDevice.SetVertexBuffer<VertexPositionColor>(vertexBuffer);
GraphicsDevice.SetVertexInputLayout(inputLayout);
// false: the indices are 16-bit (short), not 32-bit (int)
GraphicsDevice.SetIndexBuffer(indexBuffer, false);

foreach (EffectPass pass in basicEffect.CurrentTechnique.Passes)
{
    pass.Apply();
    // Draw 3 indices as a triangle list
    GraphicsDevice.DrawIndexed(PrimitiveType.TriangleList, 3);
}

Drawing an icosahedron

An icosahedron has 20 faces, but it only has 12 vertices.

By using an index buffer, we can use only 12 vertices and 60 indices (3 indices for each of the 20 triangular faces).

Check out the XNA tutorial on RB Whitaker's site for the data: http://rbwhitaker.wikidot.com/index-and-vertex-buffers

Since that tutorial is written for XNA, some minor changes are needed to make the code work.
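As a check on the 12-vertex, 60-index count, the C++ sketch below builds an icosahedron with the common golden-ratio construction, where the vertices are the cyclic permutations of (±1, ±φ, 0). This is not the data from RB Whitaker's tutorial; the vertex order, face order, and the names `Vec3`, `icosahedronVertices`, `icosahedronIndices`, and `edgesAreUniform` are our own assumptions.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <initializer_list>

struct Vec3 {
    float x, y, z;
};

// Golden ratio: phi = (1 + sqrt(5)) / 2.
const float kPhi = (1.0f + std::sqrt(5.0f)) / 2.0f;

// 12 vertices: the cyclic permutations of (+-1, +-phi, 0).
std::array<Vec3, 12> icosahedronVertices() {
    const float t = kPhi;
    return {{
        {-1, t, 0}, {1, t, 0}, {-1, -t, 0}, {1, -t, 0},
        {0, -1, t}, {0, 1, t}, {0, -1, -t}, {0, 1, -t},
        {t, 0, -1}, {t, 0, 1}, {-t, 0, -1}, {-t, 0, 1},
    }};
}

// 20 triangles x 3 indices = 60 indices into the 12 vertices above.
std::array<std::uint16_t, 60> icosahedronIndices() {
    return {{
        0, 11, 5,  0, 5, 1,   0, 1, 7,   0, 7, 10,  0, 10, 11,  // fan around vertex 0
        1, 5, 9,   5, 11, 4,  11, 10, 2, 10, 7, 6,  7, 1, 8,    // faces bordering that fan
        3, 9, 4,   3, 4, 2,   3, 2, 6,   3, 6, 8,   3, 8, 9,    // fan around vertex 3
        4, 9, 5,   2, 4, 11,  6, 2, 10,  8, 6, 7,   9, 8, 1,    // faces bordering that fan
    }};
}

float distanceSquared(const Vec3& a, const Vec3& b) {
    const float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return dx * dx + dy * dy + dz * dz;
}

// Sanity check: every index is valid and every triangle edge has length 2
// (squared length 4), as expected for this construction.
bool edgesAreUniform() {
    const auto v = icosahedronVertices();
    const auto idx = icosahedronIndices();
    for (std::size_t f = 0; f < idx.size(); f += 3) {
        for (std::size_t k = f; k < f + 3; ++k) {
            if (idx[k] >= v.size()) return false;
        }
        const Vec3& a = v[idx[f]];
        const Vec3& b = v[idx[f + 1]];
        const Vec3& c = v[idx[f + 2]];
        for (float d2 : {distanceSquared(a, b), distanceSquared(b, c), distanceSquared(c, a)}) {
            if (std::fabs(d2 - 4.0f) > 1e-4f) return false;
        }
    }
    return true;
}
```

Each vertex appears in 5 of the triangles, so the indexed version stores each position once instead of 5 times.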

Lists and strips

It is very common to define primitives in terms of lists and strips

A list gives all the vertex indices for each of the shapes drawn:
- 2n indices to draw n lines
- 3n indices to draw n triangles

A strip gives only the information needed to draw a series of connected primitives:
- n + 1 indices to draw a connected series of n lines
- n + 2 indices to draw a connected series of n triangles
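The counting rules above, and the relationship between the two forms, can be sketched in C++. The helper names are hypothetical, and the odd-triangle rule in `stripToList` (swap the first two indices of every other triangle so all triangles keep the same winding) is one common convention; each graphics API documents its own exact ordering.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Index counts needed to draw n primitives.
std::size_t lineListCount(std::size_t n) { return 2 * n; }
std::size_t lineStripCount(std::size_t n) { return n == 0 ? 0 : n + 1; }
std::size_t triangleListCount(std::size_t n) { return 3 * n; }
std::size_t triangleStripCount(std::size_t n) { return n == 0 ? 0 : n + 2; }

// Expand a triangle strip into the equivalent triangle list.
// Triangle i uses strip entries i, i+1, i+2; odd triangles swap their first
// two indices so that every triangle has the same winding.
std::vector<int> stripToList(const std::vector<int>& strip) {
    std::vector<int> list;
    for (std::size_t i = 0; i + 2 < strip.size(); ++i) {
        if (i % 2 == 0) {
            list.insert(list.end(), {strip[i], strip[i + 1], strip[i + 2]});
        } else {
            list.insert(list.end(), {strip[i + 1], strip[i], strip[i + 2]});
        }
    }
    return list;
}
```

For example, the strip 0, 1, 2, 3 describes the same two triangles as the list 0, 1, 2, 2, 1, 3: 4 indices instead of 6.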

GPU

GPU stands for graphics processing unit

The term was coined by NVIDIA in 1999 to differentiate the GeForce 256 from chips that did not have hardware vertex processing

Dedicated 3D hardware was just becoming the norm, and many enthusiasts used an add-on 3D board, such as the Voodoo2, in addition to their normal 2D graphics card

More pipes!

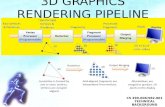

Modern GPUs are generally responsible for the geometry and rasterization stages of the overall rendering pipeline.

The figure shows the color-coded functional stages inside those stages:
- Red is fully programmable
- Purple is configurable
- Blue is not programmable at all

[Figure stages, in order: Vertex Shader, Geometry Shader, Clipping, Screen Mapping, Triangle Setup, Triangle Traversal, Pixel Shader, Merger]

Programmable Shaders

You can do all kinds of interesting things with programmable shading, but the technology is still evolving

Modern shader models such as Shader Model 4.0 and 5.0 (DirectX 10 and 11) use a common-shader core.

Strange as it may seem, this means that vertex, pixel, and geometry shading use the same language.

Shading languages

They are generally C-like, and there aren't that many:

HLSL: High Level Shading Language, developed by Microsoft and used for Shader Model 1.0 through 5.0

Cg: C for Graphics, developed by NVIDIA; essentially the same as HLSL

GLSL: OpenGL Shading Language, developed for OpenGL and shares some similarities with the other two

These languages were developed so that you don't have to write assembly to program your graphics cards

There are even drag-and-drop applications, like NVIDIA's Mental Mill

Virtual machines

To maximize compatibility across many different graphics cards, shader languages are thought of as targeting a virtual machine with certain capabilities

This VM is assumed to have 4-way SIMD (single-instruction multiple-data) parallelism

Vectors of 4 things are very common in graphics:
- Positions: xyzw
- Colors: rgba

The vectors are commonly of float values.

Swizzling and masking (duplicating or ignoring vector components) are supported, kind of like bitwise operations.
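A CPU stand-in can make swizzling and masking concrete. The C++ sketch below uses a hypothetical `Float4` struct and letter patterns such as "zyx" to mimic HLSL's `v.zyx` and `dst.xz = src.xz`. In real HLSL these are compile-time syntax rather than string arguments, a shorter swizzle yields a shorter vector rather than a zero-padded float4, and the xyzw and rgba sets cannot be mixed in one swizzle (this sketch does not check that).

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>

// Hypothetical CPU stand-in for an HLSL float4.
struct Float4 {
    float v[4];
};

// Map a component letter to its slot: x/r -> 0, y/g -> 1, z/b -> 2, w/a -> 3.
int componentIndex(char c) {
    switch (c) {
        case 'x': case 'r': return 0;
        case 'y': case 'g': return 1;
        case 'z': case 'b': return 2;
        case 'w': case 'a': return 3;
        default: throw std::invalid_argument("not a component letter");
    }
}

// Swizzle: read components in any order, repeats allowed (like v.wzyx or
// v.xxxx). Slots beyond the pattern length are left at zero.
Float4 swizzle(const Float4& src, const std::string& pattern) {
    if (pattern.empty() || pattern.size() > 4) {
        throw std::invalid_argument("pattern must have 1 to 4 letters");
    }
    Float4 out{{0, 0, 0, 0}};
    for (std::size_t i = 0; i < pattern.size(); ++i) {
        out.v[i] = src.v[componentIndex(pattern[i])];
    }
    return out;
}

// Write mask: return a copy of dst where only the named components are
// replaced by the matching components of src (like dst.xz = src.xz).
Float4 maskedWrite(Float4 dst, const Float4& src, const std::string& mask) {
    for (char c : mask) {
        const int i = componentIndex(c);
        dst.v[i] = src.v[i];
    }
    return dst;
}
```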

Programming model

A programmable shader stage has two types of inputs:
- Uniform inputs, which stay constant during a draw call
  - Held in constant registers or constant buffers
- Varying inputs, which are different for each vertex or pixel
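The uniform/varying split can be mimicked on the CPU: a uniforms struct is set once per draw call, and the same shader function then runs once per vertex with that vertex's own input. In the C++ sketch below, `Uniforms`, `VertexIn`, `VertexOut`, `vertexShader`, and `runDraw` are hypothetical names, and the loop stands in for work the GPU does in parallel.

```cpp
#include <cassert>
#include <vector>

// Uniform inputs: the same for the whole draw call.
struct Uniforms {
    float scale;
    float offsetX;
};

// Varying input: different for every vertex.
struct VertexIn {
    float x, y;
};

struct VertexOut {
    float x, y;
};

// The "shader": a pure function of the uniforms and one vertex.
VertexOut vertexShader(const Uniforms& u, const VertexIn& in) {
    return {in.x * u.scale + u.offsetX, in.y * u.scale};
}

// One draw call: the uniforms stay fixed while the shader runs per vertex.
std::vector<VertexOut> runDraw(const Uniforms& u, const std::vector<VertexIn>& vertices) {
    std::vector<VertexOut> out;
    out.reserve(vertices.size());
    for (const VertexIn& v : vertices) {
        out.push_back(vertexShader(u, v));
    }
    return out;
}
```

Because the shader cannot change the uniforms, every invocation can run independently, which is what lets the hardware process many vertices at once.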

Language style

Fast operations: scalar and vector multiplications, additions, and combinations

Well-supported (and still relatively fast): reciprocal, square root, trig functions, exponentiation and log

Standard operators apply: + and *

Other operations come through intrinsic functions that do not require headers or libraries: atan(), dot(), log()

Flow control is done through "normal" if, switch, while, and for (but long loops are unusual)

Where the idea comes from

In 1984, Cook came up with the idea of shade trees, a series of operations used to color a pixel.

This example shows the shader-language equivalent of a shade tree.
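A shade tree is essentially an expression tree: leaves are inputs or constants, inner nodes are operations, and evaluating the root produces the shade. The C++ sketch below is a hypothetical scalar tree for `ambient + diffuse * max(0, nDotL)`, not Cook's original example; `Op`, `Node`, `evaluate`, and `lambertTree` are illustrative names. A shader writes the same tree as a single expression.

```cpp
#include <algorithm>
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

enum class Op { Constant, Input, Add, Multiply, Max };

// One node of a (scalar) shade tree.
struct Node {
    Op op;
    float value;                     // used by Op::Constant
    std::string name;                // used by Op::Input
    std::shared_ptr<Node> lhs, rhs;  // used by the binary operations
};
using NodePtr = std::shared_ptr<Node>;

NodePtr constant(float v) {
    return std::make_shared<Node>(Node{Op::Constant, v, "", nullptr, nullptr});
}
NodePtr input(const std::string& n) {
    return std::make_shared<Node>(Node{Op::Input, 0.0f, n, nullptr, nullptr});
}
NodePtr binary(Op op, NodePtr a, NodePtr b) {
    return std::make_shared<Node>(Node{op, 0.0f, "", std::move(a), std::move(b)});
}

// Evaluate the tree recursively for one pixel's named inputs.
float evaluate(const NodePtr& node, const std::map<std::string, float>& inputs) {
    switch (node->op) {
        case Op::Constant: return node->value;
        case Op::Input: return inputs.at(node->name);
        case Op::Add: return evaluate(node->lhs, inputs) + evaluate(node->rhs, inputs);
        case Op::Multiply: return evaluate(node->lhs, inputs) * evaluate(node->rhs, inputs);
        case Op::Max: return std::max(evaluate(node->lhs, inputs), evaluate(node->rhs, inputs));
    }
    return 0.0f;  // not reached for valid trees
}

// Tree for: ambient + diffuse * max(0, nDotL)
NodePtr lambertTree() {
    return binary(Op::Add, input("ambient"),
                  binary(Op::Multiply, input("diffuse"),
                         binary(Op::Max, constant(0.0f), input("nDotL"))));
}
```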

Shaders

There are three shaders you can program:
- Vertex shader: useful, but boring; mostly about doing transforms and getting normals
- Geometry shader: optional; allows you to create vertices from nowhere in hardware
- Pixel shader: where all the color data gets decided on, and also where we'll focus

Example of real shader code

The following, taken from RB Whitaker's Wiki, shows a shader for ambient lighting.

We start with declarations:

float4x4 World;
float4x4 View;
float4x4 Projection;

float4 AmbientColor = float4(1, 1, 1, 1);
float AmbientIntensity = 0.1;

struct VertexShaderInput
{
    float4 Position : POSITION0;
};

struct VertexShaderOutput
{
    float4 Position : POSITION0;
};

Example of real shader code continued

VertexShaderOutput VertexShaderFunction(VertexShaderInput input)
{
    VertexShaderOutput output;

    // Object space -> world space -> view space -> clip space
    float4 worldPosition = mul(input.Position, World);
    float4 viewPosition = mul(worldPosition, View);
    output.Position = mul(viewPosition, Projection);

    return output;
}

float4 PixelShaderFunction(VertexShaderOutput input) : COLOR0
{
    // Every pixel gets the same dimmed ambient color
    return AmbientColor * AmbientIntensity;
}

technique Ambient
{
    pass Pass1
    {
        VertexShader = compile vs_1_1 VertexShaderFunction();
        PixelShader = compile ps_1_1 PixelShaderFunction();
    }
}
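To make the ambient shader's math concrete, here is a CPU-side C++ sketch of what VertexShaderFunction and PixelShaderFunction compute. It assumes the XNA/HLSL row-vector convention, where mul(position, World) treats the position as a row vector and translations live in the bottom row of the matrix; positions use w = 1 so translations apply. `mul`, `translation`, `transformVertex`, and `ambientPixel` are hypothetical helpers that mirror the HLSL, not an existing API.

```cpp
#include <array>
#include <cassert>

using Float4 = std::array<float, 4>;
using Float4x4 = std::array<Float4, 4>;  // m[row][col]

// Row vector times matrix, like HLSL mul(vector, matrix):
// result[c] = sum over r of v[r] * m[r][c].
Float4 mul(const Float4& v, const Float4x4& m) {
    Float4 out{0, 0, 0, 0};
    for (int c = 0; c < 4; ++c)
        for (int r = 0; r < 4; ++r)
            out[c] += v[r] * m[r][c];
    return out;
}

// Row-vector translation matrix: the offset sits in the bottom row.
Float4x4 translation(float x, float y, float z) {
    return {{{1, 0, 0, 0}, {0, 1, 0, 0}, {0, 0, 1, 0}, {x, y, z, 1}}};
}

// CPU version of VertexShaderFunction: world, then view, then projection.
Float4 transformVertex(const Float4& position, const Float4x4& world,
                       const Float4x4& view, const Float4x4& projection) {
    const Float4 worldPosition = mul(position, world);
    const Float4 viewPosition = mul(worldPosition, view);
    return mul(viewPosition, projection);
}

// CPU version of PixelShaderFunction: the same color for every pixel.
Float4 ambientPixel(const Float4& ambientColor, float ambientIntensity) {
    Float4 out{};
    for (int i = 0; i < 4; ++i) out[i] = ambientColor[i] * ambientIntensity;
    return out;
}
```

With the default AmbientColor of (1, 1, 1, 1) and AmbientIntensity of 0.1, every pixel comes out as (0.1, 0.1, 0.1, 0.1): a flat, dim silhouette.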

More advanced shader code

The following, taken from RB Whitaker's Wiki, shows a shader for diffuse lighting:

float4x4 World;
float4x4 View;
float4x4 Projection;

float4 AmbientColor = float4(1, 1, 1, 1);
float AmbientIntensity = 0.1;

float4x4 WorldInverseTranspose;

float3 DiffuseLightDirection = float3(1, 0, 0);
float4 DiffuseColor = float4(1, 1, 1, 1);
float DiffuseIntensity = 1.0;

struct VertexShaderInput
{
    float4 Position : POSITION0;
    float4 Normal : NORMAL0;
};

struct VertexShaderOutput
{
    float4 Position : POSITION0;
    float4 Color : COLOR0;
};

More advanced shader code continued

VertexShaderOutput VertexShaderFunction(VertexShaderInput input)
{
    VertexShaderOutput output;

    float4 worldPosition = mul(input.Position, World);
    float4 viewPosition = mul(worldPosition, View);
    output.Position = mul(viewPosition, Projection);

    // Move the normal into world space, keep its xyz part, and re-normalize it
    // so that the dot product below is the cosine of the angle to the light
    float3 normal = normalize(mul(input.Normal, WorldInverseTranspose).xyz);
    float lightIntensity = dot(normal, DiffuseLightDirection);
    output.Color = saturate(DiffuseColor * DiffuseIntensity * lightIntensity);

    return output;
}

float4 PixelShaderFunction(VertexShaderOutput input) : COLOR0
{
    // Add the ambient term so that unlit sides are not pure black
    return saturate(input.Color + AmbientColor * AmbientIntensity);
}

technique Diffuse
{
    pass Pass1
    {
        VertexShader = compile vs_1_1 VertexShaderFunction();
        PixelShader = compile ps_1_1 PixelShaderFunction();
    }
}
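The diffuse shader's lighting is Lambertian shading: brightness is the cosine of the angle between the surface normal and the light direction, clamped to [0, 1] by saturate. The C++ sketch below reproduces the per-vertex and per-pixel steps on the CPU, assuming the normal is already in world space and the light direction is unit length, like (1, 0, 0). `diffuseColor`, `finalColor`, and the small vector helpers are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using Float3 = std::array<float, 3>;
using Float4 = std::array<float, 4>;

float dot(const Float3& a, const Float3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

Float3 normalize(const Float3& v) {
    const float len = std::sqrt(dot(v, v));
    return {v[0] / len, v[1] / len, v[2] / len};
}

// Like HLSL saturate: clamp each component to [0, 1].
Float4 saturate(const Float4& c) {
    Float4 out{};
    for (int i = 0; i < 4; ++i) out[i] = std::min(std::max(c[i], 0.0f), 1.0f);
    return out;
}

// Vertex-shader step: scale the diffuse color (alpha included, as in the
// shader) by the cosine between the normal and the light direction.
Float4 diffuseColor(const Float3& normal, const Float3& lightDirection,
                    const Float4& diffuse, float diffuseIntensity) {
    const float lightIntensity = dot(normalize(normal), lightDirection);
    Float4 c{};
    for (int i = 0; i < 4; ++i) c[i] = diffuse[i] * diffuseIntensity * lightIntensity;
    return saturate(c);
}

// Pixel-shader step: add the ambient term and clamp again.
Float4 finalColor(const Float4& diffuseTerm, const Float4& ambient, float ambientIntensity) {
    Float4 c{};
    for (int i = 0; i < 4; ++i) c[i] = diffuseTerm[i] + ambient[i] * ambientIntensity;
    return saturate(c);
}
```

A surface facing away from the light gets no diffuse contribution at all, so only the ambient term (0.1 with the default values) remains.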
