Vision-based Control of a Smart Wheelchair for the Automated Transport and Retrieval System (ATRS)

Humberto Sermeno-Villalta and John Spletzer

Department of Computer Science and Engineering, Lehigh University, Bethlehem, PA 18015

Abstract: In this paper, we present a novel application of vision-based control for autonomously docking a wheelchair onto an automotive vehicle lift platform. This is a principal component of the Automated Transport and Retrieval System (ATRS) - an alternate mobility solution for drivers with lower body disabilities. The ATRS employs robotics, automation, and machine vision technologies, and can be integrated into a standard minivan or sport utility vehicle (SUV). At the core of the ATRS is a “smart” wheelchair system that autonomously navigates between the driver’s position and a powered lift at the rear of the vehicle - eliminating the need for an attendant.

From an automation perspective, autonomously docking the wheelchair onto the lift platform presented perhaps the most significant technical challenge for the proof-of-concept ATRS. Our solution couples vision-based localization for feedback with a hybrid control approach employing input/output feedback linearization techniques. We present significant simulation and experimental results which show that reliable docking can be achieved under a range of illumination conditions.

Index Terms: ATRS, smart wheelchair, vision-based control

I. INTRODUCTION AND MOTIVATION

Automobile operators with lower body disabilities have limited options with regard to unattended personal mobility. The traditional solution is a custom van conversion that places the operator in-wheelchair behind the steering wheel of the vehicle. Entry/exit to the vehicle is also accomplished in-wheelchair via a ramp or powered lift device. Such a design has significant safety shortcomings. Wheelchairs do not possess the levels of crash protection afforded by traditional motor vehicle seat systems, and the provisions used for securing them are often inadequate. However, regulating agencies often grant exemptions to vehicle safety requirements to facilitate mobility for disabled operators [1]. It should not be surprising, then, that research by the United States National Highway Traffic Safety Administration (NHTSA) showed that 35% of all wheelchair/automobile related deaths were the result of inadequate chair securement. Another 19% were associated with vehicle lift malfunctions [2].

The Automated Transport and Retrieval System (ATRS) is offered as an alternate mobility solution for operators with lower body disabilities. It employs robotics, automation, and machine vision technologies, and can be integrated into a standard minivan or sport utility vehicle (SUV). At the core of the ATRS is a “smart” wheelchair system that autonomously navigates between the driver’s position and a powered lift at the rear of the vehicle. A primary benefit of this paradigm is that the operator and chair are separated during vehicle operations as well as entry/exit. This eliminates the potential for injuries or deaths caused by both improper securement (as the operator is seated in a crash tested seat system) and lift malfunctions.

A second motivating factor for the ATRS is cost. In many applications of robotics and computer vision systems to assistive technologies, augmented capability is achieved through significant increases in cost. In contrast, it is expected that the integration of an ATRS system into an existing vehicle will ultimately cost about half that of a van conversion while providing significantly improved levels of safety. Furthermore, the modifications required for ATRS integration are non-permanent, and unlike van conversions will not affect vehicle resale value.

From an automation perspective, autonomously docking the wheelchair onto the lift platform presented the most significant technical challenge. This was driven primarily by geometry constraints, which limited clearance between the chair wheels and the lift platform rails to ±4 cm. In response to this, we developed a vision-based solution for feedback control that can operate reliably within these docking tolerances.

II. RELATED WORK

Extensive work has been done in order to increase the safety levels of power wheelchairs while minimizing the level of human intervention. In these systems, the human operator is responsible for high-level decisions while the low-level control of the wheelchair is autonomous.

The Tin Man system [3], developed by the KISS Institute, automates some of the navigation and steering operations for indoor environments. The system is able to navigate through doorways and traverse hallways while providing basic obstacle avoidance in all operating modes. The Wheelesley project [4], based on a Tin Man wheelchair, is designed for both indoor and outdoor environments. The chair is controlled through a graphical user interface that has successfully been integrated with an eye tracking system and with single switch scanning as input methods.

The TAO Project [5] uses a stereo vision system, infraredsensors and bump sensors to provide basic collision avoid-ance, navigation in an indoor corridor, and passage through

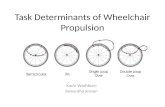

Fig. 1. ATRS operational procedures. After transferring tothe driver’s seat (left), the wheelchair is placed in “docking” mode. It then autonomouslynavigates to the rear of the vehicle (center-left) and docksonto the lift platform (center-right). The operator can then stow the lift into the vehicle fromthe UI (right).

narrow doorways. The system also provides landmark-based navigation that requires a topological map of theenvironment. The NavChair assistive wheelchair naviga-tion system ([6], [7]) uses 12 ultrasonic sensors mountedaround the front and sides of the wheelchair in order toprovide three basic operating modes. In obstacle avoidancemode, the wheelchair responds to joystick commands whilemaintaining a safe distance from obstacles. In door passagemode, this distance is decreased to navigate through nar-row openings. In wall following mode, the system avoidsobstacles while following a wall specified by the user. Thesystem uses a topological map and current sonar data toautomatically select its operating mode. The SmartChair([8], [9], [10]) uses a virtual interface displayed by anon-board projection system to implement a shared con-trol framework that enables the user to interact with thewheelchair while it is performing an autonomous task. TheVAHM system ([11], [12]) offers semi-autonomous navi-gation with obstacle avoidance and autonomous navigationbased on an internal map. The Hephaestus system ([13],[14]) is a modular system that is installed between thewheelchair’s joystick and motor controller. Its navigationassistance behaviors are based on those developed for theNavChair system.

A common theme in the above research is that robotics technology has been applied to assist or augment the skills of the chair operator. The development of more “autonomous” chairs is still an active area of research. While our work also facilitates personal mobility for the disabled, our application area is entirely different. The ATRS wheelchair is in fact capable of autonomous vehicle navigation in outdoor environments. This can be realized because the operator is never seated in the chair during autonomous operations, and the chair always operates in the vicinity of the operator’s vehicle. The former constraint mitigates operator safety issues, while the latter provides significant, invariant landmarks/features in an otherwise unstructured environment. What also makes the ATRS wheelchair attractive is that it can become commercially viable - providing a safer alternative to van conversions at roughly half the cost.

III. SYSTEM OVERVIEW

The ATRS can be decomposed into five primary components: a smart wheelchair system, a vision system, a powered lift, a traversing power seat, and a user interface (UI) computer. From a robotics perspective, the smart wheelchair and vision system are the heart of the ATRS. Combined, these two subsystems allow the operator to be separated from the chair and eliminate the need for an attendant.

To outline the ATRS operational procedures, we refer to Figure 1. When the operator returns to his/her automobile, a keyless entry is used to both unlock the vehicle and to deploy the traversing driver’s seat. The operator then positions the wheelchair adjacent to the driver’s seat, and transfers to the vehicle (left). Using the UI, the operator next places the wheelchair into “docking” mode, and it navigates autonomously to the rear of the vehicle using a SICK LMS-200 laser rangefinder and gyro-corrected odometry (center-left). At the rear of the vehicle, control of the wheelchair is handed off to the vision system. This transmits real-time control inputs to the chair over a dedicated RF link to enable reliable docking (locking in place) onto the lift platform (center-right). With the chair docked, the operator actuates the lift via the UI and stows the platform and chair into the vehicle cargo area (right). The process is repeated in reverse when disembarking from the automobile. When not operating autonomously, the ATRS wheelchair is placed in “manual mode,” and operates no differently than any other powered wheelchair.

IV. TECHNICAL APPROACH

The objective of this research was very specific: Develop a means for reliable, autonomous docking (and undocking) of the ATRS wheelchair onto (and off of) the vehicle’s lift platform.

Any proposed solution was also required to live within system level constraints: alterations to the exterior of the vehicle were prohibited, and (perhaps most significant from a computer vision perspective) sensor systems mounted within the van had to live within the UI’s available CPU budget (1.1 GHz Pentium M).

The entire navigation task (from the lift platform to the driver’s position and back) was attempted using only the onboard LMS-200 and odometry. Unfortunately, high noise levels from ambient sunlight, etc., resulted in localization errors that yielded inconsistent docking performance. As a consequence, the navigation task was decomposed into two phases. The first relied upon the LMS-200 and odometry to navigate the ATRS wheelchair from the driver’s seat position to a designated handoff site at the rear of the vehicle. For the second phase, we integrated a camera into the vehicle’s liftgate (Figure 2). Computer vision techniques were then used to provide state feedback to the chair over a dedicated RF link.

Fig. 2. ATRS concept illustrating the location of the driver’s seat, lift platform, and vision system field-of-view (FOV). [Figure labels: Camera FOV, User Interface, Handoff Site, Lift Platform; marked dimension: 1.80 m.]

Our motivation for employing a computer vision system was localization accuracy. Preliminary experiments with the LMS-200 yielded position errors on the order of several centimeters, which were unacceptable for the docking task. We were able to achieve significantly better localization performance using a camera. However, the vision-based approach introduced its own challenges. The combination of the large footprint of potential wheelchair positions with the limited camera mounting height (1.83 meters) dictated a wide field-of-view lens (for coverage) and a high resolution CCD (for localization accuracy). These in turn introduced significant lens distortion and real-time processing challenges. Finally, the vision system would have to operate outdoors under a wide range of illumination levels. Addressing these challenges - and the subsequent control problem - is the focus of the remainder of this paper.

V. VISION-BASED TRACKING

Several steps were taken to facilitate wheelchair detection and tracking. Perhaps the most significant was the placement of binary fiducials on each of the chair’s armrests. Furthermore, the following assumptions were made to simplify the tracking task:

1) Wheelchair motion was constrained to the ground plane, which was locally flat.

2) The position of the lift platform with respect to the van was fixed.

3) The position and orientation of the camera with respect to the lift platform was fixed.

Each can be justified. Although the ATRS wheelchair will not be restricted to level terrain, the paved surfaces where it operates are for the most part locally flat. The lift is anchored to the van, and its traversal and extension can be considered fixed. Finally, the camera is affixed to the van, which also constrains its pose relative to the lift platform.

With these assumptions, we reduced the problem of tracking the wheelchair to a two-dimensional pattern matching task. This was accomplished by composing geometric and distortion warps to a virtual camera frame directly overhead of the expected fiducial positions. We now outline the details of our approach.

A. Virtual Camera Image Warps

Our immediate problem is to infer the location of chair fiducials in the camera image. This is non-trivial due to significant perspective distortion from the rotation/translation of the camera frame. It is further aggravated by large radial and tangential distortion components from the wide field-of-view lens. However, recall from our assumptions that chair motion (and as a consequence fiducial position) is constrained to a single X-Y plane. Placing an orthographic camera overhead with its optical axis orthogonal to this plane would eliminate the perspective distortion. A subsequent calibration for camera intrinsics would then eliminate lens distortions. This would preserve fiducial scale in the image, and reduce the problem to a plane search. Unfortunately, the camera pose is of course fixed - dictated by field-of-view requirements. As a result, we instead employ a virtual camera to serve this same purpose. This relies upon traditional image warping techniques, and is widely used in applications such as computer graphics and image morphing [15].

Fig. 3. Image coordinates from a virtual camera $C_1$ are mapped back to the actual camera $C_0$. Corrections for both perspective and lens distortions can then be composed into a single warp from image $I_0$ to $I_1$.

Let us assume that the position of a fiducial is estimated to be at position $P_f = [X_f, Y_f, Z_f]^T$ in our world frame. The virtual camera is then moved to position $C_1 = [X_f, Y_f, Z]^T$, where $Z$ corresponds to a reference height from which the fiducial template was generated. We assume that our virtual camera is an ideal perspective camera with zero distortion and image center coincident with the optical axis. Other intrinsic parameters (e.g., focal length) are chosen the same as the actual camera. Referring to Figure 3, let $p_1 = [x_1, y_1]^T \in I_1$ denote the coordinates for a pixel in the virtual camera image $I_1$. Our objective is to map $p_1$ to a point $p_0$ in the original camera image. To do this, we first project $p_1$ to a world point $P$ through our camera model

$$P = \begin{bmatrix} -\dfrac{(x_1 - c_{x1})(Z_f - Z)}{f_x} + X_f \\[6pt] \dfrac{(y_1 - c_{y1})(Z_f - Z)}{f_y} + Y_f \\[6pt] Z_f \end{bmatrix} \tag{1}$$

where $(c_{x1}, c_{y1})$ denote the image center coordinates of $I_1$, and $f_x, f_y$ correspond to the equivalent focal length in pixels. Note that the Z-coordinate in the world will always be set to $Z_f$. This is dictated by the ground plane constraint for the wheelchair. While this assumption will incorrectly map points that are not at the same height as the fiducials, it will map image points corresponding to the fiducials correctly.

Next, we project $P$ to camera coordinates in the true camera frame. Assuming that the camera has been well calibrated (for both intrinsic and extrinsic parameters), we obtain

$$P_C = R_0^{-1}(P - C_0) \tag{2}$$

where the rotation matrix $R_0$ denotes the orientation and $C_0 = [X_0, Y_0, Z_0]$ the position of the camera in the world frame. Prior to projecting $P_C$ onto the image plane, we must also account for the significant image distortion admitted by the wide FOV lens. We first obtain the normalized image projection

$$p_n = [x_n, y_n]^T = [-X_C/Z_C,\ Y_C/Z_C]^T \tag{3}$$

from which we obtain the distorted projection as

$$p_d = (1 + k_1 r^2 + k_2 r^4)\,p_n + dP \tag{4}$$

where $r = \sqrt{x_n^2 + y_n^2}$, $k_1, k_2$ are radial distortion coefficients, and

$$dP = \begin{bmatrix} 2k_3 x_n y_n + k_4(r^2 + 2x_n^2) \\ k_3(r^2 + 2y_n^2) + 2k_4 x_n y_n \end{bmatrix} \tag{5}$$

is the tangential distortion component, with $k_3, k_4$ the tangential distortion coefficients obtained from the intrinsic calibration phase. This distortion model is consistent with that outlined in [16], [17]. Finally, we can obtain the corresponding pixel coordinates in the true camera frame as

$$p_0 = \begin{bmatrix} f_x x_d + c_{x0} \\ f_y y_d + c_{y0} \end{bmatrix} \tag{6}$$
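The chain of Equations 1 through 6 can be sketched as a single function that maps one virtual-camera pixel to true-camera pixel coordinates. This is only an illustrative sketch, not the authors' implementation: the function name, argument layout, and the use of the transpose for $R_0^{-1}$ (valid for an orthonormal rotation matrix) are assumptions, and $(x_d, y_d)$ are taken to be the components of $p_d$.

```python
def virtual_to_true_pixel(p1, fid, Z, c1, f, R0, C0, k, c0):
    """Map virtual-camera pixel p1 to true-camera pixel coordinates (Eqs. 1-6).

    p1: (x1, y1) virtual pixel; fid: (Xf, Yf, Zf) fiducial estimate;
    Z: virtual camera reference height; c1, c0: image centers of I1 and I0;
    f: (fx, fy); R0: 3x3 rotation (list of rows); C0: camera position;
    k: (k1, k2, k3, k4) distortion coefficients. All names are hypothetical.
    """
    x1, y1 = p1
    Xf, Yf, Zf = fid
    fx, fy = f
    # Eq. 1: back-project the virtual pixel onto the plane Z = Zf.
    P = (-(x1 - c1[0]) * (Zf - Z) / fx + Xf,
         (y1 - c1[1]) * (Zf - Z) / fy + Yf,
         Zf)
    # Eq. 2: world frame -> true camera frame (R0^-1 = R0^T for a rotation).
    d = [P[i] - C0[i] for i in range(3)]
    Pc = [sum(R0[j][i] * d[j] for j in range(3)) for i in range(3)]
    # Eq. 3: normalized image projection.
    xn, yn = -Pc[0] / Pc[2], Pc[1] / Pc[2]
    # Eqs. 4-5: radial plus tangential distortion.
    k1, k2, k3, k4 = k
    r2 = xn * xn + yn * yn
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = radial * xn + 2.0 * k3 * xn * yn + k4 * (r2 + 2.0 * xn * xn)
    yd = radial * yn + k3 * (r2 + 2.0 * yn * yn) + 2.0 * k4 * xn * yn
    # Eq. 6: pixel coordinates in the true camera image.
    return (fx * xd + c0[0], fy * yd + c0[1])
```

As a sanity check on the sign conventions, placing the true camera at the virtual camera pose with zero distortion should return the input pixel unchanged.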

As $p_0$ will not lie on exact pixel boundaries, we employed bilinear interpolation to determine the corresponding intensity value for $p_1$ in the virtual image. The above process is then repeated for each pixel $p \in I_1$.
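A minimal sketch of the bilinear lookup is given here, assuming the image is stored as a list of rows with at least 2x2 pixels and that out-of-range coordinates are clamped to the border (the paper does not describe its border handling). The function name is hypothetical.

```python
import math

def bilinear_sample(img, x, y):
    """Return the interpolated intensity of img at real-valued (x, y).

    img is a list of rows; x indexes columns and y indexes rows.
    """
    h, w = len(img), len(img[0])
    # Clamp so the 2x2 neighbourhood always lies inside the image.
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0 = min(int(math.floor(x)), w - 2)
    y0 = min(int(math.floor(y)), h - 2)
    ax, ay = x - x0, y - y0
    # Interpolate along x on the two neighbouring rows, then along y.
    top = (1.0 - ax) * img[y0][x0] + ax * img[y0][x0 + 1]
    bottom = (1.0 - ax) * img[y0 + 1][x0] + ax * img[y0 + 1][x0 + 1]
    return (1.0 - ay) * top + ay * bottom
```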

The advantage of this approach is that fiducial tracking is now constrained to the plane. This serves to preserve scale and eliminates both perspective and lens distortions in a single warp - greatly simplifying the tracking problem.

B. Fiducial Segmentation

1) Image Processing: To localize the wheelchair in the virtual camera image, we utilize normalized intensity distribution (NID) as a similarity metric [18]. An advantage of this formulation is that it explicitly models both changes in scene brightness and contrast from the reference template image. Additionally, in a comparison to alternate approaches in model based tracking, it was less sensitive to small motion errors [19].

Fig. 4. Evaluation of alternate grayscale values for a pair of binary fiducials captured under 5.4 kLux (left) and 93 kLux (right). Image “blooming” in the white regions of the templates is clearly apparent.

Given a virtual image $I$, an $m \times n$ template $T$ of the fiducial, and a block region $B \in I$ of equivalent size corresponding to a hypothetical fiducial position, the similarity of $T$ and $B$ can be defined as

$$\epsilon(T, I, B) = \sum_{u=1}^{m} \sum_{v=1}^{n} \left[ \frac{T(u, v) - \mu_T}{\sigma_T} - \frac{B(u, v) - \mu_B}{\sigma_B} \right]^2 \tag{7}$$

where $T(u, v)$ denotes the grayscale value at location $(u, v)$, $\mu$ is the mean grayscale value, and $\sigma$ the standard deviation. The image region

$$B^* = \arg\min(\epsilon) \quad \forall\ B \in I \tag{8}$$

would then correspond to the fiducial position in the given image.
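Equations 7 and 8 can be sketched directly as a brute-force search. This is an unoptimized illustration under stated assumptions: $\sigma$ is taken to be the population standard deviation, flat blocks (zero variance) are scored as infinitely dissimilar, and the function names are hypothetical.

```python
import math

def _normalize(vals):
    """Subtract the mean and divide by the population standard deviation."""
    mu = sum(vals) / len(vals)
    sigma = math.sqrt(sum((v - mu) ** 2 for v in vals) / len(vals))
    if sigma == 0.0:
        return None  # flat patch: normalization is undefined
    return [(v - mu) / sigma for v in vals]

def nid(template, block):
    """Eq. 7: sum of squared differences between normalized patches."""
    t = _normalize([v for row in template for v in row])
    b = _normalize([v for row in block for v in row])
    if t is None or b is None:
        return float("inf")
    return sum((a - c) ** 2 for a, c in zip(t, b))

def best_block(template, image):
    """Eq. 8: exhaustive search; returns (score, row, col) of the best block."""
    m, n = len(template), len(template[0])
    best = None
    for r in range(len(image) - m + 1):
        for c in range(len(image[0]) - n + 1):
            block = [row[c:c + n] for row in image[r:r + m]]
            score = nid(template, block)
            if best is None or score < best[0]:
                best = (score, r, c)
    return best
```

Because both patches are normalized, a copy of the template with different brightness and contrast still scores zero, which is the property the metric is chosen for.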

One downside to correlation-based pattern recognition approaches is that they are computationally expensive. To implement this in real-time, we first expand Equation 7 and further simplify it for comparisons using a single template by eliminating the invariant factors. The resulting equation,

$$\epsilon_T(I, B) = \frac{1}{\sigma_B} \left[ K \cdot \mu_B - \sum_{u=1}^{m} \sum_{v=1}^{n} T(u, v) \cdot B(u, v) \right] \tag{9}$$

where $K = m \cdot n \cdot \mu_T$, requires 1 division, $m \cdot n$ additions, and $m \cdot n + 1$ multiplications. We use fixed-point approximation with four bits for the fractional part to compute $\sigma$ for all $B \in I$, traversing $I$ from top to bottom, left to right. All calculations take advantage of the single-instruction, multiple-data (SIMD) instruction set available on the Pentium M family of processors.
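The expansion behind Equation 9 can be sketched as follows, assuming $\mu$ and $\sigma$ are the population mean and standard deviation over the $m \cdot n$ pixels of a patch (the text does not state which normalization was used). Writing $\hat{T} = (T - \mu_T)/\sigma_T$ and $\hat{B} = (B - \mu_B)/\sigma_B$, each normalized patch satisfies $\sum \hat{T}^2 = \sum \hat{B}^2 = mn$, so

$$\begin{aligned}
\epsilon(T, I, B) &= \sum_{u,v} \hat{T}^2 + \sum_{u,v} \hat{B}^2 - 2 \sum_{u,v} \hat{T}\hat{B} \\
&= 2mn - \frac{2}{\sigma_T \sigma_B} \left[ \sum_{u,v} T(u, v)\,B(u, v) - mn\,\mu_T \mu_B \right] \\
&= 2mn + \frac{2}{\sigma_T}\,\epsilon_T(I, B).
\end{aligned}$$

Since $mn$ and $\sigma_T > 0$ are fixed for a single template, the block that minimizes $\epsilon_T$ is also the block that minimizes $\epsilon$.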

2) Fiducial/Template Design: The current fiducial design (Figure 4) was chosen because of its invariance to rotation from an orthographic perspective, and since its binary representation exhibits a strong contrast which facilitates tracking under varying illumination conditions. However, the latter attribute can lead to significant changes to fiducial appearance in the image due to strong changes in illumination.

As shown in Figure 4, the white regions of the fiducial appear to increase in size in the camera image under high illumination levels. An attempt was made to mitigate the impact of this “blooming” by regulating the CCD gain and shutter speed. Unfortunately, experiments showed that the lowest gain and shutter speed settings were insufficient to prevent saturation of the camera image under high illumination levels. As an alternative solution, the grayscale value for white on the printed fiducials was selected such that it minimized this effect across varying lighting conditions. This is possible because the NID value for a binary template is invariant to the grayscale values $g_b$ and $g_w$ that represent black and white, respectively, as long as $g_w > g_b$.
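The invariance claim can be checked numerically: after mean and standard deviation normalization, a binary pattern rendered with any $g_w > g_b$ yields the same normalized values, so its NID against any block is unchanged. The sketch below assumes population statistics; the 3x3 pattern and the gray levels are hypothetical values chosen only for illustration.

```python
import math

def normalize(vals):
    """Subtract the mean and divide by the population standard deviation."""
    mu = sum(vals) / len(vals)
    sigma = math.sqrt(sum((v - mu) ** 2 for v in vals) / len(vals))
    return [(v - mu) / sigma for v in vals]

def binary_template(pattern, g_b, g_w):
    """Render a flattened binary pattern with the given black/white gray levels."""
    return [g_w if bit else g_b for bit in pattern]

# Hypothetical flattened 3x3 binary layout (1 = white, 0 = black).
pattern = [1, 0, 0, 1, 1, 0, 1, 0, 0]
full_contrast = normalize(binary_template(pattern, 0, 255))
reduced_white = normalize(binary_template(pattern, 40, 160))
max_diff = max(abs(a - b) for a, b in zip(full_contrast, reduced_white))
```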

Fig. 5. The blue line (bottom) represents the average of the NID values for the wheelchair fiducial under a given light level. The purple line (top) represents the average minimum NID values for false matches. The minimum possible NID value for a binary template is zero. [Two panels plotting NID against illumination in klux, one covering roughly 5 to 20 klux and the other 65 to 95 klux.]

Six fiducial designs with alternate grayscale values for $g_w$ were evaluated using the corresponding NID score as our metric. In practice, $g_w$ was chosen to be at least 50% of pure white for sufficient contrast under low lighting conditions. For each fiducial design, the respective template geometry was generated from a composite of the perceived geometry of the fiducials from a set of images obtained at ambient light levels ranging from 5 kLux (shade) to 95 kLux (bright summer day). Results from the best template/fiducial pair are shown in Figure 5. The $\epsilon$ values for the pair are consistently close to the theoretical minimum across all tested light levels. The high NID values obtained for false correspondences (top line) allow the system to identify the wheelchair fiducial with a high degree of reliability.

C. Localization

Recall that the role of the vision system is to provide feedback control to the wheelchair starting from a pre-defined handoff pose at the rear of the vehicle, and ending with the wheelchair docked on the lift platform. Navigation from the driver’s position to the handoff site is accomplished through either local (on-wheelchair) sensor feedback or (in the case of the ATRS-Lite configuration) teleoperation by the driver. In either case, the vision system was required to accommodate significant errors in wheelchair pose. Requirements dictated minimum tolerances of ±0.5 meters in x-y position and ±30° in orientation about the objective pose.

To compensate for this uncertainty, a search window $W$ about the handoff site was tessellated into a set $S$ of overlapping subregions, as shown in Figure 6. Each subregion was unwarped separately using the virtual camera paradigm as outlined in Section V-A. The number and dimensions of the subregions were determined by the search region size and the wheelchair dimensions. The physical distance between fiducials was used as a constraint on the size of each $S_i \in S$ to ensure that at most one fiducial was completely visible in each subregion. The overlapping region dimensions were chosen such that $\{B \mid B \in W\} = \bigcup_{i=1}^{|S|} \{B \mid B \in S_i\}$.

To identify the two wheelchair fiducials, the NID was calculated for every member of $S$ using an $m \times n$ template $T$. The set $\mathcal{B}$ was formed from the best candidate blocks $B_i^* \in S_i\ \forall\ S_i \in S$. The pair of blocks $P_{ij} = (B_i^*, B_j^*)$ was considered a valid wheelchair candidate if:

• $\|B_i^* - B_j^*\|_2$ in world coordinates was within a pre-defined tolerance of the actual distance between the wheelchair fiducials.

• Their distance in pixel coordinates was greater than $\max(m, n)$.

Among the set $P$ of valid candidates, the pair of regions

$$P^* = \arg\max\left(\epsilon(T, S_i, B_i^*) + \epsilon(T, S_j, B_j^*)\right) \quad \forall\ P_{ij} \in P \tag{10}$$

would then correspond to the fiducial positions in the given window. If no such pair was found, the search window was expanded by the size of one tessellation subregion and the process repeated. If the system failed to localize the wheelchair after two iterations, user intervention was required.
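The two validity conditions on a candidate pair can be sketched as a simple filter over the best block from each subregion. The data layout (dictionaries with `world` and `pixel` keys), the function name, and the numeric values in the usage example are hypothetical; the paper gives neither the fiducial spacing nor the tolerance.

```python
import math
from itertools import combinations

def valid_pairs(candidates, fiducial_dist, tol, m, n):
    """Return candidate pairs that satisfy both validity conditions.

    candidates: best block per subregion, each a dict with
      'world': (x, y) position in meters, 'pixel': (u, v) position in pixels.
    fiducial_dist: actual distance between the two fiducials (meters).
    tol: allowed deviation from fiducial_dist (meters).
    m, n: template dimensions in pixels.
    """
    pairs = []
    for a, b in combinations(candidates, 2):
        world_d = math.dist(a["world"], b["world"])
        pixel_d = math.dist(a["pixel"], b["pixel"])
        # Condition 1: world separation matches the known fiducial spacing.
        # Condition 2: the two blocks are not the same fiducial found twice
        # in overlapping subregions.
        if abs(world_d - fiducial_dist) <= tol and pixel_d > max(m, n):
            pairs.append((a, b))
    return pairs
```

For example, with a hypothetical spacing of 0.5 m and tolerance of 0.05 m, two candidates 0.52 m apart pass, while a third candidate 1.5 m away pairs with neither.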

Fig. 6. Tessellation of the search window around the predefined handoffsite. A black cross marks the 32x32 area with the highest NID value foreach sub-window.

While the above localization procedure could be conducted with the chair stationary, subsequent iterations had to be accomplished at 15 Hz on a constrained processor budget (1.1 GHz Pentium M) to support real-time control requirements. A multi-resolution approach [20] was initially investigated, but quickly abandoned due to the decimation effects on the small, binary template. Instead, we employed a windowed approach. Fiducial velocity was estimated using world position estimates from the two previous images, and only a 144×144 pixel region centered about the predicted position of each fiducial was processed. This supported localization at camera frame rate (15 Hz), but constrained the maximum wheelchair velocity to 2.5 m/s. This constraint was not significant, as in practice the velocity was ≤ 0.5 m/s.

Fig. 7. Hybrid controller operation. Recovery mode is activated at pose A (left). The chair then employs three open-loop controllers to rotate (B), translate (C) and rotate again (D) in order to align the x-axes of the robot and world frames. The primary path-follower controller is then activated in order to dock the chair (E). [Three panels in the world X-Y plane showing poses A through E and the robot axes $x_R$, $y_R$.]
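The windowed search can be sketched as a constant-velocity prediction from the two previous position estimates. This is an assumption-laden illustration: the paper does not give its prediction formula, and `meters_per_pixel` (the ground-plane scale of the virtual image) is a hypothetical parameter.

```python
def predict_window(prev2, prev1, meters_per_pixel, window=144):
    """Predict the next fiducial position and its search window.

    prev2, prev1: (x, y) world estimates from the two previous frames,
    oldest first. Returns (predicted_xy, (xmin, ymin, xmax, ymax)) in meters.
    """
    # Displacement over one frame serves as the velocity estimate.
    dx = prev1[0] - prev2[0]
    dy = prev1[1] - prev2[1]
    pred = (prev1[0] + dx, prev1[1] + dy)
    # Half-width of the square window, converted from pixels to meters.
    half = 0.5 * window * meters_per_pixel
    return pred, (pred[0] - half, pred[1] - half,
                  pred[0] + half, pred[1] + half)
```

The window half-width bounds how far the fiducial may deviate from its predicted position between frames, which is why a fixed window size at a fixed frame rate implies a maximum trackable velocity.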

D. Vision-based Feedback Control

With the vision system in place to provide feedback, the task of our controller is to navigate the wheelchair onto the lift platform where it will lock into the docking station, thereby securing it from further movement. As system reliability is of utmost importance, the controller should also be sufficiently robust to recover the wheelchair from handoff poses outside system specifications. To this end, we employed a hybrid controller design. The primary mode employs a proportional-derivative (PD) controller for path following. The secondary recovery mode is employed when the initial chair pose would inhibit successful docking by the primary controller. A detailed outline of the controller design follows.

1) Path Following Mode: To ground the notation for our control problem, we first define our coordinate frames as in Figure 7 (left). The objective handoff pose for the wheelchair in the world frame is $\mathbf{x}_D = [x_D, y_D, \theta_D]^T = [-1.8, 0, 0]^T$. The robot coordinate frame is centered on the wheelchair’s drive axle. When the chair is ideally docked, its $X_R$-$Y_R$ axes are coincident with the $X$-$Y$ axes for the world frame.

The wheelchair used during system development employed a differential-drive system. While it might appear straightforward to employ a point-to-point controller as a result, there are two important caveats. First, we must ensure that the path taken by the wheelchair stays in close proximity to and avoids collisions with the vehicle. Second, the velocity $v(t)$ of the chair at its objective pose $[x, y, \theta]^T = [0, 0, 0]^T$ must be significantly greater than zero. This was dictated by the docking procedure, which required that a plough affixed to the chair base engage the dock with sufficient momentum to actuate the locking mechanism. As a result, we chose instead to model the control problem as a particular case of path following, and applied I/O feedback linearization techniques to design a proportional-derivative (PD) controller to drive the chair along the x-axis [21]. The kinematics in terms of the path variables then become

$$\begin{aligned} \dot{x} &= v(t)\cos\theta \\ \dot{y} &= v(t)\sin\theta \\ \dot{\theta} &= \omega \end{aligned} \tag{11}$$

If we assume that $v(t)$ is piecewise constant, we obtain

$$\ddot{y} = \omega\, v(t)\cos\theta \equiv u \tag{12}$$

and with error as $e = y - y_d = y$ we obtain

$$u = -k_v \dot{y} - k_p y \tag{13}$$

which with Equations 11 and 12 yields

$$\omega = -k_v \tan\theta - \frac{k_p\, y}{v\cos\theta} \tag{14}$$

where $\omega$ is the angular velocity command applied by the robot and $k_v, k_p$ are positive controller gains. What is convenient about this derivation is that although the controller contains a derivative term, it only relies upon observations of the chair pose, which are readily available through the localization process.
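Equation 14 and the kinematics of Equation 11 can be exercised with a short noise-free simulation. This is a sketch rather than the authors' simulator: the forward-Euler integration, the function names, and the gain pair $k_p = 1.0$, $k_v = 2.0$ are assumptions, while the 0.3 m/s linear velocity, 0.25 rad/s angular velocity limit, and 15 Hz update rate follow the values reported for the simulations.

```python
import math

def omega_cmd(y, theta, v, kp, kv):
    """Eq. 14: angular velocity command for following the world x-axis."""
    return -kv * math.tan(theta) - kp * y / (v * math.cos(theta))

def simulate(x, y, theta, v=0.3, kp=1.0, kv=2.0, dt=1.0 / 15.0, w_max=0.25):
    """Integrate Eq. 11 with forward Euler until the chair reaches x = 0.

    Returns the final (x, y, theta). No noise is modelled.
    """
    while x < 0.0:
        w = omega_cmd(y, theta, v, kp, kv)
        w = max(-w_max, min(w_max, w))  # saturate the angular velocity
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
    return x, y, theta

# Start at the nominal handoff x with a 0.2 m lateral offset.
final_x, final_y, final_theta = simulate(-1.8, 0.2, 0.0)
```

Note that the command depends only on the observed pose $(y, \theta)$ and the commanded speed $v$; no numerical differentiation of $y$ is needed.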

2) Recovery Mode: The path follower controller design provides reliable docking performance so long as the wheelchair pose at handoff is within expected error tolerances: $[x, y, \theta]^T = [1.8 \pm 0.5\,\text{m},\ 0 \pm 0.5\,\text{m},\ 0 \pm 30^\circ]^T$. However, to enhance system reliability, a recovery mode was also incorporated to address gross pose errors. Recovery mode is activated after the initial localization phase only if it is determined that the primary controller is at significant risk of failing to dock the chair. In this case, we exploit the chair’s two degrees of mobility to align the chair along the x-axis in our world frame. This is accomplished through the following set of control inputs.

$$d\theta = -\frac{\pi}{2}\,\mathrm{sgn}(y) - \theta \tag{15}$$

$$dx_R = \mathrm{abs}(y) \tag{16}$$

$$d\theta = -\theta \tag{17}$$

The effects of these inputs are reflected in Figure 7. Equation 15 reorients the wheelchair from its initial pose (A) to one that is normal to the x-axis (pose B). After this the chair translates to $y = 0$ (pose C), and then rotates once more to align the $x_R$ and $x$ axes (pose D). At this point, the controller switches to the path follower mode and docks the chair onto the lift platform (pose E). For the proof-of-concept phase, the recovery mode employed three open-loop controllers with feedback from the vision system provided only immediately prior to each of the control inputs. In practice, a more robust control approach could be used.
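The three inputs of Equations 15 through 17 can be sketched as follows. The sketch assumes each open-loop move executes exactly, so the heading used in Equation 17 is the one reached after the first rotation; on the actual system the pose is re-measured by the vision system before each input. The function name is hypothetical.

```python
import math

def recovery_inputs(y, theta):
    """Return (first rotation, forward translation, second rotation).

    y: lateral offset from the world x-axis (meters).
    theta: heading in the world frame (radians).
    """
    sgn = (y > 0) - (y < 0)
    # Eq. 15: rotate to face the x-axis (heading -pi/2 if y > 0, +pi/2 if y < 0).
    d_theta_1 = -math.pi / 2.0 * sgn - theta
    # Eq. 16: drive forward until y = 0.
    d_x_r = abs(y)
    # Eq. 17: rotate back so the robot and world x-axes align.
    heading_after_first_turn = theta + d_theta_1
    d_theta_2 = -heading_after_first_turn
    return d_theta_1, d_x_r, d_theta_2
```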

VI. EXPERIMENTAL RESULTS

A. Simulations

1) Localization: Objective evaluation of localization performance for the vision system is a non-trivial task. Nevertheless, we estimated an upper bound on performance by evaluating accuracy of the camera calibration. Intrinsic parameters were estimated using [17]. Extrinsic parameters were then determined from a set of point correspondences marked by hand on a test image to known world points on a calibration grid. Reprojections of the same world points onto the image plane were then calculated from the complete set of calibration parameters. By assuming the respective points were coplanar, we could estimate the potential accuracy of fiducial tracking. These results are summarized in Figure 8. Reprojection errors averaged 1.0 pixels over the sample set. At the plane $z = Z_f$ corresponding to the fiducial height, this amounted to only several millimeters in mean position error. Again, these estimates are overly optimistic as they do not account for errors in fiducial segmentation, changes in camera calibration, etc. Still, they were repeatable even after (albeit limited) vehicle driving.

Fig. 8. Evaluation of calibration performance. Results indicate that a tracking accuracy of several millimeters in position is possible.

2) Control: Prior to operations on the chair itself, extensive simulations were conducted to evaluate controller performance. We initially investigated whether a simpler proportional controller would be sufficient for the task, and quickly determined this was not the case. Strong oscillations around the x-axis were observed for a range of $k_p$. This was not unexpected. From examining Equation 14, we see that the proportional term does little to correct errors in chair orientation in the neighborhood of the y-axis. In contrast, the derivative term primarily corrects errors in orientation.

Fig. 9. Sample simulation results for a sub-optimal gain set (25,000 trials, $k_p = 0.5$, $k_v = 1.41$, plotted in the X-Y plane). The failed initial poses (red stars) resulted from large orientation errors at a position near the lift platform.

In evaluating the PD version of the controller, we focused on its performance under critically damped behavior, i.e., $k_v = 2\sqrt{k_p}$. For $k_p$ values ranging from 0.1 to 2.0 (and corresponding values for $k_v$), 25,000 simulated docking trials were run for each gain set. The initial position of the chair was constrained to a 1 meter square about the target handoff position - the specification for the system. Orientations were drawn at random from a zero mean Gaussian with $\sigma = 0.1$ radians. Linear velocities were constant (0.3 m/s) and angular velocities were constrained to a maximum of 0.25 rad/s. Closed loop control was done at 15 Hz. These values were identical to the actual system, and were dictated by safety considerations. To further enhance simulation fidelity, unmodelled zero-mean Gaussian noise was introduced into both the localization estimate and the control input to the vehicle.
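The critical damping condition follows from the closed-loop error dynamics. As a brief sketch, assuming $v$ is constant and the angular velocity command is not saturated, substituting Equation 13 into Equation 12 gives

$$\ddot{y} + k_v \dot{y} + k_p y = 0,$$

whose characteristic polynomial $s^2 + k_v s + k_p$ has roots

$$s = \frac{-k_v \pm \sqrt{k_v^2 - 4k_p}}{2}.$$

The roots coincide, giving the fastest non-oscillatory response, when $k_v^2 = 4k_p$, that is, $k_v = 2\sqrt{k_p}$. For $k_p = 0.5$ this yields $k_v = 2\sqrt{0.5} \approx 1.41$.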

Figure 9 shows the controller performance for the gain set $k_p = 0.5$, $k_v = 1.41$. In these simulations, a given trial was considered successful if the wheelchair position error $e \le 4$ cm and chair orientation $|\theta| \le 15^\circ$ at $x = 0$. These values were based upon empirical observations of which final configurations would still permit proper docking. In this example, over 99% of trials were successful.

Figure 10 shows controller performance across a range of gain sets. This indicates excellent performance for 0.9 ≤ kp ≤ 1.5. These results were in close agreement with values used on the actual system, where gains in the range 0.7 ≤ kp ≤ 1.0 yielded excellent performance.

B. Experiments

Extensive experimentation was conducted with the ATRS system to support a public demonstration to industry representatives. This included 25 complete docking/undocking trials the day prior, as well as a day of demonstrations. Each of these trials was successful, with the vision-based controller demonstrating exceptional reliability and robustness to introduced errors. A representative docking trial is illustrated in Figure 11. This shows beginning, intermediate, and end poses of the chair as tracked by the vision system. The chair path as estimated by the vision system is also shown. A Dragonfly™ high-resolution (1024x768) digital video camera with a 2.6 mm focal length lens was used for all experiments.

Fig. 11. Testing the vision-based control system with moderate position and orientation errors at the handoff site. The lower figure illustrates the path followed by the wheelchair as estimated by the vision system.

[Figure 10 plot: "Reliability vs. PD Gains (25,000 trials each)"; horizontal axis kp, vertical axis Reliability.]

Fig. 10. Simulation results for critically damped behavior. Proportional gains in the range of 0.9 to 1.5 exhibited near perfect reliability under uncertainty.

A second trial demonstrating the recovery mode of the hybrid controller is also illustrated in Figure 12. In this case, significant errors in chair position and orientation were deliberately introduced. These perturbations were readily accommodated by the controller design, and the wheelchair docked with relative ease.

Finally, Figure 13 shows a snapshot from a live demonstration trial, with the wheelchair, van and lift platform clearly visible.

VII. DISCUSSION

The primary objective of this work was to determine the feasibility of using vision-based feedback control for autonomously docking a powered wheelchair onto a vehicle lift platform. Using a high resolution camera to provide state feedback and I/O feedback linearization techniques for controller design, our approach was able to achieve a high level of docking reliability for the proof-of-concept ATRS under a broad range of illumination conditions. However, we should emphasize this was a proof-of-concept phase. While we were sufficiently confident with performance and reliability to demonstrate the ATRS to industry representatives (Figure 13), significant future work will be required in order to make the system production ready.

Fig. 13. Public demonstration of the ATRS system. The chair, van, and lift components are clearly visible.

To this point, we have assumed a fixed camera calibration. For a commercial vehicle system this is unrealistic, as relatively small calibration errors (on the order of 10 pixels) dramatically degrade system performance. As a result, we are investigating a means to automatically calibrate extrinsic camera parameters at the onset of each docking trial. Our current approach attempts to match SIFT keypoints [22] from the lift platform. To improve performance, we first rectified the images (for lens distortion) prior to extracting keypoints. This typically increased the number of matched keypoints by 15-40%. We also constrained valid keypoint matches geometrically using a distance threshold of 80 pixels from the original calibration image. This assumes only modest changes to camera extrinsics.
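
The geometric constraint on keypoint matches can be illustrated with a short filter. This is a sketch under stated assumptions, not the implementation used in the experiments: the displacement is measured as Euclidean pixel distance between a keypoint's location in the calibration image and its matched location in the new image, and the function name and data layout are hypothetical. Only the 80 pixel threshold comes from the text.

```python
import math

MAX_DISPLACEMENT_PX = 80.0  # distance threshold stated in the text


def filter_matches(matches, max_displacement_px=MAX_DISPLACEMENT_PX):
    """Keep matches whose keypoint moved at most max_displacement_px.

    matches: iterable of ((x_ref, y_ref), (x_new, y_new)) pixel pairs, where
    the first point is from the calibration image (assumed layout).
    """
    kept = []
    for (x_ref, y_ref), (x_new, y_new) in matches:
        # Euclidean displacement between the two image locations (assumption).
        displacement = math.hypot(x_new - x_ref, y_new - y_ref)
        if displacement <= max_displacement_px:
            kept.append(((x_ref, y_ref), (x_new, y_new)))
    return kept


# Hypothetical candidate matches: the second one jumps 500 pixels and is rejected.
candidates = [
    ((100.0, 200.0), (110.0, 205.0)),
    ((300.0, 400.0), (300.0, 900.0)),
    ((512.0, 384.0), (560.0, 448.0)),
]
plausible = filter_matches(candidates)
```

Rejecting large displacements in this way is only valid when the camera extrinsics change modestly between calibration and use, which is the assumption noted above.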

Despite these enhancements, results so far have been mixed. If the image illumination was consistent with that of the template image, we were typically able to match 80-100 out of 263 keypoints, of which 85-95% were correctly matched. A sample trial is shown in Figure 14 (left). However, Figure 14 (right) shows a second image taken at a different location several minutes later. In this case, a dramatic change in lighting contrast yielded only 2 matched keypoints out of 263, which was insufficient for camera calibration.

Fig. 12. Testing both hybrid controller modes. Despite the poor initial handoff pose, the vision system and controller were easily able to dock the wheelchair.

Fig. 14. Keypoint matches on the lift platform for camera calibration. Matching performance suffered markedly from sharp changes in ambient lighting.

While preliminary, these results underscore the greatest challenge to commercializing our vision-based control approach: robust tracking performance under the enormous range of illumination conditions encountered outdoors. We are currently investigating means to help mitigate the effects of ambient light levels. For example, the Dragonfly™ camera system used for all experiments employed a passive aperture lens. This necessitated that compensations for scene brightness be made through either camera shutter and CCD gain adjustments, or via software (e.g. histogram equalization). These were quickly found to be inadequate, and prevented the use of more robust feature tracking algorithms (e.g. SIFT), as dramatic changes in illumination (such as the sun appearing from behind a cloud) would significantly distort image appearance. And while typically reliable under such conditions, our fiducial tracker would fail under low light levels. Conditions near sunset generated a fairly consistent failure mode. As a result, we are currently investigating active aperture lenses and control approaches to achieve improved invariance to ambient light levels.

Finally, levels of redundancy will need to be incorporated should the system ever be commercialized. We are currently investigating the addition of a vision system on the wheelchair itself. Alternately, fusing camera data with the chair’s laser rangefinder could provide redundancy against each sensor’s failure modes.

ACKNOWLEDGMENTS

Special thanks to the entire ATRS Team, especially Herman Herman and Jeff McMahill (CMU) for all of their work on the wheelchair integration, Tom Panzarella, Jr. (Freedom Sciences), and Mike Martin and Tom Panzarella, Sr. (Cook Technologies). This project was funded in part by a grant from the Commonwealth of Pennsylvania, Department of Community and Economic Development.

REFERENCES

[1] U.S. DOT NHTSA, Vehicle Modifications to Accommodate People with Disabilities, http://www.nhtsa.dot.gov.

[2] U.S. DOT NHTSA, Research Note: Wheelchair User Injuries and Deaths Associated with Motor Vehicle Related Incidents, Sep 1997.

[3] D. Miller and M. Slack, “Design and testing of a low-cost robotic wheelchair prototype,” Autonomous Robots, vol. 1, no. 3, 1995.

[4] H. Yanco, “Wheelesley, a robotic wheelchair system: indoor navigation and user interface,” Lecture Notes in Artificial Intelligence: Assistive Technology and Artificial Intelligence, pp. 256–268, 1998.

[5] T. Gomi and A. Griffith, “Developing intelligent wheelchairs for the handicapped,” Lecture Notes in Artificial Intelligence: Assistive Technology and Artificial Intelligence, pp. 150–178, 1998.

[6] S. Levine, Y. Koren, J. Borenstein, R. Simpson, D. Bell, and L. Jaros, “The navchair assistive wheelchair navigation system,” IEEE Transactions on Rehabilitation Engineering, vol. 7, no. 4, pp. 443–451, December 1999.

[7] R. Simpson and S. Levine, “Automatic adaptation in the navchair assistive wheelchair navigation system,” IEEE Transactions on Rehabilitation Engineering, vol. 7, no. 4, pp. 452–463, 1999.

[8] R.S. Rao, K. Conn, S.H. Jung, J. Katupitiya, T. Kientz, V. Kumar, J. Ostrowski, S. Patel, and C.J. Taylor, “Human robot interaction: application to smart wheelchairs,” in Proc. of the IEEE International Conference on Robotics and Automation (ICRA), Washington, DC, 2002, pp. 3583–3588.

[9] S.P. Parikh, R.S. Rao, S. Jung, V. Kumar, J. Ostrowski, and C.J. Taylor, “Human robot interaction and usability studies for a smart wheelchair,” in Proc. of the IEEE International Conference on Robotics and Automation (ICRA), Las Vegas, Nevada, 2003, pp. 3206–3211.

[10] S.P. Parikh, V. Grassi, V. Kumar, and J. Okamoto, “Incorporating user inputs in motion planning for a smart wheelchair,” in Proc. of the IEEE International Conference on Robotics and Automation (ICRA), New Orleans, LA, 2004, pp. 2043–2048.

[11] G. Bourhis and P. Pino, “Mobile robotics and mobility assistance for people with motor impairments: rational justification for the vahm project,” IEEE Trans Rehab Eng, vol. 4, no. 1, pp. 7–12, 1996.

[12] G. Bourhis, O. Horn, O. Habert, and A. Pruski, “An autonomous vehicle for people with motor disabilities,” IEEE Robotics Automation Magazine, vol. 8, no. 1, pp. 20–28, 2001.

[13] R. Simpson, D. Poirot, and M.F. Baxter, “Evaluation of the hephaestus smart wheelchair system,” in International Conference on Rehabilitation Robotics, 1999, pp. 99–105.

[14] R.C. Simpson, D. Poirot, and M.F. Baxter, “The hephaestus smart wheelchair system,” IEEE Trans. on Neural Systems and Rehabilitation Engineering, vol. 10, no. 2, pp. 118–122, 2002.

[15] S. Seitz and C. Dyer, “Image morphing,” in Proc. SIGGRAPH 96, 1996, pp. 21–30.

[16] J. Heikkila and O. Silven, “A four-step camera calibration procedure with implicit image correction,” in Computer Vision and Pattern Recognition Conference, 1997.

[17] California Institute of Technology, Camera Calibration Toolbox, http://www.vision.caltech.edu/bouguetj/calib_doc/.

[18] A. Fusiello, E. Trucco, T. Tommasini, and V. Roberto, “Improving feature tracking with robust statistics,” Pattern Analysis and Application, vol. 2, pp. 312–320, 1999.

[19] Ch. Gräßl, T. Zinßer, and H. Niemann, Pattern Recognition, chapter Illumination insensitive template matching with hyperplanes, pp. 273–280, Springer, 2003.

[20] E. Adelson, C. Anderson, J. Bergen, P. Burt, and J. Ogden, “Pyramid methods in image processing,” vol. 29-6, 1984.

[21] A. De Luca, G. Oriolo, and C. Samson, Robot Motion Planning and Control, chapter Feedback control of a nonholonomic car-like robot, pp. 171–253, Springer-Verlag, 1998.

[22] D. Lowe, “Distinctive image features from scale-invariant keypoints,” International Journal of Computer Vision, vol. 60-2, pp. 91–110, 2004.