
Strata 2013: Tutorial-- How to Create Predictive Models in R using Ensembles



How to Create Predictive Models in R using Ensembles

Strata - Hadoop World, New York

October 28, 2013

Giovanni Seni, Ph.D. Intuit

@IntuitInc

Santa Clara University

© 2013 G.Seni 2013 Strata Conference + Hadoop World 2

Reference


Overview

• Motivation, In a Nutshell & Timeline

• Predictive Learning & Decision Trees

• Ensemble Methods - Diversity & Importance Sampling

– Bagging

– Random Forest

– AdaBoost

– Gradient Boosting

– Rule Ensembles

• Summary


Motivation

Volume 9 Issue 2


Motivation (2)

“1st Place Algorithm Description: … 4. Classification: Ensemble classification methods are used to combine multiple classifiers. Two separate Random Forest ensembles are created based on the shadow index (one for the shadow-covered area and one for the shadow-free area). The random forest “Out of Bag” error is used to automatically evaluate features according to their impact, resulting in 45 features selected for the shadow-free and 55 for the shadow-covered part.”


Motivation (3)

• “What are the best of the best techniques at winning Kaggle competitions?

– Ensembles of Decision Trees

– Deep Learning

account for 90% of top 3 winners!”

Jeremy Howard, Chief Scientist of Kaggle, KDD 2013

⇒ Key common characteristics:

– Resistance to overfitting

– Universal approximators


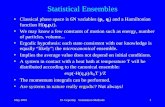

Ensemble Methods in a Nutshell

• “Algorithmic” statistical procedure

• Based on combining the fitted values from a number of fitting attempts

• Loosely related to:

– Iterative procedures

– Bootstrap procedures

• Original idea: a “weak” procedure can be strengthened if it can operate “by committee”

– e.g., combining low-bias/high-variance procedures

• Accompanied by interpretation methodology


Timeline

• CART (Breiman, Friedman, Stone, Olshen, 1983)

• Bagging (Breiman, 1996)

– Random Forest (Ho, 1995; Breiman, 2001)

• AdaBoost (Freund, Schapire, 1997)

• Boosting – a statistical view (Friedman et al., 2000)

– Gradient Boosting (Friedman, 2001)

– Stochastic Gradient Boosting (Friedman, 1999)

• Importance Sampling Learning Ensembles (ISLE) (Friedman, Popescu, 2003)


Timeline (2)

• Regularization – variance control techniques:

– Lasso (Tibshirani, 1996)

– LARS (Efron, 2004)

– Elastic Net (Zou, Hastie, 2005)

– GLMs via Coordinate Descent (Friedman, Hastie, Tibshirani, 2008)

• Rule Ensembles (Friedman, Popescu, 2008)


Overview

• Motivation, In a Nutshell & Timeline

➢ Predictive Learning & Decision Trees

• Ensemble Methods

• Summary


Predictive Learning Procedure Summary

• Given "training" data $D = \{y_i, x_{i1}, x_{i2}, \ldots, x_{in}\}_1^N = \{y_i, \mathbf{x}_i\}_1^N$

– $D$ is a random sample from some unknown (joint) distribution

• Build a functional model $y = F(x_1, x_2, \ldots, x_n) = F(\mathbf{x})$

– Offers adequate and interpretable description of how the inputs affect the outputs

– Parsimony is an important criterion: simpler models are preferred for the sake of scientific insight into the $\mathbf{x}$-$y$ relationship

• Need to specify: ⟨model, score criterion, search strategy⟩


Predictive Learning Procedure Summary (2)

• Model: underlying functional form sought from data

$F(\mathbf{x}) = F(\mathbf{x}; \mathbf{a}) \in \mathcal{F}$, a family of functions indexed by $\mathbf{a}$

• Score criterion: judges (lack of) quality of fitted model

– Loss function $L(y, F)$: penalizes individual errors in prediction

– Risk $R(\mathbf{a}) = E_{y,\mathbf{x}}\, L(y, F(\mathbf{x}; \mathbf{a}))$: the expected loss over all predictions

• Search Strategy: minimization procedure of score criterion

$\mathbf{a}^* = \arg\min_{\mathbf{a}} R(\mathbf{a})$


Predictive Learning Procedure Summary (3)

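• “Surrogate” score criterion:

– Training data: $\{y_i, \mathbf{x}_i\}_1^N \sim p(\mathbf{x}, y)$

– $p(\mathbf{x}, y)$ unknown ⇒ $\mathbf{a}^*$ unknown

⇒ Use approximation: Empirical Risk

• $\hat{R}(\mathbf{a}) = \frac{1}{N} \sum_{i=1}^N L(y_i, F(\mathbf{x}_i; \mathbf{a}))$ ⇒ $\hat{\mathbf{a}} = \arg\min_{\mathbf{a}} \hat{R}(\mathbf{a})$

• If not $N \gg n$ ⇒ $R(\hat{\mathbf{a}}) \gg R(\mathbf{a}^*)$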
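The empirical-risk recipe fits in a few lines of code. This is a minimal sketch that rests on our own assumptions: squared-error loss, a one-parameter constant model $F(\mathbf{x}; a) = a$, a made-up three-point sample, and a grid search in place of a real optimizer.

```python
from typing import Callable, List, Sequence, Tuple

Sample = List[Tuple[float, Sequence[float]]]   # [(y_i, x_i), ...]

def empirical_risk(loss: Callable[[float, float], float],
                   model: Callable[[Sequence[float]], float],
                   data: Sample) -> float:
    """R_hat(a) = (1/N) * sum_i L(y_i, F(x_i; a))."""
    return sum(loss(y, model(x)) for y, x in data) / len(data)

def squared_error(y: float, f: float) -> float:
    return (y - f) ** 2

# Hypothetical toy training sample {y_i, x_i}_1^N with N = 3, n = 1.
data: Sample = [(1.0, [0.2]), (2.0, [0.5]), (3.0, [0.9])]

# Constant model F(x; a) = a; minimize the empirical risk over a grid of a values.
grid = [i / 10 for i in range(41)]            # a in {0.0, 0.1, ..., 4.0}
a_hat = min(grid, key=lambda a: empirical_risk(squared_error, lambda x: a, data))
print(a_hat)   # 2.0: the sample mean of y, the minimizer under squared error
```

With so few points, $\hat{\mathbf{a}}$ can be far from $\mathbf{a}^*$. That is the $N \gg n$ caveat above.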


Predictive Learning Example

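• A simple data set:

| Attribute-1 ($x_1$) | Attribute-2 ($x_2$) | Class ($y$) |
|---|---|---|
| 1.0 | 2.0 | blue |
| 2.0 | 1.0 | green |
| … | … | … |
| 4.5 | 3.5 | ? |

• What is the class of the new point $(x_1, x_2) = (4.5, 3.5)$?

• Many approaches… no method is universally better; try several / use committee

[Figure: the data set plotted in the ($x_1$, $x_2$) plane]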


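Predictive Learning Example (2)

• Ordinary Linear Regression (OLR)

– Model: $F(\mathbf{x}) = a_0 + \sum_{j=1}^n a_j x_j$; $\hat{y}$ = one class if $F(\mathbf{x}) \ge 0$, the other class otherwise

⇒ Not flexible enough

[Figure: the data set in the ($x_1$, $x_2$) plane with a linear decision boundary]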


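Decision Trees Overview

• Model: $\hat{y} = \hat{T}(\mathbf{x}) = \sum_{m=1}^M \hat{c}_m I_{\hat{R}_m}(\mathbf{x})$, where $I_R(\mathbf{x}) = 1$ if $\mathbf{x} \in R$, 0 otherwise

– $\{R_m\}_1^M$: sub-regions of input variable space

[Figure: the ($x_1$, $x_2$) plane partitioned into regions R1, R2, R3, R4 by the splits $x_1 \ge 5$, $x_2 \ge 3$ and $x_1 \ge 2$]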


Decision Trees Overview (2)

• Score criterion:

– Classification: "0-1 loss" ⇒ misclassification error (or surrogate):

$$\{\hat{c}_m, \hat{R}_m\}_1^M = \arg\min_{T_M = \{c_m, R_m\}_1^M} \sum_{i=1}^N I(y_i \ne T_M(\mathbf{x}_i))$$

– Regression: least squares, i.e., $L(y, \hat{y}) = (y - \hat{y})^2$:

$$\{\hat{c}_m, \hat{R}_m\}_1^M = \arg\min_{T_M = \{c_m, R_m\}_1^M} \sum_{i=1}^N (y_i - T_M(\mathbf{x}_i))^2$$

• Search: find $\hat{T} = \arg\min_T R(T)$

– i.e., find best regions $R_m$ and constants $c_m$


Decision Trees Overview (3)

• Joint optimization with respect to $R_m$ and $c_m$ simultaneously is very difficult

⇒ use a greedy iterative procedure

[Figure: recursive partitioning. Split $(j_1, s_1)$ divides $R_0$ into $R_1$ and $R_2$; $(j_2, s_2)$ divides $R_1$ into $R_3$ and $R_4$; $(j_3, s_3)$ divides $R_2$ into $R_5$ and $R_6$; $(j_4, s_4)$ divides $R_6$ into $R_7$ and $R_8$]
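The greedy step can be sketched for least-squares regression. At each node, every variable $j$ and every midpoint $s$ between adjacent observed values is tried, and the pair with the smallest summed squared error of the two children wins; growth stops when a split no longer reduces that error. `sse`, `best_split`, `grow` and `predict` are hypothetical helper names used only for illustration. Real CART implementations (e.g., R's rpart) add minimum node sizes, surrogate splits and cost-complexity pruning.

```python
from typing import Dict, List, Optional, Tuple

def sse(ys: List[float]) -> float:
    """Least-squares node cost: sum of squared deviations from the node mean."""
    if not ys:
        return 0.0
    m = sum(ys) / len(ys)
    return sum((v - m) ** 2 for v in ys)

def best_split(X: List[List[float]], y: List[float]) -> Optional[Tuple[int, float, float]]:
    """Greedy step: (j, s, cost) minimizing SSE({x_j <= s}) + SSE({x_j > s})."""
    best = None
    for j in range(len(X[0])):
        values = sorted(set(row[j] for row in X))
        for lo, hi in zip(values, values[1:]):        # midpoints as candidate s
            s = (lo + hi) / 2
            left = [t for row, t in zip(X, y) if row[j] <= s]
            right = [t for row, t in zip(X, y) if row[j] > s]
            cost = sse(left) + sse(right)
            if best is None or cost < best[2]:
                best = (j, s, cost)
    return best

def grow(X: List[List[float]], y: List[float], depth: int) -> Dict:
    """Recursive partitioning: split while depth remains and the SSE decreases."""
    split = best_split(X, y) if depth > 0 else None
    if split is None or split[2] >= sse(y):
        return {"c": sum(y) / len(y)}                  # terminal-node constant c_m
    j, s, _ = split
    L = [i for i, row in enumerate(X) if row[j] <= s]
    R = [i for i, row in enumerate(X) if row[j] > s]
    return {"j": j, "s": s,
            "left": grow([X[i] for i in L], [y[i] for i in L], depth - 1),
            "right": grow([X[i] for i in R], [y[i] for i in R], depth - 1)}

def predict(node: Dict, x: List[float]) -> float:
    while "c" not in node:
        node = node["left"] if x[node["j"]] <= node["s"] else node["right"]
    return node["c"]

X = [[1.0, 0.0], [2.0, 0.0], [3.0, 1.0], [4.0, 1.0]]
y = [1.0, 1.0, 5.0, 5.0]
tree = grow(X, y, depth=2)
print(best_split(X, y))                                         # (0, 2.5, 0.0)
print(predict(tree, [3.5, 1.0]), predict(tree, [1.2, 0.0]))     # 5.0 1.0
```

Because each split is chosen without look-ahead, a poor choice near the root constrains everything below it. This is the source of the high variance discussed under the limitations below.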


Decision Trees What is the “right” size of a model?

• Dilemma

– If model (# of splits) is too small, then approximation is too crude (bias) ⇒ increased errors

– If model is too large, then it fits the training data too closely (overfitting, increased variance) ⇒ increased errors

[Figure: an (x, y) scatter of data points, fit by a tree with two constants ($c_1$, $c_2$) vs. a tree with three constants ($c_1$, $c_2$, $c_3$)]


Decision Trees What is the “right” size of a model? (2)

– Right-sized tree, $M^*$, when test error is at a minimum

– Error on the training data is not a useful estimator!

• If a test set is not available, an alternative method is needed

[Figure: prediction error vs. model complexity (low to high) for the training and test samples. High bias/low variance at low complexity, low bias/high variance at high complexity; the test error is minimized at $M^*$]


Decision Trees Pruning to obtain “right” size

• Two strategies

– Prepruning: stop growing a branch when information becomes unreliable

• #($R_m$), i.e., number of data points, too small ⇒ same bound everywhere in the tree

• Next split not worthwhile ⇒ not a sufficient condition

– Postpruning: take a fully-grown tree and discard unreliable parts (i.e., not supported by test data)

• C4.5: pessimistic pruning

• CART: cost-complexity pruning (more statistically grounded)


Decision Trees Hands-on Exercise

• Start RStudio

• Navigate to directory:

example.1.LinearBoundary

• Set working directory: use setwd() or with GUI

• Load and run “fitModel_CART.R”

• If curious, also see “gen2DdataLinear.R”

• After boosting discussion, load and run “fitModel_GBM.R”

[Figure: scatter plot of the example data over $x_1$ and $x_2$, both from 0 to 1]


Decision Trees Key Features

• Ability to deal with irrelevant inputs

– i.e., automatic variable subset selection

– Measure anything you can measure

– Score provided for selected variables ("importance")

• No data preprocessing needed

– Naturally handle all types of variables

• numeric, binary, categorical

– Invariant under monotone transformations $x_j \to g_j(x_j)$:

• Variable scales are irrelevant

• Immune to bad distributions (e.g., outliers)


Decision Trees Key Features (2)

• Computational scalability

– Relatively fast: $O(nN \log N)$

• Missing value tolerant

– Moderate loss of accuracy due to missing values

– Handling via "surrogate" splits

• "Off-the-shelf" procedure

– Few tunable parameters

• Interpretable model representation

– Binary tree graphic


Decision Trees Limitations

• Discontinuous piecewise constant model

– In order to have many splits you need to have a lot of data

• In high dimensions, you often run out of data after a few splits

– Also note error is bigger near region boundaries

[Figure: a piecewise-constant fit $F(x)$ plotted against $x$]


Decision Trees Limitations (2)

• Not good for low interaction $F^*(\mathbf{x})$

– e.g., $F^*(\mathbf{x}) = a_0 + \sum_{j=1}^n a_j x_j$ or, more generally, $F^*(\mathbf{x}) = \sum_{j=1}^n f_j^*(x_j)$ (no interaction, additive) is the worst function for trees

– In order for $x_l$ to enter the model, the tree must split on it

• Path from root to node is a product of indicators

• Not good for $F^*(\mathbf{x})$ that has dependence on many variables

– Each split reduces training data for subsequent splits (data fragmentation)


Decision Trees Limitations (3)

• High variance caused by greedy search strategy (local optima)

– Errors in upper splits are propagated down to affect all splits below them

⇒ Small changes in data (sampling fluctuations) can cause big changes in tree

– Very deep trees might be questionable

– Pruning is important

• What to do next?

– Live with problems

– Use other methods (when possible)

– Fix-up trees: use ensembles


Overview

• In a Nutshell & Timeline

• Predictive Learning & Decision Trees

➢ Ensemble Methods

– In a Nutshell, Diversity & Importance Sampling

– Generic Ensemble Generation

– Bagging, RF, AdaBoost, Boosting, Rule Ensembles

• Summary


Ensemble Methods In a Nutshell

• Model: $F(\mathbf{x}) = c_0 + \sum_{m=1}^M c_m T_m(\mathbf{x})$

– $\{T_m(\mathbf{x})\}_1^M$: “basis” functions (or “base learners”)

– i.e., linear model in a (very) high dimensional space of derived variables

• Learner characterization: $T_m(\mathbf{x}) = T(\mathbf{x}; \mathbf{p}_m)$

– $\mathbf{p}_m$: a specific set of joint parameter values, e.g., split definitions at internal nodes and predictions at terminal nodes

– $\{T(\mathbf{x}; \mathbf{p})\}_{\mathbf{p} \in \mathcal{P}}$: function class, i.e., set of all base learners of specified family


Ensemble Methods In a Nutshell (2)

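• Learning: two-step process; approximate solution to

$$\{\hat{c}_m, \hat{\mathbf{p}}_m\}_0^M = \arg\min_{\{c_m, \mathbf{p}_m\}_0^M} \sum_{i=1}^N L\left(y_i, c_0 + \sum_{m=1}^M c_m T(\mathbf{x}_i; \mathbf{p}_m)\right)$$

– Step 1: Choose points $\{\mathbf{p}_m\}_1^M$

• i.e., select $\{T_m(\mathbf{x})\}_1^M \subset \{T(\mathbf{x}; \mathbf{p})\}_{\mathbf{p} \in \mathcal{P}}$

– Step 2: Determine weights $\{c_m\}_0^M$

• e.g., via regularized linear regression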


Ensemble Methods Importance Sampling (Friedman, 2003)

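• How to judiciously choose the “basis” functions (i.e., $\{\mathbf{p}_m\}_1^M$)?

• Goal: find “good” $\{\mathbf{p}_m\}_1^M$ so that $F(\mathbf{x}; \{\mathbf{p}_m\}_1^M, \{c_m\}_1^M) \cong F^*(\mathbf{x})$

• Connection with numerical integration:

– $\int_{\mathcal{P}} I(\mathbf{p})\, \partial\mathbf{p} \approx \sum_{m=1}^M w_m I(\mathbf{p}_m)$

[Figure: evenly spaced evaluation points vs. points concentrated where the integrand is large] Accuracy improves when we choose more points from this region…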


Importance Sampling Numerical Integration via Monte Carlo Methods

• $r(\mathbf{p})$ = sampling pdf of $\mathbf{p} \in \mathcal{P}$, i.e., $\{\mathbf{p}_m \sim r(\mathbf{p})\}_1^M$

– Simple approach: $\mathbf{p}_m \overset{\text{iid}}{\sim} r(\mathbf{p})$ with $r(\mathbf{p})$ uniform

– In our problem: $r(\mathbf{p}_m)$ inversely related to $T(\mathbf{x}; \mathbf{p}_m)$’s “risk”

• i.e., $T(\mathbf{x}; \mathbf{p}_m)$ has high error ⇒ lack of relevance of $\mathbf{p}_m$ ⇒ low $r(\mathbf{p}_m)$

• “Quasi” Monte Carlo:

– with/without knowledge of the other points that will be used

• i.e., single point vs. group importance

– Sequential approximation: $\mathbf{p}$’s relevance judged in the context of the (fixed) previously selected points
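A one-dimensional integral makes the Monte Carlo analogy concrete. In this sketch the integrand $I(p)$, peaked near $p = 0.8$, and the sampling density $r(p)$, a 50/50 mixture of a uniform and a triangular density, are our own illustrative choices. Each draw is weighted by $1/r(p_m)$, so both estimators target $\int_0^1 I(p)\,dp$. The one that places more points in the important region should have the lower per-draw variance.

```python
import math
import random

def integrand(p: float) -> float:
    """Hypothetical I(p): sharply peaked near p = 0.8 on [0, 1]."""
    return math.exp(-0.5 * ((p - 0.8) / 0.05) ** 2)

def r_pdf(p: float) -> float:
    """Sampling density r(p): 50/50 mixture of Uniform(0,1) and Triangular(0,1,mode=0.8)."""
    tri = 2.5 * p if p < 0.8 else 10.0 * (1.0 - p)
    return 0.5 + 0.5 * tri

def r_sample(rng: random.Random) -> float:
    return rng.random() if rng.random() < 0.5 else rng.triangular(0.0, 1.0, 0.8)

def mean_var(vals):
    m = sum(vals) / len(vals)
    return m, sum((v - m) ** 2 for v in vals) / (len(vals) - 1)

def Phi(z: float) -> float:
    """Standard normal CDF, used only for the exact reference value."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

rng = random.Random(0)
M = 20000

# (i) Plain Monte Carlo: p_m ~ Uniform(0,1), equal weights w_m = 1/M.
uniform_terms = [integrand(rng.random()) for _ in range(M)]

# (ii) Importance sampling: p_m ~ r(p), weights w_m = 1 / (M * r(p_m)).
is_terms = []
for _ in range(M):
    p = r_sample(rng)
    is_terms.append(integrand(p) / r_pdf(p))

est_uniform, var_uniform = mean_var(uniform_terms)
est_is, var_is = mean_var(is_terms)
exact = 0.05 * math.sqrt(2 * math.pi) * (Phi(4.0) - Phi(-16.0))

print(f"exact={exact:.5f} uniform={est_uniform:.5f} importance={est_is:.5f}")
print(f"per-draw variance: uniform={var_uniform:.4f} importance={var_is:.4f}")
```

The mixture keeps $r(p) \ge 0.5$ everywhere, so no region is starved of points. This loosely mirrors the balance between narrow and broad sampling discussed next.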


Ensemble Methods Importance Sampling – Characterization of $r(\mathbf{p})$

• Let $\mathbf{p}^* = \arg\min_{\mathbf{p}} \mathrm{Risk}(\mathbf{p})$

• Narrow $r(\mathbf{p})$:

– Ensemble of “strong” base learners $\{T(\mathbf{x}; \mathbf{p}_m)\}_1^M$, i.e., all with $\mathrm{Risk}(\mathbf{p}_m) \approx \mathrm{Risk}(\mathbf{p}^*)$

– $T(\mathbf{x}; \mathbf{p}_m)$’s yield similar, highly correlated predictions ⇒ unexceptional performance

• Broad $r(\mathbf{p})$:

– Diverse ensemble, i.e., predictions are not highly correlated with each other

– However, many “weak” base learners, i.e., $\mathrm{Risk}(\mathbf{p}_m) \gg \mathrm{Risk}(\mathbf{p}^*)$ ⇒ poor performance


Ensemble Methods Approximate Process of Drawing from $r(\mathbf{p})$

• Heuristic sampling strategy: sampling around $\mathbf{p}^*$ by iteratively applying small perturbations to existing problem structure

– Generating ensemble members:

For $m = 1$ to $M$ {

$\mathbf{p}_m = \mathrm{PERTURB}\{\arg\min_{\mathbf{p}} E_{\mathbf{x}y}\, L(y, T(\mathbf{x}; \mathbf{p}))\}$

}

$T_m(\mathbf{x}) = T(\mathbf{x}; \mathbf{p}_m)$

– $\mathrm{PERTURB}\{\cdot\}$ is a (random) modification of any of:

• Data distribution, e.g., by re-weighting the observations

• Loss function, e.g., by modifying its argument

• Search algorithm (used to find $\min_{\mathbf{p}}$)

– Width of $r(\mathbf{p})$ is controlled by degree of perturbation


Generic Ensemble Generation Step 1: Choose Base Learners

• Forward Stagewise Fitting Procedure:

$F_0(\mathbf{x}) = 0$

For $m = 1$ to $M$ {

// Fit a single base learner

$\mathbf{p}_m = \arg\min_{\mathbf{p}} \sum_{i \in S_m(\eta)} L(y_i, F_{m-1}(\mathbf{x}_i) + T(\mathbf{x}_i; \mathbf{p}))$

// Update additive expansion

$T_m(\mathbf{x}) = T(\mathbf{x}; \mathbf{p}_m)$

$F_m(\mathbf{x}) = F_{m-1}(\mathbf{x}) + \upsilon \cdot T_m(\mathbf{x})$

}

write $\{T_m(\mathbf{x})\}_1^M$

– Algorithm control: $L$, $\eta$, $\upsilon$

• $S_m(\eta)$: random sub-sample of size $\eta \le N$ ⇒ impacts ensemble "diversity" (modification of data distribution)

• $F_{m-1}(\mathbf{x}) = \upsilon \cdot \sum_{k=1}^{m-1} T_k(\mathbf{x})$: “memory” function ($0 \le \upsilon \le 1$) (modification of loss function; “sequential” approximation)
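The forward stagewise procedure can be sketched in Python. These choices are ours, not the slide's:

- squared-error loss, so the $\arg\min$ over $\mathbf{p}$ becomes a least-squares fit of $T$ to the residuals $y_i - F_{m-1}(\mathbf{x}_i)$ on $S_m(\eta)$;
- depth-one trees (stumps) as $T(\mathbf{x}; \mathbf{p})$;
- hypothetical helper names and toy data.

```python
import random

def fit_stump(X, r):
    """Least-squares stump fit to targets r: returns (j, s, c_left, c_right)."""
    best = None
    for j in range(len(X[0])):
        vals = sorted(set(row[j] for row in X))
        for lo, hi in zip(vals, vals[1:]):
            s = (lo + hi) / 2
            L = [t for row, t in zip(X, r) if row[j] <= s]
            R = [t for row, t in zip(X, r) if row[j] > s]
            cl, cr = sum(L) / len(L), sum(R) / len(R)
            cost = sum((t - cl) ** 2 for t in L) + sum((t - cr) ** 2 for t in R)
            if best is None or cost < best[0]:
                best = (cost, j, s, cl, cr)
    if best is None:                       # no split possible: constant learner
        c = sum(r) / len(r)
        return (0, float("inf"), c, c)
    return best[1:]

def stump_predict(stump, x):
    j, s, cl, cr = stump
    return cl if x[j] <= s else cr

def forward_stagewise(X, y, M=100, eta=None, nu=0.1, seed=0):
    """F_0 = 0; for m = 1..M fit T_m on S_m(eta) to the residuals of F_{m-1},
    then set F_m = F_{m-1} + nu * T_m."""
    rng = random.Random(seed)
    N = len(y)
    eta = eta or N
    F = [0.0] * N                                   # F_{m-1}(x_i), i = 1..N
    learners = []
    for _ in range(M):
        S = rng.sample(range(N), eta)               # S_m(eta), without replacement
        T = fit_stump([X[i] for i in S], [y[i] - F[i] for i in S])
        learners.append(T)
        F = [F[i] + nu * stump_predict(T, X[i]) for i in range(N)]
    return learners

def ensemble_predict(learners, x, nu=0.1):
    return sum(nu * stump_predict(T, x) for T in learners)

X = [[float(v)] for v in list(range(10)) + list(range(20, 30))]
y = [0.0] * 10 + [1.0] * 10
learners = forward_stagewise(X, y, M=100, eta=10, nu=0.1)
print(round(ensemble_predict(learners, [25.0]), 3), round(ensemble_predict(learners, [2.0]), 3))
```

With `nu=0` every learner is fit to the raw $y$ on its own sub-sample, which is the no-memory setting used by bagging; the weights are then left to Step 2. With `nu > 0` each learner is fit in the context of the ones before it.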


Generic Ensemble Generation Step 2: Choose Coefficients

• Given $\{T_m(\mathbf{x})\}_1^M = \{T(\mathbf{x}; \hat{\mathbf{p}}_m)\}_1^M$, coefficients can be obtained by a regularized linear regression:

$$\{\hat{c}_m\} = \arg\min_{\{c_m\}} \sum_{i=1}^N L\left(y_i, c_0 + \sum_{m=1}^M c_m T_m(\mathbf{x}_i)\right) + \lambda \cdot P(\mathbf{c})$$

– Regularization here helps reduce bias (in addition to variance) of the model

– New iterative fast algorithms for various loss/penalty combinations

• “GLMs via Coordinate Descent” (2008)
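Step 2 can be sketched with squared-error loss and the lasso penalty $P(\mathbf{c}) = \sum_m |c_m|$, solved by cyclic coordinate descent with soft-thresholding (the approach of “GLMs via Coordinate Descent”). Our assumptions: the $1/(2N)$ scaling of the loss, an unpenalized intercept $c_0$, and the helper names. `Z[i][m]` holds the base-learner output $T_m(\mathbf{x}_i)$. This is an unoptimized illustration, not the glmnet implementation.

```python
from typing import List, Tuple

def soft_threshold(rho: float, lam: float) -> float:
    """S(rho, lam) = sign(rho) * max(|rho| - lam, 0)."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0

def lasso_cd(Z: List[List[float]], y: List[float], lam: float,
             n_iter: int = 200) -> Tuple[float, List[float]]:
    """Minimize (1/2N) sum_i (y_i - c0 - sum_m c_m Z[i][m])^2 + lam * sum_m |c_m|."""
    N, M = len(y), len(Z[0])
    c0, c = sum(y) / N, [0.0] * M
    resid = [yi - c0 for yi in y]                 # y_i - c0 - sum_m c_m Z[i][m]
    for _ in range(n_iter):
        for m in range(M):
            col = [Z[i][m] for i in range(N)]
            denom = sum(z * z for z in col) / N
            if denom == 0.0:
                continue
            # correlation of column m with the partial residual that excludes c_m
            rho = sum(col[i] * (resid[i] + c[m] * col[i]) for i in range(N)) / N
            new = soft_threshold(rho, lam) / denom
            if new != c[m]:
                resid = [resid[i] - (new - c[m]) * col[i] for i in range(N)]
                c[m] = new
        shift = sum(resid) / N                    # unpenalized intercept update
        c0 += shift
        resid = [r - shift for r in resid]
    return c0, c

Z = [[0.0, 1.0], [1.0, 1.0], [0.0, 0.0], [1.0, 0.0]]   # hypothetical T_m(x_i) values
y = [2.0, 5.0, 2.0, 5.0]                               # y = 2 + 3 * T_1(x)
print(lasso_cd(Z, y, lam=0.0))    # approximately c0 = 2, c = [3, 0]
print(lasso_cd(Z, y, lam=10.0))   # (3.5, [0.0, 0.0]): every c_m shrunk to zero
```

Large $\lambda$ zeroes out base learners entirely, so the penalty also acts as a selector that keeps only a subset of the $M$ learners.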


Bagging (Breiman, 1996)

• Bagging = Bootstrap Aggregation

• $L(y, \hat{y})$: as available for single tree

• $\eta = N/2$

• $\upsilon = 0$ ⇒ no memory

• $T_m(\mathbf{x})$ ⇒ are large un-pruned trees

• $c_0 = 0$, $\{c_m = 1/M\}_1^M$, i.e., not fit to the data (avg)

– i.e., perturbation of the data distribution only

– Potential improvements?

– R package: ipred

$F_0(\mathbf{x}) = 0$

For $m = 1$ to $M$ {

$\mathbf{p}_m = \arg\min_{\mathbf{p}} \sum_{i \in S_m(\eta)} L(y_i, F_{m-1}(\mathbf{x}_i) + T(\mathbf{x}_i; \mathbf{p}))$

$T_m(\mathbf{x}) = T(\mathbf{x}; \mathbf{p}_m)$

$F_m(\mathbf{x}) = F_{m-1}(\mathbf{x}) + \upsilon \cdot T_m(\mathbf{x})$

}

write $\{T_m(\mathbf{x})\}_1^M$
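Here is a minimal bagging sketch using the slide's settings:

- each tree is grown to full size on a random half-sample ($\eta = N/2$, drawn without replacement);
- there is no memory ($\upsilon = 0$);
- predictions are averaged ($c_0 = 0$, $c_m = 1/M$).

Breiman's original procedure draws bootstrap samples of size $N$ instead. The tree-growing helper and the toy data are our own; in practice the ipred package does this.

```python
import random

def sse(v):
    m = sum(v) / len(v)
    return sum((t - m) ** 2 for t in v)

def grow(X, y):
    """Fully grown (un-pruned) least-squares regression tree as nested tuples
    (j, s, left, right); a leaf is the mean response of its node."""
    if len(y) == 1 or len(set(y)) == 1:
        return sum(y) / len(y)
    best = None
    for j in range(len(X[0])):
        vals = sorted(set(row[j] for row in X))
        for lo, hi in zip(vals, vals[1:]):
            s = (lo + hi) / 2
            L = [t for row, t in zip(X, y) if row[j] <= s]
            R = [t for row, t in zip(X, y) if row[j] > s]
            cost = sse(L) + sse(R)
            if best is None or cost < best[0]:
                best = (cost, j, s)
    if best is None:                      # identical inputs, different responses
        return sum(y) / len(y)
    _, j, s = best
    li = [i for i, row in enumerate(X) if row[j] <= s]
    ri = [i for i, row in enumerate(X) if row[j] > s]
    return (j, s, grow([X[i] for i in li], [y[i] for i in li]),
            grow([X[i] for i in ri], [y[i] for i in ri]))

def tree_predict(node, x):
    while isinstance(node, tuple):
        j, s, left, right = node
        node = left if x[j] <= s else right
    return node

def bagging(X, y, M=50, seed=0):
    """eta = N/2 without replacement; upsilon = 0, so trees are fit independently."""
    rng = random.Random(seed)
    N = len(y)
    trees = []
    for _ in range(M):
        S = rng.sample(range(N), N // 2)
        trees.append(grow([X[i] for i in S], [y[i] for i in S]))
    return trees

def bagged_predict(trees, x):
    """c_0 = 0, c_m = 1/M: a plain average of the tree predictions."""
    return sum(tree_predict(t, x) for t in trees) / len(trees)

X = [[float(i)] for i in range(20)]
y = [float(i) for i in range(20)]
trees = bagging(X, y)
print(bagged_predict(trees, [10.0]))
```

Each fully grown tree interpolates its own half-sample, so it has low bias and high variance. Averaging many of them targets the variance term, as the next slide explains.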


Bagging Hands-on Exercise

[Figure: scatter plot of the example data, $x_1$ from -2 to 2 and $x_2$ from -1 to 1]

• Navigate to directory:

example.2.EllipticalBoundary

• Set working directory: use setwd() or with GUI

• Load and run

– fitModel_Bagging_by_hand.R

– fitModel_CART.R (optional)

• If curious, also see gen2DdataNonLinear.R

• After class, load and run fitModel_Bagging.R


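Bagging Why it helps?

• Under $L(y, \hat{y}) = (y - \hat{y})^2$, averaging reduces variance and leaves bias unchanged

• Consider “idealized” bagging (aggregate) estimator: $\bar{f}(\mathbf{x}) = E_Z \hat{f}_Z(\mathbf{x})$

– $\hat{f}_Z$ fit to bootstrap data set $Z = \{y_i, \mathbf{x}_i\}_1^N$

– $Z$ is sampled from actual population distribution (not training data)

– We can write:

$$E[Y - \hat{f}_Z(\mathbf{x})]^2 = E[Y - \bar{f}(\mathbf{x}) + \bar{f}(\mathbf{x}) - \hat{f}_Z(\mathbf{x})]^2 = E[Y - \bar{f}(\mathbf{x})]^2 + E[\hat{f}_Z(\mathbf{x}) - \bar{f}(\mathbf{x})]^2 \ge E[Y - \bar{f}(\mathbf{x})]^2$$

(the cross term vanishes because $Y$ is independent of $Z$ and $E_Z[\hat{f}_Z(\mathbf{x}) - \bar{f}(\mathbf{x})] = 0$)

⇒ true population aggregation never increases mean squared error!

⇒ Bagging will often decrease MSE…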
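The inequality can also be checked by simulation. Everything in this setup is an assumption chosen for illustration: the population ($y = 2x$ plus standard normal noise), the deliberately high-variance 1-nearest-neighbor fit, and the sample sizes. The aggregate $\bar{f}(x_0)$ is approximated by averaging fits over many independent training sets $Z$ drawn from the population.

```python
import random

rng = random.Random(1)
x0 = 0.5                                   # fixed query point x

def draw_Z(n=20):
    """One training set Z = {y_i, x_i} from the population y = 2x + N(0, 1)."""
    xs = [rng.random() for _ in range(n)]
    return [(2 * x + rng.gauss(0.0, 1.0), x) for x in xs]

def f_hat(Z, x):
    """High-variance fit: the response of the nearest training point (1-NN)."""
    return min(Z, key=lambda yx: abs(yx[1] - x))[0]

B = 20000
fits = [f_hat(draw_Z(), x0) for _ in range(B)]             # f_hat_Z(x0) over many Z
f_bar = sum(fits) / B                                      # approximates E_Z f_hat_Z(x0)
ys = [2 * x0 + rng.gauss(0.0, 1.0) for _ in range(B)]      # fresh Y at x0

mse_single = sum((yv - f) ** 2 for yv, f in zip(ys, fits)) / B
mse_aggregate = sum((yv - f_bar) ** 2 for yv in ys) / B
var_fits = sum((f - f_bar) ** 2 for f in fits) / B
print(round(mse_single, 3), round(mse_aggregate, 3), round(var_fits, 3))
```

Up to simulation noise, `mse_single` should exceed `mse_aggregate` by about `var_fits`. That gap is the variance term that true population aggregation removes.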


Random Forest (Ho, 1995; Breiman, 2001)

• Random Forest = Bagging + algorithm randomizing

– Subset splitting: as each tree is constructed…

• Draw a random sample of predictors before each node is split: $n_s = \lfloor \log_2(n) + 1 \rfloor$

• Find best split as usual but selecting only from subset of predictors

⇒ Increased diversity among $\{T_m(\mathbf{x})\}_1^M$, i.e., wider $r(\mathbf{p})$

• Width (inversely) controlled by $n_s$

– Speed improvement over Bagging

– R package: randomForest
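Subset splitting can be sketched as a split search restricted to $n_s = \lfloor \log_2(n) + 1 \rfloor$ randomly chosen predictors, redrawn at every node. The helper names are hypothetical. In the randomForest package the subset size is the `mtry` argument. Its defaults ($\lfloor\sqrt{n}\rfloor$ for classification, $\max(\lfloor n/3 \rfloor, 1)$ for regression) differ from the $\log_2$ rule above.

```python
import math
import random

def n_subset(n: int) -> int:
    """Predictors examined per node: n_s = floor(log2(n) + 1)."""
    return math.floor(math.log2(n) + 1)

def random_subset_split(X, y, rng: random.Random):
    """Best least-squares split (j, s), searching only a random subset of
    n_s predictors drawn afresh for this node."""
    n = len(X[0])
    candidates = rng.sample(range(n), n_subset(n))
    best = None
    for j in candidates:
        vals = sorted(set(row[j] for row in X))
        for lo, hi in zip(vals, vals[1:]):
            s = (lo + hi) / 2
            L = [t for row, t in zip(X, y) if row[j] <= s]
            R = [t for row, t in zip(X, y) if row[j] > s]
            mL, mR = sum(L) / len(L), sum(R) / len(R)
            cost = sum((t - mL) ** 2 for t in L) + sum((t - mR) ** 2 for t in R)
            if best is None or cost < best[0]:
                best = (cost, j, s)
    return None if best is None else best[1:]

print([n_subset(n) for n in (1, 8, 100)])   # [1, 4, 7]
```

A smaller $n_s$ makes individual trees weaker but less alike, which widens $r(\mathbf{p})$ in the sense of the importance-sampling slides.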


Bagging vs. Random Forest vs. ISLE 100 Target Functions Comparison (Popescu, 2005)

• ISLE improvements:

– Different data sampling strategy (not fixed)

– Fit coefficients to data

• xxx_6_5%_P: 6-terminal-node trees; 5% samples without replacement; post-processing, i.e., using estimated “optimal” quadrature coefficients

⇒ Significantly faster to build!

[Figure: comparative RMS error of Bag, RF, Bag_6_5%_P and RF_6_5%_P]


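AdaBoost (Freund & Schapire, 1997)

Observation weights: $w_i^{(0)} = 1/N$

For $m = 1$ to $M$ {

a. Fit a classifier $T_m(\mathbf{x})$ to training data with weights $w_i^{(m)}$

b. Compute $err_m = \sum_{i=1}^N w_i^{(m)} I(y_i \ne T_m(\mathbf{x}_i)) \big/ \sum_{i=1}^N w_i^{(m)}$

c. Compute $\alpha_m = \log((1 - err_m)/err_m)$

d. Set $w_i^{(m+1)} = w_i^{(m)} \cdot \exp[\alpha_m \cdot I(y_i \ne T_m(\mathbf{x}_i))]$

}

Output $\mathrm{sign}\left(\sum_{m=1}^M \alpha_m T_m(\mathbf{x})\right)$

Forward Stagewise Fitting Procedure:

$F_0(\mathbf{x}) = 0$

For $m = 1$ to $M$ {

$(c_m, \mathbf{p}_m) = \arg\min_{c, \mathbf{p}} \sum_{i \in S_m(\eta)} L(y_i, F_{m-1}(\mathbf{x}_i) + c \cdot T(\mathbf{x}_i; \mathbf{p}))$

$T_m(\mathbf{x}) = T(\mathbf{x}; \mathbf{p}_m)$

$F_m(\mathbf{x}) = F_{m-1}(\mathbf{x}) + \upsilon \cdot c_m \cdot T_m(\mathbf{x})$

}

write $\{c_m, T_m(\mathbf{x})\}_1^M$

• Equivalence to Forward Stagewise Fitting Procedure

– We need to show $\mathbf{p}_m = \arg\min_{\mathbf{p}}(\cdot)$ is equivalent to line a. above

– $c_m = \arg\min_c(\cdot)$ is equivalent to line c.

• R package: adabag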
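Steps a. to d. translate directly into code. Our assumptions:

- labels are coded as $\pm 1$;
- decision stumps serve as the classifier in step a.;
- the toy one-dimensional data set has a positive class that is an interval, which no single stump can represent.

The weights are rescaled after step d. This leaves $err_m$ unchanged because it is a normalized ratio.

```python
import math

def stump_predict(st, x):
    """st = (j, s, pol): predict pol if x_j <= s, else -pol (labels in {-1, +1})."""
    j, s, pol = st
    return pol if x[j] <= s else -pol

def fit_weighted_stump(X, y, w):
    """Step a.: the stump with the smallest weighted misclassification error."""
    best = None
    for j in range(len(X[0])):
        vals = sorted(set(row[j] for row in X))
        for s in [vals[0] - 1.0] + [(a + b) / 2 for a, b in zip(vals, vals[1:])]:
            for pol in (1, -1):
                err = sum(wi for row, yi, wi in zip(X, y, w)
                          if stump_predict((j, s, pol), row) != yi)
                if best is None or err < best[0]:
                    best = (err, (j, s, pol))
    return best[1]

def adaboost(X, y, M=10):
    N = len(y)
    w = [1.0 / N] * N                                        # w_i^(0) = 1/N
    model = []
    for _ in range(M):
        T = fit_weighted_stump(X, y, w)                      # a.
        miss = [stump_predict(T, row) != yi for row, yi in zip(X, y)]
        err = sum(wi for wi, mi in zip(w, miss) if mi) / sum(w)      # b.
        err = min(max(err, 1e-10), 1 - 1e-10)                # guard against log(0)
        alpha = math.log((1 - err) / err)                    # c.
        w = [wi * math.exp(alpha) if mi else wi for wi, mi in zip(w, miss)]  # d.
        total = sum(w)
        w = [wi / total for wi in w]                         # rescale; err is scale-free
        model.append((alpha, T))
    return model

def adaboost_predict(model, x):
    """Output sign(sum_m alpha_m T_m(x))."""
    score = sum(alpha * stump_predict(T, x) for alpha, T in model)
    return 1 if score >= 0 else -1

# Toy 1-D data: the +1 class is an interval, which no single stump can fit.
X = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
y = [-1, -1, 1, 1, -1, -1]
model = adaboost(X, y, M=3)
print([adaboost_predict(model, x) for x in X])   # [-1, -1, 1, 1, -1, -1]
```

On these data, three rounds are enough. The first learner is the constant $-1$, and each later round up-weights the points the current committee gets wrong. The weighted vote then reproduces the interval.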


AdaBoost Hands-on Exercise

• Navigate to directory:

example.2.EllipticalBoundary

• Set working directory: use setwd() or with GUI

• Load and run

– fitModel_Adaboost_by_hand.R

• After class, load and run fitModel_Adaboost.R and fitModel_RandomForest.R

[Figure: scatter plot of the example data, $x_1$ from -2 to 2 and $x_2$ from -1 to 1]


Stochastic Gradient Boosting (Friedman, 2001)

• Boosting with any differentiable loss criterion

• General $L(y, \hat{y})$

• $\eta = N/2$

• $\upsilon = 0.1$ ⇒ sequential sampling

• $T_m(\mathbf{x})$ ⇒ any “weak” learner

• $c_0 = \arg\min_c \sum_{i=1}^N L(y_i, c)$

• $\{c_m\}_1^M$ ⇒ “shrunk” sequential partial regression coefficients

– Potential improvements?

– R package: gbm

$F_0(\mathbf{x}) = c_0$

For $m = 1$ to $M$ {

$(c_m, \mathbf{p}_m) = \arg\min_{c, \mathbf{p}} \sum_{i \in S_m(\eta)} L(y_i, F_{m-1}(\mathbf{x}_i) + c \cdot T(\mathbf{x}_i; \mathbf{p}))$

$T_m(\mathbf{x}) = T(\mathbf{x}; \mathbf{p}_m)$

$F_m(\mathbf{x}) = F_{m-1}(\mathbf{x}) + \upsilon \cdot c_m \cdot T_m(\mathbf{x})$

}

write $\{(\upsilon \cdot c_m), T_m(\mathbf{x})\}_1^M$
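Here is a sketch of the general recipe. Our assumptions:

- squared-error loss $L(y, F) = (y - F)^2/2$, though any differentiable loss with its derivative can be passed in;
- stumps fitted to the negative gradient as the weak learner;
- a ternary-search line search for $c_m$ restricted to $[0, 10]$;
- toy data.

It mirrors the slide's choices $\eta = N/2$ and $\upsilon = 0.1$, but it is not the gbm package's implementation.

```python
import random

def fit_ls_stump(X, r):
    """Least-squares stump fit to pseudo-responses r: (j, s, c_left, c_right)."""
    best = None
    for j in range(len(X[0])):
        vals = sorted(set(row[j] for row in X))
        for lo, hi in zip(vals, vals[1:]):
            s = (lo + hi) / 2
            L = [t for row, t in zip(X, r) if row[j] <= s]
            R = [t for row, t in zip(X, r) if row[j] > s]
            cl, cr = sum(L) / len(L), sum(R) / len(R)
            cost = sum((t - cl) ** 2 for t in L) + sum((t - cr) ** 2 for t in R)
            if best is None or cost < best[0]:
                best = (cost, j, s, cl, cr)
    if best is None:
        c = sum(r) / len(r)
        return (0, float("inf"), c, c)
    return best[1:]

def stump_predict(st, x):
    j, s, cl, cr = st
    return cl if x[j] <= s else cr

def argmin_1d(f, lo, hi, iters=100):
    """Ternary search for the minimizer of a convex f on [lo, hi]."""
    for _ in range(iters):
        a, b = lo + (hi - lo) / 3, hi - (hi - lo) / 3
        if f(a) < f(b):
            hi = b
        else:
            lo = a
    return (lo + hi) / 2

def gradient_boost(X, y, loss, dloss, M=200, nu=0.1, seed=0):
    """loss(y, F) and dloss(y, F) = its derivative with respect to F."""
    rng = random.Random(seed)
    N = len(y)
    c0 = argmin_1d(lambda c: sum(loss(yi, c) for yi in y), min(y), max(y))
    F = [c0] * N                                          # F_0(x) = c_0
    ensemble = []
    for _ in range(M):
        S = rng.sample(range(N), N // 2)                  # S_m(eta), eta = N/2
        pseudo = [-dloss(y[i], F[i]) for i in S]          # negative gradient
        T = fit_ls_stump([X[i] for i in S], pseudo)
        t = {i: stump_predict(T, X[i]) for i in S}
        # line search for c_m on the sub-sample (assumes c_m >= 0)
        cm = argmin_1d(lambda c: sum(loss(y[i], F[i] + c * t[i]) for i in S), 0.0, 10.0)
        ensemble.append((nu * cm, T))                     # store the shrunk coefficient
        F = [F[i] + nu * cm * stump_predict(T, X[i]) for i in range(N)]
    return c0, ensemble

def gb_predict(model, x):
    c0, ensemble = model
    return c0 + sum(w * stump_predict(T, x) for w, T in ensemble)

def squared(y, f):
    return 0.5 * (y - f) ** 2

def d_squared(y, f):
    return -(y - f)

X = [[float(v)] for v in (0, 1, 2, 3, 4, 10, 11, 12, 13, 14)]
y = [0.0] * 5 + [4.0] * 5
model = gradient_boost(X, y, squared, d_squared)
print(round(gb_predict(model, [12.0]), 3), round(gb_predict(model, [1.0]), 3))
```

For squared error the pseudo-response is just the residual, so the line search returns $c_m \approx 1$. With other losses the same loop fits the stump to the negative gradient and lets the line search choose the step.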


Stochastic Gradient Boosting LAD Regression – $L(y, \hat{y}) = |y - \hat{y}|$

• More robust than $(y - F)^2$

• Resistant to outliers in $y$ … trees already providing resistance to outliers in $\mathbf{x}$

• Note:

– Trees are fitted to pseudo-response ⇒ can’t interpret individual trees

– Original tree constants are overwritten

– “shrunk” version of tree gets added to ensemble

$F_0(\mathbf{x}) = \mathrm{median}\{y_i\}_1^N$

For $m = 1$ to $M$ {

// Step 1: find $T_m(\mathbf{x})$

$\tilde{y}_i = \mathrm{sign}(y_i - F_{m-1}(\mathbf{x}_i))$, $i = 1, \ldots, N$

$\{R_{jm}\}_1^J$ = $J$-terminal node LS-regression tree of $\{\tilde{y}_i, \mathbf{x}_i\}_1^N$

// Step 2: find coefficients

$\hat{\gamma}_{jm} = \mathrm{median}_{\mathbf{x}_i \in R_{jm}} \{y_i - F_{m-1}(\mathbf{x}_i)\}_1^N$, $j = 1, \ldots, J$

// Update expansion

$F_m(\mathbf{x}) = F_{m-1}(\mathbf{x}) + \upsilon \cdot \sum_{j=1}^J \hat{\gamma}_{jm} I(\mathbf{x} \in R_{jm})$

}
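The LAD algorithm can be sketched with two simplifications of ours: a two-terminal-node tree ($J = 2$) as the LS-regression tree, and no random sub-sampling. The toy response contains one gross outlier. The median-based node constants keep the fit near 10 for the upper group. A least-squares node mean for that group would instead be $(4 \cdot 10 + 1000)/5 = 208$.

```python
import statistics

def sign(v: float) -> int:
    return (v > 0) - (v < 0)

def ls_split(X, r):
    """Best least-squares split (j, s) of the pseudo-responses r: a J = 2 tree."""
    best = None
    for j in range(len(X[0])):
        vals = sorted(set(row[j] for row in X))
        for lo, hi in zip(vals, vals[1:]):
            s = (lo + hi) / 2
            L = [t for row, t in zip(X, r) if row[j] <= s]
            R = [t for row, t in zip(X, r) if row[j] > s]
            mL, mR = sum(L) / len(L), sum(R) / len(R)
            cost = sum((t - mL) ** 2 for t in L) + sum((t - mR) ** 2 for t in R)
            if best is None or cost < best[0]:
                best = (cost, j, s)
    return None if best is None else best[1:]

def lad_treeboost(X, y, M=100, nu=0.1):
    N = len(y)
    F0 = statistics.median(y)                                # F_0 = median{y_i}
    F = [F0] * N
    trees = []
    for _ in range(M):
        pseudo = [sign(y[i] - F[i]) for i in range(N)]       # Step 1: y~_i
        split = ls_split(X, pseudo)
        if split is None:
            break
        j, s = split
        left = [y[i] - F[i] for i in range(N) if X[i][j] <= s]
        right = [y[i] - F[i] for i in range(N) if X[i][j] > s]
        g_left, g_right = statistics.median(left), statistics.median(right)  # Step 2
        trees.append((j, s, nu * g_left, nu * g_right))      # shrunk node values
        F = [F[i] + nu * (g_left if X[i][j] <= s else g_right) for i in range(N)]
    return F0, trees

def lad_predict(model, x):
    F0, trees = model
    return F0 + sum(gl if x[j] <= s else gr for j, s, gl, gr in trees)

X = [[float(i)] for i in range(10)]
y = [0.0] * 5 + [10.0] * 4 + [1000.0]           # one gross outlier in y
model = lad_treeboost(X, y)
print(round(lad_predict(model, [6.0]), 2), round(lad_predict(model, [1.0]), 2))   # 10.0 0.0
```

The tree's own least-squares constants are discarded and replaced by the median residuals ("original tree constants are overwritten"). This is where the robustness to outliers in $y$ comes from.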


Parallel vs. Sequential Ensembles 100 Target Functions Comparison (Popescu, 2005)

• Sequential ISLEs tend to perform better than parallel ones

– Consistent with results observed in classical Monte Carlo integration

[Figure: comparative RMS error of “Parallel” ensembles (Bag, RF, Bag_6_5%_P, RF_6_5%_P) vs. “Sequential” ensembles (Boost, Seq_0.01_20%_P, Seq_0.1_50%_P)]

• xxx_6_5%_P: 6-terminal-node trees; 5% samples without replacement; post-processing, i.e., using estimated “optimal” quadrature coefficients

• Seq_υ_η%_P: “Sequential” ensemble; 6-terminal-node trees; υ: “memory” factor; η% samples without replacement; post-processing


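Rule Ensembles (Friedman & Popescu, 2005)

• Trees as collection of conjunctive rules: $T_m(\mathbf{x}) = \sum_{j=1}^J \hat{c}_{jm} I(\mathbf{x} \in \hat{R}_{jm})$

[Figure: a tree on $x_1$, $x_2$ with splits at $x_1 = 22$, $x_2 = 27$, $x_1 = 15$ and $x_2 = 15$, and terminal regions R1 to R5]

$R_1 \Rightarrow r_1(\mathbf{x}) = I(x_1 > 22) \cdot I(x_2 > 27)$

$R_2 \Rightarrow r_2(\mathbf{x}) = I(x_1 > 22) \cdot I(0 \le x_2 \le 27)$

$R_3 \Rightarrow r_3(\mathbf{x}) = I(15 < x_1 \le 22) \cdot I(0 \le x_2)$

$R_4 \Rightarrow r_4(\mathbf{x}) = I(0 \le x_1 \le 15) \cdot I(x_2 > 15)$

$R_5 \Rightarrow r_5(\mathbf{x}) = I(0 \le x_1 \le 15) \cdot I(0 \le x_2 \le 15)$

– These simple rules, $r_m(\mathbf{x}) \in \{0, 1\}$, can be used as base learners

– Main motivation is interpretability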
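The five rules can be coded directly as products of indicator functions, which makes the “derived variables” of a rule ensemble concrete. The sketch assumes non-negative inputs, as the rules themselves do. Because these are the terminal-node rules of a single tree, exactly one of them equals 1 for any such $\mathbf{x}$.

```python
from typing import Callable, Dict, Tuple

Point = Tuple[float, float]    # (x1, x2), assumed non-negative as in the rules

def I(cond: bool) -> int:
    """Indicator function I(.)."""
    return 1 if cond else 0

# Terminal-node rules R1..R5 of the tree above, each a product of indicators.
RULES: Dict[str, Callable[[Point], int]] = {
    "r1": lambda x: I(x[0] > 22) * I(x[1] > 27),
    "r2": lambda x: I(x[0] > 22) * I(0 <= x[1] <= 27),
    "r3": lambda x: I(15 < x[0] <= 22) * I(0 <= x[1]),
    "r4": lambda x: I(0 <= x[0] <= 15) * I(x[1] > 15),
    "r5": lambda x: I(0 <= x[0] <= 15) * I(0 <= x[1] <= 15),
}

def rule_vector(x: Point) -> Dict[str, int]:
    """The derived 0/1 variables r_m(x) that a rule ensemble regresses on."""
    return {name: r(x) for name, r in RULES.items()}

print(rule_vector((30.0, 10.0)))   # {'r1': 0, 'r2': 1, 'r3': 0, 'r4': 0, 'r5': 0}
```

In a rule ensemble, rules from many trees are pooled. Rules from different trees can fire together, so the regularized fit in the next step decides which ones to keep.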


Rule Ensembles ISLE Procedure

• Rule-based model: $F(\mathbf{x}) = a_0 + \sum_m a_m r_m(\mathbf{x})$

– Still a piecewise constant model ⇒ complement the non-linear rules with purely linear terms:

$$F(\mathbf{x}) = a_0 + \sum_{k=1}^K a_k r_k(\mathbf{x}) + \sum_{j=1}^n b_j x_j$$

• Fitting

– Step 1: derive rules from tree ensemble (shortcut)

• Tree size controls rule “complexity” (interaction order)

– Step 2: fit coefficients using linear regularized procedure:

$$(\{\hat{a}_k\}, \{\hat{b}_j\}) = \arg\min_{\{a_k\}, \{b_j\}} \sum_{i=1}^N L\left(y_i, F(\mathbf{x}_i; \{a_k\}_0^K, \{b_j\}_1^n)\right) + \lambda \cdot (P(\mathbf{a}) + P(\mathbf{b}))$$


Boosting & Rule Ensembles Hands-on Exercise

[Figure: absolute loss vs. iteration, 0 to 1000]

• Navigate to directory:

example.3.Diamonds

• Set working directory: use setwd() or with GUI

• Load and run

– viewDiamondData.R

– fitModel_GBM.R

– fitModel_RE.R

• After class, go to:

example.1.LinearBoundary

Run fitModel_GBM.R


Overview

• Motivation, In a Nutshell & Timeline

• Predictive Learning & Decision Trees

• Ensemble Methods

➢ Summary


Summary

• Ensemble methods have been found to perform extremely well in a variety of problem domains

• Shown to have desirable statistical properties

• Latest ensemble research brings together important foundational strands of statistics

• Emphasis on accuracy but significant progress has been made on interpretability

Go build Ensembles and keep in touch!
