THE MEMORY FORUM 1

Sparkk: Quality-Scalable Approximate Storage in DRAM

Jan Lucas, Mauricio Alvarez-Mesa, Michael Andersch and Ben Juurlink

Abstract—DRAM memory stores its contents in leaky cells that require periodic refresh to prevent data loss. The refresh operation not only degrades system performance, but also consumes significant amounts of energy in mobile systems. Relaxed DRAM refresh has been proposed as one possible building block of approximate computing. Multiple authors have suggested techniques where programmers can specify which data is critical and cannot tolerate any bit errors and which data can be stored approximately. However, in these approaches all bits in the approximate area are treated as equally important. We show that this produces suboptimal results and that higher energy savings or better quality can be achieved if a more fine-grained approach is used. Our proposal is able to save more refresh power and enables a more effective storage of non-critical data by utilizing a non-uniform refresh of multiple DRAM chips and a permutation of the bits to the DRAM chips. In our proposal, bits of high importance are stored in high-quality storage bits and bits of low importance are stored in low-quality storage bits. The proposed technique works with commodity DRAMs.

Index Terms—DRAM, Refresh, Approximate Computing


1 INTRODUCTION

Refresh is projected to consume almost 50% of total DRAM power only a few generations ahead [1]. For mobile devices, DRAM refresh is already a big concern. At the same time, the DRAM capacity of modern smartphones and tablets lags just slightly behind regular PCs. Complex multimedia applications are now used on phones and often use large parts of their DRAM to store uncompressed audio or picture data. DRAM refresh must be performed even if the CPU is in sleep mode. This makes reducing refresh energy important for battery life. Short refresh periods such as 64 ms are required for error-free storage. Most bit cells can hold their data for many seconds, but to ensure error-free storage all cells are refreshed at a rate sufficient for even the most leaky cells. But not all data requires error-free storage; some types of data can accept an approximate storage that introduces some errors. This provides an opportunity to reduce the refresh rate. Uncompressed media data is a good candidate for approximate storage. However, not all media data suitable for approximate storage is equally tolerant to the errors caused by the storage. In this paper we propose a lightweight modification to DRAM-based memory systems that provides the user with an approximate storage area in which accuracy can be traded for reduced power consumption. We show how data and refresh operations can be distributed over this storage area to reach better quality levels than previously proposed techniques with the same energy, or the same quality with less energy. Our technique allows an analog-like scaling of energy and quality without requiring any change to the DRAM architecture. This technique could be a key part of an approximate computing system because it enlarges the region of operation where useful quality levels can be achieved.

2 APPROXIMATIVE STORAGE

Reducing energy consumption is a topic of ever-increasing importance. By relaxing the normal correctness constraints, approximate computing opens many possibilities for energy reduction. Many applications can tolerate small errors and still provide a great user experience [2].

• All authors are with the Embedded Systems Architecture Department of TU Berlin, Germany.

This does not only apply to the correctness of calculations but also to the storage of data values. Often a bit-exact storage is not required and small deviations do not hurt. The amount of variation applications can tolerate depends on the application [2]. We therefore argue that a practical approximative storage system should be able to provide different quality levels. This allows programmers or tools to find a good trade-off between power consumption and quality. Even within a single application, many different storage areas with vastly different quality requirements can exist. Previous work often used binary classifications such as critical and non-critical data [2][3]. This binary classification, however, limits the usefulness of approximative storage. With only a single quality level for approximate storage, applications cannot choose the best accuracy and power consumption trade-off for each storage area, but are forced to pick a configuration that still provides acceptable quality for the data that is most sensitive to errors.

3 RELATED WORK

Among others, Jamie Liu et al. [1] recognized that mostDRAM rows do not contain high leakage cells and thus cantolerate lower refresh rates. Most cells retain their data fora much longer time than the short regular refresh period,that is required for error-free storage [4]. They proposed amechanism named RAIDR to refresh these rows at a lowerrate. Because different refresh periods cannot be achievedwith the conventional DRAM internal refresh counters, theyadd a refresh counter to the memory controller. This refreshcounter runs at the rate necessary to achieve bit-error freeoperation, including rows that contain cells with high leakage.The memory controller then generates activate and prechargecommands to manually refresh the rows. Manual refreshcycles are skipped if the memory controller determines thatthey are not necessary. The manual refresh by the memorycontroller needs slightly more power per refresh operationthan the normal auto refresh as the row addresses need to betransmitted to memory. But the authors show that the powersaved by the reduced refresh frequency outweighs the powerconsumed by the more complex refresh signaling. The idea ofa memory controller managed refresh and memory controller

THE MEMORY FORUM 2

01234567012345670123456701234567

012345678910111213141516171819202122232425262728293031 App.

DRAM

DRAM chip 0DRAM chip 1DRAM chip 2DRAM chip 3

Byte 0Byte 1Byte 2Byte 3

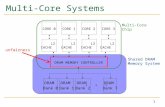

Fig. 1: Mapping of bits to 4 DRAM chips for approximate byte storage

internal row counter is also used in this paper. In RAIDR, thememory controller uses bloom filters to classify row addressesinto different refresh bins. Depending on a row’s refresh bin,the memory controller issues an actual refresh command onlyevery fourth, second or every time the refresh counter reachesit. This results in rows getting refreshed at 256, 128 or 64 msperiods depending on their refresh binning. Our proposalextends RAIDR by introducing additional refresh bins forapproximate data.

Song Liu et al. propose Flikker [3], a memory area with longer refresh periods for non-critical data. They propose a modified DRAM to enable longer refresh periods on a part of the DRAM. The authors of RAIDR recognized that their work can be combined with the Flikker proposal of Liu et al. Our approach can be seen as an extension of Flikker. It provides an additional reduction of refresh activity for non-critical data. Unlike the storage area proposed by Liu et al., this storage area uses varying refresh periods for different bits based on their importance.

Ware and Hampel proposed threaded memory modules [5]. In a threaded memory module, a DRAM rank is split into multiple subranks. The subranks have separate chip select (CS) lines but otherwise share the address and control signals. The CS line controls whether the DRAM chip reacts to transmitted commands or ignores them. By providing multiple CS lines instead of a single CS signal per rank, commands can be issued to a subset of the DRAM rank. Ware and Hampel list various advantages, such as finer-granularity transactions, higher performance and lower power consumption. Our proposal also relies on subranks, but uses them to provide bits with different energy/error rate trade-offs simultaneously.

Sampson et al. worked on approximate storage in multilevel cells in Flash or PCM [6]. They recognized that storage bits from one cell have different reliabilities and that errors can be minimized with their so-called striping code. This striping code is very similar to the permutation proposed in this paper.

4 SPARKK

In this section, the key ideas behind the Sparkk storage are described first. Then, it is explained how the DRAM controller manages the refresh of these storage areas.

4.1 Sparkk Storage

Our proposed extension to Flikker and RAIDR is based on two key observations:

1. Even within a single storage area, not all bits are equally critical.
2. Most memory systems require multiple DRAM chips (or multiple dies in a single package) to reach the required bandwidth and capacity.

Applications mostly use and store multi-bit symbols such as bytes. A bit error in the most significant bit of a byte changes the value of the byte by 128, while a bit error in the least significant bit will only change the value by one. Many applications can tolerate errors that change the least significant bit, but will provide an unacceptable quality if the most significant bit fails often.

Regular DDR3 chips have 4, 8 or 16 bit wide interfaces, but most CPUs use wider interfaces. Building a 64-bit wide DRAM interface with regular DDR3 requires at least four 16-bit wide chips or eight 8-bit wide chips. Chip select signals are normally used to control multiple ranks that share the same data lines. The chip select signals for all DRAM chips of a single rank are usually connected together. Only the whole rank can be enabled or disabled. Thus a command is always executed on the whole rank. We propose that the memory controller provides separate CS signals for every chip of the DRAM. This way commands can be issued to a subset of the DRAM chips of a rank. While many additional uses are possible [5], Sparkk uses this to gain more fine-grained control over the refresh. With this modification to the traditional interface, different DRAM chips of a rank do not need to share the same refresh profile, as refresh commands can be issued selectively to DRAM chips. Using manual refresh does not work together with self-refresh, as during self-refresh mode the interface to the memory controller is disabled and self-refresh is performed autonomously by the DRAM chip. Requiring one CS line per subrank can also be problematic in systems with many subranks. These problems can be solved by making changes to the DRAM refresh signaling. This, however, requires modifications to the DRAM chips. The exact details of such a scheme remain future work.

Different refresh periods for the same row of different DRAM chips of one rank can make multiple storage bits with different quality levels available simultaneously. But without additional modifications to the usual memory system this does not solve the problem: Some high-quality storage bits would be used to store low-importance bits and vice versa. It is therefore necessary to permute the data bits so that high-importance bits are stored in high-quality storage bits and low-quality storage bits are only used for bits of low importance.

Figure 1 shows how four bytes can be mapped to a 32-bit wide memory interface built from four DRAM chips. The red dashed lines show a commonly used mapping between application bits and DRAM bits. The green lines show the proposed mapping: The two most significant bits from all four bytes transmitted per transfer are mapped into DRAM chip 3. The two least significant bits are all mapped into DRAM chip 0. Bit errors in DRAM chip 3 now have a much higher impact on the stored values. They will change the stored values by 64, 128 or 192, while bit errors in DRAM chip 0 will only cause small errors in the range of 1-3. Data types with more than 8 bits per value have even larger differences in significance between the bits. To find a good trade-off between the number of refresh operations and quality, more refresh cycles need to be allocated for the refresh of DRAM chip 3 than for DRAM chip 0.

Which bits are important varies between data types; here, an example is shown for bytes. We propose to add a permutation stage to the memory controller that provides a small number of permutations selected for the most common data types. The permutation used could be controlled by the type of instruction used for access, additional page table flags or a small number of high address bits of the physical address. Permutations are not limited to blocks equal to the width of the memory interface: It is also possible to permute the bits within one burst or one cache line. With a 32-bit wide DDR3 interface one burst is 256 bits long, so it may be used to map four 64-bit values to DRAM chips in a way that minimizes errors.
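As a minimal sketch of the byte permutation shown in Figure 1, the following Python functions map a 32-bit word holding four bytes onto four 8-bit DRAM chips. The function names and the ordering of bits inside each chip's 8-bit lane are assumptions made for illustration; the text only fixes which chip each significance level is stored in.

```python
def sparkk_permute(word: int) -> int:
    """Permute a 32-bit word (four bytes) into the chip layout of Figure 1.

    Bit b of byte j goes to chip b // 2, so chip 3 holds bits 7 and 6 of
    all four bytes and chip 0 holds bits 1 and 0. The position inside the
    chip's 8-bit lane (2 * j + b % 2) is an assumption of this sketch.
    """
    stored = 0
    for j in range(4):            # byte index within the 32-bit transfer
        for b in range(8):        # bit significance within the byte
            bit = (word >> (8 * j + b)) & 1
            chip = b // 2
            lane_pos = 2 * j + (b % 2)
            stored |= bit << (8 * chip + lane_pos)
    return stored


def sparkk_unpermute(stored: int) -> int:
    """Inverse of sparkk_permute: recover the application view of the word."""
    word = 0
    for j in range(4):
        for b in range(8):
            chip = b // 2
            lane_pos = 2 * j + (b % 2)
            bit = (stored >> (8 * chip + lane_pos)) & 1
            word |= bit << (8 * j + b)
    return word


# A single bit error in chip 0 (the chip that would be refreshed least)
# changes one byte by 1 or 2 in this layout, never by 64 or 128.
original = 0x80FF7F01
stored = sparkk_permute(original)
damaged = sparkk_unpermute(stored ^ (1 << 1))   # flip lane bit 1 of chip 0
byte_errors = [abs(((original >> s) & 0xFF) - ((damaged >> s) & 0xFF))
               for s in (0, 8, 16, 24)]
```

In hardware such a permutation is pure wiring plus a multiplexer that selects among a few fixed layouts, so the loops above only serve to make the mapping explicit.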

4.2 Controlling the refresh

Aside from the permutation, an effective way for the memory controller to decide whether a row needs to be refreshed is also required. Unfortunately, the solution used by RAIDR cannot be adapted for this task. In RAIDR, the memory controller uses Bloom filters to decide which rows need to be refreshed more often. These Bloom filters are initialized with the set of rows that contain high-leakage cells. A Bloom filter can produce false positives, but refreshing some rows more often than needed does not cause errors; it only costs additional power. For schemes such as Sparkk or Flikker, a Bloom filter cannot be used: Areas falsely binned as approximate would cause data corruption in critical memory locations, and a Bloom filter containing all non-approximate rows would use large amounts of memory.

A different solution is required. The authors of Flikker proposed a single boundary in memory space between critical and non-critical data. While this solution is simple, it does not offer enough flexibility, as it does not allow for multiple approximate storage areas with different quality levels at the same time. It should be possible to run multiple applications, each using multiple approximative storage areas at different quality levels. A practical approximate storage system should thus offer the ability to configure multiple memory areas with different quality levels. This way, a trade-off between power and accuracy can be chosen for each area. Using our proposal, it is also possible to turn off refresh completely for unused bits, e.g. if 16-bit data types are used to store 12-bit data. It is also possible to build approximate storage that guarantees that errors directly caused by the memory stay within configured bounds: More significant bits can be configured to be refreshed at the rate required for error-free operation. Only less significant bits are then stored approximately.

Fortunately, we can exploit the characteristics of the refresh counter to build a flexible mechanism that enables the handling of hundreds or thousands of memory areas with different refresh characteristics with a very simple hardware unit and low storage requirements. Every time the refresh counter switches to the next row, the memory controller must decide if a refresh operation should be triggered in the memory and, if so, on which subranks. The refresh counter in the memory controller counts through the rows in a monotonic and gap-less fashion. A mechanism that provides retrieval of the refresh characteristics of arbitrary addresses is therefore not required. At every point in time, it is only necessary to have access to the refresh properties of the current block and information about the end of the current block. We propose to store the refresh properties in an ordered list. Each entry contains the address of the end of the current area and information about the refresh properties of this block. When the refresh counter address and the end-of-block address match, we advance to the next entry in the table.

Figure 2 shows the proposed hardware unit. The unit uses a small single-ported memory to store the list of memory areas. The exact storage requirements depend on the number of DRAM chips per rank, the maximum number of DRAM rows and the maximum refresh period at which a DRAM chip still provides at least some working storage bits. Normally fewer than 100 bits are required per memory area. Because of the list structure, each storage area can be composed of any number of consecutive rows. For each area, the refresh period of each DRAM chip can be freely configured to any multiple of the base refresh period.

The refresh settings table stores phase and period information for each DRAM chip. Every time a new area is entered, the controller determines which memory chips should be refreshed within this area: The phase counters for each memory chip are decremented and, if a counter reads zero, the chip is refreshed and the phase is reset to the value of the period information. A special period value can be used to indicate that refresh should be omitted completely. This can be another useful feature for some applications: Reading or writing data from a row also causes a refresh of the data. A GPU that renders a new image into a framebuffer every 16.6 ms does not need to refresh the framebuffer. The access pattern guarantees refresh, even without explicit refresh cycles.
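The ordered-list lookup and the per-chip phase and period counters described above can be sketched in software as follows. This is an illustrative model only, not the hardware unit itself: the class and field names, the encoding of the special "no refresh" period as `0`, and the initial phase values are assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple

NO_REFRESH = 0  # hypothetical encoding of the special "never refresh" period


@dataclass
class AreaEntry:
    end_row: int        # last row that belongs to this memory area
    period: List[int]   # per-chip refresh period, in multiples of the base period
    phase: List[int]    # per-chip countdown until the next refresh


class RefreshTable:
    """Walks an ordered list of areas in step with the refresh counter."""

    def __init__(self, entries: List[AreaEntry]):
        self.entries = entries          # sorted by end_row, covering all rows
        self.num_rows = entries[-1].end_row + 1
        self.index = 0                  # current table entry
        self.row = 0                    # refresh counter
        self.active = self._enter(entries[0])

    def _enter(self, entry: AreaEntry) -> List[int]:
        """Update the phase counters on entering an area; return chips to refresh."""
        chips = []
        for chip, period in enumerate(entry.period):
            if period == NO_REFRESH:
                continue
            entry.phase[chip] -= 1
            if entry.phase[chip] <= 0:
                entry.phase[chip] = period   # reload the countdown
                chips.append(chip)
        return chips

    def step(self) -> Tuple[int, List[int]]:
        """Return (row, chips to refresh for that row) and advance the counter."""
        result = (self.row, list(self.active))
        if self.row == self.entries[self.index].end_row:
            # End of block reached: advance to the next table entry.
            self.index = (self.index + 1) % len(self.entries)
            self.active = self._enter(self.entries[self.index])
        self.row = (self.row + 1) % self.num_rows
        return result


# Two areas on a two-chip rank: rows 0-1 refresh chip 1 on every second
# pass, rows 2-3 never refresh chip 1.
table = RefreshTable([
    AreaEntry(end_row=1, period=[1, 2], phase=[1, 1]),
    AreaEntry(end_row=3, period=[1, NO_REFRESH], phase=[1, 0]),
])
schedule = [table.step() for _ in range(8)]   # two passes over four rows
```

Only the current entry is ever consulted, which is why a small single-ported memory suffices; no lookup by arbitrary address is needed.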

[Figure 2 block labels: refresh addr, next block, table entry, phase, period]

Fig. 2: Refresh settings table

Fig. 3: PSNR for Flikker and Sparkk with 2, 4, 8 DRAM chips (x-axis: average refresh period [s]; y-axis: PSNR [dB]; curves: Sparkk 8, Sparkk 4, Sparkk 2, Flikker)

Fig. 4: Effect of variable refresh on failure probability for individual bits for Sparkk with 4 DRAM chips (x-axis: average refresh period [s]; y-axis: cumulative failure probability; curves: Bit 7 & 6, Bit 5 & 4, Bit 3 & 2, Bit 1 & 0, Flikker)

Different software interfaces to such a storage system are possible: It can be part of a system that is designed from top to bottom for approximate computing and uses specialized languages, specialized instruction sets and specialized hardware such as proposed by Esmaeilzadeh et al. [7]. In such a system, specialized load and store instructions could select different permutations for each access based on the data type. A compiler aware of these permutation rules could create data structures containing a mix of data types. Even with such a specialized instruction set, refresh settings can only be selected at per-page granularity. Applications that organize data in arrays of structures thus often need to make problematic trade-offs if the structure members have different accuracy requirements. If applications operate on structures of arrays, as is popular with GPU or DSP applications, or on data such as pictures or audio, the interface to software can be much simpler: Regular languages such as C, C++ or OpenCL can be used and approximate memory areas may simply be allocated using a specialized malloc. The application must provide information about size, data type and quality requirements to the specialized malloc. The operating system then calculates refresh settings that meet the quality requirements, reserves memory and inserts the appropriate entries into the refresh list. If not enough space is available in the refresh list to allocate a new block with a new refresh list entry, the operating system can always choose to provide better than requested storage quality. Adjacent list entries can be merged by choosing, for each chip, the maximum refresh rate of the two merged blocks.

One important limitation of the memory management of approximate storage blocks should be noted: Approximately stored memory blocks should not be moved from one approximate location to another, as this will cause an accumulation of errors. In two different blocks, different bit cells will fail at a selected refresh rate, and bit errors already added to the data will not disappear by moving these bits into new bit cells. In some cases, it might be required to reserve error headroom to allow for data movement. Another possibility to allow data reallocation is to ask the application to restore data to an error-free state by, for example, recalculating the data or reloading it from a compressed file.
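The merging of adjacent refresh list entries mentioned above can be illustrated with a short sketch. The tuple layout of an entry and the use of `None` for "no refresh needed" are assumptions of this example, not part of the proposal.

```python
from typing import List, Optional, Tuple

# An entry is (end_row, per-chip refresh periods in multiples of the base
# period); None is a hypothetical marker for "no refresh needed".
Entry = Tuple[int, List[Optional[int]]]


def merge_adjacent(first: Entry, second: Entry) -> Entry:
    """Merge two adjacent areas into one entry that satisfies both.

    The maximum refresh rate corresponds to the minimum refresh period,
    so each chip keeps the shorter of the two periods.
    """
    _, periods_a = first
    end_b, periods_b = second
    merged: List[Optional[int]] = []
    for pa, pb in zip(periods_a, periods_b):
        if pa is None:
            merged.append(pb)       # only the second area needs refresh
        elif pb is None:
            merged.append(pa)       # only the first area needs refresh
        else:
            merged.append(min(pa, pb))
    return (end_b, merged)


merged = merge_adjacent((99, [1, 4, None, None]), (199, [2, 2, 8, None]))
```

The merged entry never refreshes a chip less often than either original area required, so quality can only improve, at the cost of some extra refresh energy.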

5 MODELING OF SPARKK

We model the expected number of non-functional DRAM cells at a given refresh period using data for a 50 nm DRAM provided by Kim and Lee [4]. Our model assumes that non-functional cells will statically stick to either zero or one on readout. We model cells as equally likely to stick to zero or one. Thus, even a non-functional cell will return a correct result in half the cases.

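$$P_{\text{bytechange}}(k) = \prod_{i=0}^{7} \begin{cases} P_{\text{bitflip}}(i) & \text{if bit } i \text{ in } k = 1 \\ 1 - P_{\text{bitflip}}(i) & \text{if bit } i \text{ in } k = 0 \end{cases} \tag{1}$$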

As shown in Equation 1, the peak signal-to-noise ratio (PSNR) can be estimated by first calculating the probabilities of the 255 possible changes to a byte, based on the probabilities of changes to the individual bits. Pbitflip(i) is the probability of a bit flip in bit i. As already mentioned, this is estimated using the data from Kim and Lee [4]. Pbytechange(k) is the probability of the change of a byte by mask k, e.g. Pbytechange(129) is the probability of a byte with a bit flip in bit 7 and bit 0 and all other bits without storage errors.

$$\text{MSE} = \sum_{k=1}^{255} k^2 \, P_{\text{bytechange}}(k) \tag{2}$$

These probabilities are then weighted by their squared error to calculate the mean square error (MSE) as presented in Equation 2. This is slightly simplified and assumes that additional bit flips always increase the error. This is true if a single bit per byte flips, but not necessarily true if multiple bits in a single byte flip. For example, if 128 should be stored and bit 7 flips, the absolute error is 128, but if bit 5 flips as well, the error is reduced to 96. On the other hand, if 0 should be stored and bit 7 flips, the error is 128, and if bit 5 flips as well, the error increases to 160. We found that this effect is negligible in practice. This simplification makes it possible to pick good refresh settings for a given quality without knowledge of the data statistics.

$$\text{PSNR} = 10 \log_{10}\left(\frac{255^2}{\text{MSE}}\right) \tag{3}$$

From the mean square error, the PSNR can be calculated using the well-known Equation 3. To compare Sparkk with Flikker, the harmonic means of the per-chip/subrank refresh periods are calculated. Refresh schemes with an identical harmonic mean trigger the same number of refresh operations per chip/subrank.
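Equations 1 to 3 translate directly into a few lines of Python. The sketch below takes the per-bit flip probabilities as an input list; the example probabilities are made up for illustration and are not taken from the retention data of Kim and Lee [4].

```python
import math
from typing import Sequence


def byte_change_probability(k: int, p_bitflip: Sequence[float]) -> float:
    """Equation 1: probability that exactly the bits set in mask k flip."""
    p = 1.0
    for i in range(8):
        p *= p_bitflip[i] if (k >> i) & 1 else 1.0 - p_bitflip[i]
    return p


def mean_square_error(p_bitflip: Sequence[float]) -> float:
    """Equation 2: each change mask k is weighted by k squared."""
    return sum(k * k * byte_change_probability(k, p_bitflip)
               for k in range(1, 256))


def psnr(p_bitflip: Sequence[float]) -> float:
    """Equation 3: PSNR in dB for 8-bit samples."""
    mse = mean_square_error(p_bitflip)
    if mse == 0.0:
        return math.inf          # no bit ever flips
    return 10.0 * math.log10(255 ** 2 / mse)


# Illustrative (made-up) probabilities: index 0 is the least significant bit.
uniform = [1e-3] * 8                                   # Flikker-like: all bits equal
graded = [4e-3, 4e-3, 2e-3, 2e-3, 5e-4, 5e-4, 1e-5, 1e-5]
psnr_uniform = psnr(uniform)
psnr_graded = psnr(graded)
```

With these example numbers the graded profile yields the higher PSNR even though its low-order bits fail more often, which is the effect the permutation is designed to exploit.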

Fig. 5: Pictures stored in DRAM with 8 seconds average refresh: (a) Flikker, (b) Sparkk 8

Before we can estimate the benefits from Sparkk, it is necessary to find suitable refresh settings that maximize quality at a given energy. With Sparkk, the rows of one approximate storage area within every DRAM subrank can be refreshed at multiples of the base refresh period of 64 ms. Thus, a suitable set of multiples of the base refresh period must be found. We used hill climbing to find our refresh settings: Starting from the base refresh period, the refresh periods of single DRAM chips are gradually increased until a solution is found that meets the average refresh requirements. In each step of this process, the refresh period of the DRAM subrank that offers the best ratio of newly introduced error to energy saved by the prolonged refresh is increased by one base period length.
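A rough sketch of such a hill-climbing search is given below. The retention model `toy_flip_probability` is a placeholder invented for this example and does not reproduce the measured data of [4]; the error measure and the `max_period` cap are likewise assumptions. Refresh cost is counted as the sum of 1/period over all subranks, so the loop stops once the harmonic mean of the periods reaches the target.

```python
import math
from typing import List, Sequence


def toy_flip_probability(period: int) -> float:
    """Placeholder retention model (NOT data from [4]): rises with the period."""
    return 0.5 * (1.0 - math.exp(-period / 1000.0))


def subrank_error(bits: Sequence[int], period: int) -> float:
    """Expected squared error contributed by one subrank holding these bits."""
    p = toy_flip_probability(period)
    return sum(p * (1 << b) ** 2 for b in bits)


def hill_climb(bit_groups: Sequence[Sequence[int]],
               target_mean_period: float,
               max_period: int = 4096) -> List[int]:
    """Greedily lengthen per-subrank periods (in base periods) to a target.

    bit_groups[c] lists the bit significances stored in subrank c.
    """
    periods = [1] * len(bit_groups)          # start at the base refresh period
    target_cost = len(bit_groups) / target_mean_period
    while sum(1.0 / p for p in periods) > target_cost:
        best, best_ratio = None, math.inf
        for c, bits in enumerate(bit_groups):
            if periods[c] >= max_period:
                continue
            added_error = (subrank_error(bits, periods[c] + 1)
                           - subrank_error(bits, periods[c]))
            saved = 1.0 / periods[c] - 1.0 / (periods[c] + 1)
            ratio = added_error / saved      # error paid per unit of refresh saved
            if ratio < best_ratio:
                best, best_ratio = c, ratio
        if best is None:                     # every subrank is at the cap
            break
        periods[best] += 1                   # lengthen by one base period
    return periods


# Four subranks as in Figure 1: chip 0 holds bits 0-1, chip 3 holds bits 6-7.
groups = [[0, 1], [2, 3], [4, 5], [6, 7]]
periods = hill_climb(groups, target_mean_period=8.0)
harmonic_mean = len(groups) / sum(1.0 / p for p in periods)
```

Under this toy model the search assigns the longest period to the subrank holding the least significant bits and the shortest to the one holding the most significant bits.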

To enable a visual evaluation of Sparkk, we simulated the effect of the approximate storage on image data. Our model was used to estimate how many bits of each significance would flip at a given average refresh period. Then, this number of bits of each type was randomly selected and flipped. Kim and Lee modeled in their simulations that retention times of the bit cells are distributed randomly over all cells [4]. Rahmati et al. verified this assumption experimentally and did not find any specific patterns in the retention times of the measured DRAM [8]. There might be patterns regarding which bits tend to stick to zero or one if their retention time is not met. We assume that there are no such patterns and that both stuck-at cases have the same likelihood. In case real DRAM shows patterns, an address-based scrambler could be used to prevent patterns caused by the internal DRAM array architecture.
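The flipping step described above can be sketched as follows, assuming the image is available as a flat buffer of 8-bit samples. The function name, the fixed seed and the example probabilities are illustrative assumptions.

```python
import random
from typing import Sequence


def inject_bit_flips(samples: bytearray,
                     p_bitflip: Sequence[float],
                     seed: int = 0) -> bytearray:
    """Flip the expected number of bits of each significance in place.

    For bit position i, round(p_bitflip[i] * len(samples)) distinct samples
    are chosen at random and bit i is inverted in each of them.
    """
    rng = random.Random(seed)        # seeded so that runs are repeatable
    n = len(samples)
    for bit, p in enumerate(p_bitflip):
        flips = round(p * n)
        for idx in rng.sample(range(n), flips):
            samples[idx] ^= 1 << bit
    return samples


# 1000 black pixels; the LSB fails in 10% of the samples, the MSB in 1%.
image = inject_bit_flips(bytearray(1000), [0.1, 0, 0, 0, 0, 0, 0, 0.01])
lsb_errors = sum(1 for v in image if v & 0x01)
msb_errors = sum(1 for v in image if v & 0x80)
```

Choosing a fixed count of samples per bit position, rather than flipping each bit independently, mirrors the procedure in the text, where the model first predicts how many bits of each type fail.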

6 EVALUATION

Figure 3 shows the expected PSNR as predicted by our stochastic model for Sparkk and Flikker. This model predicts the mean PSNR over an infinite set of samples. The PSNR in single samples can be better or worse, depending on the exact distribution of weak bit cells within the used DRAM rows and on the stored data, but large deviations from the expected PSNR are unlikely. At all tested refresh rates, Sparkk performs better than Flikker. Even Sparkk with just two subranks provides benefits over Flikker. Sparkk with 4 subranks provides almost all the benefits of Sparkk with 8 subranks.

Figure 4 displays how Flikker and Sparkk distribute the errors over the bits on a memory interface with 4 subranks. In Flikker, all bits have the same error rate. In Sparkk, the subranks storing bits 0-3 are refreshed at a lower rate than in Flikker, the subrank with bits 4 & 5 is refreshed at approximately the same rate as in Flikker, and the subrank with the two most important bits is refreshed at a higher rate than in Flikker.

To test if the PSNR also provides a good estimate of subjective quality, we generated pictures simulating the effects of Sparkk and Flikker. These pictures can be seen in Figure 5. For Sparkk, a configuration with 8 subranks was used. The average refresh period in both cases was 8 seconds. The image saved in the Flikker storage shows many easy-to-spot bit errors of the most significant bit. The Sparkk storage shows only a few of those errors and, despite the extremely long refresh period, the image still seems to have an acceptable subjective quality for some applications such as texturing. The background of the picture stored with Sparkk looks grainy, which is the result of the high number of bit errors in the less important bits.

Sparkk is able to reduce the number of refresh operations on the DRAM arrays at a given quality level. This reduces the power required for the chip-internal refresh operation. However, Sparkk requires a more complex refresh signaling and the additional energy consumed by this could potentially offset the energy advantage in some use cases. While we proposed one refresh signaling scheme that can be used with unmodified DRAMs, with modifications to the DRAM many other schemes are possible and likely more efficient. It remains an open research question how much energy could be saved exactly.

7 CONCLUSION

We proposed Sparkk, an effective approximate storage using commodity DRAMs. It achieves more than 10 dB PSNR improvement over Flikker at the same average refresh rate or reaches the same quality at less than half the refresh rate of Flikker. We also proposed a simple, small and flexible hardware unit to control how the memory controller refreshes multiple configurable memory areas for approximate storage.

ACKNOWLEDGMENTS

This project receives funding from the European Community’s Seventh Framework Programme [FP7/2007-2013] under the LPGPU Project (www.lpgpu.org), grant agreement no. 288653. We would like to thank the anonymous reviewers for their valuable comments.


REFERENCES

[1] J. Liu, B. Jaiyen, R. Veras, and O. Mutlu, “RAIDR: Retention-Aware Intelligent DRAM Refresh,” in Proc. of the International Symposium on Computer Architecture, ISCA, 2012.

[2] A. Sampson, W. Dietl, E. Fortuna, D. Gnanapragasam, L. Ceze, and D. Grossman, “EnerJ: Approximate Data Types for Safe and General Low-power Computation,” in Proc. of the Conference on Programming Language Design and Implementation, PLDI, 2011.

[3] S. Liu, K. Pattabiraman, T. Moscibroda, and B. G. Zorn, “Flikker: Saving DRAM Refresh-power Through Critical Data Partitioning,” in Proc. of the Conference on Programming Language Design and Implementation, PLDI, 2011.

[4] K. Kim and J. Lee, “A New Investigation of Data Retention Time in Truly Nanoscaled DRAMs,” IEEE Electron Device Letters, Aug 2009.

[5] F. Ware and C. Hampel, “Improving Power and Data Efficiency with Threaded Memory Modules,” in Proc. of the International Conference on Computer Design, ICCD, 2006.

[6] A. Sampson, J. Nelson, K. Strauss, and L. Ceze, “Approximate Storage in Solid-State Memories,” in Proc. of the International Symposium on Microarchitecture, MICRO, 2013.

[7] H. Esmaeilzadeh, A. Sampson, L. Ceze, and D. Burger, “Architecture Support for Disciplined Approximate Programming,” in Proc. of the International Conference on Architectural Support for Programming Languages and Operating Systems, ASPLOS, 2012.

[8] A. Rahmati, M. Hicks, D. E. Holcomb, and K. Fu, “Refreshing Thoughts on DRAM: Power Saving vs. Data Integrity,” in Workshop on Approximate Computing Across the System Stack, WACAS, 2014.