Towards an Algorithmic Realization of Nash's Embedding Theorem

Nakul Verma
CSE, UC San Diego

Abstract

It is well known from differential geometry that an n-dimensional Riemannian manifold can be isometrically embedded in a Euclidean space of dimension $2n+1$ [Nas54]. Though the proof by Nash is intuitive, it is not clear whether such a construction is achievable by an algorithm that only has access to a finite-size sample from the manifold. In this paper, we study Nash's construction and develop two algorithms for embedding a fairly general class of n-dimensional Riemannian manifolds (initially residing in $\mathbb{R}^D$) into $\mathbb{R}^k$ (where $k$ only depends on some key manifold properties, such as its intrinsic dimension, its volume, and its curvature) while approximately preserving geodesic distances between all pairs of points. The first algorithm we propose is computationally fast and embeds the given manifold approximately isometrically into about $O(2^{cn})$ dimensions (where $c$ is an absolute constant). The second algorithm, although computationally more involved, attempts to minimize the dimension of the target space and (approximately isometrically) embeds the manifold in about $O(n)$ dimensions.

1 Introduction

Finding low-dimensional representations of manifolds has proven to be an important task in data analysis and data visualization. Typically, one wants a low-dimensional embedding to reduce computational costs while maintaining relevant information in the data. For many learning tasks, distances between data points serve as an important approximation to gauge similarity between the observations. Thus, it comes as no surprise that distance-preserving or isometric embeddings are popular.

The problem of isometrically embedding a differentiable manifold into a low-dimensional Euclidean space has received considerable attention from the differential geometry community and, more recently, from the manifold learning community. The classic results by Nash [Nas54, Nas56] and Kuiper [Kui55] show that any compact Riemannian manifold of dimension $n$ can be isometrically $C^1$-embedded¹ in Euclidean space of dimension $2n+1$, and $C^\infty$-embedded in dimension $O(n^2)$ (see [HH06] for an excellent reference). Though these results are theoretically appealing, they rely on delicate handling of metric tensors and solving a system of PDEs, making their constructions difficult to compute by a discrete algorithm.

On the algorithmic front, researchers in the manifold learning community have devised a number of spectral algorithms for finding low-dimensional representations of manifold data [TdSL00, RS00, BN03, DG03, WS04]. These algorithms are often successful in unravelling non-linear manifold structure from samples, but lack rigorous guarantees that isometry will be preserved for unseen data.

Recently, Baraniuk and Wakin [BW07] and Clarkson [Cla07] showed that one can achieve approximate isometry via the technique of random projections. It turns out that projecting an n-dimensional manifold (initially residing in $\mathbb{R}^D$) into a sufficiently high-dimensional random subspace is enough to approximately preserve all pairwise distances. Interestingly, this linear embedding is guaranteed to preserve both the ambient Euclidean distances and the geodesic distances between all pairs of points on the manifold without even looking at the samples from the manifold. Such a strong result comes at the cost of the dimension of the embedded space. To get $(1 \pm \epsilon)$-isometry², for instance, Baraniuk and Wakin [BW07] show that a target dimension of size about $O\left(\frac{n}{\epsilon^2} \log \frac{VD}{\tau}\right)$ is sufficient, where $V$ is the n-dimensional volume of the manifold and $1/\tau$ is a global bound on the curvature. This result was sharpened by Clarkson [Cla07] by completely removing the dependence on ambient dimension $D$ and partially substituting $\tau$ with more average-case manifold properties. In either case, the $1/\epsilon^2$ dependence is troublesome: if we want an embedding with all distances within 99% of the original distances (i.e., $\epsilon = 0.01$), the bounds require the dimension of the target space to be at least $1/\epsilon^2 = 10{,}000$!

¹ A $C^k$-embedding of a smooth manifold $M$ is an embedding of $M$ that has $k$ continuous derivatives.
² A $(1 \pm \epsilon)$-isometry means that all distances are within a multiplicative factor of $(1 \pm \epsilon)$.

Figure 1: A simple example demonstrating Nash's embedding technique on a 1-manifold. Left: Original 1-manifold in some high-dimensional space. Middle: A contractive mapping of the original manifold via a linear projection onto the vertical plane. Different parts of the manifold are contracted by different amounts: distances at the tail-ends are contracted more than the distances in the middle. Right: Final embedding after applying a series of spiralling corrections. Small spirals are applied to regions with small distortion (middle), large spirals are applied to regions with large distortions (tail-ends). The resulting embedding is isometric (i.e., geodesic distance preserving) to the original manifold.

One may wonder whether the dependence on $\epsilon$ is really necessary to achieve isometry. Nash's theorem suggests that an $\epsilon$-free bound on the target space should be possible.

1.1 Our Contributions

In this work, we elucidate Nash's $C^1$ construction, and take the first step in making Nash's theorem algorithmic by providing two simple algorithms for approximately isometrically embedding n-manifolds (manifolds with intrinsic dimension $n$), where the dimension of the target space is independent of the ambient dimension $D$ and the isometry constant $\epsilon$. The first algorithm we propose is simple and fast in computing the target embedding but embeds the given n-manifold in about $2^{cn}$ dimensions (where $c$ is an absolute constant). The second algorithm we propose focuses on minimizing the target dimension. It is computationally more involved but embeds the given n-manifold in about $O(n)$ dimensions.

We would like to highlight that both our proposed algorithms work for a fairly general class of manifolds. There is no requirement that the original n-manifold is connected, or is globally isometric (or even globally diffeomorphic) to some subset of $\mathbb{R}^n$, as is frequently assumed by several manifold embedding algorithms. In addition, unlike spectrum-based embedding algorithms available in the literature, our algorithms yield an explicit $C^\infty$-embedding that cleanly embeds out-of-sample data points, and provide isometry guarantees over the entire manifold (not just the input samples).

On the technical side, we emphasize that the techniques used in our proof are different from what Nash uses in his work; unlike traditional differential-geometric settings, we can only access the underlying manifold through a finite-size sample. This makes it difficult to compute quantities (such as the curvature tensor and local functional form of the input manifold, etc.) that are important in Nash's approach for constructing an isometric embedding. Our techniques do, however, use various differential-geometric concepts, and our hope is to make such techniques mainstream in analyzing manifold learning algorithms.

2 Nash's Construction for $C^1$-Isometric Embedding

Given an n-dimensional manifold $M$ (initially residing in $\mathbb{R}^D$), Nash's embedding can be summarized in two steps (see also [Nas54]). (1) Find a contractive³ mapping of $M$ in the desired dimensional Euclidean space. (2) Apply an infinite series of corrections to restore the distances to their original lengths.

In order to maintain the smoothness, the contraction and the target dimension in step one should be chosen carefully. Nash notes that one can use Whitney's construction [Whi36] to embed $M$ in $\mathbb{R}^{2n+1}$ without introducing any kinks, tears, or discontinuities in the embedding. This initial embedding, which does not necessarily preserve any distances, can be made into a contraction by adjusting the scale.

The corrections in step two should also be done with care. Each correction stretches out a small region of the contracted manifold to restore local distances as much as possible. Nash shows that applying a successive sequence of spirals⁴ in directions normal to the embedded $M$ is a simple way to stretch the distances while maintaining differentiability. The aggregate effect of applying these spiralling perturbations is a globally isometric mapping of $M$ in $\mathbb{R}^{2n+1}$. See Figure 1 for an illustration.

³ A contractive mapping or a contraction is a mapping that doesn't increase the distance between points.
⁴ A spiral map is a mapping of the form $t \mapsto (t, \sin(t), \cos(t))$.

Remark 1 Adjusting the lengths by applying spirals is one of many ways to do local corrections. Kuiper [Kui55], for instance, discusses an alternative way to stretch the contracted manifold by applying corrugations and gets a similar isometry result.

2.1 Algorithm for Embedding n-Manifolds: Intuition

Taking inspiration from Nash's construction, our proposed embedding will also be divided into two stages. The first stage will attempt to find a contraction $\Phi : \mathbb{R}^D \to \mathbb{R}^d$ of our given n-manifold $M \subset \mathbb{R}^D$ in low dimensions. The second will apply a series of local corrections $\Psi_1, \Psi_2, \ldots$ (collectively referred to as the mapping $\Psi : \mathbb{R}^d \to \mathbb{R}^{d+k}$) to restore the geodesic distances.

Contraction stage: A pleasantly surprising observation is that a random projection of $M$ into $d = O(n)$ dimensions is a bona fide injective, differential-structure preserving contraction with high probability (details in Section 5.1). Since we don't require isometry in the first stage (only a contraction), we can use a random projection as our contraction mapping $\Phi$ without having to pay the $1/\epsilon^2$ penalty.

Correction stage: We will apply several corrections to stretch out our contracted manifold $\Phi(M)$. To understand a single correction $\Psi_i$ better, we can consider its effect on a small section of $\Phi(M)$. Since, locally, the section effectively looks like a contracted n-dimensional affine space, our correction map needs to restore distances over this n-flat. Let $U := [u^1, \ldots, u^n]$ be a $d \times n$ matrix whose columns form an orthonormal basis for this n-flat in $\mathbb{R}^d$ and let $s^1, \ldots, s^n$ be the corresponding shrinkages along the $n$ directions. Then one can consider applying an n-dimensional analog of the spiral mapping: $\Psi_i(t) := (t, \Psi_{\sin}(t), \Psi_{\cos}(t))$, where $\Psi_{\sin}(t) := (\sin((Ct)_1), \ldots, \sin((Ct)_n))$ and $\Psi_{\cos}(t) := (\cos((Ct)_1), \ldots, \cos((Ct)_n))$. Here $C$ serves as an $n \times d$ correction matrix that controls how much of the surface needs to stretch. It turns out that if one sets $C$ to be the matrix $SU^T$ (where $S$ is a diagonal matrix with entry $S_{ii} := \sqrt{(1/s^i)^2 - 1}$; recall that $s^i$ was the shrinkage along direction $u^i$), then the correction $\Psi_i$ precisely restores the shrinkages along the $n$ orthonormal directions on the resultant surface (see our discussion in Section 5.2 for details).

Since different parts of the contracted manifold need to be stretched by different amounts, we localize the effect of $\Psi_i$ to a small enough neighborhood by applying a specific kind of kernel function known as a "bump" function in the analysis literature (details in Section 5.2, cf. Figure 5 middle). Applying different $\Psi_i$'s at different parts of the manifold should have an aggregate effect of creating an (approximate) isometric embedding.

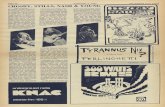

We now have a basic outline of our algorithm. Let $M$ be an n-dimensional manifold in $\mathbb{R}^D$. We first find a contraction of $M$ in $d = O(n)$ dimensions via a random projection. This preserves the differential structure but distorts the interpoint geodesic distances. We estimate the distortion at different regions of the projected manifold by comparing a sample from $M$ with its projection. We then perform a series of spiral corrections, each applied locally, to adjust the lengths in the local neighborhoods. We will conclude that restoring the lengths in all neighborhoods yields a globally consistent (approximately) isometric embedding of $M$. Figure 4 shows a quick schematic of our two-stage embedding with various quantities of interest.

How exactly these different local $\Psi_i$'s are applied gives rise to our two algorithms. For the first algorithm, we shall apply the $\Psi_i$ maps simultaneously by making use of extra coordinates so that different corrections don't interfere with each other. This yields a simple and computationally fast embedding. We shall require about $2^{cn}$ additional coordinates to apply the corrections, making the final embedding size about $2^{cn}$ (here $c$ is an absolute constant). For the second algorithm, we will follow Nash's technique more closely and apply the $\Psi_i$ maps iteratively in the same embedding space without the use of extra coordinates. Since all $\Psi_i$'s will share the same coordinate space, extra care needs to be taken in applying the corrections. This will require additional computational effort in terms of computing normals to the embedded manifold (details later), but will result in an embedding of size $O(n)$.

3 Preliminaries

Let $M$ be a smooth, n-dimensional compact Riemannian submanifold of $\mathbb{R}^D$. Since we will be working with samples from $M$, we need to ensure a certain amount of regularity. Here we borrow the notation from Niyogi et al. [NSW06] about the condition number of $M$.

Definition 1 (condition number [NSW06]) Let $M \subset \mathbb{R}^D$ be a compact Riemannian manifold. The condition number of $M$ is $1/\tau$, if $\tau$ is the largest number such that the normals of length $r < \tau$ at any two distinct points $p, q \in M$ don't intersect.

The condition number $1/\tau$ is an intuitive notion that captures the "complexity" of $M$ in terms of its curvature. We can, for instance, bound the directional curvature at any $p \in M$ by $1/\tau$. Figure 2 depicts the normals of a manifold. Notice that long non-intersecting normals are possible only if the manifold is relatively flat. Hence, the condition number of $M$ gives us a handle on how curvy $M$ can be. As a quick example, let's calculate the condition number of an n-dimensional sphere of radius $r$ (embedded in $\mathbb{R}^D$). Note that in this case one can have non-intersecting normals of length less than $r$ (since otherwise they will start intersecting at the center of the sphere). Thus the condition number of such a sphere is $1/r$. Throughout the text we will assume that $M$ has condition number $1/\tau$.

Figure 2: Tubular neighborhood of a manifold. Note that the normals (dotted lines) of a particular length incident at each point of the manifold (solid line) will intersect if the manifold is too curvy.

We will use $D_G(p, q)$ to indicate the geodesic distance between points $p$ and $q$ where the underlying manifold is understood from the context, and $\|p - q\|$ to indicate the Euclidean distance between points $p$ and $q$ where the ambient space is understood from the context.

To correctly estimate the distortion induced by the initial contraction mapping, we will additionally require a high-resolution covering of our manifold.

Definition 2 (bounded manifold cover) Let $M \subset \mathbb{R}^D$ be a Riemannian n-manifold. We call $X \subset M$ an $\alpha$-bounded $(\rho, \delta)$-cover of $M$ if for all $p \in M$ and $\rho$-neighborhood $X_p := \{x \in X : \|x - p\| < \rho\}$ around $p$, we have

• there exist points $x_0, \ldots, x_n \in X_p$ such that $\left| \frac{x_i - x_0}{\|x_i - x_0\|} \cdot \frac{x_j - x_0}{\|x_j - x_0\|} \right| \le 1/2n$, for $i \ne j$. (covering criterion)
• $|X_p| \le \alpha$. (local boundedness criterion)
• there exists a point $x \in X_p$ such that $\|x - p\| \le \rho/2$. (point representation criterion)
• for any $n+1$ points in $X_p$ satisfying the covering criterion, let $\hat{T}_p$ denote the n-dimensional affine space passing through them (note that $\hat{T}_p$ does not necessarily pass through $p$). Then, for any unit vector $\hat{v}$ in $\hat{T}_p$, we have $\left| \hat{v} \cdot \frac{v}{\|v\|} \right| \ge 1 - \delta$, where $v$ is the projection of $\hat{v}$ onto the tangent space of $M$ at $p$. (tangent space approximation criterion)
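The covering criterion is easy to check numerically for a candidate set of $n+1$ points. The following is a minimal sketch, not part of the paper: the function name `satisfies_covering_criterion` and the convention that the first row plays the role of $x_0$ are assumptions made for illustration.

```python
import numpy as np

def satisfies_covering_criterion(points):
    """Check |cos angle| <= 1/(2n) between all pairs of directions x_i - x_0.

    points: array of shape (n+1, D); row 0 is treated as x_0 (an assumption
    of this sketch, the definition only asks that such points exist).
    """
    pts = np.asarray(points, dtype=float)
    n = pts.shape[0] - 1
    diffs = pts[1:] - pts[0]                      # rows are x_i - x_0
    norms = np.linalg.norm(diffs, axis=1)
    if np.any(norms == 0):
        return False                              # a repeated point gives no direction
    units = diffs / norms[:, None]                # normalized directions
    gram = units @ units.T                        # pairwise inner products
    off_diag = gram[~np.eye(n, dtype=bool)]       # entries with i != j
    return bool(np.all(np.abs(off_diag) <= 1.0 / (2 * n)))
```

Nearly orthogonal directions pass the test, while nearly collinear ones fail, which is what lets the selected points span a well-conditioned estimate of the local tangent space.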

The above is an intuitive notion of manifold sampling that can estimate the local tangent spaces. Curiously, we haven't found such tangent-space approximating notions of manifold sampling in the literature. We do note in passing that our sampling criterion is similar in spirit to the $(\epsilon, \delta)$-sampling (also known as "tight" sampling) criterion popular in the Computational Geometry literature (see e.g. [DGGZ02, GW03]).

Remark 2 Given an n-manifold $M$ with condition number $1/\tau$, and some $0 < \delta \le 1$, if $\rho \le \tau\delta/(3\sqrt{2n})$, then one can construct a $2^{10n+1}$-bounded $(\rho, \delta)$-cover of $M$; see Appendix A.2 for details.

We can now state our two algorithms.

4 The Algorithms

Inputs. We assume the following quantities are given:

(i) $n$: the intrinsic dimension of $M$.
(ii) $1/\tau$: the condition number of $M$.
(iii) $X$: an $\alpha$-bounded $(\rho, \delta)$-cover of $M$.
(iv) $\rho$: the $\rho$ parameter of the cover.

Notation. Let $\phi$ be a random orthogonal projection map that maps points from $\mathbb{R}^D$ into a random subspace of dimension $d$ ($n \le d \le D$). We will have $d$ to be about $O(n)$. Set $\Phi := (2/3)(\sqrt{D/d})\phi$ as a scaled version of $\phi$. Since $\Phi$ is linear, $\Phi$ can also be represented as a $d \times D$ matrix. In our discussion below we will use the function notation and the matrix notation interchangeably, that is, for any $p \in \mathbb{R}^D$, we will use the notation $\Phi(p)$ (applying function $\Phi$ to $p$) and the notation $\Phi p$ (matrix-vector multiplication) interchangeably.
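As an illustration of the notation above, a scaled random projection can be sketched as follows. The text does not say how the random subspace is sampled; taking the QR factorization of a Gaussian matrix is one standard choice and is an assumption of this sketch, as is the function name `scaled_random_projection`.

```python
import numpy as np

def scaled_random_projection(D, d, rng):
    """Return (phi, Phi): a random orthogonal projection R^D -> R^d and its
    scaled version Phi = (2/3) * sqrt(D/d) * phi."""
    G = rng.standard_normal((D, d))
    Q, _ = np.linalg.qr(G)            # Q is D x d with orthonormal columns
    phi = Q.T                         # d x D; rows form an orthonormal basis of the subspace
    Phi = (2.0 / 3.0) * np.sqrt(D / d) * phi
    return phi, Phi

# Example: project from D = 50 ambient dimensions down to d = 10.
rng = np.random.default_rng(0)
phi, Phi = scaled_random_projection(50, 10, rng)
```

Because the rows of `phi` are orthonormal, `phi` never increases lengths, so `Phi` can stretch any vector by at most the factor $(2/3)\sqrt{D/d}$.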

For any $x \in X$, let $x_0, \ldots, x_n$ be $n+1$ points from the set $\{x' \in X : \|x' - x\| < \rho\}$ such that $\left| \frac{x_i - x_0}{\|x_i - x_0\|} \cdot \frac{x_j - x_0}{\|x_j - x_0\|} \right| \le 1/2n$, for $i \ne j$ (cf. Definition 2). Let $F_x$ be the $D \times n$ matrix whose column vectors form some orthonormal basis of the n-dimensional subspace spanned by the vectors $\{x_i - x_0\}_{i \in [n]}$.

Estimating local contractions. We estimate the contraction caused by $\Phi$ at a small enough neighborhood of $M$ containing the point $x \in X$, by computing the "thin" Singular Value Decomposition (SVD) $U_x \Sigma_x V_x^T$ of the $d \times n$ matrix $\Phi F_x$ and representing the singular values in the conventional descending order. That is, $\Phi F_x = U_x \Sigma_x V_x^T$, and since $\Phi F_x$ is a tall matrix ($n \le d$), we know that the bottom $d - n$ singular values are zero. Thus, we only consider the top $n$ (of $d$) left singular vectors in the SVD (so, $U_x$ is $d \times n$, $\Sigma_x$ is $n \times n$, and $V_x$ is $n \times n$) and $\sigma_x^1 \ge \sigma_x^2 \ge \ldots \ge \sigma_x^n$, where $\sigma_x^i$ is the $i$th largest singular value.

Observe that the singular values $\sigma_x^1, \ldots, \sigma_x^n$ are precisely the distortion amounts in the directions $u_x^1, \ldots, u_x^n$ at $\Phi(x) \in \mathbb{R}^d$ ($[u_x^1, \ldots, u_x^n] = U_x$) when we apply $\Phi$. To see this, consider the direction $w^i := F_x v_x^i$ in the column-span of $F_x$ ($[v_x^1, \ldots, v_x^n] = V_x$). Then $\Phi w^i = (\Phi F_x) v_x^i = \sigma_x^i u_x^i$, which can be interpreted as: $\Phi$ maps the vector $w^i$ in the subspace $F_x$ (in $\mathbb{R}^D$) to the vector $u_x^i$ (in $\mathbb{R}^d$) with the scaling of $\sigma_x^i$.

Note that if $0 < \sigma_x^i \le 1$ (for all $x \in X$ and $1 \le i \le n$), we can define an $n \times d$ correction matrix (corresponding to each $x \in X$) $C^x := S_x U_x^T$, where $S_x$ is a diagonal matrix with $(S_x)_{ii} := \sqrt{(1/\sigma_x^i)^2 - 1}$. We can also write $S_x$ as $(\Sigma_x^{-2} - I)^{1/2}$. The correction matrix $C^x$ will have an effect of stretching the direction $u_x^i$ by the amount $(S_x)_{ii}$ and killing any direction $v$ that is orthogonal to (column-span of) $U_x$.

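Algorithm 1 Compute Corrections $C^x$'s
1: for $x \in X$ (in any order) do
2:   Let $x_0, \ldots, x_n \in \{x' \in X : \|x' - x\| < \rho\}$ be such that $\left| \frac{x_i - x_0}{\|x_i - x_0\|} \cdot \frac{x_j - x_0}{\|x_j - x_0\|} \right| \le 1/2n$ (for $i \ne j$).
3:   Let $F_x$ be a $D \times n$ matrix whose columns form an orthonormal basis of the n-dimensional span of the vectors $\{x_i - x_0\}_{i \in [n]}$.
4:   Let $U_x \Sigma_x V_x^T$ be the "thin" SVD of $\Phi F_x$.
5:   Set $C^x := (\Sigma_x^{-2} - I)^{1/2} U_x^T$.
6: end for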
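Steps 3 to 5 of Algorithm 1 for a single cover point can be sketched in NumPy as below. This is an illustrative sketch under stated assumptions: the function name `correction_matrix` is hypothetical, the neighbor selection of step 2 is assumed to have been done already, and QR is used as one convenient way to obtain the orthonormal basis $F_x$.

```python
import numpy as np

def correction_matrix(Phi, neighbors):
    """Compute C^x = (Sigma^{-2} - I)^{1/2} U^T for one cover point.

    Phi:       d x D matrix of the (scaled) projection.
    neighbors: (n+1) x D array holding x_0, ..., x_n, assumed to already
               satisfy the covering criterion (step 2 is not repeated here).
    """
    neighbors = np.asarray(neighbors, dtype=float)
    diffs = (neighbors[1:] - neighbors[0]).T            # D x n, columns x_i - x_0
    F, _ = np.linalg.qr(diffs)                          # D x n orthonormal basis F_x
    U, sigma, _ = np.linalg.svd(Phi @ F, full_matrices=False)  # thin SVD, U is d x n
    if not np.all((sigma > 0) & (sigma <= 1)):
        raise ValueError("singular values must lie in (0, 1] for a contraction")
    S = np.sqrt(1.0 / sigma**2 - 1.0)                   # diagonal of S_x
    return S[:, None] * U.T                             # n x d, same as diag(S) @ U.T
```

Running this once per $x \in X$ yields the full collection of correction matrices; the explicit check on the singular values mirrors the condition $0 < \sigma_x^i \le 1$ stated in the text.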

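Algorithm 2 Embedding Technique I

Preprocessing Stage: We will first partition the given covering $X$ into disjoint subsets such that no subset contains points that are too close to each other. Let $x_1, \ldots, x_{|X|}$ be the points in $X$ in some arbitrary but fixed order. We can do the partition as follows:

1: Initialize $X^{(1)}, \ldots, X^{(K)}$ as empty sets.
2: for $x_i \in X$ (in any fixed order) do
3:   Let $j$ be the smallest positive integer such that $x_i$ is not within distance $2\rho$ of any element in $X^{(j)}$. That is, the smallest $j$ such that for all $x \in X^{(j)}$, $\|x - x_i\| \ge 2\rho$.
4:   $X^{(j)} \leftarrow X^{(j)} \cup \{x_i\}$.
5: end for

The Embedding: For any $p \in M \subset \mathbb{R}^D$, we embed it in $\mathbb{R}^{d+2nK}$ as follows:

1: Let $t = \Phi(p)$.
2: Define $\Psi(t) := (t, \Psi_{1,\sin}(t), \Psi_{1,\cos}(t), \ldots, \Psi_{K,\sin}(t), \Psi_{K,\cos}(t))$ where $\Psi_{j,\sin}(t) := (\psi^1_{j,\sin}(t), \ldots, \psi^n_{j,\sin}(t))$ and $\Psi_{j,\cos}(t) := (\psi^1_{j,\cos}(t), \ldots, \psi^n_{j,\cos}(t))$. The individual terms are given by

$$\psi^i_{j,\sin}(t) := \sum_{x \in X^{(j)}} \left( \sqrt{\Lambda_{\Phi(x)}(t)} / \omega \right) \sin(\omega (C^x t)_i), \qquad \psi^i_{j,\cos}(t) := \sum_{x \in X^{(j)}} \left( \sqrt{\Lambda_{\Phi(x)}(t)} / \omega \right) \cos(\omega (C^x t)_i),$$

for $i = 1, \ldots, n$; $j = 1, \ldots, K$, where $\Lambda_a(b)$ is the normalized version of the bump function $\lambda_a(b) = \mathbb{1}_{\{\|a - b\| < \rho\}} \exp\left(-1/(1 - \|a - b\|^2/\rho^2)\right)$ (cf. Section 5.2.2).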
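The preprocessing stage of Embedding Technique I is a greedy partition, which can be sketched as follows. The function name `partition_cover` is hypothetical, and unlike the pseudocode (which initializes $K$ empty sets up front) this sketch creates subsets lazily as they are needed; the resulting assignment is the same.

```python
import numpy as np

def partition_cover(X, rho):
    """Greedily split the rows of X into subsets X^(1), X^(2), ... such that
    any two points in the same subset are at least 2*rho apart.

    Returns a list of index lists; subsets[j] holds the row indices of X^(j+1).
    """
    subsets = []
    for i, x in enumerate(X):
        for subset in subsets:
            # x_i joins the first subset in which it is far from every member
            if all(np.linalg.norm(X[k] - x) >= 2 * rho for k in subset):
                subset.append(i)
                break
        else:
            subsets.append([i])       # no existing subset fits: open a new one
    return subsets

# Four points on a line with unit spacing and rho = 0.75 (so 2*rho = 1.5).
X_demo = np.array([[0.0], [1.0], [2.0], [3.0]])
subsets_demo = partition_cover(X_demo, rho=0.75)   # [[0, 2], [1, 3]]
```

Keeping the points within a subset at least $2\rho$ apart means the bump functions of width $\rho$ centered at them have disjoint supports, so their corrections can share one block of coordinates without interfering.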

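Algorithm 3 Embedding Technique II

The Embedding: Let $x_1, \ldots, x_{|X|}$ be the points in $X$ in some arbitrary but fixed order. Now, for any point $p \in M \subset \mathbb{R}^D$, we embed it in $\mathbb{R}^{2d+3}$ as follows:

1: Let $t = \Phi(p)$.
2: Define $\Psi_{0,n}(t) := (t, \underbrace{0, \ldots, 0}_{d+3})$.
3: for $i = 1, \ldots, |X|$ do
4:   Define $\Psi_{i,0} := \Psi_{i-1,n}$.
5:   for $j = 1, \ldots, n$ do
6:     Let $\eta_{i,j}(t)$ and $\nu_{i,j}(t)$ be two mutually orthogonal unit vectors normal to $\Psi_{i,j-1}(\Phi M)$ at $\Psi_{i,j-1}(t)$.
7:     Define
$$\Psi_{i,j}(t) := \Psi_{i,j-1}(t) + \eta_{i,j}(t) \left( \frac{\sqrt{\Lambda_{\Phi(x_i)}(t)}}{\omega_{i,j}} \right) \sin(\omega_{i,j} (C^{x_i} t)_j) + \nu_{i,j}(t) \left( \frac{\sqrt{\Lambda_{\Phi(x_i)}(t)}}{\omega_{i,j}} \right) \cos(\omega_{i,j} (C^{x_i} t)_j),$$
     where $\Lambda_a(b)$ is the normalized version of the bump function $\lambda_a(b) = \mathbb{1}_{\{\|a - b\| < \rho\}} \exp\left(-1/(1 - \|a - b\|^2/\rho^2)\right)$ (cf. Section 5.2.2).
8:   end for
9: end for

Remark 3 The quantities $\omega > 0$ (for Embedding I) and $\omega_{i,j} > 0$ (for Embedding II) are free parameters controlling the frequency of the sinusoidal terms.

Remark 4 If $\rho \le \tau/4$, the number of subsets (i.e. $K$) produced by Embedding I is at most $\alpha 2^{cn}$ for an $\alpha$-bounded $(\rho, \delta)$-cover $X$ of $M$ (where $c \le 4$). See Appendix A.3 for details.

Remark 5 The success of Embedding II crucially depends upon finding a pair of normal unit vectors $\eta$ and $\nu$ in each iteration; we discuss how to approximate these in Appendix A.9.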

We shall see that for appropriate choice of $d$, $\rho$, $\delta$ and $\omega$ (or $\omega_{i,j}$), our algorithm yields an approximate isometric embedding of $M$.

4.1 Main Result

Theorem 3 Let $M \subset \mathbb{R}^D$ be a compact n-manifold with volume $V$ and condition number $1/\tau$ (as above). Let $d = \Omega(n + \ln(V/\tau^n))$ be the target dimension of the initial random projection mapping such that $d \le D$. For any $0 < \epsilon \le 1$, let $\rho \le (\tau d/D)(\epsilon/350)^2$, $\delta \le (d/D)(\epsilon/250)^2$, and let $X \subset M$ be an $\alpha$-bounded $(\rho, \delta)$-cover of $M$. Now, let

i. $N_I \subset \mathbb{R}^{d + 2n\alpha 2^{cn}}$ be the embedding of $M$ returned by Algorithm I (where $c \le 4$),
ii. $N_{II} \subset \mathbb{R}^{2d+3}$ be the embedding of $M$ returned by Algorithm II.

Then, with probability at least $1 - 1/\mathrm{poly}(n)$ over the choice of the initial random projection, for all $p, q \in M$ and their corresponding mappings $p_I, q_I \in N_I$ and $p_{II}, q_{II} \in N_{II}$, we have

i. $(1 - \epsilon) D_G(p, q) \le D_G(p_I, q_I) \le (1 + \epsilon) D_G(p, q)$,
ii. $(1 - \epsilon) D_G(p, q) \le D_G(p_{II}, q_{II}) \le (1 + \epsilon) D_G(p, q)$.

5 ProofOur goal is to show that the two proposed embeddings approximately preserve the length of all geodesiccurves. Now, since the length of any given curve : [a, b]M is given by b

a(s)ds, it is vital to study

how our embeddings modify the length of the tangent vectors at any point p M .In order to discuss tangent vectors, we need to introduce the notion of a tangent space TpM at a particular

point p M . Consider any smooth curve c : (, )M such that c(0) = p, then we know that c(0) is thevector tangent to c at p. The collection of all such vectors formed by all such curves is a well defined vector

6

-

pv

M

TpM

TF (p)F (M)

F (M)F (p) (DF )p(v)

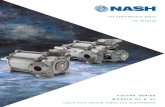

Figure 3: Effects of applying a smooth map F on various quantities of interest. Left: A manifold M containing pointp. v is a vector tangent to M at p. Right: Mapping of M under F . Point p maps to F (p), tangent vector v maps to(DF )p(v).

space (with origin at p), called the tangent space TpM . In what follows, we will fix an arbitrary point p Mand a tangent vector v TpM and analyze how the various steps of the algorithm modify the length of v.

Let $\Phi$ be the initial (scaled) random projection map (from $\mathbb{R}^D$ to $\mathbb{R}^d$) that may contract distances on $M$ by various amounts, and let $\Psi$ be the subsequent correction map that attempts to restore these distances (as defined in Step 2 for Embedding I or as a sequence of maps in Step 7 for Embedding II). To get a firm footing for our analysis, we need to study how $\Phi$ and $\Psi$ modify the tangent vector $v$. It is well known from differential geometry that for any smooth map $F : M \to N$ that maps a manifold $M \subset \mathbb{R}^k$ to a manifold $N \subset \mathbb{R}^{k'}$, there exists a linear map $(DF)_p : T_pM \to T_{F(p)}N$, known as the derivative map or the pushforward (at $p$), that maps tangent vectors incident at $p$ in $M$ to tangent vectors incident at $F(p)$ in $N$. To see this, consider a vector $u$ tangent to $M$ at some point $p$. Then, there is some smooth curve $c : (-\epsilon, \epsilon) \to M$ such that $c(0) = p$ and $c'(0) = u$. By mapping the curve $c$ into $N$, i.e. $F(c(t))$, we see that $F(c(t))$ includes the point $F(p)$ at $t = 0$. Now, by calculus, we know that the derivative at this point, $\frac{dF(c(t))}{dt}\Big|_{t=0}$, is the directional derivative $(\nabla F)_p(u)$, where $(\nabla F)_p$ is a $k' \times k$ matrix called the gradient (at $p$). The quantity $(\nabla F)_p$ is precisely the matrix representation of this linear "pushforward" map that sends tangent vectors of $M$ (at $p$) to the corresponding tangent vectors of $N$ (at $F(p)$). Figure 3 depicts how these quantities are affected by applying $F$. Also note that if $F$ is linear then $DF = F$.
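The identity between the derivative of $F(c(t))$ at $t = 0$ and the matrix-vector product $(\nabla F)_p(u)$ can be checked numerically. The sketch below is illustrative only: the map `F` (a paraboloid graph from $\mathbb{R}^2$ to $\mathbb{R}^3$), the straight-line curve, and all function names are hypothetical choices, not objects from the paper.

```python
import numpy as np

def F(x):
    # Hypothetical smooth map R^2 -> R^3: the graph of a paraboloid.
    return np.array([x[0], x[1], x[0]**2 + x[1]**2])

def jacobian_F(x):
    # The 3 x 2 gradient matrix of F at x (k' = 3, k = 2).
    return np.array([[1.0, 0.0],
                     [0.0, 1.0],
                     [2.0 * x[0], 2.0 * x[1]]])

def curve(t, p, u):
    # A curve with c(0) = p and c'(0) = u; a straight line suffices here.
    return p + t * u

def pushforward_fd(p, u, h=1e-6):
    # Central finite difference of t -> F(c(t)) at t = 0.
    return (F(curve(h, p, u)) - F(curve(-h, p, u))) / (2.0 * h)

p = np.array([1.0, 2.0])
u = np.array([0.5, -1.0])
analytic = jacobian_F(p) @ u          # (0.5, -1.0, -3.0)
numeric = pushforward_fd(p, u)        # agrees with `analytic` up to rounding
```

Here the domain is all of $\mathbb{R}^2$, so every vector $u$ is tangent; for a curved manifold one would restrict $u$ to $T_pM$.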

Observe that since pushforward maps are linear, without loss of generality we can assume that $v$ has unit length.

A quick roadmap for the proof. In the next three sections, we take a brief detour to study the effects of applying $\Phi$, applying $\Psi$ for Algorithm I, and applying $\Psi$ for Algorithm II separately. This will give us the necessary tools to analyze the combined effect of applying $\Psi \circ \Phi$ on $v$ (Section 5.4). We will conclude by relating tangent vectors to lengths of curves, showing approximate isometry (Section 5.5). Figure 4 provides a quick sketch of our two-stage mapping with the quantities of interest. We defer the proofs of all the supporting lemmas to the Appendix.

5.1 Effects of Applying $\Phi$

It is well known as an application of Sard's theorem from differential topology (see e.g. [Mil72]) that almost every smooth mapping of an n-dimensional manifold into $\mathbb{R}^{2n+1}$ is a differential structure preserving embedding of $M$. In particular, a projection onto a random subspace (of dimension $2n+1$) constitutes such an embedding with probability 1.

This translates to stating that a random projection into $\mathbb{R}^{2n+1}$ is enough to guarantee that $D\Phi$ doesn't collapse the lengths of non-zero tangent vectors. However, due to computational issues, we additionally require that the lengths are bounded away from zero (that is, a statement of the form $\|(D\Phi)_p(v)\| \ge \Omega(1)\|v\|$ for all $v$ tangent to $M$ at all points $p$).

We can thus appeal to the random projections result by Clarkson [Cla07] (with the isometry parameter set to a constant, say 1/4) to ensure this condition. In particular, the following holds.

Lemma 4 Let $M \subset \mathbb{R}^D$ be a smooth n-manifold (as defined above) with volume $V$ and condition number $1/\tau$. Let $R$ be a random projection matrix that maps points from $\mathbb{R}^D$ into a random subspace of dimension $d$ ($d \le D$). Define $\Phi := (2/3)(\sqrt{D/d})R$ as a scaled projection mapping. If $d = \Omega(n + \ln(V/\tau^n))$, then with probability at least $1 - 1/\mathrm{poly}(n)$ over the choice of the random projection matrix, we have
(a) For all $p \in M$ and all tangent vectors $v \in T_pM$, $(1/2)\|v\| \le \|(D\Phi)_p(v)\| \le (5/6)\|v\|$.
(b) For all $p, q \in M$, $(1/2)\|p - q\| \le \|\Phi p - \Phi q\| \le (5/6)\|p - q\|$.
(c) For all $x \in \mathbb{R}^D$, $\|\Phi x\| \le (2/3)(\sqrt{D/d})\|x\|$.

Figure 4: Two-stage mapping of our embedding technique. Left: Underlying manifold $M \subset \mathbb{R}^D$ with the quantities of interest: a fixed point $p$ and a fixed unit vector $v$ tangent to $M$ at $p$. Center: A (scaled) linear projection of $M$ into a random subspace of $d$ dimensions. The point $p$ maps to $\Phi p$ and the tangent vector $v$ maps to $u := (D\Phi)_p(v) = \Phi v$. The length of $v$ contracts to $\|u\| \le 1$. Right: Correction of $\Phi M$ via a non-linear mapping $\Psi$ into $\mathbb{R}^{d+k}$. We have $k = O(\alpha 2^{cn})$ for correction technique I, and $k = d + 3$ for correction technique II (see also Section 4). Our goal is to show that $\Psi$ stretches the length of the contracted $v$ (i.e. $u$) back to approximately its original length, that is, $\|(D\Psi)_t(u)\| \approx \|v\| = 1$ at $t = \Phi p$.

In what follows, we assume that $\Phi$ is such a scaled random projection map. Then, a bound on the length of tangent vectors also gives us a bound on the spectrum of $\Phi F_x$ (recall the definition of $F_x$ from Section 4).

Corollary 5 Let $\Phi$, $F_x$ and $n$ be as described above (recall that $x \in X$, where $X$ forms a bounded $(\rho, \delta)$-cover of $M$). Let $\sigma_x^i$ represent the $i$th largest singular value of the matrix $\Phi F_x$. Then, for $\delta \le d/32D$, we have $1/4 \le \sigma_x^n \le \sigma_x^1 \le 1$ (for all $x \in X$).

We will be using these facts in our discussion below in Section 5.4.

5.2 Effects of Applying $\Psi$ (Algorithm I)

As discussed in Section 2.1, the goal of $\Psi$ is to restore the contraction induced by $\Phi$ on $M$. To understand the action of $\Psi$ on a tangent vector better, we will first consider a simple case of flat manifolds (Section 5.2.1), and then develop the general case (Section 5.2.2).

5.2.1 Warm-up: flat M

Let's first consider applying a simple one-dimensional spiral map $\bar{\Psi} : \mathbb{R} \to \mathbb{R}^3$ given by $t \mapsto (t, \sin(Ct), \cos(Ct))$, where $t \in I = (-\epsilon, \epsilon)$. Let $v$ be a unit vector tangent to $I$ (at, say, 0). Then note that

$$(D\bar{\Psi})_{t=0}(v) = \frac{d\bar{\Psi}}{dt}\Big|_{t=0} = (1, C\cos(Ct), -C\sin(Ct))\Big|_{t=0}.$$

Thus, applying $\bar{\Psi}$ stretches the length of $v$ from 1 to $\left\|(1, C\cos(Ct), -C\sin(Ct))|_{t=0}\right\| = \sqrt{1 + C^2}$. Notice the advantage of applying the spiral map in computing the lengths: the sine and cosine terms combine together to yield a simple expression for the size of the stretch. In particular, if we want to stretch the length of $v$ from 1 to, say, $L \ge 1$, then we simply need $C = \sqrt{L^2 - 1}$ (notice the similarity between this expression and our expression for the diagonal component $S_x$ of the correction matrix $C^x$ in Section 4).
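The stretch factor of the one-dimensional spiral can be verified directly. The following minimal sketch uses hypothetical function names (`spiral`, `spiral_derivative`, `correction_for_stretch`) and simply evaluates the formulas from the warm-up.

```python
import numpy as np

def spiral(t, C):
    # One-dimensional spiral map t -> (t, sin(Ct), cos(Ct)).
    return np.array([t, np.sin(C * t), np.cos(C * t)])

def spiral_derivative(t, C):
    # Derivative of the spiral map with respect to t.
    return np.array([1.0, C * np.cos(C * t), -C * np.sin(C * t)])

def correction_for_stretch(L):
    # Value of C that stretches a unit tangent vector to length L >= 1.
    return np.sqrt(L**2 - 1.0)

C = correction_for_stretch(2.0)                        # sqrt(3)
stretch = np.linalg.norm(spiral_derivative(0.0, C))    # sqrt(1 + C^2) = 2
```

Since $\cos^2 + \sin^2 = 1$, the stretch equals $\sqrt{1 + C^2}$ at every $t$, not only at $t = 0$, which is what makes the spiral convenient for length corrections.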

We can generalize this to the case of an n-dimensional flat manifold (a section of an n-flat) by considering a map similar to $\bar{\Psi}$. For concreteness, let $F$ be a $D \times n$ matrix whose column vectors form some orthonormal basis of the n-flat manifold (in the original space $\mathbb{R}^D$). Let $U \Sigma V^T$ be the "thin" SVD of $\Phi F$. Then $FV$ forms an orthonormal basis of the n-flat manifold (in $\mathbb{R}^D$) that maps to an orthogonal basis $U\Sigma$ of the projected n-flat manifold (in $\mathbb{R}^d$) via the contraction mapping $\Phi$. Define the spiral map $\bar{\Psi} : \mathbb{R}^d \to \mathbb{R}^{d+2n}$ in this case as follows: $\bar{\Psi}(t) := (t, \bar{\Psi}_{\sin}(t), \bar{\Psi}_{\cos}(t))$, with $\bar{\Psi}_{\sin}(t) := (\bar{\psi}^1_{\sin}(t), \ldots, \bar{\psi}^n_{\sin}(t))$ and $\bar{\Psi}_{\cos}(t) := (\bar{\psi}^1_{\cos}(t), \ldots, \bar{\psi}^n_{\cos}(t))$. The individual terms are given as

$$\bar{\psi}^i_{\sin}(t) := \sin((Ct)_i), \qquad \bar{\psi}^i_{\cos}(t) := \cos((Ct)_i), \qquad i = 1, \ldots, n,$$

where $C$ is now an $n \times d$ correction matrix. It turns out that setting $C = (\Sigma^{-2} - I)^{1/2} U^T$ precisely restores the contraction caused by $\Phi$ to the tangent vectors (notice the similarity between this expression and the correction matrix in the general case $C^x$ in Section 4, and our motivating intuition in Section 2.1). To see this, let $v$ be a vector tangent to the n-flat at some point $p$ (in $\mathbb{R}^D$). We will represent $v$ in the $FV$ basis (that is, $v = \sum_i \alpha_i (F v^i)$ where $[F v^1, \ldots, F v^n] = FV$). Note that $\|\Phi v\|^2 = \|\sum_i \alpha_i \Phi F v^i\|^2 = \|\sum_i \alpha_i \sigma^i u^i\|^2 = \sum_i (\alpha_i \sigma^i)^2$ (where $\sigma^i$ are the individual singular values of $\Sigma$ and $u^i$ are the left singular vectors forming the columns of $U$). Now, let $w$ be the pushforward of $v$ (that is, $w = (D\Phi)_p(v) = \Phi v = \sum_i w_i e^i$, where $\{e^i\}_i$ forms the standard basis of $\mathbb{R}^d$). Now, since $D\bar{\Psi}$ is linear, we have $\|(D\bar{\Psi})_{\Phi(p)}(w)\|^2 = \|\sum_i w_i (D\bar{\Psi})_{\Phi(p)}(e^i)\|^2$, where $(D\bar{\Psi})_{\Phi(p)}(e^i) = \frac{d\bar{\Psi}}{dt^i}\Big|_{t=\Phi(p)} = \left(\frac{dt}{dt^i}, \frac{d\bar{\Psi}_{\sin}(t)}{dt^i}, \frac{d\bar{\Psi}_{\cos}(t)}{dt^i}\right)\Big|_{t=\Phi(p)}$. The individual components are given by

$$\frac{d\bar{\psi}^k_{\sin}(t)}{dt^i} = +\cos((Ct)_k)\, C_{k,i}, \qquad \frac{d\bar{\psi}^k_{\cos}(t)}{dt^i} = -\sin((Ct)_k)\, C_{k,i}, \qquad k = 1, \ldots, n;\ i = 1, \ldots, d.$$

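By algebra, we see that

$$
\begin{aligned}
\|(D(\bar{\Psi} \circ \Phi))_p(v)\|^2 &= \|(D\bar{\Psi})_{\Phi(p)}((D\Phi)_p(v))\|^2 = \|(D\bar{\Psi})_{\Phi(p)}(w)\|^2 \\
&= \sum_{k=1}^{d} w_k^2 + \sum_{k=1}^{n} \cos^2((C\Phi(p))_k)\,((C\Phi v)_k)^2 + \sum_{k=1}^{n} \sin^2((C\Phi(p))_k)\,((C\Phi v)_k)^2 \\
&= \sum_{k=1}^{d} w_k^2 + \sum_{k=1}^{n} ((C\Phi v)_k)^2 = \|\Phi v\|^2 + \|C\Phi v\|^2 = \|\Phi v\|^2 + (\Phi v)^T C^T C (\Phi v) \\
&= \|\Phi v\|^2 + \Big(\sum_i \alpha_i \sigma^i u^i\Big)^T U (\Sigma^{-2} - I) U^T \Big(\sum_i \alpha_i \sigma^i u^i\Big) \\
&= \|\Phi v\|^2 + [\alpha_1 \sigma^1, \ldots, \alpha_n \sigma^n]\, (\Sigma^{-2} - I)\, [\alpha_1 \sigma^1, \ldots, \alpha_n \sigma^n]^T \\
&= \|\Phi v\|^2 + \Big(\sum_i \alpha_i^2 - \sum_i (\alpha_i \sigma^i)^2\Big) = \|\Phi v\|^2 + \|v\|^2 - \|\Phi v\|^2 = \|v\|^2.
\end{aligned}
$$

In other words, our non-linear correction map $\bar{\Psi}$ can exactly restore the contraction caused by $\Phi$ for any vector tangent to an n-flat manifold.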
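The flat-case identity can be checked numerically by assembling the Jacobian of the n-dimensional spiral map from the component derivatives given above. This is a sketch under stated assumptions: the function names are hypothetical, and the contraction used in the example is a fixed diagonal matrix (chosen so that all singular values lie in $(0, 1]$) rather than a random projection.

```python
import numpy as np

def flat_correction(Phi, F):
    # C = (Sigma^{-2} - I)^{1/2} U^T from the thin SVD of Phi F.
    U, sigma, _ = np.linalg.svd(Phi @ F, full_matrices=False)
    return np.sqrt(1.0 / sigma**2 - 1.0)[:, None] * U.T

def jacobian_spiral(t, C):
    """Jacobian of t -> (t, sin(Ct), cos(Ct)) at t; shape (d + 2n, d)."""
    Ct = C @ t
    d = t.shape[0]
    return np.vstack([np.eye(d),
                      np.cos(Ct)[:, None] * C,      # d sin((Ct)_k) / dt^i
                      -np.sin(Ct)[:, None] * C])    # d cos((Ct)_k) / dt^i

# Hypothetical contraction R^6 -> R^4 and a 2-flat in R^6.
Phi = np.hstack([np.diag([0.9, 0.7, 0.5, 0.3]), np.zeros((4, 2))])
A = np.array([[1., 2.], [0., 1.], [1., 0.], [2., 1.], [1., 1.], [0., 3.]])
F, _ = np.linalg.qr(A)                   # orthonormal basis of the flat
C = flat_correction(Phi, F)

p = F @ np.array([0.4, -1.3]) + 0.2      # a point of an affine 2-flat
v = F @ np.array([0.6, 0.8])             # unit tangent vector to the flat
contracted = np.linalg.norm(Phi @ v)     # strictly less than 1
restored = np.linalg.norm(jacobian_spiral(Phi @ p, C) @ (Phi @ v))  # equals 1
```

The restored length does not depend on the base point $p$, in line with the cancellation of the $\cos^2$ and $\sin^2$ terms in the derivation.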

In the fully general case, the situation gets slightly more complicated since we need to apply different spiral maps, each corresponding to a different size correction at different locations on the contracted manifold. Recall that we localize the effect of a correction by applying the so-called "bump" function (details below). These bump functions, although important for localization, have an undesirable effect on the stretched length of the tangent vector. Thus, to ameliorate their effect on the length of the resulting tangent vector, we control their contribution via a free parameter $\omega$.

5.2.2 The General Case

More specifically, Embedding Technique I restores the contraction induced by $\Phi$ by applying a non-linear map $\Psi(t) := (t, \Psi_{1,\sin}(t), \Psi_{1,\cos}(t), \ldots, \Psi_{K,\sin}(t), \Psi_{K,\cos}(t))$ (recall that $K$ is the number of subsets we decompose $X$ into; cf. the description of Embedding I in Section 4), with $\Psi_{j,\sin}(t) := (\psi^1_{j,\sin}(t), \ldots, \psi^n_{j,\sin}(t))$ and $\Psi_{j,\cos}(t) := (\psi^1_{j,\cos}(t), \ldots, \psi^n_{j,\cos}(t))$. The individual terms are given as

\[ \psi^i_{j,\sin}(t) := \sum_{x\in X^{(j)}} \big(\sqrt{\Lambda_{\Phi(x)}(t)}/\omega\big) \sin(\omega(C^x t)_i), \qquad \psi^i_{j,\cos}(t) := \sum_{x\in X^{(j)}} \big(\sqrt{\Lambda_{\Phi(x)}(t)}/\omega\big) \cos(\omega(C^x t)_i), \qquad i = 1,\ldots,n;\ j = 1,\ldots,K, \]

where the $C^x$'s are the correction amounts for different locations $x$ on the manifold, $\omega > 0$ controls the frequency (cf. Section 4), and $\Lambda_{\Phi(x)}(t)$ is defined to be $\lambda_{\Phi(x)}(t)/\sum_{q\in X} \lambda_{\Phi(q)}(t)$, with

\[ \lambda_{\Phi(x)}(t) := \begin{cases} \exp\big(-1/(1 - \|t - \Phi(x)\|^2/\rho^2)\big) & \text{if } \|t - \Phi(x)\| < \rho, \\ 0 & \text{otherwise.} \end{cases} \]

$\lambda$ is a classic example of a bump function (see Figure 5 middle). It is a smooth function with compact support. Its applicability arises from the fact that it can be made "to specifications". That is, it can be made to vanish outside any interval of our choice. Here we exploit this property to localize the effect of our corrections. The normalization of $\lambda$ (the function $\Lambda$) creates the so-called smooth partition of unity that helps to vary smoothly between the spirals applied at different regions of $M$.
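The bump function and its normalization can be sketched in a few lines. This is a minimal illustration under stated assumptions: `centers` stands in for the projected cover points $\Phi(x)$, and the names `bump` and `partition_of_unity` are hypothetical helpers, not taken from the paper.

```python
import math

def bump(t, center, rho):
    """lambda_center(t) = exp(-1/(1 - |t - center|^2 / rho^2)) inside the rho-ball, else 0."""
    r2 = sum((a - b) ** 2 for a, b in zip(t, center)) / rho ** 2
    return math.exp(-1.0 / (1.0 - r2)) if r2 < 1.0 else 0.0

def partition_of_unity(t, centers, rho):
    """Normalized bumps Lambda_x(t) = lambda_x(t) / sum_q lambda_q(t)."""
    raw = [bump(t, c, rho) for c in centers]
    total = sum(raw)
    if total == 0.0:
        raise ValueError("t is not within rho of any center")
    return [w / total for w in raw]

# Hypothetical centers (standing in for Phi(x), x in X) in R^2.
centers = [(0.0, 0.0), (0.5, 0.0), (3.0, 3.0)]
weights = partition_of_unity((0.2, 0.1), centers, rho=1.0)
```

The weights sum to one, the far-away center receives weight exactly zero (localization), and the nearest center receives the largest weight.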

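Since any tangent vector in $\mathbb{R}^d$ can be expressed in terms of the basis vectors, it suffices to study how $D\Psi$ acts on the standard basis $\{e^i\}$. Note that

\[ (D\Psi)_t(e^i) = \Big(\frac{dt}{dt^i}, \frac{d\Psi_{1,\sin}(t)}{dt^i}, \frac{d\Psi_{1,\cos}(t)}{dt^i}, \ldots, \frac{d\Psi_{K,\sin}(t)}{dt^i}, \frac{d\Psi_{K,\cos}(t)}{dt^i}\Big)\Big|_t, \]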

Figure 5: Effects of applying a bump function on a spiral mapping. Left: Spiral mapping $t \mapsto (t, \sin(t), \cos(t))$. Middle: Bump function $\lambda_x$ (plotted as $\lambda_x(t)$ against $|t-x|/\rho$): a smooth function with compact support. The parameter $x$ controls the location while $\rho$ controls the width. Right: The combined effect: $t \mapsto (t, \lambda_x(t)\sin(t), \lambda_x(t)\cos(t))$. Note that the effect of the spiral is localized while keeping the mapping smooth.

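where

\begin{align*}
\frac{d\psi^k_{j,\sin}(t)}{dt^i} &= \sum_{x\in X^{(j)}} \frac{1}{\omega}\Big(\sin(\omega(C^x t)_k)\,\frac{d\Lambda^{1/2}_{\Phi(x)}(t)}{dt^i}\Big) + \sqrt{\Lambda_{\Phi(x)}(t)}\,\cos(\omega(C^x t)_k)\, C^x_{k,i}, \\
\frac{d\psi^k_{j,\cos}(t)}{dt^i} &= \sum_{x\in X^{(j)}} \frac{1}{\omega}\Big(\cos(\omega(C^x t)_k)\,\frac{d\Lambda^{1/2}_{\Phi(x)}(t)}{dt^i}\Big) - \sqrt{\Lambda_{\Phi(x)}(t)}\,\sin(\omega(C^x t)_k)\, C^x_{k,i},
\end{align*}

for $k = 1,\ldots,n$; $i = 1,\ldots,d$; $j = 1,\ldots,K$.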

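One can now observe the advantage of having the term $\omega$. By picking $\omega$ sufficiently large, we can make the first part of the expression sufficiently small. Now, for any tangent vector $u = \sum_i u_i e^i$ such that $\|u\| \le 1$, we have (by algebra)

\begin{align*}
\|(D\Psi)_t(u)\|^2 &= \Big\|\sum_i u_i (D\Psi)_t(e^i)\Big\|^2 \\
&= \sum_{k=1}^{d} u_k^2 + \sum_{k=1}^{n}\sum_{j=1}^{K} \Big[\sum_{x\in X^{(j)}} \big(A^{k,x}_{\sin}(t)/\omega\big) + \sqrt{\Lambda_{\Phi(x)}(t)}\,\cos(\omega(C^x t)_k)\,(C^x u)_k\Big]^2 \\
&\qquad\qquad + \Big[\sum_{x\in X^{(j)}} \big(A^{k,x}_{\cos}(t)/\omega\big) - \sqrt{\Lambda_{\Phi(x)}(t)}\,\sin(\omega(C^x t)_k)\,(C^x u)_k\Big]^2, \tag{1}
\end{align*}

where $A^{k,x}_{\sin}(t) := \sum_i u_i \sin(\omega(C^x t)_k)\big(d\Lambda^{1/2}_{\Phi(x)}(t)/dt^i\big)$ and $A^{k,x}_{\cos}(t) := \sum_i u_i \cos(\omega(C^x t)_k)\big(d\Lambda^{1/2}_{\Phi(x)}(t)/dt^i\big)$.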

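We can further simplify Eq. (1) and get

Lemma 6 Let $t$ be any point in $\Phi(M)$ and $u$ be any vector tangent to $\Phi(M)$ at $t$ such that $\|u\| \le 1$. Let $\epsilon$ be the isometry parameter chosen in Theorem 3. Pick $\omega \ge \Omega(n\alpha^2 9^n \sqrt{d}/\rho\epsilon)$, then

\[ \|(D\Psi)_t(u)\|^2 = \|u\|^2 + \sum_{x\in X} \Lambda_{\Phi(x)}(t) \sum_{k=1}^{n} (C^x u)_k^2 + \zeta, \tag{2} \]

where $|\zeta| \le \epsilon/2$.

We will use this derivation of $\|(D\Psi)_t(u)\|^2$ to study the combined effect of $\Psi\circ\Phi$ on $M$ in Section 5.4.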
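The role of the frequency parameter can be seen numerically in a toy instance of Eq. (2). The sketch below assumes $n = d = 1$, two hypothetical projected cover points with overlapping bumps (placed in separate coordinate pairs, as Embedding I would do by assigning them to different subsets $X^{(j)}$), and scalar correction amounts; all concrete numbers are made up for illustration. In this setting the residual works out to $\zeta = \sum_x (d\Lambda_x^{1/2}/dt)^2/\omega^2$, so it should shrink by a factor of about 100 each time $\omega$ grows tenfold.

```python
import math

RHO = 1.0
CENTERS = [0.0, 0.6]   # hypothetical images Phi(x) of two cover points (d = 1)
CORR = [1.5, 0.8]      # hypothetical correction amounts C^x (scalars when n = d = 1)

def bump(t, x):
    r2 = ((t - x) / RHO) ** 2
    return math.exp(-1.0 / (1.0 - r2)) if r2 < 1.0 else 0.0

def weights(t):
    """Normalized bumps Lambda_x(t) (smooth partition of unity)."""
    raw = [bump(t, x) for x in CENTERS]
    s = sum(raw)
    return [w / s for w in raw]

def psi(t, omega):
    """(t, sqrt(L_x)/omega * sin(omega C^x t), sqrt(L_x)/omega * cos(omega C^x t), ...)."""
    out = [t]
    for lam, c in zip(weights(t), CORR):
        amp = math.sqrt(lam) / omega
        out += [amp * math.sin(omega * c * t), amp * math.cos(omega * c * t)]
    return out

def squared_speed(t, omega, h=1e-6):
    """|DPsi_t(u)|^2 for u = 1, via a central difference."""
    a, b = psi(t + h, omega), psi(t - h, omega)
    return sum(((x - y) / (2 * h)) ** 2 for x, y in zip(a, b))

def zeta(t, omega):
    """Deviation of |DPsi_t(u)|^2 from |u|^2 + sum_x Lambda_x(t) (C^x u)^2."""
    target = 1.0 + sum(lam * c * c for lam, c in zip(weights(t), CORR))
    return squared_speed(t, omega) - target

errors = {omega: abs(zeta(0.25, omega)) for omega in (1.0, 10.0, 100.0)}
```

Larger `omega` buys a smaller residual at the cost of a more rapidly oscillating map, which is the trade-off the lemma quantifies.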

5.3 Effects of Applying $\Psi$ (Algorithm II)

The goal of the second algorithm is to apply the spiralling corrections while using the coordinates more economically. We achieve this goal by applying them sequentially in the same embedding space (rather than simultaneously by making use of extra $2nK$ coordinates as done in the first algorithm); see also [Nas54]. Since all the corrections will be sharing the same coordinate space, one needs to keep track of a pair of normal vectors in order to prevent interference among the different local corrections.

More specifically, $\Psi : \mathbb{R}^d \to \mathbb{R}^{2d+3}$ (in Algorithm II) is defined recursively as $\Psi := \Psi_{|X|,n}$ such that (see also Embedding II in Section 4)

\[ \Psi_{i,j}(t) := \Psi_{i,j-1}(t) + \eta_{i,j}(t)\,\frac{\sqrt{\Lambda_{\Phi(x_i)}(t)}}{\omega_{i,j}}\,\sin(\omega_{i,j}(C^{x_i} t)_j) + \nu_{i,j}(t)\,\frac{\sqrt{\Lambda_{\Phi(x_i)}(t)}}{\omega_{i,j}}\,\cos(\omega_{i,j}(C^{x_i} t)_j), \]

where $\Psi_{i,0}(t) := \Psi_{i-1,n}(t)$, and the base function $\Psi_{0,n}(t)$ is given as $t \mapsto (t, 0, \ldots, 0)$ (with $d+3$ trailing zeros). $\eta_{i,j}(t)$ and $\nu_{i,j}(t)$ are mutually orthogonal unit vectors that are approximately normal to $\Psi_{i,j-1}(\Phi M)$ at $\Psi_{i,j-1}(t)$. In this section we assume that the normals $\eta$ and $\nu$ have the following properties:


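- $|\eta_{i,j}(t) \cdot v| \le \epsilon_0$ and $|\nu_{i,j}(t) \cdot v| \le \epsilon_0$ for all unit-length $v$ tangent to $\Psi_{i,j-1}(\Phi M)$ at $\Psi_{i,j-1}(t)$. (quality of normal approximation)

- For all $1 \le l \le d$, we have $\|d\eta_{i,j}(t)/dt^l\| \le K_{i,j}$ and $\|d\nu_{i,j}(t)/dt^l\| \le K_{i,j}$. (bounded directional derivatives)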

We refer the reader to Section A.9 for details on how to estimate such normals.

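Now, as before, representing a tangent vector $u = \sum_l u_l e^l$ (such that $\|u\|^2 \le 1$) in terms of its basis vectors, it suffices to study how $D\Psi$ acts on basis vectors. Observe that $(D\Psi_{i,j})_t(e^l) = \big(\frac{d\Psi_{i,j}(t)}{dt^l}\big)_{k=1}^{2d+3}\big|_t$, with the $k$th component given as

\begin{align*}
&\Big(\frac{d\Psi_{i,j-1}(t)}{dt^l}\Big)_k + (\eta_{i,j}(t))_k \sqrt{\Lambda_{\Phi(x_i)}(t)}\, C^{x_i}_{j,l}\, B^{i,j}_{\cos}(t) - (\nu_{i,j}(t))_k \sqrt{\Lambda_{\Phi(x_i)}(t)}\, C^{x_i}_{j,l}\, B^{i,j}_{\sin}(t) \\
&\quad + \frac{1}{\omega_{i,j}}\Big[\Big(\frac{d\eta_{i,j}(t)}{dt^l}\Big)_k \sqrt{\Lambda_{\Phi(x_i)}(t)}\, B^{i,j}_{\sin}(t) + \Big(\frac{d\nu_{i,j}(t)}{dt^l}\Big)_k \sqrt{\Lambda_{\Phi(x_i)}(t)}\, B^{i,j}_{\cos}(t) \\
&\qquad\qquad + (\eta_{i,j}(t))_k \frac{d\Lambda^{1/2}_{\Phi(x_i)}(t)}{dt^l}\, B^{i,j}_{\sin}(t) + (\nu_{i,j}(t))_k \frac{d\Lambda^{1/2}_{\Phi(x_i)}(t)}{dt^l}\, B^{i,j}_{\cos}(t)\Big],
\end{align*}

where $B^{i,j}_{\cos}(t) := \cos(\omega_{i,j}(C^{x_i} t)_j)$ and $B^{i,j}_{\sin}(t) := \sin(\omega_{i,j}(C^{x_i} t)_j)$. For ease of notation, let $R^{k,l}_{i,j}$ be the terms in the bracket (being multiplied by $1/\omega_{i,j}$) in the above expression. Then, we have (for any $i, j$)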

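\begin{align*}
\|(D\Psi_{i,j})_t(u)\|^2 &= \Big\|\sum_l u_l (D\Psi_{i,j})_t(e^l)\Big\|^2 \\
&= \sum_{k=1}^{2d+3} \Big[\underbrace{\sum_l u_l \Big(\frac{d\Psi_{i,j-1}(t)}{dt^l}\Big)_k}_{\zeta^{k,1}_{i,j}} + \underbrace{(\eta_{i,j}(t))_k \sqrt{\Lambda_{\Phi(x_i)}(t)}\,\cos(\omega_{i,j}(C^{x_i} t)_j) \sum_l C^{x_i}_{j,l} u_l}_{\zeta^{k,2}_{i,j}} \\
&\qquad\qquad \underbrace{-\,(\nu_{i,j}(t))_k \sqrt{\Lambda_{\Phi(x_i)}(t)}\,\sin(\omega_{i,j}(C^{x_i} t)_j) \sum_l C^{x_i}_{j,l} u_l}_{\zeta^{k,3}_{i,j}} + (1/\omega_{i,j}) \underbrace{\sum_l u_l R^{k,l}_{i,j}}_{\zeta^{k,4}_{i,j}}\Big]^2 \\
&= \underbrace{\|(D\Psi_{i,j-1})_t(u)\|^2}_{=\sum_k (\zeta^{k,1}_{i,j})^2} + \underbrace{\Lambda_{\Phi(x_i)}(t)\,(C^{x_i} u)_j^2}_{=\sum_k (\zeta^{k,2}_{i,j})^2 + (\zeta^{k,3}_{i,j})^2} \\
&\qquad + \underbrace{\sum_k \Big[\big(\zeta^{k,4}_{i,j}/\omega_{i,j}\big)^2 + \big(2\zeta^{k,4}_{i,j}/\omega_{i,j}\big)\big(\zeta^{k,1}_{i,j} + \zeta^{k,2}_{i,j} + \zeta^{k,3}_{i,j}\big) + 2\big(\zeta^{k,1}_{i,j}\zeta^{k,2}_{i,j} + \zeta^{k,1}_{i,j}\zeta^{k,3}_{i,j}\big)\Big]}_{Z_{i,j}}, \tag{3}
\end{align*}

where the last equality is by expanding the square and by noting that $\sum_k \zeta^{k,2}_{i,j}\zeta^{k,3}_{i,j} = 0$ since $\eta$ and $\nu$ are orthogonal to each other. The base case $\|(D\Psi_{0,n})_t(u)\|^2$ equals $\|u\|^2$.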

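Again, by picking $\omega_{i,j}$ sufficiently large, and by noting that the cross terms $\sum_k (\zeta^{k,1}_{i,j}\zeta^{k,2}_{i,j})$ and $\sum_k (\zeta^{k,1}_{i,j}\zeta^{k,3}_{i,j})$ are very close to zero since $\eta$ and $\nu$ are approximately normal to the tangent vector, we have

Lemma 7 Let $t$ be any point in $\Phi(M)$ and $u$ be any vector tangent to $\Phi(M)$ at $t$ such that $\|u\| \le 1$. Let $\epsilon$ be the isometry parameter chosen in Theorem 3. Pick $\omega_{i,j} \ge \Omega\big((K_{i,j} + (\alpha 9^n/\rho))(nd|X|)^2/\epsilon\big)$ (recall that $K_{i,j}$ is the bound on the directional derivative of $\eta$ and $\nu$). If $\epsilon_0 \le O\big(\epsilon/d(n|X|)^2\big)$ (recall that $\epsilon_0$ is the quality of approximation of the normals $\eta$ and $\nu$), then we have

\[ \|(D\Psi)_t(u)\|^2 = \|(D\Psi_{|X|,n})_t(u)\|^2 = \|u\|^2 + \sum_{i=1}^{|X|} \Lambda_{\Phi(x_i)}(t) \sum_{j=1}^{n} (C^{x_i} u)_j^2 + \zeta, \tag{4} \]

where $|\zeta| \le \epsilon/2$.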


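5.4 Combined Effect of $\Psi(\Phi(M))$

We can now analyze the aggregate effect of both our embeddings on the length of an arbitrary unit vector $v$ tangent to $M$ at $p$. Let $u := (D\Phi)_p(v) = \Phi v$ be the pushforward of $v$. Then $\|u\| \le 1$ (cf. Lemma 4). See also Figure 4.

Now, recalling that $D(\Psi\circ\Phi) = D\Psi\circ D\Phi$, and noting that pushforward maps are linear, we have $\|(D(\Psi\circ\Phi))_p(v)\|^2 = \|(D\Psi)_{\Phi(p)}(u)\|^2$. Thus, representing $u$ as $\sum_i u_i e^i$ in ambient coordinates of $\mathbb{R}^d$, and using Eq. (2) (for Algorithm I) or Eq. (4) (for Algorithm II), we get

\[ \|(D(\Psi\circ\Phi))_p(v)\|^2 = \|(D\Psi)_{\Phi(p)}(u)\|^2 = \|u\|^2 + \sum_{x\in X} \Lambda_{\Phi(x)}(\Phi(p))\,\|C^x u\|^2 + \zeta, \]

where $|\zeta| \le \epsilon/2$. We can give simple lower and upper bounds for the above expression by noting that $\Lambda_{\Phi(x)}$ is a localization function. Define $N_p := \{x \in X : \|\Phi(x) - \Phi(p)\| < \rho\}$ as the neighborhood around $p$ ($\rho$ as per the theorem statement). Then only the points in $N_p$ contribute to the above equation, since $\Lambda_{\Phi(x)}(\Phi(p)) = d\Lambda_{\Phi(x)}(\Phi(p))/dt^i = 0$ for $\|\Phi(x) - \Phi(p)\| \ge \rho$. Also note that for all $x \in N_p$, $\|x - p\| < 2\rho$ (cf. Lemma 4).

Let $x_M := \arg\max_{x\in N_p} \|C^x u\|^2$ and $x_m := \arg\min_{x\in N_p} \|C^x u\|^2$ be the points that attain the maximum and the minimum respectively; then:

\[ \|u\|^2 + \|C^{x_m} u\|^2 - \epsilon/2 \;\le\; \|(D(\Psi\circ\Phi))_p(v)\|^2 \;\le\; \|u\|^2 + \|C^{x_M} u\|^2 + \epsilon/2. \tag{5} \]

Notice that ideally we would like to have the correction factor "$C^p u$" in Eq. (5) since that would give the perfect stretch around the point $p$. But what about the correction $C^x u$ for closeby $x$'s? The following lemma helps us continue in this situation.

Lemma 8 Let $p, v, u$ be as above. For any $x \in N_p \subset X$, let $C^x$ and $F_x$ also be as discussed above (recall that $\|p - x\| < 2\rho$, and $X \subset M$ forms a bounded $(\rho, \delta)$-cover of the fixed underlying manifold $M$ with condition number $1/\tau$). Define $\xi := (4\rho/\tau) + \delta + 4\sqrt{\rho\delta/\tau}$. If $\rho \le \tau/4$ and $\delta \le d/32D$, then

\[ 1 - \|u\|^2 - 40\cdot\max\big\{\sqrt{\xi D/d},\ \xi D/d\big\} \;\le\; \|C^x u\|^2 \;\le\; 1 - \|u\|^2 + 51\cdot\max\big\{\sqrt{\xi D/d},\ \xi D/d\big\}. \]

Note that we chose $\rho \le (\tau d/D)(\epsilon/350)^2$ and $\delta \le (d/D)(\epsilon/250)^2$ (cf. theorem statement). Thus, combining Eq. (5) and Lemma 8, we get (recall $\|v\| = 1$)

\[ (1 - \epsilon)\|v\|^2 \;\le\; \|(D(\Psi\circ\Phi))_p(v)\|^2 \;\le\; (1 + \epsilon)\|v\|^2. \]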

So far we have shown that our embedding approximately preserves the length of a fixed tangent vector at a fixed point. Since the choice of the vector and the point was arbitrary, it follows that our embedding approximately preserves the tangent vector lengths throughout the embedded manifold uniformly. We will now show that preserving the tangent vector lengths implies preserving the geodesic curve lengths.

5.5 Preservation of the Geodesics

Pick any two (path-connected) points $p$ and $q$ in $M$, and let $\alpha$ be the geodesic$^5$ path between $p$ and $q$. Further let $\bar p$, $\bar q$ and $\bar\alpha$ be the images of $p$, $q$ and $\alpha$ under our embedding. Note that $\bar\alpha$ is not necessarily the geodesic path between $\bar p$ and $\bar q$, thus we need an extra piece of notation: let $\bar\beta$ be the geodesic path between $\bar p$ and $\bar q$ (under the embedded manifold) and $\beta$ be its inverse image in $M$. We need to show $(1-\epsilon)L(\alpha) \le L(\bar\beta) \le (1+\epsilon)L(\alpha)$, where $L(\cdot)$ denotes the length of the path $\cdot$ (end points are understood).

First recall that for any differentiable map $F$ and curve $\gamma$, $\bar\gamma = F(\gamma) \Rightarrow \bar\gamma' = (DF)(\gamma')$. By $(1\pm\epsilon)$-isometry of tangent vectors, this immediately gives us $(1-\epsilon)L(\gamma) \le L(\bar\gamma) \le (1+\epsilon)L(\gamma)$ for any path $\gamma$ in $M$ and its image $\bar\gamma$ in the embedding of $M$. So,

\[ (1-\epsilon)D_G(p, q) = (1-\epsilon)L(\alpha) \le (1-\epsilon)L(\beta) \le L(\bar\beta) = D_G(\bar p, \bar q). \]

Similarly,

\[ D_G(\bar p, \bar q) = L(\bar\beta) \le L(\bar\alpha) \le (1+\epsilon)L(\alpha) = (1+\epsilon)D_G(p, q). \]

$^5$ Globally, geodesic paths between points are not necessarily unique; we are interested in a path that yields the shortest distance between the points.
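The step from tangent-vector lengths to path lengths can be illustrated on a discretized curve. The sketch below uses a made-up linear map on the plane whose differential scales every tangent vector by a factor between $1 - \epsilon/2$ and $1 + \epsilon/2$; it is only a stand-in for a near-isometric embedding, not the embedding constructed in this paper.

```python
import math

def polyline_length(points):
    """Length of the piecewise-linear path through the given points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

def near_isometry(point, eps):
    """Hypothetical map scaling tangent lengths by factors in [1 - eps/2, 1 + eps/2]."""
    x, y = point
    return ((1 + eps / 2) * x, (1 - eps / 2) * y)

eps = 0.1
# Quarter of the unit circle, sampled at 1001 points.
arc = [(math.cos(s), math.sin(s)) for s in (k * math.pi / 2000 for k in range(1001))]
image = [near_isometry(p, eps) for p in arc]
L, L_image = polyline_length(arc), polyline_length(image)
```

Because every segment is stretched or shrunk by a factor within the stated range, the total length `L_image` necessarily lies between `(1 - eps) * L` and `(1 + eps) * L`, mirroring the inequality used for $L(\gamma)$ and $L(\bar\gamma)$.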


6 Conclusion

This work provides two simple algorithms for approximate isometric embedding of manifolds. Our algorithms are similar in spirit to Nash's $C^1$ construction [Nas54], and manage to remove the dependence on the isometry constant $\epsilon$ from the target dimension. One should observe that this dependency does however show up in the sampling density required to make the necessary corrections.

The correction procedure discussed here can also be readily adapted to create isometric embeddings from any manifold embedding procedure (under some mild conditions). Take any off-the-shelf manifold embedding algorithm $A$ (such as LLE, Laplacian Eigenmaps, etc.) that maps an $n$-manifold in, say, $d$ dimensions, but does not necessarily guarantee an approximate isometric embedding. Then as long as one can ensure that the embedding produced by $A$ is a one-to-one contraction$^6$ (basically ensuring conditions similar to Lemma 4), we can apply corrections similar to those discussed in Algorithms I or II to produce an approximate isometric embedding of the given manifold in slightly higher dimensions. In this sense, the correction procedure presented here serves as a universal procedure for approximate isometric manifold embeddings.

Acknowledgements

The author would like to thank Sanjoy Dasgupta for introducing the subject, and for his guidance throughout the project.

References

[BN03] M. Belkin and P. Niyogi. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Computation, 15(6):1373-1396, 2003.
[BW07] R. Baraniuk and M. Wakin. Random projections of smooth manifolds. Foundations of Computational Mathematics, 2007.
[Cla07] K. Clarkson. Tighter bounds for random projections of manifolds. Comp. Geometry, 2007.
[DF08] S. Dasgupta and Y. Freund. Random projection trees and low dimensional manifolds. ACM Symposium on Theory of Computing, 2008.
[DG03] D. Donoho and C. Grimes. Hessian eigenmaps: locally linear embedding techniques for high dimensional data. Proc. of National Academy of Sciences, 100(10):5591-5596, 2003.
[DGGZ02] T. Dey, J. Giesen, S. Goswami, and W. Zhao. Shape dimension and approximation from samples. Symposium on Discrete Algorithms, 2002.
[GW03] J. Giesen and U. Wagner. Shape dimension and intrinsic metric from samples of manifolds with high co-dimension. Symposium on Computational Geometry, 2003.
[HH06] Q. Han and J. Hong. Isometric embedding of Riemannian manifolds in Euclidean spaces. American Mathematical Society, 2006.
[JL84] W. Johnson and J. Lindenstrauss. Extensions of Lipschitz mappings into a Hilbert space. Conf. in Modern Analysis and Probability, pages 189-206, 1984.
[Kui55] N. Kuiper. On $C^1$-isometric embeddings, I, II. Indag. Math., 17:545-556, 683-689, 1955.
[Mil72] J. Milnor. Topology from the differential viewpoint. Univ. of Virginia Press, 1972.
[Nas54] J. Nash. $C^1$ isometric imbeddings. Annals of Mathematics, 60(3):383-396, 1954.
[Nas56] J. Nash. The imbedding problem for Riemannian manifolds. Annals of Mathematics, 63(1):20-63, 1956.
[NSW06] P. Niyogi, S. Smale, and S. Weinberger. Finding the homology of submanifolds with high confidence from random samples. Disc. Computational Geometry, 2006.
[RS00] S. Roweis and L. Saul. Nonlinear dimensionality reduction by locally linear embedding. Science, 290, 2000.
[TdSL00] J. Tenenbaum, V. de Silva, and J. Langford. A global geometric framework for nonlinear dimensionality reduction. Science, 290, 2000.
[Whi36] H. Whitney. Differentiable manifolds. Annals of Mathematics, 37:645-680, 1936.
[WS04] K. Weinberger and L. Saul. Unsupervised learning of image manifolds by semidefinite programming. Computer Vision and Pattern Recognition, 2004.

$^6$ One can modify $A$ to produce a contraction by simple scaling.


A Appendix

A.1 Properties of a Well-conditioned Manifold

Throughout this section we will assume that $M$ is a compact submanifold of $\mathbb{R}^D$ of dimension $n$, and condition number $1/\tau$. The following are some properties of such a manifold that would be useful throughout the text.

Lemma 9 (relating closeby tangent vectors, implicit in the proof of Proposition 6.2 [NSW06]) Pick any two (path-connected) points $p, q \in M$. Let $u \in T_pM$ be a unit length tangent vector and $v \in T_qM$ be its parallel transport along the (shortest) geodesic path to $q$. Then$^7$, i) $u \cdot v \ge 1 - D_G(p, q)/\tau$, ii) $\|u - v\| \le \sqrt{2D_G(p, q)/\tau}$.

Lemma 10 (relating geodesic distances to ambient distances, Proposition 6.3 of [NSW06]) If $p, q \in M$ such that $\|p - q\| \le \tau/2$, then $D_G(p, q) \le \tau\big(1 - \sqrt{1 - 2\|p - q\|/\tau}\big) \le 2\|p - q\|$.

Lemma 11 (projection of a section of a manifold onto the tangent space) Pick any $p \in M$ and define $M_{p,r} := \{q \in M : \|q - p\| \le r\}$. Let $f$ denote the orthogonal linear projection of $M_{p,r}$ onto the tangent space $T_pM$. Then, for any $r \le \tau/2$

(i) the map $f : M_{p,r} \to T_pM$ is 1-1. (see Lemma 5.4 of [NSW06])
(ii) for any $x, y \in M_{p,r}$, $\|f(x) - f(y)\|^2 \ge (1 - (r/\tau)^2)\cdot\|x - y\|^2$. (implicit in the proof of Lemma 5.3 of [NSW06])

Lemma 12 (coverings of a section of a manifold) Pick any $p \in M$ and define $M_{p,r} := \{q \in M : \|q - p\| \le r\}$. If $r \le \tau/2$, then there exists $C \subset M_{p,r}$ of size at most $9^n$ with the property: for any $p' \in M_{p,r}$, there exists $c \in C$ such that $\|p' - c\| \le r/2$.

Proof: The proof closely follows the arguments presented in the proof of Theorem 22 of [DF08].

For $r \le \tau/2$, note that $M_{p,r} \subset \mathbb{R}^D$ is (path-)connected. Let $f$ denote the projection of $M_{p,r}$ onto $T_pM \cong \mathbb{R}^n$. Quickly note that $f$ is 1-1 (see Lemma 11(i)). Then, $f(M_{p,r}) \subset \mathbb{R}^n$ is contained in an $n$-dimensional ball of radius $r$. By standard volume arguments, $f(M_{p,r})$ can be covered by at most $9^n$ balls of radius $r/4$. WLOG we can assume that the centers of these covering balls are in $f(M_{p,r})$. Now, noting that the inverse image of each of these covering balls (in $\mathbb{R}^n$) is contained in a $D$-dimensional ball of radius $r/2$ (see Lemma 11(ii)) finishes the proof.

Lemma 13 (relating closeby manifold points to tangent vectors) Pick any point $p \in M$ and let $q \in M$ (distinct from $p$) be such that $D_G(p, q) \le \tau$. Let $v \in T_pM$ be the projection of the vector $q - p$ onto $T_pM$. Then, i) $\big|\frac{v}{\|v\|}\cdot\frac{q-p}{\|q-p\|}\big| \ge 1 - (D_G(p, q)/2\tau)^2$, ii) $\big\|\frac{v}{\|v\|} - \frac{q-p}{\|q-p\|}\big\| \le D_G(p, q)/\tau\sqrt{2}$.

Proof: If vectors $v$ and $q - p$ are in the same direction, we are done. Otherwise, consider the plane spanned by vectors $v$ and $q - p$. Then since $M$ has condition number $1/\tau$, we know that the point $q$ cannot lie within any $\tau$-ball tangent to $M$ at $p$ (see Figure 6). Consider such a $\tau$-ball (with center $c$) whose center is closest to $q$ and let $q'$ be the point on the surface of the ball which subtends the same angle ($\angle pcq'$) as the angle formed by $q$ ($\angle pcq$). Let this angle be called $\theta$. Then using the cosine rule, we have $\cos\theta = 1 - \|q' - p\|^2/2\tau^2$.

Define $\alpha$ as the angle subtended by vectors $v$ and $q - p$, and $\alpha'$ the angle subtended by vectors $v$ and $q' - p$. WLOG we can assume that the angles $\alpha$ and $\alpha'$ are less than $\pi$. Then, $\cos\alpha \ge \cos\alpha' = \cos(\theta/2)$. Using the trig identity $\cos\theta = 2\cos^2(\theta/2) - 1$, and noting $\|q - p\|^2 \ge \|q' - p\|^2$, we have

\[ \Big|\frac{v}{\|v\|}\cdot\frac{q-p}{\|q-p\|}\Big| = \cos\alpha \ge \cos\frac{\theta}{2} \ge \sqrt{1 - \|q - p\|^2/4\tau^2} \ge 1 - (D_G(p, q)/2\tau)^2. \]

Now, by applying the cosine rule, we have $\big\|\frac{v}{\|v\|} - \frac{q-p}{\|q-p\|}\big\|^2 = 2(1 - \cos\alpha)$. The lemma follows.

$^7$ Technically, it is not possible to directly compare two vectors that reside in different tangent spaces. However, since we only deal with manifolds that are immersed in some ambient space, we can treat the tangent spaces as $n$-dimensional affine subspaces. We can thus parallel translate the vectors to the origin of the ambient space, and do the necessary comparison (such as take the dot product, etc.). We will make a similar abuse of notation for any calculation that uses vectors from different affine subspaces to mean to first translate the vectors and then perform the necessary calculation.

Figure 6: Plane spanned by vectors $q - p$ and $v \in T_pM$ (where $v$ is the projection of $q - p$ onto $T_pM$), with $\tau$-balls tangent to $p$. Note that $q'$ is the point on the ball such that $\angle pcq = \angle pcq' = \theta$.

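Lemma 14 (approximating tangent space by closeby samples) Let $0 < \delta \le 1$. Pick any point $p_0 \in M$ and let $p_1, \ldots, p_n \in M$ be $n$ points distinct from $p_0$ such that (for all $1 \le i \le n$)

(i) $D_G(p_0, p_i) \le \tau\delta/\sqrt{n}$,

(ii) $\big|\frac{p_i - p_0}{\|p_i - p_0\|}\cdot\frac{p_j - p_0}{\|p_j - p_0\|}\big| \le 1/2n$ (for $i \ne j$).

Let $\hat T$ be the $n$-dimensional subspace spanned by vectors $\{p_i - p_0\}_{i\in[n]}$. For any unit vector $\hat u \in \hat T$, let $u$ be the projection of $\hat u$ onto $T_{p_0}M$. Then, $\big|\hat u \cdot \frac{u}{\|u\|}\big| \ge 1 - \delta$.

Proof: Define the vectors $\hat v_i := \frac{p_i - p_0}{\|p_i - p_0\|}$ (for $1 \le i \le n$). Observe that $\{\hat v_i\}_{i\in[n]}$ forms a basis of $\hat T$. For $1 \le i \le n$, define $v_i$ as the projection of vector $\hat v_i$ onto $T_{p_0}M$. Also note that by applying Lemma 13, we have that for all $1 \le i \le n$, $\|\hat v_i - v_i\|^2 \le \delta^2/2n$.

Let $\hat V = [\hat v_1, \ldots, \hat v_n]$ be the $D \times n$ matrix. We represent the unit vector $\hat u$ as $\hat V\alpha = \sum_i \alpha_i \hat v_i$. Also, since $u$ is the projection of $\hat u$, we have $u = \sum_i \alpha_i v_i$. Then, $\|\alpha\|^2 \le 2$. To see this, we first identify $\hat T$ with $\mathbb{R}^n$ via an isometry $S$ (a linear map that preserves the lengths and angles of all vectors in $\hat T$). Note that $S$ can be represented as an $n \times D$ matrix, and since $\hat V$ forms a basis for $\hat T$, $S\hat V$ is an $n \times n$ invertible matrix. Then, since $S\hat u = S\hat V\alpha$, we have $\alpha = (S\hat V)^{-1} S\hat u$. Thus, (recall $\|S\hat u\| = 1$)

\begin{align*}
\|\alpha\|^2 &\le \max_{x\in S^{n-1}} \|(S\hat V)^{-1}x\|^2 = \lambda_{\max}\big((S\hat V)^{-\mathsf T}(S\hat V)^{-1}\big) \\
&= \lambda_{\max}\big((S\hat V)^{-1}(S\hat V)^{-\mathsf T}\big) = \lambda_{\max}\big((\hat V^{\mathsf T}\hat V)^{-1}\big) = 1/\lambda_{\min}(\hat V^{\mathsf T}\hat V) \\
&\le 1/\big(1 - ((n-1)/2n)\big) \le 2,
\end{align*}

where i) $\lambda_{\max}(A)$ and $\lambda_{\min}(A)$ denote the largest and smallest eigenvalues of a square symmetric matrix $A$ respectively, and ii) the second inequality is by noting that $\hat V^{\mathsf T}\hat V$ is an $n \times n$ matrix with 1's on the diagonal and at most $1/2n$ on the off-diagonal elements, and applying the Gershgorin circle theorem.

Now we can bound the quantity of interest. Note that

\begin{align*}
\Big|\hat u \cdot \frac{u}{\|u\|}\Big| &\ge |\hat u^{\mathsf T}(\hat u - (\hat u - u))| \ge 1 - \|\hat u - u\| = 1 - \Big\|\sum_i \alpha_i(\hat v_i - v_i)\Big\| \\
&\ge 1 - \sum_i |\alpha_i|\,\|\hat v_i - v_i\| \ge 1 - (\delta/\sqrt{2n}) \sum_i |\alpha_i| \ge 1 - \delta,
\end{align*}

where the last inequality is by noting $\|\alpha\|_1 \le \sqrt{2n}$.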
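The Gershgorin step in the proof above is easy to check numerically. The following sketch computes the standard Gershgorin lower bound on the eigenvalues of a symmetric matrix and applies it to randomly generated matrices with unit diagonal and off-diagonal entries of magnitude at most $1/2n$; the random matrices are illustrative stand-ins for $\hat V^{\mathsf T}\hat V$, and the helper names are hypothetical.

```python
import random

def gershgorin_lower_bound(a):
    """Lower bound on every eigenvalue of a symmetric matrix:
    min over rows i of (a_ii - sum_{j != i} |a_ij|)."""
    n = len(a)
    return min(a[i][i] - sum(abs(a[i][j]) for j in range(n) if j != i)
               for i in range(n))

def random_gram_like(n, rng):
    """Symmetric matrix with unit diagonal and off-diagonal magnitudes at most 1/(2n)."""
    a = [[1.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            a[i][j] = a[j][i] = rng.uniform(-1.0, 1.0) / (2 * n)
    return a

rng = random.Random(0)
bounds = [gershgorin_lower_bound(random_gram_like(n, rng)) for n in range(1, 9)]
```

Each bound is at least $1 - (n-1)/2n \ge 1/2$, which is what gives $1/\lambda_{\min} \le 2$ in the proof.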

A.2 On Constructing a Bounded Manifold Cover

Given a compact $n$-manifold $M \subset \mathbb{R}^D$ with condition number $1/\tau$, and some $0 < \delta \le 1$. We can construct an $\alpha$-bounded $(\rho, \delta)$ cover $X$ of $M$ (with $\alpha \le 2^{10n+1}$ and $\rho \le \tau\delta/3\sqrt{2}n$) as follows.

Set $\rho \le \tau\delta/3\sqrt{2}n$ and pick a $(\rho/2)$-net $C$ of $M$ (that is, $C \subset M$ such that, i. for $c, c' \in C$ such that $c \ne c'$, $\|c - c'\| \ge \rho/2$, ii. for all $p \in M$, there exists $c \in C$ such that $\|c - p\| < \rho/2$). WLOG we shall assume that all points of $C$ are in the interior of $M$. Then, for each $c \in C$, define $M_{c,\rho/2} := \{p \in M : \|p - c\| \le \rho/2\}$, and the orthogonal projection map $f_c : M_{c,\rho/2} \to T_cM$ that projects $M_{c,\rho/2}$ onto $T_cM$ (note that, cf. Lemma 11(i), $f_c$ is 1-1). Note that $T_cM$ can be identified with $\mathbb{R}^n$ with $c$ as the origin. We will denote the origin as $x^{(c)}_0$, that is, $x^{(c)}_0 = f_c(c)$.

Now, let $B_c$ be any $n$-dimensional closed ball centered at the origin $x^{(c)}_0 \in T_cM$ of radius $r > 0$ that is completely contained in $f_c(M_{c,\rho/2})$ (that is, $B_c \subset f_c(M_{c,\rho/2})$). Pick a set of $n$ points $x^{(c)}_1, \ldots, x^{(c)}_n$ on the surface of the ball $B_c$ such that $(x^{(c)}_i - x^{(c)}_0)\cdot(x^{(c)}_j - x^{(c)}_0) = 0$ for $i \ne j$.

Define the bounded manifold cover as

\[ X := \bigcup_{c\in C,\ i=0,\ldots,n} f_c^{-1}(x^{(c)}_i). \tag{6} \]

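Lemma 15 Let $0 < \delta \le 1$ and $\rho \le \tau\delta/3\sqrt{2}n$. Let $C$ be a $(\rho/2)$-net of $M$ as described above, and $X$ be as in Eq. (6). Then $X$ forms a $2^{10n+1}$-bounded $(\rho, \delta)$ cover of $M$.

Proof: Pick any point $p \in M$ and define $X_p := \{x \in X : \|x - p\| < \rho\}$. Let $c \in C$ be such that $\|p - c\| < \rho/2$. Then $X_p$ has the following properties.

Covering criterion: For $0 \le i \le n$, since $\|f_c^{-1}(x^{(c)}_i) - c\| \le \rho/2$ (by construction), we have $\|f_c^{-1}(x^{(c)}_i) - p\| < \rho$. Thus, $f_c^{-1}(x^{(c)}_i) \in X_p$ (for $0 \le i \le n$). Now, for $1 \le i \le n$, noting that $D_G(f_c^{-1}(x^{(c)}_i), f_c^{-1}(x^{(c)}_0)) \le 2\|f_c^{-1}(x^{(c)}_i) - f_c^{-1}(x^{(c)}_0)\| \le \rho$ (cf. Lemma 10), we have that for the vector $\hat v^{(c)}_i := \frac{f_c^{-1}(x^{(c)}_i) - f_c^{-1}(x^{(c)}_0)}{\|f_c^{-1}(x^{(c)}_i) - f_c^{-1}(x^{(c)}_0)\|}$ and its (normalized) projection $v^{(c)}_i := \frac{x^{(c)}_i - x^{(c)}_0}{\|x^{(c)}_i - x^{(c)}_0\|}$ onto $T_cM$, $\|\hat v^{(c)}_i - v^{(c)}_i\| \le \rho/\sqrt{2}\tau$ (cf. Lemma 13). Thus, for $i \ne j$, we have (recall, by construction, we have $v^{(c)}_i \cdot v^{(c)}_j = 0$)

\begin{align*}
|\hat v^{(c)}_i \cdot \hat v^{(c)}_j| &= |(\hat v^{(c)}_i - v^{(c)}_i + v^{(c)}_i)\cdot(\hat v^{(c)}_j - v^{(c)}_j + v^{(c)}_j)| \\
&= |(\hat v^{(c)}_i - v^{(c)}_i)\cdot(\hat v^{(c)}_j - v^{(c)}_j) + v^{(c)}_i\cdot(\hat v^{(c)}_j - v^{(c)}_j) + (\hat v^{(c)}_i - v^{(c)}_i)\cdot v^{(c)}_j| \\
&\le \|\hat v^{(c)}_i - v^{(c)}_i\|\,\|\hat v^{(c)}_j - v^{(c)}_j\| + \|\hat v^{(c)}_i - v^{(c)}_i\| + \|\hat v^{(c)}_j - v^{(c)}_j\| \\
&\le 3\rho/\sqrt{2}\tau \le 1/2n.
\end{align*}

Point representation criterion: There exists $x \in X_p$, namely $f_c^{-1}(x^{(c)}_0)$ ($= c$), such that $\|p - x\| \le \rho/2$.

Local boundedness criterion: Define $M_{p,3\rho/2} := \{q \in M : \|q - p\| < 3\rho/2\}$. Note that $X_p \subset \{f_c^{-1}(x^{(c)}_i) : c \in C \cap M_{p,3\rho/2},\ 0 \le i \le n\}$. Now, using Lemma 12 we have that there exists a cover $N \subset M_{p,3\rho/2}$ of size at most $9^{3n}$ such that for any point $q \in M_{p,3\rho/2}$, there exists $n' \in N$ such that $\|q - n'\| < \rho/4$. Note that, by construction of $C$, there cannot be an $n' \in N$ such that it is within distance $\rho/4$ of two (or more) distinct $c, c' \in C$ (since otherwise the distance $\|c - c'\|$ will be less than $\rho/2$, contradicting the packing of $C$). Thus, $|C \cap M_{p,3\rho/2}| \le 9^{3n}$. It follows that $|X_p| \le (n+1)9^{3n} \le 2^{10n+1}$.

Tangent space approximation criterion: Let $\hat T_p$ be the $n$-dimensional span of $\{\hat v^{(c)}_i\}_{i\in[n]}$ (note that $\hat T_p$ may not necessarily pass through $p$). Then, for any unit vector $\hat u \in \hat T_p$, we need to show that its projection $u_p$ onto $T_pM$ has the property $\big|\hat u \cdot \frac{u_p}{\|u_p\|}\big| \ge 1 - \delta$. Let $\theta$ be the angle between vectors $\hat u$ and $u_p$. Let $u_c$ be the projection of $\hat u$ onto $T_cM$, and $\theta_1$ be the angle between vectors $\hat u$ and $u_c$, and let $\theta_2$ be the angle between vectors $u_c$ (at $c$) and its parallel transport along the geodesic path to $p$. WLOG we can assume that $\theta_1$ and $\theta_2$ are at most $\pi/2$. Then, $\theta \le \theta_1 + \theta_2 \le \pi$. We get the bound on the individual angles as follows. By applying Lemma 14, $\cos(\theta_1) \ge 1 - \delta/4$, and by applying Lemma 9, $\cos(\theta_2) \ge 1 - \delta/4$. Finally, by using Lemma 16, we have

\[ \Big|\hat u \cdot \frac{u_p}{\|u_p\|}\Big| = \cos(\theta) \ge \cos(\theta_1 + \theta_2) \ge 1 - \delta. \]

Lemma 16 Let $0 \le \epsilon_1, \epsilon_2 \le 1$. If $\cos\alpha \ge 1 - \epsilon_1$ and $\cos\beta \ge 1 - \epsilon_2$, then $\cos(\alpha + \beta) \ge 1 - \epsilon_1 - \epsilon_2 - 2\sqrt{\epsilon_1\epsilon_2}$.

Proof: Applying the identity $\sin\theta = \sqrt{1 - \cos^2\theta}$ immediately yields $\sin\alpha \le \sqrt{2\epsilon_1}$ and $\sin\beta \le \sqrt{2\epsilon_2}$. Now, $\cos(\alpha + \beta) = \cos\alpha\cos\beta - \sin\alpha\sin\beta \ge (1 - \epsilon_1)(1 - \epsilon_2) - 2\sqrt{\epsilon_1\epsilon_2} \ge 1 - \epsilon_1 - \epsilon_2 - 2\sqrt{\epsilon_1\epsilon_2}$.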
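The angle-addition bound can be spot-checked on a grid. This sketch evaluates the bound at the extreme angles permitted by the hypotheses (taking both angles in $[0, \pi/2]$, which is an assumption matching how the bound is applied in this appendix); the function name `angle_sum_gap` is hypothetical.

```python
import math

def angle_sum_gap(eps1, eps2):
    """cos(alpha + beta) minus the claimed lower bound, at the largest admissible angles."""
    alpha = math.acos(1.0 - eps1)   # largest alpha in [0, pi/2] with cos(alpha) >= 1 - eps1
    beta = math.acos(1.0 - eps2)    # largest beta in [0, pi/2] with cos(beta) >= 1 - eps2
    bound = 1.0 - eps1 - eps2 - 2.0 * math.sqrt(eps1 * eps2)
    return math.cos(alpha + beta) - bound

grid = [k / 50 for k in range(51)]   # eps values 0, 0.02, ..., 1
worst_gap = min(angle_sum_gap(e1, e2) for e1 in grid for e2 in grid)
```

A non-negative `worst_gap` (up to floating-point rounding) is consistent with the inequality; smaller angles only increase $\cos(\alpha + \beta)$ as long as $\alpha + \beta \le \pi$.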

Remark 6 A dense enough sample from $M$ constitutes a bounded cover. One can selectively prune the dense sampling to control the total number of points in each neighborhood, while still maintaining the cover properties.
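One simple way to prune a dense sample into a net is a greedy pass, sketched below. This is an illustrative assumption about how such pruning could be done, not the procedure prescribed by the paper; it produces a net of the finite sample (pairwise separation at least `r`, every sample point within `r` of a center), and the unit circle is used as a hypothetical 1-manifold.

```python
import math

def greedy_net(sample, r):
    """Greedily pick centers that are pairwise >= r apart; every sample point
    ends up at distance < r from some chosen center."""
    centers = []
    for p in sample:
        if all(math.dist(p, c) >= r for c in centers):
            centers.append(p)
    return centers

# Hypothetical dense sample of the unit circle in R^2.
sample = [(math.cos(2 * math.pi * k / 400), math.sin(2 * math.pi * k / 400))
          for k in range(400)]
net = greedy_net(sample, r=0.25)
```

With `r` playing the role of $\rho/2$, the two properties checked by the construction correspond to conditions i. and ii. of the net used in Section A.2, restricted to the sampled points.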


A.3 Bounding the number of subsets $K$ in Embedding I

By construction (see the preprocessing stage of Embedding I), $K = \max_{x\in X} |X \cap B(x, 2\rho)|$ (where $B(x, r)$ denotes a Euclidean ball centered at $x$ of radius $r$). That is, $K$ is the largest number of $x$'s ($\in X$) that are within a $2\rho$ ball of some $x \in X$.

Now, pick any $x \in X$ and consider the set $M_x := M \cap B(x, 2\rho)$. Then, if $\rho \le \tau/4$, $M_x$ can be covered by $2^{cn}$ balls of radius $\rho$ (see Lemma 12). By recalling that $X$ forms an $\alpha$-bounded $(\rho, \delta)$-cover, we have $|X \cap B(x, 2\rho)| = |X \cap M_x| \le \alpha 2^{cn}$ (where $c \le 4$).

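A.4 Proof of Lemma 4

Since $R$ is a random orthoprojector from $\mathbb{R}^D$ to $\mathbb{R}^d$, it follows that

Lemma 17 (random projection of $n$-manifolds, adapted from Theorem 1.5 of [Cla07]) Let $M$ be a smooth compact $n$-manifold with volume $V$ and condition number $1/\tau$. Let $\bar R := \sqrt{D/d}\,R$ be a scaling of $R$. Pick any $0 < \epsilon \le 1$ and $0 < \delta \le 1$. If $d = \Omega\big(\epsilon^{-2}\log(V/\tau^n) + \epsilon^{-2} n\log(1/\epsilon) + \ln(1/\delta)\big)$, then with probability at least $1 - \delta$, for all $p, q \in M$

\[ (1 - \epsilon)\|p - q\| \le \|\bar R p - \bar R q\| \le (1 + \epsilon)\|p - q\|. \]

We apply this result with $\epsilon = 1/4$. Then, for $d = \Omega(\log(V/\tau^n) + n)$, with probability at least $1 - 1/\mathrm{poly}(n)$, $(3/4)\|p - q\| \le \|\bar R p - \bar R q\| \le (5/4)\|p - q\|$. Now let $\Phi : \mathbb{R}^D \to \mathbb{R}^d$ be defined as $\Phi x := (2/3)\bar R x = (2/3)(\sqrt{D/d})Rx$ (as per the lemma statement). Then we immediately get $(1/2)\|p - q\| \le \|\Phi p - \Phi q\| \le (5/6)\|p - q\|$.

Also note that for any $x \in \mathbb{R}^D$, we have $\|\Phi x\| = (2/3)(\sqrt{D/d})\|Rx\| \le (2/3)(\sqrt{D/d})\|x\|$ (since $R$ is an orthoprojector).

Finally, for any point $p \in M$, a unit vector $u$ tangent to $M$ at $p$ can be approximated arbitrarily well by considering a sequence $\{p_i\}_i$ of points (in $M$) converging to $p$ (in $M$) such that $(p_i - p)/\|p_i - p\|$ converges to $u$. Since for all points $p_i$, $(1/2) \le \|\Phi p_i - \Phi p\|/\|p_i - p\| \le (5/6)$ (with high probability), it follows that $(1/2) \le \|(D\Phi)_p(u)\| \le (5/6)$.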

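A.5 Proof of Corollary 5

Let $v^1_x$ and $v^n_x$ ($\in \mathbb{R}^n$) be the right singular vectors corresponding to singular values $\sigma^1_x$ and $\sigma^n_x$ respectively of the matrix $\Phi F_x$. Then, quickly note that $\sigma^1_x = \|\Phi F_x v^1_x\|$, and $\sigma^n_x = \|\Phi F_x v^n_x\|$. Note that since $F_x$ is orthonormal, we have that $\|F_x v^1_x\| = \|F_x v^n_x\| = 1$. Now, since $F_x v^n_x$ is in the span of column vectors of $F_x$, by the sampling condition (cf. Definition 2), there exists a unit length vector $\bar v^n_x$ tangent to $M$ (at $x$) such that $|F_x v^n_x \cdot \bar v^n_x| \ge 1 - \delta$. Thus, decomposing $F_x v^n_x$ into two vectors $a^n_x$ and $b^n_x$ such that $a^n_x \perp b^n_x$ and $a^n_x := (F_x v^n_x \cdot \bar v^n_x)\bar v^n_x$, we have

\begin{align*}
\sigma^n_x = \|\Phi(F_x v^n_x)\| &= \|\Phi((F_x v^n_x \cdot \bar v^n_x)\bar v^n_x) + \Phi b^n_x\| \\
&\ge (1 - \delta)\|\Phi \bar v^n_x\| - \|\Phi b^n_x\| \\
&\ge (1 - \delta)(1/2) - (2/3)\sqrt{2\delta D/d},
\end{align*}

since $\|b^n_x\|^2 = \|F_x v^n_x\|^2 - \|a^n_x\|^2 \le 1 - (1 - \delta)^2 \le 2\delta$ and $\|\Phi b^n_x\| \le (2/3)(\sqrt{D/d})\|b^n_x\| \le (2/3)\sqrt{2\delta D/d}$.

Similarly, decomposing $F_x v^1_x$ into two vectors $a^1_x$ and $b^1_x$ such that $a^1_x \perp b^1_x$ and $a^1_x := (F_x v^1_x \cdot \bar v^1_x)\bar v^1_x$, we have

\begin{align*}
\sigma^1_x = \|\Phi(F_x v^1_x)\| &= \|\Phi((F_x v^1_x \cdot \bar v^1_x)\bar v^1_x) + \Phi b^1_x\| \\
&\le \|\Phi \bar v^1_x\| + \|\Phi b^1_x\| \\
&\le (5/6) + (2/3)\sqrt{2\delta D/d},
\end{align*}

where the last inequality is by noting $\|\Phi b^1_x\| \le (2/3)\sqrt{2\delta D/d}$. Now, by our choice of $\delta$ ($\le d/32D$), and by noting that $d \le D$, the corollary follows.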


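A.6 Proof of Lemma 6

We can simplify Eq. (1) by recalling how the subsets $X^{(j)}$ were constructed (see the preprocessing stage of Embedding I). Note that for any fixed $t$, at most one term in the set $\{\Lambda_{\Phi(x)}(t)\}_{x\in X^{(j)}}$ is non-zero. Thus,

\begin{align*}
\|(D\Psi)_t(u)\|^2 &= \sum_{k=1}^{d} u_k^2 + \sum_{k=1}^{n}\sum_{x\in X} \Big[\Lambda_{\Phi(x)}(t)\cos^2(\omega(C^x t)_k)(C^x u)_k^2 + \Lambda_{\Phi(x)}(t)\sin^2(\omega(C^x t)_k)(C^x u)_k^2 \\
&\qquad + \frac{1}{\omega}\Big(\underbrace{\big((A^{k,x}_{\sin}(t))^2 + (A^{k,x}_{\cos}(t))^2\big)/\omega}_{\zeta_1} + \underbrace{2A^{k,x}_{\sin}(t)\sqrt{\Lambda_{\Phi(x)}(t)}\cos(\omega(C^x t)_k)(C^x u)_k}_{\zeta_2} \\
&\qquad\qquad \underbrace{-\,2A^{k,x}_{\cos}(t)\sqrt{\Lambda_{\Phi(x)}(t)}\sin(\omega(C^x t)_k)(C^x u)_k}_{\zeta_3}\Big)\Big] \\
&= \|u\|^2 + \sum_{x\in X} \Lambda_{\Phi(x)}(t) \sum_{k=1}^{n} (C^x u)_k^2 + \zeta,
\end{align*}

where $\zeta := (\zeta_1 + \zeta_2 + \zeta_3)/\omega$ (with each $\zeta_i$ understood as summed over $k$ and $x$). Noting that i) the terms $|A^{k,x}_{\sin}(t)|$ and $|A^{k,x}_{\cos}(t)|$ are at most $O(\alpha 9^n\sqrt{d}/\rho)$ (see Lemma 18), ii) $|(C^x u)_k| \le 4$, and iii) $\sqrt{\Lambda_{\Phi(x)}(t)} \le 1$, we can pick $\omega$ sufficiently large (say, $\omega \ge \Omega(n\alpha^2 9^n\sqrt{d}/\rho\epsilon)$) such that $|\zeta| \le \epsilon/2$ (where $\epsilon$ is the isometry constant from our main theorem).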

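Lemma 18 For all $k$, $x$ and $t$, the terms $|A^{k,x}_{\sin}(t)|$ and $|A^{k,x}_{\cos}(t)|$ are at most $O(\alpha 9^n\sqrt{d}/\rho)$.

Proof: We shall focus on bounding $|A^{k,x}_{\sin}(t)|$ (the steps for bounding $|A^{k,x}_{\cos}(t)|$ are similar). Note that

\[ |A^{k,x}_{\sin}(t)| = \Big|\sum_{i=1}^{d} u_i \sin(\omega(C^x t)_k)\,\frac{d\Lambda^{1/2}_{\Phi(x)}(t)}{dt^i}\Big| \le \sum_{i=1}^{d} |u_i|\cdot\Big|\frac{d\Lambda^{1/2}_{\Phi(x)}(t)}{dt^i}\Big| \le \sqrt{\sum_{i=1}^{d} \Big|\frac{d\Lambda^{1/2}_{\Phi(x)}(t)}{dt^i}\Big|^2}, \]

since $\|u\| \le 1$. Thus, we can bound $|A^{k,x}_{\sin}(t)|$ by $O(\alpha 9^n\sqrt{d}/\rho)$ by noting the following lemma.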

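Lemma 19 For all $i$, $x$ and $t$, $|d\Lambda^{1/2}_{\Phi(x)}(t)/dt^i| \le O(\alpha 9^n/\rho)$.

Proof: Pick any $t \in \Phi(M)$, and let $p_0 \in M$ be (the unique element) such that $\Phi(p_0) = t$. Define $N_{p_0} := \{x \in X : \|\Phi(x) - \Phi(p_0)\| < \rho\}$ as the neighborhood around $p_0$. Fix an arbitrary $x_0 \in N_{p_0} \subset X$ (since if $x_0 \notin N_{p_0}$ then $d\Lambda^{1/2}_{\Phi(x_0)}(t)/dt^i = 0$), and consider the function

\[ \Lambda^{1/2}_{\Phi(x_0)}(t) = \Big(\frac{\lambda_{\Phi(x_0)}(t)}{\sum_{x\in N_{p_0}} \lambda_{\Phi(x)}(t)}\Big)^{1/2} = \Big(\frac{e^{-1/(1 - \|t - \Phi(x_0)\|^2/\rho^2)}}{\sum_{x\in N_{p_0}} e^{-1/(1 - \|t - \Phi(x)\|^2/\rho^2)}}\Big)^{1/2}. \]

Pick an arbitrary coordinate $i_0 \in \{1, \ldots, d\}$ and consider the (directional) derivative of this function

\begin{align*}
\frac{d\Lambda^{1/2}_{\Phi(x_0)}(t)}{dt^{i_0}} &= \frac{1}{2}\big(\Lambda^{-1/2}_{\Phi(x_0)}(t)\big)\Big(\frac{d\Lambda_{\Phi(x_0)}(t)}{dt^{i_0}}\Big) \\
&= \frac{\big(\sum_{x\in N_{p_0}} e^{-A_t(x)}\big)^{1/2}}{2\big(e^{-A_t(x_0)}\big)^{1/2}} \Bigg[\frac{\big(\sum_{x\in N_{p_0}} e^{-A_t(x)}\big)\Big(\frac{-2(t_{i_0} - \Phi(x_0)_{i_0})}{\rho^2}(A_t(x_0))^2\Big)\big(e^{-A_t(x_0)}\big)}{\big(\sum_{x\in N_{p_0}} e^{-A_t(x)}\big)^2} \\
&\qquad\qquad - \frac{\big(e^{-A_t(x_0)}\big)\Big(\sum_{x\in N_{p_0}} \frac{-2(t_{i_0} - \Phi(x)_{i_0})}{\rho^2}(A_t(x))^2 e^{-A_t(x)}\Big)}{\big(\sum_{x\in N_{p_0}} e^{-A_t(x)}\big)^2}\Bigg] \\
&= \frac{\big(\sum_{x\in N_{p_0}} e^{-A_t(x)}\big)\Big(\frac{-2(t_{i_0} - \Phi(x_0)_{i_0})}{\rho^2}(A_t(x_0))^2\Big)\big(e^{-A_t(x_0)}\big)^{1/2}}{2\big(\sum_{x\in N_{p_0}} e^{-A_t(x)}\big)^{1.5}} \\
&\qquad - \frac{\big(e^{-A_t(x_0)}\big)^{1/2}\Big(\sum_{x\in N_{p_0}} \frac{-2(t_{i_0} - \Phi(x)_{i_0})}{\rho^2}(A_t(x))^2 e^{-A_t(x)}\Big)}{2\big(\sum_{x\in N_{p_0}} e^{-A_t(x)}\big)^{1.5}},
\end{align*}

where $A_t(x) := 1/(1 - (\|t - \Phi(x)\|^2/\rho^2))$. Observe that the domain of $A_t$ is $\{x \in X : \|t - \Phi(x)\| < \rho\}$ and the range is $[1, \infty)$. Recalling that for any $\beta \ge 1$, $|\beta^2 e^{-\beta}| \le 1$ and $|\beta^2 e^{-\beta/2}| \le 3$, we have that $|A_t(\cdot)^2 e^{-A_t(\cdot)}| \le 1$ and $|A_t(\cdot)^2 e^{-A_t(\cdot)/2}| \le 3$. Thus,

\begin{align*}
\Big|\frac{d\Lambda^{1/2}_{\Phi(x_0)}(t)}{dt^{i_0}}\Big| &\le \frac{3\cdot\Big|\sum_{x\in N_{p_0}} e^{-A_t(x)}\Big|\cdot\Big|\frac{2(t_{i_0} - \Phi(x_0)_{i_0})}{\rho^2}\Big| + \big|e^{-A_t(x_0)/2}\big|\cdot\Big|\sum_{x\in N_{p_0}} \frac{2(t_{i_0} - \Phi(x)_{i_0})}{\rho^2}\Big|}{2\big(\sum_{x\in N_{p_0}} e^{-A_t(x)}\big)^{1.5}} \\
&\le \frac{(3)(2/\rho)\Big|\sum_{x\in N_{p_0}} e^{-A_t(x)}\Big| + \big|e^{-A_t(x_0)/2}\big|\sum_{x\in N_{p_0}} (2/\rho)}{2\big(\sum_{x\in N_{p_0}} e^{-A_t(x)}\big)^{1.5}} \\
&\le O(\alpha 9^n/\rho),
\end{align*}

where the last inequality is by noting: i) $|N_{p_0}| \le \alpha 9^n$ (since for all $x \in N_{p_0}$, $\|x - p_0\| \le 2\rho$, cf. Lemma 4; $X$ is an $\alpha$-bounded cover; and for $\rho \le \tau/4$, a ball of radius $2\rho$ can be covered by $9^n$ balls of radius $\rho$ on the given $n$-manifold, cf. Lemma 12), ii) $|e^{-A_t(x)}| \le |e^{-A_t(x)/2}| \le 1$ (for all $x$), and iii) $\sum_{x\in N_{p_0}} e^{-A_t(x)} \ge \Omega(1)$ (since our cover $X$ ensures that for any $p_0$, there exists $x \in N_{p_0} \subset X$ such that $\|p_0 - x\| \le \rho/2$, see also Remark 2, and hence $e^{-A_t(x)}$ is non-negligible for some $x \in N_{p_0}$).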
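The two elementary exponential bounds recalled in the proof above can be confirmed with a coarse grid search. This is only a numerical sanity check over a finite range (the grid limits are arbitrary assumptions); both functions decay beyond their maxima, so the suprema over $[1, \infty)$ are attained well inside the range searched.

```python
import math

def sup_on_grid(f, lo=1.0, hi=60.0, steps=59000):
    """Maximum of f over an evenly spaced grid on [lo, hi]."""
    return max(f(lo + (hi - lo) * k / steps) for k in range(steps + 1))

sup_full = sup_on_grid(lambda b: b * b * math.exp(-b))        # maximized near b = 2
sup_half = sup_on_grid(lambda b: b * b * math.exp(-b / 2.0))  # maximized near b = 4
```

Calculus gives the exact maxima $4e^{-2}$ and $16e^{-2}$, both comfortably below the constants 1 and 3 used in the proof.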


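A.7 Proof of Lemma 7

Note that by definition, $\|(D\Psi)_t(u)\|^2 = \|(D\Psi_{|X|,n})_t(u)\|^2$. Thus, using Eq. (3) and expanding the recursion, we have

\begin{align*}
\|(D\Psi)_t(u)\|^2 &= \|(D\Psi_{|X|,n})_t(u)\|^2 \\
&= \|(D\Psi_{|X|,n-1})_t(u)\|^2 + \Lambda_{\Phi(x_{|X|})}(t)\,(C^{x_{|X|}} u)_n^2 + Z_{|X|,n} \\
&\ \ \vdots \\
&= \|(D\Psi_{0,n})_t(u)\|^2 + \Big[\sum_{i=1}^{|X|} \Lambda_{\Phi(x_i)}(t) \sum_{j=1}^{n} (C^{x_i} u)_j^2\Big] + \sum_{i,j} Z_{i,j}.
\end{align*}

Note that $(D\Psi_{i,0})_t(u) := (D\Psi_{i-1,n})_t(u)$. Now recalling that $\|(D\Psi_{0,n})_t(u)\|^2 = \|u\|^2$ (the base case of the recursion), all we need to show is that $|\sum_{i,j} Z_{i,j}| \le \epsilon/2$. This follows directly from the lemma below.

Lemma 20 Let $\epsilon_0 \le O\big(\epsilon/d(n|X|)^2\big)$, and for any $i, j$, let $\omega_{i,j} \ge \Omega\big((K_{i,j} + (\alpha 9^n/\rho))(nd|X|)^2/\epsilon\big)$ (as per the statement of Lemma 7). Then, for any $i, j$, $|Z_{i,j}| \le \epsilon/2n|X|$.

Proof: Recall that (cf. Eq. (3))

\[ Z_{i,j} = \underbrace{\frac{1}{\omega_{i,j}^2}\sum_k \big(\zeta^{k,4}_{i,j}\big)^2}_{(a)} + \underbrace{2\sum_k \frac{\zeta^{k,4}_{i,j}}{\omega_{i,j}}\big(\zeta^{k,1}_{i,j} + \zeta^{k,2}_{i,j} + \zeta^{k,3}_{i,j}\big)}_{(b)} + \underbrace{2\sum_k \zeta^{k,1}_{i,j}\zeta^{k,2}_{i,j}}_{(c)} + \underbrace{2\sum_k \zeta^{k,1}_{i,j}\zeta^{k,3}_{i,j}}_{(d)}. \]

Term (a): Note that $|\sum_k (\zeta^{k,4}_{i,j})^2| \le O\big(d^3(K_{i,j} + (\alpha 9^n/\rho))^2\big)$ (cf. Lemma 21 (iv)). By our choice of $\omega_{i,j}$, we have term (a) at most $O(\epsilon/n|X|)$.

Term (b): Note that $|\zeta^{k,1}_{i,j} + \zeta^{k,2}_{i,j} + \zeta^{k,3}_{i,j}| \le O(n|X| + (\epsilon/dn|X|))$ (by noting Lemma 21 (i)-(iii), recalling the choice of $\omega_{i,j}$, and summing over all $i', j'$). Thus, $|\sum_k \zeta^{k,4}_{i,j}(\zeta^{k,1}_{i,j} + \zeta^{k,2}_{i,j} + \zeta^{k,3}_{i,j})| \le O\big((d^2(K_{i,j} + (\alpha 9^n/\rho)))(n|X| + (\epsilon/dn|X|))\big)$. Again, by our choice of $\omega_{i,j}$, term (b) is at most $O(\epsilon/n|X|)$.

Terms (c) and (d): We focus on bounding term (c) (the steps for bounding term (d) are the same). Note that $|\sum_k \zeta^{k,1}_{i,j}\zeta^{k,2}_{i,j}| \le 4|\sum_k \zeta^{k,1}_{i,j}(\eta_{i,j}(t))_k|$. Now, observe that $(\zeta^{k,1}_{i,j})_{k=1,\ldots,2d+3}$ is a tangent vector with length at most $O(dn|X| + (\epsilon/dn|X|))$ (cf. Lemma 21 (i)). Thus, by noting that $\eta_{i,j}$ is almost normal (with quality of approximation $\epsilon_0$), we have term (c) at most $O(\epsilon/n|X|)$.

By choosing the constants in the order terms appropriately, we can get the lemma.

Lemma 21 Let $\zeta^{k,1}_{i,j}$, $\zeta^{k,2}_{i,j}$, $\zeta^{k,3}_{i,j}$, and $\zeta^{k,4}_{i,j}$ be as defined in Eq. (3). Then for all $1 \le i \le |X|$ and $1 \le j \le n$, we have

(i) $|\zeta^{k,1}_{i,j}| \le 1 + 8n|X| + \sum_{i'=1}^{i}\sum_{j'=1}^{j-1} O\big(d(K_{i',j'} + (\alpha 9^n/\rho))/\omega_{i',j'}\big)$,
(ii) $|\zeta^{k,2}_{i,j}| \le 4$,
(iii) $|\zeta^{k,3}_{i,j}| \le 4$,
(iv) $|\zeta^{k,4}_{i,j}| \le O\big(d(K_{i,j} + (\alpha 9^n/\rho))\big)$.

Proof: First note that for any $\|u\| \le 1$ and for any $x_i \in X$, $1 \le j \le n$ and $1 \le l \le d$, we have $|\sum_l C^{x_i}_{j,l} u_l| = |(C^{x_i} u)_j| \le 4$ (cf. Lemma 23 (b) and Corollary 5).

Noting that for all $i$ and $j$, $\|\eta_{i,j}\| = \|\nu_{i,j}\| = 1$, we have $|\zeta^{k,2}_{i,j}| \le 4$ and $|\zeta^{k,3}_{i,j}| \le 4$.

Observe that $\zeta^{k,4}_{i,j} = \sum_l u_l R^{k,l}_{i,j}$. For all $i$, $j$, $k$ and $l$, note that i) $\|d\eta_{i,j}(t)/dt^l\| \le K_{i,j}$ and $\|d\nu_{i,j}(t)/dt^l\| \le K_{i,j}$ and ii) $|d\Lambda^{1/2}_{\Phi(x_i)}(t)/dt^l| \le O(\alpha 9^n/\rho)$ (cf. Lemma 19). Thus we have $|\zeta^{k,4}_{i,j}| \le O\big(d(K_{i,j} + (\alpha 9^n/\rho))\big)$.

Now for any $i, j$, note that $\zeta^{k,1}_{i,j} = \sum_l u_l \big(d\Psi_{i,j-1}(t)/dt^l\big)_k$. Thus by recursively expanding, $|\zeta^{k,1}_{i,j}| \le 1 + 8n|X| + \sum_{i'=1}^{i}\sum_{j'=1}^{j-1} O\big(d(K_{i',j'} + (\alpha 9^n/\rho))/\omega_{i',j'}\big)$.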

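A.8 Proof of Lemma 8

We start by stating the following useful observations:

Lemma 22 Let $A$ be a linear operator such that $\max_{\|x\|=1}\|Ax\| \le \delta_{\max}$. Let $u$ be a unit-length vector. If $\|Au\| \ge \delta_{\min} > 0$, then for any unit-length vector $v$ such that $|u \cdot v| \ge 1 - \epsilon$, we have

\[ 1 - \frac{\delta_{\max}\sqrt{2\epsilon}}{\delta_{\min}} \le \frac{\|Av\|}{\|Au\|} \le 1 + \frac{\delta_{\max}\sqrt{2\epsilon}}{\delta_{\min}}. \]

Proof: Let $v' = v$ if $u \cdot v > 0$, otherwise let $v' = -v$. Quickly note that $\|u - v'\|^2 = \|u\|^2 + \|v'\|^2 - 2u \cdot v' = 2(1 - u \cdot v') \le 2\epsilon$. Thus, we have,

i. $\|Av\| = \|Av'\| \le \|Au\| + \|A(u - v')\| \le \|Au\| + \delta_{\max}\sqrt{2\epsilon}$,

ii. $\|Av\| = \|Av'\| \ge \|Au\| - \|A(u - v')\| \ge \|Au\| - \delta_{\max}\sqrt{2\epsilon}$.

Noting that $\|Au\| \ge \delta_{\min}$ yields the result.

Lemma 23 Let $x_1, \ldots, x_n \in \mathbb{R}^D$ be a set of orthonormal vectors, $F := [x_1, \ldots, x_n]$ be a $D \times n$ matrix and let $\Phi$ be a linear map from $\mathbb{R}^D$ to $\mathbb{R}^d$ ($n \le d \le D$) such that for all non-zero $a \in \mathrm{span}(F)$ we have $0 < \|\Phi a\| \le \|a\|$. Let $U\Sigma V^{\mathsf T}$ be the thin SVD of $\Phi F$. Define $C = (\Sigma^{-2} - I)^{1/2}U^{\mathsf T}$. Then,

(a) $\|C(\Phi a)\|^2 = \|a\|^2 - \|\Phi a\|^2$, for any $a \in \mathrm{span}(F)$,
(b) $\|C\|^2 \le (1/\sigma^n)^2$, where $\|\cdot\|$ denotes the spectral norm of a matrix and $\sigma^n$ is the $n$th largest singular value of $\Phi F$.

Proof: Note that $FV$ forms an orthonormal basis for the subspace spanned by columns of $F$ that maps to $U\Sigma$ via the mapping $\Phi$. Thus, since $a \in \mathrm{span}(F)$, let $y$ be such that $a = FVy$. Note that i) $\|a\|^2 = \|y\|^2$, ii) $\|\Phi a\|^2 = \|U\Sigma y\|^2 = y^{\mathsf T}\Sigma^2 y$. Now,

\begin{align*}
\|C\Phi a\|^2 &= \|((\Sigma^{-2} - I)^{1/2}U^{\mathsf T})\Phi FVy\|^2 \\
&= \|(\Sigma^{-2} - I)^{1/2}U^{\mathsf T}U\Sigma V^{\mathsf T}Vy\|^2 \\
&= \|(\Sigma^{-2} - I)^{1/2}\Sigma y\|^2 \\
&= y^{\mathsf T}y - y^{\mathsf T}\Sigma^2 y \\
&= \|a\|^2 - \|\Phi a\|^2.
\end{align*}

Now, consider $\|C\|^2$.

\begin{align*}
\|C\|^2 &\le \|(\Sigma^{-2} - I)^{1/2}\|^2\,\|U^{\mathsf T}\|^2 \\
&\le \max_{\|x\|=1} \|(\Sigma^{-2} - I)^{1/2}x\|^2 \\
&\le \max_{\|x\|=1} x^{\mathsf T}\Sigma^{-2}x \\
&= \max_{\|x\|=1} \sum_i x_i^2/(\sigma^i)^2 \\
&\le (1/\sigma^n)^2,
\end{align*}

where $\sigma^i$ are the (top $n$) singular values forming the diagonal matrix $\Sigma$.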

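Lemma 24 Let $M \subset \mathbb{R}^D$ be a compact Riemannian $n$-manifold with condition number $1/\tau$. Pick any $x \in M$ and let $F_x$ be any $n$-dimensional affine space with the property: for any unit vector $v_x$ tangent to $M$ at $x$, and its projection $v_{xF}$ onto $F_x$, $\left| v_x \cdot \frac{v_{xF}}{\|v_{xF}\|} \right| \ge 1 - \delta$. Then for any $p \in M$ such that $\|x - p\| \le \rho \le \tau/2$, and any unit vector $v$ tangent to $M$ at $p$, (with $\xi := (2\rho/\tau) + \delta + 2\sqrt{2\rho\delta/\tau}$)

i. $\left| v \cdot \frac{v_F}{\|v_F\|} \right| \ge 1 - \xi$,

ii. $\|v_F\|^2 \ge 1 - 2\xi$,

iii. $\|v_r\|^2 \le 2\xi$,

where $v_F$ is the projection of $v$ onto $F_x$ and $v_r$ is the residual (i.e. $v = v_F + v_r$ and $v_F \perp v_r$).

Proof: Let $\gamma$ be the angle between $v_F$ and $v$. We will bound this angle.

Let $v_x$ (at $x$) be the parallel transport of $v$ (at $p$) via the (shortest) geodesic path via the manifold connection. Let the angle between vectors $v$ and $v_x$ be $\alpha$. Let $v_{xF}$ be the projection of $v_x$ onto the subspace $F_x$, and let the angle between $v_x$ and $v_{xF}$ be $\beta$. WLOG, we can assume that the angles $\alpha$ and $\beta$ are acute. Then, since $\gamma \le \alpha + \beta \le \pi$, we have that $\left| v \cdot \frac{v_F}{\|v_F\|} \right| = \cos\gamma \ge \cos(\alpha + \beta)$. We bound the individual terms $\cos\alpha$ and $\cos\beta$ as follows.

Now, since $\|p - x\| \le \rho$, using Lemmas 9 and 10, we have $\cos(\alpha) = |v \cdot v_x| \ge 1 - 2\rho/\tau$. We also have $\cos(\beta) = \left| v_x \cdot \frac{v_{xF}}{\|v_{xF}\|} \right| \ge 1 - \delta$. Then, using Lemma 16, we finally get $\left| v \cdot \frac{v_F}{\|v_F\|} \right| = |\cos(\gamma)| \ge 1 - 2\rho/\tau - \delta - 2\sqrt{2\rho\delta/\tau} = 1 - \xi$.

Also note since $1 = \|v\|^2 = \left( v \cdot \frac{v_F}{\|v_F\|} \right)^2 \left\| \frac{v_F}{\|v_F\|} \right\|^2 + \|v_r\|^2$, we have $\|v_r\|^2 = 1 - \left( v \cdot \frac{v_F}{\|v_F\|} \right)^2 \le 2\xi$, and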

$\|v_F\|^2 = 1 - \|v_r\|^2 \ge 1 - 2\xi$.

Now we are in a position to prove Lemma 8. Let $v_F$ be the projection of the unit vector $v$ (at $p$) onto the subspace spanned by (the columns of) $F_x$ and $v_r$ be the residual (i.e. $v = v_F + v_r$ and $v_F \perp v_r$). Then, noting that $p$, $x$, $v$ and $F_x$ satisfy the conditions of Lemma 24 (with $\rho$ in Lemma 24 replaced with $2\rho$ from the statement of Lemma 8), we have (with $\xi := (4\rho/\tau) + \delta + 4\sqrt{\rho\delta/\tau}$)

a) $\left| v \cdot \frac{v_F}{\|v_F\|} \right| \ge 1 - \xi$,

b) $\|v_F\|^2 \ge 1 - 2\xi$,

c) $\|v_r\|^2 \le 2\xi$.

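We can now bound the required quantity $\|C^x u\|^2$. Note that

\begin{align*}
\|C^x u\|^2 = \|C^x \Phi v\|^2 &= \|C^x \Phi (v_F + v_r)\|^2 \\
&= \|C^x \Phi v_F\|^2 + \|C^x \Phi v_r\|^2 + 2\, C^x \Phi v_F \cdot C^x \Phi v_r \\
&= \underbrace{\|v_F\|^2 - \|\Phi v_F\|^2}_{(a)} + \underbrace{\|C^x \Phi v_r\|^2}_{(b)} + \underbrace{2\, C^x \Phi v_F \cdot C^x \Phi v_r}_{(c)},
\end{align*}

where the last equality is by observing that $v_F$ is in the span of $F_x$ and applying Lemma 23 (a). We now bound the terms (a), (b), and (c) individually.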

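Term (a): Note that $1 - 2\xi \le \|v_F\|^2 \le 1$ and observing that $\Phi$ satisfies the conditions of Lemma 22 with $\delta_{\max} = (2/3)\sqrt{D/d}$, $\delta_{\min} = (1/2) \le \|\Phi v\|$ (cf. Lemma 4) and $\left| v \cdot \frac{v_F}{\|v_F\|} \right| \ge 1 - \xi$, we have (recall $\|\Phi v\| = \|u\| \le 1$)

\begin{align*}
\|v_F\|^2 - \|\Phi v_F\|^2 &\le 1 - \|v_F\|^2 \left\| \Phi \tfrac{v_F}{\|v_F\|} \right\|^2 \\
&\le 1 - (1 - 2\xi) \left\| \Phi \tfrac{v_F}{\|v_F\|} \right\|^2 \\
&\le 1 + 2\xi - \left\| \Phi \tfrac{v_F}{\|v_F\|} \right\|^2 \\
&\le 1 + 2\xi - \left(1 - (4/3)\sqrt{2\xi D/d}\right)^2 \|\Phi v\|^2 \\
&\le 1 - \|u\|^2 + \left(2\xi + (8/3)\sqrt{2\xi D/d}\right), \tag{7}
\end{align*}

where the fourth inequality is by using Lemma 22. Similarly, in the other direction

\begin{align*}
\|v_F\|^2 - \|\Phi v_F\|^2 &\ge 1 - 2\xi - \|v_F\|^2 \left\| \Phi \tfrac{v_F}{\|v_F\|} \right\|^2 \\
&\ge 1 - 2\xi - \left\| \Phi \tfrac{v_F}{\|v_F\|} \right\|^2 \\
&\ge 1 - 2\xi - \left(1 + (4/3)\sqrt{2\xi D/d}\right)^2 \|\Phi v\|^2 \\
&\ge 1 - \|u\|^2 - \left(2\xi + (32/9)\xi (D/d) + (8/3)\sqrt{2\xi D/d}\right). \tag{8}
\end{align*}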

[Figure 7 (image not reproduced): the manifold $\Phi M$ with sample points $\Phi x_1, \ldots, \Phi x_4$ and the point $p$, sitting inside $N = \mathbb{R}^d$, which is in turn embedded in $\mathbb{R}^{2d+3}$.]

Figure 7: Basic setup for computing the normals to the underlying $n$-manifold $\Phi M$ at the point of interest $p$. Observe that even though it is difficult to find vectors normal to $\Phi M$ at $p$ within the containing space $\mathbb{R}^d$ (because we only have a finite-size sample from $\Phi M$, viz. $\Phi x_1$, $\Phi x_2$, etc.), we can treat the point $p$ as part of the bigger ambient manifold $N$ ($= \mathbb{R}^d$, that contains $\Phi M$) and compute the desired normals in a space that contains $N$ itself. Now, for each $i, j$ iteration of Algorithm II, $\Psi_{i,j}$ acts on the entire $N$, and since we have complete knowledge about $N$, we can compute the desired normals.

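Term (b): Note that for any $x$, $\|\Phi x\| \le (2/3)\sqrt{D/d}\,\|x\|$. We can apply Lemma 23 (b) with $\sigma^n_x \ge 1/4$ (cf. Corollary 5) and noting that $\|v_r\|^2 \le 2\xi$, we immediately get

\[ 0 \le \|C^x \Phi v_r\|^2 \le 4^2 \cdot (4/9)(D/d)\,\|v_r\|^2 \le (128/9)(D/d)\,\xi. \tag{9} \]

Term (c): Recall that for any $x$, $\|\Phi x\| \le (2/3)\sqrt{D/d}\,\|x\|$, and using Lemma 23 (b) we have that $\|C^x\|^2 \le 16$ (since $\sigma^n_x \ge 1/4$, cf. Corollary 5).

Now let $a := C^x \Phi v_F$ and $b := C^x \Phi v_r$. Then $\|a\| = \|C^x \Phi v_F\| \le \|C^x\| \|\Phi v_F\| \le 4$, and $\|b\| = \|C^x \Phi v_r\| \le (8/3)\sqrt{2\xi D/d}$ (see Eq. (9)).

Thus, $|2 a \cdot b| \le 2 \|a\| \|b\| \le 2 \cdot 4 \cdot (8/3)\sqrt{2\xi D/d} = (64/3)\sqrt{2\xi D/d}$. Equivalently,

\[ -(64/3)\sqrt{2\xi D/d} \;\le\; 2\, C^x \Phi v_F \cdot C^x \Phi v_r \;\le\; (64/3)\sqrt{2\xi D/d}. \tag{10} \]

Combining (7)-(10), and noting $d \le D$, yields the lemma.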
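For reference, here is a sketch of how the three estimates add up. It writes $\xi$ for the angle parameter introduced just before items a)-c), and uses only the decomposition $\|C^x u\|^2 = (a) + (b) + (c)$ together with (7)-(10). The constants are obtained by direct summation and are not quoted from the statement of Lemma 8, which may present them in a simplified form.

```latex
% Upper bound: (7) + upper half of (9) + upper half of (10)
\|C^x u\|^2 \;\le\; 1 - \|u\|^2
  + \Big( 2\xi + \tfrac{128}{9}\,\xi\,\tfrac{D}{d} + 24\sqrt{2\xi D/d} \Big),
\qquad \text{since } \tfrac{8}{3} + \tfrac{64}{3} = 24.

% Lower bound: (8) + lower half of (9), which is 0, + lower half of (10)
\|C^x u\|^2 \;\ge\; 1 - \|u\|^2
  - \Big( 2\xi + \tfrac{32}{9}\,\xi\,\tfrac{D}{d} + 24\sqrt{2\xi D/d} \Big).
```

Both error terms vanish as $\xi \to 0$ for fixed $D/d$, which is the sense in which $\|C^x u\|^2 \approx 1 - \|u\|^2$.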

A.9 Computing the Normal Vectors

The success of the second embedding technique crucially depends upon finding (at each iteration step) a pair of mutually orthogonal unit vectors that are normal to the embedding of manifold $M$ (from the previous iteration step) at a given point $p$. At first glance, finding such normal vectors seems infeasible since we only have access to a finite-size sample $X$ from $M$. The saving grace comes from noting that the corrections are applied to the $n$-dimensional manifold $\Phi(M)$, which is actually a submanifold of the $d$-dimensional space $\mathbb{R}^d$. Let us denote this space $\mathbb{R}^d$ as a flat $d$-manifold $N$ (containing our manifold of interest $\Phi(M)$). Note that even though we only have partial information about $\Phi(M)$ (since we only have samples from it), we have full information about $N$ (since it is the entire space $\mathbb{R}^d$). This means that, given some point of interest $p \in \Phi(M) \subset N$, a vector normal to $N$ (at $p$) is automatically a vector normal to $\Phi(M)$ (at $p$). Of course, to find two mutually orthogonal normals to a $d$-manifold $N$, $N$ itself needs to be embedded in a larger-dimensional Euclidean space (although embedding into dimension $d + 2$ should suffice, for computational reasons we will embed $N$ into Euclidean space of dimension $2d + 3$). This is precisely the first thing we do before applying any corrections (cf. Step 2 of Embedding II in Section 4). See Figure 7 for an illustration of the setup before finding any normals.

Now for every iteration of the algorithm, note that we have complete knowledge of $N$ and exactly what function (namely $\Psi_{i,j}$ for iteration $i, j$) is being applied to $N$. Thus, with additional computational effort, one can compute the necessary normal vectors.

More specifically, we can estimate a pair of mutually orthogonal unit vectors that are normal to $\Psi_{i,j}(N)$ at $p$ (for any step $i, j$) as follows.

Algorithm 4 Compute Normal Vectors

Preprocessing Stage:

1: Let $\eta^{\mathrm{rand}}_{i,j}$ and $\nu^{\mathrm{rand}}_{i,j}$ be vectors in $\mathbb{R}^{2d+3}$ drawn independently at random from the surface of the unit-sphere (for $1 \le i \le |X|$, $1 \le j \le n$).

Compute Normals: For any point of interest $p \in M$, let $t := \Phi p$ denote its projection into $\mathbb{R}^d$. Now, for any iteration $i, j$ (where $1 \le i \le |X|$, and $1 \le j \le n$), we shall assume that vectors $\eta$ and $\nu$ up to iterations $i, j-1$ are already given. Then we can compute the (approximated) normals $\eta_{i,j}(t)$ and $\nu_{i,j}(t)$ for the iteration $i, j$ as follows.

1: Let $\Delta > 0$ be the quality of approximation.
2: for $k = 1, \ldots, d$ do
3:   Approximate the $k$th tangent vector as
       $T^k := \dfrac{\Psi_{i,j-1}(t + \Delta e^k) - \Psi_{i,j-1}(t)}{\Delta}$,
     where $\Psi_{i,j-1}$ is as defined in Section 5.3, and $e^k$ is the $k$th standard vector.
4: end for
5: Let $\eta = \eta^{\mathrm{rand}}_{i,j}$, and $\nu = \nu^{\mathrm{rand}}_{i,j}$.
6: Use the Gram-Schmidt orthogonalization process to extract $\hat\eta$ (from $\eta$) that is orthogonal to vectors $\{T^1, \ldots, T^d\}$.
7: Use the Gram-Schmidt orthogonalization process to extract $\hat\nu$ (from $\nu$) that is orthogonal to vectors $\{T^1, \ldots, T^d, \hat\eta\}$.
8: return $\hat\eta / \|\hat\eta\|$ and $\hat\nu / \|\hat\nu\|$ as mutually orthogonal unit vectors that are approximately normal to $\Psi_{i,j-1}(\Phi M)$ at $\Psi_{i,j-1}(t)$.
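The steps of Algorithm 4 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: `psi` is a hypothetical stand-in for the map from the previous iteration (here a made-up smooth map from $\mathbb{R}^2$ to $\mathbb{R}^7$, i.e. $d = 2$ and $2d + 3 = 7$), `delta` plays the role of the finite-difference step, and the function names are illustrative only.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    n = math.sqrt(dot(v, v))
    return [vi / n for vi in v]

def remove_components(v, basis):
    """Gram-Schmidt step: subtract from v its components along an orthonormal basis."""
    for q in basis:
        c = dot(v, q)
        v = [vi - c * qi for vi, qi in zip(v, q)]
    return v

def approx_normals(psi, t, eta_rand, nu_rand, delta=1e-6):
    """Return two orthonormal vectors approximately normal to the image of psi at psi(t)."""
    base = psi(t)
    basis = []  # orthonormal basis of the approximate tangent space
    for k in range(len(t)):
        shifted = list(t)
        shifted[k] += delta
        # Forward-difference approximation of the k-th tangent vector T^k.
        T_k = [(a - b) / delta for a, b in zip(psi(shifted), base)]
        basis.append(normalize(remove_components(T_k, basis)))
    eta = normalize(remove_components(list(eta_rand), basis))
    nu = normalize(remove_components(list(nu_rand), basis + [eta]))
    return eta, nu

def psi(t):
    """Hypothetical smooth map R^2 -> R^7, used only for illustration."""
    t0, t1 = t
    return [t0, t1, math.sin(t0), math.cos(t1), t0 * t1, 0.0, 0.0]

rng = random.Random(1)
# Preprocessing stage: two random unit vectors in R^7 (Gaussian draws, normalized).
eta_rand = normalize([rng.gauss(0, 1) for _ in range(7)])
nu_rand = normalize([rng.gauss(0, 1) for _ in range(7)])

t = [0.3, -0.7]
eta, nu = approx_normals(psi, t, eta_rand, nu_rand)
print(abs(dot(eta, nu)) < 1e-9)
```

The tangent vectors are orthonormalized first because the $T^k$ are generally not mutually orthogonal; removing components along an orthonormal basis of their span is what makes the extracted vectors orthogonal to every $T^k$ at once.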

A few remarks are in order.

Remark 7 The choice of target dimension of size $2d + 3$ (instead of $d + 2$) ensures that a pair of random unit-vectors $\eta$ and $\nu$ are not parallel to any vector in the tangent bundle of $\Psi_{i,j-1}(N)$ with probability 1. This again follows from Sard's theorem, and is the key observation in reducing the embedding size in Whitney's embedding [Whi36]. This also ensures that our orthogonalization process (Steps 6 and 7) will not result in a null vector.

Remark 8 By picking $\Delta$ sufficiently small, we can approximate the normals $\eta$ and $\nu$ arbitrarily well by approximating the tangents $T^1, \ldots, T^d$ well.

Remark 9 For each iteration $i, j$, the vectors $\hat\eta / \|\hat\eta\|$ and $\hat\nu / \|\hat\nu\|$ that are returned (in Step 8) are a smooth modification to the starting vectors $\eta^{\mathrm{rand}}_{i,j}$ and $\nu^{\mathrm{rand}}_{i,j}$ respectively. Now, since we use the same starting vectors $\eta^{\mathrm{rand}}_{i,j}$ and $\nu^{\mathrm{rand}}_{i,j}$ regardless of the point of application ($t = \Phi p$), it follows that the respective directional derivatives of the returned vectors are bounded as well.

By noting Remarks 8 and 9, the approximate normals we return satisfy the conditions needed for Embedding II (see our discussion in Section 5.3).