Local Image Histograms for Learning Exposure Fusion

Justin Solomon and Ravi Sankar

1 Introduction

Local image histograms provide valuable descriptors of the behavior of an image around a given pixel. Rather than bucketing intensity values for all the pixels in an image, local histograms are defined separately for each pixel and represent the distribution of nearby intensities. Several image filters, including the median, mean-shift, and bilateral, can be described and computed using these histograms.

At the SIGGRAPH 2010 conference, a new technique was introduced for efficiently computing such local histograms at all points in an image [2]. While previous algorithms had time complexity proportional to the support of the histogram, the new approach always runs in O(1) time per pixel for each histogram bin, independent of the support, taking advantage of efficient Gaussian blur operations to simplify the local histogram computation process.

While [2] applies local image histograms to the re-expression of previously-known image filters, these histograms also provide interesting features that could be used to apply machine learning to computational photography. In this project, we propose the application of this technique to learning reasonable approaches to exposure fusion, in which images of a scene taken at different exposures are linearly combined at each pixel to generate a meaningful output using the entire dynamic range of the display device.

Ideally, although there obviously is a strong correlation between the histograms and values of adjacent pixels in a photograph, the histograms provide sufficiently strong descriptions of local behavior that this dependence can be ignored. Thus, we use examples of successfully-fused images and their per-pixel histograms as training data to learn a function mapping a single pixel and its corresponding histogram in each of the different exposures to a single output. This dependence likely is nonlinear, since local histograms often exhibit complex or even bimodal distributions. For this reason, we apply the “Least-Squares SVM” kernelized regression technique, which implicitly makes use of high-order features [3]. In the particular setting of image processing with locally-weighted histograms, evaluation of the learned exposure fusion function can be made considerably more efficient by taking advantage of the fact that histograms change with relatively low frequency across most images; a key contribution of this project is a new algorithm for efficient evaluation that makes image-processing-by-example a more feasible task.

This project represents one of the first data-driven techniques in computational photography introduced to the graphics community. Its timings compare favorably with comparable approaches and more standard image processing techniques, and the output exhibits relatively few undesirable artifacts.

2 Background

Local image histograms are well-explored objects in computer graphics and provide a generalized framework for expressing several common image filters. The histograms are stored at all pixels of an image and represent the distribution of nearby intensities. A general theory of image processing can be built around the processing and manipulation of these histograms, providing a common language used to describe various operations. For instance, the median filter seeks the 50% point of the CDF of each pixel’s local histogram, the mean-shift snaps to nearby histogram peaks, and the bilateral can be written as the ratio of two histogram values. These simple operations provide strong evidence that local histograms are valuable features in themselves that contain considerable information about a pixel and its neighborhood.
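As a small illustration of the median-filter description above, the following sketch finds the 50% point of the CDF of a single local histogram. The function name, the bin centers, and the sample counts are assumptions made for this example; they are not taken from [2].

```python
def histogram_median(hist, bin_centers):
    """Return the center of the first bin whose cumulative weight reaches 50%."""
    total = sum(hist)
    running = 0.0
    for weight, center in zip(hist, bin_centers):
        running += weight
        if running >= 0.5 * total:
            return center
    return bin_centers[-1]

# Hypothetical local histogram of one pixel: five bins over [0, 1].
centers = [0.1, 0.3, 0.5, 0.7, 0.9]
weights = [1.0, 1.0, 5.0, 2.0, 1.0]
median_value = histogram_median(weights, centers)
```

Applying this lookup to the histogram stored at every pixel would give a median-filtered image at the resolution of the bin centers; a practical filter would presumably interpolate within the bin.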

Here, we apply the technique introduced in [2] for computing per-pixel local histograms. While naïve methods have existed for some time in which the intensities of a given neighborhood of a pixel are simply binned, these methods have two severe drawbacks. First, the binning procedure takes longer depending on neighborhood size; “local” histograms representing hundreds of nearby pixels will take much more time to compute than histograms of the one-ring of a pixel. More importantly, these sorts of local histograms are anisotropic, often accidentally favoring certain directions over others by using square neighborhoods and 1-0 weights. Instead, [2] describes an efficient technique for using isotropic, weighted neighborhoods around each point. The algorithm can be described using Gaussian blurs of the input image passed through various look-up tables, and thus the local histograms for all pixels can be computed in O(nmh) time for an n×m image with h histogram bins.
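The look-up-table-then-blur structure described above can be sketched as follows. This is a minimal illustration in plain Python, not the implementation from [2]: the Gaussian soft-binning function, the truncated FIR blur (which is not O(1) per pixel, unlike a fast IIR blur), and all parameter names are assumptions chosen for readability.

```python
import math

def gaussian_kernel_1d(sigma):
    """Normalized 1-D Gaussian weights, truncated at three standard deviations."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(k * k) / (2 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_rows(img, kernel):
    """Blur every row, replicating edge pixels at the boundaries."""
    radius = len(kernel) // 2
    width = len(img[0])
    return [[sum(kernel[k + radius] * row[min(max(x + k, 0), width - 1)]
                 for k in range(-radius, radius + 1))
             for x in range(width)]
            for row in img]

def transpose(img):
    return [list(col) for col in zip(*img)]

def gaussian_blur(img, sigma):
    """Separable Gaussian blur: rows first, then columns."""
    kernel = gaussian_kernel_1d(sigma)
    return transpose(blur_rows(transpose(blur_rows(img, kernel)), kernel))

def local_histograms(img, bins, spatial_sigma, range_sigma):
    """Return hist[b][y][x], the weight of bin b in the neighborhood of pixel (x, y)."""
    hist = []
    for b in range(bins):
        center = (b + 0.5) / bins
        # Look-up table step: soft membership of every pixel intensity in this bin.
        lut = [[math.exp(-((v - center) ** 2) / (2 * range_sigma ** 2)) for v in row]
               for row in img]
        # Spatial step: an isotropic Gaussian blur gathers memberships of nearby pixels.
        hist.append(gaussian_blur(lut, spatial_sigma))
    return hist

# Tiny grayscale test image with intensities in [0, 1]: dark left half, bright right half.
image = [[0.15] * 4 + [0.85] * 4 for _ in range(6)]
hist = local_histograms(image, bins=10, spatial_sigma=1.0, range_sigma=0.05)
```

Each bin costs one table look-up and one blur over the whole image, which is where the factor of h in the O(nmh) bound comes from once the blur itself takes constant time per pixel.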

Of course, there is no reason why an exposure fusion function should be a linear function of the counts in local histogram bins. Thus, it is necessary to apply a nonlinear regression technique that is able to find more complex relationships between input and output variables. Luckily, although we have a relatively high-dimensional problem, a glut of training data is available: every image contains a diverse set of features and thousands of pixels with unique (if similar) histograms.

Still, the regression problem here is difficult, since it involves fitting a fairly complex function of several variables. Several parametric and nonparametric techniques were implemented and tested, including regression trees, Gaussian processes, and support vector regression; for the most part, these techniques were fairly extreme examples of either under-fitting or unacceptable timing and were discarded. One algorithm, however, that performed fairly well in both arenas is the least-squares support vector machine (LS-SVM), introduced in [4]. The LS-SVM attempts to re-express a least-squares problem similar to Gaussian process regression in the language of support vector machines. LS-SVMs tend to produce considerably more support vectors, raising the amount of time it takes to evaluate the regression function, but produce reliable fits for exposure fusion.
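For reference, the standard LS-SVM regression formulation from the literature can be written as below; it is not restated in this report. The symbol $C$ for the regularization constant is an assumption of this note (the LS-SVM literature often writes $\gamma$, which this report reserves for the kernel width), and $\varphi$ denotes the implicit feature map of the kernel $K$.

```latex
\begin{aligned}
&\min_{w,\,b,\,e}\ \tfrac{1}{2}\, w^\top w + \tfrac{C}{2} \sum_{i=1}^{n} e_i^2
\quad \text{subject to} \quad y_i = w^\top \varphi(x^{(i)}) + b + e_i,
\quad i = 1, \ldots, n, \\[4pt]
&\begin{bmatrix} 0 & \mathbf{1}^\top \\ \mathbf{1} & \Omega + C^{-1} I \end{bmatrix}
\begin{bmatrix} b \\ \alpha \end{bmatrix}
=
\begin{bmatrix} 0 \\ y \end{bmatrix},
\qquad \Omega_{ij} = K\!\left(x^{(i)}, x^{(j)}\right).
\end{aligned}
```

Because the optimality conditions give $\alpha_i = C e_i$, almost every training point ends up with a nonzero coefficient, which is consistent with the observation that LS-SVMs produce many support vectors.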

3 Performing Fusion

In the end, performing exposure fusion from the LS-SVM output involves evaluating the following function at each pixel:

$$f(x) = b + \sum_{i=1}^{m} \alpha_i K(x, x^{(i)}) \tag{1}$$

where $x^{(1)}, \ldots, x^{(m)}$ is the set of support vectors, $b$ and $\{\alpha_i\}$ are values from regression, and $K$ is the kernel function. For this project, we use the Gaussian radial basis functions $K(x, y) = \exp(-\gamma \|x - y\|^2)$ as kernels, although the algorithm easily could be adapted to other kernel functions.
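A direct evaluation of Equation 1 with the Gaussian radial basis kernel might look like the sketch below. The function names and the toy support vectors, coefficients, and $\gamma$ are illustrative assumptions, not values from the trained model.

```python
import math

def rbf_kernel(x, y, gamma):
    """Gaussian radial basis function K(x, y) = exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

def evaluate(x, support_vectors, alphas, b, gamma):
    """Equation 1: f(x) = b + sum_i alpha_i * K(x, x_i)."""
    return b + sum(a * rbf_kernel(x, sv, gamma)
                   for a, sv in zip(alphas, support_vectors))

# Toy two-dimensional example with two support vectors.
support_vectors = [[0.0, 0.0], [1.0, 0.0]]
alphas = [0.5, -0.25]
b = 0.1
gamma = 2.0
value = evaluate([0.0, 0.0], support_vectors, alphas, b, gamma)
```

Each evaluation costs one distance computation and one exponential per support vector, so the total work per image scales with the number of pixels times m.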

Since the regression function $f$ is evaluated at each pixel of the image, it is highly important to make the evaluation as efficient as possible. Thus, before describing the entire exposure fusion algorithm, we proceed by describing an algorithm for efficient evaluation of $f$.

Substituting the Gaussian radial basis function into Equation 1, we find our final expression for the exposure fusion function:

$$f(x) = b + \sum_{i=1}^{m} \alpha_i e^{-\gamma \|x - x^{(i)}\|^2} \tag{2}$$

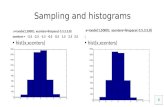

For exposure fusion, our feature vector $x$ can be written as the sum of orthogonal vectors $x = x_{\text{hist}} + x_{\text{rgb}}$, where $x_{\text{hist}}$ contains histogram samples from the different exposure images and $x_{\text{rgb}}$ contains the pixel color in each exposure. The key observation here is that $x_{\text{hist}}$ changes with relatively low frequency across the image, at a rate determined by the neighborhood size of the histograms; intuitively this makes sense, since the sets of intensities near two adjacent pixels should have considerable overlap. Thus, $x_{\text{hist}}$ can be computed and manipulated in a much smaller image than the full-sized output.

In particular, we expand the exponent as follows:

$$\|x - x^{(i)}\|^2 = \|x\|^2 - 2\, x_{\text{hist}} \cdot x^{(i)}_{\text{hist}} - 2\, x_{\text{rgb}} \cdot x^{(i)}_{\text{rgb}} + \|x^{(i)}\|^2$$

The $\|x^{(i)}\|^2$ term can be precomputed for each data point $x^{(i)}$ ahead of time. Similarly, the $\|x\|^2$ term can be computed once for an input $x$ before evaluating the sum over $i$. These simplifications leave only the two inner product terms. The $x_{\text{hist}} \cdot x^{(i)}_{\text{hist}}$ term comprises the most computational work if computed directly, since there are 10-20 samples in histogram space over multiple exposures that must be multiplied. Fortunately, as argued above, the histogram dot product terms can be computed on a lower-resolution grid and upsampled since they change with low frequency; this leaves only the rgb inner products, which take considerably less time.
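The expansion can be checked numerically with a short sketch that evaluates the regression function both directly and through the expanded squared distance. All names and the small three-bin, one-exposure vectors are assumptions for illustration; the real features have many more entries.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def precompute_sq_norms(sv_hist, sv_rgb):
    """||x_i||^2 for every support vector, computed once ahead of time."""
    return [dot(h, h) + dot(c, c) for h, c in zip(sv_hist, sv_rgb)]

def evaluate_expanded(x_hist, x_rgb, sv_hist, sv_rgb, sv_sq_norms, alphas, b, gamma):
    x_sq_norm = dot(x_hist, x_hist) + dot(x_rgb, x_rgb)  # once per pixel
    total = b
    for h_i, c_i, n_i, a_i in zip(sv_hist, sv_rgb, sv_sq_norms, alphas):
        hist_dot = dot(x_hist, h_i)  # the term that may come from a low-resolution grid
        rgb_dot = dot(x_rgb, c_i)    # cheap: three entries per exposure
        sq_dist = x_sq_norm - 2.0 * hist_dot - 2.0 * rgb_dot + n_i
        total += a_i * math.exp(-gamma * sq_dist)
    return total

def evaluate_direct(x_hist, x_rgb, sv_hist, sv_rgb, alphas, b, gamma):
    x = x_hist + x_rgb  # list concatenation of the two orthogonal parts
    total = b
    for h_i, c_i, a_i in zip(sv_hist, sv_rgb, alphas):
        sv = h_i + c_i
        total += a_i * math.exp(-gamma * sum((p - q) ** 2 for p, q in zip(x, sv)))
    return total

sv_hist = [[0.2, 0.5, 0.3], [0.6, 0.1, 0.3]]
sv_rgb = [[0.1, 0.2, 0.3], [0.9, 0.8, 0.7]]
alphas, b, gamma = [0.7, -0.4], 0.05, 1.5
x_hist, x_rgb = [0.3, 0.4, 0.3], [0.2, 0.2, 0.2]

norms = precompute_sq_norms(sv_hist, sv_rgb)
fast = evaluate_expanded(x_hist, x_rgb, sv_hist, sv_rgb, norms, alphas, b, gamma)
slow = evaluate_direct(x_hist, x_rgb, sv_hist, sv_rgb, alphas, b, gamma)
```

The two results agree up to floating-point rounding; the benefit of the expanded form is that `hist_dot` no longer has to be recomputed at full resolution.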

The expansion above moves the learned exposure function from an intractable curiosity to a practical approach that can be applied to photographs in reasonable time. To demonstrate that approximating $x_{\text{hist}} \cdot x^{(i)}_{\text{hist}}$ on a low-resolution grid does not significantly affect output, Figure 1 compares upsampled and exact versions of these values to show how similar they are.
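The effect of the low-resolution approximation can be illustrated on a single scanline. In the sketch below, the smoothly varying two-bin histogram, the support vector, and the use of linear interpolation for upsampling are all assumptions made for the example; the report does not specify the interpolation scheme it uses.

```python
import math

STEP = 10    # coarse grid is 10x smaller, the factor quoted for Figure 1
WIDTH = 101  # pixels along one scanline

def hist_features(p):
    """Hypothetical two-bin local histogram that varies slowly with position p."""
    s = math.sin(2.0 * math.pi * p / 200.0)
    return [0.5 + 0.5 * s, 0.5 - 0.5 * s]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def upsample_linear(coarse, step, width):
    """Linearly interpolate coarse samples (taken every `step` pixels) to `width` pixels."""
    last = len(coarse) - 2
    out = []
    for p in range(width):
        i = min(p // step, last)
        t = (p - i * step) / step
        out.append((1.0 - t) * coarse[i] + t * coarse[i + 1])
    return out

support_hist = [0.3, 0.7]  # histogram part of one hypothetical support vector

# Exact dot products at every pixel versus dot products on the coarse grid only.
exact = [dot(hist_features(p), support_hist) for p in range(WIDTH)]
coarse = [dot(hist_features(p), support_hist) for p in range(0, WIDTH, STEP)]
approx = upsample_linear(coarse, STEP, WIDTH)

max_error = max(abs(a - e) for a, e in zip(approx, exact))
```

For a signal this smooth the interpolation error stays small relative to the range of the values, while the number of histogram dot products drops by the grid factor (squared, in two dimensions).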

Figure 1: (a) Exact and (b) upsampled values of $\|x - x^{(i)}\|$ for a sample image; note that they are virtually indistinguishable other than boundary artifacts, despite the fact that (b) was computed on a grid 10× smaller.

4 Technical Approach

As described earlier, our algorithm learns a function from $\mathbb{R}^N$ to $[0, 1]^3$, where $N$ is the number of input features (histogram samples and pixel colors) and the output is a color in RGB. As input, the learning algorithm takes $p$ input photographs, taken at different exposures and aligned ahead of time using a standard approach. For each image, the algorithm produces local histograms at all the pixels using $h$ bins. The vector for a given pixel is computed by concatenating the histograms from each of the exposure images and appending the color of the pixel at each exposure. Thus, we have $N = p(h + 3)$, since colors are represented using RGB values. In practice, we use 10 buckets ($h = 10$) and three exposure images ($p = 3$), giving $N = 39$ dimensions for each feature vector.
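The layout of the feature vector described in this section can be sketched as follows. The function name and the placeholder histogram and color values are assumptions; only the ordering (all histograms, then all colors) and the dimension count come from the text.

```python
def build_feature_vector(histograms, colors):
    """Concatenate p local histograms (h bins each), then append p RGB colors."""
    features = []
    for hist in histograms:
        features.extend(hist)
    for rgb in colors:
        features.extend(rgb)
    return features

P_EXPOSURES = 3  # p: dark, medium, and light exposures
H_BINS = 10      # h: histogram buckets per exposure

# Placeholder data for one pixel: flat histograms and one RGB color per exposure.
histograms = [[0.1] * H_BINS for _ in range(P_EXPOSURES)]
colors = [[0.05, 0.04, 0.03], [0.40, 0.35, 0.30], [0.95, 0.90, 0.85]]

x = build_feature_vector(histograms, colors)
x_hist, x_rgb = x[:P_EXPOSURES * H_BINS], x[P_EXPOSURES * H_BINS:]
```

Keeping the histogram entries contiguous makes it straightforward to split the vector into the $x_{\text{hist}}$ and $x_{\text{rgb}}$ parts used in Section 3.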

As training data, a number of photos were taken indoors and outdoors on the Stanford campus under varying lighting conditions. These images were fused using two different algorithms, both implemented in the Photomatix Pro software system. Both of these approaches, however, generated artifacts in various parts of the input images. To ensure that training data did not include these incorrect pixels, masks were drawn by hand highlighting parts of input images that were particularly well-fused. Since each image had a relatively large number of pixels and inputs were chosen because of their interest or variety of lighting, it was possible to be relatively conservative in choosing only particularly well-fused pixels for training. Given this conservative selection process as well as the use of two different algorithms for generating training data, an ideal learned model might even outperform either of the two training techniques.

The training data process described above generates more data than is usable or necessary for training a 39-dimensional LS-SVM; each input image contains millions of pixels, many of which are redundant for training purposes since they are similar to pixels nearby. Thus, images were downsized to 800 × 600 before histogram computation. Additionally, input pixels were chosen randomly from the masked regions.
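Random selection of training pixels from a hand-drawn mask could be done along these lines. The function name, the boolean-grid mask representation, and the fixed seed are assumptions for this sketch; the report does not state how many pixels were drawn per image.

```python
import random

def sample_training_pixels(mask, count, seed=0):
    """Pick up to `count` distinct (row, column) positions where the mask is set."""
    candidates = [(y, x)
                  for y, row in enumerate(mask)
                  for x, keep in enumerate(row)
                  if keep]
    rng = random.Random(seed)  # seeded so that a training run is repeatable
    return rng.sample(candidates, min(count, len(candidates)))

# Toy 2 x 3 mask: True marks pixels judged to be well-fused.
mask = [[False, True, True],
        [True, False, True]]
picked = sample_training_pixels(mask, 3, seed=1)
```

Sampling without replacement avoids duplicate training points, which would otherwise add redundant support vectors.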

The learning stage outputs LS-SVM parameters $b$ and $\alpha_i$ described in Equation 1. The number $m$ of support vectors often is close to the number of training points, which makes it slow to evaluate the regression function. To speed up evaluation, the simple pruning technique described in [3] is implemented, in which support vectors corresponding to small $\alpha_i$ are discarded after fitting an LS-SVM; the reduced set of support vectors is used to train a new LS-SVM. This process is repeated several times, each time eliminating 5% of the training data until the set of support vectors is approximately 50% smaller.
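The fit-prune-refit loop described above can be sketched in plain Python as follows. This is an illustrative reimplementation under stated assumptions, not the LS-SVMlab code used in the project: the dense Gaussian-elimination solver, the regularization parameter `reg`, the scalar output, and the synthetic one-dimensional training data are all hypothetical choices.

```python
import math

def rbf(x, y, gamma):
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))

def solve(A, rhs):
    """Solve A z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z

def fit_lssvm(X, y, gamma, reg):
    """Solve the bordered LS-SVM linear system; returns (b, alphas)."""
    n = len(X)
    A = [[0.0] + [1.0] * n]
    for i in range(n):
        row = [1.0] + [rbf(X[i], X[j], gamma) for j in range(n)]
        row[i + 1] += 1.0 / reg  # ridge term on the kernel diagonal
        A.append(row)
    z = solve(A, [0.0] + list(y))
    return z[0], z[1:]

def predict(x, X, b, alphas, gamma):
    return b + sum(a * rbf(x, xi, gamma) for a, xi in zip(alphas, X))

def prune(X, y, gamma, reg, drop_fraction=0.05, target_fraction=0.5):
    """Repeatedly drop the points with the smallest |alpha| and refit."""
    X, y = list(X), list(y)
    target = int(len(X) * target_fraction)
    b, alphas = fit_lssvm(X, y, gamma, reg)
    while len(X) > target:
        n_drop = min(max(1, int(len(X) * drop_fraction)), len(X) - target)
        order = sorted(range(len(X)), key=lambda i: abs(alphas[i]), reverse=True)
        keep = sorted(order[:len(X) - n_drop])
        X = [X[i] for i in keep]
        y = [y[i] for i in keep]
        b, alphas = fit_lssvm(X, y, gamma, reg)  # retrain on the reduced set
    return X, y, b, alphas

# Synthetic example: 40 samples of a sine curve, pruned to 20 support vectors.
GAMMA, REG = 10.0, 100.0
train_x = [[i / 39.0] for i in range(40)]
train_y = [math.sin(2.0 * math.pi * p[0]) for p in train_x]
sv, sv_y, b, alphas = prune(train_x, train_y, GAMMA, REG)
```

Refitting after every pruning round lets the remaining coefficients compensate for the discarded points, which is presumably why the procedure removes only a small fraction at a time.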

Given the trained LS-SVM, running exposure fusion on a series of input images is a relatively straightforward process. Each pixel of the input is independently mapped to $\mathbb{R}^N$ using its local histograms and intensities. Then, the LS-SVM is used to predict the fused intensity in RGB space by evaluating $f$ with the acceleration described in Section 3.

5 Implementation

The method described above was implemented using C++ and Matlab. We implemented [2] as an addition to the ImageStack image processing library in C++,¹ using a fast IIR Gaussian kernel for generating histograms. ImageStack’s built-in RANSAC image alignment method was used to align images before fusion or training to ensure that the same pixel in the different exposures corresponds to the same location in the scene. Alignment and histogram generation take place on the order of seconds for even relatively large-sized images and thus represent a reasonable approach for generating training data and feature vectors on input images; additionally, more suitable camera hardware could reduce alignment computation time or make it unnecessary, if photos could be taken one after the other.

The LS-SVMlab library was used to learn the regression function $f$.² The library not only finds $\alpha_i$ and $b$ but also estimates the standard deviation parameter $\gamma$ using heuristics from the set of training data. LS-SVMlab’s methods for evaluating the resulting function, however, were deemed slow and were re-implemented with the modifications from Section 3 in C++.

We collected 63 test case photographs, creating 42 images’ worth of training data (one for each fusion method implemented in Photomatix Pro); this amount is clearly overkill given the dimensionality of our features and can be used for testing as well as model fitting. Figure 2 shows an example training image.

¹ http://code.google.com/p/imagestack/

² http://www.esat.kuleuven.be/sista/lssvmlab/

Figure 2: A sample training image at (a) dark, (b) medium, and (c) light exposures; Photomatix Pro fused these together to produce (d), and the pixels marked in the mask (e) were selected as potential training data.

Figure 3: An example of successful learned exposure fusion with source images above.

6 Results

Figures 3 and 5 (at the end of the document) show examples of successful learned exposure fusion output. In general, the algorithm outlined above was successful for fusing a variety of input images, many of which contrasted significantly with those found in the training data.

The examples in Figures 3 and 5 were produced after pruning over 50% of the support vectors using the method described in Section 4. Additional pruning led to visible artifacts in the output images, whereas less pruning created no noticeable increase in quality. The final method runs in approximately one to two minutes per megapixel on a laptop equipped with an Intel Core 2 Extreme CPU.

Figure 4 (at the end of the document) shows one example where the learned fusion function was less successful. Visible artifacts appear in regions that are not well-represented in the training data and manifest themselves as low-frequency noise similar to the histogram distance functions. Most artifacts in the other examples provided here are due to problems with the alignment program rather than the exposure fusion function.

7 Conclusions and Future Work

The proposed technique yields a successful approach to exposure fusion that has proven effective on a variety of input images. With the modifications explained in Section 3, the approach can be carried out very efficiently, especially when compared to the hours taken to evaluate the learned filters proposed in [1], the closest comparable paper in the graphics literature.

Although exposure fusion is a valuable application in itself, perhaps the most worthwhile result of this project is a generalized approach to using LS-SVM regression for learning image filters using local histograms. The concept of “learning” an image filter is a valuable one, since artists manipulating photographs may prefer describing a complex filter by example rather than in the mathematical formalism of image processing; additionally, a system that could recognize patterns in how photos are edited could attempt to predict desirable filters before an artist begins his or her work.

A number of filtering applications could benefit from a similar approach. Potential applications of histogram-based image filter learning could include:

• Edge-preserving blurring

• Deconvolution

• Automatic upsampling

• Application of painterly or other artistic effects

• Scratch removal

• Depth of field fusion

Each of these applications could be approached with little to no modification of the LS-SVM method detailed here. Thus, an additional worthwhile goal for future research would be to improve the evaluation time of Equation 1 as much as possible. While the downsampling approach presented here helps improve runtimes considerably, Equation 1 still requires repeated evaluation of the exp(·) function for each pixel, which could be improved by use of various approximations and look-up tables.
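One possible form of such a look-up table is sketched below: exp(−t) is tabulated on a fixed interval and linearly interpolated between entries. The class name, table size, and cutoff are assumptions chosen for illustration, and this approach was not evaluated as part of the project.

```python
import math

class ExpTable:
    """Approximates exp(-t) for t >= 0 from a precomputed table."""

    def __init__(self, t_max=20.0, size=4096):
        self.t_max = t_max
        self.size = size
        self.scale = (size - 1) / t_max  # table entries per unit of t
        self.values = [math.exp(-i / self.scale) for i in range(size)]

    def __call__(self, t):
        if t >= self.t_max:
            return 0.0  # exp(-20) is about 2e-9, treated as negligible here
        pos = max(t, 0.0) * self.scale  # clamp tiny negative rounding errors
        i = min(int(pos), self.size - 2)
        frac = pos - i
        return self.values[i] + frac * (self.values[i + 1] - self.values[i])

table = ExpTable()

# Compare against math.exp on a sweep of arguments gamma * ||x - x_i||^2.
samples = [k * 0.0137 for k in range(1000)]
max_error = max(abs(table(t) - math.exp(-t)) for t in samples)
```

With 4096 entries the interpolation error is on the order of a few millionths, well below the quantization step of an 8-bit output channel; whether a table is actually faster than the library exponential depends on the platform.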

Potential improvements aside, the success of the approach as-is indicates that automatic learning and evaluation of image filters is a worthwhile and promising avenue for future research.

References

[1] Hertzmann, Aaron et al. “Image Analogies.” SIGGRAPH 2001, Los Angeles.

[2] Kass, Michael and Justin Solomon. “Smoothed Local Histogram Filters.” SIGGRAPH 2010, Los Angeles.

[3] Suykens, J.A.K. et al. Least Squares Support Vector Machines. Singapore: World Scientific, 2002.

[4] Suykens, J.A.K. and J. Vandewalle. “Least squares support vector machine classifiers.” Neural Processing Letters 9.3 (1999): 293–300.

Figure 4: A less successful exposure fusion test.

Figure 5: Additional examples of fused images.
