IEEE TRANSACTIONS ON INFORMATION THEORY, VOL. 55, NO. 5, MAY 2009 1945

Joint Universal Lossy Coding and Identification of Stationary Mixing Sources With General Alphabets

Maxim Raginsky, Member, IEEE

Abstract—In this paper, we consider the problem of joint universal variable-rate lossy coding and identification for parametric classes of stationary $\beta$-mixing sources with general (Polish) alphabets. Compression performance is measured in terms of Lagrangians, while identification performance is measured by the variational distance between the true source and the estimated source. Provided that the sources are mixing at a sufficiently fast rate and satisfy certain smoothness and Vapnik–Chervonenkis (VC) learnability conditions, it is shown that, for bounded metric distortions, there exist universal schemes for joint lossy compression and identification whose Lagrangian redundancies converge to zero as $O(\sqrt{V_n \log n / n})$ as the block length $n$ tends to infinity, where $V_n$ is the VC dimension of a certain class of decision regions defined by the $n$-dimensional marginal distributions of the sources; furthermore, for each $n$, the decoder can identify the $n$-dimensional marginal of the active source up to a ball of radius $O(\sqrt{V_n \log n / n})$ in variational distance, eventually with probability one. The results are supplemented by several examples of parametric sources satisfying the regularity conditions.

Index Terms—Learning, minimum-distance density estimation, two-stage codes, universal vector quantization, Vapnik–Chervonenkis (VC) dimension.

I. INTRODUCTION

It is well known that lossless source coding and statistical modeling are complementary objectives. This fact is captured by the Kraft inequality (see [1, Sec. 5.2]), which provides a correspondence between uniquely decodable codes and probability distributions on a discrete alphabet. If one has full knowledge of the source statistics, then one can design an optimal lossless code for the source, and vice versa. However, in practice it is unreasonable to expect that the source statistics are known precisely, so one has to design universal schemes that perform asymptotically optimally within a given class of sources. In universal coding, too, as Rissanen has shown in [2] and [3], the coding and modeling objectives can be accomplished jointly: given a sufficiently regular parametric family of discrete-alphabet sources, the encoder can acquire the source statistics via maximum-likelihood estimation on a sufficiently

Manuscript received December 19, 2006; revised January 08, 2009. Current version published April 22, 2009. This work was supported by the Beckman Institute Fellowship. The material in this paper was presented in part at the IEEE International Symposium on Information Theory (ISIT), Nice, France, June 2007.

The author was with the Beckman Institute for Advanced Science and Technology, University of Illinois, Urbana, IL 61801 USA. He is now with the Department of Electrical and Computer Engineering, Duke University, Durham, NC 27708 USA (e-mail: [email protected]).

Communicated by M. Effros, Associate Editor for Source Coding. Digital Object Identifier 10.1109/TIT.2009.2015987

long data sequence and use this knowledge to select an appropriate coding scheme. Even in nonparametric settings (e.g., the class of all stationary ergodic discrete-alphabet sources), universal schemes such as Ziv–Lempel [4] amount to constructing a probabilistic model for the source. In the reverse direction, Kieffer [5] and Merhav [6], among others, have addressed the problem of statistical modeling (parameter estimation or model identification) via universal lossless coding.

Once we consider lossy coding, though, the relationship between coding and modeling is no longer so simple. On the one hand, having full knowledge of the source statistics is certainly helpful for designing optimal rate-distortion codebooks. On the other hand, apart from some special cases [e.g., for independent and identically distributed (i.i.d.) Bernoulli sources and the Hamming distortion measure or for i.i.d. Gaussian sources and the squared-error distortion measure], it is not at all clear how to extract a reliable statistical model of the source from its reproduction via a rate-distortion code (although, as shown recently by Weissman and Ordentlich [7], the joint empirical distribution of the source realization and the corresponding codeword of a “good” rate-distortion code converges to the distribution solving the rate-distortion problem for the source). This is not a problem when the emphasis is on compression, but there are situations in which one would like to compress the source and identify its statistics at the same time. For instance, in indirect adaptive control (see, e.g., [8, Ch. 7]) the parameters of the plant (the controlled system) are estimated on the basis of observation, and the controller is modified accordingly. Consider the discrete-time stochastic setting, in which the plant state sequence is a random process whose statistics are governed by a finite set of parameters. Suppose that the controller is geographically separated from the plant and connected to it via a noiseless digital channel whose capacity is $R$ bits per use. Then, given the time horizon $T$, the objective is to design an encoder and a decoder for the controller to obtain reliable estimates of both the plant parameters and the plant state sequence from the $2^{TR}$ possible outputs of the decoder.

To state the problem in general terms, consider an information source emitting a sequence $X = \{X_i\}$ of random variables taking values in an alphabet $\mathcal{X}$. Suppose that the process distribution of $X$ is not specified completely, but it is known to be a member of some parametric class $\{P_\theta : \theta \in \Lambda\}$. We wish to answer the following two questions:

1) Is the class $\{P_\theta : \theta \in \Lambda\}$ universally encodable with respect to a given single-letter distortion measure $\rho$, by codes with a given structure (e.g., all fixed-rate block codes with a given per-letter rate, all variable-rate block codes, etc.)? In other words, does there exist a scheme that is asymptotically optimal for each $P_\theta$, $\theta \in \Lambda$?

2) If the answer to question 1) is positive, can the codes be constructed in such a way that the decoder can not only reconstruct the source, but also identify its process distribution $P_\theta$, in an asymptotically optimal fashion?

In previous work [9], [10], we have addressed these two questions in the context of fixed-rate lossy block coding of stationary memoryless (i.i.d.) continuous-alphabet sources with parameter space $\Lambda$ a bounded subset of $\mathbb{R}^k$ for some finite $k$. We have shown that, under appropriate regularity conditions on the distortion measure and on the source models, there exist joint universal schemes for lossy coding and source identification whose redundancies (that is, the gap between the actual performance and the theoretical optimum given by the Shannon distortion-rate function) and source estimation fidelity both converge to zero as $O(\sqrt{\log n / n})$, as the block length $n$ tends to infinity. The code operates by coding each block with the code matched to the source with the parameters estimated from the preceding block. Comparing this $O(\sqrt{\log n / n})$ convergence rate to the $O(\log n / n)$ convergence rate, which is optimal for redundancies of fixed-rate lossy block codes [11], we see that there is, in general, a price to be paid for doing compression and identification simultaneously. Furthermore, the constant hidden in the $O(\cdot)$ notation increases with the “richness” of the model class $\{P_\theta : \theta \in \Lambda\}$, as measured by the Vapnik–Chervonenkis (VC) dimension [12] of a certain class of measurable subsets of the source alphabet associated with the sources.

The main limitation of the results of [9] and [10] is the i.i.d. assumption, which is rather restrictive as it excludes many practically relevant model classes (e.g., autoregressive sources, or Markov and hidden Markov processes). Furthermore, the assumption that the parameter space $\Lambda$ is bounded may not always hold, at least in the sense that we may not know the diameter of $\Lambda$ a priori. In this paper, we relax both of these assumptions and study the existence and the performance of universal schemes for joint lossy coding and identification of stationary sources satisfying a mixing condition, when the sources are assumed to belong to a parametric model class $\{P_\theta : \theta \in \Lambda\}$, $\Lambda$ being an open subset of $\mathbb{R}^k$ for some finite $k$. Because the parameter space is not bounded, we have to use variable-rate codes with countably infinite codebooks, and the performance of the code is assessed by a composite Lagrangian functional [13], which captures the tradeoff between the expected distortion and the expected rate of the code. Our result is that, under certain regularity conditions on the distortion measure and on the model class, there exist universal schemes for joint lossy source coding and identification such that, as the block length $n$ tends to infinity, the gap between the actual Lagrangian performance and the optimal Lagrangian performance achievable by variable-rate codes at that block length, as well as the source estimation fidelity at the decoder, converge to zero as $O(\sqrt{V_n \log n / n})$, where $V_n$ is the VC dimension of a certain class of decision regions induced by the collection $\{P^n_\theta : \theta \in \Lambda\}$ of the $n$-dimensional marginals of the source process distributions.

This result shows very clearly that the price to be paid for universality, in terms of both compression and identification, grows with the richness of the underlying model class, as captured by the VC dimension sequence $\{V_n\}$. The richer the model class, the harder it is to learn, which affects the compression performance of our scheme because we use the source parameters learned from past data to decide how to encode the current block. Furthermore, comparing the rate at which the Lagrangian redundancy decays to zero under our scheme with the $O(\log n / n)$ result of Chou, Effros, and Gray [14], whose universal scheme is not aimed at identification, we immediately see that, in order to satisfy the twin objectives of compression and modeling, we inevitably sacrifice some compression performance.

The paper is organized as follows. Section II introduces notation and basic concepts related to sources, codes, and VC classes. Section III lists and discusses the regularity conditions that have to be satisfied by the source model class, and contains the statement of our result. The result is proved in Section IV. Next, in Section V, we give three examples of parametric source families (namely, i.i.d. Gaussian sources, Gaussian autoregressive sources, and hidden Markov processes), which fit the framework of this paper under suitable regularity conditions. We conclude in Section VI and outline directions for future research. Finally, the Appendix contains some technical results on Lagrange-optimal variable-rate quantizers.

II. PRELIMINARIES

A. Sources

In this paper, a source is a discrete-time stationary ergodic random process $X = \{X_i\}_{i \in \mathbb{Z}}$ with alphabet $\mathcal{X}$. We assume that $\mathcal{X}$ is a Polish space (i.e., a complete separable metric space¹) and equip $\mathcal{X}$ with its Borel $\sigma$-field. For any pair of indices $m, n \in \mathbb{Z}$ with $m \le n$, let $X_m^n$ denote the segment $(X_m, X_{m+1}, \ldots, X_n)$ of $X$. If $P$ is the process distribution of $X$, then we let $E_P$ denote expectation with respect to $P$, and let $P^n$ denote the marginal distribution of $X_1^n$. Whenever $P$ carries a subscript, e.g., $P = P_\theta$, we write $E_\theta$ instead. We assume that there exists a fixed $\sigma$-finite measure $\mu$ on $\mathcal{X}$, such that the $n$-dimensional marginal of any process distribution of interest is absolutely continuous with respect to the product measure $\mu^n$, for all $n \ge 1$. We denote the corresponding densities $dP^n/d\mu^n$ by $p^n$. To avoid notational clutter, we omit the superscript $n$ from $\mu^n$, $P^n$, and $p^n$ whenever it is clear from the argument, as in $d\mu(x^n)$, $P(x^n)$, or $p(x^n)$.

Given two probability measures $P$ and $Q$ on a measurable space $(\mathcal{Z}, \mathcal{F})$, the variational distance between them is defined by

$$ d(P, Q) = \sup \sum_i |P(A_i) - Q(A_i)| $$

where the supremum is over all finite $\mathcal{F}$-measurable partitions $\{A_i\}$ of $\mathcal{Z}$ (see, e.g., [15, Sec. 5.2]). If $p$ and $q$ are the densities of $P$ and $Q$, respectively, with respect to a dominating measure $\mu$, then we can write

$$ d(P, Q) = \int_{\mathcal{Z}} |p(z) - q(z)| \, d\mu(z). $$

¹The canonical example is the Euclidean space $\mathbb{R}^d$ for some $d < \infty$.


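A useful property of the variational distance is that, for any measurable function $f : \mathcal{Z} \to [0, 1]$, $|E_P f - E_Q f| \le d(P, Q)$. When $P$ and $Q$ are $n$-dimensional marginals of $P_\theta$ and $P_{\theta'}$, respectively, i.e., $P = P^n_\theta$ and $Q = P^n_{\theta'}$, we write $d_n(\theta, \theta')$ for $d(P^n_\theta, P^n_{\theta'})$. If $\mathcal{G}$ is a $\sigma$-subfield of $\mathcal{F}$, we define the variational distance between $P$ and $Q$ with respect to $\mathcal{G}$ by

$$ d_{\mathcal{G}}(P, Q) = \sup \sum_i |P(A_i) - Q(A_i)| $$

where the supremum is over all finite $\mathcal{G}$-measurable partitions $\{A_i\}$ of $\mathcal{Z}$. Given a $\delta > 0$ and a probability measure $P$, the variational ball of radius $\delta$ around $P$ is the set of all probability measures $Q$ with $d(P, Q) \le \delta$.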
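For a finite alphabet the definitions above reduce to a sum, which makes them easy to check numerically. The following is a minimal sketch, assuming both measures are given as probability vectors on the same finite alphabet (the function names and the example vectors are illustrative, not taken from the paper). It computes the density form of the variational distance and checks the property $|E_P f - E_Q f| \le d(P, Q)$ for one function $f$ with values in $[0, 1]$.

```python
from typing import Sequence


def variational_distance(p: Sequence[float], q: Sequence[float]) -> float:
    """Sum of |p(z) - q(z)| over a finite alphabet (density form of d(P, Q))."""
    if len(p) != len(q):
        raise ValueError("p and q must be defined on the same alphabet")
    return sum(abs(pi - qi) for pi, qi in zip(p, q))


def expectation(p: Sequence[float], f: Sequence[float]) -> float:
    """Expectation of f under the probability vector p."""
    return sum(pi * fi for pi, fi in zip(p, f))


# Illustrative (hypothetical) distributions and a test function with values in [0, 1].
p = [0.5, 0.3, 0.2]
q = [0.2, 0.3, 0.5]
f = [1.0, 0.25, 0.0]

d = variational_distance(p, q)
gap = abs(expectation(p, f) - expectation(q, f))
print(f"d(P, Q) = {d:.3f}, |E_P f - E_Q f| = {gap:.3f}")
```

Note that with this normalization the distance takes values in $[0, 2]$, i.e., it is twice the total variation distance used in some other texts.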

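Given a source $X$ with process distribution $P$, let $P^0_{-\infty}$ and $P^\infty_k$ denote the marginal distributions of $X$ on $\sigma(X^0_{-\infty})$ and $\sigma(X^\infty_k)$, respectively. For each $k \ge 1$, the $k$th-order absolute regularity coefficient (or $\beta$-mixing coefficient) of $X$ is defined as [16], [17]

$$ \beta(k) = \sup \sum_i \sum_j \left| P(A_i \cap B_j) - P^0_{-\infty}(A_i) P^\infty_k(B_j) \right| $$

where the supremum is over all finite $\sigma(X^0_{-\infty})$-measurable partitions $\{A_i\}$ and all finite $\sigma(X^\infty_k)$-measurable partitions $\{B_j\}$. Observe that

$$ \beta(k) = d_{\sigma(X^0_{-\infty}, X^\infty_k)}\left(P, P^0_{-\infty} \times P^\infty_k\right) \tag{1} $$

the variational distance between $P$ and the product distribution $P^0_{-\infty} \times P^\infty_k$ with respect to the $\sigma$-algebra $\sigma(X^0_{-\infty}, X^\infty_k)$. Since $X$ is stationary, we can “split” its process distribution at any point $t \in \mathbb{Z}$ and define $\beta(k)$ equivalently by

$$ \beta(k) = d_{\sigma(X^t_{-\infty}, X^\infty_{t+k})}\left(P, P^t_{-\infty} \times P^\infty_{t+k}\right). \tag{2} $$

Again, if $P$ is subscripted by some $\theta$, $P = P_\theta$, then we write $\beta_\theta(k)$.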

B. Codes

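The class of codes we consider here is the collection of all finite-memory variable-rate vector quantizers. Let $\hat{\mathcal{X}}$ be a reproduction alphabet, also assumed to be Polish. We assume that $\mathcal{X} \cup \hat{\mathcal{X}}$ is a subset of a Polish space $\mathcal{Y}$ with a bounded metric $\rho(\cdot, \cdot)$: there exists some $\rho_{\max} < \infty$, such that $\rho(y, y') \le \rho_{\max}$ for all $y, y' \in \mathcal{Y}$. We take $\rho : \mathcal{X} \times \hat{\mathcal{X}} \to [0, \rho_{\max}]$ as our (single-letter) distortion function. A variable-rate vector quantizer with block length $n$ and memory length $m$ is a pair $C^{n,m} = (f, \varphi)$, where $f : \mathcal{X}^n \times \mathcal{X}^m \to \mathcal{S}$ is the encoder, $\varphi : \mathcal{S} \to \hat{\mathcal{X}}^n$ is the decoder, and $\mathcal{S} \subset \{0, 1\}^*$ is a countable collection of binary strings satisfying the prefix condition or, equivalently, the Kraft inequality

$$ \sum_{s \in \mathcal{S}} 2^{-\ell(s)} \le 1 $$

where $\ell(s)$ denotes the length of $s$ in bits. The mapping of the source $X$ into the reproduction process $\hat{X}$ is defined by

$$ \hat{X}^{in}_{(i-1)n+1} = \varphi\left( f\left( X^{in}_{(i-1)n+1}, X^{(i-1)n}_{(i-1)n-m+1} \right) \right), \qquad i \in \mathbb{Z}. $$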

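That is, the encoding is done in blocks of length $n$, but the encoder is also allowed to observe the $m$ symbols immediately preceding each block. The effective memory of $C^{n,m}$ is defined as the set $\mathcal{M} \subseteq \{-m+1, \ldots, 0\}$ of memory positions on which the encoder actually depends, i.e., such that

$$ f(x^n, z^0_{-m+1}) = f(x^n, \tilde{z}^0_{-m+1}) \quad \text{whenever } z_i = \tilde{z}_i \text{ for all } i \in \mathcal{M}. $$

The size $|\mathcal{M}|$ of $\mathcal{M}$ is called the effective memory length of $C^{n,m}$. We will often use $C^{n,m}$ to also denote the composite mapping $\varphi \circ f : \mathcal{X}^n \times \mathcal{X}^m \to \hat{\mathcal{X}}^n$. When the code has zero memory $(m = 0)$, we will denote it more compactly by $C^n$.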
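The prefix condition and the Kraft inequality can be checked mechanically for any finite set of binary strings. The sketch below uses hypothetical helper names and toy codeword sets (none of them from the paper). It also illustrates that the Kraft sum constrains the lengths only: a set of strings can satisfy the inequality without being prefix-free, even though a prefix-free set with the same lengths then exists.

```python
from fractions import Fraction
from typing import Iterable


def kraft_sum(codewords: Iterable[str]) -> Fraction:
    """Exact value of the sum of 2^(-length) over the given binary strings."""
    return sum((Fraction(1, 2 ** len(s)) for s in codewords), Fraction(0))


def is_prefix_free(codewords: Iterable[str]) -> bool:
    """True if no codeword is a prefix of another one.

    After lexicographic sorting, any prefix relation in the set shows up
    between two adjacent strings, so checking neighbours is enough.
    """
    words = sorted(codewords)
    return all(not b.startswith(a) for a, b in zip(words, words[1:]))


good = ["0", "10", "110", "111"]   # prefix-free, Kraft sum equal to 1
bad = ["0", "01", "11"]            # Kraft sum equal to 1, but "0" is a prefix of "01"

print(kraft_sum(good), is_prefix_free(good))
print(kraft_sum(bad), is_prefix_free(bad))
```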

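The performance of the code $C^{n,m}$ on the source with process distribution $P$ is measured by its expected distortion

$$ D_P(C^{n,m}) = E_P\left[ \rho_n(X^n_1, \hat{X}^n_1) \right] $$

where for $x^n \in \mathcal{X}^n$ and $\hat{x}^n \in \hat{\mathcal{X}}^n$, $\rho_n(x^n, \hat{x}^n) = \frac{1}{n} \sum_{i=1}^n \rho(x_i, \hat{x}_i)$ is the per-letter distortion incurred in reproducing $x^n$ by $\hat{x}^n$, and by its expected rate

$$ R_P(C^{n,m}) = E_P\left[ \ell_n\left( f(X^n_1, X^0_{-m+1}) \right) \right] $$

where $\ell_n(s)$ denotes the length of a binary string $s$ in bits, normalized by $n$. (We follow Neuhoff and Gilbert [18] and normalize the distortion and the rate by the length $n$ of the reproduction block, not by the combined length $n + m$ of the source block plus the memory input.) When working with variable-rate quantizers, it is convenient [13], [19] to absorb the distortion and the rate into a single performance measure, the Lagrangian distortion

$$ L_P(C^{n,m}, \lambda) = D_P(C^{n,m}) + \lambda R_P(C^{n,m}) $$

where $\lambda > 0$ is the Lagrange multiplier which controls the distortion-rate tradeoff. Geometrically, $L_P(C^{n,m}, \lambda)$ is the $D$-intercept of the line with slope $-\lambda$, passing through the point $(R_P(C^{n,m}), D_P(C^{n,m}))$ in the rate-distortion plane [20]. If $P$ carries a subscript, $P = P_\theta$, then we write $D_\theta(\cdot)$, $R_\theta(\cdot)$, and $L_\theta(\cdot)$.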
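The role of the Lagrange multiplier can be illustrated with a small calculation. The sketch below assumes a hypothetical finite list of (rate, distortion) operating points, one per candidate code. It selects the point that minimizes distortion plus multiplier times rate, and shows that a small multiplier favors low distortion while a large one favors low rate.

```python
from typing import List, Tuple

# Hypothetical operating points (rate in bits per letter, distortion per letter).
points: List[Tuple[float, float]] = [(0.5, 0.40), (1.0, 0.25), (2.0, 0.10)]


def lagrangian(rate: float, distortion: float, lam: float) -> float:
    """Lagrangian cost: distortion plus lam times rate."""
    return distortion + lam * rate


def best_point(candidates: List[Tuple[float, float]], lam: float) -> Tuple[float, float]:
    """Operating point with the smallest Lagrangian cost for the given multiplier."""
    return min(candidates, key=lambda rd: lagrangian(rd[0], rd[1], lam))


for lam in (0.1, 0.5):
    r, d = best_point(points, lam)
    print(f"lambda = {lam}: rate = {r}, distortion = {d}, cost = {lagrangian(r, d, lam):.3f}")
```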

C. Vapnik–Chervonenkis Classes

In this paper, we make heavy use of VC theory (see [21], [22], [23], or [24] for detailed treatments). This section contains a brief summary of the needed concepts and results. Let $(\mathcal{Z}, \mathcal{F})$ be a measurable space. For any collection $\mathcal{A} \subseteq \mathcal{F}$ of measurable subsets of $\mathcal{Z}$ and any $n$-tuple $z^n \in \mathcal{Z}^n$, define the set $\mathcal{A}(z^n) \subseteq \{0, 1\}^n$ consisting of all distinct binary strings of the form $(1_{\{z_1 \in A\}}, \ldots, 1_{\{z_n \in A\}})$, $A \in \mathcal{A}$. Then

$$ S_{\mathcal{A}}(n) = \max_{z^n \in \mathcal{Z}^n} |\mathcal{A}(z^n)| $$

is called the $n$th shatter coefficient of $\mathcal{A}$. The VC dimension of $\mathcal{A}$, denoted by $V(\mathcal{A})$, is defined as the largest $n$ for which $S_{\mathcal{A}}(n) = 2^n$ (if $S_{\mathcal{A}}(n) = 2^n$ for all $n$, then we set $V(\mathcal{A}) = \infty$). If $V(\mathcal{A}) < \infty$, then $\mathcal{A}$ is called a VC class. If $\mathcal{A}$ is a VC class with $V(\mathcal{A}) \ge 2$, then it follows from the results of Vapnik and Chervonenkis [12] and Sauer [25] that $S_{\mathcal{A}}(n) \le n^{V(\mathcal{A})}$.
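Shatter coefficients can be computed by brute force for simple classes. As a hedged illustration (the class of closed intervals on the real line is chosen here for convenience and is not one of the classes studied in the paper), the sketch below enumerates the distinct binary patterns that intervals induce on a set of distinct points. Two points are shattered, three are not, so the VC dimension of this class is 2.

```python
from typing import Sequence, Set, Tuple


def interval_patterns(points: Sequence[float]) -> Set[Tuple[int, ...]]:
    """All distinct patterns (1{z_1 in A}, ..., 1{z_n in A}) over closed intervals A.

    Assumes the points are distinct. Only the position of the interval endpoints
    relative to the points matters, so it suffices to try endpoints placed below
    all points, between consecutive points, and above all points.
    """
    pts = sorted(points)
    cuts = [pts[0] - 1.0]
    cuts += [(a + b) / 2.0 for a, b in zip(pts, pts[1:])]
    cuts += [pts[-1] + 1.0]
    patterns = set()
    for lo in cuts:
        for hi in cuts:
            patterns.add(tuple(int(lo <= z <= hi) for z in points))
    return patterns


two = interval_patterns([0.2, 0.7])
three = interval_patterns([0.1, 0.5, 0.9])
print(len(two), "patterns on 2 points;", len(three), "patterns on 3 points")
```

For $n$ distinct points the count is $n(n+1)/2 + 1$, which for $n = 3$ is below both $2^3$ and the polynomial bound $3^2$.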

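For a VC class $\mathcal{A}$, the so-called VC inequalities (see Lemma 2.1) relate its VC dimension $V(\mathcal{A})$ to maximal deviations of the probabilities of the events in $\mathcal{A}$ from their relative frequencies with respect to an i.i.d. sample of size $n$. For any $z^n \in \mathcal{Z}^n$, let

$$ P_{z^n} = \frac{1}{n} \sum_{i=1}^n \delta_{z_i} $$

denote the induced empirical distribution, where $\delta_z$ is the Dirac measure (point mass) concentrated at $z$. We then have the following.

Lemma 2.1 (VC Inequalities): Let $P$ be a probability measure on $(\mathcal{Z}, \mathcal{F})$, and $Z^n = (Z_1, \ldots, Z_n)$ an $n$-tuple of independent random variables with $Z_i \sim P$, $1 \le i \le n$. Let $\mathcal{A}$ be a VC class with $V(\mathcal{A}) \ge 2$. Then, for every $\epsilon > 0$

$$ \Pr\left\{ \sup_{A \in \mathcal{A}} |P_{Z^n}(A) - P(A)| > \epsilon \right\} \le 8 n^{V(\mathcal{A})} e^{-n\epsilon^2/32} \tag{3} $$

and

$$ E\left[ \sup_{A \in \mathcal{A}} |P_{Z^n}(A) - P(A)| \right] \le c \sqrt{\frac{V(\mathcal{A}) \log n}{n}} \tag{4} $$

where $c > 0$ is a universal constant. The probabilities and expectations are with respect to the product measure $P^n$ on $\mathcal{Z}^n$.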

Remark 2.1: A more refined technique involving metric entropies and empirical covering numbers, due to Dudley [26], can yield a much better $O(\sqrt{V(\mathcal{A})/n})$ bound on the expected maximal deviation between the true and the empirical probabilities. This improvement, however, comes at the expense of a much larger constant hidden in the $O(\cdot)$ notation.

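Finally, we will need the following lemma, which is a simple corollary of the results of Karpinski and Macintyre [27] (see also [24, Sec. 10.3.5]).

Lemma 2.2: Let $\mathcal{A} = \{A_\xi : \xi \in \mathbb{R}^k\}$ be a collection of measurable subsets of $\mathcal{Z}$, such that

$$ A_\xi = \{ z \in \mathcal{Z} : \Pi(z, \xi) \ge 0 \} $$

where for each $z \in \mathcal{Z}$, $\Pi(z, \cdot)$ is a polynomial of degree $s$ in the components of $\xi$. Then, $\mathcal{A}$ is a VC class with $V(\mathcal{A}) \le 2k \log(4es)$.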

III. STATEMENT OF RESULTS

In this section, we state our result concerning universal schemes for joint lossy compression and identification of stationary sources under certain regularity conditions. We work in the usual setting of universal source coding: we are given a source $X = \{X_i\}$ whose process distribution is known to be a member of some parametric class $\{P_\theta : \theta \in \Lambda\}$. The parameter space $\Lambda$ is an open subset of the Euclidean space $\mathbb{R}^k$ for some finite $k$, and we assume that $\Lambda$ has nonempty interior. We wish to design a sequence of variable-rate vector quantizers, such that the decoder can reliably reconstruct the original source sequence $X$ and reliably identify the active source in an asymptotically optimal manner for all $\theta \in \Lambda$. We begin by listing the regularity conditions.

Condition 1: The sources in $\{P_\theta : \theta \in \Lambda\}$ are algebraically $\beta$-mixing: there exists a constant $r > 0$, such that

$$ \beta_\theta(k) = O(k^{-r}), \qquad \forall \theta \in \Lambda $$

where the constant implicit in the $O(\cdot)$ notation may depend on $\theta$.

This condition ensures that certain finite-block functions of the source $X$ can be approximated in distribution by i.i.d. processes, so that we can invoke the VC machinery of Section II-C. This “blocking” technique, which we exploit in the proof of our Theorem 3.1, dates back to Bernstein [28], and was used by Yu [29] to derive rates of convergence in the uniform laws of large numbers for stationary mixing processes, and by Meir [30] in the context of nonparametric adaptive prediction of stationary time series. As an example of when an even stronger decay condition holds, let $X = \{X_i\}$ be a finite-order autoregressive moving-average (ARMA) process driven by a zero-mean i.i.d. process $Y = \{Y_i\}$, i.e., there exist positive integers $p$, $q$, and real constants $a_0, \ldots, a_p, b_0, \ldots, b_q$ such that

$$ \sum_{i=0}^{p} a_i X_{n-i} = \sum_{j=0}^{q} b_j Y_{n-j}. $$

Mokkadem [31] has shown that, provided the common distribution of the $Y_i$ is absolutely continuous and the roots of the polynomial $A(z) = \sum_{i=0}^{p} a_i z^i$ lie outside the unit circle in the complex plane, the $\beta$-mixing coefficients of $X$ decay to zero exponentially.

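Condition 2: For each $\theta \in \Lambda$, there exist constants $r_\theta > 0$ and $m_\theta > 0$, such that

$$ d_n(\theta, \theta') \le m_\theta \sqrt{n} \, \|\theta - \theta'\| $$

for all $\theta'$ in the open ball of radius $r_\theta$ centered at $\theta$, where $\|\cdot\|$ is the Euclidean norm on $\mathbb{R}^k$.

This condition guarantees that, for any sequence $\{\delta_n\}$ of positive reals such that

$$ \sqrt{n} \, \delta_n \to 0 \quad \text{as } n \to \infty $$

and any sequence $\{\theta_n\}$ in $\Lambda$ satisfying $\|\theta_n - \theta\| < \delta_n$ for a given $\theta \in \Lambda$, we have

$$ d_n(\theta, \theta_n) \to 0 \quad \text{as } n \to \infty. $$

It is weaker (i.e., more general) than the conditions of Rissanen [2], [3] which control the behavior of the relative entropy (information divergence) as a function of the source parameters in terms of the Fisher information and related quantities. Indeed, for each $n$, let

$$ D_n(\theta \| \theta') = \frac{1}{n} E_\theta\left[ \log \frac{p_\theta(X^n)}{p_{\theta'}(X^n)} \right] $$

be the normalized $n$th-order relative entropy (information divergence) between $P_\theta$ and $P_{\theta'}$. Suppose that, for each $\theta$, $D_n(\theta \| \theta')$ is twice continuously differentiable as a function of $\theta'$. Let $\theta'$ lie in an open ball of radius $r$ around $\theta$. Since $D_n(\theta \| \theta')$ attains its minimum at $\theta' = \theta$, the gradient $\nabla_{\theta'} D_n(\theta \| \theta')$ evaluated at $\theta' = \theta$ is zero, and we can write the second-order Taylor expansion of $D_n(\theta \| \theta')$ about $\theta$ as

$$ D_n(\theta \| \theta') = \frac{1}{2} (\theta' - \theta)^T H_n(\theta) (\theta' - \theta) + o(\|\theta' - \theta\|^2) \tag{5} $$

where the Hessian matrix

$$ H_n(\theta) = \left[ \frac{\partial^2 D_n(\theta \| \theta')}{\partial \theta'_i \, \partial \theta'_j} \bigg|_{\theta' = \theta} \right]_{i,j=1}^{k} $$

under additional regularity conditions, is equal to the Fisher information matrix

$$ I_n(\theta) = \frac{1}{n} E_\theta\left[ \nabla_\theta \log p_\theta(X^n) \, \nabla_\theta \log p_\theta(X^n)^T \right] $$

(see [32]). Assume now, following [2] and [3], that the sequence of matrix norms $\{\|I_n(\theta)\|\}$ is bounded (by a constant depending on $\theta$). Then, we can write

$$ D_n(\theta \| \theta') = O(\|\theta - \theta'\|^2) $$

i.e., the normalized relative entropies $D_n(\theta \| \theta')$ are locally quadratic in $\theta'$. Then, Pinsker’s inequality (see, e.g., [15, Lemma 5.2.8]) implies that $d_n(\theta, \theta') = O(\sqrt{n} \, \|\theta - \theta'\|)$, and we recover our Condition 2. Rissanen’s condition, while stronger than our Condition 2, is easier to check, a fact which we exploit in our discussion of examples in Section V.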
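The step from relative entropy to variational distance via Pinsker's inequality can be checked numerically in a simple special case. The sketch below assumes an i.i.d. unit-variance Gaussian location family, which is chosen here only because both quantities have closed forms. For this family the unnormalized $n$th-order relative entropy is $n\Delta^2/2$ nats for a mean shift $\Delta$, and the $L_1$ distance between the $n$-dimensional marginals is $2\,\mathrm{erf}(\sqrt{n}\,\Delta/(2\sqrt{2}))$. Pinsker's inequality then bounds the distance by $\sqrt{n}\,\Delta$, a bound that grows like $\sqrt{n}$ times the parameter distance.

```python
import math


def l1_distance_gaussian(delta: float, n: int) -> float:
    """L1 distance between the n-fold products of N(0, 1) and N(delta, 1)."""
    return 2.0 * math.erf(math.sqrt(n) * abs(delta) / (2.0 * math.sqrt(2.0)))


def relative_entropy_gaussian(delta: float, n: int) -> float:
    """Unnormalized relative entropy (in nats) between the same two products."""
    return n * delta ** 2 / 2.0


delta = 0.05  # illustrative parameter distance
rows = []
for n in (1, 10, 100, 1000):
    d = l1_distance_gaussian(delta, n)
    pinsker = math.sqrt(2.0 * relative_entropy_gaussian(delta, n))
    rows.append((n, d, pinsker))
    print(f"n = {n:5d}: d_n = {d:.4f} <= Pinsker bound = {pinsker:.4f}")
```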

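Condition 3: For each $n$, let $\mathcal{A}_n$ be the collection of all sets of the form

$$ A_{\theta, \theta'} = \{ x^n \in \mathcal{X}^n : p_\theta(x^n) > p_{\theta'}(x^n) \}, \qquad \theta \ne \theta'. $$

Then we require that, for each $n$, $\mathcal{A}_n$ is a VC class, and $V(\mathcal{A}_n) = o(n / \log n)$.

This condition is satisfied, for example, when $V(\mathcal{A}_n) = V < \infty$ independently of $n$, or when $V(\mathcal{A}_n) = O(\log n)$. The use of the class $\mathcal{A}_n$ dates back to the work of Yatracos [33] on minimum-distance density estimation. The ideas of Yatracos were further developed by Devroye and Lugosi [34], [35], who dubbed $\mathcal{A}_n$ the Yatracos class (associated with the densities $p^n_\theta$). We will adhere to this terminology. To give an intuitive interpretation to $\mathcal{A}_n$, let us consider a pair $\theta, \theta' \in \Lambda$ of distinct parameter vectors and note that the set $A_{\theta, \theta'}$ consists of all $x^n \in \mathcal{X}^n$ for which the simple hypothesis test

$$ H_0 : X^n \sim P^n_\theta \quad \text{versus} \quad H_1 : X^n \sim P^n_{\theta'} \tag{6} $$

is passed by the null hypothesis $H_0$ under the likelihood-ratio decision rule. Now, suppose that $X^n(1), \ldots, X^n(N)$ are drawn independently from $P^n_\theta$. To each $A \in \mathcal{A}_n$, we can associate a classifier $\kappa_A : \mathcal{X}^n \to \{0, 1\}$ defined by $\kappa_A(x^n) = 1_{\{x^n \in A\}}$. Call two sets $A, A' \in \mathcal{A}_n$ equivalent with respect to the sample $X^n(1), \ldots, X^n(N)$, and write $A \sim A'$, if their associated classifiers yield identical classification patterns

$$ \left( \kappa_A(X^n(1)), \ldots, \kappa_A(X^n(N)) \right) = \left( \kappa_{A'}(X^n(1)), \ldots, \kappa_{A'}(X^n(N)) \right). $$

It is easy to see that $\sim$ is an equivalence relation. From the definitions of the shatter coefficients $S_{\mathcal{A}_n}(N)$ and the VC dimension $V(\mathcal{A}_n)$ (cf. Section II-C), we see that the cardinality of the quotient set $\mathcal{A}_n / \sim$ is equal to $2^N$ for all sample sizes $N \le V(\mathcal{A}_n)$, whereas for $N > V(\mathcal{A}_n)$, it is bounded from above by $N^{V(\mathcal{A}_n)}$, which is strictly less than $2^N$. Thus, the fact that the Yatracos class $\mathcal{A}_n$ has finite VC dimension implies that the problem of estimating the density $p^n_\theta$ from a large i.i.d. sample reduces, in a sense, to a finite number [in fact, polynomial in the sample size $N$, for $N > V(\mathcal{A}_n)$] of simple hypothesis tests of the type (6). Our Condition 1 will then allow us to transfer this intuition to (weakly) dependent samples.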

Now that we have listed the regularity conditions that must hold for the sources in $\{P_\theta : \theta \in \Lambda\}$, we can state our main result.

Theorem 3.1: Let $\{P_\theta : \theta \in \Lambda\}$ be a parametric class of sources satisfying Conditions 1–3. Then, for every $\lambda > 0$ and every $\eta > 0$, there exists a sequence $\{C^{n,m_n}_*\}$ of variable-rate vector quantizers with memory length

$$ m_n = n\left( n + \lceil n^{(2+\eta)/r} \rceil \right) $$

and effective memory length $n^2$, such that, for all $\theta \in \Lambda$

$$ L_\theta(C^{n,m_n}_*, \lambda) - \inf_{m \ge 0} \inf_{C^{n,m}} L_\theta(C^{n,m}, \lambda) = O\left( \sqrt{\frac{V_n \log n}{n}} \right) \tag{7} $$

where $V_n = V(\mathcal{A}_n)$ and the constants implicit in the $O(\cdot)$ notation depend on $\theta$. Furthermore, for each $n$, the binary description produced by the encoder is such that the decoder can identify the $n$-dimensional marginal of the active source up to a variational ball of radius $O(\sqrt{V_n \log n / n})$ with probability one.

What (7) says is that, for each block length $n$ and each $\theta \in \Lambda$, the code $C^{n,m_n}_*$, which is independent of $\theta$, performs almost as well as the best finite-memory quantizer with block length $n$ that can be designed with full a priori knowledge of the $n$-dimensional marginal $P^n_\theta$. Thus, as far as compression goes, our scheme can compete with all finite-memory variable-rate lossy block codes (vector quantizers), with the additional bonus of allowing the decoder to identify the active source in an asymptotically optimal manner.

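It is not hard to see that the double infimum in (7) is achieved already in the zero-memory case $m = 0$. Indeed, it is immediate that having nonzero memory can only improve the Lagrangian performance, i.e.,

$$ \inf_{m \ge 0} \inf_{C^{n,m}} L_\theta(C^{n,m}, \lambda) \le \inf_{C^n} L_\theta(C^n, \lambda). $$

On the other hand, given any code $C^{n,m} = (f, \varphi)$, we can construct a zero-memory code $C^n_0 = (f_0, \varphi_0)$, such that

$$ \rho_n\left(x^n, \varphi_0(f_0(x^n))\right) + \lambda \ell_n\left(f_0(x^n)\right) \le \rho_n\left(x^n, \varphi(f(x^n, z^m))\right) + \lambda \ell_n\left(f(x^n, z^m)\right) $$

for all $(x^n, z^m) \in \mathcal{X}^n \times \mathcal{X}^m$. To see this, define for each $x^n \in \mathcal{X}^n$ the set

$$ \mathcal{S}(x^n) = \{ s \in \mathcal{S} : s = f(x^n, z^m) \text{ for some } z^m \in \mathcal{X}^m \} $$

and let

$$ f_0(x^n) = \operatorname*{arg\,min}_{s \in \mathcal{S}(x^n)} \left[ \rho_n(x^n, \varphi(s)) + \lambda \ell_n(s) \right] $$

and $\varphi_0 = \varphi$. Then, given any $(x^n, z^m)$, let $s = f(x^n, z^m)$. We then have

$$ \rho_n\left(x^n, \varphi_0(f_0(x^n))\right) + \lambda \ell_n\left(f_0(x^n)\right) \le \rho_n(x^n, \varphi(s)) + \lambda \ell_n(s). $$

Taking expectations, we see that $L_\theta(C^n_0, \lambda) \le L_\theta(C^{n,m}, \lambda)$ for all $\theta \in \Lambda$, which proves that

$$ \inf_{C^n} L_\theta(C^n, \lambda) \le \inf_{m \ge 0} \inf_{C^{n,m}} L_\theta(C^{n,m}, \lambda). $$

The infimum of $L_\theta(C^n, \lambda)$ over all zero-memory variable-rate quantizers $C^n$ with block length $n$ is the operational $n$th-order distortion-rate Lagrangian $\hat{L}^n_\theta(\lambda)$ [20]. Because each $P_\theta$ is ergodic, $\hat{L}^n_\theta(\lambda)$ converges to the distortion-rate Lagrangian

$$ L_\theta(\lambda) = \inf_{R} \left[ D_\theta(R) + \lambda R \right] $$

where $D_\theta(R)$ is the Shannon distortion-rate function of $P_\theta$ (see Lemma 2 in the Appendix of [14]). Thus, our scheme is universal not only in the $n$th-order sense of (7), but also in the distortion-rate sense, i.e.,

$$ L_\theta(C^{n,m_n}_*, \lambda) - L_\theta(\lambda) \to 0 \quad \text{as } n \to \infty $$

for every $\theta \in \Lambda$. Thus, in the terminology of [14], our scheme is weakly minimax universal for $\{P_\theta : \theta \in \Lambda\}$.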

IV. PROOF OF THEOREM 3.1

A. The Main Idea

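In this section, we describe the main idea behind the proof and fix some notation. We have already seen that it suffices to construct a universal scheme that can compete with all zero-memory variable-rate quantizers. That is, it suffices to show that there exists a sequence $\{C^{n,m_n}_*\}$ of codes, such that

$$ L_\theta(C^{n,m_n}_*, \lambda) = \hat{L}^n_\theta(\lambda) + O\left( \sqrt{\frac{V_n \log n}{n}} \right), \qquad \forall \theta \in \Lambda. \tag{8} $$

This is what we will prove.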

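We assume throughout that the “true” source is $P_{\theta_0}$ for some $\theta_0 \in \Lambda$. Our code operates as follows. Suppose the following.

• Both the encoder and the decoder have access to a countably infinite “database” $\{\theta(i)\}_{i \ge 1}$, where each $\theta(i) \in \Lambda$. Using Elias’ universal representation of the integers [36], we can associate to each $\theta(i)$ a unique binary string $s(i)$ with $\log i + O(\log \log i)$ bits.
• A sequence $\{\epsilon_n\}$ of positive reals is given, such that $\epsilon_n \to 0$ as $n \to \infty$ (we will specify the sequence $\{\epsilon_n\}$ later in the proof).
• For each $n$ and each $\theta \in \Lambda$, there exists a zero-memory $n$-block code $C^n_\theta = (f^n_\theta, \varphi^n_\theta)$ that achieves (or comes arbitrarily close to) the $n$th-order Lagrangian optimum for $P_\theta$: $L_\theta(C^n_\theta, \lambda) = \hat{L}^n_\theta(\lambda)$.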
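The first bullet relies on a prefix-free binary representation of the positive integers whose length is $\log i + O(\log \log i)$ bits. One representation with this property is the Elias delta code; whether this is the exact variant intended in [36] is an assumption here. A minimal sketch of an encoder and decoder follows.

```python
from typing import Tuple


def elias_gamma(i: int) -> str:
    """Elias gamma code: (len - 1) zeros followed by the binary expansion of i."""
    if i < 1:
        raise ValueError("only positive integers can be encoded")
    b = bin(i)[2:]
    return "0" * (len(b) - 1) + b


def elias_delta(i: int) -> str:
    """Elias delta code: gamma code of the bit length, then the bits after the leading 1."""
    if i < 1:
        raise ValueError("only positive integers can be encoded")
    b = bin(i)[2:]
    return elias_gamma(len(b)) + b[1:]


def decode_elias_delta(bits: str) -> Tuple[int, int]:
    """Decode one integer from the front of `bits`; return (value, bits consumed)."""
    zeros = 0
    while bits[zeros] == "0":
        zeros += 1
    length = int(bits[zeros:2 * zeros + 1], 2)       # bit length of the integer
    start = 2 * zeros + 1
    value = int("1" + bits[start:start + length - 1], 2)
    return value, start + length - 1


print(elias_delta(1), elias_delta(2), elias_delta(10))
```

Because the code is prefix-free, the decoder can strip the index from the front of a longer description without any separator, which is what allows the first-stage and second-stage strings to be concatenated.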

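Fix the block length $n$. Because the source is stationary, it suffices to describe the mapping of $X^n_1$ into $\hat{X}^n_1$. The encoding is done as follows.

1) The encoder estimates $P^n_{\theta_0}$ from the $m_n$-block $X^0_{-m_n+1}$ as $P^n_{\tilde{\theta}}$, where $\tilde{\theta} = \tilde{\theta}(X^0_{-m_n+1})$.

2) The encoder then computes the waiting time

$$ T_n = \inf\{ i \ge 1 : d_n(\tilde{\theta}, \theta(i)) \le \epsilon_n \} $$

with the standard convention that the infimum of the empty set is equal to $+\infty$. That is, the encoder looks through the database and finds the first $\theta(i)$, such that the $n$-dimensional distribution $P^n_{\theta(i)}$ is in the variational ball of radius $\epsilon_n$ around $P^n_{\tilde{\theta}}$.

3) If $T_n < +\infty$, the encoder sets $\hat{\theta} = \theta(T_n)$; otherwise, the encoder sets $\hat{\theta} = \theta_{\mathrm{def}}$, where $\theta_{\mathrm{def}} \in \Lambda$ is some default parameter vector, say, $\theta(1)$.

4) The binary description of $X^n_1$ is a concatenation of the following three binary strings: i) a 1-bit flag $b$ to tell whether $T_n$ is finite $(b = 0)$ or infinite $(b = 1)$; ii) a binary string $s_1$ which is equal to $s(T_n)$ if $T_n < +\infty$ or to an empty string if $T_n = +\infty$; iii) $s_2 = f^n_{\hat{\theta}}(X^n_1)$. The string $b s_1$ is called the first-stage description, while $s_2$ is called the second-stage description.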
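Steps 2) and 3) above can be sketched as a linear search through the database. The example below makes several simplifying assumptions that are not from the paper: the sources are i.i.d. unit-variance Gaussians indexed by their mean, so that the variational distance between $n$-dimensional marginals has the closed form $2\,\mathrm{erf}(\sqrt{n}\,|\theta - \theta'|/(2\sqrt{2}))$; the database is a short fixed list rather than a countably infinite one; and the flag convention (0 for finite, 1 for infinite waiting time) is illustrative.

```python
import math
from typing import List, Optional, Tuple


def d_n(theta_a: float, theta_b: float, n: int) -> float:
    """L1 distance between the n-fold products of N(theta_a, 1) and N(theta_b, 1)."""
    return 2.0 * math.erf(math.sqrt(n) * abs(theta_a - theta_b) / (2.0 * math.sqrt(2.0)))


def waiting_time(theta_est: float, database: List[float], eps: float, n: int) -> float:
    """1-based index of the first database entry within the variational ball, or +inf."""
    for i, theta in enumerate(database, start=1):
        if d_n(theta_est, theta, n) <= eps:
            return i
    return math.inf


def first_stage(theta_est: float, database: List[float], eps: float, n: int,
                default: float) -> Tuple[int, Optional[int], float]:
    """Return (flag, index or None, parameter vector that the decoder will use)."""
    t = waiting_time(theta_est, database, eps, n)
    if math.isinf(t):
        return 1, None, default
    return 0, int(t), database[int(t) - 1]


database = [2.0, -1.0, 0.3, 0.26]   # hypothetical database entries
hit = first_stage(0.25, database, eps=0.2, n=4, default=database[0])
miss = first_stage(10.0, database, eps=0.2, n=4, default=database[0])
print(hit, miss)
```

The search stops at the first admissible entry rather than the closest one; in the example the entry 0.26 is closer to the estimate than 0.3, but 0.3 comes first and already lies inside the ball.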

The decoder receives $b s_1 s_2$, determines $\hat{\theta}$ from $b s_1$, and produces the reproduction $\hat{X}^n_1 = \varphi^n_{\hat{\theta}}(s_2)$. Note that when $T_n < +\infty$ (which, as we will show, will happen eventually a.s.), $P^n_{\hat{\theta}}$ lies in the variational ball of radius $\epsilon_n$ around the estimated source $P^n_{\tilde{\theta}}$. If the latter is a good estimate of $P^n_{\theta_0}$, i.e., $d_n(\tilde{\theta}, \theta_0) \to 0$ as $n \to \infty$ almost surely (a.s.), then the estimate of the true source computed by the decoder is only slightly worse. Furthermore, as we will show, the almost-sure convergence of $d_n(\hat{\theta}, \theta_0)$ to zero as $n \to \infty$ implies that the Lagrangian performance of $C^n_{\hat{\theta}}$ on $P_{\theta_0}$ is close to the optimum $\hat{L}^n_{\theta_0}(\lambda)$.

Formally, the code $C^{n,m_n}_*$ comprises the following maps:
• the parameter estimator $\tilde{\theta} : \mathcal{X}^{m_n} \to \Lambda$;
• the parameter encoder $\tilde{g} : \Lambda \to \tilde{\mathcal{S}}$, where $\tilde{\mathcal{S}} \subset \{0, 1\}^*$;
• the parameter decoder $\tilde{\psi} : \tilde{\mathcal{S}} \to \Lambda$.

Let $\tilde{f} = \tilde{g} \circ \tilde{\theta}$ denote the composition of the parameter estimator and the parameter encoder, which we refer to as the first-stage encoder, and let $\hat{\theta} = \tilde{\psi} \circ \tilde{f}$ denote the composition of the parameter decoder and the first-stage encoder. The decoder $\tilde{\psi}$ is the first-stage decoder. The collection $\{C^n_\theta : \theta \in \Lambda\}$ defines the second-stage codes. The encoder $f_*$ and the decoder $\varphi_*$ of $C^{n,m_n}_*$ are defined as

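$$ f_*(x^n, z^{m_n}) = \tilde{f}(z^{m_n}) \, f^n_{\hat{\theta}(z^{m_n})}(x^n) $$

and

$$ \varphi_*\left( \tilde{f}(z^{m_n}) \, f^n_{\hat{\theta}(z^{m_n})}(x^n) \right) = \varphi^n_{\hat{\theta}(z^{m_n})}\left( f^n_{\hat{\theta}(z^{m_n})}(x^n) \right) $$

respectively. To assess the performance of $C^{n,m_n}_*$, consider the function

$$ \ell_*(x^n, z^{m_n}) = \rho_n\left(x^n, C^{n,m_n}_*(x^n, z^{m_n})\right) + \lambda \ell_n\left(f_*(x^n, z^{m_n})\right). $$

The expectation of $\ell_*(X^n_1, X^0_{-m_n+1})$ with respect to $P_{\theta_0}$ is precisely the Lagrangian performance of $C^{n,m_n}_*$, at Lagrange multiplier $\lambda$, on the source $P_{\theta_0}$. We consider separately the contributions of the first-stage and the second-stage codes. Define another function by

$$ \ell_\theta(x^n) = \rho_n\left(x^n, C^n_\theta(x^n)\right) + \lambda \ell_n\left(f^n_\theta(x^n)\right) $$

so that $E_{\theta_0}\left[ \ell_{\hat{\theta}}(X^n_1) \mid X^0_{-m_n+1} \right]$ is the (random) Lagrangian performance of the code $C^n_{\hat{\theta}}$ on $P_{\theta_0}$. Hence

$$ \ell_*(x^n, z^{m_n}) = \ell_{\hat{\theta}(z^{m_n})}(x^n) + \lambda \ell_n\left(\tilde{f}(z^{m_n})\right) $$

so, taking expectations, we get

$$ L_{\theta_0}(C^{n,m_n}_*, \lambda) = E_{\theta_0}\left[ \ell_{\hat{\theta}}(X^n_1) \right] + \lambda E_{\theta_0}\left[ \ell_n\left(\tilde{f}(X^0_{-m_n+1})\right) \right]. \tag{9} $$

Our goal is to show that the first term in (9) converges to the $n$th-order optimum $\hat{L}^n_{\theta_0}(\lambda)$, and that the second term is $o(1)$.

The proof itself is organized as follows. First, we motivate the choice of the memory lengths $m_n$ in Section IV-B. Then, we indicate how to select the database (Section IV-C) and how to implement the parameter estimator $\tilde{\theta}$ (Section IV-D) and the parameter encoder/decoder pair $(\tilde{g}, \tilde{\psi})$ (Section IV-E). The proof is concluded by estimating the Lagrangian performance of the resulting code (Section IV-F) and the fidelity of the source identification at the decoder (Section IV-G). In the following, (in)equalities involving the relevant random variables are assumed to hold for all realizations and not just a.s., unless specified otherwise.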

B. The Memory Length

Let , where is the common decay exponentof the -mixing coefficients in Condition 1, and let

. We divide the -block into blocksof length interleaved by blocks of

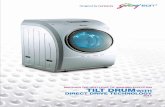

Fig. 1. The structure of the code� . The shaded blocks are those used forestimating the source parameters.

length (see Fig. 1). The parameter estimator , although de-fined as acting on the entire , effectively will make useonly of . The ’s are each distributed ac-cording to , but they are not independent. Thus, the set

is the effective memory of , and the effective memorylength is .

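Let $Q^{(n)}$ denote the marginal distribution of $Z^n$, and let $\tilde{Q}^{(n)}$ denote the product of $n$ copies of $P^n_{\theta_0}$. We now show that we can approximate $Q^{(n)}$ by $\tilde{Q}^{(n)}$ in variational distance, increasingly finely with $n$. Note that both $Q^{(n)}$ and $\tilde{Q}^{(n)}$ are defined on the $\sigma$-algebra generated by all $X_i$ in the $m_n$-block except those in $Y_1, \ldots, Y_n$, so that the distance between them is a variational distance with respect to this $\sigma$-algebra. Therefore, using induction and the definition of the $\beta$-mixing coefficient (cf. Section II-A), we have

$$ d(Q^{(n)}, \tilde{Q}^{(n)}) \le (n - 1) \beta_{\theta_0}(l_n) = O(n^{-(1+\eta)}) $$

where the last equality follows from Condition 1 and from our choice of $l_n$. This in turn implies the following useful fact (see also [29, Lemma 4.1]), which we will heavily use in the proof: for any measurable function $f : \mathcal{X}^{n^2} \to [0, M]$ with $M < \infty$

$$ \left| E_{Q^{(n)}} f - E_{\tilde{Q}^{(n)}} f \right| \le M (n - 1) \beta_{\theta_0}(l_n) = O(n^{-(1+\eta)}) \tag{10} $$

where the constant hidden in the $O(\cdot)$ notation depends on $\theta_0$ and on $M$.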

C. Construction of the Database

The database, or the first-stage codebook, is constructed by random selection. Let $W$ be a probability distribution on $\Lambda$ which is absolutely continuous with respect to the Lebesgue measure and has an everywhere positive and continuous density $w(\theta)$. Let $\Theta = \{\theta(i)\}_{i \ge 1}$ be a collection of independent random vectors taking values in $\Lambda$, each generated according to $W$ independently of $X$. We use $\mathbb{W}$ to denote the process distribution of $\Theta$.

Note that the first-stage codebook is countably infinite, which means that, in principle, both the encoder and the decoder must have unbounded memory in order to store it. This difficulty can be circumvented by using synchronized random number generators at the encoder and at the decoder, so that the entries of $\Theta$ can be generated as needed. Thus, by construction, the encoder will generate $T_n$ samples (where $T_n$ is the waiting time) and then communicate (a binary encoding of) $T_n$ to the decoder. Since the decoder’s random number generator is synchronized with that of the encoder, the decoder will be able to recover the required entry of $\Theta$.
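The idea of replacing a stored infinite codebook by synchronized random number generators can be sketched as follows. The example assumes a scalar parameter, a standard normal codebook distribution (whose density is positive everywhere), a shared integer seed, and a simple Euclidean closeness test in place of the variational ball; all of these are illustrative choices rather than details from the paper.

```python
import random
from typing import Iterator, Optional


def database_entries(seed: int) -> Iterator[float]:
    """Lazily generate the codebook entries theta(1), theta(2), ... from a shared seed."""
    rng = random.Random(seed)
    while True:
        yield rng.gauss(0.0, 1.0)


def encoder_find(theta_est: float, radius: float, seed: int,
                 max_search: int = 10 ** 6) -> Optional[int]:
    """Index of the first entry within `radius` of the estimate (None if the search gives up)."""
    for i, theta in enumerate(database_entries(seed), start=1):
        if abs(theta - theta_est) <= radius:
            return i
        if i >= max_search:
            return None
    return None


def decoder_lookup(index: int, seed: int) -> float:
    """Regenerate the first `index` entries and return the last one."""
    entries = database_entries(seed)
    for _ in range(index - 1):
        next(entries)
    return next(entries)


SHARED_SEED = 1234                  # assumed to be agreed upon in advance
index = encoder_find(0.4, 0.05, SHARED_SEED)
recovered = decoder_lookup(index, SHARED_SEED) if index is not None else None
print(index, recovered)
```

Only the index crosses the channel; the decoder regenerates the same pseudorandom sequence and reads off the entry, at the cost of redoing the generation work.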

D. Parameter Estimation

The parameter estimator is constructed as follows. Because the source is stationary, it suffices to describe the action of on . In the notation of Section IV-A, let

be the empirical distribution of . For every , define

where is the Yatracos class defined by the th-order densities (see Section III). Finally, define as any satisfying

where the extra term is there to ensure that at least one such exists. This is the so-called minimum-distance (MD) density estimator of Devroye and Lugosi [34], [35] (see also [37]), adapted to the dependent-process setting of this paper. The key property of the MD estimator is that

(11)

(see, e.g., [37, Th. 5.1]). This holds regardless of whether the samples are independent.
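To make the minimum-distance idea concrete, here is a simplified Python sketch. It departs from the setting of the paper in several labeled ways: the samples are scalar and i.i.d., the parameter set is a finite list of hypothetical Gaussian candidates (so an exact minimizer exists and no slack term is needed), and model probabilities of the Yatracos sets are approximated by a Riemann sum on a grid. The function names are illustrative only.

```python
import math
import random


def gaussian_pdf(x, mean, std):
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def md_estimate(samples, candidates, grid):
    """Pick the candidate (mean, std) whose probabilities of the Yatracos
    sets A_ij = {x : p_i(x) > p_j(x)} are uniformly closest to the
    empirical frequencies of those sets."""
    step = grid[1] - grid[0]
    k = len(candidates)
    worst_gap = [0.0] * k
    for i in range(k):
        for j in range(k):
            if i == j:
                continue

            def in_set(x, i=i, j=j):
                return gaussian_pdf(x, *candidates[i]) > gaussian_pdf(x, *candidates[j])

            # Empirical measure of A_ij.
            empirical = sum(1 for x in samples if in_set(x)) / len(samples)
            set_points = [x for x in grid if in_set(x)]
            for c in range(k):
                # Riemann-sum approximation of the model probability of A_ij.
                model = step * sum(gaussian_pdf(x, *candidates[c]) for x in set_points)
                worst_gap[c] = max(worst_gap[c], abs(model - empirical))
    return candidates[min(range(k), key=lambda c: worst_gap[c])]


rng = random.Random(0)
samples = [rng.gauss(1.0, 1.0) for _ in range(2000)]
candidates = [(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
grid = [-8.0 + 0.01 * t for t in range(1601)]
estimate = md_estimate(samples, candidates, grid)
print(estimate)
```

The estimator never evaluates a likelihood. It only compares probabilities of finitely many sets, which is why uniform deviation bounds of VC type control its error.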

E. Encoding and Decoding of Parameter Estimates

Next we construct the parameter encoder-decoder pair . Given a , define the waiting time

with the standard convention that the infimum of the empty set is equal to . That is, given a , the parameter encoder looks through the codebook and finds the position of the first such that the variational distance between the th-order distributions and is at most . If no such is found, the encoder sets . We then define the maps and by

ifif

and

respectively. Thus, , and the bound

(12)

holds for every , regardless of whether is finite or infinite.
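The waiting-time rule can be sketched as follows. This is an illustration under stated assumptions, not the paper's encoder: the codebook is scanned only up to a finite `horizon` (a practical truncation of the infinite search), and the caller supplies the distance, which here is the absolute difference between scalar parameters rather than a variational distance between distributions.

```python
import math
from itertools import islice


def waiting_time(theta_hat, codebook, distance, radius, horizon):
    """Return the 1-based position of the first codebook entry within
    `radius` of theta_hat, or math.inf if none of the first `horizon`
    entries qualifies (mirroring the empty-infimum convention)."""
    for index, entry in enumerate(islice(codebook, horizon), start=1):
        if distance(entry, theta_hat) <= radius:
            return index
    return math.inf


def abs_diff(a, b):
    return abs(a - b)


entries = [0.9, 0.1, 0.52, 0.49]
found = waiting_time(0.5, iter(entries), abs_diff, radius=0.05, horizon=10)
missed = waiting_time(0.5, iter(entries), abs_diff, radius=0.001, horizon=10)
print(found, missed)
```

When the entries are drawn independently and each one qualifies with the same probability, the returned index is a geometric random variable, which is the fact used in the analysis of the expected description length.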

F. Performance of the Code

Given the random codebook , the expected Lagrangian per-formance of our code on the source is

(13)

We now upper-bound the two terms in (13). We start with thesecond term.

We need to bound the expectation of the waiting time. Our strategy borrows some elements from [38]. Consider the probability

which is a random function of . From Condition 2, it follows for sufficiently large that

where . Because the density is everywhere positive, the latter probability is strictly positive for almost all , and so eventually a.s. Thus, the waiting times will be finite eventually a.s. (with respect to both the source and the first-stage codebook ). Now, if , then, conditioned on , the waiting time is a geometric random variable with parameter , and it is not difficult to show (see, e.g., [38, Lemma 3]) that for any

Setting , we have, for almost all , that

Then, by the Borel–Cantelli lemma

eventually a.s., so that

(14)


for almost every realization of the random codebook and for sufficiently large . We now obtain an asymptotic lower bound on . Define the events

Then, by the triangle inequality, we have

and

and, for sufficiently large, we can write

where (a) follows from the independence of and , (b) follows from the fact that the parameter estimator depends only on , and (c) follows from Condition 2 and the fact that . Since the density is everywhere positive and continuous at , for all for sufficiently large, so

(15)

where is the volume of the unit sphere in . Next, the fact that the minimum-distance estimate depends only on implies that the event belongs to the σ-algebra , and from (10), we get

(16)

Under , the -blocks are i.i.d. according to , and we can invoke the VC machinery to lower bound . In the notation of Section IV-D, define the event

Then, implies by (11), and

(17)

where the second bound is by the VC inequality (3) of Lemma 2.1. Combining the bounds (16) and (17) and using Condition 1, we obtain

(18)

Now, if we choose

then the right-hand side of (18) can be further lower bounded by . Combining this with (15), taking logarithms, and then taking expectations, we obtain

where is a constant that depends only on and . Using this and (14), we get that

for -almost every realization of the random codebook , for sufficiently large. Together with (12), this implies that

for -almost all realizations of the first-stage codebook.

We now turn to the first term in (13). Recall that, for each , the code is th-order optimal for . Using this fact together with the boundedness of the distortion measure , we can invoke Lemma A.3 in the Appendix and assume without loss of generality that each has a finite codebook (of size not exceeding ), and each codevector can be described by a binary string of no more than bits. Hence, . Let and be the marginal distributions of on and , respectively. Note that does not depend on . This, together with Condition 1 and the choice of , implies that

Furthermore

where (a) follows by Fubini’s theorem and the boundedness of , while (b) follows from the definition of . The Lagrangian performance of the code , where , can be further bounded as


where (a) follows from Lemma A.3 in the Appendix, (b) follows from the th-order optimality of for , (c) follows, overbounding slightly, from the Lagrangian mismatch bound of Lemma A.2 in the Appendix, and (d) follows from the triangle inequality. Taking expectations, we obtain

(19)

The second term in (19) can be interpreted as the estimation error due to estimating by , while the first is the approximation error due to quantization of the parameter estimate . We examine the estimation error first. Using (11), we can write

(20)

Now, each is distributed according to , and we can approximate the expectation of with respect to by the expectation of with respect to the product measure

where the second estimate follows from the VC inequality (4) and from the choice of . This, together with (20), yields

(21)

As for the first term in (19), we have, by construction of the first-stage encoder, that

(22)

eventually a.s., so the corresponding expectation is as well. Summing the estimates (21) and (22), we obtain

Finally, putting everything together, we see that, eventually

(23)

for -almost every realization of the first-stage codebook . This proves (8), and hence (7).

G. Identification of the Active Source

We have seen that the expected variational distance between the -dimensional marginals of the true source and the estimated source converges to zero as . We wish to show that this convergence also holds eventually with probability one, i.e.,

(24)

-a.s. Given an , we have by the triangle inequality that

implies

where is the minimum-distance estimate of from (cf. Section IV-D). Recalling our construction of the first-stage encoder, we see that this further implies

Finally, using the property (11) of the minimum-distance estimator, we obtain that

implies

Therefore

(25)

where (a) follows, as before, from the definition of the β-mixing coefficient and (b) follows by the VC inequality. Now, if we choose


for an arbitrarily small , then (25) can be further upper bounded by , which, owing to Condition 1 and the choice , is summable in . Thus

and we obtain (24) by the Borel–Cantelli lemma.

V. EXAMPLES

A. Stationary Memoryless Sources

As a basic check, let us see how Theorem 3.1 applies to stationary memoryless (i.i.d.) sources. Let , and let

be the collection of all Gaussian i.i.d. processes, where

Then, the -dimensional marginal for a given has the Gaussian density

with respect to the Lebesgue measure. This class of sources trivially satisfies Condition 1 with , and it remains to check Conditions 2 and 3.

To check Condition 2, let us examine the normalized th-order relative entropy between and , with

and . Because the sources are i.i.d.,

Applying the inequality and some straightforward algebra, we get the bound

Now fix a small , and suppose that . Then, , so we can further upper bound by

Thus, for a given , we see that

for all in the open ball of radius around , with . Using Pinsker’s inequality, we have

for all . Thus, Condition 2 holds.
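The step from relative entropy to variational distance can be checked numerically. The sketch below is only an illustration under stated assumptions: it uses the standard closed form for the relative entropy between two scalar Gaussians (in nats), takes the L1 distance between densities as the variational distance (other normalizations differ by a factor of 2), and approximates the integral by a Riemann sum. With that convention, Pinsker's inequality says the L1 distance is at most the square root of twice the relative entropy.

```python
import math


def kl_gaussian(m1, s1, m2, s2):
    """Relative entropy D(N(m1, s1^2) || N(m2, s2^2)) in nats."""
    return math.log(s2 / s1) + (s1 ** 2 + (m1 - m2) ** 2) / (2.0 * s2 ** 2) - 0.5


def l1_distance(m1, s1, m2, s2, lo=-30.0, hi=30.0, steps=60000):
    """Riemann-sum approximation of the integral of |p - q| over [lo, hi]."""
    def pdf(x, m, s):
        z = (x - m) / s
        return math.exp(-0.5 * z * z) / (s * math.sqrt(2.0 * math.pi))

    dx = (hi - lo) / steps
    return dx * sum(
        abs(pdf(lo + (t + 0.5) * dx, m1, s1) - pdf(lo + (t + 0.5) * dx, m2, s2))
        for t in range(steps)
    )


kl = kl_gaussian(0.0, 1.0, 0.3, 1.2)
l1 = l1_distance(0.0, 1.0, 0.3, 1.2)
pinsker_bound = math.sqrt(2.0 * kl)
print(kl, l1, pinsker_bound)
```

For i.i.d. sources the th-order relative entropy is the single-letter value multiplied by the block length, so the normalized quantity examined above reduces to `kl_gaussian` evaluated on the one-dimensional marginals.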

To check Condition 3, note that, for each , the Yatracos class consists of all sets of the form

(26)

for all ; . Let and . Then, we can rewrite (26) as

This is the set of all such that

where is a third-degree polynomial in the six parameters . It then follows from Lemma 2.2 that is a VC class with . Therefore, Condition 3 holds as well.

B. Autoregressive Sources

Again, let and consider the case when is a Gaussian autoregressive source of order , i.e., it is the output of an autoregressive filter of order driven by white Gaussian noise. Then, there exist real parameters (the filter coefficients), such that

where is an i.i.d. Gaussian process with zero mean and unit variance. Let be the set of all , such that the roots of the polynomial , where , lie outside the unit circle in the complex plane. This ensures that is a stationary process. We now proceed to check that Conditions 1–3 of Section III are satisfied.
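Membership in the parameter set can be tested without computing roots. The Python sketch below uses the step-down (Schur–Cohn type) recursion, a standard stability test that is swapped in here for illustration and is not taken from the paper. It assumes the polynomial is written as A(z) = 1 + a_1 z + ... + a_p z^p with real coefficients; the paper's sign convention for the filter coefficients may differ, so the mapping from filter coefficients to `coeffs` is an assumption.

```python
def is_stationary_ar(coeffs):
    """coeffs = [a_1, ..., a_p] of A(z) = 1 + a_1 z + ... + a_p z^p.

    Returns True iff every root of A lies strictly outside the unit circle,
    which holds iff every reflection coefficient produced by the step-down
    recursion has magnitude strictly below one."""
    a = list(coeffs)
    while a:
        k = a[-1]  # reflection coefficient at the current order
        if abs(k) >= 1.0:
            return False
        denom = 1.0 - k * k
        m = len(a)
        # Reduce the order by one.
        a = [(a[i] - k * a[m - 2 - i]) / denom for i in range(m - 1)]
    return True


# X_i = 0.5 X_{i-1} + Y_i corresponds to A(z) = 1 - 0.5 z (root at z = 2).
print(is_stationary_ar([-0.5]))
# X_i = 1.5 X_{i-1} + Y_i corresponds to A(z) = 1 - 1.5 z (root at z = 2/3).
print(is_stationary_ar([-1.5]))
```

For second-order filters the test reproduces the familiar stationarity triangle, which gives a quick sanity check on the recursion.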

The distribution of each is absolutely continuous, and we can invoke the result of Mokkadem [31] to conclude that, for each , the process is geometrically mixing, i.e., for every , there exists some , such that . Now, for any fixed , for sufficiently large, so Condition 1 holds.

To check Condition 2, note that, for each , the Fisher information matrix is independent of (see, e.g., [39, Sec. VI]). Thus, the asymptotic Fisher information matrix

exists and is nonsingular [39, Th. 6.1], so, recalling the discussion in Section III, we conclude that Condition 2 holds also.

To verify Condition 3, consider the -dimensional marginal , which has the Gaussian density


where is the th-order autocorrelation matrix of . Thus, the Yatracos class consists of sets of the form

for all . Now, for every , let . Since is uniquely determined by , we have for all . Using this fact, as well as the easily established fact that the entries of the inverse covariance matrix are second-degree polynomials in the filter coefficients , we see that, for each , the condition can be expressed as , where is quadratic in the real variables . Thus, we can apply Lemma 2.2 to conclude that . Therefore, Condition 3 is satisfied as well.

C. Hidden Markov Processes

A hidden Markov process (or a hidden Markov model; see, e.g., [40]) is a discrete-time bivariate random process , where is a homogeneous Markov chain and is a sequence of random variables which are conditionally independent given , and the conditional distribution of is time-invariant and depends on only through . The Markov chain , also called the regime, is not available for observation. The observable component is the source of interest. In information theory (see, e.g., [41] and references therein), a hidden Markov process is a discrete-time finite-state homogeneous Markov chain , observed through a discrete-time memoryless channel, so that is the observation sequence at the output of the channel.

Let denote the number of states of . We assume without loss of generality that the state space of is the set . Let denote the transition matrix of , where . If is ergodic (i.e., irreducible and aperiodic), then there exists a unique probability distribution on such that (the stationary distribution of ); see, e.g., [42, Sec. 8]. Because in this paper we deal with two-sided random processes, we assume that has been initialized with its stationary distribution at some time sufficiently far away in the past, and can therefore be thought of as a two-sided stationary process. Now consider a discrete-time memoryless channel with input alphabet and output (observation) alphabet for some . It is specified by a set of transition densities (with respect to , the Lebesgue measure on ). The channel output sequence is the source of interest.
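For a concrete feel for the stationary distribution of an ergodic finite-state chain, the following Python sketch computes it by power iteration. It is illustrative only: the two-state transition matrix `Q` is a hypothetical example, and the iteration count and tolerance are arbitrary choices. Convergence of the iteration at a geometric rate is the same phenomenon as the geometric ergodicity invoked for strictly positive transition matrices.

```python
def stationary_distribution(Q, iterations=10000, tol=1e-13):
    """Approximate the row vector pi with pi = pi Q by repeatedly
    multiplying an initial uniform distribution by Q."""
    n = len(Q)
    pi = [1.0 / n] * n
    for _ in range(iterations):
        nxt = [sum(pi[i] * Q[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(pi, nxt)) < tol:
            return nxt
        pi = nxt
    return pi


# Hypothetical two-state chain with strictly positive transition probabilities.
Q = [[0.9, 0.1],
     [0.5, 0.5]]
pi = stationary_distribution(Q)
print(pi)
```

For this matrix the balance equation gives the stationary distribution (5/6, 1/6), which the iteration reproduces to numerical precision.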

Let us take as the parameter space the set of all transition matrices , such that all for some fixed . For each and each , the density is given by

where for every . We assume that the channel transition densities , are fixed a priori, and do not include them in the parametric description of the sources. We do require, though, that

and

We now proceed to verify that Conditions 1–3 of Section III are met.

Let denote the -step transition probability for states . The positivity of implies that the Markov chain is geometrically ergodic, i.e.,

(27)

where and ; see [42, Th. 8.9]. Note that (27) implies that

This in turn implies that the sequence is exponentially β-mixing; see [24, Th. 3.10]. Now, one can show (see [24, Sec. 3.5.3]) that there exists a measurable mapping , such that , where is an i.i.d. sequence of random variables distributed uniformly on , independently of . It is not hard to show that, if is exponentially β-mixing, then so is the bivariate process . Finally, because is given by a time-invariant deterministic function of , the β-mixing coefficients of are bounded by the corresponding β-mixing coefficients of , and so is exponentially β-mixing as well. Thus, for each , there exists a , such that , and consequently Condition 1 holds.

To show that Condition 2 holds, we again examine the asymptotic behavior of the Fisher information matrix as . Under our assumptions on the state transition matrices in and on the channel transition densities , we can invoke the results of [43, Sec. 6.2] to conclude that the asymptotic Fisher information matrix exists (though it is not necessarily nonsingular). Thus, Condition 2 is satisfied.

Finally, we check Condition 3. The Yatracos class consists of all sets of the form

for all . The condition can be written as , where for each , is a polynomial of degree in the parameters , , . Thus, Lemma 2.2 implies that , so Condition 3 is satisfied as well.


VI. CONCLUSION AND FUTURE DIRECTIONS

We have shown that, given a parametric family of stationary mixing sources satisfying some regularity conditions, there exists a universal scheme for joint lossy compression and source identification, with the th-order Lagrangian redundancy and the variational distance between -dimensional marginals of the true and the estimated source both converging to zero as , as the block length tends to infinity. The sequence quantifies the learnability of the -dimensional marginals. This generalizes our previous results from [9] and [10] for i.i.d. sources.

We can outline some directions for future research.

• Both in our earlier work [9], [10] and in this paper, we assume that the dimension of the parameter space is known a priori. It would be of interest to consider the case when the parameter space is finite dimensional, but its dimension is not known. Thus, we would have a hierarchical model class , where, for each , is an open subset of , and we could use a complexity regularization technique, such as “structural risk minimization” (see, e.g., [44] or [22, Ch. 6]), to adaptively trade off the estimation and the approximation errors.

• The minimum-distance density estimator of [34] and [35], which plays the key role in our scheme both here and in [9] and [10], is not easy to implement in practice, especially for multidimensional alphabets. On the other hand, there are two-stage universal schemes, such as that of [14], which do not require memory and select the second-stage code based on pointwise, rather than average, behavior of the source. These schemes, however, are geared toward compression, and do not emphasize identification. It would be worthwhile to devise practically implementable universal schemes that strike a reasonable compromise between these two objectives.

• Finally, neither here nor in our earlier work [9], [10] have we considered the issues of optimality. It would be of interest to obtain lower bounds on the performance of any universal scheme for joint lossy compression and identification, say, in the spirit of minimax lower bounds in statistical learning theory (cf., e.g., [21, Ch. 14]).

Conceptually, our results indicate that links between statistical modeling (parameter estimation) and universal source coding, exploited in the lossless case by Rissanen [2], [3], are present in the domain of lossy coding as well. We should also mention that another modeling-based approach to universal lossy source coding, due to Kontoyiannis and others (see, e.g., [45] and references therein), treats code selection as a statistical estimation problem over a class of model distributions in the reproduction space. This approach, while closer in spirit to Rissanen’s minimum description length (MDL) principle [46], does not address the problem of joint source coding and identification, but it provides a complementary perspective on the connections between lossy source coding and statistical modeling.

APPENDIX

PROPERTIES OF LAGRANGE-OPTIMAL VARIABLE-RATE QUANTIZERS

In this Appendix, we detail some properties of Lagrange-optimal variable-rate vector quantizers. Our exposition is patterned on the work of Linder [19], with appropriate modifications.

As elsewhere in the paper, let be the source alphabet and the reproduction alphabet, both assumed to be Polish spaces. As before, let the distortion function be induced by a -bounded metric on a Polish space containing . For every , define the metric on by

For any pair of probability measures on , let be the set of all probability measures on having and as marginals, and define the Wasserstein metric

(see [47] for more details and applications). Note that, because is a bounded metric

for all . Taking the infimum of both sides over all and observing that

(see, e.g., [48, Sec. I.5]), we get the useful bound

(A.1)

Now, for each , let denote the set of all discrete probability distributions on with finite entropy. That is, if and only if it is concentrated on a finite or a countable set , and


For every , consider the set of all one-to-one maps , such that, for each , the collection satisfies the Kraft inequality, and let

be the minimum expected code length. Since the entropy of is finite, there is always a minimizing , and the Shannon–Fano bound (see [1, Sec. 5.4]) guarantees that .
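The relationship between the Kraft inequality, code lengths, and entropy can be illustrated with a short Python sketch. It assumes code lengths measured in bits and uses the Shannon code lengths (the ceiling of the negative base-2 logarithm of each probability), which are generally not the minimizing lengths but already achieve an expected length within one bit of the entropy. The four-point distribution is a hypothetical example.

```python
import math


def shannon_lengths(probs):
    """Shannon code lengths: ceil(-log2 p) for each probability p > 0."""
    return [math.ceil(-math.log2(p)) for p in probs]


def kraft_sum(lengths):
    """Left-hand side of the Kraft inequality for binary codes."""
    return sum(2.0 ** (-length) for length in lengths)


def entropy_bits(probs):
    return -sum(p * math.log2(p) for p in probs)


probs = [0.4, 0.3, 0.2, 0.1]
lengths = shannon_lengths(probs)
expected_length = sum(p * l for p, l in zip(probs, lengths))
print(lengths, kraft_sum(lengths), entropy_bits(probs), expected_length)
```

Because these lengths satisfy the Kraft inequality, a prefix code with exactly these lengths exists, so the minimum expected code length can only be smaller than `expected_length`.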

Now, for any , any probability distribution on , and any , define

To give an intuitive meaning to , let and be jointly distributed random variables with and , such that their joint distribution achieves . Then, is the expected Lagrangian performance, at Lagrange multiplier , of a stochastic variable-rate quantizer which encodes each point as a binary codeword with length and decodes it to in the support of with probability .

The following lemma shows that deterministic quantizers are as good as random ones.

Lemma A.1: Let be the expected Lagrangian performance of an -block variable-rate quantizer operating on , and let be the expected Lagrangian performance, with respect to , of the best -block variable-rate quantizer. Then

Proof: Consider any quantizer with . Let be the distribution of . Clearly, , and

Hence, . To prove the reverse inequality, suppose that and achieve for some . Let be their joint distribution. Let be the support of , let achieve , and let be the associated binary code. Define the quantizer by

if

and

Then

On the other hand

so that , and the lemma is proved.

The following lemma gives a useful upper bound on the Lagrangian mismatch.

Lemma A.2: Let , be probability distributions on . Then

Proof: Suppose . Let achieve (or be arbitrarily close). Then

where in (a) we used Lemma A.1, in (b) we used the definition of , in (c) we used the fact that is a metric and the triangle inequality, and in (d) we used the bound (A.1).

Finally, Lemma A.3 below shows that, for bounded distortion functions, Lagrange-optimal quantizers have finite codebooks.

Lemma A.3: For positive integers and , let denote the set of all zero-memory variable-rate quantizers with block length , such that for every , the associated binary code of satisfies and for every . Let be a probability distribution on . Then

with and .


Proof: Let with encoder and decoder achieve the th-order optimum for . Let be the shortest binary string in , i.e.,

Without loss of generality, we can take as the minimum-distortion encoder, i.e.,

Thus, for any and any

Hence, for all . Furthermore, .

Now pick an arbitrary reproduction string , let be the empty binary string (of length zero), and let be the zero-rate quantizer with the constant encoder and the decoder . Then, . On the other hand, . Therefore

so that . Hence

Since the strings in must satisfy Kraft’s inequality, we have

which implies that .

ACKNOWLEDGMENT

The author would like to thank A. R. Barron, I. Kontoyiannis, and M. Madiman for stimulating discussions, and the anonymous reviewers for several useful suggestions that helped improve the paper.

REFERENCES

[1] T. M. Cover and J. A. Thomas, Elements of Information Theory. New York: Wiley, 1991.

[2] J. Rissanen, “Universal coding, information, prediction, and estimation,” IEEE Trans. Inf. Theory, vol. IT-30, no. 4, pp. 629–636, Jul. 1984.

[3] J. Rissanen, “Fisher information and stochastic complexity,” IEEE Trans. Inf. Theory, vol. 42, no. 1, pp. 40–47, Jan. 1996.

[4] J. Ziv and A. Lempel, “Compression of individual sequences by variable-rate coding,” IEEE Trans. Inf. Theory, vol. IT-24, no. 5, pp. 530–536, Sep. 1978.

[5] J. C. Kieffer, “Strongly consistent code-based identification and order estimation for constrained finite-state model classes,” IEEE Trans. Inf. Theory, vol. 39, no. 3, pp. 893–902, May 1993.

[6] N. Merhav, “Bounds on achievable convergence rates of parameter estimation via universal coding,” IEEE Trans. Inf. Theory, vol. 40, no. 4, pp. 1210–1215, Jul. 1994.

[7] T. Weissman and E. Ordentlich, “The empirical distribution of rate-constrained source codes,” IEEE Trans. Inf. Theory, vol. 51, no. 11, pp. 3718–3733, Nov. 2005.

[8] G. Tao, Adaptive Control Design and Analysis. Hoboken, NJ: Wiley, 2003.

[9] M. Raginsky, “Joint fixed-rate universal lossy coding and identification of continuous-alphabet memoryless sources,” IEEE Trans. Inf. Theory, vol. 54, no. 7, pp. 3059–3077, Jul. 2008.

[10] M. Raginsky, “Joint universal lossy coding and identification of i.i.d. vector sources,” in Proc. IEEE Int. Symp. Inf. Theory, Seattle, WA, Jul. 2006, pp. 577–581.

[11] E.-H. Yang and Z. Zhang, “On the redundancy of lossy source coding with abstract alphabets,” IEEE Trans. Inf. Theory, vol. 45, no. 4, pp. 1092–1110, May 1999.

[12] V. N. Vapnik and A. Y. Chervonenkis, “On the uniform convergence of relative frequencies of events to their probabilities,” Theory Probab. Appl., vol. 16, pp. 264–280, 1971.

[13] P. A. Chou, T. Lookabaugh, and R. M. Gray, “Entropy-constrained vector quantization,” IEEE Trans. Acoust. Speech Signal Process., vol. 37, no. 1, pp. 31–42, Jan. 1989.

[14] P. A. Chou, M. Effros, and R. M. Gray, “A vector quantization approach to universal noiseless coding and quantization,” IEEE Trans. Inf. Theory, vol. 42, no. 4, pp. 1109–1138, Jul. 1996.

[15] R. M. Gray, Entropy and Information Theory. New York: Springer-Verlag, 1990.

[16] V. A. Volkonskii and Y. A. Rozanov, “Some limit theorems for random functions, I,” Theory Probab. Appl., vol. 4, pp. 178–197, 1959.

[17] V. A. Volkonskii and Y. A. Rozanov, “Some limit theorems for random functions, II,” Theory Probab. Appl., vol. 6, pp. 186–198, 1961.

[18] D. L. Neuhoff and R. K. Gilbert, “Causal source codes,” IEEE Trans. Inf. Theory, vol. IT-28, no. 5, pp. 701–713, Sep. 1982.

[19] T. Linder, “Learning-theoretic methods in vector quantization,” in Principles of Nonparametric Learning, L. Györfi, Ed. New York: Springer-Verlag, 2001.

[20] M. Effros, P. A. Chou, and R. M. Gray, “Variable-rate source coding theorems for stationary nonergodic sources,” IEEE Trans. Inf. Theory, vol. 40, no. 6, pp. 1920–1925, Nov. 1994.

[21] L. Devroye, L. Györfi, and G. Lugosi, A Probabilistic Theory of Pattern Recognition. New York: Springer-Verlag, 1996.

[22] V. N. Vapnik, Statistical Learning Theory. New York: Wiley, 1998.

[23] L. Devroye and G. Lugosi, Combinatorial Methods in Density Estimation. New York: Springer-Verlag, 2001.

[24] M. Vidyasagar, Learning and Generalization, 2nd ed. London, U.K.: Springer-Verlag, 2003.

[25] N. Sauer, “On the density of families of sets,” J. Combin. Theory Series A, vol. 13, pp. 145–147, 1972.

[26] R. M. Dudley, “Central limit theorems for empirical measures,” Ann. Probab., vol. 6, pp. 898–929, 1978.

[27] M. Karpinski and A. Macintyre, “Polynomial bounds for VC dimension of sigmoidal and general Pfaffian neural networks,” J. Comput. Syst. Sci., vol. 54, pp. 169–176, 1997.

[28] S. N. Bernstein, “Sur l’extension du théorème limite du calcul des probabilités aux sommes de quantités dependantes,” Math. Ann., vol. 97, pp. 1–59, 1927.

[29] B. Yu, “Rates of convergence for empirical processes of stationary mixing sequences,” Ann. Probab., vol. 22, no. 1, pp. 94–116, 1994.

[30] R. Meir, “Nonparametric time series prediction through adaptive model selection,” Mach. Learn., vol. 39, pp. 5–34, 2000.

[31] A. Mokkadem, “Mixing properties of ARMA processes,” Stochastic Process. Appl., vol. 29, pp. 309–315, 1988.

[32] B. S. Clarke and A. R. Barron, “Information-theoretic asymptotics of Bayes methods,” IEEE Trans. Inf. Theory, vol. 36, no. 3, pp. 453–471, May 1990.

[33] Y. G. Yatracos, “Rates of convergence of minimum distance estimates and Kolmogorov’s entropy,” Ann. Math. Statist., vol. 13, pp. 768–774, 1985.

[34] L. Devroye and G. Lugosi, “A universally acceptable smoothing factor for kernel density estimation,” Ann. Statist., vol. 24, pp. 2499–2512, 1996.

[35] L. Devroye and G. Lugosi, “Nonasymptotic universal smoothing factors, kernel complexity and Yatracos classes,” Ann. Statist., vol. 25, pp. 2626–2637, 1997.


[36] P. Elias, “Universal codeword sets and representations of the integers,” IEEE Trans. Inf. Theory, vol. IT-21, no. 2, pp. 194–203, Mar. 1975.

[37] L. Devroye and L. Györfi, “Distribution and density estimation,” in Principles of Nonparametric Learning, L. Györfi, Ed. New York: Springer-Verlag, 2001.

[38] I. Kontoyiannis and J. Zhang, “Arbitrary source models and Bayesian codebooks in rate-distortion theory,” IEEE Trans. Inf. Theory, vol. 48, no. 8, pp. 2276–2290, Aug. 2002.

[39] A. Klein and P. Spreij, “The Bezoutian, state space realizations and Fisher’s information matrix of an ARMA process,” Lin. Algebra Appl., vol. 416, pp. 160–174, 2006.

[40] P. J. Bickel, Y. Ritov, and T. Rydén, “Asymptotic normality of the maximum-likelihood estimator for general hidden Markov models,” Ann. Statist., vol. 26, no. 4, pp. 1614–1635, 1997.

[41] Y. Ephraim and N. Merhav, “Hidden Markov processes,” IEEE Trans. Inf. Theory, vol. 48, no. 6, pp. 1518–1569, Jun. 2002.

[42] P. Billingsley, Probability and Measure, 3rd ed. New York: Wiley.

[43] R. Douc, É. Moulines, and T. Rydén, “Asymptotic properties of the maximum likelihood estimator in autoregressive models with Markov regime,” Ann. Statist., vol. 32, no. 5, pp. 2254–2304, 2004.

[44] G. Lugosi and K. Zeger, “Concept learning using complexity regularization,” IEEE Trans. Inf. Theory, vol. 42, no. 1, pp. 48–54, Jan. 1996.

[45] M. Madiman and I. Kontoyiannis, “Second-order properties of lossy likelihoods and the MLE/MDL dichotomy in lossy compression,” Brown University, APPTS Report-5, 2004 [Online]. Available: http://www.dam.brown.edu/ptg/REPORTS/04–5.pdf

[46] A. Barron, J. Rissanen, and B. Yu, “Minimum description length principle in coding and modeling,” IEEE Trans. Inf. Theory, vol. 44, no. 6, pp. 2743–2760, Oct. 1998.

[47] R. M. Gray, D. L. Neuhoff, and P. S. Shields, “A generalization of Ornstein’s $\bar{d}$ distance with applications to information theory,” Ann. Probab., vol. 3, no. 2, pp. 315–328, 1975.

[48] T. Lindvall, Lectures on the Coupling Method. New York: Dover, 2002.

Maxim Raginsky (S’99–M’00) received the B.S. and M.S. degrees in 2000 and the Ph.D. degree in 2002 from Northwestern University, Chicago, IL, all in electrical engineering.

From 2002 to 2004, he was a Postdoctoral Researcher at the Center for Photonic Communication and Computing, Northwestern University, where he pursued work on quantum cryptography and quantum communication and information theory. From 2004 to 2007, he was a Beckman Foundation Postdoctoral Fellow at the University of Illinois in Urbana-Champaign, where he carried out research on information theory, statistical learning and computational neuroscience. In September 2007, he joined the Department of Electrical and Computer Engineering at Duke University as a Research Scientist. His interests include statistical signal processing, information theory, statistical learning, and nonparametric estimation. He is particularly interested in problems that combine the communication, signal processing, and machine learning components in a novel and nontrivial way, as well as in the theory and practice of robust statistical inference with limited information.
