Feature Forest Models for Syntactic Parsing Yusuke Miyao University of Tokyo.


Feature Forest Models for Syntactic Parsing

Yusuke Miyao

University of Tokyo

Probabilistic models for NLP

• Widely used for disambiguation of linguistic structures

• Ex.) POS tagging

[Figure: tag lattice over "A pretty girl is crying"; each word has the candidate tags NN, DT, VBZ, JJ, VBG; example primitive probability P(NN | a/NN, pretty)]

Implicit assumption

• Processing state = Primitive probability
– Efficient algorithm for searching
– Avoid exponential explosion of ambiguities

[Figure: tag lattice over "A pretty girl is crying" with the candidate tags NN, DT, VBZ, JJ, VBG for each word]

POS tag = processing state = primitive probability
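The "efficient algorithm for searching" that this assumption buys can be sketched with a Viterbi search over the tag lattice. This is a minimal illustration, not code from the talk: the function names, the toy lexicon `PREFERRED`, and every probability value are invented for the example.

```python
import math

def viterbi(words, tags, log_score):
    """Return the highest-scoring tag sequence.

    log_score(prev_tag, tag, word) is a hypothetical local log-probability;
    prev_tag is None at the first word.
    """
    # best[t] = (score of the best path ending in tag t, that path)
    best = {t: (log_score(None, t, words[0]), [t]) for t in tags}
    for word in words[1:]:
        new_best = {}
        for t in tags:
            # Only the previous tag matters, so one max per state suffices.
            score, prev_path = max(
                ((s + log_score(p, t, word), pp) for p, (s, pp) in best.items()),
                key=lambda pair: pair[0],
            )
            new_best[t] = (score, prev_path + [t])
        best = new_best
    return max(best.values(), key=lambda pair: pair[0])[1]

TAGS = ["DT", "JJ", "NN", "VBZ", "VBG"]
# Invented toy lexicon: the tag each word prefers (not taken from any corpus).
PREFERRED = {"A": "DT", "pretty": "JJ", "girl": "NN", "is": "VBZ", "crying": "VBG"}

def toy_log_score(prev_tag, tag, word):
    # Invented emission and transition values, for illustration only.
    emit = math.log(0.8) if PREFERRED.get(word) == tag else math.log(0.05)
    trans = math.log(0.1) if prev_tag == tag else math.log(0.225)
    return emit + trans

words = "A pretty girl is crying".split()
best_tags = viterbi(words, TAGS, toy_log_score)
print(best_tags)
```

The search visits each word once and keeps one best path per tag, so its cost grows linearly with sentence length even though the lattice encodes 5^5 tag sequences.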

Is the assumption right?

• Ex.) Shallow parsing, NE recognition
– B (Begin), I (Internal), O (Other) tags are introduced to represent multi-word tags

[Figure: tag lattice over "A pretty girl is crying"; each word has the candidate tags NP-B, NP-I, VP-B, VP-I, O]

Is the assumption right?

• Ex.) Syntactic parsing
– Non-local dependencies are not represented

[Figure: parse tree of "What do you want to give?" with nodes VP, VP, S, S, S; example primitive probability P(VP | VP → to give)]

Problem of existing models

• Processing state Primitive probability


• How to model the probability of ambiguous structures with more flexibility?

Possible solution

• A complete structure is a primitive event
– Ex.) Shallow parsing

[Figure: the B/I/O tag lattice over "A pretty girl is crying" (NP-B, NP-I, VP-B, VP-I, O) is replaced by all possible sequences of multi-word tags, such as "NP VP" and "NP VP NP"]

• Probability of the sequence of multi-word tags

Possible solution

• A complete structure is a primitive event
– Ex.) Syntactic parsing

[Figure: the parse tree of "What do you want to give?" (nodes VP, VP, S, S, S) is replaced by its argument structure: dependencies among "what", "do", "you", "want", "to", "give" with the labels ARG1, ARG1, ARG2, MODIFY, MODIFY, ARG2]

• Probability of argument structures

Problem

• Complete structures have exponentially many ambiguities

[Figure: exponentially many sequences of multi-word tags over "A pretty girl is crying", such as "NP VP" and "NP VP NP"]
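To make the growth concrete, the sketch below enumerates every way to split a sentence into contiguous labeled chunks. It is a simplified illustration rather than the setup used in the talk: the function name is hypothetical, only the two labels NP and VP are used, and words left outside any chunk (the O tag) are ignored.

```python
from itertools import product

def labeled_chunkings(words, labels):
    """Enumerate every segmentation of words into contiguous labeled chunks."""
    n = len(words)
    results = []
    # Each of the n-1 gaps between words is either a chunk boundary or not.
    for gaps in product([False, True], repeat=n - 1):
        chunks, start = [], 0
        for i, is_boundary in enumerate(gaps, start=1):
            if is_boundary:
                chunks.append(words[start:i])
                start = i
        chunks.append(words[start:])
        # Every chunk independently takes one of the labels.
        for assignment in product(labels, repeat=len(chunks)):
            results.append(list(zip(assignment, chunks)))
    return results

words = "A pretty girl is crying".split()
all_seqs = labeled_chunkings(words, ["NP", "VP"])
print(len(all_seqs))  # 162 for 5 words and 2 labels
```

With k labels and n words this enumeration yields k(k+1)^(n-1) structures, so treating each complete structure as a separate event is infeasible for realistic sentence lengths unless the structures are packed.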

Proposal

• Feature forest model [Miyao and Tsujii, 2002]

[Figure: a feature forest with conjunctive nodes c1 to c10 and disjunctive nodes d1 to d7; features (f1, f2, …) are attached to a conjunctive node, shown for c6]

• Exponentially many trees are packed

• Features are assigned to each conjunctive node

Feature forest model

• Feature forest models can be estimated efficiently, without exponential explosion [Miyao and Tsujii, 2002]

• When the forest is unpacked, the model is equivalent to a maximum entropy model [Berger et al., 1996]
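A minimal sketch of why packing helps, assuming a log-linear score per conjunctive node: the normalization constant can be computed bottom-up over the packed forest (sum over the alternatives of a disjunctive node, product over the daughters of a conjunctive node), and it matches the sum over all unpacked trees. The node names, features, and weights below are invented for illustration, and this is not the authors' implementation; a full estimator would also need expected feature counts, which are omitted here.

```python
import math
from itertools import product

# Conjunctive node: (feature names, ids of daughter disjunctive nodes).
CONJ = {
    "c1": (["f1"], ["d2", "d3"]),
    "c2": (["f2"], []),
    "c3": (["f3"], []),
    "c4": (["f1", "f4"], []),
    "c5": (["f5"], []),
}
# Disjunctive node: ids of alternative conjunctive nodes. "d1" is the root.
DISJ = {"d1": ["c1"], "d2": ["c2", "c3"], "d3": ["c4", "c5"]}
# Invented feature weights.
WEIGHTS = {"f1": 0.5, "f2": -0.3, "f3": 0.2, "f4": 1.0, "f5": 0.0}

def inside_conj(c, memo):
    """exp(weights of c's features) times the inside scores of its daughters."""
    if c not in memo:
        feats, daughters = CONJ[c]
        score = math.exp(sum(WEIGHTS[f] for f in feats))
        for d in daughters:
            score *= inside_disj(d, memo)
        memo[c] = score
    return memo[c]

def inside_disj(d, memo):
    """Sum of the inside scores of the alternatives packed under d."""
    return sum(inside_conj(c, memo) for c in DISJ[d])

def unpack(d):
    """Yield each unpacked tree under d as a list of conjunctive node ids."""
    for c in DISJ[d]:
        daughters = CONJ[c][1]
        for combo in product(*(list(unpack(dd)) for dd in daughters)):
            yield [c] + [node for sub in combo for node in sub]

def tree_score(tree):
    """Unnormalized maximum entropy score of one unpacked tree."""
    return math.exp(sum(WEIGHTS[f] for c in tree for f in CONJ[c][0]))

z_packed = inside_disj("d1", {})
trees = list(unpack("d1"))
z_unpacked = sum(tree_score(t) for t in trees)
probs = [tree_score(t) / z_packed for t in trees]
print(len(trees), z_packed, z_unpacked)
```

The packed computation touches each node once, while the unpacked sum enumerates every tree; in this toy forest both give the same normalizer, and dividing each tree score by it yields a proper distribution over the unpacked trees.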

Application to parsing

• Applying a feature forest model to disambiguation of argument structures

• How to represent exponential ambiguities of argument structures with a feature forest?
– Argument structures are not trees, but DAGs (including reentrant structures)

[Figure: two candidate argument structures headed by "want". In the first, ARG1 is "I" (index 1) and ARG2 is "argue1", whose ARG1 is the reentrant index 1; "fact" takes an ARG1. In the second, ARG2 is "argue2", whose ARG1 is the reentrant index 1 and whose ARG2 is "fact".]

Packing argument structures

• An example including reentrant structures

She neglected the fact that I wanted to argue.

• Inactive parts: Argument structures whose arguments are all instantiated

• Inactive parts are packed into conjunctive nodes

[Figure: step-by-step packing for the example sentence. Node labels recovered from the slide: "I"; "argue1" (A1 = I); "want" (A1 = I, A2 = argue1); "want" (A1 = I, A2 = argue2); "argue2" (A1 = I, A2 = fact); "fact" (A1 = want). In the intermediate structure for "argue2", ARG2 is still uninstantiated (shown as "?") until "fact" fills it.]

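Feature forest representation of argument structures

She neglected the fact that I wanted to argue.

[Figure: feature forest for the example sentence. Node labels recovered from the slide: "she"; "I"; "argue1" (A1 = I); "want" (A1 = I, A2 = argue1); "want" (A1 = I, A2 = argue2); "argue2" (A1 = I, A2 = fact); "fact" (A1 = want); "neglect" (A1 = she, A2 = fact)]

Conjunctive nodes correspond to argument structures whose arguments are all instantiated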

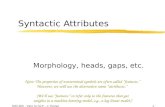

Experiments

• Grammar: a treebank grammar of HPSG [Miyao and Tsujii, 2003]

– Extracted from the Penn Treebank [Marcus et al., 1994] Section 02-21

• Training: Section 02-21 of the Penn Treebank

• Test: sentences from Section 22 covered by the grammar

• Measure: Accuracy of dependencies in argument structures

Experiments

• Features: the combinations of
– Surface strings/POS
– Labels of dependencies (ARG1, ARG2, …)
– Labels of lexical entries (head noun, transitive, …)
– Distance

• Estimation algorithm: Limited-memory BFGS algorithm [Nocedal, 1980] with MAP estimation [Chen & Rosenfeld, 1999]
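As a sketch of what MAP estimation typically means for a maximum entropy model, the objective below assumes a zero-mean Gaussian prior on the weights. The symbols (λ_j for weights, f_j for features, σ² for the prior variance, x_i and y_i for training sentences and their correct structures) are notation introduced here for illustration and are not taken from the slides.

```latex
\hat{\lambda}
  = \arg\max_{\lambda}
    \left[ \sum_{i} \log p_{\lambda}(y_i \mid x_i)
           - \sum_{j} \frac{\lambda_j^{2}}{2\sigma^{2}} \right],
\qquad
p_{\lambda}(y \mid x)
  = \frac{1}{Z_{\lambda}(x)} \exp\Big( \sum_{j} \lambda_j f_j(x, y) \Big)
```

Under this assumption, a quasi-Newton method such as limited-memory BFGS needs only the value and gradient of the bracketed objective, and the gradient depends on expected feature counts under the model.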

Preliminary results

• Estimation time: 143 min.

• Accuracy (precision/recall):

                  exact          partial
Baseline          48.1 / 47.4    57.1 / 56.2
Unigram           77.3 / 77.4    81.1 / 81.3
Feature forest    85.5 / 85.3    88.4 / 88.2

Conclusion

• Feature forest models allow the probabilistic modeling of complete structures without exponential explosion

• The application to syntactic parsing resulted in high accuracy

Ongoing work

• Refinement of the grammar and tuning of estimation parameters

• Development of efficient algorithms for best-first/beam search