Chiang Mai J. Sci. 2011; 38 (Special Issue): 31-57
www.science.cmu.ac.th/journal-science/josci.html
Contributed Paper

DELE: a Deaf-centred E-Learning Environment

Paolo Bottoni*, Daniele Capuano, Maria De Marsico, Anna Labella, and Stefano Levialdi
Pictorial Computing Laboratory, Department of Computer Science, “Sapienza” University of Rome, Via Salaria 113, 00198 Rome, Italy.
*Author for correspondence; e-mail: [email protected]

Received: 15 July 2010 Accepted: 31 January 2011

ABSTRACT

We present a framework to address educational issues, focusing especially on deaf people, together with a preliminary model based on recent methodological findings. We have started to develop multimedia learning environments based on Storytelling and Conceptual Metaphors, and we adopt Cognitive Embodiment as a framework for addressing sensorially-critical subjects. We are using these methods to develop a Deaf-centred E-Learning Environment (DELE). To this end, a Story Controller is designed to support story handling. Its behaviour is formally specified through a model based on statecharts.

Keywords: storytelling, conceptual metaphor, cognitive embodiment, blending, sensorially-critical, e-learning.

1. INTRODUCTION

There is wide consensus that teaching effectiveness and student motivation are seriously challenged in the Internet era. On the one hand, novel technologies make it possible to devise new teaching strategies, which may help to overcome the traditional limitations and difficulties of classroom teaching. For example, learning material and its presentation can be effectively adapted to the personal preferences and features of individual learners. Motivation and individual learning style can be supported by structured approaches such as multimedia storytelling. On the other hand, there is a high risk of using technology improperly, causing more disorientation than advantage, or of using it without fully exploiting its potential. There are many examples of old-fashioned materials and processes that are simply transposed into a new electronic format. One of the issues to take into account is the gap between the traditional ways of presenting material in the classroom and the exposure to fast-paced presentations of information through the media. Such problems become especially relevant when addressing learners with critical sensorial abilities, such as deaf people. It is worth noting how little attention is devoted to deafness in the web era, in contrast with the many guidelines regarding, for example, blind people. Yet deaf people experience problems both in following oral presentations in the classroom and in accessing media presentations based on verbal rather than visual or multimodal communication. According to many field studies, this might be due to a different way of conceptualizing reality and of mapping it onto cognitive structures. Moreover, such structures find no correspondence in spoken language, and even less in its representation (i.e. the corresponding written language). They are mainly multidimensional and tightly bound to concrete experience. Scientific disciplines, with their frequent recourse to completely abstract concepts, are one of the main challenges for both “normal” and impaired students. New terms, such as “innumeracy” (mathematical illiteracy), “math anxiety”, or “math phobia”, have been coined to describe students’ attitudes towards mathematics [1,2]. In fact, one-third of all America’s incoming college freshmen enrol in a remedial reading, writing, or maths class [3]. In Italy, most teenagers have read no more than three books in their lives [4].

In the search for alternative forms of presentation that are better able to engage students in interactive learning activities, we explore the potential of techniques based on metaphors and storytelling. These are expected to offer new and more participative approaches to the use of learning material. This should hold particularly for deaf students, for whom the mapping of a learning topic onto a structured story, with its characters and “screenplay”, might better support a peculiar cognitive style. We set an approach to metaphor-based teaching within a multimedia learning environment, which is organised around the notion of stories that can be told by students and teachers alike. To this end, we adopt the point of view of cognitive embodiment to situate learning within the bodily experience of deaf students. We further propose an abstract model of interaction with learning material as the exploration of stories, alternating individual and collaborative activities. The exploration, as well as the story flow, is not rigidly fixed and can be personalized by the student, since the “one size fits all” model is particularly inappropriate in the context at hand. This also means taking into account the past development of the individual story exploration. Among other advantages, this makes it possible to contextualize both revisited sections and newly entered ones according to the cognitive background acquired by the individual student. This appears to be one of the greatest advantages of the new technologies, as this kind of strong personalization would otherwise be very difficult, if not impossible, to achieve. This feature is always of paramount importance, but becomes absolutely necessary when learners with special needs are addressed.

Paper organization. Section 2 introduces relevant pedagogical ideas, while Section 3 defines the notion of “sensorial criticality” and its influence on the learning process of deaf students. The strategies and conceptual tools used for our teaching model are presented in Section 4. A Deaf-centered E-Learning Environment (DELE) is described in Section 5, while its Story Controller is detailed in Section 6. Section 7 closes the paper.

2. EDUCATIONAL MODELS

Historically, the Western teaching tradition seems to have followed a path moving from static-abstract to dynamic-concrete. Socratic maieutics is presented in Plato’s Symposium [5] and Theaetetus [6] dialogues. Knowledge is supposed to be already entirely present within the student, as a forgotten and latent wisdom that all human beings possess. The teacher’s role is to help students reach truth through dialogue, by helping them to remember the true knowledge. Hence the knowledge-building process becomes a sort of rediscovery of something that is already known. Aristotle, on the other hand, offers a different view, in which the knowledge-building process is argued to be inductive, moving from the particular to the universal. Contrary to Plato’s view, human beings are not supposed to have any latent knowledge that simply needs to be remembered. Aristotle’s approach influenced all the Western philosophical and scientific traditions.

Much more recently, after a long period in which education was understood as mere mechanical transmission or positive conditioning, an increasingly student-centred, experience-based teaching strategy has been proposed. In his novel “Emile: or, On Education” [7], Jean-Jacques Rousseau describes a “natural” teaching approach, in which a tutor guides the student through several learning experiences, so that children can tell right from wrong by experiencing the consequences of their acts. Jean Piaget’s constructivist learning theory provides a general theoretical framework for the psychological aspects of the learning process [8]. In his view, the learner’s direct experience within the environment is the first tool used to build a cognitive model of the world; the theory thus strongly emphasizes the learner’s active involvement in the learning process. The student carries out the learning process by interacting and collaborating, rather than by passively listening to a lesson, and the teacher is a facilitator who helps students to reach their own understanding of concepts.

The psychologist Lev Vygotsky takes a similar view of the teacher’s role [9]. He coined the term “Zone of proximal development” to denote the range of concepts that are too difficult for students to tackle alone, but that can be understood with the help of a teacher. Thus the teacher has to provide the right learning setting for the student to reach the learning goals autonomously. Moreover, Vygotsky stresses the relevance of the social and cultural context in the whole learning activity, as well as in the study of the psychological processes of learning. For him, unlike Piaget, it is impossible to achieve a universal theory of the stages of learning development, since no individual can be separated from the context in which he or she lives.

Finally, Bruner’s cognitive learning theory is particularly related to our approach. In [10], he claims that our mental representation of reality is structured by means of cultural products such as language and other symbolic systems. One of the most important of these is narrative. For Bruner, “we organize our experience and our memory of human happenings mainly in the form of narrative – stories, excuses, myths, reasons for doing and not doing, and so on” [10].

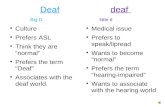

3. SENSORIAL CRITICALITY, DEAFNESS AND LEARNING

As stated by Vygotsky, learning contexts are fundamental in the design of the teaching process. Sensorial criticalities are an undermining factor within them. By “sensorial criticality” we refer here to the lack of some sensorial reference experienced by students within the learning process. In specific cases, the presentation of totally abstract concepts, as well as a physical disability or a more general hindering factor, can therefore entail severe learning problems. For example, mathematics can be seen as a sensorially critical discipline, since it seemingly lacks concrete, sense-related concepts. This paper is mainly concerned with the physical kind of criticality, even though examples of the other kind are also provided. In particular, we focus on deafness as a learning-critical disability.

Deafness is a largely hidden handicap, since most people simply know nothing about the communication-related problems that deaf people experience. In fact, due to their hearing disability, all deaf people face dramatic difficulties in acquiring both written and vocal language skills, so that many social communication problems arise. Deaf people are essentially visual people, primarily using the visual channel both for grasping information and for communicating with others. Even though reading and writing can be regarded as visually supported activities as well, they are in fact sequential processes. This characteristic is deeply connected with the serialisation of communication that is peculiar to the traditional aural-based experience of language. Deaf interpretation and transmission of (visual) information, on the other hand, seems to be basically multidimensional, with a prevalence of synchronous constructions. This can be observed especially in Sign Languages (SLs), the visual-gestural languages used by most deaf people. When exploited, the national sign language is a very important resource in classrooms, since it can help to overcome some comprehension difficulties. Schools sometimes provide specialized professionals who give communication assistance by translating some difficult parts of the lesson into sign language. However, due to socio-cultural constraints and prejudices, several deaf persons do not use their national sign language and communicate via spoken and written language. In this case, lip-reading and speech therapy techniques are used as a support. Nevertheless, these persons still experience difficulties with their literacy skills. In particular, it is worth noting that both signing and non-signing deaf people make similar mistakes with spoken and written language [11]. A common, deaf-peculiar approach to spoken/written language might therefore be supposed. Moreover, due to the sequential/simultaneous opposition in communication structures underlined above, it is often difficult to provide an accurate sign language translation of text and spoken materials, and vice versa. As a matter of fact, it is widely accepted in most lines of research that SLs make extensive use of complex signs, defined as Highly Iconic Structures [11], which go well beyond the usual single words in a vocabulary. These provide information in a multilinear, simultaneous fashion that has no parallel in spoken/written language. Due to these issues, the learning process is quite problematic for deaf students. In order to design a teaching method addressing the whole deaf community, both signing and non-signing, a more comprehensive strategy has to be devised. We argue for a visual, bodily-based interactive teaching approach, exploiting the peculiar way in which deaf people communicate and understand concepts. Storytelling and computer-aided metaphors are presented in this paper as the main tools for implementing such an approach.

4. CONCEPTUAL TOOLS AND STRATEGIES

In the following subsections we describe the main concepts influencing the creation of the proposed teaching model and the derived support architecture.

4.1 Storytelling, Learning and Multimedia

For Bruner, narrative operates as a tool of the mind in interpreting reality [10]. Storytelling is a pervasive way of modelling experience. We live within stories and we see ourselves as the main characters of our own lives. Stories help us to give a personal interpretation of events. Moreover, storytelling is well suited to implementing a constructivist learning methodology [12]: students trying to construct stories must carefully design their goals, make sense of their experiences, and reflect deeply on their understanding. Afterwards, storytelling allows them to share with others a reflection on the results of this meta-cognitive process.

Along the same lines, the storytelling-based model by McDrury and Alterio organizes the educational activity into five steps [13]. Stories have, first, to be devised by students and, second, to be told. Hence, students need to organize their own thoughts and establish their narrative goals. The third and fourth steps are story “expansion” and “processing”. Additional insight is reached through dialogue, i.e. by seeing the story from different points of view or comparing it with similar ones. Finally, the teacher evaluates the stories, showing students some alternative practices that could have been used.

Since the mid-1990s, multimedia technology has been increasingly exploited as a storytelling tool for educational, artistic and general communicative purposes. People started developing their own brief multimedia movies with software tools, telling stories about many different subjects. Recent advances in multimedia technologies, and their increasing diffusion, are of special interest within the above teaching/learning perspective. As an example, the growing appeal of storytelling for the educational and psychological research community is shown by the Digital Storytelling project [14-16]. Due to the great emotional impact and the expressive possibilities it offers, multimedia-aided storytelling is going to be used more and more at school, where it may improve the motivation of both students and teachers and provide a more attractive learning experience [16]. Classroom lessons could be enriched by means of students’ or teachers’ stories, thus increasing the students’ direct involvement. More generally, the storytelling approach can be particularly powerful in some learning domains. For instance, it offers several interesting applications for teaching Human-Computer Interaction and Software Engineering [17], where stories are both the means and the object of teaching. First, concerning the system design process, storytelling enables a bottom-up approach in presenting the design of a new system. Within the engineering storyboard, target user stories are collected and analyzed at the very beginning of the design (requirements finding). Such stories have to be rich in detail, e.g. including the names of the users and their general habits within the contexts of the use cases. Thus, designers’ efforts are aimed at creating a story by focusing on the real needs of the users. Going further along this line, in presenting an Object Oriented system, every object can be seen as an agent living its own business story. As Imaz and Benyon said, “during their travels, the objects will encounter other objects, which they will interact with. Each interaction will be compressed as an operation and eventually implemented as a method into the appropriate part of the class construct” [17]. Figure 1 shows a representation of this idea, where objects are agentified and interact in a virtual environment.

Figure 1. A representation of objects as agents living their own, possibly interlaced stories [17].

In this scenario, instantiating a class means letting the class-agent’s story start. The advantage of such an approach is twofold: from the design perspective, a more natural way of thinking can be used, helping the designer to maintain a more organic and holistic view of the system; from the analysis perspective, stories can be searched for within all the system documentation. For example, a class diagram could be seen as a particular view of the system’s story, showing it as a sort of “society” of interacting people. This point of view can make the system analysis easier and closer to the human level.

Finally, from the human-computer interaction point of view, an interactive computer system has to “tell a good story that engages the imagination” [17], and this holds especially in educational electronic environments. The learners’ motivation and involvement could be improved by implementing such a “good story”. This corresponds to providing an intuitive and comfortable environment in which the users’ actions are performed and in which a suitable mapping of the learning domain concepts can be implemented. Designing such appealing interaction entails a deeper understanding of one of the most important tools we aim to use: metaphor, which is one of the main concerns of the next sections.

4.2 Cognitive Embodiment and Metaphor

We embed storytelling into the more general framework of Cognitive Embodiment (CE), for which all knowledge stems from the body [18-21] and fundamental bodily-based Image Schemata, e.g. link, force, containment, balance, path, are built via body-mediated experiences. Other cognitive functions project this bodily, image-schematic knowledge onto conceptual and abstract domains. A further powerful tool is the Conceptual Metaphor.

The Conceptual Metaphor (CM) establishes a mapping between two domains, usually a concrete, body-related domain and a more abstract one. Examples of CMs are found almost everywhere. Every human language uses CMs to communicate meanings at different levels of complexity. For example, with the widely used metaphor “Love Is a Journey” [18], we use sentences like: we are driving too fast, we are at a crossroads, our relationship has hit a dead end, etc. These rely on the mapping from lovers to travellers, from the love relationship to a vehicle, from the lovers’ goals to travel destinations, and from relationship difficulties to travel impediments. In general, a CM is a way of understanding situations and concepts. In [21] four metaphors are proposed for the arithmetical domain, viz. Arithmetic as an Object Collection, as an Object Construction, as a Measuring Stick, and as Motion Along a Path. To better understand the entailments of changing the reference grounding metaphor, let us compare two of these arithmetic metaphors, Arithmetic as Object Collection and Arithmetic as Motion Along a Path, with the following mappings [21]:

Arithmetic as Object Collection
• Collections of objects of the same size → Numbers;
• The size of the collection → The size of the number;
• Bigger → Greater;
• Smaller → Less;
• The smallest collection → The unit;
• Putting collections together → Addition;
• Taking a smaller collection from a larger collection → Subtraction.

Arithmetic as Moving Along a Path
• Acts of moving along a path → Arithmetic operations;
• A point-location on the path → The result of an arithmetic operation;
• The origin, the beginning of the path → Zero;
• Point-locations on a path → Numbers;
• The unit location, a point-location distinct from the origin → One;
• Further from the origin than → Greater than;
• Closer to the origin than → Less than;
• Moving from a point-location A away from the origin, a distance that is the same as the distance from the origin to a point-location B → Addition of B to A;
• Moving toward the origin from A, a distance that is the same as the distance from the origin to B → Subtraction of B from A.

Other operations, like multiplication and division, can be obtained from these base mappings. The first thing worth noting is the presence of a natural element that maps to Zero in the Arithmetic as Motion Along a Path metaphor (the origin), while no such natural mapping can be found in the first metaphor. In fact, thinking of Zero as a collection is not so obvious, because it implies accepting that a collection with no elements is still a collection. Actually, such an operation is a metaphor in itself. A further fundamental difference is that the “moving along a path” metaphor allows one to imagine moving away from the origin in both directions of the path, thus obtaining negative numbers. The set of negative numbers cannot be obtained by using the Arithmetic as Object Collection metaphor. We can conclude that our way of thinking is constrained by the metaphor we use.
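The contrast between the two mappings can be made concrete with a minimal Python sketch. It is only an illustration under stated assumptions: the function names and the encoding of a collection as a tuple of identical tokens are hypothetical and are not taken from [21].

```python
# Illustrative sketch only: names and encodings are assumptions, not from [21].

# --- Arithmetic as Object Collection ---------------------------------------
def collection(size):
    """A number is a collection of same-size objects (here, identical tokens)."""
    if size < 0:
        raise ValueError("a collection cannot hold fewer than zero objects")
    # size == 0 yields the empty tuple: accepting it as a collection
    # is already an extra metaphorical step.
    return ("o",) * size

def put_together(a, b):
    """Putting collections together -> Addition."""
    return a + b

def take_away(larger, smaller):
    """Taking a smaller collection from a larger one -> Subtraction."""
    if len(smaller) > len(larger):
        raise ValueError("cannot take a larger collection from a smaller one")
    return larger[: len(larger) - len(smaller)]

# --- Arithmetic as Motion Along a Path -------------------------------------
ORIGIN = 0  # the beginning of the path -> Zero

def move_away(a, b):
    """From point-location a, move away from the origin by distance b -> a + b."""
    return a + b

def move_toward(a, b):
    """From point-location a, move toward the origin by distance b -> a - b.
    Nothing stops the walker at the origin, so the result may lie beyond it."""
    return a - b
```

In this sketch `take_away(collection(2), collection(3))` raises an error, because the collection metaphor offers no result for it, whereas `move_toward(2, 3)` simply ends one unit on the other side of the origin, which is how negative numbers arise in the path metaphor.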

Another example of CM is found in the different ways of writing music, where reference to the body movement is always present.

Recent studies with neuroimaging techniques indicate that CMs are not arbitrary [22], as metaphors activate coherent sensori-motor neural circuits. For example, hearing “he handed me the theory” activates the brain regions connected to hand movement.

CM goes beyond concept transmission, and has been proposed in HCI as a source of guidance for inclusive design [23]. Since metaphor is a subconscious mental representation used to understand concepts, it has been supposed to be an almost universal presentation tool, despite people’s different levels of cognitive ability and experience with technology. An experiment was conducted using 12 “primary metaphors”, i.e. metaphors that map sensori-motor, image-schematic base patterns onto abstract domains (e.g. the MORE IS UP – LESS IS DOWN metaphor). These metaphors were chosen among the 250 that have been documented in the literature [24, 25]. One image-schematic spatial dimension was presented to the participants together with a target phrase for an abstract concept. Participants were asked to perform free arm gestures along the given spatial dimension to represent the target phrase. For example, the UP-DOWN image-schematic spatial dimension was proposed together with the “powerful, powerless” target phrase. Participants could perform any arm gesture along the UP-DOWN dimension to represent the “powerful, powerless” phrase (e.g. moving the arm up to represent “powerful” and down to represent “powerless”). In this case, the connection between the performed gestures and the primary metaphor POWER IS UP – POWERLESS IS DOWN [25] can be tested. The results show that in 92% of all cases users chose gestures that confirmed the documented metaphors. Thus CMs can be employed in interactive computer systems to achieve intuitive and familiar interfaces, also for educational purposes. This is explained extensively in the next section.

4.3 Conceptual Blend and HCI

The cognitive process defined by the Conceptual Blend allows one to build a new conceptual domain from two existing ones. As shown in Figure 2, a Conceptual Metaphor can provide the two input domains, while the third domain represents a synthesis and an implementation of the metaphor itself. This new domain is composed by using the inferential structure of one or both of the input domains, and it also reveals a new emergent structure. Computer Science and, in particular, Human-Computer Interaction are fundamentally built via Conceptual Blends [17]. In fact, graphic interfaces as well as old-style consoles, windows, widgets and all interaction paradigms are implementations of hidden metaphors: in other words, Conceptual Blends.

The Computer Desktop is perhaps the most remarkable and best-known example. It takes its input domains from the Computer as an Office metaphor (see Figure 3).

Figure 2. A Conceptual Blend schematic.

Figure 3. The Desktop Blend.

The Office domain provides the well-known inferential environment, while the Computer-commands domain should be hidden from the end users. In fact, only the Office structure (i.e. the general appearance of the environment and some basic interaction paradigms) is projected into the blend, to exploit the user’s familiarity with such an environment. Computer commands are only provided through the interface and are not explicitly shown. As in a real office, users find common objects that they can interact with, such as documents, folders and a trashcan, and computer commands are executed through the interactions with these elements. Nevertheless, several differences with respect to a real office environment can be found too. Due mainly to the two-dimensional perspective of this blend, a new structure emerges, concerning topological, spatial and interaction issues.

4.4 Story-centered Educational Tools

So far, we have addressed issues concerning learning and computer systems: storytelling is one of the most powerful tools to be used in a learning environment, and it can be seen as a very pervasive metaphor in our experience. Metaphors can in turn implement stories, providing the story’s structural details and “embodiedness”. As a further conceptual step, the Conceptual Blend in HCI is the concrete implementation of a story via a grounding metaphor. We can say that every human language provides its own stories and that, while stories are usually built up through Conceptual Metaphors, the Metaphor itself cannot be implemented without a Conceptual Blend. Such a blending process creates a sort of “conceptual artefact” that substantiates the metaphor, and hence the story it describes. Thus, for an interactive system to “tell a good story” it has to use a good metaphor. The Apple Human Interface Guidelines [26] also argue that “Metaphors are the building blocks in the user’s mental model of a task”, suggesting the use of metaphors as a starting point in human interface design.

How can we exploit the above concepts to respond to the crisis and to current issues in learning? Present-day teaching methods are primarily implemented by means of blackboard-aided classroom lessons and standard learning materials (e.g. books, slides, etc.), while audits are carried out basically through standardized oral and written tests. Such an approach can be experienced as quite difficult, especially for abstraction-based disciplines like mathematics, where students may find it hard to identify concrete reference points. As stated above, stories represent one of our most important conceptualization tools, since they provide detailed, human-level descriptions. Human-level, richly detailed descriptions should always be provided as a fundamental part of lessons. In fact, even the use of simple image-schematic representations alone, e.g. instances of containers for sets in a set-based mathematical explanation, could lead to hard-to-understand concepts. This is due to the quite abstract context in which such image-schematic representations are presented. Problems of this kind can be mitigated by making story-based descriptions a structural aspect of lessons, thus facilitating the students’ conceptualization process.

Multimodal interactive computer systems can play a very important role in this approach. In fact, graphic visualizations can enhance the vividness and attractiveness of the story, while task-oriented interactive environments can improve students’ involvement, giving an illusion of non-mediation in which the user seems to “live” the learning process. Virtual graphical environments, augmented reality, and multimodal and multisensory interaction strategies are prime examples of the technologies to be used. An e-learning environment exploiting virtual graphic spaces (Virtual Worlds) in Second Life is presented in [27]. Virtual Worlds focus on collaboration in social networks, without reference to Storytelling or CM. While many static graphic representations of knowledge (e.g. storyboards, charts, diagrams, etc.) can be provided even without computer systems, their interactive and animated versions can be implemented only with suitable software systems, and they could yield great motivational improvements. Virtual environments can also visually include metaphors and stories: storytelling has a natural visual representation as motion along a path. Making such a path explicitly visible can enhance the students’ feeling of continuity in the learning process. Furthermore, seeing themselves physically moving along such a path within the virtual environment can increase the students’ motivation and involvement.

4.5 A Story-Centered Educational Model

Reflection on the issues and concepts presented above has driven the specification of the proposed teaching model, devised especially for sensorially-critical subjects. It implements a constructivist-like, metaphor-based and story-centred approach supported by multimedia and multimodal computer systems. Storytelling, as the general approach, targets the students’ involvement and the maintenance of the learning thread, while the design of task-specific metaphors provides motivation and user friendliness.

Figure 4 shows a schematic view of the model, which is entirely based on the functional coupling of the metaphor tool with the storytelling strategy. A main metaphor implements the main story of the learning module. Each Learning Unit (labelled LU in Figure 4) is in turn represented as a metaphor-story coupling. In fact, the containment and path image schemata provide the whole model structure, i.e. metaphor-story couplings are arranged through recursive containment. Each story maintains the path to be followed through its units, and presents it in an easy and recognizable fashion, while supporting many possible alternative paths in order to respond to each student’s needs. Different metaphors allow both context-specific graphical presentations and task-oriented interaction strategies to be offered, while embodiment principles are always taken into account. Learning Units should contain both Story-based Learning Activities (SLA) and audits.

Collaboration is another important issue to consider. Stories should provide a social environment in which students can collaborate by communicating with each other (e.g. asking for help with something that has not been understood) as well as by working together to accomplish some task. The students’ story design can therefore also be used as a collaboration tool to enhance discussion and group problem solving.

Finally, both the students’ storytelling strategies and their audit results can be evaluated in order to assess and improve the students’ story interpretation and design practices, providing new perspectives and developments.

5. THE DELE SYSTEM

The model presented in the preceding section is being exploited in the Italian project VISEL [11], aimed at designing a Deaf-centred E-Learning Environment (DELE). Due to the deaf literacy problems discussed above, designing a DELE is a challenging task. Moreover, accessibility guidelines for deaf people are only sketched in [11], which gives some fairly general indications about the importance of using sign language videos, video conferencing and tools equipped with signing avatars. Most existing e-learning environments designed for deaf people provide accessibility only by means of SL videos shown next to the textual materials. However, we have underlined above that such a feature does not help the whole deaf community, which also includes non-signing people. In order to address these issues, we are designing a story-based DELE that provides an immersive graphic environment based on metaphoric constructions, where the use of text-based materials is limited whenever possible.

Such a model can be considered as an implementation of an e-learning approach in its broadest sense. It could be seen as a computer-aided teaching method to be exploited within the classroom as well as in distance learning, or in a blending of both. The teacher can design the stories and metaphors to be used, encouraging communication among students through face-to-face dialogue as well as by means of electronic media (textual chat, video chat, messages, etc.) and letting the students themselves develop their own multimedia-based stories.

Figure 4. A schematic view of our model.


5.1 DELE System Model

Figure 5 shows the main top-story told by the DELE as an instance of the general model above. The underlying system itself is presented through a story. Users are guided to choose the system settings before the actual learning process starts: for example, they can set their personal details (e.g. name, avatar, etc.) through the “User settings” sub-story, and they can choose some general graphical settings (e.g. colours, fonts, etc.) by entering the “Appearance settings” sub-story. After setting preferences, the users are projected into the true learning story.

Since the project’s target population is High School and University students and young deaf professionals, the story develops within a university campus metaphor (Figure 6). Here the “campus” refers both to the metaphor employed for the system and to the virtual graphic environment in which the stories of each user develop. On entering the campus, the user is given a virtual avatar with which to explore the graphical environment. Thus, deaf people’s peculiar visual-bodily way of grasping information can be exploited.

Information browsing is not arranged in a textual/tabular fashion; rather, the user is asked to move within the various sub-environments (i.e. sub-stories) to accomplish several learning tasks.

Figure 5. A schematic view of DELE.

The main goal of the campus environment is to enrich the entire society by means of knowledge. No challenges or competitions among users are allowed; they are rather asked to share the knowledge they acquire, since greater virtual-society wellness can only be achieved in this way. Each learning unit engages the user in several vocal/written language comprehension and production activities, since general competence improvement in a specific discipline is the final goal of each learning unit. Users can meet other users and engage together in communication and collaboration activities. Communication will be fostered through several different codes: written language, sign language and written sign language. The latter will be encoded in SignWriting, an experimental coding resource which is increasingly used by the deaf community due to its ease of use. In fact, while many formal representations of sign languages have been provided by worldwide research, such languages do not have an official written form yet. SignWriting seems to be a promising encoding, since all the information conveyed in sign languages (i.e. hand motion and configuration, facial expression, body postures, etc.) can be naturally represented through it.

Figure 6. A schematic view of the campus story.


The Home Environment provides the base learning place. It implements a sub-story where several learning materials can be found. By selecting one of them, the user is projected into the corresponding sub-story implementing the SLA for the related topic. SLAs will be designed in order to “facilitate” textual material, providing iconic as well as animated resources to enrich some difficult parts of the text. Text authenticity/semantics is not changed, but additional materials are provided to facilitate/support the mental processes that vocal/written language entails by complementing it with bodily-rooted elements.

Finally, the University Environment is another example of sub-story that will be implemented. Here, students can share their knowledge with the entire campus community, receiving credits for this. Following the Digital Storytelling lesson, students’ own multimedia stories could be developed and presented, thus exploiting the pedagogical benefits of storytelling.

The presented environments are just a few examples of what can be provided by DELE. As stated in [28], it is important to involve impaired people directly in the design process in order to achieve learner-centred e-learning environments. Along this line, in our case several deaf researchers have been actively involved both in the design phase and in the successive prototype evaluations. We think that such an approach will ensure that the actual needs of deaf people are taken into account, and that appealing and intuitive metaphors are designed.

DELE design entails many interaction issues. Since only the visual channel can be used by deaf people both to transmit and receive information, a forced mono-modality seems to be needed. For example, in a typical classroom-based lesson students can listen to the lesson and write down notes, or the teacher can speak while they are looking at the blackboard. When deaf students are involved, such interaction patterns are impossible, since all information must be conveyed through the visual channel alone. Moreover, if the teacher looks at the blackboard, no spoken word can be grasped by means of lip-reading. The DELE interaction model is shown in Figure 7.

Figure 7. The DELE interaction model.


Our multi-modal interaction paradigm allows not only keyboard and mouse commands, but also web-cam captured gestures. This splits the virtual space of interaction into two parts: a Virtual Commands Space, where gestures are interpreted and mapped to commands, and a Virtual Action Space to execute commands. Such a division is needed as each Virtual Space represents a different GUI execution mode: for example, when the user’s hands are recognized by the web-cam, the GUI may enter a graphical menu specialized for gesture commands, thus letting the user understand this interaction mode switch. The Virtual Action Space is an environment where visual and bodily-related actions and events are presented as really happening. Users move, grasp objects, enter buildings, meet people, etc., receiving real-time information as in real life via the visual channel.

5.2 DELE Architectural Details

Figure 8 represents the main features of DELE, with different layers shown in different colors. Grey modules within each block represent block-specific elements, while arrows describe dependencies. An arrow goes from one module to another if the source module provides the functionalities needed to implement the target. Arrows directed from one module to an entire block specify that each module within that block depends on the source module. User interaction in DELE does not require special hardware. An additional software layer implements a gesture recognition module exploiting the webcam video stream acquisition. Simple gestures can be recognized to implement alternative ways to launch some general system commands, such as browsing the environment or asking for on-line help. This feature seems to be important for deaf users, due to their natural gestural approach to communication.

Figure 8. The DELE Architecture.

The environment implements several Verbal Language (VL) as well as Italian Sign Language (LIS) modules, addressing the communicative needs of various users. LIS modules are implemented both in video streaming and in written form. For the latter, an ad hoc SignWriting (SW) editor is being developed, providing a more usable GUI than [29].

Video functions are fundamental to DELE: signing deaf users can communicate via SL through video tools, while non-signing users can use them for digital storytelling activities. User functions of DELE include: synchronous/asynchronous collaboration, textual and video chats for both one-to-one and conference communication, private messages, and a discussion forum. Learning materials use animation, VL, and LIS. Cognitive Embodiment (CE)-based text facilitation techniques enrich text materials with additional contents, viz. iconic representations of bodily and socio-cultural meanings embedded in a word. To explain a word like “into”, short videos (or animations) showing a person entering a house, a girl entering a wood or a child inserting the last piece into a puzzle can be shown on demand to let the user infer the concept of “into”. We envisage logical, associative or game-like learning activities (top-left module in Figure 8), and design real-time cooperative tasks (laboratories).

A distinctive feature of DELE is that the implementation of the presentation-related architectural modules is based on the metaphor-story coupling. For example, the forum is metaphorically represented as a discussion board in the campus environment, laboratory activities are presented through avatars collaborating on tasks in virtual classrooms, etc.

6. THE DELE STORY CONTROLLER

Figure 8 shows that all the learning activities depend upon a “Story Controller” module, which is detailed in Figure 9 and maintains information on story-related structural aspects of DELE. In particular, the DELE “narrative structure” can be thought of as a two-level story: the first level is represented by a sort of “global view”, while the second one is represented by the “Personal Story” module of Figure 9. At the first level the Campus Story provides the general environment in which users can freely move between different virtual places (i.e. home, library, café, etc.) using avatars. Usage statistics and other information affecting the entire virtual society are collected by the system within this narrative level. Moreover, a Social Culture is created. As each narration participates in culture creation [10], a general view of common beliefs and of the results of exchanging different opinions must be maintained. General (common) beliefs can derive from knowledge acquired in learning activities, as well as from discussions among students and with the tutor. The Social Culture information can also be used to improve the user’s motivation to actively participate in the virtual society: for example, users can challenge beliefs which are commonly accepted by the majority by taking part in discussions.

Figure 9. The Story Controller module.


At the second narrative level, the “Personal Story” module manages all information about the user-specific learning stories. Several other modules are connected to this: “Obtained Results” sums up the current user level and “Choices along the path” manages data on performed choices and actions. “Followed paths” stores pointers to the user’s learning activities. “Participation in the society” refers to intentional interaction with other users and is the main source of the virtual society’s culture. The Digital Storytelling project and McDrury’s and Alterio’s model [13] are implemented, taking into account users’ motivations, produced stories and conclusions of tutored public discussions. Users’ stories can deal with topics related to learning contents, but also with their personal interpretations and expansions. Conclusions are then processed in order to develop the society’s current culture, for example presented as news published on the campus main square.

Finally, the Diachronicity module specifies the chronological structure of each Personal Story. Specific SLAs provide a representation of university exams and other learning sub-environments which are organized as strongly structured stories generated by the Diachronicity module. In this context the path is generated in order to control the diachronic process of the student’s learning. In fact, every student’s Personal Story represents a learning process, shown as a path along several learning stages (Figure 10).

At every moment, a student is in exactly one location but, as in a real path, past choices and possible future ones are available. Every node along the path represents either a learning activity or a sub-story. Although every learning process can be represented as a linear path from a starting place to a final goal, arbitrary path topologies are allowed. For example, a “star” topology can represent a sub-story (Figure 11).

Figure 10. The Personal Story as a path.

Figure 11. A “star story”.

It is worth mentioning that Figures 10 and 11 provide a qualitative graphical view of learning paths as they will be visualized by users. In order to provide a formal model of the Story Controller, a more detailed structural description of learning paths follows.

6.1 Leaf-Nodes and Story-Paths

We define two different kinds of learning node:

• A learning activity node, called Leaf-Node (LN). A state machine MLN can be used to model this kind of node, which is composed of a learning activity and its assessment (Figure 12).

• A Story-Path (SP): a generic path with an arbitrary topological structure. The state machine MMSP can be used to describe all the possible learning paths and choices to be presented to users (Figure 13). MMSP provides a meta-model of SP, hence a state machine MSP is generated as an instance of MMSP in order to model a SP.

Figure 12. A LN model using state machines.

Figure 13. A meta-model of all possible SPs using state machines.

Either LNs or sub-stories (recursively nested SPs) can be found as possible nodes in each SP. Figure 13 shows a separation between state machine structure and, consequently, protocol nodes (i.e. nodes that define the story path) and LNs. On entering a SP, a choice between “permitted” nodes (e.g. the first node in a linear path) is given to the user until all nodes have been visited. This choice can take the user either to a LN (called LNcurr in Figure 13), which represents an instance of MLN, or to a sub-story, represented as a recursive call to SPcurr. If a LN is chosen, a learning activity and its assessment are presented to the user. When the user returns to a non-finished sub-story, the global story context is recreated thanks to the deep-history state machine pseudo-state.
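The recursive nesting of Story-Paths and Leaf-Nodes described above can be sketched as a small tree structure. This is only an illustrative sketch: the class names `LeafNode` and `StoryPath` and the helper functions are hypothetical and are not taken from the DELE implementation.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class LeafNode:
    """A learning activity together with its assessment (an LN)."""
    name: str


@dataclass
class StoryPath:
    """A Story-Path (SP): each node is a Leaf-Node or a nested sub-story."""
    name: str
    nodes: List[Union["LeafNode", "StoryPath"]] = field(default_factory=list)


def leaf_names(sp: StoryPath) -> List[str]:
    """Collect the names of all Leaf-Nodes, descending into sub-stories."""
    names: List[str] = []
    for node in sp.nodes:
        if isinstance(node, StoryPath):
            names.extend(leaf_names(node))  # recursive descent into a sub-story
        else:
            names.append(node.name)
    return names


def depth(sp: StoryPath) -> int:
    """Nesting depth of a story; a story holding only Leaf-Nodes has depth 1."""
    sub_depths = [depth(n) for n in sp.nodes if isinstance(n, StoryPath)]
    return 1 + max(sub_depths, default=0)
```

Since a `StoryPath` may contain further `StoryPath` objects, no bound on the nesting depth is imposed by the structure itself.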

The LN assessment affects the system behavior: when the user obtains a weak or insufficient evaluation, the possibility to return to the last visited node is offered, as well as a visualization of in-depth activities for that node. If an insufficient evaluation is obtained, the next nodes in the ordered path are shown to the user as not permitted. Finally, in-depth activities are not presented within the main path as possible choices when a positive assessment is obtained, even though they can be shown on demand.

In order to clarify this point, let us describe a high-level scenario. Suppose that the SP shown in Figure 14 is being visited by the user.

Figure 14. A story with only one allowed node.

Hence, at the beginning, only the way going to A1 is marked as “active”, while all the others are not allowed. A1 might be completed with a weak or insufficient assessment, as shown in Figure 15. If A1 has been passed with weak results (Figure 15-a), the way to A2 is activated, but the way to A1 is still active too, and all its in-depth activities are exposed. On the contrary, if A1 hasn’t been passed (Figure 15-b), in-depth activities for A1 are shown, but the way to A2 is left forbidden.

Figure 15. The in-depth activities management: (a) A1 has been passed with weak results; (b) A1 hasn’t been passed (insufficient result).

Figure 16. A scenario path when A1 has been passed with good results.


Figure 17. Some MMSP states enriched with behaviors defining the contextual/semantic neighbourhood.

Finally, Figure 16 shows the system behavior for a good result assessment. Here, the way to A1 changes its appearance to underline the good result obtained, no in-depth activities are added to the path and the way to A2 is activated.
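The three assessment outcomes of this scenario can be summarized as a small decision function. The sketch below is a hedged illustration: the names `Assessment`, `PathUpdate` and `after_assessment` are hypothetical, and treating a well-passed node as no longer offered for repetition is our reading of the scenario rather than a documented rule.

```python
from dataclasses import dataclass
from enum import Enum


class Assessment(Enum):
    INSUFFICIENT = "insufficient"
    WEAK = "weak"
    GOOD = "good"


@dataclass(frozen=True)
class PathUpdate:
    repeat_offered: bool   # the way back to the assessed node stays active
    in_depth_shown: bool   # in-depth activities are exposed on the main path
    next_unlocked: bool    # the way to the next node (e.g. A2) is activated


def after_assessment(result: Assessment) -> PathUpdate:
    """Map the assessment of a node (e.g. A1) to the resulting path state."""
    if result is Assessment.GOOD:
        # Good result: next node unlocked, no in-depth activities on the path.
        return PathUpdate(repeat_offered=False, in_depth_shown=False, next_unlocked=True)
    if result is Assessment.WEAK:
        # Weak result: next node unlocked, but repetition and in-depth offered.
        return PathUpdate(repeat_offered=True, in_depth_shown=True, next_unlocked=True)
    # Insufficient result: the next node stays forbidden.
    return PathUpdate(repeat_offered=True, in_depth_shown=True, next_unlocked=False)
```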

6.2 Contextual/Semantic Neighbourhood

Starting from each LN, other “neighbouring” LNs will be accessible by the user. Such a neighbourhood can be built according to a relation of semantic proximity of node activities. In this way, links to semantically near activities are presented to the users while they are visiting the current one. For example, suppose that the user is visiting a LN providing a learning activity about articles. A link to another node about substantives would be interesting as a possible in-depth activity concerning the current one. In fact, as shown by many learning environments such as the popular BBC Learning English [30], repetition and memory re-elaboration are main concerns in any learning process.

According to the identifiable semantic relations, each selected LN (LNcurr) might be enriched with some behaviors within the MMSP meta-model, by defining its Contextual/Semantic Neighbourhood (CSN), i.e. a set of LNs {LNsem_near} which are semantically near to LNcurr (see Figure 17).

Figure 17 provides a possible expansion of a part of the MMSP meta-model in Figure 13 that includes additional behaviors for the LNcurr and “Give choice with repetition and in-depth activities” states. Such behaviors allow the user to visit the CSN from LNcurr. Hence a “normal” path, defined together with the SP, is provided by the system as well as several CSNs, one for each LNcurr choice. Nodes selected as the present LNcurr are always considered as the root of their own neighbourhoods; in fact they are stored as a reference called LNroot in Figure 17; from every node of a CSN it is always possible to visit another CSN node, to move backwards by one hop, or to return to the CSN root.

Figure 18. Instantiation of concepts in present context.

To provide a clearer reading, in the following, a state called “Contextual/Semantic Neighbourhood” (or “CSN”) will be shown to reference the part extending the original meta-model described in Figure 17.

As stated above, LNcurr is recognized as the present CSN root, thus the CSN exploration always ends with a transition back to this node. The LNs belonging to the CSN, in general, do not match the LNs defined in MSP: in fact the choice order and topological structure of the main SP are not followed in CSNs, and arbitrary browsing leaps are introduced. Once inside a CSN, users can go deeper and deeper into it, thus no theoretical bounds on the CSN depth are given. Nevertheless a pointer to the CSN root is stored in order to keep browsing easy and coherent, and to allow an “emergency exit” if the user gets lost.
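The browsing rules above (visit another CSN node, move back by one hop, or return to the root) can be sketched with a simple trail of visited nodes. The class `CSNNavigator` and the `links` dictionary are hypothetical names used for illustration only.

```python
from typing import Dict, List


class CSNNavigator:
    """Tracks a user's exploration of a CSN rooted at the current LN."""

    def __init__(self, root: str, links: Dict[str, List[str]]):
        self.root = root            # plays the role of LNroot
        self.links = links          # node -> semantically near nodes
        self.trail: List[str] = [root]

    @property
    def current(self) -> str:
        return self.trail[-1]

    def visit(self, node: str) -> None:
        """Move to a node that is semantically near the current one."""
        if node not in self.links.get(self.current, []):
            raise ValueError(f"{node!r} is not a neighbour of {self.current!r}")
        self.trail.append(node)     # no bound on depth is enforced

    def back(self) -> None:
        """Move backwards by one hop; at the root this is a no-op."""
        if len(self.trail) > 1:
            self.trail.pop()

    def return_to_root(self) -> None:
        """The 'emergency exit': jump straight back to the CSN root."""
        self.trail = [self.root]
```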

6.3 CSN and Context Information

Figures 12 and 17 often refer to the “context” of a LN. In fact, each LN relies on the present context of the user’s learning path. Let us describe this concept in more detail by using a metaphor that we call “Present instantiation of memories”.

Imagine a book. We could describe written characters as a code used to store a set of concepts. When we read, we use that code to recreate such concepts in our minds and understand the book’s story, but when we are recreating concepts in our mind, they do not always contribute in the same way to the resulting cognitive/emotional structure. Since the present context affects the general “quality” of concepts, reading a book in two very different situations does not lead to the same emotions, even though the mere textual component (lexical and conceptual) of the book is the same.

Hence the act of “rebuilding” a concept in memory can be described as a conceptual blend, where the input domains are the concepts to be instantiated and the present context. This scenario is depicted in Figure 18.


Although Figure 18 shows a rather extreme example, it is useful for understanding our idea: the return of the concept to be instantiated is modified through the conceptual blend, taking into account the present context; in this way a qualitative, but non-structural, change is applied to the concept itself.

This metaphor can also be used for our CSN: LNcurr represents the current context of the user, applied to the related CSN nodes. In particular, the context of LNcurr must be preserved when the user chooses to visit a LNsem_near which is semantically near to LNcurr. For example, if LNcurr contains an activity about substantives and LNsem_near contains an activity about articles, the system could present LNsem_near replacing all substantives appearing in its content by default with those used in LNcurr; as another example, the general graphical appearance (background, font, ...) of LNcurr could be applied to LNsem_near, etc.

To make the LN structure clearer, both a set of structural elements (not context-sensitive) and a set of Context-Sensitive Elements (CSEs) must be defined for each LN, and the “Activity Context setup” state (shown in Figures 12 and 17) manages the context setup for LNcurr. For example, the list of substantives of a phrase, the background image of a page and the font types used are all possible CSEs. In particular, the default CSEs for each LN are loaded when the user follows the “normal” path, while the LNroot’s CSEs are loaded during CSN exploration.

How can we actually implement CSNs? A static structure is stored in order to keep the entire implementation model simple. A set of semantic attributes, stored in a shared database, is defined for each LN. Such attributes define a simple semantic description of the activity. For example, a SUBSTANTIVES attribute might be defined for the activity about substantives described above. Then, a set of substantives found within the activity can represent one of the activity’s CSEs, which can be associated with the attribute. Whenever the user visits a node (LNcurr), a request is sent to the server in order to find every node which declared any subset of the LNcurr’s CSEs: such nodes will make up the LNcurr’s neighbourhood. Moreover, attributes also provide the definition of the context. In fact, when an attribute is declared by a LN, an association between this attribute and a subset of the LN’s CSEs must be provided. In this way, if LNcurr represents the current context and LNsem_near belongs to LNcurr’s CSN, e.g. because both LNcurr and LNsem_near share an attribute called a1, LNcurr’s context can be applied to LNsem_near as follows: all of LNsem_near’s CSEs associated with a1 are replaced with the LNcurr’s CSEs associated with the same attribute.
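As a rough illustration of the neighbourhood lookup, the sketch below treats two nodes as neighbours when they declare at least one semantic attribute in common. This matching rule is an assumption on our part, an in-memory dictionary stands in for the shared database, and the function name `neighbourhood` is hypothetical.

```python
from typing import Dict, List, Set


def neighbourhood(current: str, declared: Dict[str, Set[str]]) -> List[str]:
    """Return the nodes sharing at least one declared attribute with `current`.

    `declared` maps each node name to the set of semantic attributes it
    declares (a stand-in for the shared database of attributes).
    """
    own_attributes = declared[current]
    return sorted(
        node
        for node, attributes in declared.items()
        if node != current and attributes & own_attributes
    )
```

For instance, a node declaring only SUBSTANTIVES would have as neighbours all other nodes that also declare SUBSTANTIVES, whatever else they declare.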

A further elaboration of the simple example above will clarify this point. Suppose that an exercise about articles represents the LNsem_near’s activity. We can imagine the exercise as follows:

__ child eats __ sandwich.

The user can pass this activity by just replacing the blank spaces with a suitable article (e.g. “The”, “a”). Substantives within the phrase are one of LNsem_near’s CSEs (i.e. “child”, “sandwich”). In the LNsem_near definition an attribute called SUBSTANTIVES has been defined and the substantives contained in LNsem_near’s exercise phrase have been associated with this attribute (S1 = child, S2 = sandwich). Suppose that the user passes the LNsem_near’s activity and then enters LNcurr. This node contains an activity about substantives in which a sentence (containing some substantives) is shown to the user, who has to choose an image to be matched with each substantive. Suppose we have the following sentence:


The dog bites its bone.

One of LNcurr’s CSEs is the list of substantives within the phrase (i.e. “dog”, “bone”), as in the preceding activity. The SUBSTANTIVES attribute is also declared by LNcurr, with a self-explanatory association (i.e. S1 = dog, S2 = bone). If the exercise about articles is entered as a neighbour of LNcurr, the context defined by LNcurr is applied to this LNsem_near: in order to accomplish this, the attributes shared by both LNs are taken (only SUBSTANTIVES in this case) and, for each i-th CSE of LNsem_near associated with the attribute, the i-th CSE of LNcurr associated with the same attribute is substituted for it. In this case, the list of substantives within LNsem_near is replaced with the list of substantives of LNcurr. Thus, the LNsem_near’s (articles) activity is shown to the user as follows:

__ dog eats __ bone.

This is clearly an extremely simplified example, but arbitrary contexts can be defined using the same mechanism.
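The position-wise substitution of CSEs in this example can be sketched in a few lines. This is a deliberately naive illustration: the function `apply_context` is a hypothetical name, CSEs are assumed to be plain words, and simple string replacement is used where a real system would operate on structured content.

```python
from typing import Dict, List


def apply_context(
    content: str,
    near_cses: Dict[str, List[str]],
    curr_cses: Dict[str, List[str]],
) -> str:
    """Replace the i-th CSE of LNsem_near with the i-th CSE of LNcurr.

    Both dictionaries map an attribute name (e.g. "SUBSTANTIVES") to the
    ordered list of CSEs associated with it. Only shared attributes are used.
    """
    shared = sorted(near_cses.keys() & curr_cses.keys())
    for attribute in shared:
        for old, new in zip(near_cses[attribute], curr_cses[attribute]):
            content = content.replace(old, new)  # naive textual substitution
    return content


# The articles exercise entered as a neighbour of the substantives activity.
exercise = apply_context(
    "__ child eats __ sandwich.",
    {"SUBSTANTIVES": ["child", "sandwich"]},
    {"SUBSTANTIVES": ["dog", "bone"]},
)
print(exercise)  # __ dog eats __ bone.
```

Attributes declared by only one of the two nodes are ignored, so the default CSEs of LNsem_near remain in place for them.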

Three different kinds of materials can be used to define a number of different kinds of CSNs:

1. Recommended In-Depth Activity (RIDA): material that is chosen a priori by the tutor, who statically defines attributes in order to semantically connect activities with each other.

2. Past Correlated Activity (PCA): material coming from the user’s past interaction history that is semantically correlated with the current activity. It is dynamically calculated as the user proceeds along the learning path. In particular, the first time a user enters a node (LNcurr), each node belonging to the user’s interaction history is analysed in order to test its semantic correlation with LNcurr.

3. Personal In-Depth Activity (PIDA): material stored by the user due to personal interest. Customized learning paths can be created with this kind of material. Custom tags can also be defined by the user to semantically connect activities.

In the rest of the paper, we will refer to CSNs based on RIDA materials as “tutor-defined CSNs”, and to PCA/PIDA-based CSNs as “user-defined CSNs”.

Finally, each LN can be associated with resources other than CSN activities: for example, a list of links to learning materials stored in the Campus society and semantically related to the current activity is presented, as well as a list of users who passed the current activity with good results. These users could be contacted for consultation and information sharing.

6.4 Laboratories

Collaborative activities, called laboratories, need particular care. Here the information about the set of involved users has to be maintained, but user CSNs must be distinguished from tutor ones. In fact, since the past/preferred activities are generally different for each user, different user-defined CSNs are to be shown to each one. A high-level view of this structure is presented in Figure 19.

Two parallel SPs are shown, each one defining its own flow of execution (thick arrows) driving the users through several sub-stories. Each sub-story has an arbitrary topological structure and contains several LNs. Only two levels of browsing depth are shown in Figure 19 (i.e. starting from the main SP, users reach one sub-story and then return to the main SP), but there is no theoretical bound to this, as demonstrated in the MMSP meta-model (see Figure 13). The user-defined CSN nodes are accessed through the dotted arrows. The laboratory activity is a LN itself, which belongs to both paths. Since the personal interaction history changes among users, in general different paths are defined for different SPs and different users.

Therefore, two distinct sets of links to the user-defined CSN nodes are provided for the two users from the laboratory LN, and they have been drawn using two different colors in Figure 19.

A different statecharts-based model is needed for laboratories. In fact, information about several users must be maintained, where each user has both personal and shared work to be done. Figure 20 shows the MMLAB meta-model used to describe the possible kinds of laboratories.

Laboratory work is divided into two parts: asynchronous personal work and synchronous shared work. Parallel asynchronous regions within the “Personal Work” state model users’ personal activities. Users can freely join and exit the laboratory, and a possible exit point is reached when all users exit before all the work has been done. The deep history pseudo-state re-establishes the lab global state when the work is restarted. Moreover, CSN nodes can be reached: from personal work regions distinct user-defined CSNs are accessible as well as a unique tutor-defined CSN.

In particular, even though the same tutor-defined CSN nodes are shown for all users, different users actually access different instances of such nodes. A state with mandatory synchronous access models the shared work. From their own personal work, users can enter the shared work only if all participants are available.

Figure 19. A scheme of two possible stories with a common collaborative activity (laboratory).

Figure 20. The meta-model for all the possible laboratories.

The “shared work needs to be done” condition is true whenever the shared work is not completed. In any case the user can decide to enter the shared work even when personal work has not been done. A message is always sent to all participants asking them to enter the shared work when some users request it. In this way synchronization is set up among all participants. A lack of synchronization can occur if some of the users cannot attend the shared work when the request is sent. In this case the other users waiting in the “Users Synchronization” state can go back to their personal work. Since both the personal work regions and the shared work state can be accessed an arbitrary number of times within a single laboratory execution, a shallow history pseudo-state has been included in such machines in order to recreate the right contexts. When user synchronization is obtained and the shared work state is accessed, users can exit from this state at any time, going back to their personal work. Such a loop can be executed until all work, both personal and shared, has been finished. An assessment is obtained when this condition occurs and, if an insufficient result is achieved, users can choose either to return to the lab activity or to exit.
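The synchronization step for shared work can be sketched as follows. This is a simplified illustration under stated assumptions, not the MMLAB state machine itself: the class `Laboratory` and its methods are hypothetical names, and history pseudo-states and assessment are left out.

```python
from typing import Iterable, List, Set


class Laboratory:
    """Minimal model of the 'Users Synchronization' step of a laboratory."""

    def __init__(self, participants: Iterable[str]):
        self.participants = frozenset(participants)
        self.waiting: Set[str] = set()   # users in "Users Synchronization"
        self.shared_active = False       # True once shared work is entered

    def request_shared(self, user: str) -> List[str]:
        """A user asks to enter shared work; return the users to be notified."""
        if user not in self.participants:
            raise ValueError(f"{user!r} does not take part in this laboratory")
        self.waiting.add(user)
        # Shared work starts only when every participant is available.
        if self.waiting == self.participants:
            self.shared_active = True
        return sorted(self.participants - {user})

    def give_up(self, user: str) -> None:
        """A waiting user goes back to personal work."""
        self.waiting.discard(user)
```

A request by one participant therefore leaves the laboratory in the waiting state until all the others have joined or the requester gives up.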

In the MMLAB meta-model we added a piece of custom notation to overcome the limitations of the concrete syntax of state machines: the presence of an arbitrary number of parallel regions within the “Personal Work” state is expressed with the additional notation (“. . .”). Following [31], the abstract syntax model depicted in Figure 21 provides a scheme to formally define the additional notation.

More precisely, a state machine with two orthogonal regions is defined. Then, the colored block shows that an arbitrary number (vars ≥ 0) of additional regions can be added to the machine.


6.5 Story Controller Architectural Details

Summing up, the following information needs to be stored in each instance of a specific node, which may be visited by different users at any time:

• Information concerning a Leaf-Node instance (LN_infos):
° father SP;
° structural elements (non context-sensitive);
° Context-Sensitive Elements (CSEs);
° semantic attributes;
° list of semantically near LNs (RIDA, PCA, PIDA);
° Campus learning materials which can be useful for the activity;
° assessment criteria;
° flag indicating if the activity is a laboratory. In this case two additional pieces of information are needed:
- list of other participants. The group of participants is usually established by the users themselves;
- shared resources for the laboratory;
° flag indicating if the activity has been passed or not;
° activity assessment.

• Information concerning a Story-Path instance (SP_infos):
° SP nodes set. Each node can be either a LN or a sub-story;
° SP’s difficulty;
° topological structure;
° current stage within the SP;
° possible next choices;
° next stage suggested choice;
° list of users who passed this SP with good results;
° SP finished or not;
° general assessment of SP.

• General information:
° chronological list of all activities in the user’s interaction history.
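The LN_infos and SP_infos records listed above might be represented as plain data classes. The following is only a sketch: field names and types are our own guesses (for instance, difficulty and assessments are kept as free-form strings), and the consistency check on laboratories is an illustrative assumption.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class LNInfos:
    father_sp: str
    structural_elements: List[str] = field(default_factory=list)
    cses: Dict[str, List[str]] = field(default_factory=dict)      # attribute -> CSEs
    semantic_attributes: List[str] = field(default_factory=list)
    near_lns: Dict[str, List[str]] = field(default_factory=dict)  # "RIDA"/"PCA"/"PIDA"
    campus_materials: List[str] = field(default_factory=list)
    assessment_criteria: str = ""
    is_laboratory: bool = False
    participants: List[str] = field(default_factory=list)         # laboratories only
    shared_resources: List[str] = field(default_factory=list)     # laboratories only
    passed: bool = False
    assessment: Optional[str] = None

    def __post_init__(self) -> None:
        # Participants and shared resources only make sense for laboratories.
        if not self.is_laboratory and (self.participants or self.shared_resources):
            raise ValueError("participants/shared resources require a laboratory")


@dataclass
class SPInfos:
    nodes: List[str]                       # names of LNs or sub-stories
    difficulty: str = ""
    topology: str = "linear"
    current_stage: Optional[str] = None
    next_choices: List[str] = field(default_factory=list)
    suggested_next: Optional[str] = None
    users_with_good_results: List[str] = field(default_factory=list)
    finished: bool = False
    assessment: Optional[str] = None
```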

As stated above, the main data structures are statically stored from the SP descriptions which are designed by a tutor. In this way, once the SP descriptions as well as the tutor CSN nodes are provided, the needed MMSP instances are generated by the Story Controller. Then, Campus resources, user CSNs and on-line user lists are dynamically computed and presented to the user. As for context building, all CSN nodes for a LN are obtained through database queries by comparing attribute information.

In order to limit transactions to and from the server, SP execution is mainly performed within clients, while communication with the server is started only in particular cases: information about the next stage on the path is needed at the beginning of an activity and of a sub-story; information is sent by the client only at the end of sub-stories. This leaves the management of Leaf-Node execution entirely within clients. Figure 22 shows this behavior.

Figure 21. The abstract syntax model to describe the “Personal Work” state of Figure 20.

Figure 22. Client-server dialog sequence.

SP_infos described above are sent by the server once the sub-story information is requested. In the same way, LN_infos are requested from within each sub-story. LNs are executed on the client and local data are stored when each LN belonging to the same sub-story is finished. Finally, when the user reaches the end of a SP, the complete report describing the visited LNs list and obtained results for that SP is sent to the server.
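The dialog described above can be simulated to show how few messages reach the server. The sketch below is only one possible reading of the protocol: FakeServer, its method names and run_story_path are hypothetical, and it assumes that locally collected results are handed over once per sub-story, after all of its LNs are finished.

```python
class FakeServer:
    """Stand-in for the server; it only records the messages it receives."""

    def __init__(self, stories):
        self.stories = stories   # sub-story id -> list of LN ids
        self.log = []

    def get_sp_infos(self, sub_story):
        self.log.append(("get_sp_infos", sub_story))
        return list(self.stories[sub_story])

    def get_ln_infos(self, sub_story):
        self.log.append(("get_ln_infos", sub_story))
        return {ln: {"id": ln} for ln in self.stories[sub_story]}

    def put_results(self, sub_story, results):
        self.log.append(("put_results", sub_story))

    def put_report(self, report):
        self.log.append(("put_report", len(report)))


def run_story_path(server, sub_stories, run_ln):
    """Execute a Story-Path on the client, contacting the server only at
    sub-story boundaries and at the end of the whole path."""
    report = []
    for sub in sub_stories:
        ln_ids = server.get_sp_infos(sub)      # beginning of the sub-story
        ln_infos = server.get_ln_infos(sub)    # requested from within the sub-story
        # Leaf-Nodes run entirely on the client: no server traffic here.
        local = [(ln, run_ln(ln_infos[ln])) for ln in ln_ids]
        server.put_results(sub, local)         # end of the sub-story
        report.extend(local)
    server.put_report(report)                  # end of the SP
    return report


# Usage: two sub-stories with three Leaf-Nodes in total.
server = FakeServer({"S1": ["LN1", "LN2"], "S2": ["LN3"]})
report = run_story_path(server, ["S1", "S2"], run_ln=lambda info: "passed")
```

In this reading the number of server messages grows with the number of sub-stories, not with the number of Leaf-Nodes.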

6.6 A Story Scenario
Finally, a meta-model of the system interface during a SP execution is shown in Figure 23. Notice that, in this case, a node refers to a screen element and not to a specific activity.

A global view of the SP path is shown to the user on the first screen. Here all SP stages (i.e. both LNs and sub-stories) are presented, suggesting the next one(s) to be visited. As stated above, such suggestions are dynamically computed by the system during execution, following the imposed order of nodes and the activity assessments. A recursive call to the starting state is shown in Figure 23 to represent the choice of a sub-story, and a similar behavior is presented when a sub-story is left. When a learning activity (i.e. a LN) is entered, the activity itself is presented on the main part of the screen, while CSN nodes, users and resource links related to the activity are simultaneously shown.
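The dynamic suggestion of the next stage(s) could be computed as follows. This is a hedged sketch, not the system's actual rule: the function suggest_next, the prerequisite map and the pass_mark threshold are assumptions introduced here to combine an imposed order with activity assessments.

```python
def suggest_next(order, prerequisites, assessments, pass_mark=0.6):
    """Return the stages to suggest: those not yet passed whose prerequisites
    have all been passed, listed in the imposed order."""
    passed = {stage for stage, score in assessments.items() if score >= pass_mark}
    return [
        stage
        for stage in order
        if stage not in passed
        and all(p in passed for p in prerequisites.get(stage, ()))
    ]


# Usage: LN1 was passed, LN2 was attempted but not passed.
order = ["LN1", "LN2", "Sub1", "LN3"]
prerequisites = {"LN2": ["LN1"], "Sub1": ["LN1"], "LN3": ["LN2", "Sub1"]}
suggestions = suggest_next(order, prerequisites, {"LN1": 0.8, "LN2": 0.4})
first_visit = suggest_next(order, prerequisites, {})
```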

Parallel regions within the “GUI-Leaf-Node LNk” state manage all parts of the graphics; hence links can be selected by users at any moment, asynchronously with respect to the activity's flow of execution. The GUI elements that have to be shown at each LN execution are represented by these parallel machines.
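The effect of parallel regions can be sketched with a very small interpreter in which every region keeps its own current state and reacts to events independently of the others. The classes Region and ParallelState, as well as the region, state and event names, are illustrative assumptions and are not taken from Figure 23.

```python
class Region:
    """One orthogonal region: a flat state machine with its own current state."""

    def __init__(self, name, initial, transitions):
        self.name = name
        self.state = initial
        self.transitions = transitions   # (state, event) -> next state

    def dispatch(self, event):
        # Events with no matching transition leave the region unchanged.
        self.state = self.transitions.get((self.state, event), self.state)


class ParallelState:
    """Composite state whose regions are all active at the same time."""

    def __init__(self, regions):
        self.regions = {r.name: r for r in regions}

    def dispatch(self, event):
        for region in self.regions.values():
            region.dispatch(event)

    def configuration(self):
        return {name: r.state for name, r in self.regions.items()}


# Usage: the user opens a CSN link while the activity itself moves on.
gui = ParallelState([
    Region("activity", "step1", {("step1", "next"): "step2"}),
    Region("csn_links", "idle", {("idle", "open_csn"): "browsing",
                                 ("browsing", "close_csn"): "idle"}),
    Region("resources", "idle", {("idle", "open_resource"): "viewing"}),
])
gui.dispatch("open_csn")
gui.dispatch("next")
```

Because each region ignores events it has no transition for, selecting a link never blocks or resets the activity region.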

Moreover, LNj represents a user/tutor-defined CSN node. As already shown in Figure 17, no depth bounds are fixed for CSNs, therefore users can go deeper and deeper into them. From within each node of a CSN, users can choose to go back one hop or to return to the CSN root. Finally, a LNj representing an in-depth activity for LNk can be accessed from the main screen of the SP.
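The three navigation moves inside a CSN (going deeper, going back one hop, returning to the root) can be sketched with a path stack. The class CSNNavigator and its method names are hypothetical, and the CSN is assumed to be given as a map from each node to the nodes linked from it.

```python
class CSNNavigator:
    """Tracks the user's position in a CSN of unbounded depth."""

    def __init__(self, children, root):
        self.children = children   # node id -> list of linked node ids
        self.path = [root]         # root first, current node last

    @property
    def current(self):
        return self.path[-1]

    def go_deeper(self, child):
        if child not in self.children.get(self.current, []):
            raise ValueError(f"{child!r} is not linked from {self.current!r}")
        self.path.append(child)

    def back_one_hop(self):
        # At the root there is nowhere to go back to.
        if len(self.path) > 1:
            self.path.pop()

    def return_to_root(self):
        del self.path[1:]


# Usage: three hops down, one hop back, then straight to the root.
nav = CSNNavigator({"root": ["a"], "a": ["b"], "b": ["c"]}, "root")
for node in ("a", "b", "c"):
    nav.go_deeper(node)
deepest = nav.current
nav.back_one_hop()
after_back = nav.current
nav.return_to_root()
```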

7. CONCLUSIONS
We suggest storytelling as the basis for the development of a Deaf-centered E-Learning Environment, within the framework defined by Conceptual Metaphors and Embodied Cognition. The present work aims to underline how such a set of conceptual tools, which are usually exploited separately in different domains, can be synergistically combined to design a novel educational strategy. Following this, we started to develop


Figure 23. A Meta-model for a Typical Scenario of SP Execution.

ACKNOWLEDGMENTS
Work partially funded by the Italian Ministry of Education and Research (MIUR-FIRB), VISEL–RBNE074T5L E-learning, Deafness and Written Language (2009-2012), see http://www.visel.cnr.it.