DB Miner

-

Upload

nishant-kumar -

Category

Documents

-

view

88 -

download

0

Transcript of DB Miner

DBMiner: A System for Mining Knowledge in Large RelationalDatabases�

Jiawei Han Yongjian Fu Wei Wang Jenny Chiang Wan Gong Krzysztof Koperski Deyi Li

Yijun Lu Amynmohamed Rajan Nebojsa Stefanovic Betty Xia Osmar R. Zaiane

Data Mining Research Group, Database Systems Research LaboratorySchool of Computing Science, Simon Fraser University, British Columbia, Canada V5A 1S6

E-mail: fhan, yongjian, weiw, ychiang, wgong, koperski, dli, yijunl, arajan, nstefano, bxia, [email protected]

URL: http://db.cs.sfu.ca/ (for research group) http://db.cs.sfu.ca/DBMiner (for system)

Abstract

A data mining system, DBMiner, has been developedfor interactive mining of multiple-level knowledge inlarge relational databases. The system implementsa wide spectrum of data mining functions, includinggeneralization, characterization, association, classi�-cation, and prediction. By incorporating several in-teresting data mining techniques, including attribute-oriented induction, statistical analysis, progressivedeepening for mining multiple-level knowledge, andmeta-rule guided mining, the system provides a user-friendly, interactive data mining environment withgood performance.

Introduction

With the upsurge of research and development activ-ities on knowledge discovery in databases (Piatetsky-Shapiro & Frawley 1991; Fayyad et al. 1996), a datamining system, DBMiner, has been developed based onour studies of data mining techniques, and our experi-ence in the development of an early system prototype,DBLearn. The system integrates data mining tech-niques with database technologies, and discovers vari-ous kinds of knowledge at multiple concept levels fromlarge relational databases e�ciently and e�ectively.

The system has the following distinct features:

1. It incorporates several interesting data mining tech-niques, including attribute-oriented induction (Han,Cai, & Cercone 1993; Han & Fu 1996), statisticalanalysis, progressive deepening for mining multiple-level rules (Han & Fu 1995; 1996), and meta-ruleguided knowledge mining (Fu & Han 1995). It alsoimplements a wide spectrum of data mining func-tions including generalization, characterization, as-sociation, classi�cation, and prediction.

�Research was supported in part by the grant NSERC-OPG003723 from the Natural Sciences and Engineering Re-search Council of Canada, the grant NCE:IRIS/Precarn-HMI-5 from the Networks of Centres of Excellence ofCanada, and grants from B.C. Advanced Systems Institute,MPR Teltech Ltd., and Hughes Research Laboratories.

2. It performs interactive data mining at multiple con-cept levels on any user-speci�ed set of data in adatabase using an SQL-like Data Mining QueryLanguage, DMQL, or a graphical user interface.Users may interactively set and adjust variousthresholds, control a data mining process, performroll-up or drill-down at multiple concept levels, andgenerate di�erent forms of outputs, including gen-eralized relations, generalized feature tables, multi-ple forms of generalized rules, visual presentation ofrules, charts, curves, etc.

3. E�cient implementation techniques have been ex-plored using di�erent data structures, includinggeneralized relations and multiple-dimensional datacubes. The implementations have been integratedsmoothly with relational database systems.

4. The data mining process may utilize user- or expert-de�ned set-grouping or schema-level concept hierar-chies which can be speci�ed exibly, adjusted dy-namically based on data distribution, and generatedautomatically for numerical attributes. Concept hi-erarchies are being taken as an integrated compo-nent of the system and are stored as a relation inthe database.

5. Both UNIX and PC (Windows/NT) versions of thesystem adopt a client/server architecture. The lattermay communicate with various commercial databasesystems for data mining using the ODBC technology.

The system has been tested on several large rela-tional databases, including NSERC (Natural Scienceand Engineering Research Council of Canada) researchgrant information system, with satisfactory perfor-mance. Additional data mining functionalities are be-ing designed and will be added incrementally to thesystem along with the progress of our research.

Architecture and Functionalities

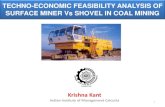

The general architecture of DBMiner, shown in Fig-ure 1, tightly integrates a relational database system,such as a Sybase SQL server, with a concept hierar-chy module, and a set of knowledge discovery mod-ules. The discovery modules of DBMiner, shown in Fig-

SQL Server

Data Concept Hierarchy

Graphical User Interface

Discovery Modules

Figure 1: General architecture of DBMiner

DBMiner:

FutureModules

Meta-ruleGuided Miner

Deviation Evaluator

EvolutionEvaluator

AssociationRule Finder

Discovery Modules

Characterizer Discriminator Classifier

Predictor

Figure 2: Knowledge discovery modules of DBMiner

ure 2, include characterizer, discriminator, classi�er,association rule �nder, meta-rule guided miner, pre-dictor, evolution evaluator, deviation evaluator, andsome planned future modules.

The functionalities of the knowledge discovery mod-ules are brie y described as follows:

� The characterizer generalizes a set of task-relevantdata into a generalized relation which can then beused for extraction of di�erent kinds of rules or beviewed at multiple concept levels from di�erent an-gles. In particular, it derives a set of characteristicrules which summarizes the general characteristics ofa set of user-speci�ed data (called the target class).For example, the symptoms of a speci�c disease canbe summarized by a characteristic rule.

� A discriminator discovers a set of discriminant ruleswhich summarize the features that distinguish theclass being examined (the target class) from otherclasses (called contrasting classes). For example,to distinguish one disease from others, a discrimi-nant rule summarizes the symptoms that discrimi-nate this disease from others.

� A classi�er analyzes a set of training data (i.e., a setof objects whose class label is known) and constructsa model for each class based on the features in thedata. A set of classi�cation rules is generated by sucha classi�cation process, which can be used to classifyfuture data and develop a better understanding of

each class in the database. For example, one mayclassify diseases and provide the symptoms whichdescribe each class or subclass.

� An association rule �nder discovers a set of asso-ciation rules (in the form of \A1 ^ � � � ^ Ai !

B1 ^ � � � ^ Bj") at multiple concept levels from therelevant set(s) of data in a database. For example,one may discover a set of symptoms often occurringtogether with certain kinds of diseases and furtherstudy the reasons behind them.

� Ameta-rule guided miner is a data miningmechanismwhich takes a user-speci�ed meta-rule form, such as\P (x; y) ^ Q(y; z) ! R(x; z)" as a pattern to con-�ne the search for desired rules. For example, onemay specify the discovered rules to be in the formof \major(s : student; x) ^ P (s; y) ! gpa(s; z)" inorder to �nd the relationships between a student'smajor and his/her gpa in a university database.

� A predictor predicts the possible values of some miss-ing data or the value distribution of certain at-tributes in a set of objects. This involves �nding theset of attributes relevant to the attribute of inter-est (by some statistical analysis) and predicting thevalue distribution based on the set of data similar tothe selected object(s). For example, an employee'spotential salary can be predicted based on the salarydistribution of similar employees in the company.

� A data evolution evaluator evaluates the data evolu-tion regularities for certain objects whose behaviorchanges over time. This may include characteriza-tion, classi�cation, association, or clustering of time-related data. For example, one may �nd the generalcharacteristics of the companies whose stock pricehas gone up over 20% last year or evaluate the trendor particular growth patterns of certain stocks.

� A deviation evaluator evaluates the deviation pat-terns for a set of task-relevant data in the database.For example, one may discover and evaluate a set ofstocks whose behavior deviates from the trend of themajority of stocks during a certain period of time.

Another important function module of DBMiner isconcept hierarchy which provides essential backgroundknowledge for data generalization and multiple-leveldata mining. Concept hierarchies can be speci�edbased on the relationships among database attributes(called schema-level hierarchy) or by set groupings(called set-grouping hierarchy) and be stored in theform of relations in the same database. Moreover,they can be adjusted dynamically based on the dis-tribution of the set of data relevant to the data miningtask. Also, hierarchies for numerical attributes can beconstructed automatically based on data distributionanalysis (Han & Fu 1994).

DMQL and Interactive Data Mining

DBMiner o�ers both an SQL-like data mining querylanguage, DMQL, and a graphical user interface forinteractive mining of multiple-level knowledge.

Example 1. To characterize CS grants in theNSERC96 database related to discipline code andamount category in terms of count% and amount%,the query is expressed in DMQL as follows,

use NSERC96�nd characteristic rules for \CS Discipline Grants"from award A, grant type Grelated to disc code, amount, count(*)%, amount(*)%where A.grant code = G.grant code

and A.disc code = \Computer Science"

The query is processed as follows: The systemcollects the relevant set of data by processing atransformed relational query, generalizes the data byattribute-oriented induction, and then presents the out-puts in di�erent forms, including generalized relations,generalized feature tables, multiple (including visual)forms of generalized rules, pie/bar charts, curves, etc.

A user may interactively set and adjust various kindsof thresholds to control the data mining process. Forexample, one may adjust the generalization thresholdfor an attribute to allow more or less distinct values inthis attribute. A user may also roll-up or drill-downthe generalized data at multiple concept levels. 2

A data mining query language such as DMQL fa-cilitates the standardization of data mining functions,systematic development of data mining systems, andintegration with standard relational database systems.Various kinds of graphical user interfaces can be de-veloped based on such a data mining query language.Such interfaces have been implemented in DBMiner onthree platforms: Windows/NT, UNIX, and Netscape.A graphical user interface facilitates interactive speci-�cation and modi�cation of data mining queries, con-cept hierarchies, and various kinds of thresholds, selec-tion and change of output forms, roll-up or drill-down,and dynamic control of a data mining process.

Implementation of DBMiner

Data structures: Generalized relation vs.multi-dimensional data cube

Data generalization is a core function of DBMiner.Two data structures, generalized relation, and multi-dimensional data cube, can be considered in the imple-mentation of data generalization.

A generalized relation is a relation which consistsof a set of (generalized) attributes (storing generalizedvalues of the corresponding attributes in the originalrelation) and a set of \aggregate" (measure) attributes(storing the values resulted from executing aggregatefunctions, such as count, sum, etc.), and in which each

60k-

Theory

Hardware

Software Eng.

Databases

AI

B.C. Ontario Quebec

0-20k20-40k

40-60k

Comp_method

Prairies Maritime

Amount

Discipline_code

Province

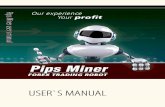

Figure 3: A multi-dimensional data cube

tuple is the result of generalization of a set of tuplesin the original data relation. For example, a general-ized relation award may store a set of tuples, such as\award(AI; 20 40k; 37; 835900)", which represents thegeneralized data for discipline code is \AI", the amountcategory is \20 40k", and such kind of data takes 37in count and $835,900 in (total) amount.

Amulti-dimensional data cubeis a multi-dimensionalarray structure, as shown in Figure 3, in which eachdimension represents a generalized attribute and eachcell stores the value of some aggregate attribute, suchas count, sum, etc. For example, a multi-dimensionaldata cube award may have two dimensions: \disci-pline code" and \amount category". The value \AI"in the \discipline code" dimension and \20-40k" in the\amount category" dimension locate the correspondingvalues in the two aggregate attributes, count and sum,in the cube. Then the values, count% and amount%,can be derived easily.

In comparison with the generalized relation struc-ture, a multi-dimensional data cube structure has thefollowing advantages: First, it may often save stor-age space since only the measurement attribute valuesneed to be stored in the cube and the generalized (di-mensional) attribute values will serve only as dimen-sional indices to the cube; second, it leads to fast accessto particular cells (or slices) of the cube using index-ing structures; third, it usually costs less to produce acube than a generalized relation in the process of gen-eralization since the right cell in the cube can be lo-cated easily. However, if a multi-dimensional data cubestructure is quite sparse, the storage space of a cube islargely wasted, and the generalized relation structureshould be adopted to save the overall storage space.

Both data structures have been explored in the DB-Miner implementations: the generalized relation struc-ture is adopted in version 1.0, and a multi-dimensionaldata cube structure in version 2.0. A more exibleimplementation is to consider both structures, adoptthe multi-dimensional data cube structure when thesize of the data cube is reasonable, and switch to the

generalized relation structure (by dynamic allocation)otherwise (this can be estimated based on the num-ber of dimensions being considered, and the attributethreshold of each dimension). Such an alternative willbe considered in our future implementation.

Besides designing good data structures, e�cient im-plementation of each discovery module has been ex-plored, as discussed below.

Multiple-level characterization

Data characterization summarizes and characterizes aset of task-relevant data, usually based on generaliza-tion. For mining multiple-level knowledge, progressivedeepening (drill-down) and progressive generalization(roll-up) techniques can be applied.

Progressive generalization starts with a conservativegeneralization process which �rst generalizes the datato slightly higher concept levels than the primitive datain the relation. Further generalizations can be per-formed on it progressively by selecting appropriate at-tributes for step-by-step generalization. Strong charac-teristic rules can be discovered at multiple abstractionlevels by �ltering (based on the corresponding thresh-olds at di�erent levels of generalization) generalizedtuples with weak support or weak con�dence in therule generation process.

Progressive deepening starts with a relatively high-level generalized relation, selectively and progressivelyspecializes some of the generalized tuples or attributesto lower abstraction levels.

Conceptually, a top-down, progressive deepeningprocess is preferable since it is natural to �rst �nd gen-eral data characteristics at a high concept level andthen follow certain interesting paths to step down tospecialized cases. However, from the implementationpoint of view, it is easier to perform generalization thanspecialization because generalization replaces low leveltuples by high ones through ascension of a concept hi-erarchy. Since generalized tuples do not register thedetailed original information, it is di�cult to get suchinformation back when specialization is required later.

Our technique which facilitates specializations ongeneralized relations is to save a \minimally general-ized relation/cube" in the early stage of generalization.That is, each attribute in the relevant set of data isgeneralized to minimally generalized concepts (whichcan be done in one scan of the data relation) and thenidentical tuples in such a generalized relation/cube aremerged together, which derives the minimally gener-alized relation. After that, both progressive deepeningand interactive up-and-down can be performed withreasonable e�ciency: If the data at the current ab-straction level is to be further generalized, generaliza-tion can be performed directly on it; on the other hand,if it is to be specialized, the desired result can be de-rived by generalizing the minimally generalized rela-

tion/cube to appropriate level(s).

Discovery of discriminant rules

The discriminator of DBMiner �nds a set of discrimi-nant rules which distinguishes the general features of atarget class from that of contrasting class(es) speci�edby a user. It is implemented as follows.

First, the set of relevant data in the database hasbeen collected by query processing and is partitionedrespectively into a target class and one or a set of con-trasting class(es). Second, attribute-oriented inductionis performed on the target class to extract a prime tar-get relation/cube, where a prime target relation is ageneralized relation in which each attribute containsno more than but close to the threshold value of thecorresponding attribute. Then the concepts in the con-trasting class(es) are generalized to the same level asthose in the prime target relation/cube, forming theprime contrasting relation/cube. Finally, the informa-tion in these two classes is used to generate qualitativeor quantitative discriminant rules.

Moreover, interactive drill-down and roll-up can beperformed synchronously in both target class and con-trasting class(es) in a similar way as that explained inthe last subsection (characterization). These functionshave been implemented in the discriminator.

Multiple-level association

Based on many studies on e�cient mining of associa-tion rules (Agrawal & Srikant 1994; Srikant & Agrawal1995; Han & Fu 1995), a multiple-level association rule�nder has been implemented in DBMiner.

Di�erent from mining association rules in transac-tion databases, a relational association rule miner may�nd two kinds of associations: nested association and at association, as illustrated in the following example.

Example 2. Suppose the \course taken" relation ina university database has the following schema:

course taken = (student id; course; semester; grade):

Nested association is the association between a dataobject and a set of attributes in a relation by view-ing data in this set of attributes as a nested rela-tion. For example, one may �nd the associations be-tween students and their course performance by view-ing \(course; semester; grade)" as a nested relation as-sociated with student id.

Flat association is the association among di�er-ent attributes in a relation without viewing any at-tribute(s) as a nested relation. For example, one may�nd the relationships between course and grade in thecourse taken relation such as \the courses in comput-ing science tend to have good grades", etc.

Two associations require di�erent data mining tech-niques.

For mining nested associations, a data relation canbe transformed into a nested relation in which thetuples which share the same values in the unnestedattributes are merged into one. For example, thecourse taken relation can be folded into a nested re-lation with the schema,

course taken = (student id; course history)course history = (course; semester; grade).

By such transformation, it is easy to derive associ-ation rules like \90% senior CS students tend to takeat least three CS courses at 300-level or up in eachsemester". Since the nested tuples (or values) canbe viewed as data items in the same transaction, themethods for mining association rules in transactiondatabases, such as (Han & Fu 1995), can be appliedto such transformed relations in relational databases.

Multi-dimensional data cube structure facilitates ef-�cient mining of multi-level at association rules. Acount cell of a cube stores the number of occurrencesof the corresponding multi-dimensional data values,whereas a dimension count cell stores the sum of countsin the whole dimension. With this structure, it isstraightforward to calculate the measurements such assupport and con�dence of association rules. A set ofsuch cubes, ranging from the least generalized cube torather high level cubes, facilitate mining of associationrules at multiple concept levels. 2

Meta-rule guided mining

Since there are many ways to derive association rulesin relational databases, it is preferable to have usersto specify some interesting constraints to guide a datamining process. Such constraints can be speci�ed in ameta-rule (or meta-pattern) form (Shen et al. 1996),which con�nes the search to speci�c forms of rules. Forexample, a meta-rule \P (x; y) ! Q(x; y; z)", where Pand Q are predicate variables matching di�erent prop-erties in a database, can be used as a rule-form con-straint in the search.

In principle, a meta-rule can be used to guide themining of many kinds of rules. Since the associationrules are in the form similar to logic rules, we have �rststudied meta-rule guided mining of association rules inrelational databases (Fu & Han 1995). Di�erent fromthe study by (Shen et al. 1996) where a meta-predicatemay match any relation predicates, deductive predi-cates, attributes, etc., we con�ne the search to thosepredicates corresponding to the attributes in one rela-tion. One such example is illustrated as follows.

Example 3. A meta-rule guided data mining querycan be speci�ed in DMQL as follows for mining a spe-ci�c form of rules related to a set of attributes: \major,gpa, status, birth place, address" in relation student forthose born in Canada in a university database.

�nd association rules in the form of

major(s : student; x) ^Q(s; y) ! R(s; z)

related to major, gpa, status, birth place, addressfrom studentwhere birth place = \Canada"

Multi-level association rules can be discovered insuch a database, as illustrated below:

major(s; \Science") ^ gpa(s; \Excellent") !status(s; \Graduate") (60%)

major(s; \Physics") ^ status(s; \M:Sc") !gpa(s; \3:8 4:0") (76%)

The mining of such multi-level rules can be imple-mented in a similar way as mining multiple-level asso-ciation rules in a multi-dimensional data cube. 2

Classi�cation

Data classi�cation is to develop a description or modelfor each class in a database, based on the featurespresent in a set of class-labeled training data.

There have been many data classi�cation methodsstudied, including decision-tree methods, such as ID-3and C4.5 (Quinlan 1993), statistical methods, neuralnetworks, rough sets, etc. Recently, some database-oriented classi�cation methods have also been investi-gated (Mehta, Agrawal, & Rissanen 1996).

Our classi�er adopts a generalization-based decision-tree induction method which integrates attribute-oriented induction with a decision-tree induction tech-nique, by �rst performing attribute-oriented inductionon the set of training data to generalize attribute val-ues in the training set, and then performing decisiontree induction on the generalized data.

Since a generalized tuple comes from the generaliza-tion of a number of original tuples, the count informa-tion is associated with each generalized tuple and playsan important role in classi�cation. To handle noiseand exceptional data and facilitate statistical analysis,two thresholds, classi�cation threshold and exceptionthreshold, are introduced. The former helps justi�ca-tion of the classi�cation at a node when a signi�cantset of the examples belong to the same class; whereasthe latter helps ignore a node in classi�cation if it con-tains only a negligible number of examples.

There are several alternatives for doing generaliza-tion before classi�cation: A data set can be generalizedto either a minimally generalized concept level, an in-termediate concept level, or a rather high concept level.Too low a concept level may result in scattered classes,bushy classi�cation trees, and di�culty at concise se-mantic interpretation; whereas too high a level mayresult in the loss of classi�cation accuracy.

Currently, we are testing several alternatives atintegration of generalization and classi�cation indatabases, such as (1) generalize data to some mediumconcept levels; (2) generalize data to intermediate con-cept level(s), and then perform node merge and split

for better class representation and classi�cation accu-racy; and (3) perform multi-level classi�cation and se-lect a desired level by a comparison of the classi�cationquality at di�erent levels. Since all three classi�ca-tion processes are performed in relatively small, com-pressed, generalized relations, it is expected to resultin e�cient classi�cation algorithms in large databases.

Prediction

A predictor predicts data values or value distributionson the attributes of interest based on similar groups ofdata in the database. For example, one may predictthe amount of research grants that an applicant mayreceive based on the data about the similar groups ofresearchers.

The power of data prediction should be con�ned tothe ranges of numerical data or the nominal data gen-eralizable to only a small number of categories. It isunlikely to give reasonable prediction on one's name orsocial insurance number based on other persons' data.

For successful prediction, the factors (or attributes)which strongly in uence the values of the attributes ofinterest should be identi�ed �rst. This can be done bythe analysis of data relevance or correlations by statis-tical methods, decision-tree classi�cation techniques,or simply be based on expert judgement. To analyzeattribute correlation, our predictor constructs a contin-gency table followed by association coe�cient calcula-tion based on �2-test by the analysis of minimally gen-eralized data in databases. The attribute correlationassociated with each attribute of interest is precom-puted and stored in a special relation in the database.

When a prediction query is submitted, the set ofdata relevant to the requested prediction is collected,where the relevance is based on the attribute correla-tions derived by the query-independent analysis. Theset of data which matches or is close to the query con-dition can be viewed as similar group(s) of data. Ifthis set is big enough (i.e., su�cient evidence exists),its value distribution on the attribute of interest can betaken as predicted value distribution. Otherwise, theset should be appropriately enlarged by generalizationon less relevant attributes to certain high concept levelto collect enough evidence for trustable prediction.

Further Development of DBMiner

The DBMiner system is currently being extended inseveral directions, as illustrated below.

� Further enhancement of the power and e�ciency ofdata mining in relational database systems, includ-ing the improvement of system performance and rulediscovery quality for the existing functional modules,and the development of techniques for mining newkinds of rules, especially on time-related data.

� Integration, maintenance and application of discov-ered knowledge, including incremental update ofdiscovered rules, removal of redundant or less in-teresting rules, merging of discovered rules into aknowledge-base, intelligent query answering usingdiscovered knowledge, and the construction of mul-tiple layered databases.

� Extension of data mining technique towards ad-vanced and/or special purpose database systems,including extended-relational, object-oriented, text,spatial, temporal, and heterogeneous databases.Currently, two such data mining systems, GeoMinerand WebMiner, for mining knowledge in spatialdatabases and the Internet information-base respec-tively, are being under design and construction.

References

Agrawal, R., and Srikant, R. 1994. Fast algorithms formining association rules. In Proc. 1994 Int. Conf. Very

Large Data Bases, 487{499.

Fayyad, U. M.; Piatetsky-Shapiro, G.; Smyth, P.; andUthurusamy, R. 1996. Advances in Knowledge Discovery

and Data Mining. AAAI/MIT Press.

Fu, Y., and Han, J. 1995. Meta-rule-guided mining ofassociation rules in relational databases. In Proc. 1st Int'l

Workshop on Integration of Knowledge Discovery with De-

ductive and Object-Oriented Databases, 39{46.

Han, J., and Fu, Y. 1994. Dynamic generation and re-�nement of concept hierarchies for knowledge discoveryin databases. In Proc. AAAI'94 Workshop on Knowledge

Discovery in Databases (KDD'94), 157{168.

Han, J., and Fu, Y. 1995. Discovery of multiple-levelassociation rules from large databases. In Proc. 1995 Int.

Conf. Very Large Data Bases, 420{431.

Han, J., and Fu, Y. 1996. Exploration of the power ofattribute-oriented induction in data mining. In Fayyad,U.; Piatetsky-Shapiro, G.; Smyth, P.; and Uthurusamy,R., eds., Advances in Knowledge Discovery and Data Min-

ing. AAAI/MIT Press. 399{421.

Han, J.; Cai, Y.; and Cercone, N. 1993. Data-driven dis-covery of quantitative rules in relational databases. IEEETrans. Knowledge and Data Engineering 5:29{40.

Mehta, M.; Agrawal, R.; and Rissanen, J. 1996. SLIQ:A fast scalable classi�er for data mining. In Proc.

1996 Int. Conference on Extending Database Technology

(EDBT'96).

Piatetsky-Shapiro, G., and Frawley, W. J. 1991. Knowl-edge Discovery in Databases. AAAI/MIT Press.

Quinlan, J. R. 1993. C4.5: Programs for Machine Learn-

ing. Morgan Kaufmann.

Shen, W.; Ong, K.; Mitbander, B.; and Zaniolo, C.1996. Metaqueries for data mining. In Fayyad, U.;Piatetsky-Shapiro, G.; Smyth, P.; and Uthurusamy, R.,eds., Advances in Knowledge Discovery and Data Mining.AAAI/MIT Press. 375{398.

Srikant, R., and Agrawal, R. 1995. Mining generalizedassociation rules. In Proc. 1995 Int. Conf. Very Large

Data Bases, 407{419.