Byte 2


8/8/2019 Byte 2


Prefixes for bit and byte multiples

Byte

From Wikipedia, the free encyclopedia

The byte (pronounced /baɪt/) is a unit of digital information in computing and telecommunications that most commonly consists of eight bits. Historically, a byte was the number of bits used to encode a single character of text in a computer,[1][2] and for this reason it is the basic addressable element in many computer architectures.

The size of the byte has historically been hardware dependent, and no definitive standards exist that mandate the size. The de facto standard of eight bits is a convenient power of two permitting the values 0 through 255 for one byte. Many types of applications use variables representable in eight or fewer bits, and processor designers optimize for this common usage. The byte size and byte addressing are often used in place of longer integers for size or speed optimizations in microcontrollers and CPUs. Floating point processors and signal processing applications tend to operate on larger values, and some digital signal processors have 16 to 40 bits as the smallest unit of addressable storage. On such processors a byte may be defined to contain this number of bits. The popularity of major commercial computing architectures has aided the ubiquitous acceptance of the 8-bit size.

The term octet was defined to explicitly denote a sequence of 8 bits because of the ambiguity associated with the term byte.

Contents

1 History

2 Size

3 Unit symbol

4 Unit multiples

5 Common uses

6 See also

7 References

History

The term byte was coined by Dr. Werner Buchholz in July 1956, during the early design phase for the IBM Stretch computer.[3][4] It is a respelling of bite to avoid accidental mutation to bit.[1]

The size of a byte was at first selected to be a multiple of existing teletypewriter codes, particularly the 6-bit codes used by the U.S. Army (Fieldata) and Navy. A number of early computers were designed for 6-bit codes, including SAGE, the CDC 1604, IBM 1401, and PDP-8. Historical IETF documents cite varying examples of byte sizes. RFC 608 mentions byte sizes for FTP hosts as the most computationally efficient size of a given hardware platform.[5]

In 1963, to end the use of incompatible teleprinter codes by different branches of the U.S. government, ASCII, a 7-bit code, was adopted as a Federal Information Processing Standard, making 6-bit bytes commercially obsolete. In the early 1960s, AT&T introduced digital telephony first on long-distance trunk lines. These used the 8-bit μ-law encoding. This large investment promised to reduce transmission costs for 8-bit data. IBM at that time extended its 6-bit code "BCD" to an 8-bit character code, "Extended BCD", in the System/360. The use of 8-bit codes for digital telephony also caused 8-bit data "octets" to be adopted as the basic data unit of the early Internet.

Since then, general-purpose computer designs have used eight bits in order to use standard memory parts and communicate well, even though modern character sets have grown to use as many as 32 bits per character.

In the early 1970s, microprocessors such as the Intel 8008 (the direct predecessor of the 8080, and then the 8086 used in early PCs) could perform a small number of operations on four bits, such as the DAA (decimal adjust) instruction and the half-carry flag, which were used to implement decimal arithmetic routines. These four-bit quantities were called nibbles, in homage to the then-common 8-bit bytes.

Size

Architectures that did not have eight-bit bytes include the CDC 6000 series scientific mainframes, which divided their 60-bit floating-point words into 10 six-bit bytes. These bytes conveniently held character data from 12-bit punched Hollerith cards, typically the upper-case alphabet and decimal digits. CDC also often referred to 12-bit quantities as bytes, each holding two 6-bit display code characters, due to the 12-bit I/O architecture of the machine. The PDP-10 used assembly instructions LDB and DPB to load and deposit bytes of any width from 1 to 36 bits. These operations survive today in Common Lisp. Bytes of six, seven, or nine bits were used on some computers, for example within the 36-bit word of the PDP-10. The UNIVAC 1100/2200 series computers (now Unisys) addressed in both 6-bit (Fieldata) and nine-bit (ASCII) modes within their 36-bit word. Telex machines used 5 bits to encode a character.
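The load-byte and deposit-byte operations described above can be sketched in a few lines of Python. This is only an illustration of the idea of variable-width bytes inside a 36-bit word: the function names borrow the LDB and DPB mnemonics, but the signatures and the convention of counting `position` from the low-order end of the word are assumptions made for this sketch, not a model of the PDP-10 byte-pointer format.

```python
WORD_BITS = 36                      # PDP-10 word size, as stated in the text
WORD_MASK = (1 << WORD_BITS) - 1    # keeps results inside one word


def _check_field(size: int, position: int) -> None:
    """Reject fields that do not fit entirely inside one 36-bit word."""
    if not (1 <= size <= WORD_BITS and 0 <= position <= WORD_BITS - size):
        raise ValueError("field does not fit in a 36-bit word")


def ldb(word: int, size: int, position: int) -> int:
    """Load byte: return the size-bit field whose lowest bit is at position."""
    _check_field(size, position)
    return (word >> position) & ((1 << size) - 1)


def dpb(value: int, word: int, size: int, position: int) -> int:
    """Deposit byte: return word with the field replaced by value."""
    _check_field(size, position)
    mask = ((1 << size) - 1) << position
    return ((word & ~mask) | ((value << position) & mask)) & WORD_MASK


if __name__ == "__main__":
    # Pack six 6-bit character codes into one word, leftmost code first.
    word = 0
    for index, code in enumerate([1, 2, 3, 4, 5, 6]):
        word = dpb(code, word, 6, 30 - 6 * index)
    print(oct(word))
    print(ldb(word, 6, 30), ldb(word, 6, 0))
```

The same two functions handle the six-, seven-, and nine-bit bytes mentioned above simply by changing `size`.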

Factors behind the ubiquity of the eight-bit byte include the popularity of the IBM System/360 architecture, introduced in the 1960s, and the 8-bit microprocessors, introduced in the 1970s.

The term octet is used to unambiguously specify a size of eight bits, and is used extensively in protocol definitions, for example.

Unit symbol

The unit symbol for the byte is specified in IEEE 1541 and the Metric Interchange Format[6]

asthe upper-case character B, while other standards, such as the International Electrotechnical

1 of 3

-

8/8/2019 Byte 2

2/3

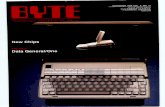

Decimal

Value SI

1000 k kilo

10002 M mega

10003 G giga

10004 T tera

10005 P peta

10006 E exa

10007 Z zetta

10008 Y yotta

Binary

Value IEC JEDEC

1024 Ki kibi K kilo

10242 Mi mebi M mega

10243 Gi gibi G giga

10244 Ti tebi

10245 Pi pebi

10246 Ei exbi

10247 Zi zebi

10248 Yi yobi

Percentage difference betweendecimal and binary interpretations of

the unit prefixes grows with increasing

storage size.

Commission (IEC) standard IEC 60027, appear silent on the subject.

In the International System of Units (SI), B is the symbol of the bel, a unit of logarithmic power ratios named after Alexander Graham Bell. The usage of B for byte therefore conflicts with this definition. It is also not consistent with the SI convention that only units named after persons should be capitalized. However, there is little danger of confusion because the bel is a rarely used unit. It is used primarily in its decadic fraction, the decibel (dB), for signal strength and sound pressure level measurements, while a unit for one tenth of a byte, i.e. the decibyte, is never used.

The unit symbol KB is commonly used for kilobyte, but may be confused with the common meaning of Kb for kilobit. IEEE 1541 specifies the lower-case character b as the symbol for bit; however, IEC 60027 and the Metric Interchange Format specify bit (e.g., Mbit for megabit) for the symbol, a sufficient disambiguation from byte.

The lowercase letter o for octet is a commonly used symbol in several non-English languages (e.g., French[7] and Romanian), and is also used with metric prefixes (for example, ko and Mo).

Today the harmonized ISO/IEC 80000-13:2008 - Quantities and units -- Part 13: Information science and technology standard cancels and replaces subclauses 3.8 and 3.9 of IEC 60027-2:2005, namely those related to Information theory and Prefixes for binary multiples.

Unit multiples

See also: Binary prefix

There has been considerable confusion about the meanings of SI (or metric) prefixes used with the unit byte, especially concerning prefixes such as kilo (k or K) and mega (M), as shown in the chart Prefixes for bit and byte multiples. Since computer memory is designed with binary logic, multiples are expressed in powers of 2, rather than 10. The software and computer industries often use binary estimates of the SI-prefixed quantities, while producers of computer storage devices prefer the SI values. This is why a hard drive may be specified as, say, 100 GB when it contains 93 GiB of storage space.

While the numerical difference between the decimal and binary interpretations is small for the prefixes kilo and mega, it grows to over 20% for the prefix yotta, as illustrated in the linear-log graph of difference versus storage size.
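The figures quoted above can be checked with a short calculation. The following Python sketch compares each decimal prefix with its binary counterpart and converts the 100 GB example to GiB; the function names are chosen for this illustration only.

```python
# Decimal (SI) and binary (IEC) prefixes, in order of increasing power.
SI_PREFIXES = ["kilo", "mega", "giga", "tera", "peta", "exa", "zetta", "yotta"]
IEC_PREFIXES = ["kibi", "mebi", "gibi", "tebi", "pebi", "exbi", "zebi", "yobi"]


def percent_difference(power: int) -> float:
    """Return how much larger 1024**power is than 1000**power, in percent."""
    return (1024 ** power / 1000 ** power - 1) * 100


def gb_to_gib(gigabytes: float) -> float:
    """Convert decimal gigabytes (10**9 bytes) to gibibytes (2**30 bytes)."""
    return gigabytes * 1000 ** 3 / 1024 ** 3


if __name__ == "__main__":
    pairs = zip(SI_PREFIXES, IEC_PREFIXES)
    for power, (si, iec) in enumerate(pairs, start=1):
        print(f"{si:>5} vs {iec:>4}: {percent_difference(power):5.1f}% larger")
    print(f"100 GB = {gb_to_gib(100):.1f} GiB")
```

The gap is 2.4% at kilo and compounds with each step, which is why it passes 20% by yotta.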

Common uses

The byte is also defined as a data type in certain programming languages. The C and C++ programming languages, for example, define byte as an "addressable unit of data large enough to hold any member of the basic character set of the execution environment" (clause 3.6 of the C standard). The C standard requires that the char integral data type is capable of holding at least 255 different values, and is represented by at least 8 bits (clause 5.2.4.2.1). Various implementations of C and C++ define a byte as 8, 9, 16, 32, or 36 bits.[8][9] The actual number of bits in a particular implementation is documented as CHAR_BIT as implemented in the limits.h file. Java's primitive byte data type is always defined as consisting of 8 bits and being a signed data type, holding values from -128 to 127.
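To make the value ranges concrete, here is a small Python sketch that computes the unsigned and two's-complement signed ranges for the byte widths listed above, and reinterprets an 8-bit pattern as a signed value in the way Java's byte type does. The helper names are hypothetical, and two's-complement representation is assumed throughout.

```python
from typing import Tuple


def unsigned_range(bits: int) -> Tuple[int, int]:
    """Smallest and largest values of an unsigned integer of this width."""
    return 0, (1 << bits) - 1


def signed_range(bits: int) -> Tuple[int, int]:
    """Smallest and largest values of a two's-complement signed integer."""
    return -(1 << (bits - 1)), (1 << (bits - 1)) - 1


def as_signed(value: int, bits: int = 8) -> int:
    """Reinterpret the low `bits` bits of value as a two's-complement number."""
    value &= (1 << bits) - 1
    if value >= 1 << (bits - 1):
        return value - (1 << bits)
    return value


if __name__ == "__main__":
    # Byte widths mentioned in the text for various C and C++ implementations.
    for bits in (8, 9, 16, 32, 36):
        print(bits, unsigned_range(bits), signed_range(bits))
    print(as_signed(0xFF))  # the all-ones 8-bit pattern read as a signed byte
```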

In data transmission systems, a byte is a contiguous sequence of bits in a serial data stream, such as in modem or satellite communications, that forms the smallest meaningful unit of data. These bytes might include start bits, stop bits, or parity bits, and thus could vary from 7 to 12 bits to contain a single 7-bit ASCII code.
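As a rough illustration of how framing bits change the length of a transmitted byte, the Python sketch below wraps one 7-bit ASCII code in a start bit, an optional parity bit, and one or more stop bits. The layout (start bit 0, data sent least significant bit first, stop bits 1) follows common asynchronous serial practice and is an assumption of this sketch; the function name and parameters are hypothetical.

```python
from typing import List


def frame_ascii7(char: str, parity: str = "even", stop_bits: int = 1) -> List[int]:
    """Return the bits on the wire for one 7-bit ASCII character."""
    code = ord(char)
    if code > 0x7F:
        raise ValueError("not a 7-bit ASCII character")
    data = [(code >> i) & 1 for i in range(7)]  # least significant bit first
    frame = [0] + data                          # start bit, then data bits
    if parity in ("even", "odd"):
        bit = sum(data) % 2                     # makes the count of ones even
        if parity == "odd":
            bit ^= 1                            # flip it for odd parity
        frame.append(bit)
    elif parity != "none":
        raise ValueError("parity must be 'even', 'odd', or 'none'")
    frame += [1] * stop_bits                    # stop bit(s)
    return frame


if __name__ == "__main__":
    for parity, stops in (("none", 1), ("even", 1), ("odd", 2)):
        bits = frame_ascii7("A", parity, stops)
        print(parity, stops, len(bits), bits)
```

With these options the same 7-bit code occupies 9, 10, or 11 bits on the line, which falls inside the range given above.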


WHY IS A BYTE 8 BITS? OR IS IT?

Computer History Vignettes

By Bob Bemer

I recently received an e-mail from one Zeno Luiz Iensen Nadal, a worker for Siemens in Brazil. He asked "My Algorythms teacher asked me and my colleagues 'Why a byte has eight bits?' Is there a technical answer for that?"

Of course I could not resist a reply to someone named Zeno, after that teacher of ancient times. Some people copied on the reply thought it a useful document, so (having done the hard work already) I add it to my site as a further bite of history.

I am way behind in my work, but I just cannot resist trying to answer your question on why a "byte" has eight bits.

The answer is that some do, and some don't. But that takes explaining, as follows:

If computers worked entirely in binary (and some did a long time ago), and did nothing but calculations with binary numbers, there would be no bytes.

But to use and manipulate character information we must have encodings for those symbols. And much of this was already known from punch card days.

The punch card of IBM (others existed) had 12 rows and 80 columns. Each column was assigned to a symbol, a term I use here although they have fancier names nowadays because computers have been used in so many new ways.

The rows, going down, starting from the top, were 12-11-0-1-2-3-4-5-6-7-8-9. A punch in the 0 to 9 rows signified the digits 0-9. A group of columns could be called a "field", and a number in such a field could carry a plus sign for the number (an additional punch in top row 12 of the units position of the number), or a minus sign (an additional punch in row 11 just under that).

Then they started to need alphabets. This was accomplished by adding the 12 punch to the digits 1-9 to make letters A through I, the 11 punch to make letters J through R. For S through Z they added the 0 punch to the digits 2 through 9 (the 0-1 combination was skipped -- 3x9=27, but the English alphabet has only 26 letters). The 12, 11, and 0 punches were called "zones", and you'll notice them today lurking in the high-order 4 bits. Remember that this was much prior to binary representations of those same characters.

The first bonus was that the 12 and 11 punches without any 0-9 punch gave us the characters + and -. But no other punctuation was represented then, not even a period (dot, full stop) in IBM or telecommunication equipment. One can see this in early telegrams, where one said "I MISS YOU STOP COME HOME STOP". "STOP" stood for the period the machine did not have.
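The zone-plus-digit scheme just described is regular enough to write down as a table. The Python sketch below builds it from the rules given in the text (12, 11, and 0 zones over the digit rows, with the 0-1 combination skipped); the function name and the use of tuples of row numbers are choices made for this illustration, and punctuation beyond + and - is left out.

```python
import string
from typing import Dict, Tuple


def build_card_code() -> Dict[str, Tuple[int, ...]]:
    """Map each character to the rows punched in its card column."""
    code: Dict[str, Tuple[int, ...]] = {}
    for digit in range(10):
        code[str(digit)] = (digit,)     # digits use a single punch
    code["+"] = (12,)                   # zone 12 alone
    code["-"] = (11,)                   # zone 11 alone
    letters = iter(string.ascii_uppercase)
    zones = (
        (12, range(1, 10)),             # A through I
        (11, range(1, 10)),             # J through R
        (0, range(2, 10)),              # S through Z (0-1 is skipped)
    )
    for zone, digits in zones:
        for digit in digits:
            code[next(letters)] = (zone, digit)
    return code


if __name__ == "__main__":
    card = build_card_code()
    for ch in "A", "I", "J", "R", "S", "Z", "7", "+":
        print(ch, card[ch])
```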

Then punctuation and other marks had combinations of punches assigned, but there had to be 3 punches in a column to do this. In most cases the third punch was an extra "8".

In this way, with 10 digits, 26 alphabetic, and 11 others, IBM got to 47 characters. UNIVAC, with different punch cards (round holes, not rectangles, and 90 columns, not 80) got to about 54. But most of these were commercial characters. When FORTRAN came along, they needed, for example, a "divide" symbol, and an "=" symbol, and others not in the commercial set. So they had to use an alternate set of rules for scientific and mathematical work. A set of FORTRAN cards would cause havoc in payroll!

With many early computers these punch cards were used as input and output, and inasmuch as the total number of characters representable did not exceed 64, why not use just 6 bits each to represent them? The same applied to 6-track punched tape for teletypes.

In this period I came to work for IBM, and saw all the confusion caused by the 64-character limitation. Especially when we started to think about word processing, which would require both upper and lower case. Add 26 lower case letters to 47 existing, and one got 73 -- 9 more than 6 bits could represent.
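The arithmetic behind that limit is easy to reproduce. This small Python sketch, with a hypothetical helper name, gives the number of bits needed for a character set of a given size, using the counts quoted in the text.

```python
def bits_needed(symbols: int) -> int:
    """Smallest number of bits that can give each symbol a distinct code."""
    if symbols < 1:
        raise ValueError("need at least one symbol")
    return max(1, (symbols - 1).bit_length())


if __name__ == "__main__":
    for count in (47, 64, 47 + 26, 128, 256):
        print(count, "characters need", bits_needed(count), "bits")
```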

I even made a proposal (in view of STRETCH, the very first computer I know of with an 8-bit byte) that would extend the number of punch card character codes to 256 [1]. Some folks took it seriously. I thought of it as a spoof.

So some folks started thinking about 7-bit characters, but this was ridiculous. With IBM's STRETCH computer as background, handling 64-bit words divisible into groups of 8 (I designed the character set for it, under the guidance of Dr. Werner Buchholz, the man who DID coin the term "byte" for an 8-bit grouping) [2], it seemed reasonable to make a universal 8-bit character set, handling up to 256. In those days my mantra was "powers of 2 are magic". And so the group I headed developed and justified such a proposal [3].

That was a little too much progress when presented to the standards group that was to formalize ASCII, so they stopped short for the moment with a 7-bit set, or else an 8-bit set with the upper half left for future work.

The IBM 360 used 8-bit characters, although not ASCII directly. Thus Buchholz's "byte" caught on everywhere. I myself did not like the name for many reasons. The design had 8 bits moving around in parallel. But then came a new IBM part, with 9 bits for self-checking, both inside the CPU and in the tape drives. I exposed this 9-bit byte to the press in 1973. But long before that, when I headed software operations for Cie. Bull in France in 1965-66, I insisted that "byte" be deprecated in favor of "octet".

You can notice that my preference then is now the preferred term. It is justified by new communications methods that can carry 16, 32, 64, and even 128 bits in parallel. But some foolish people now refer to a "16-bit byte" because of this parallel transfer, which is visible in the UNICODE set. I'm not sure, but maybe this should be called a "hextet".

But you will notice that I am still correct. Powers of 2 are still magic!
