BURT: BERT-inspired Universal Representation from Twin ...

Transcript of BURT: BERT-inspired Universal Representation from Twin ...

BURT: BERT-inspired Universal Representation from Twin Structure

Yian Li1,2,3, Hai Zhao1,2,3,∗

1Department of Computer Science and Engineering, Shanghai Jiao Tong University2Key Laboratory of Shanghai Education Commission for Intelligent Interaction

and Cognitive Engineering, Shanghai Jiao Tong University, Shanghai, China3MoE Key Lab of Artificial Intelligence, AI Institute, Shanghai Jiao Tong University

[email protected], [email protected]

Abstract

Pre-trained contextualized language mod-els such as BERT (Devlin et al., 2019)have shown great effectiveness in a widerange of downstream Natural Language Pro-cessing (NLP) tasks. However, the effec-tive representations offered by the mod-els target at each token inside a sequencerather than each sequence and the fine-tuning step involves the input of both se-quences at one time, leading to unsatis-fying representations of various sequenceswith different granularities. Especially, assentence-level representations taken as thefull training context in these models, therecomes inferior performance on lower-levellinguistic units (phrases and words). Inthis work, we present BURT (BERT in-spired Universal Representation from TwinStructure) that is capable of generating uni-versal, fixed-size representations for inputsequences of any granularity, i.e., words,phrases, and sentences, using a large scaleof natural language inference and para-phrase data with multiple training objec-tives. Our proposed BURT adopts theSiamese network, learning sentence-levelrepresentations from natural language in-ference dataset and word/phrase-level rep-resentations from paraphrasing dataset, re-spectively. We evaluate BURT across differ-ent granularities of text similarity tasks, in-cluding STS tasks, SemEval2013 Task 5(a)and some commonly used word similar-ity tasks, where BURT substantially out-performs other representation models onsentence-level datasets and achieves sig-nificant improvements in word/phrase-levelrepresentation.

∗Corresponding author. This paper was partially sup-ported by National Key Research and Development Programof China (No. 2017YFB0304100) and Key Projects of Na-tional Natural Science Foundation of China (U1836222 and61733011).

1 Introduction

Representing words, phrases and sentences aslow-dimensional dense vectors has always beenthe key to many Natural Language Processing(NLP) tasks. Previous language representationlearning methods can be divided into two differentcategories based on the language unit they focuson, and therefore are suitable for different situa-tions. High-quality word vectors derived by wordembedding models (Mikolov et al., 2013a; Pen-nington et al., 2014; Joulin et al., 2016) are goodat measuring syntactic and semantic word similar-ities and significantly benefit a lot of NLP mod-els. Later proposed sentence encoders (Conneauet al., 2017; Subramanian et al., 2018; Cer et al.,2018) aim to learn generalized fixed-length sen-tence representations in supervised or multi-taskmanners, obtaining substantial results on multipledownstream tasks. Nevertheless, these models fo-cus on either words or sentences, achieving en-couraging performance at one level of linguisticunit but less satisfactory results at other levels.

Recently, contextualized representations with alanguage model training objective such as ELMo,OpenAI GPT, BERT, XLNet and ALBERT (Pe-ters et al., 2018; Radford et al., 2018; Devlin et al.,2019; Yang et al., 2019) (Lan et al., 2019) are ex-pected to capture complex features (syntax andsemantics) for sequences of any length. Espe-cially, BERT improves the pre-training and fine-tuning scenario, obtaining new state-of-the-art re-sults on multiple sentence-level tasks. On the ba-sis of BERT, ALBERT introduces three techniquesto reduce memory consumption and training time:decomposing embedding parameters into smallermatrices, sharing parameters cross layers and re-placing the next sentence prediction (NSP) train-ing objective with the sentence-order prediction(SOP). In the fine-tuning procedure of both mod-els, the [CLS] token is considered to be the rep-

arX

iv:2

004.

1394

7v2

[cs

.CL

] 3

Aug

202

0

resentation of the input sentence pair. Despite itseffectiveness, these representations are still token-based and the model requires both sequences to beencoded at one time, leading to unsatisfying rep-resentation of an individual sequence. Most im-portantly, there is a huge gap in representing lin-guistic units of different granularities. The modelability of handling lower-level linguistic units suchas phrases and words is not as good as pre-trainedword embeddings.

In this paper, we propose BURT (BERT in-spired Universal Representation from Twin Struc-ture) to learn universal representations for dif-ferent grained linguistic units (including words,phrases and sentences) through multi-task super-vised training on two kinds of datasets: NLI (Bow-man et al., 2015; Williams et al., 2018) and theParaphrase Database (PPDB) (Ganitkevitch et al.,2013). The former is usually used as a sentence-pair classification task to develop semantic rep-resentations of sentences. The latter contains alarge number of paraphrases, which in our exper-iments are considered as word/phrase-level para-phrase identification and pairwise text classifica-tion tasks. In order to let BURT learn the rep-resentation of a single sequence, we adopt theSiamese neural network where each word, phraseand sentence are encoded separately through thetwin networks, and then transformed into a fixed-length vector by mean-pooling. Finally, for eachsequence pair, the concatenation of the two vec-tors is fed into a softmax layer for classifica-tion. As experiments reveal, our multi-task learn-ing framework combines the characteristics of dif-ferent training objectives with respect to linguisticunits of different granularities.

Our BURT is evaluated on multiple levels ofsemantic similarity tasks. In addition to stan-dard datasets, we sample pairs of phrases fromthe Paraphrase Database to construct an addi-tional phrase similarity test set. Results show thatBURT substantially outperforms sentence repre-sentation models including Skip-thought vectors(Kiros et al., 2015), InferSent (Conneau et al.,2017), and GenSen (Subramanian et al., 2018) onseven STS test sets and two phrase-level similar-ity tasks. Evaluation on word similarity datasetssuch as SimLex, WS-353, MEN and SCWS alsodemonstrates that BURT is better at encodingwords than other sentence representation models,and even surpasses pre-trained word embedding

models by 10.5 points Spearman’s correlation onSimLex.

Generally, BURT can be used as a universal en-coder that produces fixed-size representations fordifferent grained input sequences of any granular-ity without additional training for specific tasks.Moreover, it can be further fine-tuned for down-stream tasks by simply adding an decoding layeron top of the model with few task-specific param-eters.

2 Related Work

Representing words as real-valued dense vectorsis a core technology of deep learning in NLP.Trained on massive unlabeled corpus, word em-bedding models (Mikolov et al., 2013a; Penning-ton et al., 2014; Joulin et al., 2016) map wordsinto a vector space where similar words have sim-ilar latent representations. Pre-trained word vec-tors are well known for their good performance onword similarity tasks, while they are less satisfac-tory in representing phrases and sentences. Differ-ent from the above mentioned static word embed-ding models, ELMo (Peters et al., 2018) attemptsto learn context-dependent word representationsthrough a two-layer bi-directional LSTM network,where each word is allowed to have different rep-resentations according to its contexts. The embed-ding of each token is the concatenation of hiddenstates from both directions. Nevertheless, mostNLP tasks require representations for higher levelsof linguistic units such as phrases and sentences.

Generally, phrase embeddings are more difficultto learn than word embeddings for the former hasmuch more diversities in forms and types than thelatter. One approach is to treat each phrase as anindividual unit and learn its embedding using thesame technique for words (Mikolov et al., 2013b).However, this method requires the preprocess stepof extracting frequent phrases in the corpus, andmay suffer from data sparsity. Therefore, embed-dings learned in this way are not able to truly rep-resent the meaning of phrases. Since distributedrepresentation of words is a powerful techniquethat has already been used as prior knowledge forall kinds of NLP tasks, one straightforward andsimple idea to obtain a phrase representation is tocombine the embeddings of all the words in it. Topreserve word order and better capture linguisticinformation, Yu and Dredze (2015) come up withcomplex composition functions rather than sim-

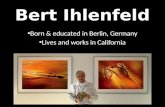

Figure 1: Illustration of the architecture of BURT.

ply averaging word embeddings. Based on theanalysis of the impact of training data on phraseembeddings, Zhou et al. (2017) propose to traintheir pairwise-GRU network utilizing large-scaledparaphrase database.

In recent years, more and more researchers havefocused on sentence representations since they arewidely used in various applications such as in-formation retrieval, sentiment analysis and ques-tion answering. The quality of sentence embed-dings are usually evaluated in a wide range ofdownstream NLP tasks. One simple but pow-erful baseline for learning sentence embeddingsis to represent sentence as a weighted sum ofword vectors. Inspired by the skip-gram algorithm(Mikolov et al., 2013a), the SkipThought model(Kiros et al., 2015), where both the encoder anddecoder are based on Recurrent Neural Network(RNN), is designed to predict the surrounding sen-tences for an given passage. Logeswaran and Lee(2018) improve the model structure by replacingthe decoder with a classifier that distinguishes con-texts from other sentences. Rather than unsuper-vised training, InferSent (Conneau et al., 2017)is designed as a bi-directional LSTM sentenceencoder that is trained on the Stanford NaturalLanguage Inference (SNLI) dataset. Subramanian

et al. (2018) introduce a multi-task framework thatcombines different training objectives and reportconsiderable improvements in downstream taskseven in low-resource settings. To encode a sen-tence, researchers turn their encoder architecturefrom RNN as used in SkipThought and InferSentto the Transformer (Vaswani et al., 2017) whichrelies completely on attention mechanism to per-form Seq2Seq training. Cer et al. (2018) developthe Universal Sentence Encoder and explore twovariants of model settings: the Transformer ar-chitecture and the deep averaging network (DAN)(Iyyer et al., 2015) for encoding sentences.

Most recently, Transformer-based languagemodels such as OpenAI GPT, BERT, Transformer-xl, XLNet and ALBERT (Radford et al., 2018,2019; Devlin et al., 2019; Dai et al., 2019; Yanget al., 2019) (Lan et al., 2019) play an increasinglyimportant role in NLP. Unlike feature-based rep-resentation methods, BERT follows the the fine-tuning approach where the model is first trainedon a large amount of unlabeled data includingtwo training targets: the masked language model(MLM) and the next sentence prediction (NSP)tasks. Then, the pre-trained model can be eas-ily applied to a wide range of downstream tasksthrough fine-tuning. During the fine-tuning step,

two sentences are concatenated and fed into theinput layer and the contextualized embedding ofthe special token [CLS] added in front of everyinput is considered as the representation of thesequence pair. Liu et al. (2019) further improvethe performance on ten downstream tasks by fine-tuning BERT through multiple training objectives.These deep neural models are theoretically capa-ble of representing sequences of arbitrary lengths,while experiments show that they perform unsat-isfactorily on word and phrase similarity tasks.

3 Methodology

The architecture of our universal representationmodel is shown in Figure 1. Our BURT fol-lows the Siamese neural network where the twinnetworks share the same parameters. The lowerlayers are initialized with either BERTbase orALBERTbase which contains 12 Transformerblocks (Vaswani et al., 2017), 12 self-attentionheads with hidden states of dimension 768. Thetop layers are task-specific decoders, each consist-ing of a fully-connected layer followed by a soft-max classification layer. We add an additionalmean-pooling layer in between to convert a se-ries of hidden states into one fixed-size vector sothat the representation of each input is indepen-dent of its length. Different from the default fine-tuning procedure of BERT and ALBERT wheretwo sentences are concatenated and encoded asa whole sequence, BURT processes and encodeseach word, phrase and sentence separately. Themodel is trained on two kinds of datasets with re-gard to different levels of linguistic unit on threetasks. In the following subsections, we present adetailed introduction of the datasets and trainingobjectives.

3.1 Datasets

SNLI and Multi-Genre NLI The Stanford Nat-ural Language Inference (SNLI) (Bowman et al.,2015) and the Multi-Genre NLI Corpus (Williamset al., 2018) are sentence-level datasets that arefrequently used to improve and evaluate the per-formance of sentence representation models. Theformer consists of 570k sentence pairs that aremanually annotated with the labels entailment,contradiction, and neutral. The latter is a col-lection of 433k sentence pairs annotated with tex-tual entailment information. Both datasets are dis-tributed in the same formats except the latter is de-

rived from multiple distinct genres. Therefore, inour experiment, these two corpora are combinedand serve as a single dataset for the sentence-levelnatural language inference task during training.The training and validation sets contain 942k and29k sentence pairs, respectively.

PPDB The Paraphrase Database (PPDB) (Gan-itkevitch et al., 2013) contains millions of mul-tilingual paraphrases that are automatically ex-tracted from bilingual parallel corpora. Eachpair includes one target phrase or word and itsparaphrase, companied with entailment informa-tion and their similarity score computed from theGoogle n-grams and the Annotated Gigaword cor-pus. Relationships between pairs fall into sixcategories: Equivalence, ForwardEntailment, Re-verseEntailment, Independent, Exclusion and Oth-erRelated. Pairs are marked with scores indicatingthe probabilities that they belong to each of theabove six categories. The PPDB database is di-vided into six sizes, from S up to XXXL, and con-tains three types of paraphrases: lexical, phrasal,and syntactic. To improve the model ability of rep-resenting phrases and words, we take advantage ofthe phrasal dataset with S size, which consists of1.53 million multiword to single/multiword pairs.We apply a preprocessing step to filter and normal-ize the data for the phrase and word level tasks.Specifically, pairs tagged with Exclusion or Oth-erRelated are removed, and both ForwardEntail-ment and ReverseEntailment are treated as entail-ment since our model structure is symmetrical. Fi-nally, we randomly select 354k pairs from each ofthe three labels: equivalence, entailment and inde-pendent, resulting in a total of 1.06 million exam-ples.

3.2 Training Objectives

Different from the two training targets of BERT,we introduce three training objectives for BURTas follows to further enhance the output universalrepresentations.

Sentence-level Natural Language InferenceNatural Language Inference (NLI) is a pairwiseclassification problem that is to identify the rela-tionship between a premise P = {p1, p2, . . . , plP }and a hypothesis H = {h1, h2, . . . , hlH} from en-tailment, contradiction, and neutral, where lP andlH are numbers of tokens inP andH , respectively.BURT is trained on the collection of SNLI and

Target Paraphrase Google n-gram Sim AGiga Sim equivalence score Entailment label

hundreds thousands 0. 0.92851 0.000457 independent

welcomes information that welcomes the fact that 0.16445 0.64532 0.227435 independentthe results of the work the outcome of the work 0.51793. 0.95426 0.442545 entailmentand the objectives of the and purpose of the 0.34082 0.66921 0.286791 entailmentdifferent parts of the world various parts of the world 0.72741 0.97907 0.520898 equivalencedrawn the attention of the drew the attention of the 0.72301 0.92588 0.509006 equivalence

Table 1: Examples from the PPDB database. Pairs of phrases and words are annotated with similarities computedfrom the Google n-grams and the Annotated Gigaword corpus, entailment labels and the score for each label.

Multi-Genre NLI corpora to perform sentence-level encoding. Different from the default pre-process procedure of BERT and ALBERT, thepremise and hypothesis are tokenized and encodedseparately, resulting in two fixed-length vectors uand v. Both sentences share the same set of modelparameters during the encoding procedure. Wethen compute [u; v; |u− v|], which is the concate-nation of the premise and hypothesis representa-tions and the absolute value of their difference, andfinally feed it to a fully-connected layer followedby a 3-way softmax classification layer. The prob-ability that a sentence pair is labeled as class a ispredicted as:

P (a|P,H) = softmax(W>1 · [u; v; |u− v|])

Phrase/word-level Paraphrase IdentificationIn order to map lower-level linguistic units intothe same vector space as sentences, BURT istrained to distinguish between paraphrases andnon-paraphrases using a large number of phraseand word pairs from the PPDB dataset. Each para-phrase pair involves a target T = {t1, t2, . . . , tlT }and its paraphrase S0 = {s1, s2, . . . , slS0

}, wherelT and lS0 are numbers of tokens in T and S0,respectively. Each target or its paraphrase iseither a single word or a phrase composed ofup to 6 words. Similar to Zhou et al. (2017),we use the negative sampling strategy (Mikolovet al., 2013b) to reconstruct the dataset. Foreach target T , we randomly sample k sequences{S1, S2, . . . , Sk} from the dataset and annotatepairs {(T, S1), (T, S2), . . . , (T, Sk)} with nega-tive labels, indicating they are not paraphrases.The encoding and predicting steps are the sameas mentioned in the previous paragraph. The rela-tionship b between T and S is predicted by a lo-gistic regression with softmax:

P (b|T, S) = softmax(W>2 · [u; v; |u− v|])

Phrase/word-level Pairwise Text ClassificationApart from the paraphrase identification task, we

design a word/phrase-level pairwise text classifi-cation task to make use of the phrasal entailmentinformation in the PPDB dataset. For each para-phrase pair (A,B), BURT is trained to recognizefrom three types of relationships: equivalence, en-tailment and independent. This task is more chal-lenging than the previous one, because the modeltries to capture the degree of similarity betweenphrases and words while words are considered dis-similar even if they are closely related. Examplesof paraphrase pairs with different entailment labelsand their similarity scores are presented in Table 1,where "hundreds" and "thousands" are labeled asindependent. A one-layer classifier is used to de-termine the entailment label c for each pair:

P (c|A,B) = softmax(W>3 · [u; v; |u− v|])

3.3 Training details

BURT contains one shared encoder with 12 Trans-former blocks, 12 self-attention heads and threetask-specific decoders. The dimension of hiddenstates are 768. In each iteration we train batchesfrom three tasks in turn and make sure that thesentence-level task is trained every 2 batches. Weuse the Adam optimizer with β1 = 0.9, β2 = 0.98,and ε = 10−9. We perform warmup over the first10% training data and linearly decay learning rate.The batch size is 16 and the dropout rate is 0.1.

4 Evaluation

BURT is evaluated on several text similarity taskswith respect to different levels of linguistic units,i.e, sentences, phrases and words. We use cosinesimilarity to measure the distance between two se-quences:

cosine(u, v) =u · v‖u‖‖v‖

where u · v is the dot product of u and v, and ‖u‖is the `2-norm of the vector. Then Spearman’s

Method STS12 STS13 STS14 STS15 STS16 STS B SICK-R Avg.

pre-trained word embedding modelsAvg. GloVe 53.3 50.8 55.6 59.2 57.9 63.0 71.8 58.8Avg. FastText 58.8 58.8 63.4 69.1 68.2 68.3 73.0 65.7

sentence representation modelsSkipThought 44.3 30.7 39.1 46.7 54.2 73.7 79.2 52.6InferSent-2 62.9 56.1 66.4 74.0 72.9 78.5 83.1 70.5GenSenLAST 60.9 55.6 62.8 73.5 66.6 78.6 82.6 68.6

pre-trained contextualized language modelsBERTCLS 32.5 24.0 28.5 35.5 51.1 50.4 64.2 60.6BERTMAX 47.9 45.3 52.6 60.8 60.9 61.3 71.2 57.1BERTMEAN 50.1 52.9 54.9 63.4 64.9 64.5 73.5 40.9

our methodsALBURT 67.2 72.6 71.4 77.8 73.5 79.7 83.5 75.1BURT 69.9 73.7 72.8 78.5 73.6 84.3 84.8 76.8

Table 2: Performance of BURT/ALBURT and baseline models on sentence similarity tasks. BURT and ALBURTare initialized with BERTbase and ALBERTbase, respectively. "SemEval" stands for the SemEval Task 5(a). Thesubscript refers to the pooling strategy used to obtain fixed-length representations. The best results are in bold.

correlation between these cosine similarities andgolden labels is computed to investigate how muchsemantic information is captured by our model.

Baselines We use several currently popularword embedding models and sentence represen-tation models as our baselines. Pre-trained wordembeddings used in this work include GloVe (Pen-nington et al., 2014) and FastText (Joulin et al.,2016), both of which have dimensionality 300.They represent a phrase or a sentence by averagingall the vectors of words it contains. SkipThought(Kiros et al., 2015) is an encoder-decoder structuretrained in an unsupervised way, where both the en-coder and decoder are composed of GRU units.Two versions of trained models are provided: uni-directional and bidirectional. In our experiment,the concatenation of the last hidden states pro-duced by the two models are considered to be therepresentation of the input sequence. The dimen-sionality is 4800. InferSent (Conneau et al., 2017)is a bidirectional LSTM encoder trained on theSNLI dataset with a max-pooling layer. It has twoversions: InferSent1 with GloVe vectors and In-ferSent2 with FastText vectors. The latter is evalu-ated in our experiment. The output vector is 4096-dimensional. GenSen (Subramanian et al., 2018)is trained through a multi-task learning frameworkto learn general-purpose representations for sen-

tences. The encoder is a bidirectional GRU. Multi-ple trained models of different training settings arepublicly available and we choose the single-layermodels that are trained on skip-thought vectors,neural machine translation, constituency parsingand natural language inference tasks. Since thelast hidden states work better than max-pooling onthe STS datasets, they are used as representationsfor sequences in our experiment. The dimension-ality of the embeddings is 4096. In addition, weexamine the pre-trained model BERTbase usingdifferent pooling strategies: mean-pooling, max-pooling and the [CLS] token. The dimension is768.

4.1 Sentence-level evaluation

We evaluate the model performance of encod-ing sentences on SentEval (Conneau and Kiela,2018), including datasets that require training: theSTS benchmark (Cer et al., 2017) and the SICK-Relatedness dataset (Marelli et al., 2014), anddatasets that do not require training: the STStasks 2012-2016 (Agirre et al., 2012, 2013, 2014,2015, 2016). For BURT and the baseline models,we encode each sentence into a fixed-length vec-tor with their corresponding encoders and pool-ing layers, and then compute cosine similarity be-tween each sentence pair. For the STS benchmarkand the SICK-Relatedness dataset, each model is

MethodPhrases Words

PPDB SemEval SimLex WS-sim WS-rel MEN SCWS Avg.

pre-trained word embedding modelsAvg. GloVe 35.9 68.2 40.8 80.2 64.4 80.5 62.9 65.8Avg. FastText 32.2 68.9 50.3 83.4 73.4 84.6 69.4 72.2

sentence representation modelsSkipThought 37.1 66.4 35.1 61.3 42.2 57.9 58.4 51.0InferSent-2 36.3 78.2 55.9 71.1 44.4 77.4 61.4 62.0GenSenLAST 48.6 64.3 50.0 56.0 34.0 59.3 59.0 51.7

pre-trained contextualized language modelsBERTCLS 30.2 75.9 7.3 23.1 1.8 19.1 28.1 14.4BERTMAX 44.9 77.0 16.7 30.7 14.6 26.8 34.5 24.6BERTMEAN 42.8 71.2 13.1 3.0 11.2 21.8 23.2 15.9

our methodsALBURT 66.7 75.9 59.6 73.9 61.0 69.5 63.9 65.6BURT 70.6 79.3 60.8 71.5 55.7 68.5 62.3 63.7

Table 3: Performance of BURT/ALBURT and baseline models on phrase and word similarity tasks. "WS-rel" =subtask of WS353 where words are related. "WS-sim" = subtask of WS353 where words are similar. The bestresults are in bold. Underlined cells shows tasks where BURT outperforms all the sentence representation modelsand the pre-trained BERT.

first trained on a regression task and then evaluatedon the test set. The results are displayed in Table2.

According to the results, BURT outperforms allthe baseline models on seven evaluated datasets,achieving significant improvements on sentencesimilarity tasks. As expected, averaging pre-trained word vectors leads to inferior sentenceembeddings than well-trained sentence represen-tation models. Among the three RNN-basedsentence encoders, InferSent and GenSen obtainhigher correlation than SkipThought, but not asgood as our model. The last five rows in thetable indicate that our multi-task framework es-sentially improves the quality of sentence embed-dings. With an average score of 76.8, BURT out-performs ALBURT of the same size. Benefitingfrom the training of SNLI and Multi-Genre NLIcorpora using the Siamese structure, BURT can bedirectly used as a sentence encoder to extract fea-tures for downstream tasks without further train-ing of the parameters. Besides, it can also be effi-ciently fine-tuned to generate sentence vectors forspecific tasks by adding an additional decodinglayer such as a softmax classifier.

4.2 Phrase-level evaluation

It is not enough to generate high-quality sentenceembeddings, BURT is expected to encode seman-tic information for lower-level linguistic units aswell. In this experiment, we perform phrase-levelevaluation on SemEval2013 Task 5(a) (Korkontze-los et al., 2013) which is a task to classify whethera pair of sequences are semantically similar or not.Each pair contains a word and a phrase consistingof multiple words, coming with either a negativeor a positive label. Examples like (megalomania,great madness) are labeled dissimilar although thetwo sequences are related in certain aspects. OurBURT and baseline models are first fine-tuned onthe training set of size 11,722 and then evalu-ated on 7,814 examples. Parameters of the pre-trained word embeddings, SkipThought, InferSentand GenSen are held fixed during training. Due tolimited resource of phrasal semantic datasets, wedesign a phrase-level semantic similarity test set inthe same format as the STS datasets. Specifically,we select pairs from the test set of PPDB and filterout ones containing only single words, resulting in32,202 phase pairs. In the following experiments,the score of equivalence annotated in the originaldataset is considered as the relative similarity be-tween the two phrases.

Setences Phrases WordsSTS* STS B SICK-R PPDB SemEval SimLex WS-sim WS-rel MEN SCWS Avg.

Traning ObjectivesNLI 73.5 85.4 85.2 31.5 77.3 57.9 24.9 30.0 55.1 47.6 30.8NLI+PI 73.0 82.7 84.7 40.4 77.3 59.6 69.4 57.4 67.0 64.4 63.6NLI+PTC 72.4 84.2 84.9 76.9 78.3 41.2 56.1 30.0 48.4 55.4 46.2NLI+PI+PTC 73.7 84.3 84.8 70.6 79.3 60.8 71.5 55.7 68.5 62.3 63.7Pooling StrategiesCLS 71.2 82.6 85.0 68.1 78.1 48.0 63.5 42.8 62.7 57.3 54.9MAX 72.3 78.5 83.6 68.5 77.6 52.4 64.6 46.0 62.0 61.0 57.2MEAN 73.7 84.3 84.8 70.6 79.3 60.8 71.5 55.7 68.5 62.3 63.7Concatenation Methods[u; v] 38.2 77.6 81.0 31.3 69.2 7.8 12.5 8.9 19.6 22.8 14.3[|u− v|] 56.6 84.2 84.1 41.5 76.9 50.8 51.6 22.4 49.0 53.9 45.5[u ∗ v] 67.1 81.7 84.2 70.2 75.2 51.4 62.8 42.8 62.9 58.3 55.6[u; v; |u− v|] 73.7 84.3 84.8 70.6 79.3 60.8 71.5 55.7 68.5 62.3 63.7[u; v;u ∗ v] 70.2 83.5 84.6 62.3 77.4 53.0 69.0 52.4 64.3 59.3 59.6[|u− v|;u ∗ v] 70.7 83.4 84.8 69.7 77.3 50.3 71.5 46.3 61.0 58.2 57.5[u; v; |u− v|;u ∗ v] 71.9 84.0 85.0 62.3 78.0 52.1 64.7 42.9 63.5 58.6 56.4

Negative Samplingk = 1 73.3 84.1 85.2 60.6 78.2 60.4 54.6 45.7 61.3 58.9 56.2k = 3 73.7 84.3 84.8 70.6 79.3 60.8 71.5 55.7 68.5 62.3 63.7k = 5 72.5 83.1 84.9 72.3 77.3 54.6 64.6 48.4 63.5 59.8 58.2k = 7 71.9 83.1 84.5 69.9 77.9 59.3 68.8 50.9 66.7 60.6 61.3

Table 4: Ablation study on training objectives, pooling strategies, concatenation methods and the value of k innegative sampling. Lower layers are initialized with BERTbase. "STS*" means the average score of STS12 ∼STS16. "NLI", "PI" and "PTC" stands for sentence-level natural language inference, phrase/word-level paraphraseidentification and phrase/word-level pairwise text classification tasks, respectively. "PPDB" represents the phrase-level similarity test set extracted from the PPDB database.

As shown in the first two columns of Ta-ble 3, BURT outperforms all the baseline mod-els on SemEval2013 Task 5(a) and PPDB, sug-gesting that BURT learns semantically coherentphrase embeddings. Note the inconsistent behav-ior among models on different datasets since thetwo datasets are distributed differently. Sequencesin SemEval2013 Task 5(a) are either single wordsor two-word phrases. Therefore, GloVe and Fast-Text outperform SkipThought and GenSen on thatdataset. Training BURT on BERTbase results inhigher accuracy than unsupervised models likeGloVe, FastText and SkipThought, and is com-parable to InferSent and the general-purpose sen-tence encoder. By first training using a largeamount of phrase-level paraphrase data, then fine-tuning on SemEval training data, BURT yields thehighest accuracy. On PPDB where most phrasesconsist more than two words, RNNs and Trans-formers are more preferable than simply averagingword embeddings. Especially, BURT obtains thehighest correlation of 70.6.

4.3 Word-level evaluation

Word-level evaluation are conducted on severalcommonly used word similarity task datasets:SimLex (Hill et al., 2015), WS-353 (Finkelsteinet al., 2001), MEN (Bruni et al., 2012), SCWS(Huang et al., 2012). As mentioned in Faruquiet al. (2016), word similarity is often confusedwith relatedness in some datasets due to the sub-jectivity of human annotations. To alleviate thisproblem, WS-353 is later divided into two sub-datasets (Agirre et al., 2009): pairs of words thatare similar, and pairs of words that are related.For example, "food" and "fruit" are similar while"computer" and "keyboard" are related. The newlyconstructed dataset SimLex aim to explicitly quan-tifies similarity rather than relatedness, where sim-ilar words are annotated with higher scores and re-lated words are considered dissimilar with lowerscores. The numbers of word pairs in SimLex,WS-353, MEN and SCWS are 999, 353, 3000and 2003, respectively. Cosine similarity betweenword vectors are computed and Spearman’s corre-lation is used for evaluation. Results are in the lastfive columns of Table 3.

Although pre-trained word embeddings are con-sidered excellent at representing words, BURTobtains the highest correlations on SimLex, 4.9higher than the best results reported by all thebaseline models. Compared with all the three sen-tence representation models and the pre-trainedBERT, BURT yields the best results on 4 out of5 datasets. The only dataset where InferSent per-forms better than BURT is MEN. Because In-ferSent is trained by initializing its embeddinglayers with pre-trained word vectors, it inher-ited high-quality word embeddings from FastTextto some extent. However, our model is initial-ized with pre-trained models and fine-tuned usingNLI and PPDB datasets, leading to significant im-provements in representing words, with an aver-age correlation of 63.7 using BURT and 65.6 us-ing ALBURT compared to only 24.6 using the pre-trained BERTbase.

5 Ablation Study

Our proposed BURT is trained on the NLI andPPDB datasets using three training objectives interms of different levels of linguistic units, lead-ing to powerful performance for mapping words,phrases and sentences into the same vector space.In this section, we look into how variants of train-ing objectives, pooling and concatenation strate-gies and some hyperparameters affect model per-formance and figure out the overall contribution ofeach module. The lower layers are initialized withBERTbase in the following experiments.

5.1 Training Objective

We train BURT on different combinations of train-ing objectives to investigate in what aspect doesthe model benefit from each of them. Accordingto the first block in Table 4, training on the NLIdataset through sentence-level task can effectivelyimprove the quality of sentence embeddings, butit is not helpful enough when it comes to phrasesand words. The phrase and word level tasks us-ing the PPDB dataset are able to address this lim-itation. Especially, the word/phrase-level pairwisetext classification task has an positive impact onphrase embeddings. Furthermore, when trained onthe paraphrase identification task, BURT is ableto produce word embeddings that are almost asgood as pre-trained word vectors. By combin-ing the characteristics of different training objec-tives, our model have an advantage in encoding

sentences, meanwhile achieving considerable im-provement in phrase-level and word-level tasks.

5.2 Pooling and Concatenation

In this subsection, we apply different poolingstrategies, including mean-pooling, max-poolingand the [CLS] token, and different feature con-catenation methods to BURT. When investigat-ing the former, we use [u; v; |u − v|] as the in-put feature for classification, and as for the lat-ter, we choose mean-pooling as the default strat-egy. Results are shown in the second and thirdblocks in Table 4. In accordance with Reimersand Gurevych (2019), we find averaging BURT to-ken embeddings outperforms other pooling meth-ods. Besides, Hadamard product u ∗ v is not help-ful in our experiment. Generally, BURT is moresensitive to concatenation methods while poolingstrategies have a minor influence. For a compre-hensive consideration, mean-pooling is preferred,and the concatenation of two vectors along withtheir absolute difference is more suitable in our ex-periments than other combinations.

5.3 Negative Sampling

In the paraphrase identification task, we ran-domly select k negative samples for each pair toforce the model to identify paraphrases from non-paraphrases. Evidence has shown that the value ofk in negative sampling has an impact on phraseand word embeddings (Mikolov et al., 2013b).When training word embeddings using negativesampling, setting k in the range of 5-20 is recom-mended for small training datasets, while for largedatasets the k can be as small as 2-5. In this abla-tion experiment, we explore the optimal value ofk for our paraphrase identification task. Since thePPDB dataset is extremely large, with more thanone million positive pairs, we perform four experi-ments, in which we only change the value of k andother model settings are maintained the same. Thelast block in Table 4 illustrates results using val-ues of 1, 3, 5 and 7, from which we can concludethat k = 3 is the best choice. Keeping increasingthe value of k has no positive effect on phrase andword embeddings and even decreases the perfor-mance in sentence tasks.

6 Conclusion

In this work, we propose to learn a universal en-coder that maps sequences of different linguis-

tic granularities into the same vector space wheresimilar sequences have similar representations.For this purpose, we present BURT (BERT in-spired Universal Representation from Twin Struc-ture), which is trained with three objectives onthe NLI and PPDB datasets through a Siamesenetwork. BURT is evaluated on a wide rangeof similarity tasks with regard to multiple levelsof linguistic units (sentences, phrases and words).Overall, our proposed BURT outperforms all thebaseline models on sentence and phrase level eval-uations, and generates high-quality word vectorsthat are almost as good as pre-trained word em-beddings.

References

Eneko Agirre, Enrique Alfonseca, Keith Hall, JanaKravalova, Marius Paundefinedca, and AitorSoroa. 2009. A study on similarity and related-ness using distributional and wordnet-based ap-proaches. In Proceedings of Human LanguageTechnologies: The 2009 Annual Conference ofthe North American Chapter of the Associationfor Computational Linguistics, NAACL âAZ09,page 19âAS27, USA. Association for Computa-tional Linguistics.

Eneko Agirre, Carmen Banea, Claire Cardie,Daniel Cer, Mona Diab, Aitor Gonzalez-Agirre,Weiwei Guo, Iñigo Lopez-Gazpio, MontseMaritxalar, Rada Mihalcea, German Rigau, Lar-raitz Uria, and Janyce Wiebe. 2015. SemEval-2015 task 2: Semantic textual similarity, En-glish, Spanish and pilot on interpretability. InProceedings of the 9th International Workshopon Semantic Evaluation (SemEval 2015), pages252–263, Denver, Colorado. Association forComputational Linguistics.

Eneko Agirre, Carmen Banea, Claire Cardie,Daniel Cer, Mona Diab, Aitor Gonzalez-Agirre,Weiwei Guo, Rada Mihalcea, German Rigau,and Janyce Wiebe. 2014. Semeval-2014 task10: Multilingual semantic textual similarity. InProceedings of the 8th international workshopon semantic evaluation (SemEval 2014), pages81–91.

Eneko Agirre, Carmen Banea, Daniel Cer, MonaDiab, Aitor Gonzalez-Agirre, Rada Mihal-cea, German Rigau, and Janyce Wiebe. 2016.

SemEval-2016 task 1: Semantic textual similar-ity, monolingual and cross-lingual evaluation.In Proceedings of the 10th International Work-shop on Semantic Evaluation (SemEval-2016),pages 497–511, San Diego, California. Associ-ation for Computational Linguistics.

Eneko Agirre, Daniel Cer, Mona Diab, and AitorGonzalez-Agirre. 2012. SemEval-2012 task 6:A pilot on semantic textual similarity. In *SEM2012: The First Joint Conference on Lexicaland Computational Semantics – Volume 1: Pro-ceedings of the main conference and the sharedtask, and Volume 2: Proceedings of the SixthInternational Workshop on Semantic Evalua-tion (SemEval 2012), pages 385–393, Mon-tréal, Canada. Association for ComputationalLinguistics.

Eneko Agirre, Daniel Cer, Mona Diab, AitorGonzalez-Agirre, and Weiwei Guo. 2013.*SEM 2013 shared task: Semantic textual sim-ilarity. In Second Joint Conference on Lexicaland Computational Semantics (*SEM), Volume1: Proceedings of the Main Conference and theShared Task: Semantic Textual Similarity, pages32–43, Atlanta, Georgia, USA. Association forComputational Linguistics.

Samuel R. Bowman, Gabor Angeli, ChristopherPotts, and Christopher D. Manning. 2015. Alarge annotated corpus for learning natural lan-guage inference. In Proceedings of the 2015Conference on Empirical Methods in NaturalLanguage Processing, pages 632–642, Lisbon,Portugal. Association for Computational Lin-guistics.

Elia Bruni, Gemma Boleda, Marco Baroni, andNam-Khanh Tran. 2012. Distributional seman-tics in technicolor. In Proceedings of the 50thAnnual Meeting of the Association for Compu-tational Linguistics (Volume 1: Long Papers),pages 136–145, Jeju Island, Korea. Associationfor Computational Linguistics.

Daniel Cer, Mona Diab, Eneko Agirre, IñigoLopez-Gazpio, and Lucia Specia. 2017.SemEval-2017 task 1: Semantic textual sim-ilarity multilingual and crosslingual focusedevaluation. In Proceedings of the 11th Inter-national Workshop on Semantic Evaluation(SemEval-2017), pages 1–14, Vancouver,

Canada. Association for Computational Lin-guistics.

Daniel Cer, Yinfei Yang, Sheng-yi Kong, NanHua, Nicole Limtiaco, Rhomni St. John, NoahConstant, Mario Guajardo-Cespedes, SteveYuan, Chris Tar, Brian Strope, and RayKurzweil. 2018. Universal sentence encoderfor English. In Proceedings of the 2018Conference on Empirical Methods in NaturalLanguage Processing: System Demonstrations,pages 169–174, Brussels, Belgium. Associationfor Computational Linguistics.

Alexis Conneau and Douwe Kiela. 2018. Sen-tEval: An evaluation toolkit for universalsentence representations. In Proceedingsof the Eleventh International Conference onLanguage Resources and Evaluation (LREC2018), Miyazaki, Japan. European LanguageResources Association (ELRA).

Alexis Conneau, Douwe Kiela, Holger Schwenk,Loïc Barrault, and Antoine Bordes. 2017. Su-pervised learning of universal sentence repre-sentations from natural language inference data.In Proceedings of the 2017 Conference on Em-pirical Methods in Natural Language Process-ing, pages 670–680, Copenhagen, Denmark.Association for Computational Linguistics.

Zihang Dai, Zhilin Yang, Yiming Yang, JaimeCarbonell, Quoc Le, and Ruslan Salakhutdi-nov. 2019. Transformer-XL: Attentive lan-guage models beyond a fixed-length context.In Proceedings of the 57th Annual Meeting ofthe Association for Computational Linguistics,pages 2978–2988, Florence, Italy. Associationfor Computational Linguistics.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, andKristina Toutanova. 2019. BERT: Pre-trainingof deep bidirectional transformers for languageunderstanding. In Proceedings of the 2019Conference of the North American Chapterof the Association for Computational Linguis-tics: Human Language Technologies, Volume1 (Long and Short Papers), pages 4171–4186,Minneapolis, Minnesota. Association for Com-putational Linguistics.

Manaal Faruqui, Yulia Tsvetkov, Pushpendre Ras-togi, and Chris Dyer. 2016. Problems with eval-uation of word embeddings using word similar-

ity tasks. In Proceedings of the 1st Workshopon Evaluating Vector-Space Representations forNLP, pages 30–35, Berlin, Germany. Associa-tion for Computational Linguistics.

Lev Finkelstein, Evgeniy Gabrilovich, Yossi Ma-tias, Ehud Rivlin, Zach Solan, Gadi Wolfman,and Eytan Ruppin. 2001. Placing search in con-text: The concept revisited. In Proceedingsof the 10th international conference on WorldWide Web, pages 406–414.

Juri Ganitkevitch, Benjamin Van Durme, andChris Callison-Burch. 2013. PPDB: The para-phrase database. In Proceedings of the 2013Conference of the North American Chapter ofthe Association for Computational Linguistics:Human Language Technologies, pages 758–764, Atlanta, Georgia. Association for Compu-tational Linguistics.

Felix Hill, Roi Reichart, and Anna Korhonen.2015. SimLex-999: Evaluating semantic mod-els with (genuine) similarity estimation. Com-putational Linguistics, 41(4):665–695.

Eric Huang, Richard Socher, Christopher Man-ning, and Andrew Ng. 2012. Improving wordrepresentations via global context and multipleword prototypes. In Proceedings of the 50thAnnual Meeting of the Association for Compu-tational Linguistics (Volume 1: Long Papers),pages 873–882, Jeju Island, Korea. Associationfor Computational Linguistics.

Mohit Iyyer, Varun Manjunatha, Jordan L. Boyd-Graber, and Hal DaumÃl’ III. 2015. Deep un-ordered composition rivals syntactic methodsfor text classification. In ACL (1), pages 1681–1691. The Association for Computer Linguis-tics.

Armand Joulin, Edouard Grave, Piotr Bojanowski,and Tomas Mikolov. 2016. Bag of tricksfor efficient text classification. arXiv preprintarXiv:1607.01759.

Ryan Kiros, Yukun Zhu, Ruslan R Salakhutdinov,Richard Zemel, Raquel Urtasun, Antonio Tor-ralba, and Sanja Fidler. 2015. Skip-thought vec-tors. In Advances in neural information pro-cessing systems, pages 3294–3302.

Ioannis Korkontzelos, Torsten Zesch, Fabio Mas-simo Zanzotto, and Chris Biemann. 2013.

SemEval-2013 task 5: Evaluating phrasal se-mantics. In Second Joint Conference on Lexi-cal and Computational Semantics (*SEM), Vol-ume 2: Proceedings of the Seventh Interna-tional Workshop on Semantic Evaluation (Se-mEval 2013), pages 39–47, Atlanta, Georgia,USA. Association for Computational Linguis-tics.

Zhenzhong Lan, Mingda Chen, Sebastian Good-man, Kevin Gimpel, Piyush Sharma, and RaduSoricut. 2019. Albert: A lite bert for self-supervised learning of language representa-tions.

Xiaodong Liu, Pengcheng He, Weizhu Chen, andJianfeng Gao. 2019. Multi-task deep neuralnetworks for natural language understanding.In Proceedings of the 57th Annual Meeting ofthe Association for Computational Linguistics,pages 4487–4496, Florence, Italy. Associationfor Computational Linguistics.

Lajanugen Logeswaran and Honglak Lee. 2018.An efficient framework for learning sentencerepresentations. In International Conference onLearning Representations (ICLR).

Marco Marelli, Stefano Menini, Marco Ba-roni, Luisa Bentivogli, Raffaella Bernardi, andRoberto Zamparelli. 2014. A SICK cure for theevaluation of compositional distributional se-mantic models. In Proceedings of the Ninth In-ternational Conference on Language Resourcesand Evaluation (LREC’14), pages 216–223,Reykjavik, Iceland. European Language Re-sources Association (ELRA).

Tomas Mikolov, Kai Chen, Greg Corrado, and Jef-frey Dean. 2013a. Efficient estimation of wordrepresentations in vector space. arXiv preprintarXiv:1301.3781.

Tomas Mikolov, Ilya Sutskever, Kai Chen, GregCorrado, and Jeffrey Dean. 2013b. Distributedrepresentations of words and phrases and theircompositionality.

Jeffrey Pennington, Richard Socher, and Christo-pher Manning. 2014. Glove: Global vectorsfor word representation. In Proceedings ofthe 2014 Conference on Empirical Methods inNatural Language Processing (EMNLP), pages1532–1543, Doha, Qatar. Association for Com-putational Linguistics.

Matthew E Peters, Mark Neumann, Mohit Iyyer,Matt Gardner, Christopher Clark, Kenton Lee,and Luke Zettlemoyer. 2018. Deep contextu-alized word representations. In Proceedings ofNAACL-HLT, pages 2227–2237.

Alec Radford, Karthik Narasimhan, Tim Sal-imans, and Ilya Sutskever. 2018. Im-proving language understanding by gen-erative pre-training. URL https://s3-us-west-2.amazonaws.com/openai-assets/researchcovers/languageunsupervised/languageunderstanding paper. pdf.

Alec Radford, Jeffrey Wu, Rewon Child, DavidLuan, Dario Amodei, and Ilya Sutskever. 2019.Language models are unsupervised multitasklearners. OpenAI Blog, 1(8).

Nils Reimers and Iryna Gurevych. 2019.Sentence-BERT: Sentence embeddings us-ing Siamese BERT-networks. In Proceedingsof the 2019 Conference on Empirical Methodsin Natural Language Processing and the 9thInternational Joint Conference on Natural Lan-guage Processing (EMNLP-IJCNLP), pages3982–3992, Hong Kong, China. Associationfor Computational Linguistics.

Sandeep Subramanian, Adam Trischler, YoshuaBengio, and Christopher J Pal. 2018. Learninggeneral purpose distributed sentence representa-tions via large scale multi-task learning. In In-ternational Conference on Learning Represen-tations.

Ashish Vaswani, Noam Shazeer, Niki Parmar,Jakob Uszkoreit, Llion Jones, Aidan N Gomez,Łukasz Kaiser, and Illia Polosukhin. 2017. At-tention is all you need. In Advances in neuralinformation processing systems, pages 5998–6008.

Adina Williams, Nikita Nangia, and Samuel Bow-man. 2018. A broad-coverage challenge corpusfor sentence understanding through inference.In Proceedings of the 2018 Conference of theNorth American Chapter of the Association forComputational Linguistics: Human LanguageTechnologies, Volume 1 (Long Papers), pages1112–1122, New Orleans, Louisiana. Associa-tion for Computational Linguistics.

Zhilin Yang, Zihang Dai, Yiming Yang, JaimeCarbonell, Ruslan Salakhutdinov, and Quoc V

Le. 2019. XLNet: Generalized autoregressivepretraining for language understanding. arXivpreprint arXiv:1906.08237.

Mo Yu and Mark Dredze. 2015. Learning compo-sition models for phrase embeddings. Transac-tions of the Association for Computational Lin-guistics, 3:227âAS 242.

Zhihao Zhou, Lifu Huang, and Heng Ji. 2017.Learning phrase embeddings from paraphraseswith GRUs. In Proceedings of the First Work-shop on Curation and Applications of Paralleland Comparable Corpora, pages 16–23, Taipei,Taiwan. Asian Federation of Natural LanguageProcessing.