B MANY-CORE P -DRAM NETWORKS M CMOS S P · Benjamin Moss Charles W. Holzwarth ... A key feature of...

Transcript of B MANY-CORE P -DRAM NETWORKS M CMOS S P · Benjamin Moss Charles W. Holzwarth ... A key feature of...

.........................................................................................................................................................................................................................

BUILDING MANY-COREPROCESSOR-TO-DRAM NETWORKS

WITH MONOLITHIC CMOSSILICON PHOTONICS

.........................................................................................................................................................................................................................

SILICON PHOTONICS IS A PROMISING TECHNOLOGY FOR ADDRESSING MEMORY

BANDWIDTH LIMITATIONS IN FUTURE MANY-CORE PROCESSORS. THIS ARTICLE FIRST

INTRODUCES A NEW MONOLITHIC SILICON-PHOTONIC TECHNOLOGY, WHICH USES A

STANDARD BULK CMOS PROCESS TO REDUCE COSTS AND IMPROVE ENERGY EFFICIENCY,

AND THEN EXPLORES THE LOGICAL AND PHYSICAL IMPLICATIONS OF LEVERAGING THIS

TECHNOLOGY IN PROCESSOR-TO-MEMORY NETWORKS.

......Modern embedded, server, graph-ics, and network processors already includetens to hundreds of cores on a single die,and this number will continue to increaseover the next decade. Correspondingincreases in main memory bandwidth arealso required, however, if the greater corecount is to result in improved applicationperformance. Projected enhancements ofexisting electrical DRAM interfaces are notexpected to supply sufficient bandwidthwith reasonable power consumption andpackaging cost. To meet this many-corememory bandwidth challenge, we are com-bining monolithic CMOS silicon photonicswith an optimized processor-memory net-work architecture.

Existing approaches to on-chip photonicinterconnect have required extensive processcustomizations, some of which are problematic

for integration with many-core processorsand memories.1,2 In contrast, we are develop-ing new photonic devices that use the existingmaterial layers and structures in a standardbulk CMOS flow. In addition to preservingthe massive investment in standard fabricationtechnology, monolithic integration reduces thearea and energy costs of interfacing electricaland optical components. Our technologysupports dense wavelength-division multi-plexing (DWDM) with dozens of wave-lengths packed onto the same waveguide tofurther improve area and energy efficiency.

The challenge when designing a photonicchip-level network is to turn the raw link-level benefits of energy-efficient DWDMphotonics into system-level performanceimprovements. Previous approaches haveused photonics for intrachip circuit-switchednetworks with very large messages,3 intrachip

Christopher BattenAjay Joshi

Jason OrcuttAnatol Khilo

Benjamin MossCharles W. Holzwarth

Milos A. PopovicHanqing Li

Henry I. SmithJudy L. Hoyt

Franz X. KartnerRajeev J. Ram

Vladimir StojanovicMassachusetts Institute

of Technology

Krste AsanovicUniversity of California,

Berkeley

..............................................................

8 Published by the IEEE Computer Society 0272-1732/09/$26.00 !c 2009 IEEE

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

crossbar networks for processor-to-L2 cachebank traffic,4,5 and general-purpose interchiplinks.6 Since main-memory bandwidth willbe a key bottleneck in future many-core sys-tems, this work considers leveraging pho-tonics in processor-to-DRAM networks.We propose using a local meshes to globalswitches (LMGS) topology that connectssmall meshes of cores on-chip to globalswitches located off-chip near the DRAMmodules. Our optoelectrical approach imple-ments both the local meshes and globalswitches electrically and uses seamlesson-chip/off-chip photonic links to imple-ment the global point-to-point channels con-necting every group to every DRAMmodule.A key feature of our architecture is that thephotonic links are not only used for interchipcommunication, but also to provide cross-chip transport to off-load intrachip globalelectrical wiring.

A given logical topology can have manydifferent physical implementations, eachwith different electrical, thermal, and opticalpower characteristics. In this work, we de-scribe a new ring-filter matrix template as away to efficiently implement our optoelectri-cal networks. We explore how the quality ofdifferent photonic devices impacts the areaoverhead and optical power of this template.As an example of our vertically integratedapproach, we identified waveguide crossingsas a critical component in the ring-filter ma-trix template, and this observation served asmotivation for the photonic device research-ers to investigate optimized waveguide cross-ing structures.

We have applied our approach to a targetsystem with 256 cores and 16 independentDRAM modules. Our simulation resultsshow that silicon photonics can improve

throughput by almost an order of magnitudecompared to pure electrical systems undersimilar power constraints. Our work suggeststhat the LMGS topology and correspondingring-filter matrix layout are promisingapproaches for turning the link-level advan-tages of photonics into system-level benefits.

Photonic technologyAlthough researchers have proposed many

types of devices for chip-scale optical net-works, the most promising approach usesan external laser source and small energy-efficient ring resonators for modulation andfiltering. Figure 1 illustrates the use of suchdevices to implement a simple wavelength-division multiplexed link. An optical fibercarries light from an off-chip laser source tochip A, where it is coupled into an on-chipwaveguide. The waveguide routes the lightpast a series of transmitters. Each transmitteruses a resonant ring modulator tuned to adifferent wavelength to modulate the inten-sity of the light passing by at that wave-length. Modulated light continues throughthe waveguide, exits chip A into anotherfiber, and is then coupled into a waveguideon chip B. This waveguide routes the lightby two receivers that use a tuned resonantring filter to ‘‘drop’’ the corresponding wave-length from the waveguide into a local photo-detector. The photodetector turns absorbedlight into current, which is amplified by theelectrical portion of the receiver. Althoughnot shown in Figure 1, we can simultaneouslysend information in the reverse direction byusing another external laser source producingdifferent wavelengths coupled into the samewaveguide on chip B and received by chip A.

We have developed a novel approach thatimplements these devices in a commercial

Ring filterwith !1 resonance

Receivers

Singlemodefiber

Waveguide

Ring modulatorwith !1 resonance

PhotodetectorChip BChip A Transmitters

Coupler

Externallaser

source

11 22

Figure 1. Two point-to-point photonic links implemented with wavelength division multiplexing.

....................................................................

JULY/AUGUST 2009 9

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

sub-100-nm bulk CMOS process.7,8 Thisallows photonic waveguides, ring filters,transmitters, and receivers to be monolithi-cally integrated with hundreds of cores onthe same die, which reduces cost andincreases energy efficiency. We use our expe-riences with a 65-nm test chip and our feasi-bility studies for a prototype 32-nm processto extrapolate photonic device parametersfor our target 22-nm technology node.

Previously, researchers implemented pho-tonic waveguides using the silicon body as acore in a silicon-on-insulator (SOI) processwith custom thick buried oxide (BOX) ascladding,2 or by depositing additional mate-rial layers (such as silicon nitride) on top ofthe interconnect stack.1 To avoid processchanges, we designed our photonic wave-guides in the polysilicon (poly-Si) layer ontop of the shallow trench isolation in a stan-dard CMOS bulk process (see Figure 2a).Unfortunately, the shallow-trench oxide istoo thin to form an effective cladding andto shield the core from optical mode leakagelosses into the silicon substrate. Hence, wedeveloped a novel self-aligned postprocessingprocedure to etch away the silicon substrateunderneath the waveguide forming an airgap.7 When the air gap is more than 5 mmdeep, it provides an effective optical cladding.

For this work, we assume up to eight wave-guides can use the same air gap with a 4-mmwaveguide pitch.

We use poly-Si resonant ring filters formodulating and filtering different wave-lengths (see Figure 2b). The ring radiusdetermines the resonant frequency, and wecascade rings to increase the filter’s selectiv-ity. The ring’s resonance is also sensitive totemperature and requires some form of activethermal tuning. Fortunately, the etched airgap under the ring provides isolation fromthe thermally conductive substrate, and weadd in-plane poly-Si heaters inside mostrings to improve heating efficiency. Thermalsimulations suggest that we will require 40 to100 mW of static power for each double-ringfilter assuming a temperature range of 20 K.We estimate that we can pack up to 64 wave-lengths per waveguide at a 60-GHz spacingand that interleaving wavelengths travelingin opposite directions (which helps mitigateinterference) could provide up to 128 wave-lengths per waveguide.

Our photonic transmitters are similar topast approaches that use minority charge in-jection to change the resonant frequency ofring modulators.9 Our racetrack modulatoris implemented by doping the edges of apoly-Si ring, creating a lateral PiN diode

Etch hole

Air gap

1 µm

Polysiliconwaveguide

(a) (c)

(b)

10 µm

Through

DropVertical coupler

In

Through

DropIn

Figure 2. Photonic devices implemented in a standard bulk CMOS process. Waveguides are implemented in poly-Si

with an etched air gap to provide optical cladding (a).7 Cascaded rings are used to filter the resonant wavelength to the

‘‘drop’’ port while all other wavelengths continue to the ‘‘through’’ port (b). Ring modulators use charge injection to

modulate a single wavelength: without charge injection the resonant wavelength is filtered to the ‘‘drop’’ port while all

other wavelengths continue to the ‘‘through’’ port; with charge injection, the resonant frequency changes such that no

wavelengths are filtered to the ‘‘drop’’ port (c).

..................................................................

10 IEEE MICRO

...............................................................................................................................................................................................HOT INTERCONNECTS

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

with undoped poly-Si as the intrinsic region(see Figure 2c). Due to their smaller size(3 to 10 mm radius), ring modulators canhave lower power consumption than otherapproaches (such as Mach-Zehnder modula-tors). Our device simulations indicate thatwith poly-Si carrier lifetimes of 0.1 to 1 ns,it is possible to achieve sub-100 f J per bit(f J/b) for random data at up to 10 gigabitsper second (Gbps) speeds when usingadvanced driver circuits. To avoid robustnessand power issues from distributing a clock tohundreds of transmitters, we propose imple-menting an optical clock delivery schemeusing a simple single-diode receiver withduty-cycle correction. With a 4-mm wave-guide pitch and 64 to 128 wavelengths perwaveguide, we can achieve a data rate densityof 160 to 320 Gbps/mm, which is approxi-mately two orders of magnitude greaterthan the data rate density of optimally re-peated global on-chip electrical interconnect.10

Photonic receivers often use high-efficiency epitaxial Germanium (Ge) photo-detectors,2 but the lack of pure Ge presentsa challenge for mainstream bulk CMOS pro-cesses. We use the embedded SiGe (20 to30 percent Ge) in the p-MOSFET transistorsource and drain regions to create a photode-tector operating at approximately 1,200 nm.Simulation results show good capacitance(less than 1 fF/mm) and dark current (lessthan 10 fA/mm) at near-zero bias conditions,but the structure’s sensitivity must beimproved to meet our system specifications.In advanced process nodes, the responsivityand speed should improve through bettercoupling between the waveguide and thephotodetector in scaled device dimensions,and an increased percentage of Ge for devicestrain. Our photonic receiver circuits woulduse the same optical clocking scheme as ourtransmitters, and we estimate that the entirereceiver will consume less than 50 f J/b forrandom data.

Based on our device simulations andexperiments, we estimate the total electricaland thermal on-chip energy for a complete10-Gbps photonic link (including a double-ring modulator and filter at the receiver) tobe 100 to 250 fJ/b for random data. In addi-tion to the on-chip electrical power, the ex-ternal laser’s electrical power consumption

must also remain in a reasonable range.The light generated by the laser experiencesoptical losses in each photonic device,which reduces the amount of optical powerreaching the photodetector. Different net-work topologies and their correspondingphysical layout result in different opticallosses and thus require varying amounts ofoptical laser power. With current laser effi-ciencies, generating optical laser powerrequires three to four times greater electricallaser power. In addition to the photonic de-vice losses, there is also a limit to the totalamount of optical power that can be trans-mitted through a waveguide without largenonlinear losses. In this work, we assume amaximum of 50 mW per waveguide at 1 dBloss. Network architectures with high opticallosses per wavelength will need to distributethose wavelengths across many waveguides(increasing the overall area) to stay withinthis nonlinearity limit.

Many-core processor-to-DRAMnetwork topologies

Monolithic silicon photonics is a promis-ing technology for addressing the many-corememory bandwidth challenge. We present ahybrid optoelectrical approach that targetsthe advantages of each medium: photonicinterconnect for energy-efficient global com-munication and electrical interconnect forfast switching, efficient buffering, and localcommunication.

Our target system for this work is a 256-core processor running at 2.5 GHz with tensof DRAM modules. Although such a systemwill be feasible on a 400-mm2 die in the 22-nmnode, it will likely be power constrained asopposed to area constrained. The systemwill have abundant on-chip wiring resourcesand, to some extent, off-chip I/O pins, but itwill not be possible to drive them all withoutexceeding the chip’s thermal and power de-livery envelope. To compare across a rangeof network architectures, we assume a com-bined power budget for the on-chip networkand off-chip I/O, and we individually opti-mize each architecture’s distribution of powerbetween these two components.

To help navigate the large design space,we developed analytical models that connectcomponent energy models with performance

....................................................................

JULY/AUGUST 2009 11

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

metrics such as ideal throughput and zero-load latency. The ideal throughput is themaximum aggregate bandwidth that allcores can sustain under a uniform randomtraffic pattern with ideal flow-control andperfectly balanced routing. The zero-load la-tency is the average latency (including bothhop latency and serialization latency) of amemory request and corresponding responseunder a uniform random traffic pattern withno contention in the network. Analytical en-ergy models for electrical and photonicimplementations of on-chip interconnectand off-chip I/O are based on our insights

in the last section, previous work on optimalon-chip electrical interconnect,10 and a circuit-level analysis for our 22-nm technology.

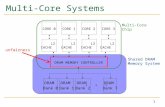

Mesh topologyFrom the wide variety of possible topolo-

gies for processor-memory networks, weselected the mesh topology in Figures 3aand 3b for our baseline network because ofits simplicity, use in practice, and reasonableefficiency. We also examined concentratedmesh topologies with four cores per meshrouter.11 Two logical networks separaterequests from responses to avoid protocol

C C C C

Request mesh network

Response mesh network

C C ••• C

C

DM DM DM DM

C C C C C ••• C

(a)

C C C C

C C C C

C C C C

C C C CDM

DM

DM

DM

(b)

C: CoreCx: Core in group xDM: DRAM moduleS: Global switch for DRAM module : Mesh router : Mesh router + access point

C0 C0 C0 C0

C0 C0 C0 C0

C1

DM

DM

SS

SS

DM

DM

C1 C1 C1

C1 C1 C1 C1

(d)

C0 C0 C1 C1C1C0

DM DM DM DMS S S S

C0 C0 C1 C1C1C0

Group 0 requestlocal mesh

Group 1 requestlocal mesh

Group 0 responselocal mesh

Group 1 responselocal mesh

(c)

••• •••

••• •••

Figure 3. Logical and physical views of mesh and local meshes to global switches (LMGS)

topologies: mesh logical view (a), mesh physical view (b), LMGS logical view (c), and

LMGS physical view (d).

..................................................................

12 IEEE MICRO

...............................................................................................................................................................................................HOT INTERCONNECTS

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

deadlock, and we implement each logicalnetwork with a separate physical network.Some of the mesh routers include an accesspoint, which is the interface between theon-chip network and the channel that con-nects to a DRAM module. Cores sendrequests through the request mesh to the ap-propriate access point, which then forwardsrequests to the DRAM module. Responsesare sent back to the access point, throughthe response mesh, and eventually to theoriginal core. The DRAM address space iscache-line interleaved across access points tobalance the load and give good average-caseperformance. Our model is largely indepen-dent of whether the DRAM memory con-troller is located next to the access point, atthe edge of the chip, or off-chip near theDRAM module.

Figure 4 shows what fraction of the totalnetwork power is consumed in the on-chipmesh network as a function of the total net-work’s ideal throughput. To derive this plot,we first choose a bitwidth for the channel be-tween routers in the mesh, then we deter-mine the mesh’s ideal throughput. Finally,we assume that the off-chip I/O must havean equal ideal throughput as the on-chipmesh to balance the on-chip and off-chipbandwidths. We use our analytical modelsto determine the power required by the on-chip mesh and off-chip I/O under uniformrandom traffic with random data. We as-sume that an electrical off-chip I/O link inthe 22-nm node will require approximately5 pJ/b at 10 Gbps, while our photonic tech-nology can decrease this to 250 fJ/b. Forcomparison, our analytical models predictthat the mesh router-to-router link energywill be approximately 50 fJ/b. Figure 4 alsoshows configurations corresponding to 10-,20-, and 30-W power constraints on thesum of the on-chip network power and off-chip I/O power.

Focusing on the simple mesh line in Fig-ure 4a, we can see that with electrical off-chip I/O approximately 25 percent of thetotal power is consumed in the on-chipmesh network. The ideal throughput undera 20-W power constraint is approximately1 kilobit per cycle (Kbits/cycle). Energy-efficient photonic off-chip I/O enablesincreased off-chip bandwidth, but photonics

also leaves more energy to improve the on-chipelectrical network’s throughput. Figure 4bshows that photonics can theoretically in-crease the ideal throughput under a 20-Wpower constraint by a factor of 3.5 toabout 3.5 Kbits/cycle. With a simple meshand photonic off-chip I/O, almost all thepower is consumed in the on-chip network.

For all the configurations we discuss here,we assume a constant amount of on-chipnetwork buffering as measured by the totalnumber of bits. For example, configurationswith wider physical channels have fewerentries per queue. Figure 4 shows that forvery small throughputs the power overheaddue to a constant amount of buffering startsto outweigh the power savings from nar-rower mesh channels, so the mesh powerstarts to consume a larger fraction of thetotal power.

Figure 5 plots the ideal throughput andzero-load latency as a function of the energyefficiency of the off-chip I/O under a 20-Wpower constraint. Focusing on the simplemesh line, we can see that decreasing theoff-chip I/O link energy increases the idealthroughput with a slight reduction in thezero-load latency. Although using photonicsto implement energy-efficient off-chip I/Ochannels improves performance, messagesstill need to use the on-chip electrical net-work to reach the appropriate access point,and this on-chip global communication is asignificant bottleneck.

0 5 10 15 200 0.5 1.0Ideal throughput (Kbits/cycle)

(a) (b)Ideal throughput (Kbits/cycle)

1.5 2.0

1.0

Mes

h po

wer

/tota

l pow

er

0.8

0.6

0.4

0.2

0.0

Simple meshLMGS (4 groups)LMGS (16 groups)

10 W 20 W 30 W10 W 20 W 30 W

Figure 4. Fraction of total network power consumed in mesh versus ideal

throughput: electrical assuming 5 pJ/b (a) and photonic assuming 250 fJ/b

(b). Markers show configurations corresponding to 10-, 20-, and 30-W

power constraints.

....................................................................

JULY/AUGUST 2009 13

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

LMGS topologyWe can further improve system throughput

by moving this global traffic from energy-inefficient electrical mesh channels ontoenergy-efficient optical channels. Figures 3cand 3d illustrate a LMGS topology that par-titions the mesh into smaller groups of coresand then connects these groups to mainmemory with switches located off-chipnear the DRAM modules. Figures 3c and3d show 16 cores and two groups. Everygroup of cores has an independent accesspoint to each DRAM module so each mes-sage need only traverse its local group sub-mesh to reach an appropriate access point.Messages then quickly move across theglobal point-to-point channels and arbitratewith messages from other groups at aDRAM module switch before actually

accessing the DRAM module. As Figure 3dshows, each global point-to-point channeluses a combination of on-chip global linksand off-chip I/O links. The global switchesare located off-chip near the DRAM mod-ule, which helps reduce the processorchip’s power density and enables multi-socket configurations to easily share thesame DRAM modules.

Figures 4a and 4b show the theoreticalperformance of the LMGS topology com-pared to a simple mesh. For both electricaland photonic off-chip I/O, LMGS topolo-gies reduce the fraction of the total powerconsumed in the on-chip mesh since globaltraffic is effectively being moved from themesh network onto the on-chip global andoff-chip I/O channels. However, with elec-trical technology, most of the power is al-ready spent in the off-chip I/O so groupingdoesn’t significantly improve the idealthroughput. With photonic technology,most of the power is consumed in the on-chip mesh network, so offloading global traf-fic onto energy-efficient photonic channelscan significantly improve performance.This assumes that we use photonics forboth the off-chip I/O and on-chip globalchannels so that we can create seamless on-chip/off-chip photonic channels from eachlocal mesh to each global switch. Essentially,we’re exploiting the fact that once we pay totransmit a bit between chips optically, itdoesn’t cost any extra transceiver energy(although it might increase optical laserpower) to create such a seamless on-chip/off-chip link. Under a 20-W power con-straint, the ideal throughput improves by afactor of 2.5 to 3 compared to a simplemesh with photonic off-chip I/O. This ulti-mately suggests almost an order of magni-tude improvement compared to usingelectrical off-chip I/O.

Figure 5b shows that the LMGS topologycan also reduce hop latency since a messageneeds only a few hops in the group submeshbefore using the low-latency global point-to-point channels. Unfortunately, the powerconstraint means that for some configura-tions (such as 16 groups with electrical off-chip I/O), the global channels become narrow,significantly increasing the serialization latencyand the overall zero-load latency.

0

(a)

(b)

5

10

15

Idea

l thr

ough

put (

kbits

/cyc

le)

0 1 2 3 4 5

100

150

200

Photonicrange

Energy of global on-chip + off-chip I/O link (pJ/b)

Zero

-load

late

ncy

(cyc

les)

Simple meshLMGS (4 groups)LMGS (16 groups)

Electricalrange

Figure 5. Ideal throughput (a) and zero-load latency (b) under 20-W power

constraint.

..................................................................

14 IEEE MICRO

...............................................................................................................................................................................................HOT INTERCONNECTS

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

Photonic ring-filter matrix implementationWe have developed a new approach based

on a ring-filter matrix for implementing themesh and LMGS topologies. Figure 6 illus-trates the proposed layout for a 16-group,256-core system running at 2.5 GHz with16 independent DRAMmodules. We assumea 400-mm2 die implemented in a 22-nmtechnology. Since each group has one globalchannel to each DRAM module, there are atotal of 256 processor-memory channelswith one photonic access point (PAP) perchannel. An external laser coupled to on-chip optical power waveguides distributesmultiwavelength light to the PAPs locatedacross the chip. PAPs modulate this lightto multiplex the global point-to-point chan-nels onto vertical waveguides that connectto the ring-filter matrix in the middle ofthe chip. The ring-filter matrix aggregates

all the channels destined for the sameDRAM module onto a small number of hor-izontal waveguides. These horizontal wave-guides are then connected to the DRAMmodule switch chip via optical fiber. Theswitch chip converts data on the photonicchannel back into the electrical domain forbuffering and arbitration. Responses uselight traveling in the opposite direction to re-turn along the same optical path. The globalchannels use credit-based flow control (piggy-backed onto response messages) to preventPAPs from overloading the buffering in theDRAM module switches.

For the example in Figure 6, we use our an-alytical model with a 20-W power constraintto help determine an appropriate mesh band-width (64 bits/cycle/channel) and off-chipI/O bandwidth (64 bits/cycle/channel),which gives a total peak bisection bandwidth

00004444

316 corecolumns

Photonicaccesspoint

(16!/dir)

Ring filter(16!/dir)

3337777

33337777

00004444

64 Ring-filter matrix

Horizontalwaveguide

(64!/dir)

64

00004444

00004444

33337777

33

Optical power waveguide

Core and two mesh routers(part of group 3)

Vertical waveguide(64! per direction)

Externallaser

source

DRAMchip

DRAMchip

Meshreq

router

Meshresp

router

Meshreq

router

Meshresp

router

Verticalcouplers

Opticalfiber

ribbon DRAM module 0

PAP

PAP

PAP

PAP

Cor

e

Cor

e

Switc

h ch

ip

Arbiterx16

x16 x16

x16 x16

Demuxx16

337777

8888CCCC

BBBBFFFF

BBBBFFFF

BBBBFFFF

BBBBFFFF

8888CCCC

8888CCCC

8888CCCC

•••

Figure 6. Ring-filter matrix implementation of LMGS topology with 256 cores, 16 groups, and 16 DRAM modules. Each

core is labeled with a hex number indicating its group. For simplicity, the electrical mesh channels are only shown in the

inset, each ring in the main figure represents 16 double rings, and each optical power waveguide represents 16 wave-

guides (one per vertical waveguide). The global request channel that connects group 3 to DRAM module 0 is highlighted.

....................................................................

JULY/AUGUST 2009 15

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

of 16 Kbits/cycle or 40 terabits per second(Tbps) in each direction. Since each ringmodulator operates at 10 Gbps, we need16 ring modulators per PAP and 16 ring fil-ters per connection in the matrix to achieveour target 64 bits/cycle/channel. Since eachwaveguide can support up to 64 ! in one di-rection, we need a total of 64 vertical wave-guides and 64 horizontal waveguides. Dueto the 50-mW nonlinearity waveguidelimit, we need one optical power waveguideper vertical waveguide. We aggregate wave-guides to help amortize the overheads associ-ated with our etched air-gap technique. Toease system integration, we envision using asingle optical ribbon with 64 fibers coupledto the 64 horizontal waveguides. Fibers arethen stripped off in groups of four to connectto each DRAM module switch.

The proposed ring-filter matrix templatecan be used for different numbers of groups,cores, DRAM modules, and target systembandwidths by simply varying the numberof horizontal and vertical waveguides.These different systems will have differentoptical power and area overheads. Figure 7shows the optical laser power as a functionof waveguide loss and waveguide crossingloss for 16-group networks with both less ag-gregate bandwidth (32 bits/cycle global I/Ochannels) and more aggregate bandwidth(128 bits/cycle global I/O channels) than

the system pictured in Figure 6. Higher-quality devices always result in lower totaloptical power. Systems with higher idealthroughput (see Figure 7b) have quadrati-cally more waveguide crossings, makingthem more sensitive to crossing losses. Addi-tionally, certain combinations of waveguideand crossing losses result in large cumulativelosses and require multiple waveguides tostay within the nonlinearity limit. These ad-ditional waveguides further increase the totalnumber of crossings, which in turn continuesto increase the power per wavelength, mean-ing that for some device parameters it isinfeasible to leverage the ring-filter matrixtemplate. This type of analysis can be usedto drive photonic device research, and wehave developed optimized waveguide cross-ings that can potentially reduce the crossingloss to 0.05 dB per crossing.12

We also studied the area overhead of thering-filter matrix template for a range ofwaveguide and crossing losses. We assumedeach waveguide is 0.5 mm wide on a 4-mmpitch, and each air gap requires an additional20 mm for etch holes and alignment margins.We use two cascaded 10-mm diameter ringsfor all modulators and filters. Althoughwaveguides can be routed at minimumpitch, they require additional spacing forthe rings in the PAPs and ring-filter matrix.Our study found that the total chip area

0.05

0.1

02.02.01.51.0

Waveguide loss (dB/cm) Waveguide loss (dB/cm)

(a) (b)

0.50 0 0.5 1.0 1.5

Cro

ssin

g lo

ss (d

B/c

ross

ing)

0.5

0.5 1

1

1

1 2

2

2

2

11

8

3

3

5

25

8

11

14

Infeasibleregion

Infeasibleregion

4

Figure 7. Optical laser power in watts for 32 bits/cycle global I/O channels (a) and 128 bits/

cycle global I/O channels (b), given the following optical loss parameters: 1 dB coupler loss,

0.2 dB splitter loss, 1 dB nonlinearity at 50 mW, 0.01 dB through loss, 1.5 dB drop loss,

0.5 dB modulator insertion loss, 0.1 dB photodetector loss, and !20 dBm receiver sensitivity.

..................................................................

16 IEEE MICRO

...............................................................................................................................................................................................HOT INTERCONNECTS

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

overhead for the photonic components in thesystem shown in Figure 6 ranges from 5 to10 percent depending on the quality of thephotonic components. From these results,we can see that although this template pro-vides a compact and well-structured layout,it includes numerous waveguide crossingsthat must be carefully designed to limittotal optical laser power.

Simulation resultsTo more accurately evaluate the perfor-

mance of the various topologies, we used adetailed cycle-level microarchitectural simu-lator that models pipeline latencies, routercontention, credit-based flow control, and se-rialization overheads. The modeled systemincludes 256 cores and 16 DRAM modulesin a 22-nm technology with two-cyclemesh routers, one-cycle mesh channels,four-cycle global point-to-point channels,and 100-cycle DRAM array access latency.All mesh networks use dimension-orderedrouting and wormhole flow control. We con-strain all configurations to have an equalamount of network buffering, measured intotal number of bits. For this work, we usea synthetic uniform random traffic patternat a configurable injection rate. Due to thecache-line interleaving across access points,we believe this traffic pattern is representativeof many bandwidth-limited applications. Allrequest and response messages are 256 bits,which is a reasonable average assuming aload/store network with 64-bit addressesand 512-bit cache lines. We assume thatthe flow-control digit (flit) size is equal to thephysical channel bitwidth. We use warm-up, measure, and wait phases of several thou-sand cycles each and an infinite source queueto accurately determine the latency at a giveninjection rate. We augment our simulator tocount various events (such as channel utiliza-tion, queue accesses, and arbitration), whichwe then multiply by energy values derivedfrom our analytical models. For our energycalculations, we assume that all flits containrandom data.

Table 1 shows the simulated configurationsand the corresponding mesh and off-chip I/Ochannel bitwidths as derived from the analysispresented earlier in this article with a totalpower budget of 20 W. We also considered

various practical issues when rounding eachchannel bit width to an appropriate multipleof eight. In theory, all configurations shouldbalance the mesh’s throughput with thethroughput of the off-chip I/O so that nei-ther part of the system becomes a bottleneck.In practice, however, it can be difficult toachieve the ideal throughput in mesh topol-ogies due to multihop contention and load-balancing issues. Therefore, we also considerconfigurations that increase the mesh net-work’s overprovisioning factor (OPF) in aneffort to improve the expected achievablethroughput. The OPF is the ratio of theon-chip mesh ideal throughput to the off-chip I/O ideal throughput.

The Eg1x1, Eg4x1, and Eg16x1 configu-rations keep the OPF constant while varyingthe number of groups; Figure 8a shows thesimulation results. The peak throughput forEg1x1 and Eg4x1 are significantly less thanpredicted by the analytical model in Figure 4a.This is due to realistic flow-control androuting and the fact that our analyticalmodel assumes a large number of DRAMmodules (access points distributed through-out the mesh) while our simulated system

Table 1. Simulated configurations.

Name*

Mesh channel width

(bits per cycle)

Global I/O channel

width (bits per cycle)

Eg1x1 16 64

Eg4x1 8 16

Eg16x1 8 8

Eg1x4 64 64

Eg4x2 24 24

OCg1x1 64 256

OCg4x1 48 96

OCg16x1 48 48

OCg1x4 128 128

OCg4x2 80 80

OAg1x1 64 256

OAg4x1 64 128

OAg16x1 64 64

OAg1x4 128 128

OAg4x2 96 96.......................................................................................................* The name of each configuration indicates the technology we used toimplement the off-chip I/O (E " electrical, OC " conservative 250 f J/bphotonic links, OA " aggressive 100 f J/b photonic links), the number ofgroups (g1/g4/g16 " 1/4/16 groups), and the OPF (x1/x2/x4 " OPF of1/2/4).

....................................................................

JULY/AUGUST 2009 17

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

models a more realistic 16 DRAM modules(access points positioned in the middle ofthe mesh), resulting in a less uniform trafficdistribution. The lower saturation pointexplains why Eg1x1 and Eg4x1 consumesignificantly less than 20 W. We investigatedvarious OPF values for all three amounts ofgrouping and found that the Eg1x4 andEg4x2 configurations provide the best trade-off. Eg1x4 and Eg4x2 increase the through-put by three to four times over the balancedconfigurations. Overprovisioning had mini-mal impact on the 16-group configurationsince the local meshes are already small.Overall, Eg4x2 is the best electrical configura-tion. It consumes approximately 20 W nearsaturation.

Figures 8b and 8c show the power andperformance of the photonic networks. Justreplacing the off-chip I/O with photonicsin a simple mesh topology (for example,OCg1x4 and OAg1x4) results in a two-times improvement in throughput. However,the full benefit of photonic interconnect only

becomes apparent when we partition the on-chip mesh network and offload more trafficonto the energy-efficient photonic channels.The OAg16x1 configuration can achieve athroughput of 9 Kbits/cycle (22 Tbps),which is approximately an order of magni-tude improvement over the best electricalconfiguration (Eg4x2) at the same latency.The photonic configurations also provide aslight reduction in the zero-load latency.The best optical configurations consume ap-proximately 16 W near saturation. At verylight loads, the 16-group configurations con-sume more power than the other optical x1configurations. This is because the 16-group configuration has many more pho-tonic channels and thus higher static poweroverheads due to both leakage and thermaltuning power. The overprovisioned photonicconfigurations consume higher power sincethey require much wider mesh channels.

Figure 9 shows the power breakdown forthe Eg4x2, OCg16x1, and OAg16x1 config-urations near saturation. As expected, most

150

200

250

300

350

Ave

rage

late

ncy

(cyc

les)

0

(a) (b) (c)

0.25 0.5 0.75 1 1.255

10

15

20

25

Offered bandwidth (Kbits/cycle)

Tota

l pow

er (W

)

0 2 4 6Offered bandwidth (Kbits/cycle)

0 2 4 6 8 10Offered bandwidth (Kbits/cycle)

Eg1"1Eg4"1

Eg1"4Eg4"2

Eg16"1

OCg1"1OCg4"1

OCg1"4OCg4"2

OCg16"1

OAg1"1OAg4"1

OAg1"4OAg4"2

OAg16"1

Figure 8. Simulated performance and power for the topology configurations in Table 1 assuming electrical interconnect (a),

conservative photonic interconnect (b), and aggressive photonic interconnect (c).

..................................................................

18 IEEE MICRO

...............................................................................................................................................................................................HOT INTERCONNECTS

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

of the power in the electrical configuration isspent on the global channels connecting theaccess points to the DRAM modules. Byimplementing these channels with energy-efficient photonic links, we have a largerportion of our energy budget for higher-bandwidth on-chip mesh networks evenafter including the overhead for thermal tun-ing. The photonic configurations consumealmost 15 W, leaving 5 W for on-chip opti-cal power dissipation as heat. Ultimately,photonics enables almost an order of magni-tude improvement in throughput at similarlatency and power consumption.

Although the results are not shown, wealso investigated a concentrated mesh topol-ogy with one mesh router for every fourcores.11 Concentration decreases the totalnumber of routers (which decreases thehop latency) at the expense of increasedenergy per router. Concentrated mesh con-figurations have similar throughput as theconfigurations in Figure 8a with slightlylower zero-load latencies. Concentrationhad little impact when combined with pho-tonic off-chip I/O.

O ur work at the network architecturelevel has helped identify which

photonic devices are the most critical andhelped establish new target device para-meters. These observations motivate furtherdevice-level research as illustrated by ourwork on optimized waveguide crossings. Wefeel this vertically integrated research ap-proach will be the key to fully realizing thepotential of silicon photonics in futuremany-core processors. MICRO

AcknowledgmentsWe acknowledge chip fabrication support

from Texas Instruments and partial fundingfrom DARPA/MTO award W911NF!06!1!0449. We also thank Yong-Jin Kwon forhis help with network simulations andImran Shamim for router power estimation.

....................................................................References1. T. Barwicz et al., ‘‘Silicon Photonics for

Compact, Energy-efficient Interconnects,’’

J. Optical Networking, vol. 6, no. 1, 2007,

pp. 63-73.

2. C. Gunn, ‘‘CMOS Photonics for High-speed

Interconnects,’’ IEEE Micro, vol. 26, no. 2,

2006, pp. 58-66.

3. A. Shacham et al., ‘‘Photonic NoC for DMA

Communications in Chip Multiprocessors,’’

Proc. Symp. High-Performance Interconnects

(HOTI), IEEE CS Press, 2007, pp. 29-38.

4. N. Kirman et al., ‘‘Leveraging Optical Technol-

ogy in Future Bus-based Chip Multiproces-

sors,’’ Proc. Int’l Symp. Microarchitecture,

IEEE CS Press, 2006, pp. 492-503.

5. D. Vantrease et al., ‘‘Corona: System Impli-

cations of Emerging Nanophotonic Tech-

nology,’’ Proc. Int’l Symp. Computer

Architecture (ISCA), IEEE CS Press, 2008,

pp. 153-164.

6. C. Schow et al., ‘‘A <5mW/Gb/s/link,

16x10Gb/s Bidirectional Single-chip CMOS

Optical Transceiver for Board Level Optical

Interconnects,’’ Proc. Int’l Solid-State Cir-

cuits Conf., 2008, pp. 294-295.

7. C. Holzwarth et al., ‘‘Localized Substrate

Removal Technique Enabling Strong-

confinement Microphotonics in Bulk Si

CMOS Processes,’’ Proc. Conf. Lasers and

Electro-Optics (CLEO), Optical Soc. of

America, 2008.

8. J. Orcutt et al., ‘‘Demonstration of an Elec-

tronic Photonic Integrated Circuit in a Com-

mercial Scaled Bulk CMOS Process,’’ Proc.

Conf. Lasers and Electro-Optics (CLEO), Op-

tical Soc. of America, 2008.

9. M. Lipson, ‘‘Compact Electro-optic Modula-

tors on a Silicon Chip,’’ J. Selected Topics in

20

15

10

5

0

Pow

er (W

)

Thermal tuning

Mesh routers

Mesh channels

Global on-chip +off-chip I/Ochannels

Eg4!2(0.8 Kbits/cycle)

OCg16!1(6 Kbits/cycle)

OAg16!1(9 Kbits/cycle)

Figure 9. Power breakdown near saturation for the best electrical and

optical configurations.

....................................................................

JULY/AUGUST 2009 19

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

Quantum Electronics, vol. 12, no. 6, 2006,

pp. 1520-1526.

10. B. Kim and V. Stojanovıc, ‘‘Characterization

of Equalized and Repeated Interconnects

for NoC Applications,’’ IEEE Design and

Test of Computers, vol. 25, no. 5, 2008,

pp. 430-439.

11. J. Balfour and W. Dally, ‘‘Design Tradeoffs

for Tiled CMP On-chip Networks,’’ Proc.

Int’l Conf. Supercomputing, ACM Press,

2006, pp. 187-198.

12. M. Popovıc, E. Ippen, and F. Kartner, ‘‘Low-

Loss Bloch Waves in Open Structures and

Highly Compact, Efficient Si Waveguide-

crossing Arrays,’’ Proc. 20th Ann. Mtg. of

IEEE Lasers and Electro-Optics Society,

IEEE Press, 2007, pp. 56-57.

Christopher Batten is a PhD candidate inthe Electrical Engineering and ComputerScience Department at the MassachusettsInstitute of Technology. His research interestsinclude energy-efficient parallel computersystems and architectures for emerging tech-nologies. Batten has an MPhil in engineeringfrom the University of Cambridge.

Ajay Joshi is a postdoctoral associate inMIT’s Research Laboratory of Electronics.His research interests include interconnectmodeling, network-on-chip design, high-speed low-power digital design, and physicaldesign. Joshi has a PhD in electrical engineer-ing from the Georgia Institute of Technology.

Jason Orcutt is a PhD candidate in MIT’sElectrical Engineering and ComputerScience Department. His research interestsinclude device and process design for CMOSphotonic integration. Orcutt has an MS inelectrical engineering from MIT.

Anatol Khilo is a PhD candidate in MIT’sElectrical Engineering and ComputerScience Department. His research interestsinclude the design of nanophotonic devicesand photonic analog-to-digital conversion.Khilo has an MS in electrical engineeringfrom MIT.

Benjamin Moss is an MS candidate inMIT’s Electrical Engineering and ComputerScience Department. His research interests

include circuit design and modeling forintegrated photonic CMOS systems. Mosshas a BS in electrical engineering, compu-ter science, and computer engineering fromthe Missouri University of Science andTechnology.

Charles W. Holzwarth is a PhD candidatein MIT’s Material Science and EngineeringDepartment. His research interests includethe development of nanofabrication tech-niques for integrated electronic-photonicsystems. Holzwarth has a BS in materialscience and engineering from the Universityof Illinois at Urbana-Champaign.

Milos A. Popovic is a postdoctoral associatein MIT’s Research Laboratory of Electronics.His research interests include the design andfundamental limitations of nanophotonicdevices, energy-efficient electronic-photoniccircuits, and nano-optomechanical photonicsbased on light forces. Popovic has a PhD inelectrical engineering from MIT.

Hanqing Li is a research scientist withMIT’s Microsystems Technology Labora-tories. His research interests include MEMSfabrication technologies, photonics, energyharvesting, and micro sensors and actuators.Li has a PhD in physics from the Universityof Nebraska-Lincoln.

Henry I. Smith is a professor in MIT’sElectrical Engineering and ComputerScience Department and is president ofLumArray, a spin-off from MIT developinga maskless photolithography system. Hesupervises research in nanofabrication tech-nology and applications thereof in electro-nics, photonics, and materials science. Smithhas a PhD from Boston College.

Judy L. Hoyt is a professor in MIT’sElectrical Engineering and ComputerScience Department and associate directorof MIT’s Microsystems Technology Labora-tories. Her research interests include fabrica-tion and device physics of silicon-basedheterostructures and nanostructures, such ashigh mobility Si and Ge-channel MOSFETs;nanowire FETs; novel transistor structures;and photodetectors for electronic/photonic

..................................................................

20 IEEE MICRO

...............................................................................................................................................................................................HOT INTERCONNECTS

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.

integrated circuits. Hoyt has a PhD inapplied physics from Stanford University.

Franz X. Kartner is a professor in MIT’sElectrical Engineering and Computer ScienceDepartment. His research interests includeclassical and quantum noise in electronic andoptical devices as well as femtosecond lasersand their applications in frequency metrol-ogy. Kartner has a PhD in electricalengineering from Technische UniversitatMunchen, Germany.

Rajeev J. Ram is a professor in MIT’sElectrical Engineering and ComputerScience Department, director of the MITCenter for Integrated Photonic Systems, andassociate director of the Research Laboratoryof Electronics. His research interests includeoptoelectronic devices for applications incommunications, biological sensing, andenergy production. Ram has a PhD inelectrical engineering from the University ofCalifornia, Santa Barbara.

Vladimir Stojanovic is an assistant profes-sor in MIT’s Electrical Engineering and

Computer Science Department. His re-search interests include high-speed electri-cal and optical links and networks,communications and signal-processing ar-chitectures, and high-speed digital andmixed-signal IC design. Stojanovic has aPhD in electrical engineering from StanfordUniversity.

Krste Asanovic is an associate professor inthe Electrical Engineering and ComputerScience Department at the University ofCalifornia, Berkeley. His research interestsinclude computer architecture, VLSI design,and parallel programming. Asanovic has aPhD in computer science from the Uni-versity of California, Berkeley.

Direct questions and comments aboutthis article to Christopher Batten, Univer-sity of California, 565 Soda Hall, Berkeley,CA 94704; [email protected].

For more information on this or anyother computing topic, please visit ourDigital Library at http://computer.org/csdl.

....................................................................

JULY/AUGUST 2009 21

Authorized licensed use limited to: International Computer Science Inst (ICSI). Downloaded on November 2, 2009 at 13:37 from IEEE Xplore. Restrictions apply.