AMD Opteron Overview Michael Trotter (mjt5v) Tim Kang (tjk2n) Jeff Barbieri (jjb3v)



Introduction

• AMD Opteron
– This overview focuses on Barcelona
• Barcelona is AMD's 65nm quad-core CPU

Fetch

• Fetches 32B per cycle from the L1 instruction cache into the pre-decode/pick buffer
• For simplicity, Barcelona uses pre-decode information to mark the end of each instruction
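To make the end-marking idea concrete, here is a minimal Python sketch. It assumes one end bit per byte of a 32-byte fetch block and uses made-up instruction lengths; `end_bits` and `boundaries` are hypothetical helpers, not AMD's actual pre-decode format.

```python
# Hypothetical sketch: mark the last byte of each instruction in a 32-byte
# fetch block with an "end" bit, then recover instruction boundaries from the
# bits alone. The per-byte bit layout is an assumption for illustration.

FETCH_BYTES = 32  # Barcelona fetches 32B per cycle (from the slide)

def end_bits(lengths, block_size=FETCH_BYTES):
    """Return one 0/1 flag per byte, with 1 on each instruction's last byte."""
    bits = [0] * block_size
    pos = 0
    for n in lengths:
        pos += n
        if pos > block_size:
            break  # this instruction spills into the next fetch block
        bits[pos - 1] = 1
    return bits

def boundaries(bits):
    """Recover (first_byte, last_byte) spans by scanning the end bits."""
    spans, start = [], 0
    for i, b in enumerate(bits):
        if b:
            spans.append((start, i))
            start = i + 1
    return spans
```

Once the end bits are stored, the pick logic only needs a scan for set bits instead of serially decoding variable x86 lengths, which is the simplification the slide refers to.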

Inst. Decode

• The instruction cache contains a pre-decoder that scans 4B of the instruction stream each cycle
– The pre-decode information is stored in the ECC bits of the L1I, L2 and L3 caches, along with each line of instructions
• Instructions then pass through the sideband stack optimizer
– x86 includes instructions (e.g. PUSH, POP, CALL, RET) that directly manipulate the stack of each thread
– AMD introduced the sideband stack optimizer to remove these stack manipulations from the instruction stream
– Thus, many stack operations can be processed in parallel
• This frees up the reservation stations, re-order buffers, and regular ALUs for other work
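The sideband stack optimizer can be pictured roughly as follows. This is a hypothetical Python model, not AMD's documented design. It assumes 64-bit pushes and pops, a side offset (`delta`) that absorbs the RSP updates, and a single synchronizing `add rsp` op whenever an instruction reads RSP directly. The op tuples and `track_stack` are illustrative names.

```python
# Hypothetical model of a sideband stack tracker (op format is illustrative).
# PUSH/POP adjust a small offset kept beside the pipeline instead of emitting
# an ALU op that changes RSP, so consecutive stack operations no longer form
# a dependency chain through RSP.

def track_stack(ops):
    """ops: list of ("push", reg), ("pop", reg) or ("use_rsp", asm_text).
    Returns the micro-ops that still reach the out-of-order core."""
    delta = 0  # bytes RSP has moved since the last synchronization
    out = []
    for op in ops:
        kind = op[0]
        if kind == "push":
            delta -= 8  # 64-bit push: address is computed from RSP + delta
            out.append(f"store [rsp{delta:+d}], {op[1]}")
        elif kind == "pop":
            out.append(f"load {op[1]}, [rsp{delta:+d}]")
            delta += 8
        else:
            # An instruction reads RSP explicitly: materialize the offset once.
            if delta:
                out.append(f"add rsp, {delta}")
                delta = 0
            out.append(op[1])
    if delta:
        out.append(f"add rsp, {delta}")  # sync at the end of this sketch's window
    return out
```

With this scheme a push/push/pop sequence reaches the core as two stores and a load with fixed offsets, plus at most one RSP adjustment, instead of three dependent RSP updates.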

Branch Prediction

• A branch selector chooses between a bimodal predictor and a global predictor
– The bimodal predictor and the branch selector are both stored in the ECC bits of the instruction cache, as pre-decode information
– The global predictor combines the instruction pointer (RIP) of a conditional branch with a global history register that tracks the last 12 branches, and uses the result to index a 16K-entry prediction table of 2-bit saturating counters
– The branch target address calculator (BTAC) checks the targets of relative branches and can correct mispredictions with a two-cycle penalty
• Barcelona also uses an indirect predictor
– Specifically designed to handle branches with multiple targets (e.g. switch or case statements)
• The return address stack has 24 entries
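A minimal tournament-predictor sketch helps show how the pieces fit together. The sizes of the global table (16K two-bit counters) and history (12 branches) come from the slide. Everything else is an assumption: the slide only says the RIP and history are "combined", so this sketch uses the common gshare XOR hash, and the bimodal/selector table size (`LOCAL_ENTRIES`) is made up. The BTAC, indirect predictor and return stack are not modeled.

```python
# Tournament predictor sketch under stated assumptions:
# - global table: 16K two-bit counters indexed by RIP XOR 12-bit history
#   (the XOR "gshare" hash is an assumption)
# - bimodal table and selector: two-bit counters indexed by RIP (size assumed)

GLOBAL_ENTRIES = 16 * 1024
HISTORY_BITS = 12
LOCAL_ENTRIES = 4096  # assumption

def bump(counter, taken):
    """2-bit saturating counter update in 0..3; >= 2 means 'taken'."""
    return min(counter + 1, 3) if taken else max(counter - 1, 0)

class HybridPredictor:
    def __init__(self):
        self.global_table = [1] * GLOBAL_ENTRIES  # start weakly not-taken
        self.bimodal = [1] * LOCAL_ENTRIES
        self.selector = [1] * LOCAL_ENTRIES       # >= 2 means trust global
        self.history = 0                          # last 12 outcomes, 1 = taken

    def _gidx(self, rip):
        return (rip ^ self.history) % GLOBAL_ENTRIES

    def predict(self, rip):
        g = self.global_table[self._gidx(rip)] >= 2
        b = self.bimodal[rip % LOCAL_ENTRIES] >= 2
        return g if self.selector[rip % LOCAL_ENTRIES] >= 2 else b

    def update(self, rip, taken):
        gi, li = self._gidx(rip), rip % LOCAL_ENTRIES
        g_ok = (self.global_table[gi] >= 2) == taken
        b_ok = (self.bimodal[li] >= 2) == taken
        if g_ok != b_ok:  # train the selector only when exactly one was right
            self.selector[li] = bump(self.selector[li], g_ok)
        self.global_table[gi] = bump(self.global_table[gi], taken)
        self.bimodal[li] = bump(self.bimodal[li], taken)
        mask = (1 << HISTORY_BITS) - 1
        self.history = ((self.history << 1) | int(taken)) & mask
```

An alternating taken/not-taken branch defeats the bimodal counter, but the history gives each phase its own global counter, so after training the selector switches to the global predictor and predicts every outcome correctly.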

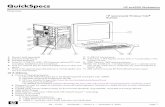

Pipeline

• Barcelona uses a 12-stage integer pipeline

Out-of-Order Execution (ROB)

• The pack buffer (post-decode buffer) sends groups of 3 micro-ops to the re-order buffer (ROB)
– The ROB contains 24 entries with 3 lanes per entry, for a total of 72 micro-ops
– Micro-ops can be moved between lanes to avoid a congested reservation station or to observe issue restrictions
• From the ROB, micro-ops issue to the appropriate scheduler
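The lane structure can be sketched in Python as below. The 24 × 3 shape and the 8-entry station per lane come from the slides; the allocation policy (try the micro-op's own lane first, then any other lane whose station has room) is a hypothetical illustration of "moving between lanes", and issue and retirement are not modeled.

```python
# Hypothetical sketch: a 24-line x 3-lane re-order buffer where each lane feeds
# its own 8-entry integer reservation station. The lane-selection policy is an
# illustrative assumption; issue and retirement are not modeled.

ROB_LINES, LANES, RS_ENTRIES = 24, 3, 8

class Rob:
    def __init__(self):
        self.lines = []              # each line holds up to 3 micro-op slots
        self.rs_load = [0] * LANES   # occupancy of each lane's station

    def capacity(self):
        return ROB_LINES * LANES     # 72 micro-ops in flight

    def dispatch_group(self, ops):
        """Place one pack-buffer group (up to 3 micro-ops) into one ROB line.
        Returns False (and changes nothing) if the group must stall."""
        if len(self.lines) == ROB_LINES or len(ops) > LANES:
            return False
        line = [None] * LANES
        load = list(self.rs_load)    # tentative occupancy, committed at the end
        for i, op in enumerate(ops):
            # Prefer the op's own lane, then fall back to the other lanes.
            order = [i] + [lane for lane in range(LANES) if lane != i]
            lane = next((l for l in order
                         if line[l] is None and load[l] < RS_ENTRIES), None)
            if lane is None:
                return False         # no station has room: stall
            line[lane] = op
            load[lane] += 1
        self.rs_load = load
        self.lines.append(line)
        return True
```

Placing the whole group tentatively before committing keeps a stalled group from leaving partial station reservations behind.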


Integer Future File and Register File (IFFRF)

• The IFFRF contains 40 registers, broken up into three distinct sets
– The Architectural Register File: 16 × 64-bit non-speculative registers; instructions cannot modify it until they are committed
– The Future File: speculative instructions read from and write to it; it holds the most recent speculative state of the 16 architectural registers
– The last 8 registers are scratchpad registers used by the microcode
• Should a branch misprediction or an exception occur, the pipeline rolls back, and the Architectural Register File overwrites the contents of the Future File
• There are three reservation stations (i.e. schedulers) within the integer cluster
– Each station is tied to a specific lane in the ROB and holds 8 instructions
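The future-file scheme can be sketched as follows. This is a simplified Python model: it keeps the two 16-register sets from the slide, omits the 8 microcode scratch registers, and assumes results are written in program order (real out-of-order completion is not modeled). `FutureFile` and its methods are hypothetical names.

```python
# Simplified future-file model: 16 architectural registers updated only at
# commit, 16 future-file registers updated speculatively, and a flush that
# copies the architectural state back over the future file.
# Assumption: results are produced in program order (OoO completion ignored).

NUM_REGS = 16

class FutureFile:
    def __init__(self):
        self.arch = [0] * NUM_REGS    # committed, non-speculative values
        self.future = [0] * NUM_REGS  # most recent speculative values
        self.pending = []             # (reg, value) not yet committed, oldest first

    def execute(self, reg, value):
        """A speculative instruction writes its result to the future file."""
        self.future[reg] = value
        self.pending.append((reg, value))

    def read(self, reg):
        """Speculative instructions read the newest (future file) value."""
        return self.future[reg]

    def commit_oldest(self):
        """Only commit updates the architectural file."""
        reg, value = self.pending.pop(0)
        self.arch[reg] = value

    def flush(self):
        """Misprediction or exception: drop speculation, restore the future file."""
        self.pending.clear()
        self.future = list(self.arch)
```

The appeal of this arrangement is that recovery is a bulk copy from the architectural file rather than an undo walk through individual speculative writes.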

Integer Execution

• Barcelona uses three symmetric ALUs, which can execute almost any integer instruction
• Three full-featured ALUs require more die area and power
– But they can provide higher performance for certain edge cases
– And they enable a simpler design for the ROB and schedulers

Floating Point Execution

• Floating-point operations are first sent to the FP mapper and renamer
• In the renamer, up to 3 FP instructions per cycle are each assigned a destination register from the 120-entry FP register file
• Once the micro-ops have been renamed, they may be issued to the three FP schedulers
• Operands can be obtained from either the FP register file or the forwarding network
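Register renaming against the 120-entry FP register file can be sketched with a map table and a free list. The 120 entries and 3 renames per cycle come from the slide; the 16 architectural registers (`ARCH_REGS`, e.g. XMM0 to XMM15) are an assumption, and source-operand lookup is not modeled.

```python
from collections import deque

# Rename sketch: a map table from architectural to physical registers plus a
# free list of unallocated physical entries.

PHYS_REGS = 120     # FP register file entries (from the slide)
RENAME_WIDTH = 3    # FP instructions renamed per cycle (from the slide)
ARCH_REGS = 16      # assumption: e.g. XMM0-XMM15; other FP state ignored

class FpRenamer:
    def __init__(self):
        self.map = list(range(ARCH_REGS))               # arch reg -> physical entry
        self.free = deque(range(ARCH_REGS, PHYS_REGS))  # unallocated physical entries

    def rename_cycle(self, dests):
        """Give each destination a fresh physical register.
        Returns (new_regs, old_regs), or None if the register file is full.
        The old mappings can be freed once the renaming instructions retire."""
        if len(dests) > RENAME_WIDTH:
            raise ValueError("at most 3 FP instructions are renamed per cycle")
        if len(self.free) < len(dests):
            return None  # stall
        new, old = [], []
        for d in dests:
            p = self.free.popleft()
            old.append(self.map[d])
            self.map[d] = p
            new.append(p)
        return new, old

    def retire(self, old_regs):
        self.free.extend(old_regs)  # previous mappings are no longer needed
```

Because every write gets a fresh entry, two instructions that both target the same architectural register (a write-after-write pair) can be in flight at once.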

Floating Point Execution (SIMD)

• The FPUs are 128 bits wide, so Streaming SIMD Extensions (SSE) instructions can execute in a single pass
• Similarly, the load-store units and the FMISC unit load 128-bit-wide data, to improve SSE performance

Memory Overview

Memory Hierarchy

• 4 separate 128KB 2-way set-associative L1 caches (one per core, split into 64KB instruction and 64KB data)
– Latency = 3 cycles
– Write-back to L2
– The data paths into and out of the L1D cache were also widened to 256 bits (128 bits transmit and 128 bits receive)
• 4 separate 512KB 16-way set-associative L2 caches (one per core)
– Latency = 12 cycles
– Line size is 64B
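The cache parameters above determine how an address is split into tag, set index and line offset. This Python sketch works that out for the 64KB 2-way L1D and the 512KB 16-way L2, assuming 64B lines in both and a 48-bit physical address (`addr_bits` is an assumption used only for the tag width).

```python
# Cache geometry arithmetic: sets = size / (ways * line), then the address
# splits into | tag | index | offset |. Assumes power-of-two sizes.

def geometry(size_bytes, ways, line_bytes=64, addr_bits=48):
    """Return (sets, offset_bits, index_bits, tag_bits); addr_bits is assumed."""
    sets = size_bytes // (ways * line_bytes)
    offset_bits = line_bytes.bit_length() - 1
    index_bits = sets.bit_length() - 1
    tag_bits = addr_bits - index_bits - offset_bits
    return sets, offset_bits, index_bits, tag_bits

def split(addr, size_bytes, ways, line_bytes=64):
    """Split a physical address into (tag, set_index, line_offset)."""
    sets, off_b, idx_b, _ = geometry(size_bytes, ways, line_bytes)
    offset = addr & (line_bytes - 1)
    index = (addr >> off_b) & (sets - 1)
    tag = addr >> (off_b + idx_b)
    return tag, index, offset

L1D = (64 * 1024, 2)     # 64KB data half of the 128KB L1, 2-way
L2 = (512 * 1024, 16)    # 512KB, 16-way
```

Both caches come out to 512 sets (6 offset bits, 9 index bits): the L2 is 8× larger than the L1D but also 8× more associative.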

L3 Cache

• Shared 2MB 32-way set-associative L3
– Latency = 38 cycles
– Uses 64B lines
– The L3 cache was designed with data sharing in mind
• When a core requests a line that is likely to be shared, the line remains in the L3
– This leads to duplication that would not happen in an exclusive hierarchy
• In the past, a pseudo-LRU algorithm would evict the (approximately) least recently used line in the set
– In Barcelona's L3, the replacement algorithm has been changed to prefer evicting unshared lines
• Access to the L3 must be arbitrated, since the L3 is shared among four cores
– A round-robin algorithm gives one of the four cores access each cycle
• Each core has 8 data prefetchers (a total of 32 per device)
– They fill the L1D cache
– They can have up to 2 outstanding fetches to any address
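Two of the L3 policies above can be sketched in a few lines of Python. For victim selection, true LRU (via a hypothetical `last_use` timestamp) stands in for the hardware's pseudo-LRU, and "unshared" is assumed to mean at most one core holds the line; the exact Barcelona scheme is not described here. The arbiter rotates priority so each of the four cores gets a turn.

```python
# Sketch of two L3 policies (details are assumptions, see lead-in):
# 1) victim selection in one 32-way set that prefers unshared lines,
#    using true LRU as a stand-in for pseudo-LRU
# 2) a round-robin arbiter granting one of four cores per cycle

WAYS = 32
CORES = 4

def pick_victim(lines):
    """lines: one dict per way with 'last_use' (int) and 'sharers' (set of core ids)."""
    unshared = [w for w, line in enumerate(lines) if len(line["sharers"]) <= 1]
    candidates = unshared or range(len(lines))  # fall back to every way
    return min(candidates, key=lambda w: lines[w]["last_use"])

class RoundRobinArbiter:
    def __init__(self, n=CORES):
        self.n = n
        self.next = 0  # core with the highest priority this cycle

    def grant(self, requests):
        """requests: n booleans. Returns the granted core id, or None."""
        for k in range(self.n):
            core = (self.next + k) % self.n
            if requests[core]:
                self.next = (core + 1) % self.n  # rotate priority past the winner
                return core
        return None
```

Rotating priority past the last winner means no core can be starved: a requesting core waits at most three grants.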

Memory Controllers

• Each memory controller supports independent 64B transactions
• The integrated DDR2 memory controller is designed to resolve an L3 cache miss in less than 60 nanoseconds
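To compare the cycle counts on the earlier slides with the 60 ns memory figure, they have to be put in the same units. This worked example assumes a hypothetical 2.0 GHz core clock (`CLOCK_GHZ`); actual Barcelona clock speeds varied by model.

```python
# Convert the slide latencies between cycles and nanoseconds at an assumed clock.

CLOCK_GHZ = 2.0  # assumption; 1 cycle = 0.5 ns at this clock

latency_cycles = {"L1": 3, "L2": 12, "L3": 38}  # from the slides

def cycles_to_ns(cycles, ghz=CLOCK_GHZ):
    return cycles / ghz

def ns_to_cycles(ns, ghz=CLOCK_GHZ):
    return ns * ghz

for level, cyc in latency_cycles.items():
    print(f"{level}: {cyc} cycles = {cycles_to_ns(cyc):.1f} ns")
print(f"DRAM (L3 miss): < 60 ns = < {ns_to_cycles(60):.0f} cycles")
```

At this assumed clock, an L3 hit costs about 19 ns while a trip to memory can cost up to roughly 120 cycles, which is why the shared L3 and prefetchers matter.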

TLB

• Barcelona offers non-speculative memory access re-ordering in the form of load-store units (LSUs)
– Thus, some memory operations can be issued out of order
• In the 12-entry LSU1, the oldest operations translate their addresses from the virtual address space to the physical address space using the L1 DTLB
• During this translation, the lower 12 bits of a load's address are compared against the addresses of earlier stores
– If they differ, the load proceeds ahead of the stores
– If they match, store-to-load forwarding occurs
• Should a miss in the L1 DTLB occur, the L2 DTLB is checked
– Once the load or store has located its address in the cache, the operation moves on to LSU2
• LSU2 holds up to 32 memory accesses, which stay there until they are removed
– LSU2 handles any cache or TLB misses via scheduling and probing
– On a cache miss, LSU2 looks in the L2, then the L3, and then memory
– On a TLB miss, it looks in the L2 TLB and then main memory
– LSU2 also holds store instructions, which are not allowed to modify the caches until retirement, to ensure correctness
– Thus, LSU2 handles the majority of the complexity in the memory pipeline
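The LSU1 check can be sketched as a comparison of the low 12 bits only. Those bits are the page offset, which translation never changes, which is presumably why the check can overlap translation. In this hypothetical Python sketch, equal-size aligned accesses are assumed, and a match is treated as a forwarding candidate from the youngest matching older store; a real design must still confirm the full address, which is not modeled.

```python
# Hypothetical LSU1-style check: compare only the page-offset bits (low 12)
# of a load against older stores. Access sizes and overlap are ignored
# (equal-size, aligned accesses assumed); full-address confirmation is omitted.

PAGE_OFFSET_MASK = (1 << 12) - 1

def check_load(load_addr, older_stores):
    """older_stores: list of (addr, value) in program order, oldest first.
    Returns ("bypass", None) or ("forward", value) from the youngest match."""
    for addr, value in reversed(older_stores):
        if (addr & PAGE_OFFSET_MASK) == (load_addr & PAGE_OFFSET_MASK):
            return "forward", value
    return "bypass", None
```

Because only 12 bits are compared, two different addresses with the same page offset (such as 0x3008 and 0x7008) look alike here, which is exactly the case the later full-address check must resolve.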

HyperTransport

• Barcelona has four HyperTransport 3.0 links for inter-processor communication and I/O devices
• HyperTransport 3.0 adds a feature called 'unganging' or link-splitting
• Each HT3.0 link is 16 bits wide in each direction
– Each link can be split into a pair of independent 8-bit-wide links
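The effect of unganging on link count can be shown with a small Python sketch. Link names such as `HT0` are hypothetical labels; widths are bits per direction, as on the slide.

```python
# Illustrative only: each 16-bit HT3.0 link may be unganged into two
# independent 8-bit links. Link names are hypothetical.

def unganged_links(links, split):
    """links: dict name -> width in bits (per direction).
    split: set of link names to ungang. Returns the resulting links."""
    out = {}
    for name, width in links.items():
        if name in split:
            out[f"{name}a"] = width // 2
            out[f"{name}b"] = width // 2
        else:
            out[name] = width
    return out

links = {"HT0": 16, "HT1": 16, "HT2": 16, "HT3": 16}
```

Fully unganged, the four 16-bit links become eight 8-bit links with the same total width, trading per-link bandwidth for more independent point-to-point connections.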

Shanghai

• The latest model of the Opteron series
• Several improvements over Barcelona
– 45nm process
– 6MB L3 cache
– Improved clock speeds
– A host of other improvements