
Strategic Management Journal
Strat. Mgmt. J., 25: 397-404 (2004)

Published online in Wiley InterScience (www.interscience.wiley.com). DOI: 10.1002/smj.385

RESEARCH NOTES AND COMMENTARIES

AN ASSESSMENT OF THE USE OF STRUCTURAL EQUATION MODELING IN STRATEGIC MANAGEMENT RESEARCH

CHRISTOPHER L. SHOOK,¹ DAVID J. KETCHEN, JR.,²* G. TOMAS M. HULT³ and K. MICHELE KACMAR²

¹ College of Business, Auburn University, Auburn, Alabama, U.S.A.
² College of Business, Florida State University, Tallahassee, Florida, U.S.A.
³ Eli Broad College of Business, Michigan State University, East Lansing, Michigan, U.S.A.

Structural equation modeling (SEM) is a powerful, yet complex, analytical technique. The use of SEM to examine strategic management phenomena has increased dramatically in recent years, suggesting that a critical evaluation of the technique's implementation is needed. We compared the use of SEM in 92 strategic management studies published in nine prominent journals from 1984 to 2002 to guidelines culled from methodological research. We found that the use and reporting of SEM often have been less than ideal, indicating that authors may be drawing erroneous conclusions about relationships among variables. Given these results, we offer suggestions for researchers on how to better deploy SEM within future inquiry. Copyright © 2004 John Wiley & Sons, Ltd.

Key words: structural equation modeling; data analysis; statistical methods

*Correspondence to: David J. Ketchen, Jr., College of Business, Florida State University, Tallahassee, FL 32306-1110, U.S.A. E-mail: [email protected]

While the roots of strategic management research can be traced at least to the early 1960s, the field's prominence grew dramatically following the publication of Schendel and Hofer (1979) and the emergence of the Strategic Management Journal (SMJ) in 1980. As the field's stature has developed, so too has its theoretical sophistication. The desire to test complex models has led some authors to embrace structural equation modeling (SEM). Although SEM was introduced into the strategy literature in 1984 (Farh, Hoffman, and Hegarty, 1984), the technique has only recently become popular. For example, only five studies using SEM were published in SMJ prior to 1995, while 27 such studies appeared between 1998 and 2002.

In its most general form, SEM consists of a set of linear equations that simultaneously test two or more relationships among directly observable and/or unmeasured latent variables. While SEM serves purposes similar to multiple regression, differences exist between these techniques. SEM has a unique ability to simultaneously examine a series of dependence relationships (where a dependent variable becomes an independent variable in subsequent relationships within the same analysis) while also simultaneously analyzing multiple dependent variables (Joreskog et al., 1999).

Like any statistical tool, SEM's benefits are obtained only if properly applied. Because SEM requires several complicated choices, insight and judgment are crucial elements of its use (Joreskog and Sorbom, 1996). Missteps compromise results' validity, inhibiting researchers' ability to develop knowledge and inform managers. Reviews of SEM usage in the fields of organizational behavior (Brannick, 1995), management information systems (Chin, 1998), marketing (Steenkamp and van Trijp, 1991), and logistics (Garver and Mentzer, 1999) have unveiled serious flaws. Steiger (2001) found that SEM textbooks ignore many important issues, suggesting that strategy researchers may have inadequate guidance to draw upon. To date, however, no systematic assessment has been made of SEM use within strategic management. Given (a) the difficulties found in other fields, (b) the growing use of SEM within strategic management, (c) SEM's complexity, and (d) the need to develop valid insights about organizations, a critical examination of SEM usage seems timely and warranted.

Received 25 October 2001; final revision received 2 September 2003

CRITICAL ISSUES IN THE APPLICATION OF SEM

We focused on six critical issues discussed in other fields' SEM assessments: data characteristics, reliability and validity, evaluating model fit, model respecification, equivalent models, and reporting. Where appropriate, we culled best practices from the literature on SEM methodology (e.g., Bollen, 1989; Fornell and Larcker, 1981; Joreskog and Sorbom, 1996) to judge researchers' decisions. Some of these best practices rely on statistical tests, while others rely on rules of thumb. For other issues, our goal was to describe trends in SEM usage.

We searched the 10 empirical journals within MacMillan's (1994) forum for strategy research from 1984 to 2002 for strategic management studies using SEM. As in Ketchen and Shook (1996), strategy studies were defined as studies examining relationships among the four broad constructs that represent the field according to Summer et al. (1990): strategy, environment, leadership/organization, and performance. Two coders had to agree that a study fit within this domain for the study to be included. We excluded studies using partial least squares (PLS). Although PLS is in some ways similar to SEM, it is not a covariance-based modeling technique and its application is very different from covariance-based methods. The 92 relevant studies we identified are listed in Table 1. Most studies were found in SMJ (34 studies, 37%), the Academy of Management Journal (24, 26%), and the Journal of Management (12, 13%). To assess whether the application of SEM has changed over time, we grouped the studies into two time periods: 1984 to 1995 (32 studies), and 1996 to 2002 (60 studies).

Two coders independently coded each article. One professor coded all studies. The other coding was split among another professor and two advanced PhD students. Coding agreement was 93 percent, which compares favorably to similar studies (e.g., 83% in Ford, MacCallum, and Tait, 1986; 93% in Ketchen and Shook, 1996). Disagreements were discussed until both coders agreed on the proper coding.

Data characteristics

The use of cross-sectional vs. longitudinal data is a basic issue in the application of SEM. Historically, the term "causal modeling" has been used to describe SEM. The strongest inference of causality may be made only when the temporal ordering of variables is demonstrated (Kelloway, 1995). However, many strategy studies involve cross-sectional designs; in such cases, strong theoretical underpinnings are critical to causality inferences. We coded a study as cross-sectional if it involved one time period (69 of 92 studies, 75%) or as longitudinal if it involved two or more periods (23 studies, 25%). Cross-sectional designs were slightly, but not significantly (z = 0.95), more prevalent recently (22 of 32 studies, 69%, from 1984 to 1995 vs. 47 of 60, 78%, studies from 1996 to 2002).
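The period comparisons in this note are reported as z statistics, but the text does not say which test produced them. As a sketch only, assuming a pooled two-proportion z-test (an assumption, not something the article states), the counts above can be checked as follows. The function names are illustrative. Under this assumption the cross-sectional comparison comes out near 1.0, close to but not identical with the reported 0.95, so the authors may have used a slightly different variant.

```python
import math


def two_proportion_z(hits_a: int, n_a: int, hits_b: int, n_b: int) -> float:
    """Pooled two-proportion z statistic for the difference p_b - p_a."""
    p_a = hits_a / n_a
    p_b = hits_b / n_b
    # Pool both groups to estimate the common proportion under the null.
    pooled = (hits_a + hits_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / standard_error


def two_sided_p(z: float) -> float:
    """Two-sided p-value from the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# Cross-sectional designs: 22 of 32 early studies vs. 47 of 60 recent studies.
z = two_proportion_z(22, 32, 47, 60)
print(f"z = {z:.2f}, two-sided p = {two_sided_p(z):.2f}")
```

The same function can be applied to the other early-versus-recent counts reported in later sections (reliability, convergent validity, number of fit indices, respecification).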

Researchers should also ensure that their datameet the assumed distribution of their estimationapproach. The common approaches to estimatingstructural equation models assume that indicatorvariables have multivariate normal distributions.Non-normal data may lead to inflated goodness-of-fit statistics and underestimated standard errors(MacCallum, Roznowski, and Necowitz, 1992).Thus, the uncritical use of non-normal data mayhamper research progress by providing inaccuratefindings. Despite these concerns, 75 studies (81%)did not note if the sample was normally distributed.The percentage of studies not mentioning sampledistribution stayed constant (81% in 1984 to 1995;82% in 1996 to 2002). Of the 17 studies that dis-cussed normality, eight were based on normally

Copyright 2004 John Wiley & Sons, Ltd. Strat. Mgmt. J., 25: 397404 (2004)

-

Research Notes and Commentaries 399

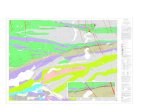

Table 1. Strategy articles that used SEMa

Academy of Management JournalSingh, 1986Walker and Weber, 1987Keats and Hitt, 1988Miller, Droge, and Toulouse, 1988Heide and Miner, 1992Judge and Zeithaml, 1992Hoskisson, Johnson, and Moesel,

1994Wally and Baum, 1994Phan and Hill, 1995Amason, 1996Hitt, Hoskisson, Johnson, and

Moesel, 1996Simonin, 1997Stimpert and Duhaime, 1997Daily, Johnson, Ellstrand, and Dalton,

1998Finkelstein and Boyd, 1988Johnson and Greening, 1999Busenitz, Gomez, and Spencer, 2000Isobe, Makino, and Montgomery,

2000Sharma, 2000Baum, Locke, and Smith, 2001Li and Atuahene-Gima, 2001Song and Montoya-Weiss, 2001Hoskisson, Hitt, Johnson, and

Grossman, 2002Hult, Ketchen, and Nichols, 2002

Administrative Science QuarterlyWalker and Poppo, 1991Gupta, Dirsmith, and Fogarty, 1994Dukerich, Golden, and Shortell, 2002

Decision ScienceFarh, Hoffman, and Hegarty, 1984Hult, 1998Daily, Johnson, and Dalton, 1999

Journal of Management StudiesVenkatraman, 1990Judge and Douglas, 1998Golden, Dukerich, and Fabian, 2000

Journal of ManagementVenkatraman and Ramanujam, 1987Bartunek and Franzak, 1988Fryxell, 1990Fryxell and Barton, 1990Keats, 1990Fryxell and Wang, 1994Gerbing, Hamilton, and Freeman,

1994Boyd and Fulk, 1996Daily and Johnson, 1997Miller, Droge, and Vickery, 1997Dooley, Fryxell, and Judge, 2000Lukas, Tan, and Hult, 2001

Management ScienceVenkatraman and Ramanujam, 1987Venkatraman, 1989Miller, 1991Sethi and King, 1994McGrath, Tsai, Venkatraman, and

MacMillan, 1996

Organization StudiesWong and Birnbaum-Moore, 1994Ginsberg and Venkatraman, 1995

Organization ScienceMarcoulides and Heck, 1993Mjoen and Tallman, 1997Zaheer, McEvily, and Perrone, 1998Larsson and Finkelstein, 1999Young-Ybarra and Wiersema, 1999Schilling and Steensma, 2002

Strategic Management JournalBoyd, 1990Hoskisson, Hitt, Johnson, and Moesel,

1993Boyd, 1994Hagedoorn and Schakenraad, 1994Kotha and Vadlamani, 1995Hopkins and Hopkins, 1997Stimpert and Duhaime, 1997Boyd and Reuning-Elliott, 1998Murtha, Lenway, and Bagozzi, 1998Bensaou, Coyne, and Venkatraman,

1999Capron, 1999Combs and Ketchen, 1999Holm, Eriksson, and Johanson, 1999Knight et al., 1999Kroll, Wright, and Heiens, 1999McEvily and Zaheer, 1999Palmer and Wiseman, 1999Simonin, 1999Yeoh and Roth, 1999Steensma and Lyles, 2000Kale, Singh, and Perlmutter 2000Capron, Mitchell, and Swaminathan,

2001Geletkanycz, Boyd, and Finkelstein,

2001Hult and Ketchen, 2001Spanos and Lioukas, 2001Yli-Renko, Autio, and Sapienza, 2001Andersson, Forsgren, and Holm, 2002Johnson, Korsgaard, and Sapienza,

2002Koka and Prescott, 2002Li and Atuahene-Gima, 2002Matusik, 2002McEvily and Chakravarthy, 2002Schroeder, Bates, and Junttila, 2002Worren, Moore, and Cardona, 2002

a Using MacCallum et al.s (1996) guidelines, the 29 studies marked with an asterisk had adequate power; 43 other studies did notand we were unable to assess power for 20 studies.

distributed data and nine were not. In all of thenine latter studies, authors took corrective actions(e.g., transforming the data). Data transformationis not without problems, however. To the extentthat a researcher has developed a strong theoreticalfoundation and believes in the original specifica-tion, transforming the data can provide an incorrectspecification.1 Thus, researchers must be mindfulof the trade-offs inherent in transforming data (cf.Satorra, 2001). One alternative to transformation

1 We thank an anonymous reviewer for offering this insight.

is to use an estimation approach available in EQS(i.e., ml,robust) that adjusts the model fit chi-square test statistic and standard errors of indi-vidual parameter estimates. Another approach isto select an estimation strategy, such as general-ized least squares in LISREL, that does not assumemultivariate normality (Hayduk, 1987: 334; cf.Joreskog et al., 1999).
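Since most of the reviewed studies never mention the distribution of their data, a first-pass screen may be worth illustrating. The sketch below computes skewness and excess kurtosis for each indicator separately. This is a univariate check only and is an assumption about how one might begin; it is not a test of multivariate normality and not a procedure described in this note. It is still useful as a screen because marked non-normality in any single indicator already rules out multivariate normality. The indicator names and values are hypothetical.

```python
def skewness_and_excess_kurtosis(values):
    """Return (skewness, excess kurtosis) using population central moments."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n  # variance
    m3 = sum((v - mean) ** 3 for v in values) / n  # third central moment
    m4 = sum((v - mean) ** 4 for v in values) / n  # fourth central moment
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0  # a normal distribution gives 0
    return skewness, excess_kurtosis


# Hypothetical survey indicators (illustrative data, not from any study).
indicators = {
    "x1": [3, 4, 4, 5, 5, 5, 6, 6, 7],
    "x2": [1, 1, 1, 1, 2, 2, 3, 5, 9],
}

for name, scores in indicators.items():
    skew, kurt = skewness_and_excess_kurtosis(scores)
    print(f"{name}: skewness = {skew:.2f}, excess kurtosis = {kurt:.2f}")
```

Values far from zero on either statistic would be a reason to report the distribution explicitly and to consider the robust or distribution-free estimation options discussed above.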

Reliability and validity

Measures' reliability and validity should be assessed when using SEM. Initially, the estimated reflective loadings and their accompanying significance levels are examined. If the loadings are not statistically significant, the researcher should (in most cases) eliminate such indicator(s) (Anderson and Gerbing, 1988) or consider the possibility that the indicators may be formative (i.e., represent one aspect of a multidimensional construct) rather than reflective (i.e., represent attributes of a unidimensional construct) (Bollen, 1989). Following the assessment of loadings and t-values, reliability and validity should be examined.

Coefficient alpha, the most common measure of reliability, has several limitations. For example, coefficient alpha wrongly assumes that all items contribute equally to reliability (Bollen, 1989). A better choice is composite reliability, which draws on the standardized loadings and measurement error for each item. A popular rule of thumb is that 0.70 is an acceptable threshold for composite reliability, with each indicator reliability above 0.50 (Fornell and Larcker, 1981).
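To make the composite reliability calculation concrete, here is a minimal sketch of the commonly used Fornell and Larcker (1981) formulas. It assumes standardized loadings and uncorrelated measurement errors, so that each item's error variance is one minus its squared loading; those assumptions and the example loadings are illustrative and are not taken from any study reviewed here.

```python
def composite_reliability(loadings):
    """Composite reliability from standardized loadings.

    Assumes uncorrelated errors with variance 1 - loading**2 per item.
    """
    loading_sum = sum(loadings)
    error_sum = sum(1 - l ** 2 for l in loadings)
    return loading_sum ** 2 / (loading_sum ** 2 + error_sum)


def average_variance_extracted(loadings):
    """Average variance extracted (AVE) under the same assumptions."""
    explained = sum(l ** 2 for l in loadings)
    error_sum = sum(1 - l ** 2 for l in loadings)
    return explained / (explained + error_sum)


def indicator_reliabilities(loadings):
    """Indicator reliability is the squared standardized loading."""
    return [l ** 2 for l in loadings]


# Hypothetical standardized loadings for a three-item construct.
loadings = [0.80, 0.70, 0.75]
print(f"composite reliability = {composite_reliability(loadings):.3f}")
print(f"AVE = {average_variance_extracted(loadings):.3f}")
print("indicator reliabilities:",
      [round(r, 2) for r in indicator_reliabilities(loadings)])
```

Unlike coefficient alpha, this calculation lets each item contribute according to its own loading, which is the property the paragraph above highlights. In this example the composite clears 0.70 even though one indicator reliability (0.49) falls just short of 0.50, which shows why the two rules of thumb are checked separately.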

We coded whether a study described reliability and what measure(s) were reported. In total, reliability was examined in 56 studies (61%). Assessing reliability was slightly, but not significantly (z = 1.12), more prevalent recently (17 studies, 53%, early period vs. 39 studies, 65%, recent period). Overall, 34 studies (37%) relied on coefficient alpha. Composite reliability was reported in 18 studies (20%), and both composite reliability and coefficient alphas were reported in three studies (3%).

The means for assessing validity when using SEM are reviewed by Bollen (1989). These means rely on rules of thumb. At the most basic level, the measurement model itself offers evidence of convergent and discriminant validity (Anderson and Gerbing, 1988), assuming it is found to be acceptable (i.e., if it has significant factor loadings ≥ 0.70 and fit indices ≥ 0.90). Acceptable convergent validity is achieved when the average variance extracted is ≥ 50% (this measure roughly corresponds to the Eigenvalue in exploratory factor analysis).

We coded whether a study examined convergent validity and what measure(s) were reported. In total, convergent validity was examined in 36 studies (39%). Assessing convergent validity was slightly, but not significantly (z = 1.59), more prevalent recently (9, 28%, early period vs. 27, 45%, recent period). Nine studies (10%) reported the average variance extracted. Less stringent assessments of convergent validity (e.g., examination of factor loadings and the correlation matrix) were used in 24 studies (26%).

Calculating the shared variance between two constructs and verifying that it is lower than the average variances extracted for each individual construct are the most common means to assess discriminant validity in the measurement model (Fornell and Larcker, 1981). Another viable approach is to conduct pairwise tests of all theoretically related constructs (Anderson, 1987; Bagozzi and Phillips, 1982). The pairwise analysis tests whether a confirmatory factor analysis model representing two measures with two factors fits the data significantly better than a one-factor model.
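The shared-variance comparison described above can be sketched in a few lines. The example treats shared variance as the squared correlation between two latent constructs and flags any pair whose shared variance is not below both constructs' average variance extracted (AVE). The construct names, AVE values, and correlations are hypothetical, and the function name is illustrative.

```python
from itertools import combinations


def discriminant_validity_failures(ave, correlations):
    """Return construct pairs whose shared variance is not below both AVEs.

    ave: dict mapping construct name -> average variance extracted.
    correlations: dict mapping frozenset({a, b}) -> latent correlation.
    """
    failures = []
    for a, b in combinations(sorted(ave), 2):
        shared_variance = correlations[frozenset((a, b))] ** 2
        # The criterion requires shared variance below each construct's AVE.
        if shared_variance >= min(ave[a], ave[b]):
            failures.append((a, b, round(shared_variance, 3)))
    return failures


# Hypothetical measurement-model output.
ave = {"strategy": 0.56, "environment": 0.61, "performance": 0.52}
correlations = {
    frozenset(("strategy", "environment")): 0.45,
    frozenset(("strategy", "performance")): 0.78,
    frozenset(("environment", "performance")): 0.30,
}

for a, b, shared in discriminant_validity_failures(ave, correlations):
    print(f"{a} vs. {b}: shared variance {shared} is not below both AVEs")
```

Here only the strategy and performance pair is flagged, because their squared correlation exceeds the AVE of both constructs.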

We coded whether a study examined discriminant validity and what measure(s) were reported. Discriminant validity was examined in 37 studies, or 40% (11 in 1984 to 1995, 34%; 26 in 1996 to 2002, 43%). Pairwise tests were reported in 21 studies (23%), nine studies (10%) reported average variance extracted compared to shared variance, and seven studies (7%) cited other measures (e.g., examination of the correlation matrix).

Our results for reliability and validity offer cause for concern. Confidence in an SEM study's findings depends on a solid foundation of measures with known and rigorous properties. Yet, little attention has been paid to reliability and validity. When measures are unwittingly weak, the consequences may include inappropriate modifications to structural models and spurious findings. Low reliability and validity may cause relationships to appear non-significant, regardless of whether the links exist. As a result, promising research paths may have been prematurely cut short. To the extent that such paths could have generated insights transferable to managers, strategic management's mission to inform practice was not served. Thus, careful attention to measurement issues is needed in future studies.

Evaluating model fit

Assessing a model's fit is one of SEM's most controversial aspects. Before assessing individual parameters, one must assess the overall fit of the observed data to an a priori model (Joreskog et al., 1999). Unlike traditional methods, SEM relies on non-significance. Fit indices ascertain if the covariance matrix derived using the hypothesized model is different from the covariance matrix derived from the sample. A non-significant difference indicates that the errors are non-significant, lending support to the model. A chi-square test is the most common fit measure, but it is only recommended with moderate samples (e.g., 100 to 200; Tabachnick and Fidell, 1996). With large samples, trivial differences between the two matrices become significant. The test also is suspect when using small samples because some are not distributed as chi-square populations.

Because of these limitations, several comparative fit indices that contrast the fit of one model with the fit of competing or baseline models have emerged. Rules of thumb, not significance tests, are used in determining acceptable fit levels because the underlying sampling distributions for these indices are unknown. These heuristics may or may not be appropriate for a specific data set (Brannick, 1995). Thus, until a definitive measure is developed, researchers should use multiple measures to provide evidence about their models (Breckler, 1990). The use of multiple indices assures readers that authors have not simply picked a supportive index. Research by Gerbing and Anderson (1992) suggests that among the most stable and robust fit indices are the DELTA2 index, the relative noncentrality index (RNI), and the comparative fit index (CFI) (cf. Hu and Bentler, 1999).
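The three indices named above can all be computed from the chi-square values and degrees of freedom of the hypothesized model and a baseline (null) model. The sketch below uses the standard textbook definitions, with DELTA2 taken to be Bollen's incremental fit index; the chi-square values are hypothetical and the function names are illustrative.

```python
def relative_noncentrality_index(chi2_model, df_model, chi2_base, df_base):
    """RNI: 1 minus the ratio of model to baseline noncentrality."""
    return 1 - (chi2_model - df_model) / (chi2_base - df_base)


def comparative_fit_index(chi2_model, df_model, chi2_base, df_base):
    """CFI: the RNI with noncentrality estimates truncated to [0, 1]."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_model - df_model, chi2_base - df_base, 0.0)
    if d_base == 0.0:
        return 1.0  # both models fit at least as well as expected by chance
    return 1 - d_model / d_base


def delta2_index(chi2_model, df_model, chi2_base):
    """DELTA2 (incremental fit index), adjusting for model df."""
    return (chi2_base - chi2_model) / (chi2_base - df_model)


# Hypothetical output: hypothesized model vs. independence baseline.
chi2_model, df_model = 85.0, 50
chi2_base, df_base = 900.0, 66

print(f"RNI    = {relative_noncentrality_index(chi2_model, df_model, chi2_base, df_base):.3f}")
print(f"CFI    = {comparative_fit_index(chi2_model, df_model, chi2_base, df_base):.3f}")
print(f"DELTA2 = {delta2_index(chi2_model, df_model, chi2_base):.3f}")
```

Reporting all three together, alongside the chi-square itself, follows the multiple-measures recommendation in the paragraph above.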

We coded how many and which fit indices were used. Studies reported between zero and nine fit measures. Multiple fit measures were used in 83 studies (90%). Four studies (4%) did not report any fit measures, and only one fit measure was reported in five (6%). The most popular measure was chi-square (79 studies, 86%), followed by the Goodness of Fit Index (44, 48%), the CFI (38, 41%), and the root mean square residual (32, 35%). Only one study used all three fit measures recommended by Gerbing and Anderson (1992). Encouragingly, the use of multiple indicators has increased over time. For example, from 1984 to 1995, 13 of 32 studies (41%) used four or more, while 42 of 60 later studies (70%) used four or more (z = 2.70, p < 0.01).

Adequate statistical power is essential to assessing model fit when using a chi-square test. More generally, adequate power makes it more likely that a study will accurately depict variables' relations. We attempted to code studies' power directly, but power was not described in 90 of the 92 studies. To assess whether the studies' power was adequate, we consulted MacCallum, Browne, and Sugawara (1996), which lists the minimum sample size needed for adequate power (i.e., 0.80) at various degrees of freedom to assess close model fit. While this approach is somewhat crude, more precise assessment methods require statistics authors seldom report (cf. Satorra and Saris, 1985). Using MacCallum et al.'s (1996) guidelines, 29 studies (31%) had adequate power, while 43 (47%) did not. We could not assess power for 20 studies (22%) that did not report degrees of freedom and one that did not clearly report sample size.

Our findings suggest that many extant studies have obtained significant results despite insufficient sample sizes. A potential explanation for the number of studies that found significant results despite lacking adequate statistical power is that model respecifications were conducted.² Regardless of the underlying explanation, our findings are troubling. Any author building on a past study should consider its power when interpreting the findings. With this issue in mind, we note with asterisks in Table 1 the studies that we found to have adequate power.

Future scholars have several tools to ensure adequate power. One is to perform the calculations outlined by Satorra and Saris (1985); these calculations are complex and require the derivation of various alternative models (Chin, 1998). Authors also can use a program listed in MacCallum et al. (1996) to calculate needed sample sizes. A final approach is to use the MacCallum et al. (1996) guidelines that we used. One caution is that those power estimates may be inflated when using small samples (MacCallum et al., 1996).
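For readers who want to see how a sample-size calculation of this kind works, the following is a hedged sketch of an RMSEA-based power computation in the spirit of MacCallum et al. (1996). It is not the program those authors list. It assumes the test of close fit with a null RMSEA of 0.05, an alternative RMSEA of 0.08, and alpha of 0.05, and it relies on SciPy's noncentral chi-square distribution (`scipy.stats.ncx2`). The function names and the example degrees of freedom are illustrative.

```python
from scipy.stats import ncx2


def rmsea_power(n, df, rmsea_null=0.05, rmsea_alt=0.08, alpha=0.05):
    """Power of the test of close fit for sample size n and model df."""
    # Noncentrality parameters implied by each RMSEA value.
    nc_null = (n - 1) * df * rmsea_null ** 2
    nc_alt = (n - 1) * df * rmsea_alt ** 2
    # Critical value of the test statistic under the null hypothesis.
    critical = ncx2.ppf(1 - alpha, df, nc_null)
    # Probability of exceeding it when the alternative is true.
    return float(ncx2.sf(critical, df, nc_alt))


def minimum_sample_size(df, target_power=0.80, n_max=20000):
    """Smallest n (by linear search) whose power reaches the target."""
    for n in range(10, n_max + 1):
        if rmsea_power(n, df) >= target_power:
            return n
    raise ValueError("target power not reached within n_max")


# Hypothetical model with 100 degrees of freedom.
df = 100
for n in (100, 150, 200):
    print(f"n = {n}: power = {rmsea_power(n, df):.2f}")
print("minimum n for power 0.80:", minimum_sample_size(df))
```

Because power depends on the degrees of freedom as well as on sample size, a study can only be assessed this way if both are reported, which is why power could not be evaluated for some of the studies in Table 1.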

Model respecification

Model respecification occurs when one tests a proposed model and then seeks to improve model fit, often through adding or removing paths among constructs. Respecification is common in the social sciences because a priori models often do not adequately fit the data, but respecification is also controversial. Anderson and Gerbing (1988) argue that respecifications should be based on theory and content considerations in order to avoid exploiting sampling error to achieve satisfactory goodness of fit. Chin (1998) and Kelloway (1995) assert that changes should not only be theoretically justified, but also validated with a new sample. Brannick (1995) argues that respecifications should not be done at all. Indeed, fit indices assume such searches have not been done. Thus, after a specification search, it is unclear how to interpret an index score.³ If theoretical justification for modifications exists, alternative models should have been proposed a priori rather than making a posteriori changes.

² We appreciate an anonymous reviewer for providing this idea.

We coded whether specification searches were reported. Among the 92 studies, 43 (47%) listed a respecification. For those 43 studies, we coded the following: (1) if authors explicitly stated that the specification searches were exploratory in nature (16 did, 17%); (2) if the changes in the specification searches were validated on a hold-out sample (2 were, 2%); and (3) if the authors cited theoretical reasons as justification for the changes (18 did, 20%). While these results were disconcerting, there was some good news. Authors reported performing specification searches less frequently in recent studies (22 of 60, 37% vs. 21 of 32, 66%; z = 2.65; p < 0.01), perhaps reflecting the increasing robustness of strategy theory over time.

Equivalent models

For any proposed structural model, other structural models tested using the same data may suggest different relationships among latent constructs, but still yield equivalent levels of fit. These alternative models may be radically different, leading MacCallum et al. (1993) to assert that the existence of equivalent models could call into question the conclusions drawn from most SEM studies. Thus, authors should acknowledge the possible existence of equivalent models as a limitation of results obtained via SEM (Breckler, 1990; MacCallum et al., 1993).

In 24 of the 92 studies, SEM was used only for measurement validation via confirmatory factor analysis (CFA). Eleven studies were focused solely on measurement validation. Thirteen studies followed the CFA with other techniques (e.g., regression) to examine relationships. In the studies where SEM usage was limited to measurement validation, no relationships among latent variables were examined using SEM; thus, we did not evaluate the authors' treatment of equivalent models (MacCallum et al., 1993). Only one of the remaining 68 studies (2%) explicitly acknowledged that the possible existence of equivalent structural models is problematic. Beyond acknowledging equivalent models, other responses are available. For example, Anderson and Gerbing (1988) outline a two-step approach for identifying and evaluating equivalent models that is popular in the psychology and marketing literatures. Also, simultaneous relations models can be tested wherein, for example, variable A influences variable B, and B influences A. Parameters can be identified if additional variables influence A that do not influence B or vice versa.⁴

³ We thank an anonymous reviewer for this insight.

Reporting

Ideally, articles would provide enough detail to permit replication by others because replication serves as a safeguard against the acceptance of erroneous findings. Chin (1998: 8) suggests that the input matrix, the software and version used, starting values, number of iterations, the models tested, computational options used, and anomalies encountered during the analytic process should be described. No single study listed all of these items.

Readers can still gain a basic understanding of the analysis performed if the input matrix and software are listed. Although most statistical packages assume the use of the covariance matrix (Steiger, 2001), in practice both covariance and correlation matrices are used as the input for SEM. The majority of studies (n = 75, 81%) did not identify whether a correlation or covariance matrix was used. Correlation matrices were used in eight studies (9%) and covariance matrices were used in nine studies (10%). Whether the input matrix was provided was also coded. The input matrix was reproduced in eight studies (9%) and not reproduced in 84 studies (91%).

Different software packages and versions of the same software package sometimes have different default parameters. Thus, authors should provide the name and version of the software. The software package used was reported in 81 studies (88%). LISREL was used in 66 studies (72%), EQS was used in nine studies (10%), and CALIS, AMOS, and Mplus were each used in two studies (2%). The version of the software used was reported in 51 studies (55%), but not in 41 studies (45%). Only five studies (5%) reported both the input matrix and the software used. Overall, the reporting practices to date inhibit strategic management scholars' ability to replicate and build on past findings.

⁴ We thank an anonymous reviewer for this insight.

CONCLUSIONS

One limitation of our study is that we could only assess the application of SEM based on what published articles reported. In some cases, authors may have made appropriate decisions and discussed these decisions with referees during the review process, but not included this material in the article. Yet, because the discipline desires knowledge accumulation, adequate information about statistical procedures should be made available. Unfortunately, our results show that such information is often not provided. In Table 2, we offer reviewers and editors a suggested checklist for assessing SEM studies. To enhance knowledge development, gatekeepers should require that authors address all of the issues listed. Recognizing the limitations on journal space, we also recommend that journals provide vehicles for communicating space-consuming details of statistical analyses (such as input matrices) to interested readers. For example, Management Science currently offers authors space on a journal-sponsored Internet web site.

Table 2. Checklist of issues that should be reported in SEM studies

1. Sample issues
(a) General description
(b) Number of observations
(c) Distribution of sample
(d) Statistical power

2. Measurement issues
(a) Reliability of measures
(b) Measures of discriminant validity
(c) Measures of convergent validity

3. Reproducibility issues
(a) Input matrix
(b) Name and version of software package used
(c) Starting values
(d) Computational options used
(e) Analytical anomalies encountered

4. Equivalent models issues
(a) Potential existence acknowledged as a limitation

5. Respecification issues
(a) Changes cross-validated
(b) Respecified models not given status of hypothesized model

In closing, we believe that significant opportunities remain for SEM to generate insights within strategic management. For example, the field's core constructs (e.g., strategy, performance) are multidimensional and the relationships among them are complex (Summer et al., 1990). Given SEM's ability to map and assess a web of relationships, it offers vast potential as a tool to diagnose key links. Also, SEM's ability to tap intangible latent variables (cf. Hult and Ketchen, 2001) might help unveil the unobservable constructs that are central to the resource-based view, transactions costs economics, and agency theory (Godfrey and Hill, 1995). These outcomes can only be realized, however, if the technique is used prudently. Our hope is that future scholars will use the ideas presented here in order to maximize SEM's utility.

ACKNOWLEDGEMENTS

The authors wish to acknowledge the help of James Combs, Karl Joreskog, Bruce Lamont, Robert MacCallum, Timothy Palmer, and Randy Settoon with this paper.

REFERENCES

Anderson JC. 1987. An approach for confirmatory measurement and structural equation modeling of organizational properties. Management Science 33(April): 525-541.

Anderson JC, Gerbing DW. 1988. Structural equation modeling in practice: a review and recommended two-step approach. Psychological Bulletin 103(3): 411-423.

Bagozzi RP, Phillips LW. 1982. Representing and testing organizational theories: a holistic construal. Administrative Science Quarterly 27(September): 459-489.

Bollen KA. 1989. Structural Equations with Latent Variables. Wiley: New York.

Brannick MT. 1995. Critical comments on applying covariance structure modeling: introduction. Journal of Organizational Behavior 16: 201-213.

Breckler SJ. 1990. Applications of covariance structure modeling in psychology: cause for concern. Psychological Bulletin 107(2): 260-273.

Chin W. 1998. Issues and opinion on structural equation modeling. MIS Quarterly 22(1): 7-16.

Farh JL, Hoffman RC, Hegarty WH. 1984. Assessing environmental scanning at the subunit level: a multitrait-multimethod analysis. Decision Sciences 15: 197-219.

Ford JK, MacCallum RC, Tait M. 1986. The application of exploratory factor analysis in applied psychology: a critical review and analysis. Personnel Psychology 39: 291-314.

Fornell C, Larcker DG. 1981. Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research 18: 39-50.

Garver MS, Mentzer JT. 1999. Logistics research methods: employing structural equation modeling to test for construct validity. Journal of Business Logistics 20(1): 33-58.

Gerbing DW, Anderson JC. 1992. Monte Carlo evaluations of goodness of fit indices for structural equation models. Sociological Methods and Research 21(2): 132-160.

Godfrey PC, Hill CWL. 1995. The problem of unobservables in strategic management research. Strategic Management Journal 16(7): 519-534.

Hayduk LA. 1987. Structural Equation Modeling with LISREL: Essentials and Advances. Johns Hopkins Press: Baltimore, MD.

Hu L, Bentler PM. 1999. Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Structural Equation Modeling 6(1): 1-55.

Hult GTM, Ketchen DJ. 2001. Does market orientation matter? A test of the relationship between positional advantage and performance. Strategic Management Journal 22(9): 899-906.

Joreskog KG, Sorbom D. 1996. LISREL 8: User's Reference Guide. Scientific Software International: Chicago, IL.

Joreskog KG, Sorbom D, du Toit S, du Toit M. 1999. LISREL 8: New Statistical Features. Scientific Software International: Chicago, IL.

Kelloway EK. 1995. Structural equation modeling in perspective: introduction. Journal of Organizational Behavior 16: 215-224.

Ketchen DJ, Shook CL. 1996. The application of cluster analysis in strategic management research: an analysis and critique. Strategic Management Journal 17(6): 441-458.

MacCallum RC, Browne MW, Sugawara HM. 1996. Power analysis and determination of sample size for covariance structure modeling. Psychological Methods 1(2): 130-149.

MacCallum RC, Roznowski M, Necowitz L. 1992. Model modifications in covariance structure analysis: the problem of capitalization on chance. Psychological Bulletin 111(3): 490-504.

MacCallum RC, Wegener DT, Uchino BN, Fabrigar LR. 1993. The problem of equivalent models in applications of covariance structure analysis. Psychological Bulletin 114(1): 185-199.

MacMillan I. 1994. The emerging forum for business policy scholars. Journal of Business Venturing 9(2): 85-89.

Satorra A. 2001. Goodness of fit testing of structural equation models with multiple group data and nonnormality. In Structural Equation Modeling: Present and Future, Cudeck RC, du Toit S, Sorbom D (eds). Scientific Software International: Lincolnwood, IL; 231-256.

Satorra A, Saris WE. 1985. Power of the maximum likelihood ratio test in covariance structure analysis. Psychometrika 50(1): 83-90.

Schendel DE, Hofer CW. 1979. Strategic Management: A New View of Business Policy and Planning. Little, Brown: Boston, MA.

Summer CE, Bettis RA, Duhaime IH, Grant JH, Hambrick DC, Snow CC, Zeithaml CP. 1990. Doctoral education in the field of business policy and strategy. Journal of Management 16: 361-398.

Steenkamp JB, van Trijp H. 1991. The use of LISREL in validating marketing constructs. International Journal of Research in Marketing 8: 283-299.

Steiger J. 2001. Driving fast in reverse. Journal of the American Statistical Association 96: 331-338.

Tabachnick BG, Fidell LS. 1996. Using Multivariate Statistics. Harper Collins: New York.
