UVA CS 4501: Machine Learning Lecture 10: K-nearest ... · q Classificaon (supervised) q...

Transcript of UVA CS 4501: Machine Learning Lecture 10: K-nearest ... · q Classificaon (supervised) q...

UVACS4501:MachineLearning

Lecture10:K-nearest-neighborClassifier/Bias-VarianceTradeoff

Dr.YanjunQi

UniversityofVirginia

DepartmentofComputerScience

4/5/18

Dr.YanjunQi/UVACS

1

Wherearewe?èFivemajorsecFonsofthiscourse

q Regression(supervised)q ClassificaFon(supervised)q Unsupervisedmodelsq Learningtheoryq Graphicalmodels

4/5/18 2

Dr.YanjunQi/UVACS

ThreemajorsecFonsforclassificaFon

• We can divide the large variety of classification approaches into roughly three major types

1. Discriminative

- directly estimate a decision rule/boundary - e.g., support vector machine, decision tree, logistic regression, - e.g. neural networks (NN), deep NN

2. Generative: - build a generative statistical model - e.g., Bayesian networks, Naïve Bayes classifier 3. Instance based classifiers - Use observation directly (no models) - e.g. K nearest neighbors

4/5/18 3

Dr.YanjunQi/UVACS

Today:

ü K-nearestneighborü ModelSelecFon/BiasVarianceTradeoff

ü Bias-Variancetradeoffü Highbias?Highvariance?Howtorespond?

4/5/18 4

Dr.YanjunQi/UVACS

Unknown recordNearest neighbor classifiers

Requiresthreeinputs:1. Thesetofstored

trainingsamples2. Distancemetricto

computedistancebetweensamples

3. Thevalueofk,i.e.,thenumberofnearestneighborstoretrieve

4/5/18

Dr.YanjunQi/UVACS

5

Nearest neighbor classifiers

Toclassifyunknownsample:1. Computedistanceto

trainingrecords2. IdenFfyknearest

neighbors3. Useclasslabelsofnearest

neighborstodeterminetheclasslabelofunknownrecord(e.g.,bytakingmajorityvote)

Unknown record

4/5/18

Dr.YanjunQi/UVACS

6

Definition of nearest neighbor

X X X

(a) 1-nearest neighbor (b) 2-nearest neighbor (c) 3-nearest neighbor

k-nearestneighborsofasamplexaredatapointsthathavetheksmallestdistancestox

4/5/18

Dr.YanjunQi/UVACS

7

1-nearest neighbor

Voronoidiagram:partitioning of a

plane into regions based on distance to points in a specific subset of the plane.

4/5/18

Dr.YanjunQi/UVACS

8

• Compute distance between two points: – For instance, Euclidean distance

• Options for determining the class from nearest neighbor list – Take majority vote of class labels among the

k-nearest neighbors – Weight the votes according to distance

• example: weight factor w = 1 / d2

Nearest neighbor classification

∑ −=i

ii yxd 2)(),( yx

4/5/18

Dr.YanjunQi/UVACS

9

• Choosing the value of k: – If k is too small, sensitive to noise points – If k is too large, neighborhood may include (many)

points from other classes

Nearest neighbor classification

X

4/5/18

Dr.YanjunQi/UVACS

10

• Choosing the value of k: – If k is too small, sensitive to noise points – If k is too large, neighborhood may include points

from other classes

Nearest neighbor classification

X

4/5/18

Dr.YanjunQi/UVACS

11

• Scaling issues – Attributes may have to be scaled to prevent

distance measures from being dominated by one of the attributes

– Example: • height of a person may vary from 1.5 m to 1.8 m • weight of a person may vary from 90 lb to 300 lb • income of a person may vary from $10K to $1M

Nearest neighbor classification

4/5/18

Dr.YanjunQi/UVACS

12

• k-Nearest neighbor classifier is a lazy learner – Does not build model explicitly. – Classifying unknown samples is relatively

expensive.

• k-Nearest neighbor classifier is a local model, vs. global model like linear classifiers.

Nearest neighbor classification

4/5/18

Dr.YanjunQi/UVACS

13

K-Nearest Neighbor

Regression/ Classification

Local Smoothness

NA

NA

Training Samples

Task

Representation

Score Function

Search/Optimization

Models, Parameters

4/5/18 15

Dr.YanjunQi/UVACS

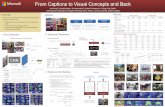

Decision boundaries in global vs. local models

Linear classification • global • stable • can be inaccurate

15-nearest neighbor

1-nearest neighbor

• local • accurate • unstable

What ultimately matters: GENERALIZATION

4/5/18 16

Dr.YanjunQi/UVACS

K=15 K=1

• Kactsasasmoother

Today:

ü K-nearestneighborü ModelSelecFon/BiasVarianceTradeoff

ü Bias-Variancetradeoffü Highbias?Highvariance?Howtorespond?

4/5/18 17

Dr.YanjunQi/UVACS

e.g. Training Error from KNN, Lesson Learned

• When k = 1, • No misclassifications (on

training): Overtraining

• Minimizing training error is not always good (e.g., 1-NN)

X1

X2

1-nearest neighbor averaging

5.0ˆ =Y

5.0ˆ <Y

5.0ˆ >Y

4/5/18 18

Dr.YanjunQi/UVACS

Review:MeanandVarianceofRandomVariable(RV)

• Mean(ExpectaFon):– DiscreteRVs:

– ConFnuousRVs:

( )XEµ =

E X( ) = vi *P X = vi( )vi∑

E X( ) = x* p x( )dx−∞

+∞

∫

4/5/18 19

Dr.YanjunQi/UVACS

AdaptFromCarols’probtutorial

Review:MeanandVarianceofRandomVariable(RV)

• Mean(ExpectaFon):– DiscreteRVs:

– ConFnuousRVs:

( )XEµ =

( ) ( )X P Xii iv

E v v= =∑

E X( ) = x* p x( )dx−∞

+∞

∫€

E(g(X)) = g(vi)P(X = vi)vi∑

E(g(X)) = g(x)* p(x)dx−∞

+∞

∫4/5/18 20

Dr.YanjunQi/UVACS

AdaptFromCarols’probtutorial

Review:MeanandVarianceofRV

• Variance:

– DiscreteRVs:

– ConFnuousRVs:

( ) ( ) ( )2X P Xi

i ivV v vµ= − =∑

V X( ) = x − µ( )2 p x( )−∞

+∞

∫ dx

Var(X) = E((X −µ)2 )

4/5/18 21

Dr.YanjunQi/UVACS

AdaptFromCarols’probtutorial

BIAS AND VARIANCE TRADE-OFF

• Bias – measures accuracy or quality of the estimator – low bias implies on average we will accurately

estimate true parameter from training data

• Variance – Measures precision or specificity of the estimator – Low variance implies the estimator does not change

much as the training set varies

22

4/5/18

Dr.YanjunQi/UVACS

4/5/18

Dr.YanjunQi/UVACS

23

BIAS AND VARIANCE TRADE-OFF for Mean Squared Error of

parameter estimation

Error due to incorrect

assumptions

Error due to variance of training

samples

E()=

(1)TrainingvsTestError• Trainingerror

canalwaysbereducedwhenincreasingmodelcomplexity,

ExpectedTestError

ExpectedTrainingError

264/5/18

Dr.YanjunQi/UVACS

(1)TrainingvsTestError• Trainingerror

canalwaysbereducedwhenincreasingmodelcomplexity,

• ExpectedTesterrorandCVerrorègoodapproximaFonofofEPE

ExpectedTestError

ExpectedTrainingError

274/5/18

Dr.YanjunQi/UVACS

Statistical Decision Theory (Extra)

• Random input vector: X • Random output variable:Y • Joint distribution: Pr(X,Y ) • Loss function L(Y, f(X))

• Expected prediction error (EPE):

€

EPE( f ) = E(L(Y, f (X))) = L(y, f (x))∫ Pr(dx,dy)

e.g. = (y − f (x))2∫ Pr(dx,dy)

One way to consider

generalization: by

considering the joint

population distribution

e.g. Squared error loss (also called L2 loss ) 4/5/18 28

Dr.YanjunQi/UVACS

(2)Bias-VarianceTrade-off

• Modelswithtoofewparametersareinaccuratebecauseofalargebias(notenoughflexibility).

• Modelswithtoomanyparametersareinaccuratebecauseofalargevariance(toomuchsensiFvitytothesamplerandomness).

Slidecredit:D.Hoiem4/5/18 29

Dr.YanjunQi/UVACS

(2.1) Regression: Complexity versus Goodness of Fit

HighestBiasLowestvarianceModelcomplexity=low

MediumBiasMediumVariance

Modelcomplexity=medium

SmallestBiasHighestvarianceModelcomplexity=high

304/5/18

Dr.YanjunQi/UVACS

LowVariance/HighBias

LowBias/HighVariance

(2.2) Classification,

Decision boundaries in global vs. local models

linear regression • global • stable • can be inaccurate

15-nearest neighbor

1-nearest neighbor

KNN • local • accurate • unstable

What ultimately matters: GENERALIZATION 4/5/18 31

LowBias/HighVariance

LowVariance/HighBias

Dr.YanjunQi/UVACS

Model “bias” & Model “variance”

• Middle RED: – TRUE function

• Error due to bias: – How far off in general

from the middle red

• Error due to variance: – How wildly the blue

points spread

4/5/18 33

Dr.YanjunQi/UVACS

Model “bias” & Model “variance”

• Middle RED: – TRUE function

• Error due to bias: – How far off in general

from the middle red

• Error due to variance: – How wildly the blue

points spread

4/5/18 34

Dr.YanjunQi/UVACS

needtomakeassumpFonsthatareabletogeneralize

• Components – Bias: how much the average model over all training sets differ from

the true model? • Error due to inaccurate assumptions/simplifications made by the

model – Variance: how much models estimated from different training sets

differ from each other

Slidecredit:L.Lazebnik4/5/18 37

Dr.YanjunQi/UVACS

Today:

ü K-nearestneighborü ModelSelecFon/BiasVarianceTradeoff

ü Bias-Variancetradeoffü Highbias?Highvariance?Howtorespond?

4/5/18 38

Dr.YanjunQi/UVACS

needtomakeassumpFonsthatareabletogeneralize

• Underfitting: model is too “simple” to represent all the relevant class characteristics – High bias and low variance – High training error and high test error

• Overfitting: model is too “complex” and fits irrelevant characteristics (noise) in the data – Low bias and high variance – Low training error and high test error

Slidecredit:L.Lazebnik4/5/18 39

Dr.YanjunQi/UVACS

(1)Highvariance

4/5/18 40

• TesterrorsFlldecreasingasmincreases.Suggestslargertrainingsetwillhelp.

• Largegapbetweentrainingandtesterror.

• Low training error and high test error Slidecredit:A.Ng

Dr.YanjunQi/UVACS

CouldalsouseCrossVError

Howtoreducevariance?

• Chooseasimplerclassifier

• Regularizetheparameters

• Getmoretrainingdata

• Trysmallersetoffeatures

Slidecredit:D.Hoiem4/5/18 41

Dr.YanjunQi/UVACS

(2)Highbias

4/5/18 42

•Eventrainingerrorisunacceptablyhigh.•Smallgapbetweentrainingandtesterror.

High training error and high test error Slidecredit:A.Ng

Dr.YanjunQi/UVACS

CouldalsouseCrossVError

HowtoreduceBias?

43

• E.g.- GetaddiFonalfeatures

- Tryaddingbasisexpansions,e.g.polynomial

- Trymorecomplexlearner

4/5/18

Dr.YanjunQi/UVACS

(3)Forinstance,iftryingtosolve“spamdetecFon”using(Extra)

4/5/18 44

L2-

Slidecredit:A.Ng

Dr.YanjunQi/UVACS

Ifperformanceisnotasdesired

(4)ModelSelecFonandAssessment

• ModelSelecFon– EsFmaFngperformancesofdifferentmodelstochoosethebestone

• ModelAssessment– Havingchosenamodel,esFmaFngthepredicFonerroronnewdata

454/5/18

Dr.YanjunQi/UVACS

ModelSelecFonandAssessment(Extra)

• WhenDataRichScenario:Splitthedataset

• WhenInsufficientdatatosplitinto3parts– ApproximatevalidaFonstepanalyFcally

• AIC,BIC,MDL,SRM– Efficientreuseofsamples

• CrossvalidaFon,bootstrap

Model Selection Model assessment

Train Validation Test

464/5/18

Dr.YanjunQi/UVACS

TodayRecap:

ü K-nearestneighborü ModelSelecFon/BiasVarianceTradeoff

ü Bias-Variancetradeoffü Highbias?Highvariance?Howtorespond?

4/5/18 47

Dr.YanjunQi/UVACS

References

q Prof.Tan,Steinbach,Kumar’s“IntroducFontoDataMining”slide

q Prof.AndrewMoore’sslidesq Prof.EricXing’sslidesq HasFe,Trevor,etal.Theelementsofsta.s.callearning.Vol.2.No.1.NewYork:Springer,2009.

4/5/18 48

Dr.YanjunQi/UVACS

Statistical Decision Theory (Extra)

• Random input vector: X • Random output variable:Y • Joint distribution: Pr(X,Y ) • Loss function L(Y, f(X))

• Expected prediction error (EPE): •

€

EPE( f ) = E(L(Y, f (X))) = L(y, f (x))∫ Pr(dx,dy)

e.g. = (y − f (x))2∫ Pr(dx,dy)Consider

population distribution

e.g. Squared error loss (also called L2 loss ) 4/5/18 49

Dr.YanjunQi/UVACS

Expected prediction error (EPE)

• For L2 loss:

• For 0-1 loss: L(k, ℓ) = 1-dkl

EPE( f ) = E(L(Y, f (X))) = L(y, f (x))∫ Pr(dx,dy)Consider joint distribution

!!

f̂ (X )=C k !if!Pr(C k |X = x)=max

g∈CPr(g|X = x)

Bayes Classifier

e.g. = (y− f (x))2∫ Pr(dx,dy)

Conditional mean !! f̂ (x)=E(Y |X = x)

under L2 loss, best estimator for EPE (Theoretically) is :

e.g. KNN NN methods are the direct implementation (approximation )

4/5/18 50

Dr.YanjunQi/UVACS

51

EXPECTED PREDICTION ERROR for L2 Loss

• Expected prediction error (EPE) for L2 Loss:

• Since Pr(X,Y )=Pr(Y |X )Pr(X ), EPE can also be written as

• Thus it suffices to minimize EPE pointwise

EPE( f ) = E(Y − f (X))2 = (y− f (x))2∫ Pr(dx,dy)

)|)](([EE)(EPE 2| XXfYf XYX −=

)|]([Eminarg)( 2| xXcYxf XYc =−=

)|(E)( xXYxf ==Solution for Regression:

Best estimator under L2 loss: conditional expectation

Conditional mean

Solution for kNN:

KNN FOR MINIMIZING EPE

• We know under L2 loss, best estimator for EPE (theoretically) is :

– Nearest neighbors assumes that f(x) is well approximated by a locally constant function.

52

Conditional mean )|(E)( xXYxf ==

4/5/18

Dr.YanjunQi/UVACS

Review : WHEN EPE USES DIFFERENT LOSS

Loss Function Estimator

L2

L1

0-1

(Bayes classifier / MAP)

53 4/5/18 Dr.YanjunQi/UVACS

Decomposition of EPE

– When additive error model: – Notations

• Output random variable: • Prediction function: • Prediction estimator:

Irreducible / Bayes error

54

MSE component of f-hat in estimating f

4/5/18

Dr.YanjunQi/UVACS

Bias-VarianceTrade-offforEPE:

EPE (x_0) = noise2 + bias2 + variance

Unavoidable error

Error due to incorrect

assumptions

Error due to variance of training

samples

Slidecredit:D.Hoiem4/5/18 55

Dr.YanjunQi/UVACS

MSEofModel,aka,Risk

4/5/18

Dr.YanjunQi/UVACS

58hpp://www.stat.cmu.edu/~ryanFbs/statml/review/modelbasics.pdf

CrossValidaFonandVarianceEsFmaFon

4/5/18

Dr.YanjunQi/UVACS

59

hpp://www.stat.cmu.edu/~ryanFbs/statml/review/modelbasics.pdf

![Naïve&Bayes&Classificaon · On&Naïve&Bayes&! Text classification – Spam filtering (email classification) [Sahami et al. 1998] – Adapted by many commercial spam filters –](https://static.fdocuments.us/doc/165x107/5f5af3ea4065cd3cfc5c5f11/navebayesclassiicaon-onnavebayes-text-classification.jpg)

![AnOverviewof TransferLearning - Nanjing Universitylamda.nju.edu.cn/conf/mlss2014/(X(1)S(kcfwaxyqgaqzl2...JMLR 2009] • Transfer$learning$for$ classificaon,$and$ regression$problems.$](https://static.fdocuments.us/doc/165x107/5b05aa8b7f8b9ad1768bb2ef/anoverviewof-transferlearning-nanjing-x1skcfwaxyqgaqzl2jmlr-2009-transferlearningfor.jpg)