Using Infiniband for High Speed Interconnect


8/4/2019 Using Infiniband for High Speed Interconnect


Abstract
Introduction
InfiniBand technology
  InfiniBand components
  InfiniBand software architecture
    MPI
    IPoIB
    RDMA-based protocols
    RDS
  InfiniBand hardware architecture
  Link operation
Scale-out clusters built on InfiniBand and HP technology
Conclusion
Appendix A: Glossary
For more information
Call to action

Using InfiniBand for a scalable compute infrastructure

technology brief, 3rd edition


Abstract

With business models constantly changing to keep pace with today's Internet-based, global economy, IT organizations are continually challenged to provide customers with high performance platforms while controlling their cost. An increasing number of enterprise businesses are implementing scale-out architectures as a cost-effective approach for scalable platforms, not just for high performance computing (HPC) but also for financial services and Oracle-based database applications.

InfiniBand (IB) is one of the most important technologies that enable the adoption of cluster computing. This technology brief describes InfiniBand as an interconnect technology used in cluster computing, provides basic technical information, and explains the advantages of implementing InfiniBand-based scale-out architectures.

Introduction

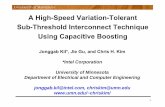

The overall performance of enterprise servers is determined by the synergetic relationship between three main subsystems: processing, memory, and input/output. The multiprocessor architecture used in the latest single-server systems (Figure 1) provides a high degree of parallel processing capability. However, multiprocessor server architecture cannot scale cost effectively to a large number of processing cores. Scale-out cluster computing that builds an entire system by connecting stand-alone systems with interconnect technology has become widely implemented for HPC and enterprise data centers around the world.

Figure 1. Architecture of a dual-processor single server (node)

[Figure 1 labels: Processor 1 and Processor 2 (each with CPU(s) and a memory controller), memory, and an input/output subsystem with a PCI-X bridge and a PCIe port controller. I/O bandwidths shown: Ultra-3 SCSI, 320 MBps; Fibre Channel, 400 MBps; Gigabit Ethernet, > 1 Gbps; InfiniBand, > 10 Gbps (4x link, single data rate).]


Figure 2 shows an example of cluster architecture that integrates computing, storage, and visualization functions into a single system. Applications are usually distributed to compute nodes through job scheduling tools.

Figure 2. Sample clustering architecture

Scale-out systems allow infrastructure architects to meet performance and cost goals, but interconnect performance, scalability, and reliability are key areas that must be carefully considered. A cluster infrastructure works best when built with an interconnect technology that scales easily, reliably, and economically with system expansion.

Ethernet is a pervasive, mature interconnect technology that can be cost-effective for some application workloads. The emergence of 10-Gigabit Ethernet (10GbE) offers a cluster interconnect that meets higher bandwidth requirements than 1GbE can provide. However, 10GbE still lags the latest InfiniBand technology in latency and bandwidth performance, and lacks native support for the fat-tree and mesh topologies used in scale-out clusters. InfiniBand remains the interconnect of choice for highly parallel environments where applications require low latency and high bandwidth across the entire fabric.


InfiniBand technology

InfiniBand is an industry-standard, channel-based architecture in which congestion-management capabilities and zero-copy data transfers using remote direct memory access (RDMA) are core capabilities, resulting in high-speed, low-latency interconnects for scale-out compute infrastructures.

InfiniBand uses a multi-layer architecture to transfer data from one node to another. In the InfiniBand layer model (Figure 3), separate layers perform different tasks in the message passing process.

The upper layer protocols (ULPs) work closest to the operating system and application; they define the services and affect how much software overhead the data transfer will require. The InfiniBand transport layer is responsible for communication between applications. The transport layer splits the messages into data payloads and encapsulates each data payload and an identifier of the destination node into one or more packets. Packets can contain data payloads of up to four kilobytes.

The packets are passed to the network layer, which selects a route to the destination node and, if necessary, attaches the route information to the packets. The data link layer attaches a local identifier (LID) to the packet for communication at the subnet level. The physical layer transforms the packet into an electromagnetic signal based on the type of network media: copper or fiber.
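The segmentation step performed by the transport layer can be illustrated with a minimal Python sketch. It is not InfiniBand code: the `Packet` class, its field names, and the `segment` and `reassemble` helpers are hypothetical, and the only figure taken from the text is the four-kilobyte payload limit.

```python
from dataclasses import dataclass
from typing import List

MAX_PAYLOAD = 4096  # bytes; the text gives "up to four kilobytes" per packet


@dataclass
class Packet:
    dest_id: int     # identifier of the destination node (hypothetical field name)
    seq: int         # position of this payload within the message
    payload: bytes


def segment(message: bytes, dest_id: int, max_payload: int = MAX_PAYLOAD) -> List[Packet]:
    """Split a message into packets whose payloads never exceed max_payload."""
    if not message:
        return [Packet(dest_id, 0, b"")]
    return [
        Packet(dest_id, seq, message[offset:offset + max_payload])
        for seq, offset in enumerate(range(0, len(message), max_payload))
    ]


def reassemble(packets: List[Packet]) -> bytes:
    """Rebuild the original message from its packets, in sequence order."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


packets = segment(b"x" * 10000, dest_id=7)
print([len(p.payload) for p in packets])  # [4096, 4096, 1808]
```

A 10,000-byte message therefore travels as three packets, each carrying the same destination identifier.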

Figure 3. Distributed computing using InfiniBand architecture

InfiniBand has these important characteristics:

Very high bandwidth: up to 40 Gbps Quad Data Rate (QDR)

Low latency end-to-end communication: MPI ping-pong latency approaching 1 microsecond

Hardware-based protocol handling, resulting in faster throughput and low CPU overhead due to efficient OS bypass and RDMA

Native support for fat-tree and other common mesh topologies in fabric design


InfiniBand components

InfiniBand architecture involves four key components:

Host channel adapter

Subnet manager

Target channel adapter

InfiniBand switch

A host node or server requires a host channel adapter (HCA) to connect to an InfiniBand infrastructure. An HCA can be a card installed in an expansion slot or integrated onto the host's system board. An HCA can communicate directly with another HCA, with a target channel adapter, or with an InfiniBand switch.

InfiniBand uses subnet manager (SM) software to manage the InfiniBand fabric and to monitor interconnect performance and health at the fabric level. A fabric can be as simple as a point-to-point connection or multiple connections through one or more switches. The SM software resides on a node or switch within the fabric, and provides switching and configuration information to all of the switches in the fabric. Additional backup SMs may be located within the fabric for failover should the primary SM fail. All other nodes in the fabric will contain an SM agent that processes management data. Managers and agents communicate using management datagrams (MADs).

A target channel adapter (TCA) is used to connect an external storage unit or I/O interface to an InfiniBand infrastructure. The TCA includes an I/O controller specific to the device's protocol (SCSI, Fibre Channel, Ethernet, etc.) and can communicate with an HCA or an InfiniBand switch.

An InfiniBand switch provides scalability by allowing a number of HCAs, TCAs, and other IB switches to connect to an InfiniBand infrastructure. The switch handles network traffic by checking the local link header of each data packet received and forwarding the packet to the proper destination.

The most basic InfiniBand infrastructure will consist of host nodes or servers equipped with HCAs, an InfiniBand switch, and subnet manager software. More expansive networks will include multiple switches.
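The relationship between the subnet manager and a switch can be pictured with a short Python sketch: the SM supplies forwarding information, and the switch looks up each packet's destination identifier to choose an output port. The `Switch` class, its method names, and the LID and port numbers are all hypothetical; this is a toy model of the behavior described above, not vendor software.

```python
class Switch:
    """Toy switch: forwards by looking up the destination LID in a table."""

    def __init__(self, name: str):
        self.name = name
        self.forwarding_table = {}  # destination LID -> output port

    def program(self, table: dict) -> None:
        """Install forwarding entries, as a subnet manager would."""
        self.forwarding_table = dict(table)

    def forward(self, dest_lid: int) -> int:
        """Return the output port for a packet addressed to dest_lid."""
        try:
            return self.forwarding_table[dest_lid]
        except KeyError:
            raise LookupError(f"{self.name}: no route to LID {dest_lid}") from None


switch = Switch("sw0")
switch.program({1: 3, 2: 3, 5: 8})  # hypothetical LIDs and port numbers
print(switch.forward(5))  # 8
```

Keeping the table outside the forwarding logic mirrors the division of labor in the text: the switch only forwards, while configuration comes from the SM.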

InfiniBand software architecture

InfiniBand, like Ethernet, uses a multi-layer processing stack to transfer data between nodes. InfiniBand architecture, however, provides OS-bypass features, such as offloading communication processing duties and RDMA operations, as core capabilities and offers greater adaptability through a variety of services and protocols.

While the majority of existing InfiniBand clusters operate on the Linux platform, drivers and HCA stacks are also available for Microsoft Windows, HP-UX, Solaris, and other operating systems from various InfiniBand hardware and software vendors.

The layered software architecture of the HCA allows writing code without specific hardware in mind. The functionality of an HCA is defined by its verb set, which is a table of commands used by the application programming interface (API) of the operating system being run. A number of services and software protocols are available (Figure 4) and, depending on type, can be implemented from user space or from the kernel.


Figure 4. InfiniBand software layers

As indicated in Figure 4, InfiniBand supports a variety of upper level protocols (ULPs) and libraries that have evolved since the introduction of InfiniBand. The key protocols are discussed in the following pages.

[Figure 4 labels. Application level: IP-based access, sockets-based access, block storage access, file system access, clustered DB, MPIs, Open SM, diag tools. User APIs (user space): uDAPL, SDP library, MAD API, Open Fabrics verbs, CMA, and API. Upper level protocols (kernel space): IPoIB, VNIC, RDS, and RDMA-based protocols (kDAPL, SDP, SRP, iSER, NFS). Mid-layer modules: connection manager, SA client, SMA, MAD services, Open Fabrics verbs and API. Provider: hardware-specific driver. Hardware: InfiniBand HCA.]


MPI

The message passing interface (MPI) protocol is a library of calls used by applications in a parallel computing environment to communicate between nodes. MPI calls are optimized for performance in a compute cluster that takes advantage of high-bandwidth and low-latency interconnects. In parallel computing environments, code is executed across multiple nodes simultaneously. MPI facilitates the communication and synchronization among these jobs across the entire cluster.

To take advantage of the features of MPI, an application must be written and compiled to include the libraries from the particular MPI implementation used. Several implementations of MPI are on the market:

HP-MPI

Intel MPI

Publicly available versions such as MVAPICH2 and Open MPI

MPI has become the de facto IB ULP standard. In particular, HP-MPI has been accepted by more independent software vendors (ISVs) than any other commercial MPI. By using shared libraries, applications built on HP-MPI can transparently select interconnects, which significantly reduces the effort for applications to support various popular interconnect technologies. HP-MPI is supported on HP-UX, Linux, Tru64 UNIX, and Microsoft Windows Compute Cluster Server 2003.

IPoIB

Internet Protocol over InfiniBand (IPoIB) allows the use of TCP/IP or UDP/IP-based applications between nodes connected to an InfiniBand fabric. IPoIB supports IPv4 or IPv6 protocols and addressing schemes. An InfiniBand HCA is configured through the operating system as a traditional network adapter that can use all of the standard IP-based applications such as PING, FTP, and TELNET. IPoIB does not support the RDMA features of InfiniBand. Communication between IB nodes using IPoIB and Ethernet nodes using IP will require a gateway/router interface.
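Because IPoIB presents the HCA as an ordinary network adapter, unmodified sockets code should run over it. The sketch below is plain Python TCP code with nothing InfiniBand-specific in it, which is the point. It assumes that, on a real cluster, `HOST` would be the IP address assigned to the IPoIB interface (often named `ib0` on Linux); loopback is used here only to keep the example runnable, and the helper names are hypothetical.

```python
import socket
import threading

# Assumption: on a real cluster this would be the address assigned to the
# IPoIB interface. Loopback keeps the sketch runnable anywhere.
HOST = "127.0.0.1"


def echo_once(server: socket.socket) -> None:
    """Accept one connection and echo back whatever arrives first."""
    conn, _ = server.accept()
    with conn:
        conn.sendall(conn.recv(1024))


def round_trip(payload: bytes) -> bytes:
    """Send payload to a local echo server over TCP and return the reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind((HOST, 0))  # port 0: let the OS pick a free port
        server.listen(1)
        port = server.getsockname()[1]
        worker = threading.Thread(target=echo_once, args=(server,))
        worker.start()
        with socket.create_connection((HOST, port), timeout=5) as client:
            client.sendall(payload)
            reply = client.recv(1024)
        worker.join()
    return reply


print(round_trip(b"ping"))
```

Traffic sent this way passes through the normal TCP/IP stack, so it does not gain the RDMA benefits described in the next section.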

RDMA-based protocols

DAPL - The Direct Access Programming Library (DAPL) allows low-latency RDMA communications between nodes. The uDAPL provides user-level access to RDMA functionality on InfiniBand while the kDAPL provides the kernel-level API. To use RDMA for the data transfers between nodes, applications must be written with a specific DAPL implementation.

SDP - Sockets Direct Protocol (SDP) is an RDMA protocol that operates from the kernel. Applications must be written to take advantage of the SDP interface. SDP is based on the WinSock Direct Protocol used by Microsoft server operating systems and is suited for connecting databases to application servers.

SRP - SCSI RDMA Protocol (SRP) is a data movement protocol that encapsulates SCSI commands over InfiniBand for SAN networking. Operating from the kernel level, SRP allows copying SCSI commands between systems using RDMA for low-latency communications with storage systems.

iSER - iSCSI Enhanced RDMA (iSER) is a storage standard originally specified on the iWARP RDMA technology and now officially supported on InfiniBand. The iSER protocol provides iSCSI manageability to RDMA storage operations.

NFS - The Network File System (NFS) is a storage protocol that has evolved since its inception in the 1980s, undergoing several generations of development while remaining network-independent. With the development of high-performance I/O such as PCIe and the significant advances in memory subsystems, NFS over RDMA on InfiniBand offers low-latency performance for transparent file sharing across different platforms.


RDS

Reliable Datagram Sockets (RDS) is a low-overhead, low-latency, high-bandwidth transport protocol that yields high performance for cluster interconnect-intensive environments such as Oracle Database or Real Application Cluster (RAC). The RDS protocol offloads error-checking operations to the InfiniBand fabric, freeing more CPU time for application processing.

InfiniBand hardware architecture

InfiniBand fabrics use high-speed, bi-directional serial interconnects between devices. Interconnect bandwidth and distances are characteristics determined by the type of cabling and connections employed. The bi-directional links contain dedicated send and receive lanes for full duplex operation.

For quad data rate (QDR) operation, each lane has a signaling rate of 10 Gbps. Bandwidth is increased by adding more lanes per link. InfiniBand interconnect types include 1x, 4x, or 12x wide full-duplex links (Figure 5). The 4x is the most popular configuration and provides a theoretical full-duplex QDR bandwidth of 80 (2 x 40) gigabits per second.

Figure 5. InfiniBand link types

Encoding overhead in the data transmission process limits the maximum data bandwidth per link to approximately 80 percent of the signal rate. However, the switched fabric design of InfiniBand allows bandwidth to grow or aggregate as links and nodes are added. Double data rate (DDR) and especially quad data rate (QDR) operation increase bandwidth significantly (Table 1).

Table 1. InfiniBand interconnect bandwidth

Link    SDR signal rate    DDR signal rate    QDR signal rate

1x      2.5 Gbps           5 Gbps             10 Gbps

4x      10 Gbps            20 Gbps            40 Gbps

12x     30 Gbps            60 Gbps            120 Gbps
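The entries in Table 1 follow from simple arithmetic: the per-lane SDR signal rate of 2.5 Gbps, multiplied by the data-rate factor and by the number of lanes. The Python sketch below reproduces that arithmetic and applies the approximately 80 percent encoding efficiency quoted in the text; the function and constant names are hypothetical.

```python
SDR_LANE_GBPS = 2.5                              # per-lane SDR signal rate (Table 1)
RATE_MULTIPLIER = {"SDR": 1, "DDR": 2, "QDR": 4}
ENCODING_EFFICIENCY = 0.8                        # "approximately 80 percent" of signal rate


def signal_rate_gbps(lanes: int, rate: str) -> float:
    """Signal rate in one direction for a link of the given width and speed."""
    return lanes * SDR_LANE_GBPS * RATE_MULTIPLIER[rate]


def data_rate_gbps(lanes: int, rate: str) -> float:
    """Approximate usable data bandwidth in one direction after encoding overhead."""
    return signal_rate_gbps(lanes, rate) * ENCODING_EFFICIENCY


for lanes in (1, 4, 12):
    for rate in ("SDR", "DDR", "QDR"):
        print(f"{lanes}x {rate}: signal {signal_rate_gbps(lanes, rate):g} Gbps, "
              f"data about {data_rate_gbps(lanes, rate):g} Gbps per direction")
```

For a 4x QDR link this gives a 40 Gbps signal rate and roughly 32 Gbps of data bandwidth in each direction; the 80 Gbps full-duplex figure quoted earlier is twice the one-direction signal rate.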

[Figure 5 labels: 1x link (1 send channel, 1 receive channel, each using differential voltage or fiber optic signaling); 4x link; 12x link.]


The operating distances of InfiniBand interconnects are contingent upon the type of cabling (copper or fiber optic), the connector, and the signal rate. The common connectors used in InfiniBand interconnects today are CX4 and quad small-form-factor pluggable (QSFP) as shown in Figure 6.

Figure 6. InfiniBand connectors

Fiber optic cable with CX4 connectors generally offers the greatest distance capability. The adoption of 4X DDR products is widespread, and deployment of QDR systems is expected to increase.

Link operation

Each link can be divided (multiplexed) into a set of virtual lanes, similar to highway lanes (Figure 7). Each virtual lane provides flow control and allows a pair of devices to communicate autonomously. Typical implementations have each link accommodating eight lanes (see footnote 1); one lane is reserved for fabric management and the other lanes for packet transport. The virtual lane design allows an InfiniBand link to share bandwidth between various sources and targets simultaneously. For example, if a 10 Gb/s link were divided into five virtual lanes, each lane would have a bandwidth of 2 Gb/s. The InfiniBand architecture defines a virtual lane mapping algorithm to ensure inter-operability between end nodes that support different numbers of virtual lanes.

Figure 7. InfiniBand virtual lane operation

1 The IBTA specification defines a minimum of two and a maximum of 16 virtual lanes per link.
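The sharing of one link among virtual lanes can be sketched in Python as follows. The round-robin interleaving is an assumption made for illustration only (the text does not describe how lanes are arbitrated), and the function names are hypothetical. The `equal_share_gbps` helper reproduces the 10 Gb/s over five lanes example from the text.

```python
from collections import deque


def multiplex(lanes: dict) -> list:
    """Interleave queued packets from each virtual lane in round-robin order.

    Assumption: simple round-robin; real arbitration may be weighted.
    """
    queues = {vl: deque(packets) for vl, packets in lanes.items()}
    wire = []
    while any(queues.values()):
        for vl, queue in queues.items():
            if queue:
                wire.append((vl, queue.popleft()))
    return wire


def equal_share_gbps(link_gbps: float, lane_count: int) -> float:
    """Bandwidth per lane if the link is divided evenly among the lanes."""
    return link_gbps / lane_count


print(multiplex({0: ["a1", "a2"], 1: ["b1"]}))  # [(0, 'a1'), (1, 'b1'), (0, 'a2')]
print(equal_share_gbps(10, 5))                  # 2.0
```

Each source keeps its own queue, so a burst on one lane delays only that lane's later packets rather than blocking the others.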


When a connection between two channel adapters is established, one of the following transport layer communication protocols is selected:

Reliable connection (RC): data transfer between two entities using receive acknowledgment

Unreliable connection (UC): same as RC but without acknowledgement (rarely used)

Reliable datagram (RD): data transfer using RD channel between RD domains

Unreliable datagram (UD): data transfer without acknowledgement

Raw packets (RP): transfer of datagram messages that are not interpreted

These protocols can be implemented in hardware; some protocols are more efficient than others. The UD and Raw protocols, for instance, are basic datagram movers and may require system processor support depending on the ULP used.

When the reliable connection protocol is operating (Figure 8), hardware at the source generates packet sequence numbers for every packet sent, and the hardware at the destination checks the sequence numbers and generates acknowledgments for every packet sequence number received. The hardware also detects missing packets, rejects duplicate packets, and provides recovery services for failures in the fabric.

Figure 8. Link operation using reliable connection protocol
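The reliable connection behavior described above (sequence numbers, acknowledgments, duplicate rejection, and detection of missing packets) can be modeled in a few lines of Python. This is a software toy, not a description of how HCA hardware is built: the class and function names are hypothetical, the retry policy is an assumption, and sequence-number wraparound is ignored.

```python
class Receiver:
    """Destination side: checks packet sequence numbers (PSNs) and acknowledges."""

    def __init__(self):
        self.expected_psn = 0
        self.delivered = []

    def receive(self, psn, payload):
        if psn < self.expected_psn:
            return ("duplicate", psn)              # already delivered; reject
        if psn > self.expected_psn:
            return ("missing", self.expected_psn)  # gap detected; sender must resend
        self.delivered.append(payload)
        self.expected_psn += 1
        return ("ack", psn)


def send_reliably(payloads, receiver, drop_first_attempt=frozenset()):
    """Send payloads in order; PSNs in drop_first_attempt are lost once, then resent."""
    log = []
    dropped = set(drop_first_attempt)
    psn = 0
    while psn < len(payloads):
        if psn in dropped:
            dropped.discard(psn)  # first attempt is lost in the fabric
            if psn + 1 < len(payloads):
                # the following packet arrives early and exposes the gap
                log.append(receiver.receive(psn + 1, payloads[psn + 1]))
            continue              # retry the same PSN
        log.append(receiver.receive(psn, payloads[psn]))
        psn += 1
    return log


rx = Receiver()
print(send_reliably(["a", "b", "c"], rx, drop_first_attempt={1}))
# [('ack', 0), ('missing', 1), ('ack', 1), ('ack', 2)]
print(rx.delivered)  # ['a', 'b', 'c']
```

Despite the lost packet, the receiver delivers every payload exactly once and in order, which is the guarantee the application relies on.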


The programming model for the InfiniBand transport assumes that an application accesses at least one Send and one Receive queue to initiate the I/O. The transport layer supports four types of data transfers for the Send queue:

Send/Receive: Typical operation where one node sends a message and another node receives the message

RDMA Write: Operation where one node writes data directly into a memory buffer of a remote node

RDMA Read: Operation where one node reads data directly from a memory buffer of a remote node

RDMA Atomics: Allows atomic update of a memory location from an HCA perspective.

The only operation available for the receive queue is Post Receive Buffer transfer, which identifies a buffer that a client may send to or receive from using a Send or RDMA Write data transfer.
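The four Send queue operations and the single Receive queue operation can be contrasted with a small in-memory model in Python. It does not use any InfiniBand library: `Node`, `Op`, and `post_send` are hypothetical names, a dictionary stands in for registered memory, and fetch-and-add is chosen as one example of an atomic update.

```python
from enum import Enum


class Op(Enum):
    SEND = "send"
    RDMA_WRITE = "rdma_write"
    RDMA_READ = "rdma_read"
    ATOMIC_ADD = "atomic_add"  # assumption: fetch-and-add as the example atomic


class Node:
    def __init__(self, name):
        self.name = name
        self.memory = {}         # buffer name -> value; stands in for registered memory
        self.receive_queue = []  # buffers posted for incoming Sends

    def post_receive(self, buffer_name):
        """Post Receive Buffer: make a buffer available to an incoming Send."""
        self.memory.setdefault(buffer_name, None)
        self.receive_queue.append(buffer_name)


def post_send(op, remote, *, buffer=None, data=None):
    """Apply one Send queue operation against the remote node."""
    if op is Op.SEND:
        if not remote.receive_queue:
            raise RuntimeError("receiver has no posted receive buffer")
        target = remote.receive_queue.pop(0)  # the receiver chose the buffer
        remote.memory[target] = data
        return target
    if op is Op.RDMA_WRITE:
        remote.memory[buffer] = data          # the sender names the remote buffer
        return None
    if op is Op.RDMA_READ:
        return remote.memory[buffer]
    if op is Op.ATOMIC_ADD:
        old = remote.memory[buffer]
        remote.memory[buffer] = old + data
        return old
    raise ValueError(f"unsupported operation: {op}")


b = Node("b")
b.post_receive("inbox")
post_send(Op.SEND, b, data="hello")
post_send(Op.RDMA_WRITE, b, buffer="counter", data=5)
post_send(Op.ATOMIC_ADD, b, buffer="counter", data=2)
print(b.memory)  # {'inbox': 'hello', 'counter': 7}
```

The model highlights the main difference: a Send lands in whatever buffer the receiver posted, while the RDMA operations name the remote buffer themselves and need no action from the remote application.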

Scale-out clusters built on InfiniBand and HP technology

In the past few years, scale-out cluster computing has become a mainstream architecture for high performance computing. As the technology becomes more mature and affordable, scale-out clusters are being adopted in a broader market beyond HPC. HP Oracle Database Machine is one example.

The trend in this industry is toward using space- and power-efficient blade systems as building blocks for scale-out solutions. HP BladeSystem c-Class solutions offer significant savings in power, cooling, and data center floor space without compromising performance.

The c7000 enclosure supports up to 16 half-height or 8 full-height server blades and includes rear mounting bays for management and interconnect components. Each server blade includes mezzanine connectors for I/O options such as the HP 4x QDR IB mezzanine card. HP c-Class server blades are available in two form-factors and server node configurations to meet various density goals. To meet extreme density goals, the half-height HP BL2x220c server blade includes two server nodes. Each node can support two quad-core Intel Xeon 5400-series processors and a slot for a mezzanine board, providing a maximum of 32 nodes and 256 cores per c7000 enclosure.
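The per-enclosure maximums quoted above follow directly from the blade counts. The short Python check below reproduces the arithmetic; the constant and function names are hypothetical, and the numbers are the ones given in the text.

```python
BLADES_PER_ENCLOSURE = 16  # half-height blades in one c7000 enclosure
NODES_PER_BLADE = 2        # the BL2x220c carries two server nodes
PROCESSORS_PER_NODE = 2
CORES_PER_PROCESSOR = 4    # quad-core processors


def enclosure_capacity(blades=BLADES_PER_ENCLOSURE,
                       nodes_per_blade=NODES_PER_BLADE,
                       processors_per_node=PROCESSORS_PER_NODE,
                       cores_per_processor=CORES_PER_PROCESSOR):
    """Return (nodes, cores) for one fully populated enclosure."""
    nodes = blades * nodes_per_blade
    cores = nodes * processors_per_node * cores_per_processor
    return nodes, cores


print(enclosure_capacity())  # (32, 256)
```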

NOTE: The DDR HCA mezzanine card should be installed in a PCIe x8 connector for maximum InfiniBand performance. The QDR HCA mezzanine card is supported on the ProLiant G6 blades with PCIe x8 Gen 2 mezzanine connectors.

Figure 9 shows a full bandwidth fat-tree configuration of HP BladeSystem c-Class components providing 576 nodes in a cluster. Each c7000 enclosure includes an HP 4x QDR IB Switch, which provides 16 downlinks for server blade connection and 16 QSFP uplinks for fabric connectivity. Spine-level fabric connectivity is provided through sixteen 36-port Voltaire 4036 QDR InfiniBand Switches (see footnote 2). The Voltaire 36-port switches provide 40-Gbps (per port) performance and offer fabric management capabilities.

2 Qualified, marketed, and supported by HP.
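The switch counts in this configuration can be checked with a short calculation. The Python sketch below is a sizing aid under simplifying assumptions (a two-level fat tree in which every leaf switch is fully populated), and `fat_tree_plan` is a hypothetical helper, not an HP tool.

```python
def fat_tree_plan(total_nodes, downlinks_per_leaf, uplinks_per_leaf, spine_ports):
    """Size a two-level fat tree with fully populated leaf switches."""
    leaf_switches, remainder = divmod(total_nodes, downlinks_per_leaf)
    if remainder:
        raise ValueError("nodes do not fill the leaf switches evenly")
    total_uplinks = leaf_switches * uplinks_per_leaf
    spine_switches, remainder = divmod(total_uplinks, spine_ports)
    if remainder:
        raise ValueError("uplinks do not fill the spine switches evenly")
    return {
        "leaf_switches": leaf_switches,
        "spine_switches": spine_switches,
        "total_uplinks": total_uplinks,
        # full bandwidth requires at least as many uplinks as downlinks per leaf
        "non_blocking": uplinks_per_leaf >= downlinks_per_leaf,
    }


plan = fat_tree_plan(total_nodes=576, downlinks_per_leaf=16,
                     uplinks_per_leaf=16, spine_ports=36)
print(plan)
# {'leaf_switches': 36, 'spine_switches': 16, 'total_uplinks': 576, 'non_blocking': True}
```

With 16 nodes per enclosure, 576 nodes need 36 enclosures. Their 576 uplinks exactly fill sixteen 36-port spine switches, which is consistent with the sixteen Voltaire switches named above and with the 1:1 full bandwidth design.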


Figure 9. HP BladeSystem c-Class 576-node cluster configuration

The HP Unified Cluster Portfolio includes a range of hardware, software, and services that provide customers a choice of pre-tested, pre-configured systems for simplified implementation, fast deployment, and standardized support.

HP solutions optimized for HPC:

HP Cluster Platforms: flexible, factory integrated/tested systems built around specific platforms, backed by HP warranty and support, and built to uniform, worldwide specifications

HP Scalable File Share (HP SFS): high-bandwidth, scalable HP storage appliance for Linux clusters

HP Financial Services Industry (FSI) solutions: defined solution stacks and configurations for real-time market data systems

HP and partner solutions optimized for scale-out database applications:

HP Oracle Exadata Storage

HP Oracle Database Machine

HP BladeSystem for Oracle Optimized Warehouse (OOW)

HP Cluster Platforms are built around specific hardware and software platforms and offer a choice of interconnects. For example, the HP Cluster Platform CL3000BL uses the HP BL2x220c G5, BL280c G6, and BL460c blade servers as the compute node with a choice of GbE or InfiniBand interconnects. No longer unique to Linux or HP-UX environments, HPC clustering is now supported through Microsoft Windows Server HPC 2003, with native support for HP-MPI.

[Figure 9 labels: HP c7000 enclosures numbered 1 through 36, each with 16 HP BL280c G6 server blades w/ 4x QDR HCAs and an HP 4x QDR IB Interconnect Switch with 16 uplinks; 36-port QDR IB switches numbered 1 through 16.]

Total nodes: 576 (1 per blade)

Total processor cores: 4608 (2 Nehalem processors per node, 4 cores per processor)

Memory: 28 TB w/ 4 GB DIMMs (48 GB per node) or 55 TB w/ 8 GB DIMMs (96 GB per node)

Storage: 2 NHP SATA or SAS per node

Interconnect: 1:1 full bandwidth (non-blocking), 3 switch hops maximum, fabric redundancy


Conclusion

InfiniBand offers an industry standard, high-bandwidth, low-latency, scalable interconnect with a high degree of connectivity between servers connected to a fabric. While zero-copy (RDMA) protocols have been applied to TCP/IP networks such as Ethernet, RDMA is a core capability of InfiniBand architecture. Flow control support is native to the HCA design, and the latency time for InfiniBand data transfers is generally less (approaching 1 microsecond for MPI ping-pong latency) than that for 10Gb Ethernet.

InfiniBand provides native support for fat-tree and other mesh topologies, allowing simultaneous connections across multiple links. This gives the fabric the ability to scale or aggregate bandwidth as more nodes and/or additional links are connected.

InfiniBand is further strengthened by HP-MPI becoming the leading solution among ISVs for developing and running MPI-based applications across multiple platforms and interconnect types. Software development and support become simplified since interconnects from a variety of vendors can be supported by an application written to the HP-MPI protocol.

Parallel compute applications that involve a high degree of message passing between nodes benefit significantly from InfiniBand. HP BladeSystem c-Class clusters and similar rack-mounted clusters support IB QDR and DDR HCAs and switches.

InfiniBand offers solid growth potential in performance, with DDR infrastructure currently accepted as mainstream, QDR becoming available, and Eight Data Rate (EDR) with a per-port rate of 80 Gbps being discussed by the InfiniBand Trade Association (IBTA) as the next target level.

The decision to use Ethernet or InfiniBand should be based on interconnect performance and cost requirements. HP is committed to support both InfiniBand and Ethernet infrastructures, and to help customers choose the most cost-effective fabric interconnect solution.


Appendix A: Glossary

The following table lists InfiniBand-related acronyms and abbreviations used in this document.

Table 1. Acronyms and abbreviations

Acronym or abbreviation Description

API Application Programming Interface: software routine or object written in support of a languag

DDR Double Data Rate: for InfiniBand, clock rate of 5.0 Gbps (2.5 Gbps x 2)

GbE Gigabit Ethernet: Ethernet network operating at 1 Gbps or greater

HCA Host Channel Adapter: hardware interface connecting a server node to the IB network

HPC High Performance Computing: the use of computer and/or storage clusters

IB InfiniBand: interconnect technology for distributed computing/storage infrastructure

IP Internet Protocol: standard of data communication over a packet-switched network

IPoIB Internet Protocol over InfiniBand: protocol allowing the use of TCP/IP over IB networks

iSER iSCSI Enhanced RDMA: file storage protocol

iWARP Internet Wide Area RDMA Protocol: protocol enhancement allowing RDMA over TCP

MPI Message Passing Interface: library of calls used by applications in parallel compute systems

NFS Network File System: file storage protocol

NHP Non Hot Pluggable (drive)

QDR Quad Data Rate: for InfiniBand, clock rate of 10 Gbps (2.5 Gbps x 4)

QSFP Quad Small Form factor Pluggable: interconnect connector type

RDMA Remote Direct Memory Access: protocol allowing data movement in and out of system memory without CPU intervention

RDS Reliable Datagram Sockets: transport protocol

SATA Serial ATA (hard drive)

SAS Serial Attached SCSI (hard drive)

SDP Sockets Direct Protocol: kernel-level RDMA-based protocol

SDR Single Data Rate: for InfiniBand, standard clock rate of 2.5 Gbps

SM Subnet Manager: management software for InfiniBand network

SRP SCSI RDMA Protocol: data movement protocol

TCA Target Channel Adapter: hardware interface connecting a storage or I/O node to the IB network

TCP Transmission Control Protocol: core high-level protocol for packet-based data communication

TOE TCP Offload Engine: accessory processor and/or driver that assumes TCP/IP duties from system CPU

ULP Upper Level Protocol: protocol layer that defines the method of data transfer over InfiniBand

VNIC Virtual Network Interface Controller: software interface that allows a host on an InfiniBand fabric access to nodes on an external Ethernet network


For more information

For additional information, refer to the resources listed below.

Resource                                   Hyperlink

HP products                                www.hp.com

HPC/IB/cluster products                    www.hp.com/go/hptc

HP InfiniBand products                     http://h18004.www1.hp.com/products/servers/networking/index-ib.html

InfiniBand Trade Association               http://www.infinibandta.org

Open Fabrics Alliance                      http://www.openib.org/

RDMA Consortium                            http://www.rdmaconsortium.org

Technology brief discussing iWARP RDMA     http://h20000.www2.hp.com/bc/docs/support/SupportManual/c00589475/c00589475.pdf

HP BladeSystem                             http://h18004.www1.hp.com/products/blades/components/c-class-tech-function.html

Call to action

Send comments about this paper to: [email protected].

© 2009 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Microsoft, Windows, and Windows NT are US registered trademarks of Microsoft Corporation.

Linux is a U.S. registered trademark of Linus Torvalds.

TC090403TB, April 2009
