Unsupervised Learning and Modeling of Knowledge and Intent for Spoken Dialogue Systems


Yun-Nung (Vivian) Chen

http://vivianchen.idv.tw

April 2015

Committee: Alexander I. Rudnicky (Chair), Anatole Gershman (Co-Chair), Alan W Black, Dilek Hakkani-Tür

Outline

Introduction

Ontology Induction [ASRU'13, SLT'14a]

Structure Learning [NAACL-HLT'15]

Surface Form Derivation [SLT'14b]

Semantic Decoding (submitted)

Behavior Prediction (proposed)

Conclusions & Timeline


A Popular Robot - Baymax

Big Hero 6: video content owned and licensed by Disney Entertainment, Marvel Entertainment LLC, etc.


Baymax can carry on a good spoken dialogue and learn new knowledge to better understand and interact with people.

The thesis goal is to automate the learning and understanding procedures in system development.

Spoken Dialogue System (SDS)

Spoken dialogue systems are intelligent agents that help users finish tasks more efficiently via speech interactions.

Spoken dialogue systems are being incorporated into various devices (smartphones, smart TVs, in-car navigation systems, etc.).

Apple's Siri: https://www.apple.com/ios/siri/

Microsoft's Cortana: http://www.windowsphone.com/en-us/how-to/wp8/cortana/meet-cortana

Amazon's Echo: http://www.amazon.com/oc/echo/

Samsung's SMART TV: http://www.samsung.com/us/experience/smart-tv/

Google Now: https://www.google.com/landing/now/

Large Smart Device Population

The number of global smartphone users will surpass 2 billion in 2016.

As of 2012, there are 1.1 billion automobiles on the earth.

Device input is evolving towards speech, which is more natural and convenient.

Knowledge Representation/Ontology

Traditional SDSs require manual annotations for specific domains to represent domain knowledge.

Restaurant Domain: restaurant, type, price, location (relation: located_in)

Movie Domain: movie, year, genre, director (relations: directed_by, released_in)

Node: semantic concept/slot

Edge: relation between concepts

Utterance Semantic Representation

A spoken language understanding (SLU) component requires the domain ontology to decode utterances into semantic forms, which contain the core content (a set of slots and slot-fillers) of the utterance.

Restaurant Domain: "find a cheap taiwanese restaurant in oakland"

target="restaurant", price="cheap", type="taiwanese", location="oakland"

Movie Domain: "show me action movies directed by james cameron"

target="movie", genre="action", director="james cameron"
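The ontology and the two semantic forms above can be written down as plain data. The following is a minimal sketch: the dictionary layout, the direction of the relation triples, and the helper name `is_valid_frame` are illustrative assumptions, not something the slides specify.

```python
# Hypothetical encoding of the two slide ontologies: nodes are slots,
# edges are named relations between slots (edge directions are assumed).
ONTOLOGY = {
    "restaurant": {
        "slots": {"type", "price", "location"},
        "relations": {("restaurant", "located_in", "location")},
    },
    "movie": {
        "slots": {"year", "genre", "director"},
        "relations": {
            ("movie", "directed_by", "director"),
            ("movie", "released_in", "year"),
        },
    },
}


def is_valid_frame(frame):
    """Check that every slot in a semantic frame belongs to the target's domain."""
    domain = ONTOLOGY.get(frame.get("target"))
    if domain is None:
        return False
    return all(slot in domain["slots"] for slot in frame if slot != "target")


# The two semantic forms from the slide, as dictionaries.
restaurant_frame = {"target": "restaurant", "price": "cheap",
                    "type": "taiwanese", "location": "oakland"}
movie_frame = {"target": "movie", "genre": "action", "director": "james cameron"}
```

A frame that uses a slot from the wrong domain, such as a `price` slot on a movie target, is rejected by this check.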

Challenges for SDS

An SDS in a new domain requires:

1) A hand-crafted domain ontology
2) Utterances labelled with semantic representations
3) An SLU component for mapping utterances into semantic representations

As spoken interactions increase, building domain ontologies and annotating utterances becomes so costly that the approach does not scale.

The thesis goal is to enable an SDS to automatically learn this knowledge so that open-domain requests can be handled.

Questions to Address

1) Given unlabelled raw audio recordings, how can a system automatically induce and organize domain-specific concepts?

2) With the automatically acquired knowledge, how can a system understand individual utterances and user intents?

Interaction Example

User: find a cheap eating place for asian food

Intelligent Agent: Cheap Asian eating places include Rose Tea Cafe, Little Asia, etc. What do you want to choose? I can help you go there.

Q: How does a dialogue system process this request?

SDS Process - Available Domain Ontology

User: find a cheap eating place for asian food

Organized Domain Knowledge: slots seeking, target, price, food; relations PREP_FOR, AMOD, NN

Ontology Induction (semantic slot)

Structure Learning (inter-slot relation)

SDS Process - Spoken Language Understanding (SLU)

User: find a cheap eating place for asian food

seeking="find", target="eating place", price="cheap", food="asian food"

Semantic Decoding

SDS Process - Dialogue Management (DM)

User: find a cheap eating place for asian food

SELECT restaurant restaurant.price="cheap" restaurant.food="asian food"

Surface Form Derivation (natural language)

Results: Rose Tea Cafe, Little Asia, Lulu's, Everyday Noodles

Predicted behavior: navigation

Behavior Prediction

SDS Process - Natural Language Generation (NLG)

User: find a cheap eating place for asian food

Intelligent Agent: Cheap Asian eating places include Rose Tea Cafe, Little Asia, etc. What do you want to choose? I can help you go there. (navigation)

Thesis Goals - 5 Goals

User: find a cheap eating place for asian food

Required Domain-Specific Information: the organized domain knowledge (slots seeking, target, price, food; relations PREP_FOR, AMOD, NN), the query SELECT restaurant restaurant.price="cheap" restaurant.food="asian food", and the predicted behavior (navigation)

Knowledge Acquisition

1. Ontology Induction (semantic slot)
2. Structure Learning (inter-slot relation)
3. Surface Form Derivation (natural language)

SLU Modeling

4. Semantic Decoding
5. Behavior Prediction

SDS Architecture - Thesis Contributions

Components: ASR, SLU, DM, NLG, Domain

Thesis contributions: Knowledge Acquisition, SLU Modeling

Knowledge Acquisition

1) Given unlabelled raw audio recordings, how can a system automatically induce and organize domain-specific concepts?

Unlabelled Collection (Restaurant Asking Conversations) -> Knowledge Acquisition -> Organized Domain Knowledge (slots: seeking, target, price, food, quantity; relations: PREP_FOR, NN, AMOD)

Knowledge Acquisition covers: 1. Ontology Induction, 2. Structure Learning, 3. Surface Form Derivation

SLU Modeling

2) With the automatically acquired knowledge, how can a system understand individual utterances and user intents?

"can i have a cheap restaurant" + Organized Domain Knowledge -> SLU Component (SLU Modeling) -> price="cheap", target="restaurant", behavior=navigation

SLU Modeling covers: 4. Semantic Decoding, 5. Behavior Prediction


Ontology Induction [ASRU'13, SLT'14a]

Input: Unlabelled user utterances

Output: Slots that are useful for a domain-specific SDS

Unlabelled Collection (Restaurant Asking Conversations) -> Domain-Specific Slots: target, food, price, seeking, quantity

Step 1: Frame-semantic parsing on all utterances for creating slot candidates

Step 2: Slot ranking model for differentiating domain-specific concepts from generic concepts

Step 3: Slot selection

Y.-N. Chen et al., "Unsupervised Induction and Filling of Semantic Slots for Spoken Dialogue Systems Using Frame-Semantic Parsing," in Proc. of ASRU, 2013 (Best Student Paper Award).

Y.-N. Chen et al., "Leveraging Frame Semantics and Distributional Semantics for Unsupervised Semantic Slot Induction in Spoken Dialogue Systems," in Proc. of SLT, 2014.

Probabilistic Frame-Semantic Parsing

FrameNet [Baker et al., 1998]: a linguistic semantic resource based on frame-semantics theory. Example: in "low fat milk", "milk" evokes the "food" frame and "low fat" fills the descriptor frame element.

SEMAFOR [Das et al., 2014]: a state-of-the-art frame-semantic parser trained on manually annotated FrameNet sentences.

Baker et al., "The Berkeley FrameNet project," in Proc. of International Conference on Computational Linguistics, 1998.

Das et al., "Frame-semantic parsing," Computational Linguistics, 2014.

Step 1: Frame-Semantic Parsing for Utterances

Utterance: can i have a cheap restaurant

Frame: capability; FT LU: can; FE LU: i

Frame: expensiveness; FT LU: cheap

Frame: locale by use; FT/FE LU: restaurant

FT: Frame Target; FE: Frame Element; LU: Lexical Unit

Task: adapting generic frames to domain-specific settings for SDSs

Step 2: Slot Ranking Model

Main idea: rank domain-specific concepts higher than generic semantic concepts.

In the parse of "can i have a cheap restaurant", each frame (capability, expensiveness, locale by use) is a slot candidate, and the lexical unit that evokes it (can, cheap, restaurant) is the slot filler.

Step 2: Slot Ranking Model

Rank a slot candidate s by integrating two scores:

Slot frequency in the domain-specific conversation: slots with higher frequency are more important.

Semantic coherence of slot fillers: domain-specific concepts cover fewer topics.

Step 2: Slot Ranking Model

h(s): semantic coherence, measured by cosine similarity between the word embeddings of the slot-filler set corresponding to s.

Lower coherence in topic space: slot name quantity, with fillers a, one, all, three

Higher coherence in topic space: slot name expensiveness, with fillers cheap, expensive, inexpensive

Step 3: Slot Selection

Rank all slot candidates by their importance scores (frequency and semantic coherence).

Output the slot candidates with higher scores, based on a threshold.

| Slot candidate | Score |
|---|---|
| locale_by_use | 0.89 |
| capability | 0.68 |
| food | 0.64 |
| expensiveness | 0.49 |
| quantity | 0.30 |
| seeking | 0.22 |

Selected slots: locale_by_use, food, expensiveness, capability, quantity
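Steps 2 and 3 can be sketched in a few lines. This is a rough illustration under stated assumptions: the slides only say that frequency and coherence are integrated, so the weighted sum of logs with weight `alpha` is an assumed combination, and the 2-d filler vectors and the frequency of 50 are invented toy values rather than trained embeddings or corpus counts.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def coherence(filler_vectors):
    """h(s): average pairwise cosine similarity among slot-filler embeddings."""
    pairs = list(combinations(filler_vectors, 2))
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


def slot_score(frequency, filler_vectors, alpha=0.5):
    """Assumed combination: weighted sum of log-frequency and log-coherence."""
    return ((1 - alpha) * math.log(frequency)
            + alpha * math.log(coherence(filler_vectors)))


# Toy 2-d embeddings, invented for illustration only.
expensiveness = [(1.0, 0.1), (0.9, 0.2), (0.95, 0.15)]        # cheap, expensive, inexpensive
quantity = [(1.0, 0.0), (0.2, 1.0), (0.6, 0.6), (0.1, 0.9)]   # a, one, all, three

# Same frequency for both slots, so only coherence separates them here.
ranked = sorted(
    {"expensiveness": slot_score(50, expensiveness),
     "quantity": slot_score(50, quantity)}.items(),
    key=lambda kv: kv[1], reverse=True)
```

With equal frequencies, the slot whose fillers point in similar directions (expensiveness) ranks above the slot whose fillers are scattered (quantity); slot selection then keeps the candidates whose score clears a chosen threshold.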

Experiments of Ontology Induction

Dataset: Cambridge University SLU corpus [Henderson, 2012]

Restaurant recommendation in an in-car setting in Cambridge

WER = 37%

Vocabulary size = 1868

2,166 dialogues; 15,453 utterances

Dialogue slots: addr, area, food, name, phone, postcode, price range, task, type

Induced slots are compared with reference slots through a mapping table.

Henderson et al., "Discriminative spoken language understanding using word confusion networks," in Proc. of SLT, 2012.

Experiments of Ontology Induction

Slot Induction Evaluation: Average Precision (AP) and Area Under the Precision-Recall Curve (AUC) of the slot ranking model, measuring the quality of induced slots via the mapping table.

| Approach | ASR AP (%) | ASR AUC (%) | Manual AP (%) | Manual AUC (%) |
|---|---|---|---|---|
| Baseline: MLE | 56.7 | 54.7 | 53.0 | 50.8 |
| MLE + Semantic Coherence | 71.7 (+26.5%) | 70.4 (+28.7%) | 74.4 (+40.4%) | 73.6 (+44.9%) |

Induced slots reach over 70% AP and align well with human-annotated slots for SDS.

Semantic relations help decide domain-specific knowledge.
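For readers unfamiliar with the metric, average precision over a ranked slot list can be computed as below. This is a generic sketch of AP, not the evaluation script behind the table; the `relevant` set is a hypothetical mapping judgement made up for the example.

```python
def average_precision(ranked_slots, reference_slots):
    """Mean of precision@k over the ranks k at which a relevant slot appears."""
    hits = 0
    precisions = []
    for k, slot in enumerate(ranked_slots, start=1):
        if slot in reference_slots:
            hits += 1
            precisions.append(hits / k)
    return sum(precisions) / len(precisions) if precisions else 0.0


# Ranking taken from the slot-selection slide.
ranking = ["locale_by_use", "capability", "food",
           "expensiveness", "quantity", "seeking"]
# Hypothetical judgement of which induced slots map to a reference slot.
relevant = {"locale_by_use", "food", "expensiveness"}
ap = average_precision(ranking, relevant)
```

Here the relevant slots sit at ranks 1, 3 and 4, so AP is the mean of 1/1, 2/3 and 3/4.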


Structure Learning [NAACL-HLT'15]

Input: Unlabelled user utterances

Output: Slots with relations

Step 1: Construct a graph to represent slots, words, and relations

Step 2: Compute scores for edges (relations) and nodes (slots)

Step 3: Identify important relations connecting important slot pairs

Unlabelled Collection (Restaurant Asking Conversations) -> Domain-Specific Ontology (slots: locale_by_use, food, expensiveness, seeking, relational_quantity, desiring; relations: PREP_FOR, NN, AMOD, DOBJ)

Y.-N. Chen et al., "Jointly Modeling Inter-Slot Relations by Random Walk on Knowledge Graphs for Unsupervised Spoken Language Understanding," in Proc. of NAACL-HLT, 2015.

Step 1: Knowledge Graph Construction

Syntactic dependency parsing on utterances: "can i have a cheap restaurant" (dependencies: ccomp, nsubj, dobj, det, amod; slots: capability, expensiveness, locale_by_use)

Word-based lexical knowledge graph: nodes are words (can, i, have, a, cheap, restaurant)

Slot-based semantic knowledge graph: nodes are slots (capability, locale_by_use, expensiveness)

The edge between a node pair is weighted as relation importance.

How to decide the weights to represent relation importance?

Step 2: Weight Measurement - Slot/Word Embeddings Training

Dependency-based word embeddings: trained on the dependency parse of the word sequence "can i have a cheap restaurant" (ccomp, nsubj, dobj, det, amod), giving vectors for words such as can and have.

Dependency-based slot embeddings: trained on the same parse with slot-filling words replaced by their slots (capability, expensiveness, locale_by_use), giving vectors for slots such as expensiveness and capability.

Levy and Goldberg, "Dependency-Based Word Embeddings," in Proc. of ACL, 2014.

Step 2: Weight Measurement

Compute edge weights to represent relation importance:

Slot-to-slot relation $L_{ss}$: similarity between slot embeddings

Word-to-slot relation $L_{ws}$ or $L_{sw}$: frequency of the slot-word pair

Word-to-word relation $L_{ww}$: similarity between word embeddings

Figure: two-layer graph with word nodes w1 to w7 and slot nodes s1 to s3.

Step 2: Slot Importance by Random Walk

Assumption: slots with more dependencies on more important slots should be more important.

The random walk algorithm computes the importance of each slot, starting from the original frequency score. Scores are propagated from the word layer and then propagated within the slot layer.

Converged scores can be obtained by a closed-form solution.

https://github.com/yvchen/MRRW

Figure: two-layer graph with word nodes w1 to w7 and slot nodes s1 to s3, connected by $L_{ww}$, $L_{ws}$ or $L_{sw}$, and $L_{ss}$ edges.
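The closed-form idea can be illustrated on a single layer. The sketch below is a simplification and not the thesis implementation: it ignores the word layer, assumes the update r = (1 - alpha) * r0 + alpha * L r with a column-normalised edge matrix L, and treats `alpha` and the toy graph as invented values. It also assumes every node has at least one edge, so that no column sums to zero.

```python
import numpy as np


def random_walk_importance(edge_weights, initial_scores, alpha=0.9):
    """Closed-form fixed point of r = (1 - alpha) * r0 + alpha * L @ r."""
    W = np.asarray(edge_weights, dtype=float)
    # Column-normalise so that each column of L sums to 1.
    L = W / W.sum(axis=0, keepdims=True)
    r0 = np.asarray(initial_scores, dtype=float)
    r0 = r0 / r0.sum()
    n = len(r0)
    # Solve (I - alpha * L) r = (1 - alpha) * r0 instead of iterating.
    return (1 - alpha) * np.linalg.solve(np.eye(n) - alpha * L, r0)


# Toy slot graph: slot 0 is connected to slots 1 and 2, which are not
# connected to each other. All three start with the same frequency score.
weights = [[0, 1, 1],
           [1, 0, 0],
           [1, 0, 0]]
scores = random_walk_importance(weights, [1, 1, 1])
```

Although all slots start with equal scores, the better-connected slot 0 ends up with the highest converged importance, which matches the stated assumption.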

Step 3: Identify Domain Slots w/ Relations

The converged slot importance suggests whether the slot is important. (Experiment 1)

Rank slot pairs by summing up their converged slot importance, for example scoring the pair (s1, s2) as $r_s(1) + r_s(2)$ and the pair (s3, s4) as $r_s(3) + r_s(4)$.

Select slot pairs with higher scores according to a threshold. (Experiment 2)

Resulting ontology: slots locale_by_use, food, expensiveness, seeking, relational_quantity, desiring; relations PREP_FOR, NN, AMOD, DOBJ
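The pair-ranking step is simple enough to show directly. In this sketch the importance values and the threshold are invented, and every pair of slots is scored; a real system would presumably consider only pairs that are actually connected by a dependency relation.

```python
from itertools import combinations


def rank_slot_pairs(importance, threshold):
    """Score each slot pair by the sum of its two converged importances."""
    scored = [((a, b), importance[a] + importance[b])
              for a, b in combinations(sorted(importance), 2)]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [(pair, score) for pair, score in scored if score >= threshold]


# Invented importance values, for illustration only.
importance = {"locale_by_use": 0.4, "food": 0.3,
              "expensiveness": 0.2, "capability": 0.1}
selected = rank_slot_pairs(importance, threshold=0.55)
```

Only the two pairs that involve the most important slot clear the threshold in this toy case.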

Experiments for Structure Learning - Experiment 1: Quality of Slot Importance

Dataset: Cambridge University SLU Corpus

| Approach | ASR AP (%) | ASR AUC (%) | Manual AP (%) | Manual AUC (%) |
|---|---|---|---|---|
| Baseline: MLE | 56.7 | 54.7 | 53.0 | 50.8 |
| Random Walk: MLE + Dependent Relations | 69.0 (+21.8%) | 68.5 (+24.8%) | 75.2 (+41.8%) | 74.5 (+46.7%) |

Dependent relations help decide domain-specific knowledge.

Experiments for Structure Learning - Experiment 2: Relation Discovery Evaluation

Discover inter-slot relations connecting important slot pairs.

Learned ontology: slots locale_by_use, food, expensiveness, seeking, relational_quantity, desiring; relations PREP_FOR, NN, AMOD, DOBJ

Reference ontology with the most frequent syntactic dependencies: slots type, food, pricerange, task, area; relations DOBJ, AMOD, PREP_IN

The automatically learned domain ontology aligns well with the reference one.


Surface Form Derivation [SLT'14b]

Input: a domain-specific organized ontology

Output: surface forms corresponding to entities in the ontology

Domain-Specific Ontology: movie, with entities char, director, genre and relations casting, directed_by, genre

Surface forms for char: character, actor, role, ...

Surface forms for genre: action, comedy, ...

Surface forms for director: director, filmmaker, ...

Y.-N. Chen et al., "Deriving Local Relational Surface Forms from Dependency-Based Entity Embeddings for Unsupervised Spoken Language Understanding," in Proc. of SLT, 2014.

Surface Form Derivation

Step 1: Mining query snippets corresponding to specific relations

Step 2: Training dependency-based entity embeddings

Step 3: Deriving surface forms based on embeddings

Pipeline: Query Snippets and Knowledge Graph -> Entity Embeddings -> Relational Surface Form Derivation, which yields PF(r | w) for entity surface forms (e.g. "filmmaker", "director" for $director) and PC(r | w) for entity syntactic contexts (e.g. "play" for $character).

Y.-N. Chen et al., "Deriving Local Relational Surface Forms from Dependency-Based Entity Embeddings for Unsupervised Spoken Language Understanding," in Proc. of SLT, 2014.

Step 1: Mining Query Snippets on Web

Query snippets including entity pairs connected with specific relations in the KG:

"Avatar is a 2009 American epic science fiction film directed by James Cameron" (Avatar, directed_by, James Cameron)

"The Dark Knight is a 2008 superhero film directed and produced by Christopher Nolan" (The Dark Knight, directed_by, Christopher Nolan)

Step 2: Training Entity Embeddings

Dependency parsing for training dependency-based embeddings.

Figure: dependency parse of "Avatar is a 2009 American epic science fiction film directed by James Cameron", with Avatar tagged as $movie and James Cameron as $director. Dependency labels on the slide: nsub, num, det, cop, nn, vmod, prop_by, pobj.

Embeddings are learned for entity tags and words alike, such as $movie, is, and film.

Levy and Goldberg, "Dependency-Based Word Embeddings," in Proc. of ACL, 2014.

Step 3: Deriving Surface Forms

Entity Surface Forms: learn the surface forms corresponding to entities by taking the most similar word vectors for each entity embedding (words with similar contexts).

$char: "character", "role", "who"

$director: "director", "filmmaker"

$genre: "action", "fiction"

Entity Syntactic Contexts: learn the important contexts of entities by taking the most similar context vectors for each entity embedding (contexts frequently occurring together with the entity).

$char: "played"

$director: "directed"
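Taking the most similar word vectors for an entity embedding amounts to a cosine nearest-neighbour lookup. The sketch below assumes the embeddings are already trained; the 3-d vectors and the function name `nearest_words` are made up for illustration.

```python
import numpy as np


def nearest_words(entity_vector, word_vectors, top_k=2):
    """Return the top_k words whose vectors are most cosine-similar to the entity."""
    words = list(word_vectors)
    matrix = np.array([word_vectors[w] for w in words], dtype=float)
    entity = np.asarray(entity_vector, dtype=float)
    sims = matrix @ entity / (np.linalg.norm(matrix, axis=1) * np.linalg.norm(entity))
    order = np.argsort(-sims)[:top_k]
    return [words[i] for i in order]


# Toy 3-d vectors invented for illustration; real embeddings come from training.
word_vectors = {
    "director": [0.9, 0.1, 0.0],
    "filmmaker": [0.8, 0.2, 0.1],
    "comedy": [0.0, 0.9, 0.3],
    "played": [0.1, 0.1, 0.9],
}
director_entity = [1.0, 0.15, 0.05]  # stands in for the $director embedding
derived = nearest_words(director_entity, word_vectors)
```

The same lookup against context vectors instead of word vectors would give the entity syntactic contexts.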

Experiments of Surface Form Derivation

Knowledge Base: Freebase, with 670K entities and 78 entity types (movie names, actors, etc.)

| Entity Tag | Derived Words |
|---|---|
| $character | character, role, who, girl, she, he, officer |
| $director | director, dir, filmmaker |
| $genre | comedy, drama, fantasy, cartoon, horror, sci |
| $language | language, spanish, english, german |
| $producer | producer, filmmaker, screenwriter |

The dependency-based embeddings are able to derive reasonable surface forms, which may benefit semantic understanding.

Integrated with Background Knowledge

Input utterance: "find me some films directed by james cameron"

Background Knowledge: relation inference from gazetteers, using an entity dictionary PE(r | w) obtained by probabilistic enrichment of knowledge graph entities.

Local Relational Surface Form: relational surface form derivation from entity embeddings trained on Bing query snippets and the knowledge graph, giving entity surface forms PF(r | w) and entity syntactic contexts PC(r | w).

Both sources are combined into the final results Ru(r).

D. Hakkani-Tür et al., "Probabilistic enrichment of knowledge graph entities for relation detection in conversational understanding," in Proc. of Interspeech, 2014.

Experiments of Surface Form Derivation

Relation Detection Data (NL-SPARQL): a dialog system challenge set for converting natural language to structured queries [Hakkani-Tür, 2014]

Crowd-sourced utterances (3,338 for self-training, 1,084 for testing)

Evaluation Metric: micro F-measure (%)

| Approach | Micro F-Measure (%) |
|---|---|
| Baseline: Gazetteer | 38.9 |
| Gazetteer + Entity Surface Form + Entity Context | 43.3 (+11.4%) |

Derived entity surface forms benefit the SLU performance.

D. Hakkani-Tür et al., "Probabilistic enrichment of knowledge graph entities for relation detection in conversational understanding," in Proc. of Interspeech, 2014.


SLU Model

Semantic Representation

ldquocan I have a cheap restaurantrdquoOntology Induction

Unlabeled Collection

Semantic KG

Frame-Semantic ParsingFw Fs

Feature Model

Rw

Rs

Knowledge Graph Propagation Model

Word Relation Model

Lexical KG

Slot Relation Model

Structure Learning

Semantic KG

SLU Modeling by Matrix Factorization

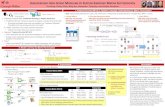

Semantic Decoding Input user utterances automatically learned knowledge

Output the semantic concepts included in each individual utterance

UNSUPERVISED LEARNING AND MODELING OF KNOWLEDGE AND INTENT FOR SPOKEN DIALOGUE SYSTEMS

Y-N Chen et al Matrix Factorization with Knowledge Graph Propagation for Unsupervised Spoken Language Understanding submitted

63

Matrix Factorization (MF) - Feature Model

Figure: utterance-by-feature matrix built from ontology induction (Fw, Fs). Rows are the training utterances (Utterance 1: "i would like a cheap restaurant"; Utterance 2: "find a restaurant with chinese food") and the test utterance ("show me a list of cheap restaurants"). Columns are word observations (cheap, restaurant, food) and slot candidates from slot induction (expensiveness, locale_by_use, food). Observed cells are 1; the remaining cells hold estimated probabilities (the slide shows values such as .97, .90, .95, .85, .93, .92, .98, .05, .05), which capture the hidden semantics.

Matrix Factorization (MF) - Knowledge Graph Propagation Model

Reasoning with Matrix Factorization

Figure: the same utterance-by-feature matrix, with the word observation columns multiplied by the word relation matrix (Word Relation Model) and the slot candidate columns multiplied by the slot relation matrix (Slot Relation Model), which come from structure learning.

The MF method completes a partially missing matrix based on the latent semantics by decomposing it into a product of two matrices.
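To make the matrix-completion idea concrete, the sketch below fills in the unobserved slot cells of the test row with a rank-2 truncated SVD. This is a stand-in technique chosen for brevity, not the method on the slides: truncated SVD treats unobserved cells as zeros, whereas the thesis trains the factorization with a ranking objective. The column name `food_slot` and the choice of rank are assumptions made for the example.

```python
import numpy as np

# Columns: word observations first, then slot candidates (layout follows the slide).
columns = ["cheap", "restaurant", "food",
           "expensiveness", "locale_by_use", "food_slot"]
M = np.array([
    [1, 1, 0, 1, 1, 0],   # train: "i would like a cheap restaurant"
    [0, 1, 1, 0, 1, 1],   # train: "find a restaurant with chinese food"
    [1, 1, 0, 0, 0, 0],   # test:  "show me a list of cheap restaurants" (slots unobserved)
], dtype=float)


def low_rank_completion(matrix, rank):
    """Rank-`rank` reconstruction via truncated SVD (stand-in for learned MF)."""
    U, s, Vt = np.linalg.svd(matrix, full_matrices=False)
    return (U[:, :rank] * s[:rank]) @ Vt[:rank]


scores = low_rank_completion(M, rank=2)
test_row = dict(zip(columns, scores[2]))
```

In the reconstructed test row, the expensiveness and locale_by_use slots receive clearly higher scores than the food slot, even though no slot was observed for that utterance: the shared words "cheap" and "restaurant" pull the test utterance towards the first training utterance in the latent space.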

Bayesian Personalized Ranking for MF

Model implicit feedback: do not treat unobserved facts as negative samples (true or false); instead, give observed facts higher scores than unobserved facts.

Objective: for each utterance $u$, an observed fact $f^{+}$ should be scored higher than an unobserved fact $f^{-}$, that is, $f^{+} > f^{-}$.

The objective is to learn a set of well-ranked semantic slots per utterance.
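For reference, the standard Bayesian Personalized Ranking criterion has the form below. The notation is an assumption, since the slide's own formula did not survive extraction: $\mathcal{O}_u$ denotes the set of facts observed for utterance $u$, and $\theta_{f}$ the model score of fact $f$ for that utterance. The thesis objective may differ in details such as regularization.

```latex
\max_{\theta} \; \sum_{u} \; \sum_{f^{+} \in \mathcal{O}_u} \; \sum_{f^{-} \notin \mathcal{O}_u}
\ln \sigma\left(\theta_{f^{+}} - \theta_{f^{-}}\right),
\qquad \sigma(z) = \frac{1}{1 + e^{-z}}
```

Maximizing this sum pushes each observed fact above each unobserved one for the same utterance, without ever asserting that an unobserved fact is false.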

Experiments of Semantic Decoding
Experiment 1: Quality of Semantics Estimation

Dataset: Cambridge University SLU Corpus

Metric: Mean Average Precision (MAP) of all estimated slot probabilities for each utterance

Approach                                              ASR             Manual
Baseline     Logistic Regression                      34.0            38.8
             Random                                   22.5            25.1
             Majority Class                           32.9            38.4
MF Approach  Feature Model                            37.6            45.3
             Feature Model + Knowledge Graph Prop.    43.5 (+27.9%)   53.4 (+37.6%)

The MF rows model implicit semantics.

The MF approach effectively models hidden semantics to improve SLU.

Adding a knowledge graph propagation model further improves the results.
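
As a quick check of the bracketed gains in Experiment 1, the sketch below assumes they are relative improvements over the logistic regression baseline, reading the table entries as percentages (34.0 and 38.8 for the baseline, 43.5 and 53.4 for the full MF model). The helper name is hypothetical.

```python
def relative_gain(baseline, score):
    """Relative improvement of score over baseline, in percent."""
    return 100.0 * (score - baseline) / baseline


asr_gain = relative_gain(34.0, 43.5)      # ASR transcripts
manual_gain = relative_gain(38.8, 53.4)   # manual transcripts
```

Rounded to one decimal, both values agree with the gains printed on the slide, which supports that reading of the table.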

Experiments of Semantic Decoding
Experiment 2: Effectiveness of Relations

Dataset: Cambridge University SLU Corpus

Metric: Mean Average Precision (MAP) of all estimated slot probabilities for each utterance

Approach                                   ASR             Manual
Feature Model                              37.6            45.3
Feature + Knowledge Graph Propagation
    Semantic Relation                      41.4 (+10.1%)   51.6 (+13.9%)
    Dependent Relation                     41.6 (+10.6%)   49.0 (+8.2%)
    Both                                   43.5 (+15.7%)   53.4 (+17.9%)

Both semantic and dependent relations are useful for inferring hidden semantics.

Combining both types of relations further improves performance.

Low- and High-Level Understanding

Semantic concepts for individual utterances do not capture high-level semantics (user intents).

Follow-up behaviors are observable and usually correspond to user intents.

"can i have a cheap restaurant" -> SLU Component -> price="cheap", target="restaurant"; behavior=navigation

"i plan to dine in legume tonight" -> SLU Component -> restaurant="legume", time="tonight"; behavior=reservation


Behavior Prediction

SLU Modeling for Behavior Prediction

[Figure: an unlabeled collection goes through behavior identification and SLU modeling. The feature model (Ff, Fb) builds an utterance-by-feature matrix: rows are utterances, e.g. training Utterance 1 "play lady gaga's song bad romance" and Utterance 2 "i'd like to listen to lady gaga's bad romance"; columns are feature observations (play, song, listen, ...) and behaviors (pandora, youtube, maps, ...). The relation model adds a feature relation model (Rf) and a behavior relation model (Rb). Predicting with matrix factorization, the SLU model outputs the predicted behavior for a test utterance such as "play lady gaga's bad romance".]

Mobile Application Domain

Data: 195 utterances for making requests about launching an app

Behavior Identification: popular domains in Google Play

Feature Relation Model: word similarity, etc.

Behavior Relation Model: relation between different apps

Examples:
"please dial a phone call to alex" -> skype, hangout, etc.
"I'd like navigation instruction from my home to cmu" -> Maps

Insurance-Related Domain

Data: 52 conversations between customers and agents from the MetLife call center; 2,205 automatically segmented utterances

Behavior Identification: call_back, verify_id, ask_info

Feature Relation Model: word similarity, etc.

Behavior Relation Model: transition probability, etc.

Example (A = agent, C = customer; transcripts as recorded, behavior labels in brackets in slide order):

A: Thanks for calling MetLife this is XXX how may help you today [greeting]

C: I wish to change the beneficiaries on my three life insurance policies and I want to change from two different people so I need 3 sets of forms [ask_action]

A: Currently your name [verify_id]

A: now will need to send a format Im can mail the form fax it email it orders on the website [ask_detail]

C: please mail the hard copies to me [ask_action]


Summary

Knowledge Acquisition
1. Ontology Induction: semantic relations are useful
2. Structure Learning: dependent relations are useful
3. Surface Form Derivation: surface forms benefit understanding

SLU Modeling
4. Semantic Decoding: the MF approach builds an SLU model to decode semantics
5. Behavior Prediction: the MF approach builds an SLU model to predict behaviors

Conclusions and Future Work

The knowledge acquisition procedure enables systems to automatically learn open-domain knowledge and produce domain-specific ontologies.

The MF technique for SLU modeling provides a principled model that unifies the automatically acquired knowledge and allows systems to consider implicit semantics for better understanding:
- Better semantic representations for individual utterances
- Better follow-up behavior prediction

The thesis work shows the feasibility and potential of improving the generalization, maintenance, efficiency, and scalability of SDSs.

Timeline

April 2015: finish identifying important behaviors for insurance data

May 2015: finish annotating behavior labels for insurance data

June 2015: implement behavior prediction for both datasets

July 2015: submit a conference paper about behavior prediction (ASRU)

August 2015: submit a journal paper about open-domain SLU

September 2015: finish thesis writing

October 2015: thesis defense

December 2015: finish thesis revision

Q & A

THANKS FOR YOUR ATTENTION

THANKS TO MY COMMITTEE MEMBERS FOR THEIR HELPFUL FEEDBACK

Word Embeddings

Training Process
- Each word w is associated with a vector.
- The contexts within the window size c are considered as the training data D.
- Objective function: [formula not recoverable from the transcript]

[Figure: CBOW model. INPUT (wt-2, wt-1, wt+1, wt+2) -> PROJECTION (SUM) -> OUTPUT (wt).]

Mikolov et al., "Efficient Estimation of Word Representations in Vector Space," in Proc. of ICLR, 2013.
Mikolov et al., "Distributed Representations of Words and Phrases and their Compositionality," in Proc. of NIPS, 2013.
Mikolov et al., "Linguistic Regularities in Continuous Space Word Representations," in Proc. of NAACL-HLT, 2013.

Dependency-Based Embeddings

Word & Context Extraction

Example utterance: "can i have a cheap restaurant" (dependency arcs: ccomp, nsubj, dobj, det, amod)

Word         Contexts
can          have/ccomp
i            have/nsubj-1
have         can/ccomp-1, i/nsubj, restaurant/dobj
a            restaurant/det-1
cheap        restaurant/amod-1
restaurant   have/dobj-1, a/det, cheap/amod

Levy and Goldberg, "Dependency-Based Word Embeddings," in Proc. of ACL, 2014.
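
The word-context table for "can i have a cheap restaurant" can be reproduced with a short sketch. It assumes the parse is available as (head, relation, modifier) arcs, which are read off the slide's table; the function name is hypothetical, and the inverse-relation suffix "-1" follows the slide's notation.

```python
from collections import defaultdict

# Dependency arcs for "can i have a cheap restaurant" as (head, relation, modifier).
arcs = [
    ("can", "ccomp", "have"),
    ("have", "nsubj", "i"),
    ("have", "dobj", "restaurant"),
    ("restaurant", "det", "a"),
    ("restaurant", "amod", "cheap"),
]


def dependency_contexts(arcs):
    """Map each word to its contexts: modifier/rel for heads, head/rel-1 for modifiers."""
    contexts = defaultdict(list)
    for head, rel, mod in arcs:
        contexts[head].append(f"{mod}/{rel}")      # the head sees its modifier
        contexts[mod].append(f"{head}/{rel}-1")    # the modifier sees its head (inverse)
    return dict(contexts)


contexts = dependency_contexts(arcs)
```

Each (word, context) pair produced this way is one training example for the dependency-based embeddings.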

Dependency-Based Embeddings

Training Process

Each word w is associated with a vector vw, and each context c is represented as a vector vc.

Learn vector representations for both words and contexts such that the dot product vw · vc associated with good word-context pairs belonging to the training data D is maximized.

Objective function: [formula not recoverable from the transcript]

Levy and Goldberg, "Dependency-Based Word Embeddings," in Proc. of ACL, 2014.
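
The slide's own formula for the dependency-based training objective is missing from the transcript. For orientation only, the skip-gram negative-sampling objective that Levy and Goldberg build on is commonly written as below; here $D'$ denotes randomly sampled negative word-context pairs and $\sigma$ is the logistic function, both standard notation rather than symbols taken from the slide.

```latex
\arg\max_{v_w, v_c}
  \sum_{(w,c) \in D} \log \sigma(v_c \cdot v_w)
  + \sum_{(w,c) \in D'} \log \sigma(-v_c \cdot v_w),
\qquad \sigma(x) = \frac{1}{1 + e^{-x}}
```

The first sum is the part described in the text: it pushes up the dot product for word-context pairs observed in D.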

Evaluation Metrics

Slot Induction Evaluation: Average Precision (AP) and Area Under the Precision-Recall Curve (AUC) of the slot ranking model, used to measure the quality of induced slots via the mapping table.

Rank  Slot            Score  Precision
1     locale_by_use   0.89   1
2     capability      0.68   0
3     food            0.64   2/3
4     expensiveness   0.49   3/4
5     quantity        0.30   0

AP = 80.56%
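
The AP figure in the slot ranking example can be reproduced with a short sketch. It assumes that the slots with non-zero precision entries (locale_by_use, food, expensiveness) are the ones that map to reference slots; that reading is inferred from the precision column, and the function name is hypothetical.

```python
def average_precision(ranked_slots, relevant):
    """Mean of precision@k over the ranks k at which a relevant slot appears."""
    hits = 0
    precisions = []
    for rank, slot in enumerate(ranked_slots, start=1):
        if slot in relevant:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(relevant) if relevant else 0.0


ranking = ["locale_by_use", "capability", "food", "expensiveness", "quantity"]
relevant = {"locale_by_use", "food", "expensiveness"}   # inferred from the slide
ap = average_precision(ranking, relevant)               # (1 + 2/3 + 3/4) / 3
```

With these inputs the result is about 0.8056, matching the AP of 80.56% on the slide.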

Slot Induction on ASR & Manual Results

The slot importance:

Rank  ASR                     Manual
1     locale_by_use   0.88    locale_by_use   0.89
2     expensiveness   0.78    capability      0.68
3     food            0.64    food            0.64
4     capability      0.59    expensiveness   0.49
5     quantity        0.44    quantity        0.30

Users tend to speak important information more clearly, so misrecognition of less important slots may slightly benefit slot induction performance.

Slot Mapping Table

Create the mapping if slot fillers of the induced slot are included in the reference slot.

[Figure: induced slots (origin, food) with fillers collected from utterances u1, u2, ..., uk, ..., un (asian, japan, beer, noodle) are mapped to the reference slot food.]
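
A minimal sketch of the slot mapping rule, under the assumption that an induced slot maps to a reference slot when the two share at least one filler. The exact inclusion criterion is not recoverable from the slide, and the filler sets and the `capability` entry below are illustrative.

```python
def build_mapping(induced, reference):
    """Map each induced slot to the reference slots it shares a filler with."""
    mapping = {}
    for name, fillers in induced.items():
        matches = [ref for ref, ref_fillers in reference.items()
                   if fillers & ref_fillers]       # non-empty filler overlap
        if matches:
            mapping[name] = matches
    return mapping


# Hypothetical filler sets loosely based on the figure.
induced = {
    "origin": {"asian", "japan"},
    "food": {"asian", "beer", "noodle"},
    "capability": {"can"},
}
reference = {"food": {"asian", "japan", "beer", "noodle"}}
mapping = build_mapping(induced, reference)
```

Induced slots with no overlapping filler, such as `capability` here, stay unmapped and count as errors when AP is computed over the ranked list.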

Random Walk Algorithm

The converged algorithm satisfies: [equation not recoverable from the transcript]

The derived closed-form solution is the dominant eigenvector of M.
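
Since the solution is the dominant eigenvector of M, one generic way to approximate it is power iteration. The sketch below uses a hypothetical 3x3 column-stochastic matrix in place of the talk's M, whose construction is not recoverable here.

```python
def power_iteration(M, iters=200, tol=1e-12):
    """Approximate the dominant eigenvector of M, normalized to sum to 1."""
    n = len(M)
    r = [1.0 / n] * n
    for _ in range(iters):
        nxt = [sum(M[i][j] * r[j] for j in range(n)) for i in range(n)]
        total = sum(nxt)
        nxt = [v / total for v in nxt]
        if max(abs(a - b) for a, b in zip(nxt, r)) < tol:
            return nxt
        r = nxt
    return r


# Hypothetical column-stochastic transition matrix (each column sums to 1).
M = [[0.5, 0.2, 0.3],
     [0.3, 0.6, 0.3],
     [0.2, 0.2, 0.4]]
r = power_iteration(M)
```

At convergence `r` is unchanged by another multiplication with `M`, which is the fixed-point property that the converged random walk satisfies.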

SEMAFOR Performance

The SEMAFOR evaluation: [figure not recoverable from the transcript]

Matrix Factorization

The decomposed matrices represent latent semantics for utterances and for words/slots, respectively.

The product of the two matrices fills in the probabilities of hidden semantics.

[Figure: utterance-by-feature matrix with word observation and slot candidate columns and train/test rows, as in the MF feature model.]

Matrix dimensions: $|U| \times (|W| + |S|) \;\approx\; (|U| \times d) \times (d \times (|W| + |S|))$

Cambridge University SLU Corpus

Example utterances with their semantic annotations (paired in slide order):

"hi id like a restaurant in the cheap price range in the centre part of town": type=restaurant, pricerange=cheap, area=centre
"um id like chinese food please": food=chinese
"how much is the main cost": pricerange
"okay and uh whats the address": addr
"great uh and if i wanted to uh go to an italian restaurant instead": food=italian, type=restaurant
"italian please": food=italian
"whats the address": addr
"i would like a cheap chinese restaurant": pricerange=cheap, food=chinese, type=restaurant
"something in the riverside": area=centre

Relation Detection Data: NL-SPARQL

The SPARQL queries corresponding to the natural language queries are converted into semantic relations as follows:

"how many movies has alfred hitchcock directed" -> movie.directed_by, movie.type, director.name

"who are the actors in captain america" -> movie.starring.actor, movie.name


Spoken Dialogue System (SDS)

Spoken dialogue systems are intelligent agents that help users finish tasks more efficiently via speech interactions.

Spoken dialogue systems are being incorporated into various devices (smartphones, smart TVs, in-car navigation systems, etc.).

Apple's Siri: https://www.apple.com/ios/siri/
Microsoft's Cortana: http://www.windowsphone.com/en-us/how-to/wp8/cortana/meet-cortana
Amazon's Echo: http://www.amazon.com/oc/echo/
Samsung's SMART TV: http://www.samsung.com/us/experience/smart-tv/
Google Now: https://www.google.com/landing/now/

Large Smart Device Population

The number of global smartphone users will surpass 2 billion in 2016.

As of 2012, there are 1.1 billion automobiles on Earth.

Device input is evolving towards speech, which is more natural and convenient.

Knowledge Representation/Ontology

Traditional SDSs require manual annotations for specific domains to represent domain knowledge.

Restaurant Domain: restaurant, with slots type, price, and location (relation: located_in)

Movie Domain: movie, with slots year, genre, and director (relations: directed_by, released_in)

Node: semantic concept/slot
Edge: relation between concepts

Utterance Semantic Representation

A spoken language understanding (SLU) component requires the domain ontology to decode utterances into semantic forms, which contain the core content (a set of slots and slot-fillers) of the utterance.

Restaurant Domain
"find a cheap taiwanese restaurant in oakland"
target="restaurant", price="cheap", type="taiwanese", location="oakland"

Movie Domain
"show me action movies directed by james cameron"
target="movie", genre="action", director="james cameron"

Challenges for SDS

An SDS in a new domain requires:
1) A hand-crafted domain ontology
2) Utterances labelled with semantic representations
3) An SLU component for mapping utterances into semantic representations

As spoken interactions increase, building domain ontologies and annotating utterances becomes so costly that the data does not scale up.

The thesis goal is to enable an SDS to automatically learn this knowledge so that open-domain requests can be handled.

Questions to Address

1) Given unlabelled raw audio recordings, how can a system automatically induce and organize domain-specific concepts?

2) With the automatically acquired knowledge, how can a system understand individual utterances and user intents?

Interaction Example

User: "find a cheap eating place for asian food"

Intelligent Agent: "Cheap Asian eating places include Rose Tea Cafe, Little Asia, etc. What do you want to choose? I can help you go there."

Q: How does a dialogue system process this request?

SDS Process - Available Domain Ontology

User: "find a cheap eating place for asian food"

[Figure: organized domain knowledge available to the intelligent agent. The slots seeking, target, price, and food are linked by the dependency relations PREP_FOR, AMOD, and NN. Ontology Induction provides the semantic slots; Structure Learning provides the inter-slot relations.]

SDS Process - Spoken Language Understanding (SLU)

User: "find a cheap eating place for asian food"

Semantic Decoding output: seeking="find", target="eating place", price="cheap", food="asian food"

SDS Process - Dialogue Management (DM)

User: "find a cheap eating place for asian food"

Query: SELECT restaurant restaurant.price="cheap" restaurant.food="asian food"

Surface Form Derivation (natural language) connects the query's slots to the user's wording.

Results: Rose Tea Cafe, Little Asia, Lulu's, Everyday Noodles

Behavior Prediction: predicted behavior is navigation

SDS Process - Natural Language Generation (NLG)

User: "find a cheap eating place for asian food"

Intelligent Agent: "Cheap Asian eating places include Rose Tea Cafe, Little Asia, etc. What do you want to choose? I can help you go there." (navigation)

Thesis Goals - 5 Goals

User: "find a cheap eating place for asian food"

Required domain-specific information: the slot structure (seeking, target, price, food with PREP_FOR, AMOD, and NN relations), the query SELECT restaurant restaurant.price="cheap" restaurant.food="asian food", and the predicted behavior (navigation).

Knowledge Acquisition
1. Ontology Induction (semantic slot)
2. Structure Learning (inter-slot relation)
3. Surface Form Derivation (natural language)

SLU Modeling
4. Semantic Decoding
5. Behavior Prediction

SDS Architecture - Thesis Contributions

[Figure: SDS pipeline with ASR, SLU, DM, NLG, and Domain components; the thesis contributes Knowledge Acquisition and SLU Modeling.]

Knowledge Acquisition

1) Given unlabelled raw audio recordings, how can a system automatically induce and organize domain-specific concepts?

[Figure: an unlabelled collection of restaurant-asking conversations is turned by Knowledge Acquisition into organized domain knowledge: the slots seeking, target, price, food, and quantity, linked by PREP_FOR, NN, and AMOD relations.]

Knowledge Acquisition covers:
1. Ontology Induction
2. Structure Learning
3. Surface Form Derivation

SLU Modeling

2) With the automatically acquired knowledge, how can a system understand individual utterances and user intents?

[Figure: SLU Modeling uses the organized domain knowledge to build an SLU component that maps "can i have a cheap restaurant" to price="cheap", target="restaurant", behavior=navigation.]

SLU Modeling covers:
4. Semantic Decoding
5. Behavior Prediction


Ontology Induction [ASRU'13, SLT'14a]

Input: unlabelled user utterances

Output: slots that are useful for a domain-specific SDS

Step 1: Frame-semantic parsing on all utterances to create slot candidates

Step 2: Slot ranking model to differentiate domain-specific concepts from generic concepts

Step 3: Slot selection

[Figure: an unlabelled collection of restaurant-asking conversations goes through Knowledge Acquisition to yield domain-specific slots: target, food, price, seeking, quantity.]

Y.-N. Chen et al., "Unsupervised Induction and Filling of Semantic Slots for Spoken Dialogue Systems Using Frame-Semantic Parsing," in Proc. of ASRU, 2013 (Best Student Paper Award).
Y.-N. Chen et al., "Leveraging Frame Semantics and Distributional Semantics for Unsupervised Semantic Slot Induction in Spoken Dialogue Systems," in Proc. of SLT, 2014.

Probabilistic Frame-Semantic Parsing

FrameNet [Baker et al., 1998]: a linguistic semantic resource based on frame-semantics theory. For example, in "low fat milk", "milk" evokes the "food" frame and "low fat" fills the descriptor frame element.

SEMAFOR [Das et al., 2014]: a state-of-the-art frame-semantic parser trained on manually annotated FrameNet sentences.

Baker et al., "The Berkeley FrameNet Project," in Proc. of International Conference on Computational Linguistics, 1998.
Das et al., "Frame-Semantic Parsing," Computational Linguistics, 2014.

Step 1: Frame-Semantic Parsing for Utterances

Example utterance: "can i have a cheap restaurant"

Frame: capability (FT LU: can; FE LU: i)
Frame: expensiveness (FT LU: cheap)
Frame: locale by use (FT/FE LU: restaurant)

Two of the frames are marked "Good" in the figure.

Task: adapting generic frames to domain-specific settings for SDSs

FT: Frame Target; FE: Frame Element; LU: Lexical Unit

Step 2: Slot Ranking Model

Main Idea: rank domain-specific concepts higher than generic semantic concepts.

In "can i have a cheap restaurant", each evoked frame (capability, expensiveness, locale by use) is a slot candidate and its lexical unit (can, cheap, restaurant) is the slot filler.

Rank a slot candidate s by integrating two scores:
- slot frequency in the domain-specific conversation: slots with higher frequency are more important
- semantic coherence of slot fillers: domain-specific concepts cover fewer topics

h(s): semantic coherence of the slot-filler set corresponding to s, measured by cosine similarity between the fillers' word embeddings.

Lower coherence in topic space: slot "quantity" with fillers a, one, all, three
Higher coherence in topic space: slot "expensiveness" with fillers cheap, expensive, inexpensive
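
A minimal sketch of the two scores in the slot ranking model. The coherence is taken as the average pairwise cosine similarity of filler embeddings, and the combination is a log-linear interpolation with weight alpha. The combination formula, the value of alpha, and the 2-dimensional embeddings are assumptions for illustration, because the slide's formula did not survive in the transcript.

```python
import math
from itertools import combinations


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def coherence(vectors):
    """h(s): average pairwise cosine similarity of a slot's filler embeddings."""
    pairs = list(combinations(vectors, 2))
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


def slot_score(frequency, coh, alpha=0.2):
    """Assumed combination: (1 - alpha) * log f(s) + alpha * log h(s)."""
    return (1 - alpha) * math.log(frequency) + alpha * math.log(coh)


# Hypothetical 2-d embeddings for the fillers shown on the slide.
expensiveness = [[1.0, 0.1], [0.9, 0.2], [1.0, 0.0]]          # cheap, expensive, inexpensive
quantity = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [0.2, 1.0]]    # a, one, all, three

h_exp = coherence(expensiveness)
h_qty = coherence(quantity)
```

With equal frequency, the slot whose fillers are more tightly clustered receives the higher score, which is the intended effect of the coherence term.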

Step 3: Slot Selection

Rank all slot candidates by their importance scores (frequency and semantic coherence).

Output slot candidates with higher scores based on a threshold.

Slot            Score
locale_by_use   0.89
capability      0.68
food            0.64
expensiveness   0.49
quantity        0.30
seeking         0.22

Selected slots: locale_by_use, food, expensiveness, capability, quantity
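
The slot selection step can be sketched as a simple cut on the ranked list. The scores are read from the slide with decimals restored (0.89, 0.68, ...). The threshold of 0.25 is a hypothetical value chosen so that only `seeking` is dropped, as in the figure; the function name is also an assumption.

```python
scores = {
    "locale_by_use": 0.89,
    "capability": 0.68,
    "food": 0.64,
    "expensiveness": 0.49,
    "quantity": 0.30,
    "seeking": 0.22,
}


def select_slots(scores, threshold):
    """Return slots whose score reaches the threshold, highest first."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [slot for slot, value in ranked if value >= threshold]


selected = select_slots(scores, threshold=0.25)
```

Lowering the threshold admits more generic slots, and raising it trades recall for precision in the induced ontology.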

Experiments of Ontology Induction

Dataset: Cambridge University SLU corpus [Henderson, 2012]
- Restaurant recommendation in an in-car setting in Cambridge
- WER = 37%
- Vocabulary size = 1868
- 2,166 dialogues
- 15,453 utterances
- Dialogue slots: addr, area, food, name, phone, postcode, price range, task, type

The mapping table between induced and reference slots is used for evaluation.

Henderson et al., "Discriminative Spoken Language Understanding Using Word Confusion Networks," in Proc. of SLT, 2012.

Experiments of Ontology Induction

Slot Induction Evaluation: Average Precision (AP) and Area Under the Precision-Recall Curve (AUC) of the slot ranking model, used to measure the quality of induced slots via the mapping table.

Approach                    ASR                             Manual
                            AP (%)          AUC (%)         AP (%)          AUC (%)
Baseline: MLE               56.7            54.7            53.0            50.8
MLE + Semantic Coherence    71.7 (+26.5%)   70.4 (+28.7%)   74.4 (+40.4%)   73.6 (+44.9%)

Induced slots reach over 70% AP and align well with human-annotated slots for SDS.

Semantic relations help decide domain-specific knowledge.


Structure Learning [NAACL-HLT'15]

Input: unlabelled user utterances

Output: slots with relations

Step 1: Construct a graph to represent slots, words, and relations

Step 2: Compute scores for edges (relations) and nodes (slots)

Step 3: Identify important relations connecting important slot pairs

[Figure: an unlabelled collection of restaurant-asking conversations is turned into a domain-specific ontology. Slots locale_by_use, food, expensiveness, seeking, relational_quantity, and desiring are linked by PREP_FOR, NN, AMOD, and DOBJ relations.]

Y.-N. Chen et al., "Jointly Modeling Inter-Slot Relations by Random Walk on Knowledge Graphs for Unsupervised Spoken Language Understanding," in Proc. of NAACL-HLT, 2015.

Step 1: Knowledge Graph Construction

Syntactic dependency parsing on utterances, e.g. "can i have a cheap restaurant" (arcs: ccomp, nsubj, dobj, det, amod), which evokes the slots capability, expensiveness, and locale_by_use.

Word-based lexical knowledge graph: each node w is a word (can, i, have, a, cheap, restaurant).

Slot-based semantic knowledge graph: each node s is a slot (capability, locale_by_use, expensiveness).

The edge between a node pair is weighted by relation importance.

How should the weights be chosen to represent relation importance?

Step 2: Weight Measurement - Slot/Word Embeddings Training

Dependency-based word embeddings: each word (e.g. can, have) gets a vector learned from the dependency parse of utterances such as "can i have a cheap restaurant" (arcs: ccomp, nsubj, dobj, det, amod).

Dependency-based slot embeddings: each slot (e.g. expensiveness, capability) gets a vector learned from the same parses, with slot-filling words replaced by their slots (capability, expensiveness, locale_by_use).

Levy and Goldberg, "Dependency-Based Word Embeddings," in Proc. of ACL, 2014.

Step 2: Weight Measurement

Compute edge weights to represent relation importance

Slot-to-slot relation $L_{ss}$: similarity between slot embeddings

Word-to-slot relation $L_{ws}$ or $L_{sw}$: frequency of the slot-word pair

Word-to-word relation $L_{ww}$: similarity between word embeddings

[Figure: two-layer graph with word nodes w1 to w7 linked by $L_{ww}$, slot nodes s1 to s3 linked by $L_{ss}$, and cross-layer edges $L_{ws}$ or $L_{sw}$]
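The three kinds of edge weights can be sketched as follows. This is a minimal illustration, assuming cosine similarity as the embedding similarity (the slide only says "similarity") and a zero diagonal so that nodes carry no self-loops. The helper names and the toy vectors are hypothetical.

```python
import math
from collections import Counter


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def similarity_matrix(embeddings):
    """Within-layer weights (slot-to-slot or word-to-word), zero diagonal."""
    names = list(embeddings)
    matrix = [
        [0.0 if a == b else cosine(embeddings[a], embeddings[b]) for b in names]
        for a in names
    ]
    return names, matrix


def frequency_matrix(slot_word_pairs, slots, words):
    """Cross-layer weights: how often each slot-word pair was observed."""
    counts = Counter(slot_word_pairs)
    return [[counts[(slot, word)] for word in words] for slot in slots]


# Toy slot embeddings (made-up values, for illustration only).
SLOT_EMBEDDINGS = {
    "expensiveness": [0.9, 0.1],
    "locale_by_use": [0.8, 0.3],
    "capability": [0.1, 0.9],
}
slot_names, l_ss = similarity_matrix(SLOT_EMBEDDINGS)
```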

Step 2: Slot Importance by Random Walk

Assumption: the slots with more dependencies to more important slots should be more important

The random walk algorithm computes importance for each slot

Slot importance combines the original frequency score with scores propagated from the word layer and then propagated within the slot layer

Converged scores can be obtained by a closed-form solution

https://github.com/yvchen/MRRW

[Figure: the two-layer graph of word nodes w1 to w7 and slot nodes s1 to s3, with edges $L_{ww}$, $L_{ss}$, and $L_{ws}$ or $L_{sw}$]
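One way to picture the propagation is the iterative sketch below. It is not the implementation from the repository above: the interpolation weight `alpha`, the column normalization, and the exact update rule are assumptions made for illustration, since the slide only names the ingredients. It also iterates to convergence instead of using the closed-form solution. All names and toy values are hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def column_normalize(matrix):
    """Scale each column to sum to 1 so that propagation preserves total mass."""
    col_sums = [sum(col) for col in zip(*matrix)]
    return [
        [value / total if total else 0.0 for value, total in zip(row, col_sums)]
        for row in matrix
    ]


def normalize(vector):
    total = sum(vector)
    return [v / total for v in vector]


def two_layer_random_walk(l_ss, l_ww, l_sw, slot_freq, word_freq,
                          alpha=0.9, tol=1e-12, max_iter=10000):
    """Interpolate frequency priors with scores propagated across both layers."""
    l_ws = column_normalize([list(col) for col in zip(*l_sw)])  # words x slots
    l_sw = column_normalize(l_sw)                               # slots x words
    l_ss = column_normalize(l_ss)
    l_ww = column_normalize(l_ww)
    r_s0, r_w0 = normalize(slot_freq), normalize(word_freq)
    r_s, r_w = r_s0[:], r_w0[:]
    for _ in range(max_iter):
        # Word scores move to the slot layer, then spread within it (and vice versa).
        prop_s = matvec(l_ss, matvec(l_sw, r_w))
        prop_w = matvec(l_ww, matvec(l_ws, r_s))
        new_s = [(1 - alpha) * a + alpha * b for a, b in zip(r_s0, prop_s)]
        new_w = [(1 - alpha) * a + alpha * b for a, b in zip(r_w0, prop_w)]
        delta = max(abs(a - b) for a, b in zip(new_s + new_w, r_s + r_w))
        r_s, r_w = new_s, new_w
        if delta < tol:
            break
    return r_s, r_w


# Toy graph: slots 0 and 1 are strongly related, slot 2 is weakly attached.
L_SS = [[0.0, 0.9, 0.1], [0.9, 0.0, 0.1], [0.1, 0.1, 0.0]]
L_WW = [[0, 1, 1, 0], [1, 0, 1, 0], [1, 1, 0, 1], [0, 0, 1, 0]]
L_SW = [[2, 1, 0, 0], [0, 1, 2, 0], [0, 0, 0, 1]]  # slot-word pair counts
SLOT_FREQ = [1, 1, 1]
WORD_FREQ = [1, 1, 1, 1]

slot_scores, word_scores = two_layer_random_walk(L_SS, L_WW, L_SW, SLOT_FREQ, WORD_FREQ)
print(slot_scores)
```

Although the three toy slots start with equal frequency, the weakly attached slot ends with the lowest score, which is the behavior the stated assumption asks for.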

Step 3: Identify Domain Slots with Relations

The converged slot importance suggests whether the slot is important

Rank slot pairs by summing up their converged slot importance

Select slot pairs with higher scores according to a threshold

Example pair scores: (s1, s2) is scored $r_s(1) + r_s(2)$; (s3, s4) is scored $r_s(3) + r_s(4)$

[Figure: the resulting domain ontology, with slots locale_by_use, food, expensiveness, seeking, relational_quantity, and desiring connected by dependency relations PREP_FOR, NN, AMOD, and DOBJ]
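The ranking and thresholding can be sketched in a few lines. The importance values and the threshold below are made up for illustration, `select_slot_pairs` is a hypothetical helper, and the sketch scores every slot pair, whereas the actual system may only consider pairs that are linked by a dependency.

```python
from itertools import combinations


def select_slot_pairs(importance, threshold):
    """Score each slot pair by the sum of its slots' importance and keep
    the pairs whose score reaches the threshold, highest score first."""
    scored = [
        ((a, b), importance[a] + importance[b])
        for a, b in combinations(sorted(importance), 2)
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [(pair, score) for pair, score in scored if score >= threshold]


# Made-up converged importance scores.
IMPORTANCE = {"locale_by_use": 0.5, "expensiveness": 0.3, "capability": 0.1}

for pair, score in select_slot_pairs(IMPORTANCE, threshold=0.5):
    print(pair, round(score, 2))
```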

(Experiment 1)

(Experiment 2)

Experiments for Structure Learning

Experiment 1: Quality of Slot Importance

Dataset: Cambridge University SLU Corpus

Approach | ASR AP (%) | ASR AUC (%) | Manual AP (%) | Manual AUC (%)
Baseline: MLE | 56.7 | 54.7 | 53.0 | 50.8
Random Walk: MLE + Dependent Relations | 69.0 (+21.8%) | 68.5 (+24.8%) | 75.2 (+41.8%) | 74.5 (+46.7%)

Dependent relations help decide domain-specific knowledge
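For readers unfamiliar with the AP column, the sketch below computes average precision for a ranked list of slots, assuming AP here denotes the standard ranking metric (the slide does not define it). The input is a hypothetical list of relevance flags ordered from the highest-scored slot to the lowest.

```python
def average_precision(ranked_relevance):
    """Mean of precision@k taken at each rank k that holds a relevant item."""
    hits = 0
    precision_sum = 0.0
    for rank, relevant in enumerate(ranked_relevance, start=1):
        if relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


# Hypothetical ranking: the 1st and 3rd slots are in the reference ontology.
print(average_precision([True, False, True]))
```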


Experiments for Structure Learning

Experiment 2: Relation Discovery Evaluation

Discover inter-slot relations connecting important slot pairs

The reference ontology with the most frequent syntactic dependencies

[Figure: the learned ontology (slots locale_by_use, food, expensiveness, seeking, relational_quantity, desiring; relations PREP_FOR, NN, AMOD, DOBJ) shown next to the reference ontology (slots type, food, pricerange, task, area; relations DOBJ, AMOD, PREP_IN)]


The automatically learned domain ontology aligns well with the reference one

Outline

Introduction

Ontology Induction [ASRU'13, SLT'14a]

Structure Learning [NAACL-HLT'15]

Surface Form Derivation [SLT'14b]

Semantic Decoding (submitted)

Behavior Prediction (proposed)

Conclusions & Timeline


Surface Form Derivation [SLT'14b]

Input: a domain-specific organized ontology

Output: surface forms corresponding to entities in the ontology

[Figure: domain-specific ontology for the movie domain, with nodes movie, char, director, and genre and relations directed_by, genre, and casting. Derived surface forms: char gives character, actor, role, ...; genre gives action, comedy, ...; director gives director, filmmaker, ...]

Y.-N. Chen et al., "Deriving Local Relational Surface Forms from Dependency-Based Entity Embeddings for Unsupervised Spoken Language Understanding," in Proc. of SLT, 2014.

Surface Form Derivation

Step 1: Mining query snippets corresponding to specific relations

Step 2: Training dependency-based entity embeddings

Step 3: Deriving surface forms based on embeddings

[Figure: pipeline from the knowledge graph and query snippets to entity embeddings and then to relational surface form derivation. Entity surface forms give $P_F(r \mid w)$, e.g. "filmmaker" and "director" for $director; entity syntactic contexts give $P_C(r \mid w)$, e.g. "play" for $character]

Step 1: Mining Query Snippets on Web

Query snippets including entity pairs connected with specific relations in KG

"Avatar is a 2009 American epic science fiction film directed by James Cameron": directed_by(Avatar, James Cameron)

"The Dark Knight is a 2008 superhero film directed and produced by Christopher Nolan": directed_by(The Dark Knight, Christopher Nolan)
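A minimal sketch of this mining step, assuming snippets are plain strings and that a snippet matches a relation when it mentions both entities of a knowledge-graph triple. The simple substring test and the helper name `mine_snippets` are assumptions for illustration; the third snippet is invented to show a non-match.

```python
# Knowledge-graph triples from the slide: (subject, relation, object).
TRIPLES = [
    ("Avatar", "directed_by", "James Cameron"),
    ("The Dark Knight", "directed_by", "Christopher Nolan"),
]

SNIPPETS = [
    "Avatar is a 2009 American epic science fiction film directed by James Cameron",
    "The Dark Knight is a 2008 superhero film directed and produced by Christopher Nolan",
    "Avatar was released in 2009",  # invented snippet: mentions only one entity
]


def mine_snippets(triples, snippets):
    """Group snippets by relation when they mention both entities of a triple."""
    mined = {}
    for subject, relation, obj in triples:
        for snippet in snippets:
            if subject in snippet and obj in snippet:
                mined.setdefault(relation, []).append((subject, obj, snippet))
    return mined


for subject, obj, snippet in mine_snippets(TRIPLES, SNIPPETS)["directed_by"]:
    print(subject, "->", obj, "|", snippet)
```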

Step 2: Training Entity Embeddings

Dependency parsing for training dependency-based embeddings

[Figure: dependency parse of "Avatar is a 2009 American epic science fiction film directed by James Cameron" (labels nsub, num, det, cop, nn, vmod, prop_by, pobj), with "Avatar" replaced by $movie and "James Cameron" by $director; vectors are learned for $movie, "is", "film", and the other tokens]

Levy and Goldberg, "Dependency-Based Word Embeddings," in Proc. of ACL, 2014.
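Before parsing, the entity mentions are replaced by their type tags, as the figure suggests. A minimal sketch of that substitution follows, assuming exact string matches; `tag_entities` is a hypothetical helper, not code from the slides.

```python
def tag_entities(snippet, entity_tags):
    """Replace each entity mention in the snippet with its type tag."""
    # Replace longer mentions first so a short mention cannot split a longer one.
    for mention in sorted(entity_tags, key=len, reverse=True):
        snippet = snippet.replace(mention, entity_tags[mention])
    return snippet


SNIPPET = "Avatar is a 2009 American epic science fiction film directed by James Cameron"
ENTITY_TAGS = {"Avatar": "$movie", "James Cameron": "$director"}

print(tag_entities(SNIPPET, ENTITY_TAGS))
```

The tagged snippet can then be dependency-parsed so that the tags, rather than the individual names, receive embeddings.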

Step 3: Deriving Surface Forms

Entity Surface Forms: learn the surface forms corresponding to entities

Most similar word vectors for each entity embedding (words with similar contexts)

$char: "character", "role", "who"

$director: "director", "filmmaker"

$genre: "action", "fiction"

Entity Syntactic Contexts: learn the important contexts of entities

Most similar context vectors for each entity embedding (contexts frequently occurring together)

$char: "played"

$director: "directed"
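Both derivations amount to a nearest-neighbor lookup around an entity embedding. The sketch below assumes cosine similarity as the ranking score and uses tiny made-up vectors; real embeddings would come from the training in Step 2. The function and variable names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def most_similar(entity_vector, candidates, top_k=2):
    """Return the top_k candidate names closest to the entity vector."""
    ranked = sorted(
        candidates,
        key=lambda name: cosine(entity_vector, candidates[name]),
        reverse=True,
    )
    return ranked[:top_k]


# Made-up vectors for illustration only.
ENTITY_VECTORS = {"$director": [0.9, 0.1, 0.0]}
WORD_VECTORS = {
    "director": [0.8, 0.2, 0.0],
    "filmmaker": [0.7, 0.1, 0.1],
    "comedy": [0.0, 0.1, 0.9],
}
CONTEXT_VECTORS = {"directed": [0.9, 0.0, 0.1], "played": [0.1, 0.9, 0.0]}

surface_forms = most_similar(ENTITY_VECTORS["$director"], WORD_VECTORS)
contexts = most_similar(ENTITY_VECTORS["$director"], CONTEXT_VECTORS, top_k=1)
print(surface_forms, contexts)
```

Ranking against word vectors yields entity surface forms, while ranking against context vectors yields entity syntactic contexts.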

Experiments of Surface Form Derivation

Knowledge Base: Freebase

670K entities; 78 entity types (movie names, actors, etc.)

Entity Tag | Derived Word
$character | character, role, who, girl, she, he, officier
$director | director, dir, filmmaker
$genre | comedy, drama, fantasy, cartoon, horror, sci