The markovchain package (useR! 2016)


The markovchain R package

Giorgio A. Spedicato

University of Bologna & UnipolSai Assicurazioni

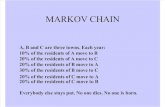

Abstract

Discrete Time Markov Chains (DTMCs) are a notable class of stochastic processes. Although their basic theory is rather simple, they are extremely effective for modelling categorical data sequences. Notable applications can be found in linguistics, information theory, life sciences, economics and sociology. The markovchain package aims to fill a gap within the CRAN ecosystem by providing a unified infrastructure to easily manage DTMCs and to perform statistical and probabilistic analyses on Markov chain stochastic processes. The package contains S4 classes and methods that make it straightforward to create and manage homogeneous and non-homogeneous DTMCs, so DTMCs can be created, represented and exported in a way that is natural and intuitive for any R programmer. It also provides functions for structural analysis of transition matrices (classification of matrices and states, analysis of the stationary distribution, periodicity, etc.). In addition, it provides methods to estimate transition matrices from data and to perform inference (confidence interval calculations, statistical tests on order and stationarity, etc.). Finally, embryonic procedures for Bayesian modelling of DTMCs, continuous-time Markov chains and higher-order chains have started to be provided.

Objective

Develop an easy-to-use software package for performing the most common statistical analyses on Markov chains.

Contact information

For further information please contact Giorgio A. Spedicato ([email protected]). This poster is available upon request.

Representing DTMCs

Creating and operating with DTMCs

Creating DTMC S4 objects is straightforward:

    ## create a DTMC object
    library(markovchain)
    # define a transition matrix
    weatherStates <- c("rainy", "nice", "sunny")
    weatherMatrix <- matrix(data = c(0.50, 0.25, 0.25,
                                     0.50, 0.00, 0.50,
                                     0.25, 0.25, 0.50),
                            byrow = TRUE, nrow = 3,
                            dimnames = list(weatherStates, weatherStates))
    # create the DTMC (long and short way)
    # long way: call the S4 constructor explicitly
    mcWeather <- new("markovchain", states = weatherStates, byrow = TRUE,
                     transitionMatrix = weatherMatrix, name = "Weather")
    # short way: coerce the matrix directly
    mcWeather2 <- as(weatherMatrix, "markovchain")
    name(mcWeather2) <- "Weather Mc"
    mcWeather2

    Weather Mc
     A 3 - dimensional discrete Markov Chain defined by the following states:
     rainy, nice, sunny
     The transition matrix (by rows) is defined as follows:
          rainy nice sunny
    rainy  0.50 0.25  0.25
    nice   0.50 0.00  0.50
    sunny  0.25 0.25  0.50

Algebraic and logical operations can be performed naturally:

    # equality
    mcWeather == mcWeather2
    #> TRUE
    # exponentiation
    mcWeather^2

    Weather Mc^2
     A 3 - dimensional discrete Markov Chain defined by the following states:
     rainy, nice, sunny
     The transition matrix (by rows) is defined as follows:
           rainy   nice  sunny
    rainy 0.4375 0.1875 0.3750
    nice  0.3750 0.2500 0.3750
    sunny 0.3750 0.1875 0.4375
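As a cross-check of the exponentiation above, the two-step transition probabilities can be reproduced in base R as a plain matrix product. This is only a sketch under the assumption that the chain is fully described by its transition matrix; `matPower` is a hypothetical helper written for this illustration, not a function of the markovchain package.

```r
# Base R only: the same weather matrix used in the poster
weatherStates <- c("rainy", "nice", "sunny")
P <- matrix(c(0.50, 0.25, 0.25,
              0.50, 0.00, 0.50,
              0.25, 0.25, 0.50),
            byrow = TRUE, nrow = 3,
            dimnames = list(weatherStates, weatherStates))

# Two-step transition probabilities are the matrix product of P with itself
P2 <- P %*% P
P2

# Hypothetical helper (not part of markovchain):
# n-step transition matrix by repeated multiplication
matPower <- function(M, n) {
  stopifnot(n >= 1)
  out <- M
  for (i in seq_len(n - 1)) out <- out %*% M
  out
}

# Each row of an n-step matrix is still a probability distribution
rowSums(matPower(P, 5))
```

The entries of `P2` should agree with the matrix printed for the squared chain, for example 0.4375 for rainy to rainy in two steps.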

The markovchain S4 plot method wraps plotting functions from the igraph and DiagrammeR packages:

    plot(mcWeather, main = "Weather transition matrix")
    plot(mcWeather, package = "DiagrammeR", label = "Weather transition matrix")

Structural analysis of DTMCs

The package supports structural analysis of DTMCs, thanks to an algorithm by Feres (2007) ported from Matlab.

    require(matlab)  # provides zeros()
    mathematicaMatr <- zeros(5)
    mathematicaMatr[1, ] <- c(0, 1/3, 0, 2/3, 0)
    mathematicaMatr[2, c(1, 5)] <- 0.5
    mathematicaMatr[c(3, 4), c(3, 4)] <- 0.5
    mathematicaMatr[5, 5] <- 1
    mathematicaMc <- new("markovchain", transitionMatrix = mathematicaMatr,
                         name = "Mathematica MC")
    names(mathematicaMc) <- LETTERS[1:5]
    # the summary method provides an overview of the structural characteristics of a DTMC
    summary(mathematicaMc)

    Mathematica MC  Markov chain that is composed by:
    Closed classes:
    C D E
    Recurrent classes:
    {C,D},{E}
    Transient classes:
    {A,B}
    The Markov chain is not irreducible
    The absorbing states are: E

Various functions have been defined for this purpose.

    # checking accessibility
    is.accessible(mathematicaMc, from = "A", to = "E")
    #> TRUE
    is.accessible(mathematicaMc, from = "C", to = "E")
    #> FALSE
    # canonic form
    myCanonicForm <- canonicForm(mathematicaMc)
    myCanonicForm

    Mathematica MC
     A 5 - dimensional discrete Markov Chain defined by the following states:
     C, D, E, A, B
     The transition matrix (by rows) is defined as follows:
        C         D   E   A         B
    C 0.5 0.5000000 0.0 0.0 0.0000000
    D 0.5 0.5000000 0.0 0.0 0.0000000
    E 0.0 0.0000000 1.0 0.0 0.0000000
    A 0.0 0.6666667 0.0 0.0 0.3333333
    B 0.0 0.0000000 0.5 0.5 0.0000000

    # periodicity
    period(mathematicaMc)
    #> 0
    #> Warning message: In period(mathematicaMc) : The matrix is not irreducible
    period(as(matrix(c(0, 1, 1, 0), nrow = 2), "markovchain"))
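To make the notion of accessibility concrete, the following base-R sketch rebuilds the five-state matrix and computes which states can be reached from which by repeatedly squaring a 0/1 adjacency matrix. It is an illustration only, not the algorithm the package uses; `reachable` is a hypothetical helper, and it assumes every state counts as reachable from itself.

```r
# Base R only: the five-state "Mathematica" matrix from the poster
states <- LETTERS[1:5]
P <- matrix(0, nrow = 5, ncol = 5, dimnames = list(states, states))
P["A", ] <- c(0, 1/3, 0, 2/3, 0)
P["B", c("A", "E")] <- 0.5
P[c("C", "D"), c("C", "D")] <- 0.5
P["E", "E"] <- 1

# Hypothetical helper (not part of markovchain):
# TRUE at [i, j] when state j can be reached from state i in zero or more steps
reachable <- function(P) {
  n <- nrow(P)
  R <- (P > 0) * 1        # 0/1 adjacency matrix of one-step moves
  diag(R) <- 1            # assumption: a state is reachable from itself
  for (k in seq_len(n)) { # squaring n times covers every path length
    R <- ((R %*% R) > 0) * 1
  }
  R > 0
}

R <- reachable(P)
R["A", "E"]  # E is reachable from A (via B)
R["C", "E"]  # E is not reachable from C, because {C, D} is closed
```

Rows of `R` that are identical to each other and mutually reachable identify communicating classes, which is the information the `summary` output groups into closed, recurrent and transient classes.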

Steady state distribution(s) can be easily found as well

    # finding the steady state
    steadyStates(mcWeather)

         rainy nice sunny
           0.4  0.2   0.4
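The steady state is the probability vector that the transition matrix leaves unchanged, that is, a left eigenvector for eigenvalue 1. A minimal base-R sketch of that idea follows; it is a cross-check under the assumption of a single recurrent class, not a description of how the package computes the result, and the name `piHat` is introduced here only for illustration.

```r
# Base R only: the weather matrix from the poster
weatherStates <- c("rainy", "nice", "sunny")
P <- matrix(c(0.50, 0.25, 0.25,
              0.50, 0.00, 0.50,
              0.25, 0.25, 0.50),
            byrow = TRUE, nrow = 3,
            dimnames = list(weatherStates, weatherStates))

# Left eigenvectors of P are right eigenvectors of t(P)
eig <- eigen(t(P))

# Pick the eigenvector whose eigenvalue is closest to 1
idx <- which.min(abs(eig$values - 1))
v <- Re(eig$vectors[, idx])

# Rescale so the entries sum to one
piHat <- v / sum(v)
names(piHat) <- weatherStates
piHat

# Stationarity check: piHat %*% P should return piHat again
drop(piHat %*% P)
```

For this matrix the vector should match the 0.4, 0.2, 0.4 reported above.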

Simulation, estimation and inference

Given a markovchain object, simulating a stochastic sequence from its transition matrix is straightforward:

    dailyWeathers <- rmarkovchain(n = 365, object = mcWeather, t0 = "sunny")
    dailyWeathers[1:7]

    "sunny" "sunny" "sunny" "sunny" "nice" "rainy" "rainy"
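Conceptually, simulating a DTMC means repeatedly drawing the next state from the row of the transition matrix that belongs to the current state. The base-R sketch below shows that loop; `simulateChain` is a hypothetical helper, not the package's implementation, and it assumes the returned path excludes the initial state `t0`.

```r
# Base R only: the weather matrix from the poster
weatherStates <- c("rainy", "nice", "sunny")
P <- matrix(c(0.50, 0.25, 0.25,
              0.50, 0.00, 0.50,
              0.25, 0.25, 0.50),
            byrow = TRUE, nrow = 3,
            dimnames = list(weatherStates, weatherStates))

# Hypothetical helper (not part of markovchain):
# draw n successive states, starting from t0 (t0 itself is not returned)
simulateChain <- function(P, n, t0) {
  states <- rownames(P)
  path <- character(n)
  current <- t0
  for (i in seq_len(n)) {
    # the next state is sampled from the row of the current state
    current <- sample(states, size = 1, prob = P[current, ])
    path[i] <- current
  }
  path
}

set.seed(1)  # arbitrary seed, only for reproducibility
sims <- simulateChain(P, n = 365, t0 = "sunny")
head(sims, 7)
table(sims) / length(sims)  # empirical state frequencies
```

Because the nice-to-nice probability is zero, a simulated path never contains two consecutive "nice" days, which is a quick sanity check on any simulator for this chain.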

The markovchainFit function estimates the underlying transition matrix from a given character sequence. Asymptotic standard errors and MLE confidence intervals are returned by default.

    mleFit <- markovchainFit(data = dailyWeathers)
    mleFit

    $estimate
               nice     rainy     sunny
    nice  0.0000000 0.4864865 0.5135135
    rainy 0.2406015 0.4586466 0.3007519
    sunny 0.2675159 0.2229299 0.5095541

    $standardError
                nice      rainy      sunny
    nice  0.00000000 0.08108108 0.08330289
    rainy 0.04253274 0.05872368 0.04755305
    sunny 0.04127860 0.03768204 0.05696988

    $confidenceInterval
    $confidenceInterval$confidenceLevel
    [1] 0.95

    $confidenceInterval$lowerEndpointMatrix
               nice     rainy     sunny
    nice  0.0000000 0.3531200 0.3764924
    rainy 0.1706414 0.3620548 0.2225341
    sunny 0.1996187 0.1609485 0.4158470

    $confidenceInterval$upperEndpointMatrix
               nice     rainy     sunny
    nice  0.0000000 0.6198530 0.6505346
    rainy 0.3105616 0.5552385 0.3789697
    sunny 0.3354132 0.2849114 0.6032613

    $logLikelihood
    [1] -354.3094
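The maximum likelihood estimate of a transition matrix is the table of observed transition counts with each row divided by its total. The base-R sketch below illustrates that on a short toy sequence; `mleTransition` and the sequence `x` are hypothetical and introduced only for this example, and the sketch does not reproduce the standard errors or confidence intervals that markovchainFit reports.

```r
# Hypothetical helper (not part of markovchain):
# row-normalised transition counts of a character sequence
mleTransition <- function(x) {
  states <- sort(unique(x))
  from <- factor(head(x, -1), levels = states)  # state at time t
  to   <- factor(tail(x, -1), levels = states)  # state at time t + 1
  counts <- table(from, to)
  # each row divided by its total; a state never left would give NaN
  prop.table(counts, margin = 1)
}

# Toy sequence with transitions a->b, b->a, a->a, a->b, b->b, b->a
x <- c("a", "b", "a", "a", "b", "b", "a")
est <- mleTransition(x)
est
```

From state "a" there are three observed transitions, two of them to "b", so the estimated probability of moving from "a" to "b" is 2/3.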

In addition to maximum likelihood, estimates can be obtained by bootstrap and Bayesian approaches.

Non-homogeneous DTMCs

Non-homogeneous DTMCs help when the transition probabilities between states change structurally over time. They are represented by an implicitly ordered list of markovchain objects.

    mcA <- as(matrix(data = c(0.4, 0.6, 0.1, 0.9), byrow = TRUE, nrow = 2), "markovchain")
    mcB <- as(matrix(data = c(0.2, 0.8, 0.2, 0.8), byrow = TRUE, nrow = 2), "markovchain")
    mcC <- as(matrix(data = c(0.1, 0.9, 0.5, 0.5), byrow = TRUE, nrow = 2), "markovchain")
    myMcList <- new("markovchainList", markovchains = list(mcA, mcB, mcC))
    myMcList

     list of Markov chain(s)
    Markovchain 1
    Unnamed Markov chain
     A 2 - dimensional discrete Markov Chain with following states:
     s1, s2
     The transition matrix (by rows) is defined as follows:
        s1  s2
    s1 0.4 0.6
    s2 0.1 0.9

    Markovchain 2
    Unnamed Markov chain
     A 2 - dimensional discrete Markov Chain with following states:
     s1, s2
     The transition matrix (by rows) is defined as follows:
        s1  s2
    s1 0.2 0.8
    s2 0.2 0.8

    Markovchain 3
    Unnamed Markov chain
     A 2 - dimensional discrete Markov Chain with following states:
     s1, s2
     The transition matrix (by rows) is defined as follows:
        s1  s2
    s1 0.1 0.9
    s2 0.5 0.5
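In a non-homogeneous chain, the state distribution after several steps is obtained by multiplying the initial distribution by each step's own transition matrix in order. The base-R sketch below works this through for the three matrices above; the starting distribution `pi0` (always starting in s1) is an assumption made for the example, and the variable names are illustrative.

```r
# Base R only: the three transition matrices from the poster, in order
states <- c("s1", "s2")
PA <- matrix(c(0.4, 0.6, 0.1, 0.9), byrow = TRUE, nrow = 2,
             dimnames = list(states, states))
PB <- matrix(c(0.2, 0.8, 0.2, 0.8), byrow = TRUE, nrow = 2,
             dimnames = list(states, states))
PC <- matrix(c(0.1, 0.9, 0.5, 0.5), byrow = TRUE, nrow = 2,
             dimnames = list(states, states))
chainList <- list(PA, PB, PC)

# Assumption for this example: the process starts in s1 with certainty
pi0 <- c(s1 = 1, s2 = 0)

# Apply each step's matrix in turn: pi0 %*% PA %*% PB %*% PC
d <- pi0
for (P in chainList) {
  d <- d %*% P
}
finalDist <- setNames(as.numeric(d), states)
finalDist
```

Working by hand: after PA the distribution is (0.4, 0.6), after PB it is (0.2, 0.8), and after PC it is (0.42, 0.58). The order of the list matters, since the matrices do not commute in general.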

Functions to simulate from and fit markovchainList objects are available as well:

    mcListSims <- rmarkovchain(n = 1000, object = myMcList, what = "matrix")
    head(mcListSims, 3)

         [,1] [,2] [,3]
    [1,] "s2" "s2" "s1"
    [2,] "s2" "s1" "s2"
    [3,] "s2" "s2" "s1"

    myMcListFit <- markovchainListFit(data = mcListSims)
    myMcListFit$estimate

     list of Markov chain(s)
    Markovchain 1
    Unnamed Markov chain
     A 2 - dimensional discrete Markov Chain with following states:
     s1, s2
     The transition matrix (by rows) is defined as follows:
              s1        s2
    s1 0.2326203 0.7673797
    s2 0.2220447 0.7779553

    Markovchain 2
    Unnamed Markov chain
     A 2 - dimensional discrete Markov Chain with following states:
     s1, s2
     The transition matrix (by rows) is defined as follows:
              s1        s2
    s1 0.3584071 0.6415929
    s2 0.3552972 0.6447028

Acknowledgements

Special thanks go to the Google Summer of Code project (2015-2016 sessions) and to the many users who continuously provide feedback, suggestions and bug reports.