The Interpose PUF: Secure PUF Design against …to obtain a software model of a PUF by using...

Transcript of The Interpose PUF: Secure PUF Design against …to obtain a software model of a PUF by using...

The Interpose PUF: Secure PUF Design againstState-of-the-art Machine Learning Attacks

Phuong Ha Nguyen1, Durga Prasad Sahoo2, Chenglu Jin1,Kaleel Mahmood1, Ulrich Rührmair3 and Marten van Dijk1

1 University of Connecticut,phuong_ha.nguyen,chenglu.jin,kaleel.mahmood,[email protected]

2 Bosch India (RBEI/ESY), [email protected] LMU München, [email protected]

Abstract. The design of a silicon Strong Physical Unclonable Function (PUF) that islightweight and stable, and which possesses a rigorous security argument, has beena fundamental problem in PUF research since its very beginnings in 2002. Variouseffective PUF modeling attacks, for example at CCS 2010 and CHES 2015, haveshown that currently, no existing silicon PUF design can meet these requirements. Inthis paper, we introduce the novel Interpose PUF (iPUF) design, and rigorously proveits security against all known machine learning (ML) attacks, including any currentlyknown reliability-based strategies that exploit the stability of single CRPs (we arethe first to provide a detailed analysis of when the reliability based CMA-ES attackis successful and when it is not applicable). Furthermore, we provide simulations andconfirm these in experiments with FPGA implementations of the iPUF, demonstratingits practicality. Our new iPUF architecture so solves the currently open problem ofconstructing practical, silicon Strong PUFs that are secure against state-of-the-artML attacks.

Keywords: Arbiter Physical Unclonable Function (APUF), Majority Voting, ModelingAttack, Strict Avalanche Criterion, Reliability based Modeling, XOR APUF, CMA-ES,Logistic Regression, Deep Neural Network.

1 IntroductionA Physical Unclonable Function (PUF) is a fingerprint of a chip which behaves as aone-way function in the following way: it leverages process manufacturing variation togenerate a unique function taking “challenges” as input and generating “responses” asoutput. Silicon PUFs were introduced in 2002 by Gassend et al. [GCvDD02] and are anemerging hardware security primitive in various applications such as device identification,authentication and cryptographic key generation [MV10, KGM+08, YMSD11, BFSK11].The fundamental open problem of strong silicon PUFs is: How to design a PUF that islightweight, strong and secure. Below we explain each of these three properties and discusscurrent PUF designs that do not satisfy all of these properties. We also propose a newPUF design, the Interpose PUF (iPUF) to solve this open problem.

Lightweight and Strong Design. A lightweight design must have a small number ofgates (small area) and in addition only limited digital computation is allowed with only asmall number of gate computations and without the need for accessing additional memory.This lightweight implementation gives a small hardware footprint and high throughput. Astrong PUF is one that has a large number of Challenge-Response Pairs (CRPs) which are

Licensed under Creative Commons License CC-BY 4.0.IACR Transactions on Cryptographic Hardware and Embedded Systems Vol. 0, No.0, pp.1—48,DOI:XXXXXXXX

2The Interpose PUF: Secure PUF Design against State-of-the-art Machine Learning

Attacks

impractical to enumerate.1

Security Argument. We consider an adversarial model in which an attacker attemptsto obtain a software model of a PUF by using information extracted from measuredCRPs. Intrinsically, a PUF hides a “random” function, and learning such functions frominput-output pairs is the field of machine learning – therefore, security must be arguedwith respect to the best-known applicable machine learning techniques. In practice wemeasure the security of a PUF by the number of required CRPs for modeling the PUFwith a sufficiently high prediction accuracy (e.g. 90%).

Current PUF Designs. Current strong PUFs designs can generally be placed intoone of three categories. 1. Strong PUFs without rigorous security arguments, e.g., thePower Grid PUF [HAP09], Clock PUF [YKL+13], Crossbar PUF [RJB+11]. 2. StrongPUFs based on the Arbiter PUF (APUF) or APUF design which have been broken bymachine learning based attacks as discussed in Section 2, e.g., the XOR APUF [SD07],the Feed-Forward PUF [LLG+05], LSPUF (Lightweight Secure PUF) [MKP08], BistableRing PUF [CCL+11], and MPUF (Multiplexer PUF) [SMCN18]. 3. Strong PUFs withsecurity proofs but are not lightweight, e.g., LPN PUF (Learning Parity with Noise basedPUF) [HRvD+17, JHR+17].

Machine Learning Categorization. In this paper we divide the best-known machinelearning attacks on PUFs into two types. The first type of attacks which use non-repeatedmeasurements of CRPs, are denoted as Classical Machine Learning (CML). We furtherdescribe various CML attacks in Section 2. The CML security arguments for the iPUF arebased on the theoretical arguments developed for the x-XOR APUF. It has already beenproven that for the x-XOR APUF, if x is large enough then it is secure against knownCML attacks [Söl09, RSS+10, TB15]. In this paper, we prove an equivalence relationshipbetween the (x, y)-iPUF and x-XOR APUF such that the developed theoretical frameworkfor CML for the x-XOR APUF can be applied to the iPUF. Specifically, if y is chosenlarge, then the iPUF is theoretically secure against CML, just like the x-XOR APUF. Inthis way, we can re-use the XOR APUF CML security framework without having to createand prove an entirely new CML security framework. However, regardless of how large x is,the x-XOR APUF is still vulnerable to a second type of machine learning attacks.

The second type of machine learning attacks we discuss in this paper are attacks thatuse repeated CRP measurements [DV13, Bec15] (see Section 2 for more details). Wedenote these types of attacks as reliability-based machine learning. In this paper we provethese attacks are unable to model the iPUF. Overall, the iPUF is secure against CMLattacks and reliability-based machine learning attacks. Moreover, it is as lightweight asan XOR APUF. Hence, the iPUF replaces the XOR APUF as a next-generation securelightweight strong PUF.

Contributions. In this paper, our contributions are as follows.

• Reliability-based CMA-ES (Covariance Matrix Adaptation Evolution Strategy) attacks.Through theoretical study, rigorous simulation and experimentation we explain whythe most prevalent reliability-based machine learning attack works on APUFs andXOR APUF. Moreover, we show under what circumstances the attack fails. Tothe best of our knowledge, we are the first ones to work on these two problems. Inaddition, we propose enhanced reliability-based CMA-ES attacks which are muchmore powerful than the original one.

• Interpose PUF (iPUF). We propose a novel APUF based PUF design called theiPUF. The iPUF is a lightweight, strong secure PUF design that solves the openfundamental problem of silicon PUFs. We show that the iPUF can prevent CML

1Assuming no additional physical limitation on the read-out speed of CRPs from the PUF.

Phuong Ha Nguyen, Durga Prasad Sahoo, Chenglu Jin, Kaleel Mahmood, UlrichRührmair and Marten van Dijk 3

attacks that use derivative based calculations (i.e. Logistic Regression) as well asthe main reliability-based machine learning attack (i.e. reliability-based CMA-ES).Moreover, by showing the similarity between the iPUF and XOR APUF, we canprove that the iPUF is secure against deep neural network attacks and CRP-basedCMA-ES attacks which are the most powerful classical attacks applicable to theiPUF.

• Open Source Library. We provide open source code on GitHub2 for all our simulationsand experiments. This includes code for machine learning attacks on APUF, XORAPUF and iPUF in Matlab, C#, and Python. Code to create various PUF modelson FPGA hardware is also given.

Paper Organization. The paper is organized as follows.The background on machine learning attacks is presented in Section 2. Section 3 briefly

introduces the APUF and iPUF designs. Here, we also explain the road map to provethe resistance of the iPUF against all known modeling attacks, and introduce the specificchosen set of parameters for a practical secure iPUF (see Section 3.4). After that, wegive a short description of reliability-based CMA-ES on XOR APUF and introduce itsenhanced variant on APUF and XOR APUF in Section 4.

Section 5 provides a comprehensive study of reliability-based CMA-ES on XOR APUF,i.e., why it works and when it fails. We move to the security analysis of iPUF regard-ing classical machine learning attacks and reliability-based machine learning attacks inSection 6. We explicitly provide the security conjecture used for iPUF security and thedesign philosophy of iPUF (see Section 6.1). We introduce the notions Contributionand Equivalence to show the relationship between iPUF and XOR APUF in Section 6.3.With the developed understanding of reliability-based CMA-ES, the knowledge of theequivalence between iPUF and XOR APUF, we now can show the resistance of the iPUFagainst all known attacks, i.e., the reliability-based CMA-ES attack, the special class ofmachine learning attacks developed only for iPUF (coined linear approximation attacks,see Section 4.3), the Logistic Regression (LR) attacks, the Deep Neural Network attacks,the CRP-based CMA-ES attacks, the Boolean function based attacks, Deterministic FiniteAutomata attacks and Perceptron attacks used for PAC learning framework in Section 6.5.Section 7 studies the strict avalanche criteria (SAC) and the reliability property of theiPUF.

We provide detailed simulations to support the analysis of iPUF in Section 8. Section 9presents the results for the iPUF implemented in FPGA. Here, we experimentally andtheoretically explain the impact of bad implementations and good implementations on thesecurity of the iPUF. We conclude our paper in Section 10. Moreover, we also provide abrief description of APUF and XOR APUF in Appendix A.1, the influence of a challengebit on the output of an APUF in Appendix A.2, Multiplexer PUF’s vulnerability withrespect to the reliability-based CMA-ES attack in Appendix A.3, and k-junta test inAppendix A.4.

2 Background on Machine Learning Attacks on PUFs

In this section we discuss background information related to the evolution of machinelearning attacks on PUFs.

2URL: https://github.com/scluconn/DA_PUF_Library

4The Interpose PUF: Secure PUF Design against State-of-the-art Machine Learning

Attacks

2.1 Classical Machine Learning Attacks BackgroundClassical Machine Learning (CML) was first introduced in 2004, where the 64 bit APUF wasshown to be vulnerable with respect to Support Vector Machine (SVM) [Lim04, LLG+05].The most efficient CML attack on APUF and XOR APUF [RSS+10, TB15] is LogisticRegression (LR) which uses non-repeated measurements of CRPs. LR was used to breakAPUFs and XOR APUFs in 2010 [RSS+10]. Rührmair et al. [RSS+10] demonstrated howthe x-XOR APUF for x ≤ 5 can be modeled with at least 98% accuracy using LR withnon-repeated measurements of CRPs. The state-of-the-art implementation of LR breaksthe 64 bit x-XOR APUF up to x ≤ 9 and a 128 bit x-XOR APUF up to x ≤ 7 [TB15].For larger x the XOR APUF has been shown to resist LR due to the subexponentialrelationship between x and the amount of training data required to model the XOR APUF[Söl09, RSS+10, TB15].

Many PUF designs based on the APUF can also be broken using CML. The BistableRing PUF borrows from the APUF design and can be broken like the APUF using aneural network [SH14] or SVM [XRHB15]. The Feed-Forward APUF (FFA) [LLG+05]is another variant of the APUF and can be broken using SVM, LR and ES (EvolutionStrategy) for ≤ 8 feedforward positions [RSS+10]. For a larger number of feedforwardpositions the FFA becomes unreliable and is therefore not considered to be practical. TheLightweight Secure PUF (LSPUF) [MKP08] is a variant of the XOR APUF with multipleoutputs. The LSPUF is based on the x-XOR PUF and consequently is vulnerable to LR[SNMC15] when x ≤ 9.

Another type of classical machine learning is the Probably Approximately Correct(PAC) [GTS15, GTS16, GTFS16] attack. PAC learning provides a framework to learnthe maximum number of CRPs required for modeling an XOR PUF, with given levels ofaccuracy and final model delivery confidence. The Perceptron algorithm used in the PAClearning framework is shown to be successful against the x-XOR APUF for x ≤ 4 [GTS15]and the Deterministic Finite Automata (DFA) [GTS16] algorithm can be used to modelAPUF. We note that DFA in [GTS16] can be modified to further attack x-XOR APUF,but the necessary modification is not fully explained. If we compare the LR attack onXOR APUF in [Söl09, RSS+10, TB15] with the Perceptron attack or with the DFA attackon XOR APUF in [GTS15], we can see that the LR attack is much more powerful. This isbecause that LR has a much smaller search space, uses a mathematical model of the XORAPUF, and uses gradient information in an optimization process. Therefore, we only focuson the LR attack for XOR APUF in this paper. In addition, there is one Boolean functionbased attack related to the PAC learning framework [GTFS16]. However we show that itis inapplicable to APUFs, XOR APUFs and iPUFs in Section 6.5.

In this paper we focus on the main classical machine learning attacks: LR, Deep NeuralNetwork [GBC16] (DNN) and Covariance Matrix Adaptation Evolution Strategy (CMA-ES) [Han16]. LR is considered as the most powerful attack on XOR APUF because it is agradient-based optimization and it uses the mathematical model of XOR APUF [Söl09,RSS+10]. Since DNN is considered as one of the most powerful black box attack (i.e.,does not require any mathematical model of the PUF), it is an interesting case for study.CMA-ES is a gradient free algorithm (i.e., the gradient is not used for finding the desiredmodel) and this property makes it different from algorithms that require gradient basedcomputations (i.e., the LR algorithm). By considering LR, DNN and CMA-ES algorithms,we complete the study of classical machine learning attacks on APUF-based PUFs (i.e.,XOR APUF and iPUF).

2.2 Reliability-based Machine Learning Attacks BackgroundThe first reliability-based attack was proposed in [DV13], where the authors used reliabilityinformation and Least Mean Square optimization method to attack APUF only. In other

Phuong Ha Nguyen, Durga Prasad Sahoo, Chenglu Jin, Kaleel Mahmood, UlrichRührmair and Marten van Dijk 5

words, this attack in [DV13] is not applicable to XOR APUFs. Later on, Becker [Bec15]was able to break the XOR APUF (and as a consequence the LSPUF) with a linearcomplexity in x using a non-classical ML attack which uses the reliability information ofCRPs and the CMA-ES method. Reliability information can be extracted from repeatedmeasurements of responses belonging to the same challenge [DV13]. This allows an attackerto measure the sensitivity of a response to environmental noise caused by e.g. temperatureand voltage variations.

In this paper we refer to attacks that use repeated CRP measurements as reliability-basedmachine learning attacks.3

Note that if responses are not sensitive to environmental noise, i.e., the PUF is veryreliable, then Becker’s reliability-based CMA-ES attack is not applicable. For this reasonone may want to add digital circuitry to the PUF in the form of a fuzzy extractor [DRS04]or an interface that exploits the LPN problem [HRvD+17, JHR+17], but these techniquesare not lightweight and therefore do not solve the stated fundamental problem. A morelightweight solution is presented in [WGM+17] where the reliability of an x-XOR APUFis enhanced using majority voting. However, this is not sufficiently lightweight as thesame circuitry (including memory for storing a counter) needs to be executed repeatedly alarge number of times which implies a large number of gate evaluations and reduction inthroughput. But more importantly, majority voting does not prevent the reliability-basedCMA-ES attack as the environment can be pushed to extremes in order to make thePUF more unreliable again. In [SMCN18], a new PUF design was introduced but it isalso not secure against CMA-ES attack (see Algorithm 1 in Section 5 in [SMCN18] orAppendix A.3). Since we only focus on the PUF primitive, PUF-based protocols whichcan prevent the reliability-based CMA-ES attack are out of the scope of this paper. Forexample the Lockdown protocol in [YHD+16] is one of good candidates. However, theiPUF design we propose is more lightweight and simpler than Lockdown protocol becauseit does not require many hardware primitives such as a True Random Number Generator,counters etc (see [YHD+16]).

3 The Arbiter PUF and Interpose PUFIn this section, we briefly introduce the analytical delay model [Lim04] and the reliabilitymodel [DV13] of the APUF. We also discuss the basic design of the newly proposed(x, y)-iPUF. Descriptions of both designs are necessary to understand the effectiveness ofML attacks on PUFs in Section 4. The reader can find the detailed description of APUFand XOR APUF in Appendix A.1.

3.1 The APUF Linear Additive Delay Model

In [Lim04, LLG+05], an analytical model called the Linear Additive Delay Model waspresented. As shown in [Lim04], the linear additive delay model of an APUF has the form:

∆ = w[0]Ψ[0] + · · ·+ w[i]Ψ[i] + · · ·+ w[n]Ψ[n] = 〈w,Ψ〉, (1)

where n is the number of challenge bits, w and Ψ are known as weight and parity (orfeature) vectors, respectively. The parity vector Ψ is derived from the challenge c as

3Reliability information can be regarded as a type of ‘side-channel’ information which is extractedfrom CRPs alone without the use of extraneous equipment, whereas additional equipment is needed inpower side-channel analysis etc. [RXS+14, TDF+14, TLG+15]. In this paper security is argued in anadversarial model where the attacker only has access to CRPs.

6The Interpose PUF: Secure PUF Design against State-of-the-art Machine Learning

Attacks

c = (c[0], . . . , c[i], . . . , c[n− 1])

(c[0], . . . , c[i], rx, c[i+ 1] . . . , c[n− 1])

rx

y-XOR APUF

x-XOR APUF

r

Figure 1: The (x, y)-iPUF (Interpose PUF) is comprised of an x-XOR APUF whose outputrx is interposed between c[i] and c[i+ 1] in the input of a y-XOR APUF.

follows:

Ψ[n] = 1, and Ψ[i] =n−1∏j=i

(1− 2c[j]), i = 0, . . . , n− 1. (2)

In this delay model, the unknown weight vector w depends on the process variationof the APUF instance (i.e. of a specifically manufactured APUF). The response to achallenge c is defined as: r = 0 if ∆ ≥ 0. Otherwise, r = 1.

3.2 The Arbiter PUF Reliability ModelDue to noise, the reproducibility or reliability of the output of a PUF is not perfect, i.e.,applying the same challenge to a PUF will not produce a response bit with the same valueevery time. The repeatability is the short-term reliability of a PUF in presence of CMOSnoise, and it is not the long-term device aging effect [DV13].

In an APUF we can measure the (short-term) reliability R (i.e., repeatability) for aspecific challenge c in the following way: Assume that the challenge c is evaluated Mtimes, and suppose the measured responses that are equal to 1 is N out of M evaluations.The reliability is defined as R = N/M ∈ [0, 1].

In Eq. (1) we showed the mathematical relationship between the parity vector Ψ andthe corresponding response ∆. Similarly, there exists a mathematical relationship betweenthe reliability R of a given challenge and its response ∆ as shown in [DV13]:

∆/σN =n∑i=0

(w[i]/σN )Ψ[i] = −Φ−1(R) (3)

where the noise follows a normal distribution N (0, σN ) and Φ(·) is the cumulative distri-bution function of the standard normal distribution. In the case where R ∈ [0.1, 0.9] afurther approximation [DV13] can be made:

∆/σN ≈ R. (4)

3.3 The iPUF DesignA (x, y)-iPUF consists of two layers. The upper layer is an n-bit x-XOR APUF and thelower layer is an (n+ 1)-bit y-XOR APUF. We denote the input to the x-XOR APUF ascx = (c[0], . . . , c[i], c[i+1], . . . , c[n−1]). The response rx of the x-XOR APUF is interposedin cx to create a new n+ 1 bit challenge cy = (c[0], . . . , c[i], rx, c[i+ 1], . . . , c[n− 1]). Thefinal response bit is the response ry of the y-XOR APUF with respect to the new challengecy. The structure of the (x, y)-iPUF is shown in Figure 1.

When creating an n-bit (x, y)-iPUF four important parameters determine its securityagainst ML attacks. The four parameters are n which represents the length of the iPUF’s

Phuong Ha Nguyen, Durga Prasad Sahoo, Chenglu Jin, Kaleel Mahmood, UlrichRührmair and Marten van Dijk 7

challenge, x which represents the number of APUFs in the upper layer XOR APUF, ywhich represents the number of APUFs in the lower layer XOR APUF, and the placewhere the response of the upper layer rx is interposed in the input challenge for the lowerlayer y-XOR APUF. In the next two sections, we will go over more details related to theML attacks on PUFs including the (x, y)-iPUF. Based on this knowledge, we explain howto properly choose the design parameters for the (x, y)-iPUF in Section 6.5 to secure itagainst various ML attacks.

3.4 iPUF Security PhilosophyThe iPUF design parameters are chosen based on certain machine learning attacks. Inthis section, we explain why we use certain specific attacks to dictate the choice of iPUFparameters instead of others. In Section 6 we will prove the (x, y)-iPUF is secure againstreliability based CMA-ES attacks, so we only focus our design choices here based onclassical machine learning attacks. Our explanation of design choices also requires us todevelop a hierarchy to rank some of the classical machine learning attacks. In this manner,we can show that the iPUF can defend against the strongest classical attack.

Theorem 1. For any given x-XOR APUF, the Logistic Regression (LR) attack is themost powerful attack among three classical machine learning attacks: the LR attack, theCRP-based CMA-ES attack and the Deep Neural Network (DNN) attack.

Proof. We note that the following comparison is only valid for XOR APUFs, and later wewill link these comparison results to the security of iPUFs. The comparison is based onthe following facts.

1. Fact 1. We can accurately describe the behavior of an XOR APUF using a mathe-matical model [Söl09, RSS+10].

2. Fact 2. The formulation of the LR attack exploits a-priori knowledge of the XORstructure in the XOR APUF, i.e., the mathematical model of the XOR APUF.The model used in the LR attack perfectly captures the structure of the XORAPUF [Söl09, RSS+10]. Moreover, LR is a gradient-based optimization methodology,i.e., for finding the true model, the gradient is based on a training dataset and themodel is updated in each iteration. The gradient information will give the mostoptimal updated direction to guide the current model to the true model in eachiteration. The reader is referred to [Sch16] for more details.

3. Fact 3. The CRP-based CMA-ES attack is a gradient free optimization methodol-ogy [Han16] and it uses the mathematical model of XOR APUF. In each iteration,it randomly generates a population of models based on the current model and thenkeeps only some best matching models among these models (see [Han16] or Section 4.1for more details). The current model will be updated based on the information fromthese chosen models. Therefore, CRP-based CMA-ES only gives a heuristic updateddirection to take the current model to the true model in each iteration. Therefore,CRP-based CMA-ES is not as efficient as LR (i.e., LR has the most optimal updateddirection in each iteration as described in Fact 2). We also perform this attack oniPUF and XOR APUF in Section 6.3.

4. Fact 4. The deep neural network algorithm is a black box optimization methodol-ogy [GBC16], i.e., it does not use the mathematical model of XOR APUF. This isthe main disadvantage compared to LR. Note that LR attack on XOR APUF takesthe advantage of the mathematical model of XOR APUF (see Fact 2). Therefore LRdoes not have to optimize as many parameters as a DNN to model a PUF. In otherwords, the LR attack is more efficient than the DNN attack.

8The Interpose PUF: Secure PUF Design against State-of-the-art Machine Learning

Attacks

We formalize the result in the following inequalities of attacking efficiency.

CMA-ES < Logistic Regression. (5)

Deep Neural Network < Logistic Regression. (6)

Our experimental results in Table 3 also confirm our arguments above. Hence, the mostpowerful classical attack on XOR APUF is LR. However the LR attack is not applicableon iPUF as we will show in Section 6.5. Therefore we have to show the resiliency ofthe iPUF against the DNN attack and the CRP-based CMA-ES attack. To the bestof our knowledge, there is no theory which can give a lower bound on the requirednumber of CRPs for training a machine learning model to learn an XOR APUF with agiven prediction accuracy using CRP-based CMA-ES, the DNN attack, or LR. Since thestructure of iPUF is only slightly different from the structure of XOR APUF, we do nothave such theory for iPUF either.

The x-XOR PUF has one theory which states that the number of CRPs required forthe LR algorithm exponentially increases when x increases [Söl09]. Moreover, this theorywas confirmed by experiments in [TB15] and the empirical lower bounds for the numberof CPRs required for the LR algorithm is presented in [TB15].

Fortunately, we can come up with an “approximate” theory to choose the iPUFparameters based on the following information. First we know from the above attackcomparison, LR is the strongest classical attack on an XOR APUF. We also know theXOR APUF’s state-of-the-art empirical lower bound of the number CRPs to make theattack work [TB15]. Second we know the security of the iPUF can be related to thesecurity of XOR APUF (which we formally develop in Theorem 4). The result in brief isthat the (x, y)-iPUF with i = n/2 is equivalent to (y + x

2 )-XOR APUF (see Theorem 4).Based on the two pieces of information above, we can conclude that if a (y + x

2 )-XORAPUF can be secure against LR, then the (x, y)-iPUF with i = n/2 can be secure againstDNN attacks and CRP-based CMA-ES attacks as well. The literature has shown thata 64-bit 10-XOR APUF is secure against LR attack [TB15]. Based on that design wesuggest using challenge length n = 64, (1,10)-iPUF with interpose bit in themiddle i = n/2 = 32 as a set of practical design parameters.

4 Reliability Based ML AttacksConceptually, instead of using CRPs like in Classical ML (CML) attacks, the repeatability(i.e. short-term reliability) of APUF outputs can be used to build an APUF model basedon a set of challenge-reliability (not response) pairs. The relationship between reliability, Rand the weights w of an APUF were shown in Eq. (3) and Eq. (4). In [DV13] a Reliabilitybased ML (RML) attack was done under the assumption that R ∈ [0.1, 0.9]. Based on thisassumption a system of linear equations was established using Eq. (4) so that w[i]/σNcould be solved for by using the Least Mean Square algorithm. This approach only appliesto the APUF, and not the XOR APUF and by extension it also does not apply to theiPUF. However, RML attacks can be done without assumptions about the range of R, aswe will explain in Section 4.1. Section 4.2 presents the enhanced version of this attackon APUF and XOR APUF. In Section 4.3 we show how to model the iPUF as a linearapproximation (LA) of an XOR APUF. This can be used to analyze to what extent theCML and RML attacks on the XOR APUF apply to the iPUF.

Phuong Ha Nguyen, Durga Prasad Sahoo, Chenglu Jin, Kaleel Mahmood, UlrichRührmair and Marten van Dijk 9

4.1 Reliability Based CMA-ESIn [Bec14, Bec15] an ML attack on APUFs was developed using CMA-ES with reliabilityinformation obtained from the repeated measurements of CRPs. More precisely, thereliability information R of a challenge c (i.e. (c, R)) is used in the attack instead of thecorresponding response r (i.e. (c, r)).

The rationale behind this attack is as follows: if the delay difference |∆| between thetwo competing paths in an APUF for a given challenge c is smaller than a threshold ε,then the corresponding response r would be unreliable in the presence of noise; otherwisethe response would be reliable. This implies that reliability information directly leaksinformation about the “wire delays” in an APUF model. Let ri be the i-th measuredresponse of challenge c for i = 1, . . . ,M . Two different definitions of R, as provided inEq. (7) and Eq. (8), are found in [DV13] and [Bec15], respectively:

R = 1M

M∑i=1

ri, (7)

R = |M/2−M∑i=1

ri|. (8)

Note similar to Eq. (7) and Eq. (8), repeatability for an APUF was defined as R =N/M ∈ [0, 1] (see Section 3.2). The objective of CMA-ES is to learn weights w =(w[0], . . . ,w[n]) together with a threshold value ε. All variables w[0], . . . ,w[n] are treatedas independent and identically distributed Gaussian random variables. The attack isconducted as follows:

1. Collect N challenge-reliability pairs

Q = (c1, R1), . . . , (ci, Ri), . . . , (cN , RN ),

where Ri is computed as in Eq. (8).

2. Generate K random models:

(w1, ε1), . . . , (wj , εj), . . . , (wK , εK).

3. For each model (wj , εj) (j = 1, . . . ,K), do the following steps:

(a) For each challenge ci (i = 0, . . . , N), compute the R′i as follows:

R′i =

1, if |∆| ≥ ε0, if |∆| < ε,

(9)

where ε = εj and ∆ follows from (1) and (2) with c = ci and w = wj . Notethat for a given model wj with input ci, R′i indicates whether the output ofthe response of the model is reliable or noisy.

(b) Compute the Pearson correlation coefficient ρj based on(R1, . . . , Ri, . . . , RN ) and (R′1, . . . , R′i, . . . , R′N ),

where Ri is computed as in Eq.(8).

4. CMA-ES keeps L models (wj , εj) which have the highest Pearson correlation ρ, andthen, from these L models, another K models are generated based on the CMA-ESalgorithm.

10The Interpose PUF: Secure PUF Design against State-of-the-art Machine Learning

Attacks

5. Repeat steps (3)-(4) for T iterations and model (w, ε) which has the highest Pearsoncorrelation ρ will be chosen as the desired model. Note that sometimes the chosenmodel may have low prediction accuracy and in this case we restart the algorithm tofind a model with higher prediction accuracy.

While the above pseudo-code is used to model an APUF, it can also be used to modelan x-XOR APUF. Let

Q = (c1, R1), . . . , (ci, Ri), . . . , (cN , RN )

be a set of challenge-reliability pairs of an x-XOR APUF instance. If the CMA-ESalgorithm for modeling an APUF is executed many times with the set Q, then it canproduce x different models for A0, . . . , Ax−1 with high probability [Bec15]. Although thereis no proof on how many times the CMA-ES algorithm has to be executed to get themodels of all APUF instances of the x-XOR APUF, experimentally it is observed thatthis value needs not to be large, i.e., 2x or 3x CMA-ES runs. As done in [Bec15], one canparallelize CMA-ES executions to build models for A0, . . . , Ax−1.

As explained above, the modeling of an x-XOR APUF simplifies to the modeling of xindependent APUF instances in the reliability based CMA-ES attack. This is significantbecause the relationship between x and the number of CRPs needed for the reliabilitybased CMA-ES attack is linear. As previously stated, CML attacks have an exponentialrelationship between the number of CRPs and x. Therefore increasing x is a valid wayto mitigate the CML attacks. However, the reliability based CMA-ES attack cannot bedefeated in the same manner.

4.2 Enhanced Reliability Based CMA-ES Attack On APUF and XORAPUF

In the reliability based CMA-ES attack, each model w is evaluated according to a fitnessfunction. The fitness function correlates the observed reliability R of the PUF to thereliability of the estimated model R′. Let us denote the observed reliability R in Eq. (8) byRoriginal and the model reliability R′ in Eq. (9) as R′original. The correlation between Roriginaland R′original is limited by the fact that the observed reliability Roriginal only ranges from[0,M/2], while the range of R′original is 0, 1. This is significant because the comparison ofR′original and Roriginal is not as accurate, since the two terms do not have the same range ofvalues.

We propose two alternative definitions for (Roriginal, R′original). We define (Rabsolute, R

′absolute)

and (Rcdf , R′cdf), as follows:

Roriginal = |M/2−M∑i=1

ri| and R′original =

1, if |∆| ≥ ε0, if |∆| < ε,

(10)

Rabsolute = |M/2−M∑i=1

ri| and R′absolute = |∆| (11)

Rcdf = 1M

M∑i=1

ri and R′cdf = Φ(−∆/σN ) (12)

We hypothesize that (Rcdf , R′cdf) can create a more accurate model than (Roriginal, R′original)

when attacking an individual APUF for two reasons. First (Rcdf , R′cdf) uses the proper

reliability ranges. Second (Rcdf , R′cdf) takes into account information from two sources:

reliability information and the response information of the APUF by not using any absolutevalue operation when computing R′cdf . However, when attacking an XOR APUF, the

Phuong Ha Nguyen, Durga Prasad Sahoo, Chenglu Jin, Kaleel Mahmood, UlrichRührmair and Marten van Dijk 11

response of each individual APUF is not known so (Rcdf , R′cdf) does not improve the

reliability based CMA-ES attack in this case.When attacking an XOR APUF (Rabsolute, R

′absolute) outperforms (Roriginal, R

′original) be-

cause it has the proper reliability ranges. Since it does not attempt to use any responseinformation (which is not available from individual APUFs in an XOR APUF), it alsooutperforms (Rcdf , R

′cdf).

The simulated results of the enhanced attacks on APUF and XOR APUFs are presentedin Section 8.3 and Section 8.4, respectively.

4.3 Linear Approximation of the (x, y)-iPUFA technique that can be used in conjunction with CML or RML is linear approximation;this technique is specifically designed for use against the (x, y)-iPUF. We develop thetechnique based on the following observation: The difference between the input challengeto the y-XOR APUF in an (x, y)-iPUF and the input to a standard y-XOR APUF isonly one bit. Assume i + 1 is the interposed bit position for the input challenge tothe y-XOR APUF in an (x, y)-iPUF. The input to the y-XOR APUF is denoted asclow = (c[0], . . . , c[i], rx, c[i+ 1], . . . , c[n− 1]). Instead of attempting to learn the x-XORAPUF in the (x, y)-iPUF to estimate rx, we can give a fixed value for bit i + 1, i.e.c′low = (c[0], . . . , c[i], 0, c[i+ 1], . . . , c[n− 1]).

By making this approximation we can effectively ignore the x-XOR APUF component ofthe (x, y)-iPUF and treat the (x, y)-iPUF as a y-XOR APUF. Through this approximationwe can do both CML and RML attacks on the (x, y)-iPUF while still using the XOR APUFmodel. These attacks are denoted as LA-CML and LA-RML. In Section 6.4.2 we analyzeunder what conditions the linear approximation accurately approximates the (x, y)-iPUFand how this attack can be mitigated.

5 Analysis of the Reliability based CMA-ES AttackThe reliability based CMA-ES attack is a serious security issue when designing a PUF.Our goal is to create a secure PUF design (the (x, y)-iPUF) that prevents this attack. Todo this, it is necessary to understand under what conditions the reliability based CMA-ESattack works. In this section, we consider the following questions for an in-depth analysisof the reliability based CMA-ES attack:

1. In [Bec15] it is noted that CMA-ES converges more often to some APUF instancesthan others when modeling the components of an x-XOR APUF. Essentially thismeans some APUFs in an x-XOR APUF are easier and some are harder to modelusing the given challenge-reliability pairs. Why does this happen?

2. What are the conditions such that CMA-ES never converges to a particular APUFinstance? In other words, under which condition does the reliability based CMA-ESattack fail?

The first question was posed in [Bec15] without any theoretical answer and the secondquestion has not been investigated in literature. The next experiments are aimed atanswering these questions. Based on the knowledge gained from these experiments, wedevelop the (x, y)-iPUF that is secure against the reliability based CMA-ES attack as wellas the other known classical machine learning attacks mentioned in Section 2.

5.1 Experiment-I: Understanding CMA-ES ConvergenceThe objective of this experiment is to analyze why some APUF instances are easier andsome are harder to model using reliability based CMA-ES. More specifically, we want to

12The Interpose PUF: Secure PUF Design against State-of-the-art Machine Learning

Attacks

Table 1: Relationship between the APUF model converged to by CMA-ES and the APUF’sdata set noise rate proportion in each data set Q

Rank n = 1 n = 2 n = 3 n = 4 n = 5 n = 6 n = 7 n = 8 n = 9 n = 10 FailedOccurrence 36% 5% 9% 5% 12% 7% 7% 5% 7% 5% 2%

Rank: APUF model had the n-th highest data set noise proportion in the data set.Failed: Failed to converge to any APUF model.Occurrence: Percentage of time a convergence occurred.

verify whether the probability of converging to an APUF model in CMA-ES is correlatedwith the “data set noise proportion” of that APUF instance present in a given data set Q.Here we define the data set noise proportion for a particular APUF in an x-XOR APUFas the number of noisy (unreliable) CRPs Qn due to the specified APUF divided by thenumber of total CRPs in Q. We define the noise rate β of a given PUF as follows. Let rc,1and rc,2 be the values of the first and the second measurements of the PUF for a givenchallenge c, respectively. The noise rate β of the PUF is equal to Prc(rc,1 6= rc,2), i.e.,β = Prc(rc,1 6= rc,2).

Every time we generate a new set Q, the challenge-reliability pairs (ci, Ri) of Q canbe divided into two parts. The first part is made up of the reliable challenge-reliability pairsQr and the second part is made up of the noisy challenge-reliability pairs Qn. Each APUFinstance Ai has its own set of noisy challenge-reliability pairs Qi,n ⊂ Qn, i = 0, . . . , x− 1.

Theorem 2. For a given dataset Q, the CMA-ES algorithm is more likely to converge tothe APUF instance which has the largest number of pairs in the combined set Qr ∪Qi,n,i.e., highest Pearson correlation, where Qr is the reliable CRPs and Qi,n is the noisy CRPsfor APUF instance i.

Proof. Since Qr is useful for modeling all the APUF instances, the set Qi,n should be largeto make the Qr∪Qi,n sufficiently large enough for modeling the i-th APUF instance. FromStep-4 of the reliability based CMA-ES attack in Section 4.1, it is obvious that CMA-EStries to converge to the APUF instance that has the largest value for |Qi,n|/|Q|.

To verify Theorem 2, we do the following experiment.

Experimental Setup: We run the CMA-ES algorithm 100 times on a 10-XOR APUFin simulation. Each time we run CMA-ES we use a new randomly generated dataset Qwhich contains 70 × 103 CRPs. The noise rate of each individual APUF is 20%. Notethat the 20% noise rate is chosen to be intentionally large to make the number of usedCRPs small, i.e., the bigger the noise of each APUF, the less number of CRPs requiredfor the reliability-based attack. This is by no means the only possible noise rate that couldwork, but we only use this noise rate here to illustrate the results of the experiment. Itis important to note that while the noise rate of each APUF is the same, the data setnoise proportion of each APUF in Q will change each time we generate a new Q. Foreach Q, we generate the challenge-reliability pairs for Q by measuring the response toeach challenge 11 times. Note the repeated measurement 11 is chosen heuristically herebased on two criteria. The repeated measurement must be large enough to capture thereliability information with an acceptable precision, but not too large that the repeatedmeasurement time significantly slows down the experiment run time.

Experimental Results: The results of experiment I are summarized in Table 1. Ineach run of CMA-ES, a model w is generated. We classify the model in the followingway: if the model w matches one of the APUF models with probability ≥ 0.9 or ≤ 0.1(the complement model), then we accept it as a correct model for that particular APUFinstance. If the generated model w does not match any of the APUF models we considerthe CMA-ES algorithm to have failed to correctly converge. Note that w can match withat most one APUF instance. This is because if the model w corresponds to a particular

Phuong Ha Nguyen, Durga Prasad Sahoo, Chenglu Jin, Kaleel Mahmood, UlrichRührmair and Marten van Dijk 13

APUF instance, then only that instance will have a matching probability ≥ 0.9 or ≤ 0.1,and the other APUF instances will have a matching probability around 0.5. This is due tothe good uniqueness property of simulated APUF instances. Note that in Experiments Iand II we are not a true adversary, i.e., we are a verifier regarding the reliability basedCMA-ES attack on the x-XOR PUF as described in [Bec15]. In other words, we know allAPUF instances and check whether a model produced by the CMA-ES algorithm is a correctmodel for one of them. The audience is referred to [Bec15] for the detailed description ofa real attack.

For the given Q, we measure the data set noise proportion of each APUF with respectto the challenges present in Q. If CMA-ES converges to the APUF model with the highestdata set noise proportion in the current Q, we increment the count in column one. IfCMA-ES converges to the APUF model with the second highest data set noise proportionin the current Q, we increment the count in column two and so on. If the CMA-ESalgorithm generates a model that corresponds to none of the APUFs then we say it failedto converge to any model.

Analysis of Results: The APUF with the highest data set noise rate proportion in Qshould have the highest Pearson correlation coefficient as explained in Theorem 2 andtherefore be the global maximum. However CMA-ES does not guarantee convergence tothe global maximum. When CMA-ES converges to a local maximum, it produces anotherone of the valid APUF models, or an invalid model. For this reason we can see in Table 1that we can converge to a PUF model that does not have the highest noise proportionwith small probability compared to the highest case, i.e.,

5100 ,

7100 ,

9100 ,

12100

36100 .

Overall every time we generate Q, the PUF with the highest noise proportion in Q willhave a high probability of being found by CMA-ES (i.e., 36/100). Likewise, the PUFs witha smaller noise proportion in Q will have a smaller probability of being found.

5.2 Experiment-II: CMA-ES Reliability ConditionsThe objective of this experiment is to understand under which condition the reliabilitybased CMA-ES attack fails to build a model for a particular APUF in an x-XOR APUF.From experiment I we know that CMA-ES is most likely to converge to the APUF modelwhich has the highest data set noise proportion in Q. We want to show that if the noiserate of the APUF instances in the x-XOR APUF’s output are not equal (i.e., drawn fromdifferent distributions), then some APUF models cannot be generated by CMA-ES.

Experimental Setup: We simulate a 2-XOR APUF consisting of two APUF instances,denoted as A0 and A1. The noise rate of both APUFs are set at 1%. We run CMA-ES onthe 2-XOR APUF 100 times. In each run of CMA-ES we generate a Q of size 70× 103,where in Q each challenge is evaluated 11 times to generate the reliability information.

In this experiment we hypothesize that CMA-ES fails to build a certain APUF modelwhen that model always has a lower noise rate (and therefore a lower data set noiseproportion in Q). To do this we manipulate the reliability information presented in thefinal output, such that A0 always has lower data set noise proportion. This is achieved byapplying majority voting to the responses of A0 before XOR-ing it with the response ofA1. In majority voting, we have experimented with M = 5 and M = 10 votes to observethe performance of CMA-ES. We note that the noise rate in this experiment (i.e., 1%) isnot related to the noise rate in Experiment-I (i.e., 20%). Here, we intentionally choosea small noise rate to demonstrate two things. First even if the noise rate of the APUFsare extremely small, the CMA-ES attack still works. Second even if there is a sufficientlysmall discrepancy in the noise rates between two APUFs, CMA-ES will converge to the

14The Interpose PUF: Secure PUF Design against State-of-the-art Machine Learning

Attacks

Table 2: Results of Experiment-IIM† Number of times A0 found Number of times A1 found5 8 9210 0 ‡ 99 ‡

† No. of measurements used in the majority voting of A0.‡ The sum of the two counts is not equal to 100 because one attack failed.

APUF model which is the nosiest. The numbers 5 and 10 for majority voting and 11 forreliability evaluation are chosen arbitrarily. While other values are possible here, we justuse one set of values to illustrate the results of our experiment.

Theorem 3. In a 2-XOR APUF, if one APUF instance is sufficiently more reliable thanthe other, then the CMA-ES fails to converge to the APUF which has lower noise rate.

Proof. We will prove the Theorem above using the experimental setup we described in thebeginning of Section 5.2. If the number of votes M is sufficiently large, then the outputof A0 is always more reliable than that of A1 due to the existing majority voting at theoutput of A0. This implies that |Q0,n|/|Q| |Q1,n|/|Q| for any given Q where Qi,n isthe set of noisy challenge-reliability pairs of Ai, i = 0, 1. For a given data set Q, as statedin Theorem 2, CMA-ES tends to converge to the model with the highest data set noiseproportion with high probability. Hence, CMA-ES always converges to A1 when M issufficiently large.Experimental Results: The experimental results are shown in Table 2. The first columncorresponds to the number of times the output was measured on A0 before majority votingwas done. The second and the third columns refer to the number of times the correctmodel was found by CMA-ES for A0 and A1, respectively.

Analysis of Results: From Table 2, it is clear that if M is sufficiently large (M ≥ 10),then the reliability based CMA-ES attack cannot build a model for A0. This is becausewe decreased the noise rate of A0 by applying majority voting. Since A0 is less noisy, itwill have a lower data set noise proportion in Q. As we established in the first experiment,CMA-ES tends to converge to the model with the highest data set noise proportion withhigh probability. In Table 2 we can clearly see this happening when M = 10, as CMA-ESis never able to build a model for A0 (the APUF with the lower data set noise proportion).

It is important to also note that just because the APUFs have different noise rates, itdoes not make the XOR APUF secure against the reliability based CMA-ES attack. Inthe setup described in this experiment, an attacker could simply learn a model for A1.Once the model for A1 has been learned the attacker could then use that model to removemost of the noisy challenge-reliability pairs corresponding to A1 from Q. Doing this wouldcreate a new dataset Qreduced from Q in which A0 would have the highest data set noiseproportion. The attacker could then run CMA-ES on Qreduced to get a model for A0.

5.3 Inferences from the ExperimentsTwo important points can be understood from the experiments in this section. In order forthe reliability based CMA-ES attack to be successful the following conditions must be met:

1. All APUF instances outputs must have the same influence on the final output of thePUF.

2. The noise rate of all APUF instances should be similar.

In the next section, we leverage the knowledge from these experiments to show howthe proposed iPUF can be secured against the reliability based CMA-ES attack.

Phuong Ha Nguyen, Durga Prasad Sahoo, Chenglu Jin, Kaleel Mahmood, UlrichRührmair and Marten van Dijk 15

6 Security Analysis of iPUF against Machine Learning At-tacks

In this section, we analyze the security and reliability of the proposed (x, y)-iPUF design.Starting in Section 6.1 we discuss our basic conjecture to reason about the security of theiPUF design. In Section 6.2 we begin our security analysis by first showing how a singlechallenge bit in an APUF effects its output r. We then develop a relationship between thesecurity of the (x, y)-iPUF and x-XOR APUF in Section 6.3. We then use the analysis fromSection 6.2 to determine the most secure position for the interposed bit in a (1, 1)-iPUF inSection 6.4.2. Since the iPUF is developed to prevent the reliability-based ML attacks, weneed to study the reliability of the (1, 1)-iPUF as shown in Section 6.4.1. After that, weexplain why iPUF’s design can prevent reliability-based ML attacks. While the (1, 1)-iPUFcan prevent reliability-based ML attacks, properly choosing the interposed bit positionalone is not enough. The (1, 1)-iPUF still suffers from classical machine learning, LA-CMLand LA-RML attacks (see Section 4.3). Therefore, in Section 6.5 we expand our analysisto the (x, y)-iPUF where x ≥ 1 and y > 1. By using a middle interposed bit and properlychoosing x and y ≥ 2 we are able to show that the (x, y)-iPUF is secure against all currentstate-of-the-art ML attacks. Based on our analysis we claim properly designed iPUFs aresuperior to XOR APUFs in terms of security, reliability and hardware overhead.

6.1 Security Conjecture and Design Philosophy of iPUFSecurity Conjecture. Our basic security conjecture is “if x is sufficiently large, thenan x-XOR APUF is practically secure against all known classical ML attacks” as statedin the literature [Söl09, RSS+10, TB15] (this is not an NP-hard problem). We showthat the (x, y)-iPUF is equivalent to the (x/2 + y)-XOR APUF, (see Definition 2 forthe definition of equivalence), so it inherits the same classical ML security. Furthermorethe iPUF can prevent the reliability-based CMA-ES attack which the XOR APUF isvulnerable to. For PUF research, the XOR APUF w.r.t. classical ML is similar to AES(Advanced Encryption Standard) block cipher in the sense that it is considered a hardproblem by itself [Söl09, RSS+10, TB15], i.e., the security of AES is not reduced to anyintractable problem [DR02]. Indeed, we can show that among all known classical MLattacks on XOR APUF, LR attack is the most powerful attack (see Theorem 1) because itis a gradient-based optimization method and it uses a mathematical model of the XORAPUF. In other words, it uses the information about the XOR APUF (the XOR APUFmathematical model) in the most efficient way. No non-repeated measurement ML attackcan match LR. Moreover, the LR attack on XOR APUFs has a full theory developed on therelationship between the modeling accuracy and modeling complexity [Söl09]. This theoryis strongly supported with empirical results [TB15]. In summary, we deeply understandthe resistance of XOR APUF against all known machine learning attacks.

Design Philosophy. Our design philosophy is as follows: We propose that it is possibleto construct a strong PUF whose security can be reduced to an intractable problem(classical ML on XOR APUF). This practice of basing PUF security on its reductionto an intractable problem is not unique to this paper. For example the LPN-basedPUF [HRvD+17, JHR+17] uses this exact same formulation and reduces its security to awell-known computational hardness assumption: learning parity with noise (LPN). Notethat the large matrix operations in LPN-based PUF has an extremely large hardwarefootprint making it not lightweight and hence not a solution to the fundamental PUFproblem considered in this paper. To develop a lightweight and secure strong PUF, weadopt another approach. We use broken but lightweight and reliable PUF primitives(i.e., APUF) to develop a secure, lightweight and reliable PUF design (i.e., iPUF) whichcan be shown to be equal to some hard problems (classical ML on an XOR APUF). We

16The Interpose PUF: Secure PUF Design against State-of-the-art Machine Learning

Attacks

consider this approach as a practical and proper methodology to develop a lightweight andsecure strong PUF. To re-iterate, this process is commonly accepted in the cryptographycommunity to develop cryptographic primitives such as block ciphers, stream ciphers andhash functions. In all these cryptographic primitives the secure designs are built on insecurecomponents such as s-box, non linear shift register, etc [DR02, MOV96]. Moreover, themathematical model of the APUF is fully developed and the iPUF is a simple compositeAPUF design. Hence, a full mathematical model of the iPUF can be developed for analysispurpose. This point is significantly important, because we can do a clean security analysisas we will show in the rest of the paper. In summary, we use APUF as a building block ofiPUF because it is a lightweight, reliable strong PUF with a clean mathematical model foranalysis purpose. Our iPUF design is also significantly simple. Hence, it also offers a cleanframework for conducting security analysis.

6.2 Influence of Challenge Bit c[j] on the Response r in the APUFThe influence of a challenge bit on an APUF’s response depends on its position inthe challenge c [MKP09, DGSV14, NSCM16]. From Eq. (1), it can be observed thatΨ[j+ 1], . . . ,Ψ[n− 1] does not depend on challenge bit c[j]. For a given challenge c, basedon the linear delay model of the APUF, the delay difference ∆ can be described as:

∆ = (1− 2c[j])×∆Flipping + ∆Non−Flipping (13)

where ∆Flipping is the term affected by the flipping of bit c[j] and is given by ∆Flipping =∑ji=0 w[i] Ψ[i]

(1−2c[j]) . Likewise, the term that is not dependent on the flipping of c[j] isdenoted as ∆Non−Flipping =

∑ni=j+1 w[i]Ψ[i].

If c[j] = 0 then ∆ = ∆Flipping + ∆Non−Flipping and we will denote this ∆ as ∆c[j]=0with corresponding response rc[j]=0. Similarly when c[j] = 1 we will have ∆ = −∆Flipping+∆Non−Flipping. We denote this ∆ as ∆c[j]=1 and the response as rc[j]=1.

We want to know the influence of flipping c[j] on output r. We measure this influenceby computing the probability that the output remains the same if we flip bit c[j] whilekeeping the rest of c constant:

Prc(rc[j]=0 = rc[j]=1). (14)

In Appendix A.2, we explain:

Prc(rc[j]=0 = rc[j]=1) ≈ (n− j)n

, j = 0, . . . , n− 1 (15)

This implies that an expected probability Prc(rc[j]=0 = rc[j]=1) decreases with in-creasing value of j, so the influence of each challenge bit is not equal. This undesirablesecurity property means we must carefully consider the position of the interposed bit.In the next section we analyze how the interposed bit position effects the security andreliability of the (1, 1)-iPUF. We note that the influence of individual challenge bitson the response is highly related to the SAC (Strict Avalanche Criterion) introducedin [MKP09, DGSV14, NSCM16], and the SAC property of iPUF will be further explainedand analyzed in Section 7.2.

6.3 The Relationship between (x, y)-iPUF and (x + y)-XOR APUFFor a fixed challenge we study the contribution of each APUF component of an APUF-based PUF (i.e. XOR APUF or iPUF) to its output. Here we define contribution in thefollowing manner.

Phuong Ha Nguyen, Durga Prasad Sahoo, Chenglu Jin, Kaleel Mahmood, UlrichRührmair and Marten van Dijk 17

Definition 1. [Contribution] For a given challenge c, if the output of an APUF Ai flips(while the rest of the APUF outputs are held constant) and this causes the final responseof the APUF-based PUF r to flip, then we say that Ai contributes to the output for thechallenge c.

In an x-XOR APUF it can clearly be seen that each of the x APUFs contribute to theoutput. This is because in an XOR gate flipping any one of the inputs always causes theoutput to flip. We know the more APUFs that contribute to the output, the harder theXOR APUF design is to attack with classical machine learning.

Definition 2. [Equivalence] For a given challenge c, if an APUF-based PUF has mAPUFs contributing to the final response, then we say that this PUF is equivalent to anm-XOR APUF for the challenge c.

Theorem 4. If the interposed bit position is i, then averaged over all possible challengesc the n-bit (x, y)-iPUF is equivalent to the n-bit (y + prx)-XOR APUF where

pr = 1− (1− 2p)y

2 and p = i

n.

Proof. We denote r0y as the output of the iPUF when rx = 0 with cy = (c[0], . . . , c[i], rx =

0, c[i + 1], . . . , c[n − 1]) and r1y as the output of the iPUF when rx = 1 with cy =

(c[0], . . . , c[i], rx = 1, c[i+ 1], . . . , c[n− 1]). Based on our definition of contribution above,if r0

y = r1y it means that the x-XOR APUF output rx does not contribute to the final

output ry of the iPUF. In this case only y APUFs contribute to the output of the iPUF.Note we can also write r0

y = r1y as r1

y ⊕ r0y = 0. Alternatively if r1

y ⊕ r0y = 1 then the output

of (x, y)-iPUF depends on the output rx of x-XOR PUF, as well as the output ry of they-XOR PUF. This implies that there are (x + y) APUFs which contribute to the finaloutput ry for a given challenge. Therefore, the challenge-response space of an (x, y)-iPUFcan be partitioned into two groups. The first group represents challenge-response pairswhere the response only depends on the y APUFs of the y-XOR PUF. The second groupof challenge-response pairs has responses which depend on both the x-XOR APUF andthe y-XOR APUF (the response depends on a total of (x+ y) APUFs). Now we calculatethe expected number of challenge-response pairs in each group. First, we will compute theprobability the challenge-response pair is in the second group:

pr = Prc(r0y 6= r1

y) = Prc(r0y ⊕ r1

y = 1) (16)

Let rlow,0, rlow,1, . . . , rlow,y−1 be the outputs of the y APUFs in the lower layer y-XORPUF when rx is 0. We will assume ideal classical machine learning conditions whereno measurement noise is present. Because there is no measurement noise, Section 6.2is applicable: For a given challenge c, rlow,i depends on the upper layer output rx (inthat output rlow,i would flip if rx would be substituted by 1) with probability p =(i+ 1)/(n+ 1) ≈ i/n if the feedback position of rx is i. For a given challenge c, pr is theprobability that the response of (x, y)-iPUF is equal to r = 1⊕ rlow,0 ⊕ . . .⊕ rlow,y−1 if rxwould be substituted by 1. Then pr depends on i:

pr =y∑

k=1,k odd

(y

k

)pk(1− p)y−k = 1− (1− 2p)y

2 . (17)

4

4For any given a and b, we have:

(a+ b)x − /+ (a− b)x = 2x∑

j=0,j is odd/ even

(xj

)ajbx−j .

Hence, replacing a = 1− p and b = p yields Eq.(17).

18The Interpose PUF: Secure PUF Design against State-of-the-art Machine Learning

Attacks

0.5

0.6

0.7

0.8

0.9

1

1-XP

2-XP

3-XP

4-XP

5-XP

6-XP

(1,5)

-IP

(2,4)

-IP

(3,3)

-IP

(4,2)

-IP

(5,1)

-IP

Pred

ictio

n A

ccur

acy

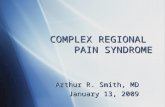

Figure 2: Prediction accuracy of CRPs based CMA-ES attack on 64-bit APUF (1-XP),64-bit 2,3,4,5,6-XOR APUF (y-XP) and (1,5), (2,4), (3,3), (4,2), (5,1)-iPUF ((x,y)-IP).

Thus, a fraction 1− pr of challenge-response pairs is in the first group and a fraction prof challenge-response pairs is in the second group. For a given (x, y)-iPUF with parameteri, the expected number of APUFs contributing to the response of a given challenge is

= (1− pr)y + pr(x+ y) (18)= y + prx

Simulation Validation. Through simulation we compare the (x, y)-iPUF and the (x+y)-XOR APUF. Since we only focus on the design, we consider reliable PUFs to experimentallydemonstrate Eq. (18), i.e. If the interposed bit position is in the middle, then the (x, y)-iPUF is equivalent to a (y + x

2 )-XOR APUF (see Theorem 4). We run the CRPs-basedCMA-ES attack on 64-bit APUF (a.k.a 1-XOR APUF or 1-XP), 64-bit 2,3,4,5,6-XORAPUF (y-XP) and (1,5), (2,4), (3,3), (4,2), (5,1)-iPUF ((x,y)-IP) with 200,000 CRPs fortraining. In each attack the CMA-ES algorithm is run for 1000 iterations. The results areshown in Fig 2. For each PUF, we attack it 10 times. The prediction accuracy of eachattack is computed using 2000 CRPs. Fig 2 shows that the experimental results closelymatch the theory presented in Eq. (18). Note that the prediction accuracy of (5,1)-iPUFis higher than that of 3-XOR APUF or 4-XOR APUF, because when the lower layer onlyhas one APUF, the linear approximation attack becomes effective. Here the attacker onlyneeds to model the APUF in the lower layer accurately, after which the upper bound onthe accuracy for the linear approximation attack on (x, 1)-iPUF can be achieved, which is75% accuracy. A detailed analysis of the linear approximation attack will be provided inSection 6.4.2.

6.4 Security and Reliability Analysis of the (1,1)-iPUFThe most basic form of the (x, y)-iPUF is the (1, 1)-iPUF, with the upper layer consistingof a single APUF Aup and the lower layer consists of a single APUF Alow. Let us denotethe responses to Aup and Alow by rup and rlow, respectively. The final output of the(1, 1)-iPUF is the response rlow. Based on the (1, 1)-iPUF structure we analyze where to

Phuong Ha Nguyen, Durga Prasad Sahoo, Chenglu Jin, Kaleel Mahmood, UlrichRührmair and Marten van Dijk 19

interpose the bit in the lower APUF and how this affects the reliability and security of the(1, 1)-iPUF.

6.4.1 Reliability of the (1,1)-iPUF

In order to determine the effect of measurement noise in the (1, 1)-iPUF, we evaluate achallenge c twice. Let us denote rup,0 as the response of the upper APUF and rlow,0 as theresponse of the lower APUF the first time the challenge is applied. Likewise, let us denoterup,1 and rlow,1 as the APUF’s responses the second time the challenge is evaluated.

Assume APUF Aup has noise rate βup such that Prc(rup,0 6= rup,1) = βup. Similarly,assume that APUF Alow has noise rate βlow such that Prc(rlow,0 6= rlow,1|rup,0 = rup,1) =βlow.

Let us denote i+ 1 as the interposed bit position for rup in the (n+ 1)-bit challengeof Alow. If the flipping of the output of Aup flips the output of Alow and Alow is itselfreliable, then the output of Alow should be flipped. This event occurs with probability(1 − βlow) i+1

n+1 . If the flipping of the output of Aup does not flip of the output of Alowand Alow is itself noisy, then the output of Alow flips. This event occurs with probabilityβlow

(1− i+1

n+1

). Therefore, the event where flipping the output of Aup flips the output of

Alow occurs with the following probability

Prc(rlow,0 6= rlow,1|rup,0 6= rup,1) = (1− βlow) i+ 1n+ 1 + βlow

(1− i+ 1

n+ 1

).

We use the equality above and Eq. (15) to derive

Prc(rlow,0 6= rlow,1)= Prc(rlow,0 6= rlow,1|rup,0 = rup,1)Prc(rup,0 = rup,1) +

Prc(rlow,0 6= rlow,1|rup,0 6= rup,1)Prc(rup,0 6= rup,1)

= βlow(1− βup) + [(1− βlow) i+ 1n+ 1 + βlow

(1− i+ 1

n+ 1

)]βup

= βlow(1− βup) + [(1− 2βlow) i+ 1n+ 1 + βlow]βup. (19)

In practice βup 1 and βlow 1, thus Eq. (19) can be approximated as:

Prc(rlow,0 6= rlow,1) ≈ βlow + βupi

n(20)

From Eq. (19) and Eq. (20) it can be seen that the reliability information of Aup andAlow available at the output of (1, 1)-iPUF are not equal even when βlow = βup. If weassume βlow = βup = β then Alow contributes approximately β while Aup contributes ap-proximately β i

n . The unequal reliability contribution shown by our analysis has importantimplications of the resilience against the reliability based CMA-ES attack.

6.4.2 Security of (1, 1)-iPUF

We will now discuss the security of the (1,1)-iPUF with respect to the reliability basedCMA-ES attack (i.e. RML), CML and ML attacks that use a linear approximationtechnique (i.e. LA-CML and LA-RML).

Reliability Based CMA-ES Attack: The (1,1)-iPUF is theoretically secure againstthe CMA-ES reliability attack for two reasons if the interposed bit position for rup isproperly chosen: The first reason is based on the conditions under which the CMA-ESattack operates and the second is based on the computation done to learn the APUFmodel in CMA-ES.

20The Interpose PUF: Secure PUF Design against State-of-the-art Machine Learning

Attacks

First, recall the conditions under which the reliability based CMA-ES attack worksas described in Section 5.2. In order to successfully model the APUF components Aupand Alow, each APUF must contribute equal reliability information to the output. For the(1,1)-iPUF, we showed in Eq. (20) that the contributions of Alow and Aup are not equal,i.e., β is not equal to β i

n when i is between 0 and n2 . Due to the unequal contribution of

reliability information in the output when the interposed bit position (i+ 1) is not close ton, CMA-ES will not converge to the model for Aup. We did the following experiment todetermine the importance of the interposed bit position. We launched the reliability basedCMA-ES attack on a 64-bit (1,1)-iPUF with 30,000 challenge-reliability pairs. In thisexperiment, the noise rate of each APUF was 20% (β = 0.2). The number of iterations forCMA-ES was 30,000. We repeated the attack 20 times with the interposed bit position at 0(i = 0), 32 (i = n/2) and 64 (i = n). The result shows that Aup can be modeled (i.e.,the prediction accuracy of the model of Aup is 98%) when i = n = 64. However,if the inserted position is in the middle (i = 32) or at first stage (i = 0), thenAup can not be modeled (i.e., the prediction accuracy of the model of Aup is51%).

Since we cannot first build a model for Aup we must first try to build a model for Alow.This brings us to the second reason the (1,1)-iPUF is secure against the reliability basedCMA-ES attack. Recall in Section 4.1 that ∆ is needed to compute the fitness of eachmodel w. ∆ is based on the input to Alow. However we do not know one of the input bits(the interposed bit) to Alow so we cannot compute ∆ (see the calculation of ∆ in Eq. (1)and (2)).

However, we should take a closer look at the way the computation of ∆ isdone to know how the interposed bit position affects the modeling attack onAlow. In Section 6.2, we have ∆ = (1− 2c[j])×∆Flipping + ∆Non−Flipping (see Eq.( 13))and thus, if j gets closer to 0, then ∆ and ∆Non−Flipping become similar. Strictly speaking,we cannot run CMA-ES to build a model for Alow when the interposed bit position i isNOT close to 0, for example the interposed bit position is in the range of [n/2, n].

Conclusion: We cannot model Aup due to the unequal reliability information on theoutput and we cannot model Alow due to the unknown value of the interposed bit whenthe interposed bit position is properly chosen. Therefore, we claim the (1, 1)-iPUF issecure against the reliability based CMA-ES attack when the interposed bitposition is properly chosen in the middle of the input to Alow.

Classical Machine Learning Attacks: The (1, 1)-iPUF is not secure against classicalmachine learning attacks due to its low model complexity. Instead of modeling the APUFcomponents individually, any machine learning algorithm can be used to learn the modelfor Alow and Aup simultaneously. Experiments to support our claim are given in Section 8(see Table 4). Note that, while the iPUF is vulnerable to classical derivative free machinelearning, we will prove that derivative based classical machine attacks are not possible onan iPUF in the next section. As a result, we only need to consider derivative free classicalmachine learning attacks on an iPUF.

Attacks Using Linear Approximation (LA-CML and LA-RML): The security ofthe (1, 1)-iPUF against an ML attack that use the linear approximation (see Section 4.3)depends on the choice of the interposed bit position. In this attack, any CML or RMLattack on an XOR PUF can be adapted to work on an (x, y)-iPUF. This adaptation isdone by approximating the (x, y)-iPUF as a y-XOR APUF by fixing the interposed bitfrom the x-XOR APUF to be 0. In the case of the (1, 1)-iPUF this means that we ignorethe component Aup and approximate (1, 1)-iPUF by APUF Alow.

Let us denote clow as the input to Alow and c′low to be the approximation of clowwhere we fix the interposed bit rup in clow to be 0. We can write Alow(c′low) as the outputof the linear approximation and Alow(clow) as the output of the (1, 1)-iPUF. We cannow analyze how effective the linear approximation is. We measure the effectiveness of

Phuong Ha Nguyen, Durga Prasad Sahoo, Chenglu Jin, Kaleel Mahmood, UlrichRührmair and Marten van Dijk 21

the approximation by computing the probability that the output of the linearized modelAlow(c′low) matches the output of Alow(clow):

papprox = Prc(Alow(c′low) = Alow(clow)) (21)

If papprox is high, then the (1, 1)-iPUF is accurately approximated by APUFAlow(c′low) and can be modeled with an ML attack.

Let us assume the following conditions for the analysis of the attack and for the sakeof explanation, we drop low from c′low or clow. We model a (x, 1)-iPUF with APUFcomponents that are 100% reliable and the output rup of the x-XOR APUF is uniform,i.e., Prc(rup = 0) = Prc(rup = 1) = 1

2 . Then,

papprox = Prc(Alow(c′) = Alow(c))= Prc (Alow(c′) = Alow(c)|rup = 0) Prc(rup = 0)

+Prc(Alow(c′) = Alow(c)|rup = 1)Prc(rup = 1)

= 1× 1/2 + n− in× 1/2 = 1/2 + n− i

2n . (22)

Eq. (22) shows that papprox decreases as i increases. Note that our discussion holdsfor any (x, 1)-iPUF and thus, it is applicable to the (1, 1)-iPUF. We model a 64-bit(1,1)-iPUF using the reliability based CMA-ES attack with the linear approximation(LA-RML). The number of challenge-reliability pairs is 30,000 and the noise rate is 20%.From the experiment, the prediction accuracy of LA-RML on a 64-bit (1,1)-iPUF wheni = 0, 32, 64 is equal to 97%, 70% and 51%, respectively. Likewise, a similar result can beachieved by using CRP based CMA-ES (classical machine learning).

A prediction accuracy of 50% is the worst a machine learning attack can do on aPUF with uniform binary output. Therefore, it would seem that picking the interposedbit position to be as high as possible would result in the most secure (x, y)-iPUF design.However, below we will explain why choosing a high interposed position is not ideal.

Interposed Bit Position: In the (1, 1)-iPUF the only design parameter we must chooseis the interposed bit position. The higher the interposed bit position, the more influenceAup has on the (1, 1)-iPUF. As a result the PUF model is more complex. It is more difficultto attack with CML attacks and the linear approximation is less accurate. However, ahigh bit position yields high noise on the output (less reliable). In addition, with a highinterposed bit position and large enough number of input bits, the (1, 1)-iPUF becomesequivalent to an XOR APUF in terms of susceptibility to RML attacks.

The conclusion from the analysis of the (1, 1)-iPUF is that using the interposed bitposition as the only security parameter is not enough to mitigate all the different MLattacks. We seek a trade-off between all the factors by choosing the interposed bit to bethe middle bit position. Based on this choice we then analyze how to modify x and y tofurther secure our design in Section 6.5.

6.5 Security Analysis of the (x, y)-iPUFThe analysis in Section 6.4.2 shows that the (1, 1)-iPUF with a middle interposed bitposition can defeat the reliability based CMA-ES attack. However the (1, 1)-iPUF is stillvulnerable to CML attacks and both types of attacks that employ the linear approximation(LA-CML and LA-RML). In this section, we show how selecting x and y can mitigatethe remaining ML attacks, and we prove that the CRP based Logistic Regression attacksare not applicable, and we show how the (x, y)-iPUF can mitigate the reliability basedCMA-ES attack (RML), CML attacks (LR, DNN, CRP-based CMA-ES), LA-CML andLA-RML, and PAC learning attacks (Boolean function based attack, DFA attack andPerceptron attack).

22The Interpose PUF: Secure PUF Design against State-of-the-art Machine Learning

Attacks

Reliability based CMA-ES Attack: The security argument for the (1, 1)-iPUF inSection 6.4.2 also applies to the (x, y)-iPUF. Using challenge-reliability pairs and CMA-ES,an adversary cannot build models for the x component APUFs in the x-XOR APUF. Thisis due to the unequal contribution of noise on the output between the x-XOR APUFs andthe y-XOR APUFs (see Section 7.1 for a detailed reliability analysis). Also an adversarycannot build models for the y component APUFs in the y-XOR APUF as rx (at theinterposed bit position) is not known. Therefore, we consider the (x, y)-iPUF to be secureagainst the reliability based CMA-ES attack.

Classical Machine Learning Attacks: There is no known way the APUF componentsof the (x, y)-iPUF can be modeled individually. As a result, the only way to attack an(x, y)-iPUF is by modeling all the (x+ y) component APUFs simultaneously using classicalmachine learning, e.g., neural network or CRP based CMA-ES. Rührmair et al. [RSS+10]showed that the more APUFs that influence (contribute) to the output of an XOR APUF,the more difficult the PUF is to model using CML methods. In other words, increasingx in an x-XOR APUF can mitigate CML attacks. In Theorem 4, we prove that if theinterpose position of rx is i then the (x, y)-iPUF is equivalent to a (y + prx)-XOR APUFwhere:

pr = 1− (1− 2p)y

2 and p = i

n.

If i = n and y is odd, then pr = 1 and (x, y)-iPUF is equivalent to (x+ y)-XOR APUFin terms of security because of (x+ y) APUFs contributing to every challenge-responsepair. If i = 0, then pr = 0 and (x, y)-iPUF is equivalent to a y-XOR PUF. In terms ofdifficulty of modeling with classical machine learning methods (i.e. CRP based CMA-ES),the (x, y)-iPUF with parameter i is approximately equivalent to a (y + prx)-XOR PUF.We experimentally verify this claim in Section 8.2 (see Figures 2 and 5). As a result, wecan use the same strategy employed in the XOR APUF design [RSS+10] and increase xand y in an (x, y)-iPUF to mitigate classical machine learning attacks. It is also worthnoting the analysis to determine the number of APUFs that contribute in an iPUF can beused to derive further iPUF properties such as uniformity, uniqueness and reliability.

LA-RML. Reliability based CMA-ES applied to an (x, y)-iPUF after linearly approxi-mating it as a y-XOR APUF learns one single component APUF of the y-XOR APUF. Inthe linear approximation we substitute rx from the upper x-XOR APUF by 0 and feed0 into the lower y-XOR APUF. If we denote the single component APUF which we tryto learn using CMA-ES by Alow, then, for the challenge interposed with rx, the outputof Alow is the output of an (x, 1)-iPUF. I.e., we attempt to learn a model for Alow froma linear approximation of an (x, 1)-iPUF. From Eq.(22) with interpose bit position inthe middle we infer that the model at best predicts Alow with papprox = 75% accuracy.Reliability based CMA-ES learns models for each of the component APUFs of the lowery-XOR APUF, each with at most 75% accuracy. This shows that the maximum accuracyof the learned approximated y-XOR APUF is at most

pXORlearn =y∑

j=0,j is even

(y

j

)(1− papprox)jpy−japprox

= 1 + (2papprox − 1)y

2 = 12 + 1

2y+1 .

This shows that pXORlearn is close to 1/2 for y large enough and this implies that thelearned model for the linearly approximated y-XOR APUF does not contain predictivevalue5.

5We can compare the model’s output with the iPUF’s output and attempt to find out whether this

Phuong Ha Nguyen, Durga Prasad Sahoo, Chenglu Jin, Kaleel Mahmood, UlrichRührmair and Marten van Dijk 23

If y ≥ 3, then pXORlearn ≤ 56.25%. We launched the LA-RML attack on a 64-bit (3,3)-iPUF. In this experiment the noise rate for each APUF was 20%. The prediction accuracyof the final model was around 50% (confirming the theoretical upper bound) when 200,000challenge-reliability pairs were used in the training phase (see Table 4).

LA-CML. In the case of linearly approximating the (x, y)-iPUF as a y-XOR APUF andapplying classical ML, we know that learning a model for even a noise-free 64 bit y-XORAPUF is currently not practical for y ≥ 10 if LR is used and not practical for even smallery if CRP based CMA-ES is used. However, even if the iPUF itself is 100% reliable, areliable CRP of the iPUF may be a noisy CRP of the linearly approximated y-XOR APUF.By combining Eq.(15) with the derivation leading to Eq.(22), the accuracy pXORapprox of thelinearly approximated y-XOR APUF itself is given by

pXORapprox = 1× 1/2 + p′ × 1/2,

where

p′ =y∑

j=0,j is even

(y

j

)(i

n

)j (n− in

)y−j=

1 + (1− 2in )y

2 .

After substituting p′ we obtain

pXORapprox = 34 + 1

4(1− 2in

)y.

If the interposed bit is at the middle position (i.e. i = n2 ), then pXORapprox is 75%. This

implies that the noise rate of the linearly approximated y-XOR APUF given by the CRPsfrom the iPUF is 25%. For this reason CML should be even more difficult: We performedthe LA-CML attack using CRP based CMA-ES on a reliable 64-bit (3,3)-iPUF. Theprediction accuracy of the model is around 50% when 200,000 CRPs are used in thetraining phase (see Table 4).

Logistic Regression Attack on the iPUF: In the analysis of the security of the iPUFagainst classical ML attacks, we do not specifically consider the type of machine learningattack, i.e., deep neural network, Logistic Regression, or CRP based CMA-ES. We provethat the Logistic Regression attacks can at best learn a linear approximated model of theiPUF and therefore reduce to LA-RML or LA-CML.

Basically, CRP based Logistic Regression works more efficiently than CRP based CMA-ES when the searching space is large. For example, in [TB15], using Logistic Regressionwe can successfully model 4-XOR APUF with 15,000 CRPs only while using CMA-ES wecannot have a good model for 4-XOR APUF with 200,000 CRPs (see Figure 2). Hence,it is important to know if there exists any possible Logistic Regression based attacks onthe iPUF or not. We show that the answer to this question is NO by analyzing LogisticRegression (LR).

In the upper x-XOR APUF of the iPUF, since there are x n-bit APUF instances,we denote wx = (wx

1 , . . . ,wxx) as the model of the x-XOR APUF. Further we denote

wxi = (wx

i [0], . . . ,wxi [n]) as the (n+ 1) dimensional vectors and the models of the APUFs

in the x-XOR APUF, i = 1, . . . , x. Similarly, wy = (wy1 , . . . ,wy