The International Journal of Robotics Research2 The International Journal of Robotics Research...

Transcript of The International Journal of Robotics Research2 The International Journal of Robotics Research...

http://ijr.sagepub.com/Robotics Research

The International Journal of

http://ijr.sagepub.com/content/early/2011/03/31/0278364911401765The online version of this article can be found at:

DOI: 10.1177/0278364911401765

published online 1 April 2011The International Journal of Robotics ResearchAlvaro Collet, Manuel Martinez and Siddhartha S Srinivasa

The MOPED framework: Object recognition and pose estimation for manipulation

Published by:

http://www.sagepublications.com

On behalf of:

Multimedia Archives

can be found at:The International Journal of Robotics ResearchAdditional services and information for

http://ijr.sagepub.com/cgi/alertsEmail Alerts:

http://ijr.sagepub.com/subscriptionsSubscriptions:

http://www.sagepub.com/journalsReprints.navReprints:

http://www.sagepub.com/journalsPermissions.navPermissions:

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

The MOPED framework: Objectrecognition and pose estimation formanipulation

The International Journal ofRobotics Research00(000) 1–23© The Author(s) 2011Reprints and permission:sagepub.co.uk/journalsPermissions.navDOI: 10.1177/0278364911401765ijr.sagepub.com

Alvaro Collet1, Manuel Martinez1, and Siddhartha S Srinivasa2

AbstractWe present MOPED, a framework for Multiple Object Pose Estimation and Detection that seamlessly integrates single-image and multi-image object recognition and pose estimation in one optimized, robust, and scalable framework. Weaddress two main challenges in computer vision for robotics: robust performance in complex scenes, and low latencyfor real-time operation. We achieve robust performance with Iterative Clustering Estimation (ICE), a novel algorithmthat iteratively combines feature clustering with robust pose estimation. Feature clustering quickly partitions the sceneand produces object hypotheses. The hypotheses are used to further refine the feature clusters, and the two steps iterateuntil convergence. ICE is easy to parallelize, and easily integrates single- and multi-camera object recognition and poseestimation. We also introduce a novel object hypothesis scoring function based on M-estimator theory, and a novel poseclustering algorithm that robustly handles recognition outliers. We achieve scalability and low latency with an improvedfeature matching algorithm for large databases, a GPU/CPU hybrid architecture that exploits parallelism at all levels, andan optimized resource scheduler. We provide extensive experimental results demonstrating state-of-the-art performance interms of recognition, scalability, and latency in real-world robotic applications.

KeywordsObject recognition, pose estimation, scene complexity, efficiency analysis, scalability analysis, architecture optimization,robotic manipulation, personal robotics

1. Introduction

The task of estimating the pose of a rigid object modelfrom a single image is a well-studied problem in theliterature. In the case of point-based features, this isknown as the Perspective-n-Point (PnP) problem (Fischlerand Bolles 1981), for which many solutions are avail-able, both closed-form (Lepetit et al. 2008) and iterative(Dementhon and Davis 1995). Assuming that enough per-fect correspondences between 2D image features and 3Dmodel features are known, one only needs to use the PnPsolver of choice to obtain an estimation of an object’spose. When noisy measurements are considered, non-linearleast-squares minimization techniques (e.g. Levenberg–Marquardt (LM) (Marquardt 1963)) often provide betterpose estimates. Given that such techniques require goodinitialization, a closed-form PnP solver is often used toinitialize the non-linear minimizer.

The very related task of recognizing a single object anddetermining its pose from a single image requires solv-ing two sub-problems: finding enough correct correspon-dences between image features and model features, andestimating the model pose that best agrees with that set of

correspondences. Even with highly discriminative locallyinvariant features, such as SIFT (Lowe 2004) or SURF (Bayet al. 2008), mismatched correspondences are inevitable,forcing us to utilize robust estimation techniques such asM-estimators or RANSAC (Fischler and Bolles 1981) inmost modern object recognition systems. A comprehensiveoverview of model-based 3D object recognition/trackingtechniques is available at Lepetit and Fua (2005).

The problem of model-based 3D object recognition ismature, with a vast literature behind it, but it is far fromsolved in its most general form. Two problems greatlyinfluence the performance of any recognition algorithm.

The first problem is scene complexity. This can arisedue to feature count, with both extremes (too many fea-tures, or too few) significantly decreasing the recognition

1The Robotics Institute, Carnegie Mellon University, Pittsburgh, PA, USA2 Intel Labs Pittsburgh, Pittsburgh, PA, USA

Corresponding author:Alvaro Collet, The Robotics Institute, Carnegie Mellon University, 5000Forbes Avenue, Pittsburgh, PA 15213, USA.Email: [email protected]

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

2 The International Journal of Robotics Research 00(000)

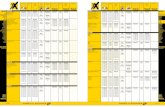

Fig. 1. Recognition of real-world scenes. (Top) High-complexityscene. MOPED finds 27 objects, including partially occluded,repeated and non-planar objects. Using a database of 91 modelsand an image resolution of 1,600 × 1,200, MOPED processesthis image in 2.1 seconds. (Bottom) Medium complexity scene.MOPED processes this 640 × 360 image in 87 ms and findsall known objects (the undetected green soup can is not in thedatabase).

rate. Related is the issue of repeated objects: the match-ing ambiguity introduced by repeated instances of an objectpresents an enormous challenge for robust estimators, as thematched features might belong to different object instancesdespite being correct. Solutions such as grouping (Lowe1987), interpretation trees (Grimson 1991) or image spaceclustering (Collet et al. 2009) are often used, but false pos-itives often arise from algorithms not being able to handleunexpected scene complexity. Figure 1 shows an example ofan image of high complexity and multiple repeated objectscorrectly processed by MOPED.

The second problem is that of scalability and systemlatency. In systems that operate online, a trade-off betweenrecognition performance and latency must be reached,depending on the requirements for each specific task. Inrobotics, the reaction time of robots operating in dynamicenvironments is often limited by the latency of theirperception (see, e.g., Srinivasa et al. 2010; WillowGarage2008). Increasing the volume of input data to process (e.g.increasing the image resolution, using multiple cameras)usually results in a severe penalty in terms of processingtime. Yet, with cameras getting better, cheaper, and smaller,

Fig. 2. Object grasping in a cluttered scene using MOPED. (Top)Scene observed by a set of three cameras. (Bottom) Our roboticplatform HERB (Srinivasa et al. 2010) in the process of graspingan object, using only the pose information from MOPED.

multiple high-resolution views of a scene are often easilyavailable. For example, our robot HERB has, at varioustimes, been outfitted with cameras on its shoulder, in thepalm, on its ankle-high laser, as well as with a stereo pair.Multiple views of a scene are often desirable, because theyprovide depth estimation, robustness against line-of-sightocclusions, and an increased effective field of view. Figure2 shows MOPED applied on a set of three images for grasp-ing. Also, the higher resolution can potentially improve therecognition of complicated objects and the accuracy of poseestimation algorithms, but often at a steep penalty cost, asthe extra resolution often causes an increase in the numberof false positives as well as severe degradation in terms oflatency and throughput.

In this paper, we address these two problems in model-based 3D object recognition through multiple novel con-tributions, both algorithmic and architectural. We providea scalable framework for object recognition specificallydesigned to address increased scene complexity, limit falsepositives, and utilize all computing resources to providelow-latency processing for one or multiple simultaneoushigh-resolution images. The Iterative Clustering Estimation(ICE) algorithm is our most important contribution to han-dling scenes with high complexity while keeping latencylow. In essence, ICE jointly solves the correspondence andpose estimation problems through an iterative procedure.ICE estimates groups of features that are likely to belongto the same object through clustering, and then searches forobject hypotheses within each of the groups. Each hypoth-esis found is used to refine the feature groups that are likelyto belong to the same object, which in turn helps in findingmore accurate hypotheses. The iteration of this procedure

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

Collet et al. 3

focuses the object search only in the regions with potentialobjects, avoiding the waste of processing power in unlikelyregions. In addition, ICE allows for an easy parallelizationand the integration of multiple cameras in the same jointoptimization.

Another important contribution of this paper is a robustmetric to rank object hypotheses based on M-estimatortheory. A common metric used in model-based 3D objectrecognition is the sum of reprojection errors. However, thismetric prioritizes objects that have been detected with theleast amount of information, since each additional recog-nized object feature is bound to increase the overall error.Instead, we propose a quality metric that encourages objectsto have as most correspondences as possible, thus achievingmore stable estimated poses. This metric is relied upon inthe clustering iterations within ICE, and is specially use-ful when coupled with our novel pose clustering algorithm.The key insight behind our pose clustering algorithm, calledProjection Clustering, is that our object hypotheses havebeen detected from camera data, which might be noisy,ambiguous and/or contain matching outliers. Therefore,instead of using a regular clustering technique in pose space(using, e.g., Mean Shift (Cheng 1995) or Hough Trans-forms (Olson 1997)), we evaluate each type of outlier andpropose a solution that handles incorrect object hypothesesand effectively merges their information with those that aremost likely to be correct.

In this work we also tackle the issues of scalabil-ity, throughput, and latency, which are vital for real-timerobotics applications. ICE enables easy parallelism in theobject recognition process. We also introduce an improvedfeature matching algorithm for large databases that bal-ances matching accuracy and logarithmic complexity. OurGPU/CPU hybrid architecture exploits parallelism at alllevels. MOPED is optimized for bandwidth and cache man-agement and single input multiple data (SIMD) instruc-tions. Components such as feature extraction and matchinghave been implemented on a GPU. Furthermore, a novelscheduling scheme enables the efficient use of symmetricmultiprocessing (SMP) architectures, utilizing all availablecores on modern multi-core CPUs.

Our contributions are validated through extensive exper-imental results demonstrating state-of-the-art performancein terms of recognition, pose estimation accuracy, scalabil-ity, throughput and latency. Five benchmarks and a totalof over 6,000 images are used to stress-test every compo-nent of MOPED. The different benchmarks are executed ina database of 91 objects, and contain images with up to 400simultaneous objects, high-definition video footage, and amulti-camera setup.

Preliminary versions of this work have been publishedat Collet et al. (2009); Collet and Srinivasa (2010) andMartinez et al. (2010). Additional information, videos,and the full source code of MOPED are availableonline at http://personalrobotics.intel-research.net/projects/moped.

2. Problem formulation

The goal of MOPED is the recognition of objects fromimages given a database of object models, and the estima-tion of the pose of each recognized object. In this section,we formalize these inputs and outputs and introduce theterminology we use throughout the paper.

2.1. Input: images

The input to MOPED is a set I of M images

I = {I1, . . . , Im, . . . , IM }, Im = {Km, Tm, gm}. (1)

In the general case, each image is captured with a differ-ent calibrated camera. Therefore, each image Im is definedby a 3 × 3 matrix of intrinsic camera parameters Km, a4×4 matrix of extrinsic camera parameters Tm with respectto a known world reference frame, and a matrix of pixelvalues gm.

MOPED is agnostic to the number of images M . In otherwords, it is equally valid in both an extrinsically calibratedmulti-camera setup, and in the simplified case of a singleimage (M = 1) and a camera-centric world (T1 = I4, whereI4 is a 4× 4 identity matrix).

2.2. Input: object models

Each object to be recognized by MOPED first goes throughan offline learning stage, in which a sparse 3D modelof the object is created. First, a set of images is takenwith the object in various poses. Reliable local descrip-tors are extracted from natural features using SIFT (Lowe2004), which have proven to be one of the most distinctiveand robust local descriptors across a wide range of trans-formations (Mikolajczyk and Schmid 2005). Alternativedescriptors (e.g. SURF (Bay et al. 2008), ferns (Ozuysalet al. 2010)) can also be used. Using structure from motion(Szeliski and Kang 1994) on the matched SIFT keypoints,we merge the information from each training image intoa sparse 3D model. Each 3D point is linked to a descrip-tor that is produced from clustering individual matcheddescriptors in different views. Finally, proper alignment andscale for each model are computed to match the real objectdimensions and define an appropriate coordinate frame,which for simplicity is defined at the object’s center.

Let O be a set of object models. Each object model isdefined by its object identity o and a set of features Fo

O = {o, Fo}, Fo = {F1;o, . . . , Fi;o, . . . , FN ;o}. (2)

Each feature is represented by a 3D point location P =[X , Y , Z]T in the object’s coordinate frame and a featuredescriptor D, whose dimensionality depends on the type ofdescriptor used, e.g. k = 128 if using SIFT or k = 64 ifusing SURF. That is,

Fi;o = {Pi;o, Di;o}, Pi;o ∈ R3, Di;o ∈ R

k . (3)

The union of all features from all objects in O is definedas F =⋃

o∈O Fo.

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

4 The International Journal of Robotics Research 00(000)

Fig. 3. Illustration of two ICE iterations. Colored outlines represent estimated poses. (a) Feature extraction and matching. (b) Featureclustering. (c) Hypothesis generation. (d), (e) Cluster clustering. (f) Pose refinement. (g) Final result.

2.3. Output: recognized objects

The output of MOPED is a set of object hypotheses H.Each object hypothesis Hh = {o, Th} is represented by anobject identity o and a 4 × 4 matrix Th that corresponds tothe pose of the object with respect to the world referenceframe.

3. Iterative Clustering Estimation

The task of recognizing objects from local features inimages requires solving two sub-problems: the correspon-dence problem and the pose estimation problem. The cor-respondence problem refers to the accurate matching ofimage features to features that belong to a particular object.The pose estimation problem refers to the generation ofobject poses that are geometrically consistent with thefound correspondences.

The inevitable presence of mismatched correspondencesforces us to utilize robust estimation techniques, such asM-estimators or RANSAC (Fischler and Bolles 1981).In the presence of repeated objects in a scene, the cor-respondence problem cannot be solved in isolation, aseven perfect image-to-model correspondences need to belinked to a particular object instance. Robust estima-tion techniques often fail as well in the presence of thisincreased complexity. Solutions such as grouping (Lowe1987), interpretation trees (Grimson 1991) or image spaceclustering (Collet et al. 2009) alleviate the problem ofrepeated objects by reducing the search space for objecthypotheses.

The ICE algorithm at the heart of MOPED aims to jointlysolve the correspondence and pose estimation problemsin a principled way. Given initial image-to-model corre-spondences, ICE iteratively executes clustering and poseestimation to progressively refine which features belong toeach object instance, and to compute the object poses thatbest fit each object instance. The algorithm is illustrated inFigure 3.

Given a scene with a set of matched features(Figure 3(a)), the Clustering step generates groups of imagefeatures that are likely to belong to a single object instance(Figure 3(b)). If prior object pose hypotheses are available,features consistent with each object hypothesis are usedto initialize distinct clusters. Numerous object hypothesesare generated for each cluster (Figure 3(c)). Then, objecthypotheses are merged together if their poses are similar(Figure 3(d)), thus uniting their feature clusters into largerclusters that potentially contain all information about a sin-gle object instance (Figure 3(e)). With multiple images, theuse of a common reference frame allows us to link objecthypotheses recognized in different images, and thus createmulti-image feature clusters. If prior object pose hypothe-ses are not available (i.e. at the first iteration of ICE), weuse the density of local features matched to an object modelas a prior, with the intuition that groups of features spatiallyclose together are more likely to belong to the same objectinstance than features spread across all images. Thus, weinitialize ICE with clusters of features in image space (x, y),as seen in Figure 3(b).

The Estimation step computes object hypotheses givenclusters of features (as shown in Figure 3(c) and (f)).Each cluster can potentially generate one or multiple objecthypotheses, and also contain outliers that cannot be used forany hypothesis. A common approach for hypothesis gener-ation is the use of RANSAC along with a pose estimationalgorithm, although other approaches are equally valid. InRANSAC, we choose subsets of features at random withinthe cluster, then hypothesize an object pose that best fitsthe subset of features, and finally check how many fea-tures in the cluster are consistent with the pose hypothesis.This process is repeated multiple times and a set of objecthypotheses is generated for each cluster. The advantage ofrestricting the search space to that of feature clusters is thehigher likelihood that features from only one object instanceare present, or at most a very limited number of them. Thisprocess can be performed regardless of whether the featuresbelong to one or multiple images.

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

Collet et al. 5

The set of hypotheses from the Estimation step are thenutilized to further refine the membership of each feature toeach cluster (Figure 3(d) and (e)). The whole process is iter-ated until convergence, which is reached when no featureschange their membership in a Clustering step (Figure 3(f)).

In practice, ICE requires very few iterations until con-vergence, usually as little as two for setups with one ora few simultaneous images. Parallelization is easy, sincethe initial steps are independent for each cluster in eachimage and object type. Therefore, large sets of images canbe potentially integrated into ICE with very little impact onoverall system latency. Two ICE iterations are required forincreased robustness and speed in setups ranging from oneto a few simultaneous images, while further iterations ofICE might potentially be necessary if tens or hundreds ofsimultaneous images are to be processed.

3.1. ICE as Expectation–Maximization

It is interesting to note the conceptual similarity betweenICE and the well-known Expectation–Maximization (EM)(Dempster et al. 1977) algorithm, particularly in the learn-ing of Gaussian Mixture Models (GMMs) (Redner andWalker 1984). EM is an iterative method for finding param-eter estimates in statistical models that contain unobservedlatent variables, alternating between expectation (E) andmaximization (M) steps. The E step computes the expectedvalue of the log-likelihood using the current estimate forthe latent variables. The M step computes the parametersthat maximize the expected log-likelihood found on the Estep. These parameter values determine the latent variabledistribution in the next E step. In GMMs, the EM algorithmis applied to find a set of Gaussian distributions that bestfits a set of data points. The E step computes the expectedmembership of each data point to one of the Gaussian distri-butions, while the M step computes the parameters for eachdistribution given the memberships computed in the E step.Then, the E step is repeated with the updated parameters torecompute new membership values. The entire procedure isrepeated until model parameters converge.

Despite the mathematical differences, the concept behindICE is essentially the same. The problem of object recog-nition in the presence of severe clutter and/or repeatedobjects can be interpreted as one of estimation of modelparameters, the pose of a set of objects, where the modeldepends on unobserved latent variables: the correspon-dences of image features to particular object instances.Under this perspective, the Clustering step of ICE com-putes the expected membership of each local feature to oneof the object instances, while the Estimation step computesthe best object poses given the feature memberships com-puted in the Clustering step. Then, the entire procedureis repeated until convergence. If our object models wereGaussian distributions, ICE and GMMs would be virtuallyequivalent.

4. The MOPED framework

This section contains a brief summary of the MOPEDframework and its components. Each individual componentis explored in depth in subsequent sections.

The steps itemized in the following sections compose thebasic MOPED framework for the typical requirements of arobotics application. In essence, MOPED is composed of asingle feature extraction and matching step per image, andmultiple iterations of ICE that efficiently perform objectrecognition and pose estimation per object in a bottom-up approach. Assuming the most common setup of objectrecognition, utilizing a single or a small set of images (i.e.less than 10), we fix ICE to compute two full Clustering–Estimation iterations plus a final cluster merging to removepotential multiple detections that might have not yet con-verged. In this way, we ensure a good trade-off between highrecognition rate and reduced system latency, but a greaternumber of iterations should be considered if working witha larger set of simultaneous images.

1. Feature extraction

Salient features are extracted from each image. We repre-sent images I as sets of local features fm. Each image Im ∈ Iis processed independently, so that

Im = {Km, Tm, fm}, fm = FeatExtract( gm) . (4)

Each individual local feature fj;m from image m is definedby a 2D point location pj;m = [x, y]T and its correspondingfeature descriptor dj;m; that is,

fm = {f1;m, . . . , fj;m, . . . , fJ ;m}, fj;m = {pj;m, dj;m}. (5)

We define the union of all extracted local features fromall images m as f =⋃M

m=1 fm.

2. Feature matching

One-to-one correspondences are created between extractedfeatures in the image set and object features stored in thedatabase. For efficiency, approximate matching techniquescan be used, at the cost of a decreased recognition rate. LetC be a correspondence between an image feature fj;m and amodel feature Fi;o, such that

Coj,m =

{ (fj;m, Fi;o

), if fj;m ↔ Fi;o

∅, otherwise

}

. (6)

The set of correspondences for a given object o andimage m is represented as Co

m =⋃∀j Co

j;m. The sets ofcorrespondences Cm, Co are defined equivalently as Cm =⋃∀j,o Co

j;m and Co =⋃∀j,m Co

j;m.

3. Feature clustering

Features matched to a particular object are clustered inimage space (x, y), independently for each image. Given

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

6 The International Journal of Robotics Research 00(000)

that spatially close features are more likely to belong tothe same object instance, we cluster the set of feature loca-tions p ∈ Co

m, producing a set of clusters that group featuresspatially close together.

Each cluster Kk is defined by an object identity o, animage index m, and a subset of the correspondences toobject O in image Im, that is,

Kk = {o, m, Ck ⊂ Com}. (7)

The set of all clusters is expressed as K.

4. Estimation 1: hypothesis generation

Each cluster is processed in each image independently insearch of objects. RANSAC and LM are used to find objectinstances that are loosely consistent with each object’sgeometry in spite of outliers. The number of RANSAC iter-ations is high and the number of LM iterations is kept low,so that we discover multiple object hypotheses with coarsepose estimation. At this step, each hypothesis h consists of

h = {o, k, Th, Ch ⊂ Ck}, (8)

where o is the object identity of hypothesis h, k is a clus-ter index, Th is a 4 × 4 transformation matrix that definesthe object hypothesis pose with respect to the world refer-ence frame, and Ch is the subset of correspondences thatare consistent with hypothesis h.

5. Cluster clustering

As the same object might be present in multiple clusters andimages, poses are projected from the image set onto a com-mon coordinate frame, and features consistent with a poseare re-clustered. New, larger clusters are created, that oftencontain all consistent features for a whole object across theentire image set. These new clusters contain

KK = {o, CK ⊂ Co}. (9)

6. Estimation 2: pose refinement

After Steps 4 and 5, most outliers have been removed,and each of the new clusters is very likely to containfeatures corresponding to only one instance of an object,spanned across multiple images. The RANSAC procedureis repeated for a low number of iterations, and poses areestimated using LM with a larger number of iterations toobtain the final poses from each cluster that are consistentwith the multi-view geometry.

Each multi-view hypothesis H is defined by

H = {o, TH , CH ⊂ CK}, (10)

where o is the object identity of hypothesis H , TH is a4× 4 transformation matrix that defines the object hypoth-esis pose with respect to the world reference frame, and CH

is the subset of correspondences that are consistent withhypothesis H .

7. Pose recombination

A final merging step removes any multiple detections thatmight have survived, by merging together object instancesthat have similar poses. A set of hypotheses H, with Hh ={o, TH }, is the final output of MOPED.

5. Addressing complexity

In this section, we provide an in-depth explanation of ourcontributions to address complexity that have been inte-grated in the MOPED object recognition framework, andhow each of our contributions relate to the ICE procedure.

5.1. Image space clustering

The goal of Image Space Clustering in the context of objectrecognition is the creation of object priors based solely onimage features. In a generic unstructured scene, it is infea-sible to attempt the recognition of objects with no higher-level reasoning than the image-model correspondencesCo

j,m = ( fj;m, Fi;o). Correspondences for a single object typeo may belong to different object instances, or may be match-ing outliers. Multi-camera setups are even more uncertain,since the amount of image features increases dramatically,and so does the probability of finding multiple repeatedobjects in the combined set of images. Under these circum-stances, the ability to compute a prior over the image fea-tures is of utmost importance, in order to evaluate which ofthe features are likely to belong to the same object instance,and which of them are likely to be outliers.

RANSAC (Fischler and Bolles 1981) and M-estimatorsare often the methods of choice to find models in the pres-ence of outliers. However, both of them fail in the pres-ence of heavy clutter and multiple objects, in which onlya small percentage of the matched correspondences belongto the same object instance. To overcome this limitation,we propose the creation of object priors based on the den-sity of correspondences across the image, by exploitingthe assumption that areas with a higher concentration ofcorrespondences for a given model are more likely to con-tain an object than areas with very few features. Therefore,we aim to create subsets of correspondences within eachimage that are reasonably close together and assume theyare likely to belong to the same object instance, avoidingthe waste of computation time in trying to relate featuresspread all across the image. We can accomplish this goal byseeking the modes of the density distribution of features inimage space. A well-known technique for this task is MeanShift clustering (Cheng 1995), which is a particularly goodchoice for MOPED because no fixed number of clustersneeds to be specified. Instead, a radius parameter needs tobe chosen, that intuitively selects how close two featuresmust in order to be part of the same cluster. Thus, for eachobject in each image independently, we cluster the set offeature locations p ∈ Co

m (i.e. pixel positions p = (x, y)),

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

Collet et al. 7

Fig. 4. Example of highly cluttered scene and the importanceof clustering. (Top-left) Scene with nine overlapping notebooks.(Bottom-left) Recovered poses for notebooks with MOPED.(Right) Clusters of features in image space.

producing a set of clusters K that contain groups of fea-tures spatially close together. Clusters that contain veryfew features, those in which no object can be recognized,are discarded, thus considering the features as outliers anddiscarding them as well.

The advantage of using Mean Shift clusters as object pri-ors is illustrated in Figure 4. In Figure 4(top-left) we seean image with nine notebooks. As a simple example, let usimagine that all notebooks have the same number of cor-respondences, and that 70% of those correspondences arecorrect, i.e. that the global inlier ratio w = # inliers

# points = 0.7.The inlier ratio for a particular notebook is then wobj =

w# obj = 0.0778. The number of iterations k theoreticallyrequired (Fischler and Bolles 1981) to find one particularinstance of a notebook with probability p is

k = log( 1− p)

log( 1−( wobj)n ), (11)

where n is the number of inliers for a successful detec-tion. If we require n = 5 inliers and a probability p =0.95 of finding a particular notebook, then we should per-form k = 1.05M iterations of RANSAC. On the otherhand, clustering the correspondences in smaller sets as inFigure 4 (right) means that fewer notebooks (at most three)are present in a given cluster. In such a scenario, find-ing one particular instance of a notebook with 95% prob-ability requires 16, 586, and 4,386 iterations when 1, 2,and 3 notebooks, respectively, are present in a cluster, atleast three orders of magnitude lower than the previouscase.

5.2. Estimation 1: Hypothesis generation

In the first Estimation step of ICE, our goal is to generatecoarse object hypotheses from clusters of features, so thatthe object poses can be used to refine the cluster associa-tions. In general, each cluster Kk = {o, m, Ck ⊂ Co

m} maycontain features from multiple object hypotheses as well asmatching outliers. In order to handle the inevitable presence

of matching outliers, we use the robust estimation proce-dure RANSAC. For a given cluster Kk , we choose a subsetof correspondences C ⊂ Ck and estimate an object hypoth-esis with the best pose that minimizes the sum of repro-jection errors (see Equation (18)). We minimize the sumof reprojection errors via a standard LM non-linear leastsquares minimization. If the amount of correspondences inCk consistent with the hypothesis is higher than a thresholdε, we create a new object instance and refine the estimatedpose using all consistent correspondences in the optimiza-tion. We then repeat this procedure until the amount ofunallocated points is lower than a threshold, or the maxi-mum number of iterations has been exceeded. By repeatingthis procedure for all clusters in all images and objects,we produce a set of hypotheses h, where each hypothesish = {o, k, Th, Ch ⊂ Ck}.

At this stage, we wish to obtain a set of coarse posehypotheses to work with, as fast as possible. We requirea large number of RANSAC iterations to detect as manyobject hypotheses as possible, but we can use a low maxi-mum number of LM iterations and loose threshold ε whenminimizing the sum of reprojection errors. Accurate posewill be achieved in later stages of MOPED. The initializa-tion of LM for pose estimation can be implemented witheither fast PnP solvers such as those proposed in Colletet al. (2009) and Lepetit et al. (2008) or even completely atrandom within the space of possible poses (e.g. some dis-tance in front of the camera). Random initialization is thedefault choice for MOPED, as it is more robust to the poseambiguities that sometimes confuse PnP solvers.

5.3. Hypothesis quality score

It is useful at this point to introduce a robust metric toquantitatively compare the goodness of multiple objecthypotheses. The desired object hypothesis metric shouldfavor hypotheses with:

• the greatest number of consistent correspondences;• the minimum distance error in each of the correspon-

dences.

The sum of reprojection errors in Equation (19) is not agood evaluation metric according to these requirements, asthis error is bound to increase whenever an extra correspon-dence is added. Therefore, the sum of reprojection errorsfavors hypotheses with the lowest number of correspon-dences, which can lead to choosing spurious hypothesesover more desirable ones.

In contrast, we define a robust estimator based on theCauchy distribution that balances the two criteria statedabove. Consider the set of consistent correspondences Ch

for a given object hypothesis, where each correspondenceCj = ( fj;m, Fi;o). Assume the corresponding features in Cj

have locations in an image pj and in an object model Pj. Letdj = d( pj, ThPj) be an error metric that measures the dis-tance between a 2D point in an image and a 3D point from

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

8 The International Journal of Robotics Research 00(000)

hypothesis h with pose Th (e.g. reprojection, backprojectionerrors). Then, the Cauchy distribution ψ( dj) is defined by

ψ( dj)= 1

1+(

djσ

)2 , (12)

where σ 2 parameterizes the cut-off distance at whichψ( dj)= 0.5. In our case, the distance metric dj is thereprojection error measured in pixels. This distribution ismaximal when dj = 0, i.e. ψ( 0)= 1, and monotoni-cally decreases to zero when a pair of correspondencesare infinitely away from each other, i.e. ψ(∞)= 0. TheQuality Score Q for a given object hypothesis h is thendefined as a summation over the Cauchy scores ψ( dj) forall correspondences:

Q( h)=∑

∀j:Cj∈Ch

ψ( dj)=∑

∀Cj∈Ch

1

1+ d2(pj ,ThPj)

σ 2

. (13)

The Q-score has a lower bound at 0, if a given hypoth-esis has no correspondences or if all its correspondenceshave infinite error, and has an upper bound at |O|, whichis the total number of correspondences for model O. Thisscore allows us to reliably rank our object hypotheses andevaluate their strength.

The cut-off distance σ may be either a fixed value, oradjusted at each iteration via robust estimation techniques(Zhang 1997), depending on the application. Robust esti-mation techniques require a certain minimum outlier/inlierratio to work properly ( # outliers

# inliers < 1 in all cases), knownas the breaking point of a robust estimator (Huber 1981).In the case of MOPED, the outlier/inlier ratio is often wellover the breaking point of any robust estimator, especiallywhen multiple instances of an object are present; as a con-sequence, robust estimators might result in unrealisticallylarge values of σ in complex scenes. Therefore, we chooseto set a fixed value for the cut-off parameter, σ = 2 pixels,for a good balance between encouraging a large number ofcorrespondences while keeping their reprojection error low.

5.4. Cluster Clustering

The disadvantage of separating the image search space intoa set of clusters is that the produced pose hypotheses maybe generated with only partial information from the scene,given that information from other clusters and other viewsis not considered in the initial Estimation step of ICE. How-ever, once a rough estimate of the object poses is known, wecan merge the information from multiple clusters and mul-tiple views to obtain sets of correspondences that containall features from a single object instance (see Figure 3).

Multiple alternatives are available to group similarhypotheses into clusters. In this section, we propose a novelhypothesis clustering algorithm called Projection Cluster-ing, in which we perform correspondence-level groupingfrom a set of object hypotheses h and provide a mechanismto robustly filter any pose outliers.

For comparison, we introduce a simpler Cluster Cluster-ing scheme based on Mean Shift, and analyze the compu-tational complexity of both schemes to conclude in whichcases we might prefer one over the other.

5.4.1. Mean Shift clustering on pose space A straightfor-ward hypothesis clustering scheme is to perform Mean Shiftclustering on all hypotheses h = {o, k, Th, Ch ⊂ Ck} fora given object type o. In particular, we cluster the posehypotheses Th in pose space. In order to properly measuredistances between poses, it is convenient to parameterizerotations in terms of quaternions and project them in thesame half of the quaternion hypersphere prior to clustering,using then Mean Shift on the resulting seven-dimensionalposes. After this procedure, we merge the correspondenceclusters Ch of those poses that belong to the same posecluster TK :

CK =⋃

Th∈TK

Ch. (14)

This produces clusters KK = {o, CK ⊂ Co} whose corre-spondence clusters span over multiple images. In addition,the centroid of each pose cluster TK can be used as initial-ization for the following Estimation iteration of ICE. At thispoint, we can discard all correspondences not consistentwith any pose hypothesis, thus filtering many outliers andreducing the search space for future iterations of ICE. Thecomputational complexity of Mean Shift is O( dN2t), whered = 7 is the dimensionality of the clustered data, N = |h| isthe total number of hypotheses to cluster, and t is the num-ber of iterations that Mean Shift requires. In practice, thenumber of hypotheses is often fairly small, and t ≤ 100 inour implementation.

5.4.2. Projection clustering Mean Shift provides basicclustering in pose space, and works well when multiplecorrect detections of an object are present. However, it ispossible that spurious false positives are detected in thehypothesis generation step. It is important to realize thatthese false positives are very rarely exclusively due to ran-dom outliers in the feature matching process. To the con-trary, most outlier detections are artifacts of the projectionof a 3D scene into a 2D image when captured by a perspec-tive camera. In particular, we can distinguish between twodifferent cases:

• A group of correct matches whose 3D configuration isdegenerate or near-degenerate (e.g. a group of 3D pointsthat are almost collinear), incorrectly grouped with onesingle matching outlier. In this case, the output pose islargely determined by the location of the matching out-lier, which causes arbitrarily erroneous hypotheses to beaccepted as correct.

• Pose ambiguities in objects with planar surfaces. Thesum of reprojection errors in planar surfaces may con-tain two complementary local minima in some config-urations of pose space (Schweighofer and Pinz 2006),

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

Collet et al. 9

which often causes the appearance of two distinct andpartially overlapping object hypotheses. These hypothe-ses are usually too distant in pose space to be groupedtogether.

The false positives output in the pose hypothesis genera-tion are often too distant from any correct hypothesis in thescene, and cannot be merged using regular clustering tech-niques (e.g. Mean Shift). However, the point features thatgenerated those false positives are usually correct, and theycan provide valuable information to some of the correctobject hypotheses. In Projection clustering, we process eachpoint feature individually, and assign them to the strongestpose hypothesis to which they might belong. Usually, spu-rious poses only contain a limited number of consistentpoint features, thus resulting in lower Q-scores (Section5.3) than correct object hypotheses. By transferring mostof the point features to the strongest object hypotheses, wenot only utilize the extra information available in the scenefor increased accuracy, but also filter most false positivesby lowering their number of consistent points below therequired minimum.

The first step in Projection clustering is the computa-tion of Q-scores for all object hypotheses h. We generatea pool of potential correspondences Co that contains allcorrespondences for a given object type that are consistentwith at least one pose hypothesis. Correspondences fromall images are included in Co. We compute the Q-score foreach hypothesis h from all correspondences Cj = ( fj, Fj)such that Cj ∈ Co:

Q( h)=∑

∀j:Cj∈Co

ψ( dj)=∑

∀Cj∈Co

1

1+ d2(pj ,ThPj)

σ 2

. (15)

For each potential correspondence Cj in Co, we define aset of likely hypotheses hj as the set of those hypotheses hwhose reprojection error is lower than a threshold γ . Thisthreshold can be interpreted as an attraction coefficient;large values of γ lead to heavy transference of correspon-dence to strong hypotheses, while small values cause fewcorrespondences to transfer from one hypothesis to another.In our experiments, a large threshold γ of 64 pixels is used:

h ∈ hj ⇐⇒ d2( pj, ThPj)< γ . (16)

At this point, the relation between correspondences Cj

and hypotheses h is broken. In other words, we emptythe set of correspondences Ch for each hypothesis h, sothat Ch = ∅. Then, we re-assign each correspondenceCj ∈ Co to the pose hypothesis h within hj with strongeroverall Q-score:

Ch ← Cj : h = arg maxh∈hj

Q( h) . (17)

Finally, it is important to check the remaining num-ber of correspondences that each object hypothesis hasafter Projection clustering. Pose hypotheses that retain less

than a minimum number of consistent correspondences areconsidered outliers and therefore discarded.

The Projection Clustering algorithm we propose has acomputational complexity O( MNI), where M = |C| is thetotal number of correspondences to process, N = |h| is thenumber of pose hypotheses and I = |I| is the total numberof images. Comparing this with the complexity O( dN2t) ofMean Shift, we see that the only advantage of Mean Shift interms of computational cost would be in the case of objectmodels with enormous numbers of features and many high-resolution images, so that O( MNI)� O( dN2t). Whileoffering a similar computational complexity, the advantageof Projection Clustering with respect to Mean Shift is interms of improved robustness and outlier detection, bothof which are essential for handling increased complexity inMOPED.

5.4.3. Performance comparison In this experiment, wecompare the recognition performance of MOPED whenusing the two Cluster Clustering approaches explained inthis section. In addition, and given that Projection Cluster-ing depends on the behavior of a hypothesis ranking mech-anism, we implement four different ranking mechanismsand compare their performance. The first ranking mecha-nism is our own Q-score introduced in Section 5.3, whilethe other approaches considered are the sum of reprojectionerrors, the average reprojection error, and the number ofconsistent correspondences. The performance of MOPEDwhen using the different Cluster Clustering and hypothesisranking algorithms is shown in Table 1, on a subset of 100images from the Simple Movie Benchmark (Section 6.2.3)that contain a total of 1,289 object instances.

An object hypothesis is considered a true positive if itspose estimate is within 5 cm and 10◦ of the ground truthpose. Object hypotheses whose pose is outside this errorrange are considered false positives. Ground truth objectswithout an associated true positive are considered false neg-atives. According to these performance metrics, the appear-ance of pose ambiguities and misdetections is particularlycritical, since they often produce both a false positive (therotational error is greater than the threshold) and a falsenegative (no pose agrees with the ground truth).

The overall best performer is Projection Clustering whenusing Q-score as its hypothesis ranking metric, which cor-rectly recognizes and estimates the pose of 87.6% of theobjects in the dataset. Mean Shift is the second best per-former, but the increased false-positive rate is mainly due topose ambiguities that cannot be resolved and produce spu-rious detections. The different hypothesis ranking schemescritically impact the overall performance of ProjectionClustering, as multiple poses often need to be merged afterthe first Clustering–Estimation iteration. Ranking spuriousposes over correct poses results in an increased numberof false positives, as Projection Clustering is unable tomerge object hypothesis properly. While Q-score is ableto estimate the best pose out a set of multiple detections,

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

10 The International Journal of Robotics Research 00(000)

Table 1. Mean recognition per image: mean shift versus projec-tion clustering, in the Simple Movie Benchmark (see Section 6.2.3for details).

True False Falsepositive positive negative

Mean Shift 10.92 3.32 1.97Projection Clustering

(Q-score) 11.3 2.29 1.59Projection Clustering

(Reprojection) 11.05 7.4 1.84Projection Clustering

(Average Reprojection) 10.88 7.81 2.01Projection Clustering

(Number ofCorrespondences) 3.6 9.82 9.29

Reprojection and Average Reprojection select sub-optimalposes that contain just a few points, often including outliers.This behavior severely impacts the performance of Projec-tion Clustering, which barely merges any pose hypothe-ses and produces an increased number of false positives.The Number of Correspondences metric prefers hypotheseswith many consistent matches in the scene, and ProjectionClustering merges hypotheses correctly. Unfortunately, thechosen best hypotheses are in most cases incorrect due toambiguities, estimating only 28% of the poses correctly.It must be noted that in simple scenes with a few unoc-cluded objects, all of the evaluated metrics perform sim-ilarly well. Projection Clustering and Q-score, however,showcase increased robustness when working with the mostcomplex scenes.

5.5. Estimation 2: pose refinement

At the second iteration of ICE, we use the information frominitial object hypotheses to obtain clusters that are mostlikely to belong to a single object instance, with very fewoutliers. For this reason, the Estimation step at the seconditeration of ICE requires only a low number of RANSACiterations to find object hypotheses. In addition, we usea high number of LM iterations to estimate object posesaccurately.

This procedure is equivalent to that of the first Esti-mation step, being the objective function to minimize theonly difference between the two. For a given multi-viewfeature cluster KK , we perform RANSAC on subsets ofcorrespondences C ⊂ CK and obtain pose hypothesesH = {o, TH , CH ⊂ CK} with consistent correspondencesCH ⊂ CK . The objective function to minimize can be eitherthe sum of reprojection errors (Equation (19)) or the sum ofbackprojection errors (Equation (24)). See the appendix fora discussion on these two objective functions.

We initialize the non-linear minimization of the cho-sen objective function using the highest-ranked pose in CK

according to their Q-scores. This non-linear minimizationis performed, again, with a standard LM non-linear leastsquares minimization algorithm.

5.6. Truncating ICE: pose recombination

An optional final step should be applied in case ICE has notconverged after two iterations, in order to remove multipledetections of objects. In this case, we perform a full Clus-tering step as in Section 5.4, and if any hypothesis H ∈ His updated with transferred correspondences, we perform afinal LM optimization that minimizes Equation (24) on allcorrespondences CH .

6. Addressing scalability and latency

In this section, we present multiple contributions to opti-mize MOPED in terms of scalability and latency. We firstintroduce a set of four benchmarks designed to stress testevery component of MOPED. Each of our contributions isevaluated and verified on this set of benchmarks.

6.1. Baseline system

For comparison purposes, we provide results for a Baselinesystem that implements the MOPED framework with noneof the optimizations described in Section 6. In each case,we have tried to choose the most widespread publicly avail-able code libraries for each task. In particular, the followingconfiguration is used as the baseline for our performanceexperiments:

• Feature extraction with an OpenMP-enabled, CPU-optimized version of SIFT we have developed.

• Feature matching with a publicly available implemen-tation of Approximate Nearest Neighbor (ANN) byArya et al. (1998) and 2-NN per object (described inSection 6.3.1).

• Image space clustering with a publicly available Cimplementation of Mean Shift (Dollár and Rabaud2010).

• Estimation 1 with 500 iterations of RANSAC and up to100 iterations of the LM implementation from Lourakis(2010), optimizing the sum of reprojection errors inEquation (18).

• Cluster clustering with Mean Shift, as described inSection 5.4.1.

• Estimation 2 with 24 iterations of RANSAC and up to500 iterations of LM, optimizing the sum of backprojec-tion errors in Equation (24). The particular number ofiterations of RANSAC in this step is not a critical factor,as most objects are successfully recognized in the first10–15 iterations. It is useful, however, to use a multipleof the number of concurrent threads (see Section 6.4.3)for performance reasons.

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

Collet et al. 11

• Pose recombination with Mean Shift and up to 500iterations of LM.

6.2. Benchmarks

We present four benchmarks (Figure 5) designed to stresstest every component of our system. All benchmarks, bothsynthetic and real world, provide exclusively a set of imagesand ground truth object poses (i.e. no synthetically com-puted feature locations or correspondences). We performedall experiments on a 2.33 GHz quad-core Intel Xeon E5345CPU, 4 GB of RAM and a nVidia GeForce GTX 260 GPUrunning Ubuntu 8.04 (32 bits).

6.2.1. The Rotation Benchmark The Rotation Benchmarkis a set of synthetic images that contains highly clutteredscenes with up to 400 cards in different sizes and orienta-tions. This benchmark is designed to test MOPED’s scal-ability with respect to the database size, while keeping aconstant number of features and objects. We have generateda total of 100 independent images for different resolutions(1,400 × 1,050, 1,000 × 750, 700 × 525, 500 × 375 and350 × 262). Each image contains from 5 to 80 differentobjects and up to 400 simultaneous object instances.

6.2.2. The Zoom Benchmark The Zoom Benchmark is aset of synthetic images that progressively zooms in on 160cards until only 12 cards are visible. This benchmark isdesigned to check the scalability of MOPED with respectto the total number of detected objects in a scene. We gen-erated a total of 145 independent images for different reso-lutions (1,400× 1,050, 1,000× 750, 700× 525, 500× 375,and 350×262). Each image contains from 12 to 80 differentobjects and up to 160 simultaneous object instances. Thisbenchmark simulates a board with 160 cards seen by a 60◦

field of view (FOV) camera at distances ranging from 280 to1,050 mm. The objects were chosen to have the same num-ber of features at each scale. Each image has over 25,000features.

6.2.3. The Simple Movie Benchmark Synthetic bench-marks are useful to test a system in controlled conditions,but are a poor estimator of the performance of a systemin the real world. Therefore, we provide two real-world sce-narios for algorithm comparison. The Simple Movie Bench-mark consists of a 1,900-frame movie at 1,280× 720 reso-lution, each image containing up to 18 simultaneous objectinstances.

6.2.4. The Complex Movie Benchmark The ComplexMovie Benchmark consists of a 3,542-frame movie at1,600 × 1,200 resolution, each image containing up to60 simultaneous object instances. The database contains91 models and 47,342 SIFT features when running thisbenchmark. It is noteworthy that the scenes in this video

present particularly complex situations, including: severalobjects of the same model contiguous to each other, whichstresses the clustering step; overlapping partially occludedobjects, which stresses RANSAC; and objects in particu-larly ambiguous poses, which stresses both LM and themerging algorithm, that encounter difficulties determiningwhich pose is preferable.

6.3. Feature matching

Once a set of features have been extracted from inputimage(s), we must find correspondences between the imagefeatures and our object database. Matching is done as anearest-neighbor search in the 128-dimensional space ofSIFT features. An average database of 100 objects can con-tain over 60,000 features. Each input image, depending onthe resolution and complexity of the scene, can contain over10,000 features.

The feature matching step aims to create one-to-one cor-respondences between model and image features. The fea-ture matching step is, in general, the most important bot-tleneck for model-based object recognition to scale to largeobject databases. In this section, we propose and evaluatedifferent alternatives to maximize scalability with respectto the number of objects in the database, without sacrificingaccuracy. The extension of the matching search space is, inthis case, the balancing factor between accuracy and speedwhen finding nearest neighbors.

There are no known exact algorithms for solving thematching problem that are faster than linear search.Approximate algorithms, on the other hand, can providemassive speedups at the cost of a decreased matching accu-racy, and are often used wherever speed is an issue. Manyapproximate approaches for finding the nearest neighbors toa given feature are based on kd-trees (e.g. ANN (Arya et al.1998), randomized kd-trees (Silpa-Anan and Hartley 2008),FLANN (Muja and Lowe 2009)) or hashing (e.g. LSH(Andoni and Indyk 2006)). A ratio test between the firsttwo nearest neighbors is often performed for outlier rejec-tion. We analyze the different alternatives in which thesetechniques are often applied to feature matching, and pro-pose an intermediate solution that achieves a good balancebetween recognition rate and system latency.

6.3.1. 2-NN per object On the one end, we can comparethe image features against each model independently. Ifusing, e.g., ANN, we build a kd-tree for each model inthe database once (off-line), and we match each of themagainst every new image. This process entails a complex-ity of O( |fm||O| log( |F̄o|) ), where |fm| is the number offeatures on the image, |O| the number of models in thedatabase, and |F̄o| the mean number of features for eachmodel. When |O| is large, this approach is vastly ineffi-cient as the cost of accessing each kd-tree dominates theoverall search cost. The search space is in this case verylimited, and there is no degradation in performance when

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

12 The International Journal of Robotics Research 00(000)

Fig. 5. MOPED Benchmarks. For the sake of clarity, only half of the detected objects are marked. (a) The Rotation Benchmark: MOPEDprocesses this scene 36.4 times faster than the baseline. (b) The Zoom Benchmark: MOPED processes this scene 23.4 times faster thanthe Baseline. (c) The Simple Movie Benchmark. (d) The Complex Movie Benchmark.

new models are added to the database. We refer to it asOBJ_MATCH.

6.3.2. 2-NN per database On the other end, we can com-pare the image features against the whole object database.This naïve alternative, which we term DB_MATCH, buildsjust one kd-tree containing the features from all models.This solution has a complexity of O( |fm| log( |O||F̄o|) ).The search space is in this case the whole database. Whilethis approach is orders of magnitude faster than the previ-ous one, every new object added to the database degradesthe overall recognition performance of the system due to thepresence of similar features in different objects. In the limit,if an infinite number of objects were added to the database,no correspondences would ever be found, because the ratiotest between the first and second nearest neighbor would bealways close to 1.

6.3.3. Brute force on GPU The advent of GPUs and theirmany-core architecture allows the efficient implementationof an exact feature matching algorithm. The parallel natureof the brute force matching algorithm suits the GPU, andallows it to be faster than the ANN approach when |O|is not too large. Given that this algorithm scales linearly

with the number of features instead of logarithmically, wecan match each model independently without performanceloss.

6.3.4. k-NN per database Alternatively, one can considerthe closest k nearest neighbors instead (with k > 2). k-ANNimplementations using kd-trees can provide more neighborswithout significantly increasing their computational cost, asthey are often a byproduct of the process of obtaining thenearest neighbor. An intermediate approach to those pre-sented before is the search for k multiple neighbors in thewhole database. If two neighbors from the same model arefound, the distance ratio is then applied to the two nearestneighbors from the same model. If the nearest neighbor isthe only neighbor for a given model, we apply the distanceratio with the second nearest neighbor to avoid spurious cor-respondences. This algorithm (with k = 90) is the defaultchoice for MOPED.

6.3.5. Performance comparison Figure 6 compares thecost of the different alternatives on the Rotation Bench-mark. OBJ_MATCH and GPU scale linearly with respect to|O|, while DB_MATCH and MOPED-k scale almost loga-rithmically. We show MOPED-k using k = 90 and k = 30.

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

Collet et al. 13

Table 2. Feature matching algorithms in the Simple Movie Bench-mark. GPU used as performance baseline, as it computes exactnearest neighbors.

Number of After After Finalcorrespondences matching clustering

GPU 3893.7 853.2 562.1OBJ_MATCH 3893.6 712.0 449.2DB_MATCH 1778.4 508.8 394.7MOPED-90 3624.9 713.6 428.9

Matching time (ms) Objects found

GPU 253.34 8.8OBJ_MATCH 498.59 8.0DB_MATCH 129.85 7.5MOPED-90 140.36 8.2

The value of k adjusts the speed and quality behavior ofMOPED between OBJ_MATCH (k = ∞) and DB_MATCH(k = 2). The recognition performance of MOPED-k whenusing the different strategies is shown in Table 2. GPUprovides an upper bound for the object recognition, as itis an exact search method. OBJ_MATCH comes closestin raw matching accuracy with MOPED-90 a close sec-ond. However, the number of objects detected are nearlythe same. The matching speed of MOPED-90 is, how-ever, significantly better than OBJ_MATCH. Feature match-ing in MOPED-90 thus provides a significant performanceincrease without sacrificing much accuracy.

6.4. Architecture optimizations

Our algorithmic improvements were focused mainly onboosting the scalability and robustness of the system. Thearchitectural improvements of MOPED are obtained as aresult of an implementation designed to make the bestuse of all of the processing resources of standard com-pute hardware. In particular, we use GPU-based process-ing, intra-core parallelization using SIMD instructions, andmulti-core parallelization. We have also carefully optimizedthe memory subsystem, including bandwidth transfer andcache management.

All optimizations have been devised to reduce the latencybetween the acquisition of an image and the output of thepose estimates, to enable faster response times from ourrobotic platform.

6.4.1. GPU and embarrassingly parallel problems State-of-the-art CPUs, such as the Intel Core i7 975 Extreme,can achieve a peak performance of 55.36 GFLOPS, accord-ing to the manufacturer (Intel Corp. 2010). State-of-the-artGPUs, such as the ATI Radeon HD 5900, can achieve apeak performance of 4,640 SP GFLOPS (AMD 2010).

Table 3. SIFT versus SURF: mean recognition performance perimage in the Zoom Benchmark.

Latency (ms) Recognized objects

SIFT 223.072 13.83SURF 86.136 6.27

To use GPU resources efficiently, input data needs to betransferred to the GPU memory. Then, algorithms are exe-cuted simultaneously on all shaders, and finally recover theresults from the GPU memory. As communication betweenshaders is expensive, the best GPU-performing algorithmsare those that can be divided evenly into a large num-ber of simple tasks. This class of easily separable prob-lems is called Embarrassingly Parallel Problems (EPPs)(Wilkinson and Allen 2004).

GPU-based feature extraction. Most feature extractionalgorithms consist of an initial keypoint detection step fol-lowed by a descriptor calculation for each keypoint, bothof which are EPPs. Keypoint detection algorithms can pro-cess each pixel from the image independently. They mayneed information about neighboring pixels, but they do nottypically need results from them. After obtaining the list ofkeypoints, the respective descriptors can also be calculatedindependently.

In MOPED, we consider two of the most popular locallyinvariant features: SIFT (Lowe 2004) and SURF (Bay et al.2008). SIFT features have proven to be among the best-performing invariant descriptors in the literature (Mikola-jczyk and Schmid 2005), while SURF features are consid-ered to be a fast alternative to SIFT. MOPED uses SIFT-GPU (Wu 2007) as its main feature extraction algorithm.If compatible graphics hardware is not detected MOPEDautomatically reverts back to performing SIFT extractionon the CPU, which is an OpenMP-enabled, CPU-optimizedversion of SIFT we have developed. A GPU-enabled ver-sion of SURF, GPU-SURF (Cornelis and Van Gool 2008),is used for comparison purposes.

We evaluate the latency of the three implementations inFigure 7. The comparison is as expected: GPU versions ofboth SIFT and SURF provide tremendous improvementsover their non-GPU counterparts. Table 3 compares theobject recognition performance of SIFT and SURF: SURFproves to be 2.59 times faster than SIFT at the cost ofdetecting 54% less objects. In addition, the performancegap between both methods decreases significantly as imageresolution increases, as shown in Figure 7. For MOPED,we consider SIFT to be almost always the better alterna-tive when balancing recognition performance and systemlatency.

GPU matching. Performing feature matching in the GPUrequires a different approach than the standard ANN tech-niques. Using ANN, each match involves searching in a

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

14 The International Journal of Robotics Research 00(000)

Fig. 6. Scalability of feature matching algorithms with respect to the database size, in the Rotation Benchmark at 1,400 × 1,050resolution.

Fig. 7. SIFT-CPU versus SIFT-GPU versus SURF-GPU, in the Rotation Benchmark at different resolutions. (Left) SIFT-CPU versusSIFT-GPU: 658% speed increase in SIFT extraction on GPU. (Right) SIFT-GPU versus SURF-GPU: 91% speed increase in SURF overSIFT at the cost of lower matching performance.

kd-tree, which requires fast local storage and a heavy useof branching that are not suitable for GPUs.

Instead of using ANN, Wu (2007) suggests the use ofbrute force nearest-neighbor search on the GPU, which

scales quite well as vector processing matches the GPUstructure quite well. In Figure 6, brute force GPU match-ing is shown to be faster than per-object ANN and pro-vide better quality matches because it is not approximate.

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

Collet et al. 15

Fig. 8. SSE performance improvement in the Complex MovieBenchmark. Time per frame without counting SIFT extraction.

As graphics hardware becomes cheaper and more power-ful, brute-force feature matching in large databases mightbecome the most sensible choice in the near future.

6.4.2. Intra-core optimizations SSE instructions allowMOPED to perform 12 floating point instructions per cycleinstead of just one. The 3D to 2D projection function, crit-ical in the pose estimation steps, is massively improved byusing SSE-specific algorithms from Van Weveren (2005)and Conte et al. (2000).

The memory footprint of MOPED is very lightweightfor current computers. In the case of a database of 100models and a total of 102,400 SIFT features, the requiredmemory is less than 13 MB. The runtime memory footprintis also small: a scene with 100 different objects with 100matched features each would require less than 10 MB ofmemory to be processed. This is possible thanks to usingdynamic and compact structures, such as lists and sets, andremoving unused data as soon as possible. In addition, SIFTdescriptors are stored as integer numbers in a 128-byte arrayinstead of a 512-byte array. Cache performance has beengreatly improved due to the heavy use of memory-alignedand compact data structures (Dysart et al. 2004).

The main data structures are kept constant throughout thealgorithm, so that no data needs to be copied or translatedbetween steps. k-ANN feature matching benefits from com-pact structures in the kd-tree storage, as smaller structuresincrease the probability of staying in the cache for fasterprocessing. In image space clustering, the performance ofMean Shift is boosted 250 times through the use of compactdata structures.

The overall performance increase is over 67% in CPUprocessing tasks (see Figure 8).

6.4.3. Symmetric multiprocessing SMP is a multiproces-sor computer architecture with identical processors andshared memory space. Most multi-core-based computersare SMP systems. OpenMP is a standard framework formulti-processing in SMP systems that we implement inMOPED.

We use Best Fit Decreasing (Johnson 1974) to balancethe load between cores using the size of a cluster as anestimate of its processing time, given that each cluster of

Fig. 9. Performance improvement of Pose Estimation step inmulti-core CPUs, in the Complex Movie Benchmark.

Table 4. Impact of pipeline scheduling, in the Simple MovieBenchmark. Latency measurements in milliseconds.

FPS Latency Latency SD

Scheduled MOPED 3.49 368.45 92.34Non-Scheduled MOPED 2.70 303.23 69.26Baseline 0.47 2124.30 286.54

features can be processed independently. Tests on a subsetof 10 images from the Complex Movie Benchmark showperformance improvements of 55% and 174% on dual andquad core CPUs respectively, as seen in Figure 9.

6.4.4. Multi-frame scheduling In order to maximize thesystem throughput, MOPED can benefit from GPU-CPUpipeline scheduling (Chatha and Vemuri 2002). In order touse all available computing resources, a second executionthread can be added, as shown in Figure 10. However, theGPU and CPU execution times are not equal in real scenes,and one of the execution threads often needs to wait for theother (see Figure 11). The impact of pipeline schedulingdepends heavily on image resolution, as shown in Figure 12,because the GPU and CPU loads do not increase at the samerate when increasing the number of features but keeping thesame number of objects in each scene. In MOPED, pipelinescheduling may increase latency significantly, especially ifusing high-resolution images, but also increases throughputalmost two-fold. Since GPU processing is the bottleneckon very small resolutions, these are the best scenarios forpipeline scheduling. For example, as seen in Figure 12, at alower resolution of 500 × 380, throughput is increased by95.6% and latency is increased by 9%.

We further test the impact of pipeline scheduling in a realsequence in the Simple Movie Benchmark, in Table 4. Theaverage throughput of the overall system is increased by25% when using pipeline scheduling, at the cost of 21.5%more latency. In addition, we see the average system latencyfluctuates 33.2% more when using pipeline scheduling. Inour particular application, latency is a more critical factorthan throughput, as our robot HERB (Srinivasa et al. 2010)must interact with the world in real time. Therefore, we

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

16 The International Journal of Robotics Research 00(000)

Fig. 10. (Top) Standard MOPED uses the GPU to obtain the SIFTfeatures, then the CPU to process them. (Bottom) The addition of asecond execution thread does not substantially increase the systemlatency.

Fig. 11. (Top) Limiting factor: CPU. GPU thread processingframe N + 1 must wait for CPU processing frame N to finish,increasing latency. (Bottom) Limiting factor: GPU. No substantialincrease in latency.

choose not to use pipeline scheduling in our default imple-mentation of MOPED (and in the experiments displayed inthis paper). In general, pipeline scheduling should be imple-mented in any kind of offline process, or whenever latencyis not a critical factor in object recognition.

6.5. Performance evaluation

In this section, we evaluate the impact of our combinedoptimizations on the overall performance of MOPED, com-pared with the baseline system in Section 6.1, and weanalyze how the application of our optimizations improvessystem latency and scalability.

Testing both systems on the Simple Movie Bench-mark (Table 4), MOPED outperforms the baseline with a5.74-fold increase in throughput and a 7.01-fold decreasein latency. These improvements become more acute thegreater the scene complexity is. Our architectural optimiza-tions offer improvement even with the simple scenes, butthe overhead of managing the SMP and GPU processing islarge enough to limit the improvement. However, in sceneswith high complexity (and, therefore, with a high numberof features and objects) this overhead is negligible, result-ing in massive performance boosts. In the Complex MovieBenchmark, MOPED shows an average throughput of 0.44frames per second and latency of 2,173.83 ms, a 30.1-foldperformance increase over the 0.015 frames per second and65,568.20 ms of average latency of the Baseline system.

It is also interesting to compare the Baseline andMOPED in terms of system scalability. We are most inter-ested in the scalability with respect to image resolution,number of objects in a scene and number of objects in thedatabase. Our synthetic benchmarks allow for a controlledcomparison of these different parameters without affectingthe others.

The Rotation Benchmark contains images with a con-stant number of object instances at five different resolu-tions. Figure 13(left) shows that both MOPED and the base-line system scale linearly in execution time with respect toimage resolution, i.e. quadratically with respect to imagewidth. To be more accurate, the implementation of ICEin both systems allows their performance to increase lin-early with the number of feature clusters. The number ofSIFT features and feature clusters also increase linearlywith respect to image resolution in this benchmark.

To test the scalability with respect to the number ofobjects in the database, images from the Rotation Bench-mark are generated to have a fixed number of objectinstances and poses, and change the identity of the objectinstances from a minimum of 5 different objects to a max-imum of 80. The use of a database-wide feature match-ing technique (k-NN per database, Section 6.3.4) allowsMOPED to perform almost constantly with respect to thenumber of objects in the database. The latency of the base-line system, which performs independent feature match-ing per object, increases roughly linearly. Figure 13 showsthe latency of each system relative to their best scores,to see how latency increments when each of the param-eters change. Therefore, it is important to note that thelatency of MOPED and the baseline are in different scales inFigure 13, and one should only compare the relative differ-ences when changing the image resolution and the size ofthe object database.

The number of objects in a scene is another factor thatcan greatly affect the performance of MOPED. The ZoomBenchmark aims to show a relatively constant number ofimage features (Figure 14), despite being only 12 (large)objects visible at 280 mm and 160 (smaller) objects visi-ble at 1,050 mm. It is interesting to note that the requiredtime is inversely proportional to the number of objects inthe image, i.e. a small number of large objects are moredemanding than large numbers of small objects. The expla-nation for this fact is that the smaller objects in this bench-mark are more likely to fit in a single object prior in the firstiteration of ICE. Clusters that converge after the first itera-tion of ICE (i.e. with no correspondences transferred to orfrom them) require very little processing time in the seconditeration of ICE. On the other hand, bigger objects requiremore effort in the second iteration of ICE due to the clustermerging process. It is also worth noting that this experimentpushes the limits of our graphics card, causing an inevitabledegradation in performance when the GPU memory limit isreached. In the 850–1,050 mm range, the number of SIFTfeatures to compute is slightly lower than in the 280–850mm range. In the latter case, the memory limit of the GPU

at CARNEGIE MELLON UNIV LIBRARY on June 1, 2011ijr.sagepub.comDownloaded from

Collet et al. 17

Fig. 12. Impact of image resolution in Pipeline Scheduling, in the Rotation Benchmark. (Left) Latency comparison (ms). (Right)Throughput comparison (FPS).

is reached, causing a two-fold increase in feature extractionlatency when this happens. Despite this effect, the averagelatency for MOPED in the Zoom Benchmark is 2.4 seconds,compared with 65.5 seconds in the baseline system.

7. Recognition and accuracy

In this section, we evaluate the recognition rate, pose esti-mation accuracy and robustness of MOPED in the caseof a single-view setup (MOPED-1V) and a three-viewsetup (MOPED-3V, shown in Figure 16), and compare theirresults to other well-known multi-view object recognitionstrategies.

We have conducted two sets of experiments to proveMOPED’s suitability for robotic manipulation. The first setevaluates the accuracy of MOPED in estimating the posi-tion and orientation of a given object in a set of images.The second set of experiments evaluates the robustness ofMOPED against modeling errors, which can greatly influ-ence the accuracy of pose estimation. In all experiments,we estimate the full six-degree-of-freedom (6-DOF) poseof objects, and no assumptions are made on their orientationor position. In all cases, we perform the image space clus-tering step with a Mean Shift radius of 100 pixels, and weuse RANSAC with subsets of five correspondences to com-pute each hypothesis. The maximum number of RANSACiterations is set to 500 in both Pose Estimation steps. InMOPED-3V, we enforce the requirement that a pose mustbe seen by at least two views, and that at least 50% ofthe points from the different hypotheses are consistent withthe final pose. We add this requirement in order to provethat MOPED-3V takes full advantage of the multi-viewgeometry to improve its estimation results.

The experimental setup is a static three-camera setupwith approximately 10 cm baseline between each two cam-eras (see Figure 16). Both intrinsic and extrinsic parametersfor each camera have been computed, considering camera 1as the coordinate origin.

7.1. Alternatives for multi-view recognition andestimation

We consider two well-known techniques for object recogni-tion and pose estimation in multiple simultaneous views.The techniques we consider are the generalized cam-era (Grossberg and Nayar 2001) and the pose averaging(Viksten et al. 2006) techniques.

7.1.1. Generalized camera The generalized cameraapproach parameterizes a network of cameras as sets ofrays that project from each camera center to each imagepixel, thus expressing the network of cameras a singlenon-perspective camera with multiple projection centersand focal planes. Then, the camera pose is optimized in thisgeneralized space by solving the resulting non-perspectivePnP (i.e. nPnP) problem (Chen and Chang 2004). Whilesuch an approach is perfectly valid, it might not be entirelyfeasible in real-time if the correspondence problem needsto be addressed as well, as the search space increases dra-matically with each extra image added to the system. Thisprocess takes full advantage of the multi-view geometryconstraints imposed by the camera setup, and its accuracyresults can be considered a theoretical limit for multi-viewmodel-based pose estimation. In our experiments, weimplement this technique and use 1,000 RANSAC itera-tions to robustly find correspondences in the generalizedspace.