Test Worthiness: The Curve, Reliability, Validity


Review of Concepts

• Psychological Measurement vs. Other Types of Assessment

• Correlation Coefficient
  – Positive/Direct
  – Negative/Inverse
  – Pearson’s r

Pithy Points about r

• The strength of the relationship between two variables is indicated by the absolute value of r (closer to 1 means stronger); the direction is indicated by its sign.

• Correlation, even if high, does not imply causation.

• High correlations allow us to make predictions.
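A minimal Python sketch of how Pearson’s r is computed from paired scores. The function name `pearson_r` and all of the scores are hypothetical, chosen only to show one positive (direct) and one negative (inverse) relationship; the sign carries the direction and the absolute value carries the strength.

```python
import math

def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Numerator: sum of cross-products of deviations from the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Denominator: square root of the product of the sums of squared deviations
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical scores for five people
hours_studied = [1, 2, 3, 4, 5]
test_score = [52, 60, 61, 70, 77]
absences = [9, 7, 6, 3, 1]

print(f"hours vs. score:    r = {pearson_r(hours_studied, test_score):.2f}")  # positive/direct
print(f"hours vs. absences: r = {pearson_r(hours_studied, absences):.2f}")    # negative/inverse
```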

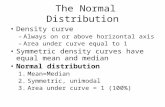

Properties of Normal Distributions

The most important probability distribution in statistics is the normal distribution.

A normal distribution is a continuous probability distribution for a random variable, x. The graph of a normal distribution is called the normal curve.

[Figure: the normal curve plotted over x]

1. The mean, median, and mode are equal.
2. The normal curve is bell-shaped and symmetric about the mean.
3. The total area under the curve is equal to one.
4. The normal curve approaches, but never touches, the x-axis as it extends farther and farther away from the mean.
5. Between µ − σ and µ + σ (in the center of the curve), the graph curves downward. The graph curves upward to the left of µ − σ and to the right of µ + σ. The points at which the curve changes from curving upward to curving downward are called the inflection points.

[Figure: normal curve with the x-axis marked at μ − 3σ, μ − 2σ, μ − σ, μ, μ + σ, μ + 2σ, and μ + 3σ; the inflection points are labeled, and the total area under the curve = 1]

If x is a continuous random variable having a normal distribution with mean μ and standard deviation σ, you can graph a normal curve with the equation

$$y = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$

where e ≈ 2.718 and π ≈ 3.14.
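The equation can be checked numerically. This Python sketch (the helper names `normal_pdf` and `area_under_curve` are illustrative, not from any library) evaluates the curve height from the formula and approximates the total area with the trapezoidal rule over µ ± 10σ, which should come out very close to 1 (property 3). It also checks symmetry about the mean for a curve with µ = 3.5 and σ = 1.3.

```python
import math

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Height y of the normal curve at x for mean mu and standard deviation sigma."""
    coeff = 1.0 / (sigma * math.sqrt(2 * math.pi))
    return coeff * math.exp(-((x - mu) ** 2) / (2 * sigma ** 2))

def area_under_curve(mu, sigma, width=10.0, steps=100_000):
    """Trapezoidal-rule area over mu +/- width*sigma; the tails beyond that are negligible."""
    a, b = mu - width * sigma, mu + width * sigma
    h = (b - a) / steps
    total = 0.5 * (normal_pdf(a, mu, sigma) + normal_pdf(b, mu, sigma))
    total += sum(normal_pdf(a + i * h, mu, sigma) for i in range(1, steps))
    return total * h

mu, sigma = 3.5, 1.3
print(f"total area: {area_under_curve(mu, sigma):.6f}")
# Symmetry: equal heights one unit to the left and right of the mean
print(math.isclose(normal_pdf(mu - 1, mu, sigma), normal_pdf(mu + 1, mu, sigma)))
```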

Means and Standard Deviations

A normal distribution can have any mean and any positive standard deviation.

The mean gives the location of the line of symmetry.

The standard deviation describes the spread of the data.

[Figure: two normal curves with their inflection points marked. Left: mean µ = 3.5, standard deviation σ ≈ 1.3. Right: mean µ = 6, standard deviation σ ≈ 1.9.]
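To see why the inflection points sit at µ − σ and µ + σ, differentiate the normal equation twice: y'' = y · ((x − µ)² − σ²) / σ⁴. Because y is always positive, the curve bends downward wherever x is within one standard deviation of the mean and upward beyond it. The Python sketch below (hypothetical helper names) applies this to the two example curves with µ = 3.5, σ ≈ 1.3 and µ = 6, σ ≈ 1.9.

```python
import math

def normal_pdf(x, mu, sigma):
    """Height of the normal curve at x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

def curvature_sign(x, mu, sigma):
    """Sign of the curve's second derivative at x.

    y'' = y * ((x - mu)**2 - sigma**2) / sigma**4, so the result is -1 where the
    curve bends downward (within one SD of the mean) and +1 where it bends upward.
    """
    value = normal_pdf(x, mu, sigma) * ((x - mu) ** 2 - sigma ** 2) / sigma ** 4
    return (value > 0) - (value < 0)

for mu, sigma in [(3.5, 1.3), (6.0, 1.9)]:
    print(f"mu={mu}, sigma={sigma}: inflection points at x={mu - sigma:.1f} and x={mu + sigma:.1f}")
    print("  sign at the mean:", curvature_sign(mu, mu, sigma))
    print("  sign at mu + 2 sigma:", curvature_sign(mu + 2 * sigma, mu, sigma))
```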

Means and Standard Deviations Example:
1. Which curve has the greater mean?
2. Which curve has the greater standard deviation?

The line of symmetry of curve A occurs at x = 5. The line of symmetry of curve B occurs at x = 9. Curve B has the greater mean.

Curve B is more spread out than curve A, so curve B has the greater standard deviation.

[Figure: normal curves A and B plotted on a common x-axis]

The Standard Normal Distribution

The standard normal distribution is a normal distribution with a mean of 0 and a standard deviation of 1. The horizontal scale corresponds to z-scores.

[Figure: standard normal curve with z marked from −3 to 3 on the horizontal axis]

Any value can be transformed into a z-score by using the formula

$$z = \frac{\text{Value} - \text{Mean}}{\text{Standard deviation}} = \frac{x - \mu}{\sigma}$$
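A small Python sketch of the z-score conversion and its inverse. The function names and the scale with mean 100 and standard deviation 15 are assumptions for illustration only.

```python
def z_score(x, mu, sigma):
    """Convert a raw value to a z-score: (value - mean) / standard deviation."""
    if sigma <= 0:
        raise ValueError("standard deviation must be positive")
    return (x - mu) / sigma

def from_z(z, mu, sigma):
    """Convert a z-score back to the original scale: mean + z * standard deviation."""
    return mu + z * sigma

# Hypothetical scale with mean 100 and standard deviation 15
for raw in (85, 100, 115, 130):
    print(raw, "->", z_score(raw, 100, 15))
print("z = -2 ->", from_z(-2, 100, 15))
```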

What is Reliability?

– Reliability means consistency.

– Reliability refers to the scores obtained with a test and not to the instrument itself.

The Classical Model of Reliability

– A person’s true score is the score the individual would have received if the test and testing conditions were free from error.

– Systematic error remains constant from one measurement to another; because it is consistent, it does not lower reliability.

– Random error, which does affect reliability, is due to:

• Fluctuations in the mood or alertness of persons taking the test due to fatigue, illness, or other recent experiences.

• Incidental variation in the measurement conditions due, for example, to outside noise or inconsistency in the administration of the instrument.

• Differences in scoring due to factors such as scoring errors, subjectivity, or clerical errors.

• Random guessing on response alternatives in tests or questionnaire items.

The Classical Model of Reliability Continued…

– The classical assumption is that any observed score consists of both the true score and error of measurement (observed score = true score + error).

– Reliability indicates what proportion of the observed score variance is true score variance.

– Reliability coefficients in the .80s are desirable for screening tests, and in the .90s for diagnostic decisions.
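The idea that reliability is the proportion of observed-score variance that is true-score variance can be illustrated with a simulation. This Python sketch assumes, purely for illustration, normally distributed true scores (mean 100, SD 15) and random error (SD 5), builds observed scores as true score + error, and compares the simulated variance ratio with the theoretical value 15² / (15² + 5²) = .90.

```python
import random
import statistics

random.seed(1)  # fixed seed so the illustration is reproducible

N = 50_000
TRUE_SD, ERROR_SD = 15.0, 5.0  # hypothetical values chosen for illustration

true_scores = [random.gauss(100.0, TRUE_SD) for _ in range(N)]
# Classical model: observed score = true score + random error
observed = [t + random.gauss(0.0, ERROR_SD) for t in true_scores]

# Reliability as the share of observed-score variance that is true-score variance
reliability = statistics.pvariance(true_scores) / statistics.pvariance(observed)
theoretical = TRUE_SD ** 2 / (TRUE_SD ** 2 + ERROR_SD ** 2)

print(f"simulated reliability:   {reliability:.3f}")
print(f"theoretical reliability: {theoretical:.2f}")
```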

– Classical test theory also proposes two additional assumptions:
• The distribution of observed scores that a person may obtain under repeated independent testing with the same test is normal.
• The standard deviation of this normal distribution, referred to as the standard error of measurement (SEM), is the same for all persons of a given group taking the test.
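Under these assumptions the SEM is usually estimated as SEM = SD × √(1 − r), where SD is the standard deviation of the test scores and r is the reliability coefficient; a band of about ±1.96 SEM around an obtained score then covers roughly 95% of the scores the person would be expected to obtain. The Python sketch below uses a hypothetical test (SD = 15, r = .91) and a hypothetical obtained score of 108; the function names are illustrative.

```python
import math

def standard_error_of_measurement(sd, reliability):
    """SEM = SD * sqrt(1 - reliability)."""
    if not 0 <= reliability <= 1:
        raise ValueError("reliability must be between 0 and 1")
    return sd * math.sqrt(1 - reliability)

def score_band(observed, sem, z=1.96):
    """Band of +/- z SEMs around an obtained score (z = 1.96 for about 95%)."""
    return observed - z * sem, observed + z * sem

sem = standard_error_of_measurement(sd=15, reliability=0.91)  # hypothetical test
low, high = score_band(observed=108, sem=sem)                  # hypothetical score
print(f"SEM = {sem:.1f}; 95% band: {low:.1f} to {high:.1f}")
```

Note that a higher reliability shrinks the SEM and therefore narrows the band.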

Overview: Types of Reliability

• Internal consistency
• Test-retest reliability
• Alternate forms reliability
• Interscorer and interrater reliability

Internal Consistency

– Internal consistency estimates are based on the average correlation among items within a test or scale. There are various ways to obtain internal consistency:
• Split-half method:
  – Odd-even method or matched random subsets method
  – The Spearman-Brown prophecy formula must be applied, because the correlation between two half-length tests underestimates the reliability of the full-length test
• Cronbach’s coefficient alpha, used for multiscaled item response formats
• Kuder-Richardson formula 20 (KR-20), used for dichotomous item response formats

Test-Retest Reliability

– Test-retest reliability, also known as temporal stability, is the extent to which the same persons consistently respond to the same test administered on different occasions.

– The major problem is the potential for carryover effects between the two administrations.

– Because it assumes that scores should stay the same across occasions, it is most appropriate for measuring traits that are stable across time.

Alternate Forms Reliability

• Alternate forms reliability counteracts the practice effects that occur in test-retest reliability by measuring the consistency of scores on alternate test forms administered to the same group of individuals.

Reliability of Criterion-Referenced Tests

• Classification consistency shows the consistency with which classifications are made, either by the same test administered on two occasions or by alternate test forms. It is commonly expressed in two ways:
  – Mastery versus nonmastery
  – Cohen’s κ (kappa): the proportion of nonrandom consistent classifications, that is, agreement corrected for chance
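Cohen’s κ compares the observed agreement p_o with the agreement p_e expected by chance from each occasion’s marginal proportions: κ = (p_o − p_e) / (1 − p_e). The Python sketch below uses hypothetical mastery (M) and nonmastery (N) decisions for ten students on two occasions; the names are illustrative.

```python
def cohens_kappa(first, second):
    """Chance-corrected agreement between two classifications of the same people."""
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty classification lists of equal length")
    n = len(first)
    categories = set(first) | set(second)
    # Proportion of people classified the same way both times
    p_observed = sum(a == b for a, b in zip(first, second)) / n
    # Agreement expected by chance, from each occasion's category proportions
    p_expected = sum((first.count(c) / n) * (second.count(c) / n) for c in categories)
    if p_expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)

# Hypothetical mastery (M) / nonmastery (N) decisions on two occasions
occasion_1 = ["M", "M", "M", "M", "M", "M", "N", "N", "N", "N"]
occasion_2 = ["M", "M", "M", "M", "M", "N", "N", "N", "N", "M"]
print(f"kappa = {cohens_kappa(occasion_1, occasion_2):.2f}")
```

Here the raw agreement is 80%, but after removing chance agreement κ is noticeably lower.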

Interscorer and Interrater Reliability

• Interscorer and interrater reliability are influenced by subjectivity of scoring. The higher the correlation, the lower the error variance due to scorer differences, and the higher the interrater agreement.

Researchers and Test Users Endeavor to Reduce Measurement Error and Improve Reliability by:

– Writing items clearly.

– Providing complete and understandable test instructions.

– Administering the instrument under prescribed conditions.

– Reducing subjectivity in scoring.

– Training raters and providing them with clear scoring instructions.

– Using heterogeneous respondent samples to increase the variance of observed scores.

– Increasing the length of the test by adding items that are ideally parallel to those that are already in the test.

– The general principle behind improving reliability is to maximize the variance of relevant individual differences and minimize the error variance.
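The gain from lengthening a test can be estimated with the general Spearman-Brown formula, r_new = n·r / (1 + (n − 1)·r), where n is how many times longer the test becomes, assuming the added items are parallel to the existing ones. Solving it for n gives the length needed to reach a target reliability. The Python sketch below assumes a hypothetical 20-item test with reliability .70; the function names are illustrative.

```python
def spearman_brown(r, n):
    """Predicted reliability when a test is lengthened by a factor n with parallel items."""
    return n * r / (1 + (n - 1) * r)

def length_factor_needed(r, target):
    """Spearman-Brown solved for n: how many times longer the test must become."""
    return target * (1 - r) / (r * (1 - target))

r_current = 0.70  # hypothetical reliability of a 20-item test
for n in (1, 1.5, 2, 3):
    items = round(20 * n)
    print(f"{items} items: predicted reliability {spearman_brown(r_current, n):.2f}")

factor = length_factor_needed(r_current, 0.90)
print(f"to reach .90: about {factor:.1f} times as long ({round(20 * factor)} items)")
```

The diminishing gains from each added block of items are one reason lengthening alone is rarely enough.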

The Importance of Reliability

– Reliability is a necessary, but not sufficient, condition in the validation process.

Social Justice and Multicultural Issues

• Test authors should determine whether the reliabilities of scores from different groups vary substantially, and report those variations for each population for which the test has been recommended.

Validity

We say an instrument is valid to the extent that it measures what it is designed to measure and accurately performs the functions it is purported to perform.

Validity, in Other Words

Validity indicates the degree to which test scores measure what the test claims to measure.

Examples of the Types of Validity

• Face Validity
• Content-Related Validity
• Criterion-Related Validity
  – Predictive criterion-related validity
  – Concurrent criterion-related validity
• Construct Validity

Face Validity

– Face validity is derived from the obvious appearance of the measure itself and its test items, but it is not an empirically demonstrated type of validity.

– Face validity is commonly referred to, but it is not really a type of validity.

– Self-report tests with high face validity can face problems when the trait or behavior in question is one that many people will not want to reveal about themselves.

Content-Related Validity

– The main focus is on how the instrument was constructed and how well the test items reflect the domain of the material being tested.

– This type of validity is widely used in educational testing and in tests of aptitude or achievement.

– Determining the content validity of a test requires a systematic evaluation of the test items to determine whether they adequately cover a representative sample of the content domain.

Criterion-Related Validity

– Criterion-related validity is derived from comparing scores on the test to scores on a selected criterion.

– Sources of criterion scores include:
• Academic achievement
• Task performance
• Psychiatric diagnosis
• Observations
• Ratings
• Correlations with previous tests

Criterion-Related Validity Continued…

– Two forms of criterion-related validity:
• Predictive criterion-related validity
• Concurrent criterion-related validity

– The standard error of estimate (SEE) is derived from examining the difference between our predicted value of the criterion and the person’s actual score on the criterion.

Construct Validity

– Evidence for construct validity is established by defining the construct being measured and by gradually collecting information over time to demonstrate or confirm what the test measures.

– Construct validity evidence is gathered by:
• Convergent validity evidence
• Discriminant validity evidence
• Factor analysis, which uses statistics to determine the degree to which the items contained in two separate instruments tend to group together along factors that mathematically indicate similarity, and thus a common meaning
• Developmental changes
• Distinct groups

Controversies in Assessment: “Teaching to the Test” Inflates Scores

– “Teaching to the test” means that the focus of instruction becomes so prescribed that only content that is sure to appear on an exam is addressed in instruction. If this occurs, test scores should rise.

– Whether the higher test scores are inflated in this instance depends on whether they reflect genuine mastery of the content.

– Test publishers, state education departments, and local educators must work collaboratively to develop test items that adequately sample the broad content domain and standards.

Decision Making Using a Single Score

• Decision theory involves the collection of a screening test score and a criterion score, either at the same point in time or at some point in the future.
  – You want to maximize hits (valid acceptances and valid rejections) and minimize misses (false rejections and false positives).
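The four decision outcomes can be tallied by comparing each person’s screening decision (accepted if the score meets a cutoff) with the criterion result. This Python sketch uses hypothetical scores, outcomes, and cutoffs; hits are valid acceptances plus valid rejections, and trying several cutoffs shows how moving the cutoff trades one kind of miss for the other.

```python
def decision_outcomes(screen_scores, criterion_success, cutoff):
    """Tally screening decisions against the criterion.

    criterion_success[i] is True if person i actually succeeded on the criterion.
    """
    counts = {"valid acceptance": 0, "valid rejection": 0,
              "false positive": 0, "false rejection": 0}
    for score, succeeded in zip(screen_scores, criterion_success):
        accepted = score >= cutoff
        if accepted and succeeded:
            counts["valid acceptance"] += 1
        elif not accepted and not succeeded:
            counts["valid rejection"] += 1
        elif accepted:
            counts["false positive"] += 1   # accepted but did not succeed
        else:
            counts["false rejection"] += 1  # rejected but would have succeeded
    return counts

# Hypothetical screening scores and later criterion outcomes
screen = [55, 62, 48, 70, 66, 51, 75, 59]
succeeded = [False, True, False, True, False, True, True, False]

for cutoff in (50, 60, 70):
    counts = decision_outcomes(screen, succeeded, cutoff)
    hits = counts["valid acceptance"] + counts["valid rejection"]
    print(f"cutoff {cutoff}: {counts}, hit rate {hits / len(screen):.2f}")
```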

Decision Making Using Multiple Tests

– Multiple regression allows for several variables to be weighted in order to predict some criterion score.

– The multiple cutoff method means that the counselor must establish a minimally acceptable score on each measure under consideration, then analyze the scores of a given client or student and determine whether each of the scores meets the given criterion.

– Clinical judgment and diagnosis using a test battery rely on the experiences, information processing capability, theoretical frameworks, and reasoning ability of the professional counselor.

– Combining decision-making models can lead to greater accuracy.
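A Python sketch contrasting the multiple cutoff method with a regression-style weighted composite. The measures, minimum scores, weights, and intercept are all hypothetical; real regression weights would be estimated from data. Because the composite lets a high score on one measure offset a low score on another, an applicant can score well on the composite yet fail one cutoff.

```python
def meets_multiple_cutoffs(scores, minimums):
    """Multiple cutoff method: every measure must reach its own minimum score."""
    return all(scores[name] >= minimum for name, minimum in minimums.items())

def weighted_prediction(scores, weights, intercept=0.0):
    """Regression-style prediction: intercept plus a weighted sum of the measures."""
    return intercept + sum(weight * scores[name] for name, weight in weights.items())

# Hypothetical cutoffs and weights for three measures
minimums = {"reading": 40, "math": 45, "interview": 3}
weights = {"reading": 0.02, "math": 0.03, "interview": 0.1}

applicant_a = {"reading": 60, "math": 42, "interview": 5}
applicant_b = {"reading": 45, "math": 50, "interview": 3}

for label, scores in [("A", applicant_a), ("B", applicant_b)]:
    passes = meets_multiple_cutoffs(scores, minimums)
    predicted = weighted_prediction(scores, weights, intercept=0.5)
    print(f"Applicant {label}: meets all cutoffs = {passes}, predicted criterion = {predicted:.2f}")
```

Applicant A has the higher composite but misses the math cutoff, which is the kind of disagreement that combining models and clinical judgment is meant to resolve.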

Multicultural and Social Justice Issues

– Test developers must evaluate diverse subgroups for potential score differences not related to the skills, attitudes, or abilities being assessed.

– Tests must be used for verifiable purposes and with individuals with appropriate characteristics.

Jigsaw: Describe for Clients

• Team 1: Normal Curve
• Team 2: Raw Scores & Standard Scores
• Team 3: Age-Equivalent Scores vs. Grade-Equivalent Scores
• Team 4: Confidence Intervals for Obtained Scores
• Team 5: Validity and Reliability