TA webinar 9 sep 2015 slides - Transforming...


9 September 2015: 07:00AM GMT What can we do with assessment analytics? A/Prof. Cath Ellis (University of New South Wales, Australia) and Dr. Rachel Forsyth (Manchester Metropolitan University, UK)

Your Hosts Professor Geoff Crisp, Dean Learning and Teaching, RMIT University geoffrey.crisp[at]rmit.edu.au Dr Mathew Hillier, Institute for Teaching and Learning Innovation, University of Queensland mathew.hillier[at]uq.edu.au

Just to let you know: By participating in the webinar you acknowledge and agree that: The session may be recorded, including voice and text chat communications (a recording indicator is shown inside the webinar room when this is the case). We may release recordings freely to the public which become part of the public record. We may use session recordings for quality improvement, or as part of further research and publications.

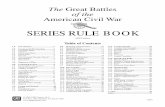

Webinar Series

e-Assessment SIG

Assessment Analytics

• Should we do it?

• If so – what might it look like?

A/Prof Cath Ellis, University of New South Wales, Australia Dr Rachel Forsyth, Manchester Metropolitan University, UK

What are Assessment Analytics?

Analysis of assessment data, but

which data, and

for what purpose?

Binary data image by W.Rebel, cc licensed

Informed decision-making

“In higher education many institutional decisions are too important to be based only on intuition, anecdote, or presumption; critical decisions require facts and the testing of possible solutions”

(Campbell & Oblinger, 2007, p. 2).

Emphasis on SNA

Partial

• Most student learning doesn’t happen online

• Most teaching happens in classrooms

Not useful

• ‘Isn’t this interesting and aren’t I clever’

• ‘How can I put this to use to improve student learning?’

Learning Analytics

Useful to only a tiny proportion of students and teachers

Focus on things other than learning

Academic Analytics

Business intelligence in HE

Learning Analytics

Using data to make informed decisions regarding student learning

Assessment Analytics

The analysis of assessment data within a learning analytics strategy

Assessment

All students are assessed and all tutors mark student work

Ubiquitous

Routine Normative

Widely understood

Expected Everyone ‘gets’ it

Important

It’s what students pay for

It’s what tutors are paid to do

Focused on achievement, not just retention

Feedback analysis

Assignment types

Student satisfaction ‘scores’ and comments

Marks

Dates when assignments are due

Data we’ve used

Degree Classification

GPA at progression

Module Results

Assessment task results

Granularity

Common errors

Assessment criteria

Assessment Analytics

Automates

• Data harvesting at fine granularity

Allows

• handling assessment data over large and dispersed cohorts

• monitoring common errors, progression and achievement

• informed curriculum planning even in year

Reporting

• Student

• School

• Institution

• PSRB

Rubric Results

Chart: percentage of students (0% to 100%) awarded each classification (First, 2.1, 2.2, 3rd, Fail) on each of assessment criteria 1 to 5.
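The rubric results chart reports, for each assessment criterion, the share of students awarded each classification. A minimal sketch of that calculation follows. The data shape (one dict per student mapping criterion to band), the function name `band_percentages`, and the sample marks are assumptions made for illustration; they are not taken from the presenters' data or tooling.

```python
from collections import Counter

# Classification bands in the order shown on the slide.
BANDS = ["First", "2.1", "2.2", "3rd", "Fail"]


def band_percentages(rubric_marks):
    """Return {criterion: {band: percentage of students}}.

    rubric_marks is a list with one dict per student, mapping a criterion
    name to the band awarded on that criterion (hypothetical data shape).
    """
    counts = {}
    for student in rubric_marks:
        for criterion, band in student.items():
            counts.setdefault(criterion, Counter())[band] += 1

    result = {}
    for criterion, counter in counts.items():
        total = sum(counter.values())
        # Bands nobody was awarded report 0.0 (Counter returns 0 for missing keys).
        result[criterion] = {b: 100.0 * counter[b] / total for b in BANDS}
    return result


# Made-up marks for four students on two criteria, for illustration only.
sample = [
    {"Criterion 1": "First", "Criterion 2": "2.1"},
    {"Criterion 1": "2.1", "Criterion 2": "2.1"},
    {"Criterion 1": "2.1", "Criterion 2": "Fail"},
    {"Criterion 1": "2.2", "Criterion 2": "3rd"},
]
percentages = band_percentages(sample)
```

Each inner dict corresponds to one stacked bar in the chart: its values sum to 100 for every criterion.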

2009-10/2010-11 Rubric Result Comparison

Chart: percentage of students (0% to 100%) awarded each classification (1st, 2.1, 2.2, 3rd, fail) on rubric criteria 1 to 5, with 2009-10 and 2010-11 shown side by side for each criterion.
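The year-on-year comparison can be read as a percentage-point change in each classification band for a given criterion. The sketch below shows one way to compute that change. The function name `band_shift` and both distributions are hypothetical and made up for illustration; they are not the figures from the chart.

```python
def band_shift(earlier, later):
    """Percentage-point change per band (later year minus earlier year).

    Both arguments map a band name to the percentage of students
    awarded that band on a single criterion.
    """
    return {band: later.get(band, 0.0) - earlier[band] for band in earlier}


# Made-up distributions for one criterion in two academic years.
criterion_1_0910 = {"1st": 10.0, "2.1": 40.0, "2.2": 30.0, "3rd": 10.0, "fail": 10.0}
criterion_1_1011 = {"1st": 15.0, "2.1": 45.0, "2.2": 25.0, "3rd": 10.0, "fail": 5.0}

shift = band_shift(criterion_1_0910, criterion_1_1011)
```

A positive value means a larger share of students reached that band in the later year. Because each year's distribution sums to 100, the changes sum to zero.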

Activity

Usefulness

• Supporting student learning

• Informing curriculum design decisions (between and in year)

Risks

• Ethics

• Negative backwash

Student satisfaction with assessment

Chart: comparison of positive and negative comments as percentage of total comments about assessment (n=10155), by category (Engagement, Feedback, Marking, Organisation, Support), with "Best things" and "Things I'd like improved" as the two series (axis 0% to 45%).

Session Feedback Survey

With thanks from your hosts: Professor Geoff Crisp, Dean Learning and Teaching, RMIT University geoffrey.crisp[at]rmit.edu.au; Dr Mathew Hillier, Institute for Teaching and Learning Innovation, University of Queensland mathew.hillier[at]uq.edu.au. Recording available: http://transformingassessment.com
