Statistical analysis and parameter selection for MapperStatistical analysis and parameter selection...

Transcript of Statistical analysis and parameter selection for MapperStatistical analysis and parameter selection...

Statistical analysis and parameter selection for Mapper

Mathieu Carriere∗, Bertrand Michel†and Steve Oudot‡

November 10, 2017

Abstract

In this article, we study the question of the statistical convergence of the 1-dimensionalMapper to its continuous analogue, the Reeb graph. We show that the Mapper is an optimalestimator of the Reeb graph, which gives, as a byproduct, a method to automatically tune itsparameters and compute confidence regions on its topological features, such as its loops andflares. This allows to circumvent the issue of testing a large grid of parameters and keeping themost stable ones in the brute-force setting, which is widely used in visualization, clustering andfeature selection with the Mapper.

Keywords: Mapper, Extended Persistence, Topological Data Analysis, Confidence Regions,Parameter Selection

1 Introduction

In statistical learning, a large class of problems can be categorized into supervised or unsupervisedproblems. For supervised learning problems, an output quantity Y must be predicted or explainedfrom the input measures X. On the contrary, for unsupervised problems there is no output quantityY to predict and the aim is to explain and model the underlying structure or distribution in thedata. In a sense, unsupervised learning can be thought of as extracting features from the data,assuming that the latter come with unstructured noise. Many methods in data sciences can bequalified as unsupervised methods, among the most popular examples are association methods,clustering methods, linear and non linear dimension reduction methods and matrix factorizationto cite a few (see for instance Chapter 14 in Friedman et al. (2001)). Topological Data Analysis(TDA) has emerged in the recent years as a new field whose aim is to uncover, understand andexploit the topological and geometric structure underlying complex. and possibly high-dimensionaldata. Most of TDA methods can thus be qualified as unsupervised. In this paper, we study arecent TDA algorithm called Mapper which was first introduced in Singh et al. (2007).

Starting from a point cloud Xn sampled from a metric space X , the idea of Mapper is to studythe topology of the sublevel sets of a function f : Xn → R defined on the point cloud. The functionf is called a filter function and it has to be chosen by the user. The construction of Mapper dependson the choice of a cover I of the image of f by open sets. Pulling back I through f gives an opencover of the domain Xn. It is then refined into a connected cover by splitting each element into its

∗[email protected]†[email protected]‡[email protected]

1

arX

iv:1

706.

0020

4v2

[cs

.CG

] 9

Nov

201

7

g

1/r10 20 30 40 50 60 70 80 90 100

10

20

30

40

50

15

25

35

45

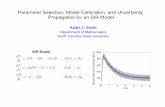

Figure 1: A collection of Mappers computed with various parameters. Left: crater dataset.Right: outputs of Mapper with various parameters. One can see that for some Mappers, (the oneswith purple squares), topological features suddenly appear and disappear. These are discretizationartifacts, that we overcome in this article by appropriately tuning the parameters.

various clusters using a clustering algorithm whose choice is left to the user. Then, the Mapper isdefined as the nerve of the connected cover, having one vertex per element, one edge per pair ofintersecting elements, and more generally, one k-simplex per non-empty (k + 1)-fold intersection.

In practice, the Mapper has two major applications. The first one is data visualization andclustering. Indeed, when the cover I is minimal, the Mapper provides a visualization of the datain the form of a graph whose topology reflects that of the data. As such, it brings additionalinformation to the usual clustering algorithms by identifying flares and loops that outline potentiallyremarkable subpopulations in the various clusters. See e.g. Yao et al. (2009); Lum et al. (2013);Sarikonda et al. (2014); Hinks et al. (2015) for examples of applications. The second applicationof Mapper deals with feature selection. Indeed, each feature of the data can be evaluated on itsability to discriminate the interesting subpopulations mentioned above (flares, loops) from the restof the data, using for instance Kolmogorov-Smirnov tests. See e.g. Lum et al. (2013); Nielson et al.(2015); Rucco et al. (2015) for examples of applications.

Unsupervised methods generally depend on parameters that need to be chosen by the user. Forinstance, the number of selected dimensions for dimension reduction methods or the number ofclusters for clustering methods have to be chosen. Contrarily to supervised problems, it is trickyto evaluate the output of unsupervised methods and thus to select parameters. This situation ishighly problematic with Mapper since, as for many TDA methods, it is not robust to outliers.This major drawback of Mapper is an important obstacle to its use in Exploratory Data Analysiswith non trivial datasets. This phenomenon is illustrated for instance in Figure 1 on a datasetthat we study further in Section 5. The only answer proposed to this drawback in the literatureconsists in selecting parameters in a range of values for which the Mapper seems to be stable—seefor instance Nielson et al. (2015). We believe that such an approach is not satisfactory because itdoes not provide statistical guarantees on the inferred Mapper.

Our main goal in this article is to provide a statistical method to tune the parameters of Mapper

2

automatically. To select parameters for Mapper, or more generally to evaluate the significance oftopological features provided by Mapper, we develop a rigorous statistical framework for the con-vergence of the Mapper. This contribution is made possible by the recent work (in a deterministicsetting) of Carriere and Oudot (2017b) about the structure and the stability of the Mapper. In thisarticle, the authors explicit a way to go from the input space to the Mapper using small perturba-tions. We build on this relation between the input space and its Mapper to show that the Mapperis itself a measurable construction. In Carriere and Oudot (2017b), the authors also show thatthe topological structure of the Mapper can actually be predicted from the cover I by looking atappropriate signatures that take the form of extended persistence diagrams. In this article, we usethis observation, together with an approximation inequality, to show that the Mapper, computedwith a specific set of parameters, is actually an optimal estimator of its continuous analogue, theso-called Reeb graph. Moreover, these specific parameters act as natural candidates to obtain areliable Mapper with no artifacts, avoiding the computational cost of testing millions of candidatesand selecting the most stable ones in the brute-force setting of many practitioners. Finally, we alsoprovide methods to assess the stability and compute confidence regions for the topological featuresof the Mapper. We believe that this set of methods open the way to an accessible and intuitiveutilization of Mapper for non expert researchers in applied topology.

Section 2 presents the necessary background on the Reeb graph and Mapper, and it also givesan approximation inequality—Theorem 2.7—for the Reeb graph with the Mapper. From thisapproximation result, we derive rates of convergences as well as candidate parameters in Section 3,and we show how to get confidence regions in Section 4. Section 5 illustrates the validity of ourparameter tuning and confidence regions with numerical experiments on smooth and noisy data.

2 Approximation of a Reeb graph with Mapper

2.1 Background on the Reeb graph and Mapper

We start with some background on the Reeb graph and Mapper. In particular, we present thespecific Mapper algorithm that we study in this article.

Reeb graph. Let X be a topological space and let f : X → R be a continuous function. Such afunction on X is called a filter function in the following. Then, we define the equivalence relation∼f as follows: for all x and x′ in X , x and x′ are in the same class (x ∼f x′) if and only if x andx′ belong to the same connected component of f−1(y), for some y in the image of f .

Definition 2.1. The Reeb graph Rf (X ) of X computed with the filter function f is the quotientspace X/ ∼f endowed with the quotient topology.

See Figure 2 for an illustration. Note that, since f is constant on equivalence classes, there isan induced map fR : Rf (X )→ R such that f = fR π, where π is the quotient map X → Rf (X ).

The topological structure of a Reeb graph can be described if the pair (X , f) is regular enough.From now on, we will assume that the filter function f : X → R is Morse-type. Morse-type functionsare generalizations of classical Morse functions that share some of their properties without havingto be differentiable (nor even defined over a smooth manifold):

Definition 2.2. A continuous real-valued function f on a compact space X is of Morse type if:

3

Rf(T )

Figure 2: Example of Reeb graph computed on the torus T with the height function f .

(i) There is a finite set Crit(f) = a1 < ... < an, called the set of critical values, such thatover every open interval (a0 = −∞, a1), ..., (ai, ai+1), ..., (an, an+1 = +∞) there is a compactand locally connected space Yi and a homeomorphism µi : Yi × (ai, ai+1) → X (ai,ai+1) s.t.∀i = 0, ..., n, f |X (ai,ai+1) = π2 µ−1

i , where π2 is the projection onto the second factor;

(ii) ∀i = 1, ..., n−1, µi extends to a continuous function µi : Yi× [ai, ai+1]→ X [ai,ai+1] – similarlyµ0 extends to µ0 : Y0 × (−∞, a1] → X (−∞,a1] and µn extends to µn : Yn × [an,+∞) →X [an,+∞);

(iii) Each levelset X t has a finitely-generated homology.

Key fact 1a: For f : X → R a Morse-type function, the Reeb graph Rf (X ) is a multigraph.

For our purposes, in the following we further assume that X is a smooth and compact subman-ifold of RD. The space of Reeb graphs computed with Morse-type functions over such spaces isdenoted R in this article.

Mapper. The Mapper is introduced in Singh et al. (2007) as a statistical version of the Reebgraph Rf (X ) in the sense that it is a discrete and computable approximation of the Reeb graphcomputed with some filter function. Assume that we observe a point cloud Xn = X1, . . . , Xn ⊂ Xwith known pairwise dissimilarities. A filter function is chosen and can be computed on each pointof Xn. The generic version of the Mapper algorithm on Xn computed with the filter function f canbe summarized as follows:

1. Cover the range of values Yn = f(Xn) with a set of consecutive intervals I1, . . . , IS whichoverlap.

2. Apply a clustering algorithm to each pre-image f−1(Is), s ∈ 1, ..., S. This defines a pullbackcover C = C1,1, . . . , C1,k1 , . . . , CS,1, . . . , CS,kS of the point cloud Xn.

3. The Mapper is then the nerve of C. Each vertex vs,k of the Mapper corresponds to one elementCs,k and two vertices vs,k and vs′,k′ are connected if and only if Cs,k ∩ Cs′,k′ is not empty.

Even for one given filter function, many versions of the Mapper algorithm can be proposed depend-ing on how one chooses the intervals that cover the image of f , and which method is used to clusterthe pre-images. Moreover, note that the Mapper can be defined as well for continuous spaces. Thedefinition is strictly the same except for the clustering step, since the connected components ofeach pre-image f−1(Is), s ∈ 1, ..., S are now well-defined. See Figure 3.

4

Mf(T#T, I)

Figure 3: Example of Mapper computed on the double torus T#T with the height function f anda cover I of its range with four open intervals.

Our version of Mapper. In this article, we focus on a Mapper algorithm that uses neighborhoodgraphs. Of course, more sophisticated versions of Mapper can be used in practice but then thestatistical analysis is more tricky. We assume that there exists a distance on Xn and that the matrixof pairwise distances is available. First, from the distance matrix we compute the 1-skeleton of aRips complex with parameter δ, i.e. the δ-neighborhood graph built on top of Xn. This objet playsthe role of an approximation of the underlying and unknown metric space X on which the dataare sampled. Second, given Yn = f(Xn) the set of filter values, we choose a regular cover of Ynwith open intervals, where no more than two intervals can intersect at a time. More precisely, weuse open intervals with same length r (apart from the first and the last one, which can have anypositive length): ∀s ∈ 2, . . . , S − 1,

r = `(Is)

where ` is the Lebesgue measure on R. The overlap g between two consecutive intervals is also afixed constant: ∀s ∈ 1, . . . , S − 1,

0 < g =`(Is ∩ Is+1)

`(Is)<

1

2.

The parameters g and r are generally called the gain and the resolution in the literature on theMapper algorithm. Finally, for the clustering step, we simply consider the connected components ofthe pre-images f−1(Is) that are induced by the 1-skeleton of the Rips complex. The correspondingMapper is denoted Mr,g,δ(Xn,Yn) or Mn for short in the following. When dealing with a continuousspace X , there is no need to compute a neighborhood graph since the connected components arewell-defined, so we let Mr,g(X , f) denote such a Mapper.

Key fact 1b: The Mapper Mr,g,δ(Xn,Yn) is a combinatorial graph.

Moreover, following Carriere and Oudot (2017b), we can define a function on the nodes of Mn

as follows.

Definition 2.3. Let v be a node of Mn, i.e. v represents a connected component of f−1(Is) forsome s ∈ 1, . . . , S. Then, we let

fI(v) = mid(Is),

where Is = Is \ (Is ∩ Is−1) ∪ (Is ∩ Is+1) and mid(Is) denotes the midpoint of the interval Is.

5

Filter functions. In practice, it is common to choose filter functions that are coordinate-independent,in order to avoid depending on solid transformations of the data like rotations or translations. Thetwo most common filters that are used in the literature are:

• the eccentricity: x 7→ supy∈Xd(x, y),

• the eigenfunctions given by a Principal Component Analysis of the data.

2.2 Extended persistence signatures and the persistence metric d∆

In this section, we introduce extended persistence and its associated metric, the bottleneck distance,which we will use later to compare Reeb graphs and Mappers.

Extended persistence. Given any graph G = (V,E) and a function attached to its nodesf : V → R, the so-called extended persistence diagram Dg(G, f) is a multiset of points in theEuclidean plane R2 that can be computed with extended persistence theory. Each of the diagrampoints has a specific type, which is either Ord0, Rel1, Ext+

0 or Ext−1 . We refer the reader toAppendix C for formal definitions and further details about extended persistence. A rigorousconnexion between the Mapper and the Reeb graph was drawn recently by Carriere and Oudot(2017b), who show how extended persistence provides a relevant and efficient framework to comparea Reeb graph with a Mapper. We summarize below the main points of this work in the perspectiveof the present article.

Topological dictionary. Given a topological space X and a Morse-type function f : X → R,there is a nice interpretation of Dg(Rf (X ), fR) in terms of the structure of Rf (X ). Orienting theReeb graph vertically so fR is the height function, we can see each connected component of thegraph as a trunk with multiple branches (some oriented upwards, others oriented downwards) andholes. Then, one has the following correspondences, where the vertical span of a feature is the spanof its image by fR:

• The vertical spans of the trunks are given by the points in Ext+0 (Rf (X ), fR);

• The vertical spans of the branches that are oriented downwards are given by the points inOrd0(Rf (X ), fR);

• The vertical spans of the branches that are oriented upwards are given by the points inRel1(Rf (X ), fR);

• The vertical spans of the holes are given by the points in Ext−1 (Rf (X ), fR).

These correspondences provide a dictionary to read off the structure of the Reeb graph from thecorresponding extended persistence diagram. See Figure 4 for an illustration.

Note that it is a bag-of-features type descriptor, taking an inventory of all the features (trunks,branches, holes) together with their vertical spans, but leaving aside the actual layout of the fea-tures. As a consequence, it is an incomplete descriptor: two Reeb graphs with the same persistencediagram may not be isomorphic.

6

Ext+0

Ord0

Rel1

Ext−1

Figure 4: Example of correspondences between topological features of a graph and points in itscorresponding extended persistence diagram. Note that ordinary persistence is unable to detectthe blue upwards branch.

Bottleneck distance. We now define the commonly used metric between persistence diagrams.

Definition 2.4. Given two persistence diagrams D,D′, a partial matching between D and D′ is asubset Γ of D ×D′ such that:

∀p ∈ D, there is at most one p′ ∈ D′ s.t. (p, p′) ∈ Γ,

∀p′ ∈ D′, there is at most one p ∈ D s.t. (p, p′) ∈ Γ.

Furthermore, Γ must match points of the same type (ordinary, relative, extended) and of the samehomological dimension only. Let ∆ be the diagonal ∆ = (x, x) | x ∈ R. The cost of Γ is:

cost(Γ) = max

maxp∈D

δD(p), maxp′∈D′

δD′(p′)

,

where

δD(p) = ‖p− p′‖∞ if ∃p′ ∈ D′ s.t. (p, p′) ∈ Γ, otherwise δD(p) = infq∈∆‖p− q‖∞,

δD′(p′) = ‖p− p′‖∞ if ∃p ∈ D s.t. (p, p′) ∈ Γ, otherwise δD′(p

′) = infq∈∆‖p′ − q‖∞.

Definition 2.5. Let D,D′ be two persistence diagrams. The bottleneck distance between D andD′ is:

d∆(D,D′) = infΓ

cost(Γ),

where Γ ranges over all partial matchings between D and D′.

Note that d∆ is only a pseudometric, not a true metric, because points lying on ∆ can be leftunmatched at no cost.

7

Definition 2.6. Let G1 = (V1, E1) and G2 = (V2, E2) be two combinatorial graphs with real-valuedfunctions f1 : V1 → R and f2 : V2 → R attached to their nodes. The persistence metric d∆ betweenthe pairs (G1, f1) and (G2, f2) is:

d∆(G1, G2) = d∆ (Dg(G1, f1),Dg(G2, f2)) .

For a Morse-type function f defined on X and for a finite point cloud Xn ⊂ X , we can thusconsider Dg(Rf (X )) = Dg(Rf (X ), fR) and Dg(Mn) = Dg(Mn, fI), with fI as in Definition 2.3.In this context the bottleneck distance d∆(Rf (X ),Mn) = d∆(Dg(Rf (X )),Dg(Mn)) is well definedand we use this quantity to assess if the Mapper Mn is a good approximation of the Reeb graphRf (X ). Moreover, note that, even though d∆ is only a pseudometric, it has been shown to a be atrue metric locally for Reeb graphs by Carriere and Oudot (2017a).

As noted in Carriere and Oudot (2017b), the choice of fI is in some sense arbitrary sinceany function defined on the nodes of the Mapper that respects the ordering of the intervals of Icarries the same information in its extended persistence diagram. To avoid this issue, Carriere andOudot (2017b) define a pruned version of Dg(Rf (X ), fR) as a canonical descriptor for the Mapper.The problem is that computing this canonical descriptor requires to know the critical values offR beforehand. Here, by considering Dg(Mn, fI) instead, the descriptor becomes computable.Moreover, one can see from the proofs in the Appendix that the canonical descriptor and itsarbitrary version actually enjoy the same rate of convergence, up to some constant.

2.3 An approximation inequality for Mapper

We are now ready to give the key ingredient of this paper to derive a statistical analysis of theMapper in Euclidean spaces. The ingredient is an upper bound on the bottleneck distance betweenthe Reeb graph of a pair (X , f) and the Mapper computed with the same filter function f and aspecific cover I of a sampled point cloud Xn ⊂ X . From now on, it is assumed that the underlyingspace X is a smooth and compact submanifold embedded in RD, and that the filter function f isMorse-type on X .

Regularity of the filter function. Intuitively, approximating a Reeb graph computed with afilter function f that has large variations is more difficult than for a smooth filter function, for somenotion of regularity that we now specify. Our result is given in a general setting by considering themodulus of continuity of f . In our framework, f is assumed to be Morse-type and thus uniformlycontinuous on the compact set X . Following for instance Section 6 in DeVore and Lorentz (1993),we define the (exact) modulus of continuity of f by

ωf (δ) = sup‖x−x′‖≤δ

|f(x)− f(x′)|

for any δ > 0, where ‖ · ‖ denotes the Euclidean norm in RD. Then ωf satisfies :

1. ωf (δ)→ ω(0) = 0 as δ → 0 ;

2. ωf is non negative and non-decreasing on R+ ;

3. ωf is subadditive : ωf (δ1 + δ2) ≤ ωf (δ1) + ωf (δ2) for any δ1, δ2 > 0;

4. ωf is continous on R+.

8

In this paper we say that a function ω defined on R+ is a modulus of continuity if it satisfies thefour properties above and we say that it is a modulus of continuity for f if, in addition, we have

|f(x)− f(x′)| ≤ ω(‖x− x′‖),

for any x, x′ ∈ X .

Theorem 2.7. Assume that X has positive reach rch and convexity radius ρ. Let Xn be a pointcloud of n points, all lying in X . Assume that the filter function f is Morse-type on X . Let ω be amodulus of continuity for f . If the three following conditions hold:

δ ≤ 1

4min rch, ρ , (1)

max|f(X)− f(X ′)| : X,X ′ ∈ Xn, ‖X −X ′‖ ≤ δ < gr, (2)

4dH(X ,Xn) ≤ δ, (3)

then the Mapper Mn = Mr,g,δ(Xn,Yn) with parameters r, g and δ is such that:

d∆ (Rf (X ),Mn) ≤ r + 2ω(δ). (4)

Remark 2.8. Using the edge-based MultiNerve Mapper—as defined in Section 8 of Carriere andOudot (2017b)—allows to weaken Assumption (2) since gr can be replaced by r in the correspondingequation, and r can be replaced by r/2 in Equation (4).

Analysis of the hypotheses. On the one hand, the scale parameter of the Rips complex couldnot be smaller than the approximation error corresponding to the Hausdorff distance between thesample and the underlying space X (Assumption (3)). On the other hand, it must be smallerthan the reach and convexity radius to provide a correct estimation of the geometry and topologyof X (Assumption (1)). The quantity gr corresponds to the minimum scale at which the filter’scodomain is analyzed. This minimum resolution has to be compared with the regularity of thefilter at scale δ (Assumption (2)). Indeed the pre-images of a filter with strong variations will bemore difficult to analyze than when the filter does not vary too fast.

Analysis of the upper bound. The upper bound given in (4) makes sense in that the approxi-mation error is controlled by the resolution level in the codomain and by the regularity of the filter.If one uses a filter with strong variations, or if the grid in the codomain has a too rough resolution,then the approximation will be poor. On the other hand, a sufficiently dense sampling is requiredin order to take r small, as prescribed in the assumptions.

Lipschitz filters. A large class of filters used for Mapper are actually Lipschitz functions andof course, in this case, one can take ω(δ) = cδ for some positive constant c. In particular, c = 1for linear projections (PCA, SVD, Laplacian or coordinate filter for instance). The distance to ameasure (DTM) is also a 1-Lipschitz function, see Chazal et al. (2011). On the other hand, themodulus of continuity of filter functions defined from estimators, e.g. density estimators, is lessobvious although still well-defined.

9

Filter approximation. In some situations, the filter function f used to compute the Mapperis only an approximation of the filter function f with which the Reeb graph is computed. In thiscontext, the pair (Xn, f) appears as an approximation of the pair (X , f). The following result isdirectly derived from Theorem 2.7 and Theorem 5.1 in Carriere and Oudot (2017b) (that derivesstability for Mappers building on the stability theorem of extended persistence diagrams proved byCohen-Steiner et al. (2009)):

Corollary 2.9. Let f : X → R be a Morse-type filter function approximating f . Assume thatAssumptions (1) and (3) of Theorem 2.7 are satisfied, and assume moreover that

maxmax|f(X)− f(X ′)|, |f(X)− f(X ′)| : X,X ′ ∈ Xn, ‖X −X ′‖ ≤ δ < gr. (5)

Then, the Mapper Mn = Mr,g,δ(Xn, f(Xn)) built on Xn with filter function f and parameters r, g, δsatisfies:

d∆(Rf (X ), Mn) ≤ 2r + 2ω(δ) + max1≤i≤n

|f(Xi)− f(Xi)|.

3 Statistical Analysis of Mapper

From now on, the set of observations Xn is assumed to be composed of n independent pointsX1, ..., Xn sampled from a probability distribution P in RD (endowed with its Borel algebra). Weassume that each point Xi comes with a filter value which is represented by a random variable Yi.Contrarily to the Xi’s, the filter values Yi’s are not necessarily independent. In the following, weconsider two different settings: in the first one, Yi = f(Xi), where the filter f is a deterministicfunction, in the second one, Yi = f(Xi) where f is an estimator of the filter function f . In thelatter case, the Yi’s are obviously dependent. We first provide the following Proposition, whoseproof is deferred to Appendix A.4, which states that computing probabilities on the Mapper makessense:

Proposition 3.1. For any fixed choice of parameters r, g, δ and for any fixed n ∈ N, the function

Φ :

(RD)n × Rn → R

(Xn,Yn) 7→ Mr,g,δ(Xn,Yn)

is measurable, where R denotes the set of Reeb graphs computed from Morse-type functions.

3.1 Statistical Model for Mapper

In this section, we study the convergence of the Mapper for a general generative model and a classof filter functions. We first introduce the generative model and next we present different settingsdepending on the nature of the filter function.

Generative model. The set of observations Xn is assumed to be composed of n independentpoints X1, ..., Xn sampled from a probability distribution P in RD. The support of P is denoted XPand is assumed to be a smooth and compact submanifold of RD with positive reach and positiveconvexity radius, as in the setting of Theorem 2.7. We also assume that 0 < diam(XP) ≤ L. Next,the probability distribution P is assumed to be (a, b)-standard for some constants a > 0 and b ≥ D,that is for any Euclidean ball B(x, t) centered on x ∈ X with radius t :

P (B(x, t)) ≥ min(1, atb).

10

This assumption is popular in the literature about set estimation (see for instance Cuevas, 2009;Cuevas and Rodrıguez-Casal, 2004). It is also widely used in the TDA literature (Chazal et al.,2015b; Fasy et al., 2014; Chazal et al., 2015a). For instance, when b = D, this assumption issatisfied when the distribution is absolutely continuous with respect to the Hausdorff measure onXP. We introduce the set Pa,b = Pa,b,κ,ρ,L which is composed of all the (a, b)-standard probabilitydistributions for which the support XP is a smooth and compact submanifold of RD with reachlarger than κ, convexity radius larger than ρ and diameter less than L.

Filter functions in the statistical setting. The filter function f : XP → R for the Reeb graphis assumed as before to be a Morse-type function. Two different settings have to be consideredregarding how the filter function is defined. In the first setting, the same filter function is used todefine the Reeb graph and the Mapper. The Mapper can be defined by taking the exact valuesof the filter function at the observation points f(X1), . . . , f(Xn). Note that this does not meanthat the function f is completely known since, in our framework, knowing f would imply to knowits domain and thus XP would be known which is of course not the case in practice. This firstsetting is referred to as the exact filter setting in the following. It corresponds to the situationswhere the Mapper algorithm is used with coordinate functions for instance. In the second setting,the filter function used for the Mapper is not available and an estimation of this filter function hasto be computed from the data. This second setting is referred to as the inferred filter setting inthe following. It corresponds to PCA or Laplacian eigenfunctions, distance functions (such as theDTM), or regression and density estimators.

Risk of Mapper. We study, in various settings, the problem of inferring a Reeb graph usingMappers and we use the metric d∆ to assess the performance of the Mapper, seen as an estimatorof the Reeb graph:

E [d∆ (Mn,Rf (XP))] ,

where Mn is computed with the exact filter f or the inferred filter f , depending on the context.

3.2 Reeb graph inference with exact filter and known generative model

We first consider the exact filter setting in the simplest situation where the parameters a and b ofthe generative model are known. In this setting, for given Rips parameter δ, gain g and resolutionr, the Mapper Mn = Mr,g,δ(Xn,Yn) is computed with Yn = f(Xn). We now tune the triple ofparameters (r, g, δ) depending on the parameters a and b. Let Vn(δn) = max|f(X) − f(X ′)| :X,X ′ ∈ Xn, ‖X −X ′‖ ≤ δn. We choose for g a fixed value in

(13 ,

12

)and we take:

δn = 8

(2log(n)

an

)1/b

and rn =Vn(δn)+

g,

where Vn(δn)+ denotes a value that is strictly larger but arbitrarily close to Vn(δn). We give belowa general upper bound on the risk of Mn with these parameters, which depends on the regularity ofthe filter function and on the parameters of the generative model. We show a uniform convergenceover a class of possible filter functions. This class of filters necessarily depends on the support ofP, so we define the class of filters for each probability measure in Pa,b. For any P ∈ Pa,b, we letF(P, ω) denote the set of filter functions f : XP → R such that f is Morse-type on XP with ωf ≤ ω.

11

Proposition 3.2. Let ω be a modulus of continuity for f such that ω(x)/x is a non-increasingfunction on R+. For n large enough, the Mapper computed with parameters (rn, g, δn) definedbefore satisfies

supP∈Pa,b

E

[sup

f∈F(P,ω)d∆ (Rf (XP),Mn)

]≤ C ω

(2 · 8ba

log(n)

n

)1/b

where the constant C only depends on a, b, and on the geometric parameters of the model.

Assuming that ω(x)/x is non-increasing is not a very strong assumption. This property issatisfied in particular when ω is concave, as in the case of concave majorant (see for instanceSection 6 in DeVore and Lorentz (1993)). As expected, we see that the rate of convergence ofthe Mapper to the Reeb graph directly depends on the regularity of the filter function and onthe parameter b which roughly represents the intrinsic dimension of the data. For Lipschitz filterfunctions, the rate is similar to the one for persistence diagram inference Chazal et al. (2015b),namely it corresponds to the one of support estimation for the Hausdorff metric (see for instanceCuevas and Rodrıguez-Casal (2004)) and Genovese et al. (2012a)). In the other cases where thefilters only admit a concave modulus of continuity, we see that the “distortion” created by the filterfunction slows down the convergence of the Mapper to the Reeb graph.

We now give a lower bound that matches with the upper bound of Proposition 3.2.

Proposition 3.3. Let ω be a modulus of continuity for f . Then, for any estimator Rn of Rf (XP),we have

supP∈Pa,b

E

[sup

f∈F(P,ω)d∆

(Rf (XP), Rn

)]≥ C ω

(1

an

) 1b

,

where the constant C only depends on a, b and on the geometric parameters of the model.

Propositions 3.2 and 3.3 together show that, with the choice of parameters given before, Mn isminimax optimal up to a logarithmic factor log(n) inside the modulus of continuity. Note that thelower bound is also valid whether or not the coefficients a and b and the filter function f and itsmodulus of continuity are given.

3.3 Reeb graph inference with exact filter and unknown generative model

We still assume that the exact values Yn = f(Xn) of the filter on the point could can be computedand that at least an upper bound on the modulus of continuity for the filter is known. However,the parameters a and b are not assumed to be known anymore. We adapt a subsampling approachproposed by Fasy et al. (2014). As before, for given Rips parameter δ, gain g and resolution r, theMapper Mn = Mr,g,δ(Xn,Yn) is computed with Yn = f(Xn).

We introduce the sequence sn = n(logn)1+β

for some fixed value β > 0. Let Xsnn be an arbitrary

subset of Xn that contains sn points. We tune the triple of parameters (r, g, δ) as follows: we choosefor g a fixed value in

(13 ,

12

)and we take:

δn = dH(Xsnn ,Xn) and rn =Vn(δn)+

g, (6)

where Vn is defined as in Section 3.2.

12

Proposition 3.4. Let ω be a modulus of continuity for f such that x 7→ ω(x)/x is a non-increasingfunction. Then, using the same notations as in the previous section, the Mapper Mn computed withparameters (rn, g, δn) defined before satisfies

supP∈Pa,b

E

[sup

f∈F(P,ω)d∆ (Rf (XP),Mn)

]≤ C ω

(C ′log(n)2+β

n

)1/b

,

where the constants C,C ′ only depends on a, b, and on the geometric parameters of the model.

Up to logarithmic factors inside the modulus of continuity, we find that this Mapper is stillminimax optimal over the class Pa,b by Proposition 3.3.

3.4 Reeb graph inference with inferred filter and unknown generative model

One of the nice properties of Mapper is that it can easily be computed with any filter function,including estimated filter functions such as PCA eigenfunctions, eccentricity functions, DTM func-tions, Laplacian eigenfunctions, density estimators, regression estimators, and many other filtersdirectly estimated from the data. In this section, we assume that the true filter f is unknown butcan be estimated from the data using an estimator f . Without loss of generality we assume thatboth f and f are defined on RD. As before, parameters a and b are not assumed to be known andwe have to tune the triple of parameters (rn, g, δn).

In this context, the quantity Vn cannot be computed as before because there is no direct accessto the values of f : we only know an estimation f of it. However, in many cases, an upper boundω1 on the modulus of continuity of f is known, which makes possible the tuning of the parameters.For instance, PCA (and kernel) projectors, eccentricity functions, DTM functions (see Chazal et al.(2011)) are all 1-Lipschitz functions, and Corollary 3.5 below can be applied.

Let Vn(δn) = max|f(X)− f(X ′)| : X,X ′ ∈ Xn, ‖X −X ′‖ ≤ δn, and let ω1 be a modulus ofcontinuity for f . Let

rn =maxω1(δn), Vn(δn)+

g. (7)

Following the lines of the proof of Proposition 3.4 and applying Corollary 2.9, we obtain:

Corollary 3.5. Let f : RD → R be a Morse-type filter function and let f : RD → R be aMorse-type estimator of f . Let ω1 (resp. ω2) be a modulus of continuity for f (resp. f). Let ω =maxω1, ω2 such that x 7→ ω(x)/x is a non-increasing function. Let also Mn = Mrn,g,δn(Xn, f(Xn))

be the Mapper built on Xn with function f and parameters g, δn as in Equation (6), and rn as inEquation (7). Then, Mn satisfies

E[d∆

(Rf (XP), Mn

)]≤ Cω

(C ′log(n)2+β

n

) 1b

+ E[

max1≤i≤n

|f(Xi)− f(Xi)|],

where the constants C,C ′ only depends on a, b, and on the geometric parameters of the model.

Note that ω1 has to be known to compute Mn in Corollary 3.5 since it appears in the definitionof rn. On the contrary, ω2—and thus ω—are not required to tune the parameters.

13

PCA eigenfunctions. In the setting of this article, the measure µ has a finite second moment.Following Biau and Mas (2012), we define the covariance operator Γ(·) = E(〈X, ·〉X) and we let Πk

denote the orthogonal projection onto the space spanned by the k-th eigenvector of Γ. In practice,we consider the empirical version of the covariance operator

Γn(·) =1

n

n∑i=1

〈Xi, ·〉Xi

and the empirical projection Πk onto the space spanned by the k-th eigenvector of Γn. Accordingto Biau and Mas (2012)(see also Blanchard et al. (2007); Shawe-Taylor et al. (2005)), we have

E[‖Πk − Πk‖∞

]= O

(1√n

).

This, together with Corollary 3.5 and the fact that both Πk and Πk are 1-Lipschitz, gives that therate of convergence of the Mapper of Πk(Xn) computed with parameters δn and g as in Equation (6),and rn as in Equation (7), i.e. rn = g−1δ+

n , satisfies

E[d∆

(RΠk(XP),Mrn,g,δn(Xn, Πk(Xn))

)].

(log(n)2+β

n

)1/b

∨ 1√n.

Hence, the rate of convergence of Mapper is not deteriorated by using Πk instead of Πk if theintrinsic dimension b of the support of µ is at least 2.

The distance to measure. It is well known that TDA methods may fail completely in thepresence of outliers. To address this issue, Chazal et al. (2011) introduced an alternative distancefunction which is robust to noise, the distance-to-a-measure (DTM). A similar analysis as with thePCA filter can be carried out with the DTM filter using the rates of convergence proven in Chazalet al. (2016b).

4 Confidence sets for Reeb signatures

4.1 Confidence sets for extended persistence diagrams

In practice, computing a Mapper Mn and its signature Dg(Mn, fI) is not sufficient: we need toknow how accurate these estimations are. One natural way to answer this problem is to provide aconfidence set for the Mapper using the bottleneck distance. For α ∈ (0, 1), we look for some valueηn,α such that

P (d∆(Mn,Rf (XP)) ≥ ηn,α) ≤ αor at least such that

lim supn→∞

P (d∆(Mn,Rf (XP)) ≥ ηn,α) ≤ α.

LetMα = R ∈ R : d∆(Mn,R) ≤ α

be the closed ball of radius α in the bottleneck distance and centered at the Mapper Mn in thespace of Reeb graphs R. Following Fasy et al. (2014), we can visualize the signatures of the points

14

belonging to this ball in various ways. One first option is to center a box of side length 2α at eachpoint of the extended persistence diagram of Mn—see the right columns of Figure 5 and Figure 6for instance. An alternative solution is to visualize the confidence set by adding a band at (vertical)distance 2α from the diagonal (the bottleneck distance being defined for the `∞ norm). The pointsoutside the band are then considered as significant topological features, see Fasy et al. (2014) formore details.

Several methods have been proposed in Fasy et al. (2014) and Chazal et al. (2014) to defineconfidence sets for persistence diagrams. We now adapt these ideas to provide confidence sets forMappers. Except for the bottleneck bootstrap (see further), all the methods proposed in these twoarticles rely on the stability results for persistence diagrams, which say that persistence diagramsequipped with the bottleneck distance are stable under Hausdorff or Wasserstein perturbations ofthe data. Confidence sets for diagrams are then directly derived from confidence sets in the samplespace. Here, we follow a similar strategy using Theorem 2.7, as explained in the next section.

4.2 Confidence sets derived from Theorem 2.7

In this section, we always assume that an upper bound ω on the exact modulus of continuityωf of the filter function is known. We start with the following remark: if we can take δ of theorder of dH(XP,Xn) in Theorem 2.7 and if all the conditions of the theorem are satisfied, thend∆(Mn,Rf (XP)) can be bounded in terms of ω(dH(XP,Xn)). This means that we can adapt themethods of Fasy et al. (2014) to Mappers.

Known generative model. Let us first consider the simplest situation where the parameters aan b are also known. Following Section 3.2, we choose for g a fixed value in

(13 ,

12

)and we take

δn = 8

(2log(n)

an

)1/b

and rn =Vn(δn)+

g,

where Vn is defined as in Section 3.2. Let εn = dH(XP,Xn). As shown in the proof of Proposition 3.2(see Appendix A.5), for n large enough, Assumption (1) and (2) are always satisfied and then

P (d∆(Mn,Rf (XP)) ≥ η) ≤ P(δn ≥ ω−1

(η

g−1 + 2

)).

Consequently,

P (d∆(Mn,Rf (XP)) ≥ η) ≤ P (d∆(Mn,Rf (XP)) ≥ η ∩ εn ≤ 4δn) + P (εn > 4δn)

≤ Iω(δn)≥ g1+2g

η + min

1,

2b

2log(n)n

= Φn(η).

where Φn depends on the parameters of the model (or some bounds on these parameters) whichare here assumed to be known. Hence, given a probability level α, one has:

P(d∆(Mn,Rf (XP)) ≥ Φ−1

n (α))≤ α.

15

Unknown generative model. We now assume that a and b are unknown. To compute confi-dence sets for the Mapper in this context, we approximate the distribution of dH(XP,Xn) using thedistribution of dH(Xsnn ,Xn) conditionally to Xn. There are N1 =

(nsn

)subsets of size sn inside Xn,

so we let X1sn , . . . ,X

N1sn denote all the possible configurations. Define

Ln(t) =1

N1

N1∑k=1

IdH(Xksn ,Xn)>t.

Let s be the function on N defined by s(n) = sn and let s2n = s(s(n)). There are N2 =

(ns2n

)subsets

of size s2n inside Xn. Again, we let Xks2n , 1 ≤ k ≤ N2, denote these configurations and we also

introduce

Fn(t) =1

N2

N2∑k=1

IdH

(Xks2n,Xsn

)>t.

Proposition 4.1. Let η > 0. Then, one has the following confidence set:

P (d∆(Rf (XP),Mn) ≥ η) ≤ Fn(

1

4ω−1

(g

1 + 2gη

))+ Ln

(1

4ω−1

(g

1 + 2gη

))+ o

(snn

)1/4.

Both Fn and Ln can be computed in practice, or at least approximated using Monte Carloprocedures. The upper bound on P (d∆(Rf (XP),Mn) ≥ η) then provides an asymptotic confidenceregion for the persistence diagram of the Mapper Mn, which can be explicitly computed in practice.See the green squares in the first row of Figure 5. The main drawback of this approach is thatit requires to know an upper bound on the modulus of continuity ω and, more importantly, thenumber of observations has to be very large, which is not the case on our examples in Section 5.

Modulus of continuity of the filter function. As shown in Proposition 4.1, the modulus ofcontinuity of the filter function is a key quantity to describe the confidence regions. Inferring themodulus of continuity of the filter from the data is a tricky problem. Fortunately, in practice, evenin the inferred filter setting, the modulus of continuity of the function can be upper bounded inmany situations. For instance, projections such as PCA eigenfunctions and DTM functions are1-Lipschitz.

4.3 Bottleneck Bootstrap

The two methods given before both require an explicit upper bound on the modulus of continuityof the filter function. Moreover, these methods both rely on the approximation result Theorem 2.7,which often leads to conservative confidence sets. An alternative strategy is the bottleneck boot-strap introduced in Chazal et al. (2014), and which we now apply to our framework.

The bootstrap is a general method for estimating standard errors and computing confidenceintervals. Let Pn be the empirical measure defined from the sample (X1, Y1), . . . , (Xn, Yn). Let(X∗1 , Y

∗1 ) . . . , (X∗n, Y

∗n ) be a sample from Pn and let also M∗n be the random Mapper defined from

this sample. We then take for ηn,α the quantity η∗n,α defined by

P(d∆(M∗n,Mn) > η∗n,α |X1, . . . , Xn

)= α. (8)

16

Note that η∗n,α can be easily estimated with Monte Carlo procedures. It has been shown in Chazalet al. (2014) that the bottleneck bootstrap is valid when computing the sublevel sets of a densityestimator. The validity of the bottleneck bootstrap has not been proven for the extended persistencediagram of any distance function. For Mapper, it would require to write d∆(M∗n,Mn) in terms ofthe distance between the extrema of the filter function and the ones of the interpolation of the filterfunction on the Rips. We leave this problem open in this article.

Extension of the analysis. As pointed out in Section 2.1, many versions of the discrete Mapper

exist in the literature. One of them, called the edge-based MultiNerve Mapper M4r,g,δ(Xn,Yn), is

described in Section 8 of (Carriere and Oudot (2017b)). The main advantage of this version is thatit allows for finer resolutions than the usual Mapper while remaining fast to compute. Our analysiscan actually handle this version as well by replacing gr by r in Assumption (2) of Theorem 2.7—see Remark 2.8, and changing constants accordingly in the proofs. In particular, this improves theresolution rn in Equation (6) since g−1Vn(δn)+ becomes Vn(δn)+. Hence, we use this edge-basedversion in Section 5, where this improvement on the resolution rn allows us to compensate for thelow number of observations in some datasets.

5 Numerical experiments

In this section, we provide few examples of parameter selections and confidence regions (which areunion of squares in the extended persistence diagrams) obtained with bottleneck bootstrap. Theinterpretation of these regions is that squares that intersect the diagonal, which are drawn in pinkcolor, represent topological features in the Mappers that may be horizontal or artifacts due to thecover, and that may not be present in the Reeb graph. We show in Figure 5 various Mappers (ineach node of the Mappers, the left number is the cluster ID and the right number is the numberof observations in that cluster) and 85 percent confidence regions computed on various datasets.All δ parameters and resolutions were computed with Equation (6) (the δ parameters were alsoaveraged over N = 100 subsamplings with β = 0.001), and all gains were set to 40%. The code weused is expected to be added in the next release of The GUDHI Project (2015), and should then beavailable soon. The confidence regions were computed by bootstrapping data 100 times. Note thatcomputing confidence regions with Proposition 4.1 is possible, but the numbers of observations inall of our datasets were too low, leading to conservative confidence regions that did not allow forinterpretation.

5.1 Mappers and confidence regions

Synthetic example. We computed the Mapper of an embedding of the Klein bottle into R4 with10,000 points with the height function. In order to illustrate the conservativity of confidence regionscomputed with Proposition 4.1, we also plot these regions for an embedding with 10,000,000 pointsusing the fact that the height function is 1-Lipschitz. Corresponding squares are drawn in greencolor. Their very large sizes show that Proposition 4.1 requires a very large number of observationsin practice. See the first row of Figure 5.

3D shapes. We computed the Mapper of an ant shape and a human shape from Chen et al.(2009) embedded in R3 (with 4,706 and 6,370 points respectively) Both Mappers were computed

17

with the height function. One can see that the confidence squares for the features that are almosthorizontal (such as the small branches in the Mapper of the ant) intersect indeed the diagonal. Seethe second and third rows of Figure 5.

Miller-Reaven dataset. The first dataset comes from the Miller-Reaven diabetes study thatcontains 145 observations of patients suffering or not from diabete. Observations were mappedinto R5 by computing various medical features. Data can be obtained in the “locfit” R-package.In Reaven and Miller (1979), the authors identified two groups of diseases with the projection pur-suit method, and in Singh et al. (2007), the authors applied Mapper with hand-crafted parametersto get back this result. Here, we normalized the data to zero mean and unit variance, and weobtained the two flares in the Mapper computed with the eccentricity function. Moreover, theseflares are at least 85 percent sure since the confidence squares on the corresponding points in theextended persistence diagrams do not intersect the diagonal. See the first row of Figure 6.

COIL dataset. The second dataset is an instance of the 16,384-dimensional COIL dataset Neneet al. (1996). It contains 72 observations, each of which being a picture of a duck taken at a specificangle. Despite the low number of observations and the large number of dimensions, we managedto retrieve the intrinsic loop lying in the data using the first PCA eigenfunction. However, the lownumber of observations made the bootstrap fail since the confidence squares computed around thepoints that represent this loop in the extended persistence diagram intersect the diagonal. See thesecond row of Figure 6.

5.2 Noisy data

Denoising Mapper. An important drawback of Mapper is its sensitivity to noise and outliers.See the crater dataset in Figure 7, for instance. Several answers have been proposed for recoveringthe correct persistence homology from noisy data. The idea is to use an alternative filtration ofsimplical compexes instead of the Rips filtration. A first option is to consider the upper level setsof a density estimator rather then the distance to the sample (see Section 4.4 in Fasy et al. (2014)).Another solution is to consider the sublevel sets of the DTM and apply persistence homologyinference in Chazal et al. (2014).

Crater dataset. To handle noise in our crater dataset, we simply smoothed the dataset bycomputing the empirical DTM with 10 neighbors on each point and removing all points withDTM less than 40 percent of the maximum DTM in the dataset. Then we computed the Mapperwith the height function. One can see that all topological features in the Mapper that are mostlikely artifacts due to noise (like the small loops and connected components) have correspondingconfidence squares that intersect the diagonal in the extended persistence diagram. See Figure 7.

6 Conclusion

In this article, we provided a statistical analysis of the Mapper. Namely, we proved the factthat the Mapper is a measurable construction in Proposition 3.1, and we used the approximationTheorem 2.7 to show that the Mapper is a minimax optimal estimator of the Reeb graph in variouscontexts—see Propositions 3.2, 3.3 and 3.4—and that corresponding confidence regions can be

18

0:294

1:349

2:375

3:396

4:438

5:464

6:497

7:598

8:761

9:673

10:279

11:270

13:240

12:235

14:234

15:221

17:234

16:21219:201

18:253

20:218

21:204

22:204

23:209

24:207

25:224

27:221

26:212

28:185

29:231

30:221

31:223

33:231

32:24334:237

35:236

37:232

36:239

39:264

38:275

40:323

41:311

42:746

43:621

44:480

45:440

46:388

47:386

48:343

49:295

50:317

−4 −2 0 2

−2

02

4

birth

deat

h

0:220

2:68

1:2203:68

5:33

4:336:37

7:37

9:47

8:48

10:48

11:48

12:48

13:48

14:48

15:48

17:67

16:6718:62

19:62

20:98

21:98

22:111

23:111

24:74

25:74

26:73

27:73

28:137

29:96

30:96

31:86

34:78

32:14

35:78

33:13

36:75

37:100

38:91

39:89

40:181

42:5141:51

44:205

45:4243:43

48:30

47:168

46:30

51:39

49:166

50:40

52:151

54:55 53:56

55:112

56:5357:53

60:70

58:7559:75

61:217

62:216

63:295

64:277

65:24366:226

67:24268:219

69:20170:21371:20272:260

−1.0 −0.8 −0.6 −0.4 −0.2 0.0

−0.

4−

0.2

0.0

0.2

0.4

0.6

0.8

birth

deat

h

0:30

1:29

2:28

3:32

4:31

8:30

5:19 9:30

6:167:2715:26

12:26

13:25

10:16

14:31

11:36

16:88

19:26

21:30

17:32

18:30

20:115

22:30

26:28

25:24

23:119

24:29

31:28

28:123

27:28

29:23

30:29

36:29

32:146

33:29

34:31

35:30

38:140

39:30

41:25

37:28

40:28

46:29

43:145

45:30

42:27

44:25

47:24

48:200

51:24

49:32

50:24

56:28

52:161

55:25

54:26

53:24

59:163

57:26

61:29

58:26

60:33

64:31

66:23

62:192

63:38

65:38

67:165

69:33

70:27

71:32

68:24

75:165

76:25

72:31

73:28

74:29

83:36

80:35

82:37

79:195

81:37

77:36

84:70

78:32

85:133

89:160

90:25

88:32

91:35

92:34

87:239

86:3

93:28

94:197 96:33

98:155

97:25

100:42

99:42

95:42

102:207

103:48

101:560

105:713

106:48

104:28

108:680

107:3

109:687

110:528111:56

112:575113:48

114:546

116:137115:15

−0.4 −0.2 0.0 0.2

−0.

2−

0.1

0.0

0.1

0.2

0.3

0.4

birth

deat

h

Figure 5: Mappers computed with automatic tuning (middle) and 85 percent confidence regionsfor their topological features (right) are provided for an embedding of the Klein Bottle into R4

(first row), a 3D human shape (second row) and a 3D ant shape (third row).

19

0:103

1:97

2:17

4 5 6 7 8 9

45

67

89

birth

deat

h

0:30

1:4

2:4

3:3

4:3

5:3

6:3

8:4

7:4

9:29

−20 −10 0 10 20

−20

−10

010

20

birth

deat

h

Figure 6: Mappers computed with automatic tuning (middle) and 85 percent confidence regionsfor their topological features (right) are provided for the Reaven-Miller dataset (first row) and theCOIL dataset (second row).

0:60

3:48

4:3

1:45

2:80

6:39

7:9

5:67

8:58

9:21

10:22

11:22

15:2

17:1

14:2

12:4

16:87

13:5

19:19718:7

21:335

20:2

22:412

23:405

24:326 25:138

26:118

28:113

27:133

29:133

30:161

32:163

33:166

31:8

34:74

35:196

36:147

37:119

39:159

38:127

40:62

43:158

41:165

42:5

44:167

45:166

46:196

47:189

48:496

49:568

50:573

51:557

52:487

54:324

53:255:2

56:103

60:1

61:3

57:1

63:3

58:4

59:22

64:59

62:12

65:42

67:97

66:70

68:48

69:95

−2 0 2 4 6 8 10

−2

02

46

810

12

birth

deat

h

Figure 7: Mappers computed with automatic tuning (middle) and 85 percent confidence regionsfor their topological features (right) are provided for a a noisy crater in the Euclidean plane.

20

computed—see Proposition 4.1 and Section 4.3. Along the way, we derived rules of thumb toautomatically tune the parameters of the Mapper with Equation (6). Finally, we provided fewexamples of our methods on various datasets in Section 5.

Future directions. We plan to investigate several questions for future work.

• We will work on adapting results from Chazal et al. (2014) to prove the validity of bootstrapmethods for computing confidence regions on the Mapper, since we only used bootstrapmethods empirically in this article.

• We believe that using weighted Rips complexes Buchet et al. (2015) instead of the usual Ripscomplexes would improve the quality of the confidence regions on the Mapper features, andwould probably be a better way to deal with noise that our current solution.

• We plan to adapt our statistical setting to the question of selecting variables, which is one ofthe main applications of the Mapper in practice.

A Proofs

A.1 Preliminary results

In order to prove the results of this article, we need to state several preliminary definitions and the-orems. All of them can be found, together with their proofs, in Dey and Wang (2013) and Carriereand Oudot (2017b). In this section, we let Xn ⊂ X be a point cloud of n points sampled on asmooth and compact submanifold X embedded in RD, with positive reach rch and convexity radiusρ. Let f : X → R be a Morse-type filter function, I be a minimal open cover of the range of fwith resolution r and gain g, |Ripsδ(Xn)| denote a geometric realization of the Rips complex builton top of Xn with parameter δ, and fPL : |Ripsδ(Xn)| → R be the piecewise-linear interpolation off on the simplices of Ripsδ(Xn).

Definition A.1. Let G = (Xn, E) be a graph built on top of Xn. Let e = (X,X ′) ∈ E be an edge ofG, and let I(e) be the open interval (minf(X), f(X ′),maxf(X), f(X ′)). Then e is said to beintersection-crossing if there is a pair of consecutive intervals I, J ∈ I such that ∅ 6= I ∩ J ⊆ I(e).

Theorem A.2 (Lemma 8.1 and 8.2 in Carriere and Oudot (2017b)). Let Rips1δ(Xn) denote the

1-skeleton of Ripsδ(Xn). If Rips1δ(Xn) has no intersection-crossing edges, then Mr,g,δ(Xn, f(Xn))

and Mr,g(|Ripsδ(Xn)|, fPL) are isomorphic as combinatorial graphs.

Theorem A.3 (Theorem 7.2 and 5.1 in Carriere and Oudot (2017b)). Let f : X → R be a Morse-type function. Then, we have the following inequality between extended persistence diagrams:

d∆(Dg(Rf (X ), fR),Dg(Mr,g(X , f), fI)) ≤ r. (9)

Moreover, given another Morse-type function f : X → R, we have the following inequality:

d∆(Dg(Mr,g(X , f), fI),Dg(Mr,g(X , f), fI)) ≤ r + ‖f − f‖∞. (10)

Theorem A.4 (Theorem 4.6 and Remark 2 in Dey and Wang (2013) and Theorem 8.3 in Carriereand Oudot (2017b)). If 4dH(X ,Xn) ≤ δ ≤ minrch/4, ρ/4, then:

d∆(Dg(Rf (X ), fR),Dg(RfPL(|Ripsδ(Xn)|), fPLR )) ≤ 2ω(δ).

21

Note that the original version of this theorem is only proven for Lipschitz functions in Dey andWang (2013), but it extends at no cost to functions with modulus of continuity.

A.2 Proof of Theorem 2.7

Let |Ripsδ(Xn)| denote a geometric realization of the Rips complex built on top of Xn with parameterδ. Moreover, let fPL : |Ripsδ(Xn)| → R be the piecewise-linear interpolation of f on the simplicesof Ripsδ(Xn), whose 1-skeleton is denoted by Rips1

δ(Xn). Since (|Ripsδ(Xn)|, fPL) is a metric space,we also consider its Reeb graph RfPL(|Ripsδ(Xn)|), with induced function fPL

R , and its Mapper

Mr,g(|Ripsδ(Xn)|, fPL), with induced function fPLI . See Figure 8. Then, the following inequalities

lead to the result:

d∆(Rf (X ),Mn) = d∆ (Dg(Rf (X ), fR),Dg(Mn, fI))

= d∆

(Dg(Rf (X ), fR),Dg(Mr,g(|Ripsδ(Xn)|, fPL), fPL

I ))

(11)

≤ d∆

(Dg(Rf (X ), fR),Dg(RfPL(|Ripsδ(Xn)|), fPL

R ))

+ d∆

(Dg(RfPL(|Ripsδ(Xn)|), fPL

R ),Dg(Mr,g(|Ripsδ(Xn)|, fPL), fPLI ))

(12)

≤ 2ω(δ) + r. (13)

Let us prove every (in)equality:

Equality (11). Let X1, X2 ∈ Xn such that (X1, X2) is an edge of Rips1δ(Xn) i.e. ‖X1−X2‖ ≤ δ.

Then, according to (2): |f(X1) − f(X2)| < gr. Hence, there is no s ∈ 1, . . . , S − 1 such thatIs ∩ Is+1 ⊆ [minf(X1), f(X2),maxf(X1), f(X2)]. It follows that there are no intersection-crossing edges in Rips1

δ(Xn). Then, according to Theorem A.2, there is a graph isomorphismi : Mn = Mr,g,δ(Xn, f(Yn)) → Mr,g(|Ripsδ(Xn)|, fPL). Since fI = fPL

I i by definition of fI andfPLI , the equality follows.

Inequality (12). This inequality is just an application of the triangle inequality.

Inequality (13). According to (1), we have δ ≤ minrch/4, ρ/4. According to (3), we alsohave δ ≥ 4dH(X ,Xn). Hence, we have

d∆(Dg(Rf (X ), fR),Dg(RfPL(|Ripsδ(Xn)|), fPLR )) ≤ 2ω(δ),

according to Theorem A.4. Moreover, we have

d∆(Dg(RfPL(|Ripsδ(Xn)|), fPLR ),Dg(Mr,g(|Ripsδ(Xn)|, fPL), fPL

I )) ≤ r,

according to Equation (9).

A.3 Proof of Corollary 2.9

Let |Ripsδ(Xn)| denote a geometric realization of the Rips complex built on top of Xn with parameterδ. Moreover, let fPL : |Ripsδ(Xn)| → R be the piecewise-linear interpolation of f on the simplicesof Ripsδ(Xn), whose 1-skeleton is denoted by Rips1

δ(Xn). Similarly, let fPL be the piecewise-linear interpolation of f on the simplices of Rips1

δ(Xn). As before, since (|Ripsδ(Xn)|, fPL) and

22

f fR

fPL fPLR

fPLI

fI

Figure 8: Examples of the function defined on the original space (left column), its induced functiondefined on the Reeb graph (middle column) and the function defined on the Mapper (right column).Note that the Mapper computed from the geometric realization of the Rips complex (middle row,right) is not isomorphic to the standard Mapper (last row), since there are two intersection-crossingedges in the Rips complex (outlined in orange).

23

(|Ripsδ(Xn)|, fPL) are metric spaces, we also consider their Mappers Mr,g(|Ripsδ(Xn)|, fPL) and

Mr,g(|Ripsδ(Xn)|, fPL). Then, the following inequalities lead to the result:

d∆(Rf (X ), Mn) ≤ d∆(Rf (X ),Mn) + d∆(Mn, Mn) by the triangle inequality

= d∆(Rf (X ),Mn) + d∆(Mr,g(|Ripsδ(Xn)|, fPL),Mr,g(|Ripsδ(Xn)|, fPL)) (14)

≤ r + 2ω(δ) + d∆(Mr,g(|Ripsδ(Xn)|, fPL),Mr,g(|Ripsδ(Xn)|, fPL)) by Theorem 2.7

≤ r + 2ω(δ) + r + ‖fPL − fPL‖∞ by Equation (10)

= 2r + 2ω(δ) + max|f(X)− f(X)| : X ∈ Xn

Let us prove Equality (14). By definition of r, there are no intersection-crossing edges for bothf and f . According to Theorem A.2, Mr,g(|Ripsδ(Xn)|, fPL) and Mn are isomorphic and similarly

for Mr,g(|Ripsδ(Xn)|, fPL) and Mn. See also the proof of Equality (11).

A.4 Proof of Proposition 3.1

We check that not only the topological signature of the Mapper but also the Mapper itself is ameasurable object and thus can be seen as an estimator of a target Reeb graph. This problemis more complicated than for the statistical framework of persistence diagram inference, for whichthe existing stability results give for free that persistence estimators are measurable for adequatesigma algebras.

Let R = R ∪ +∞ denote the extended real line. Given a fixed integer n ≥ 1, let C[n] be theset of abstract simplicial complexes over a fixed set of n vertices. We see C[n] as a subset of the

power set 22[n] , where [n] = 1, · · · , n, and we implicitly identify 2[n] with the set [2n] via the mapassigning to each subset i1, · · · , ik the integer 1 +

∑kj=1 2ij−1. Given a fixed parameter δ > 0, we

define the application

Φ1 :

(RD)n × Rn → C[n] × R2[n]

(Xn,Yn) 7→ (K, fK)

where K is the abstract Rips complex of parameter δ over the n labeled points in RD, minus theintersection-crossing edges and their cofaces, and where fK is a function defined by:

fK :

2[n] → R

σ 7→

maxi∈σ Yi if σ ∈ K+∞ otherwise.

The space (RD)n × Rn is equipped with the standard topology, denoted by T1, inherited from

R(D+1)n. The space C[n]×R2[n] is equipped with the product, denoted by T2 hereafter, of the discretetopology on C[n] and the topology induced by the extended distance d(f, g) = max|f(σ)− g(σ)| :

σ ∈ 2[n], f(σ) or g(σ) 6= +∞ on R2[n] . In particular, K 6= K ′ ⇒ d(fK , fK′) = +∞.Note that the map (Xn,Yn) 7→ K is piecewise-constant, with jumps located at the hypersurfaces

defined by ‖Xi − Xj‖2 = δ2 (for combinatorial changes in the Rips complex) or Yi = cst ∈End((r, g)) (for changes in the set of intersection-crossing edges) in (RD)n ×Rn, where End((r, g))

24

denotes the set of endpoints of elements of the gomic (r, g). We can then define a finite measurablepartition (C`)`∈L of (RD)n × Rn whose boundaries are included in these hypersurfaces, and suchthat (Xn,Yn) 7→ K is constant over each set C`. As a byproduct, we have that (Xn,Yn) 7→ f iscontinuous over each set C`.

We now define the operator

Φ2 :

C[n] × R2[n] → A

(K, f) 7→ (|K|, fPL)

where A denotes the class of topological spaces filtered by Morse-type functions, and where fPL isthe piecewise-linear interpolation of f on the geometric realization |K| of K. For a fixed simplicialcomplex K, the extended persistence diagram of the lower-star filtration induced by f and ofthe sublevel sets of fPL are identical—see e.g. Morozov (2008), therefore the map Φ2 is distance-preserving (hence continuous) in the pseudometrics d∆ on the domain and codomain. Since thetopology T2 on C[n] × R2n is a refinement1 of the topology induced by d∆, the map Φ2 is also

continuous when C[n] × R2[n] is equipped with T2.Let now Φ3 : A → R map each Morse-type pair (X , f) to its Mapper Mf (X , I), where I = (r, g)

is the gomic induced by r and g. Note that, similarly to Φ1, the map Φ3 is piecewise-constant,since combinatorial changes in Mf (X , I) are located at the regions Crit(f) ∩ End(I) 6= ∅. Hence,Φ3 is measurable in the pseudometric d∆. For more details on Φ3, we refer the reader to Definition7.6 in Carriere and Oudot (2017b).

Moreover, MfPL(|K|, I) is isomorphic to Mr,g,δ(Xn,Yn) by Theorem A.2 since all intersection-crossing edges were removed in the construction of K. Hence, the map Φ defined by Φ = Φ3Φ2Φ1

is a measurable map that sends (Xn,Yn) to Mr,g,δ(Xn,Yn).

A.5 Proof of Proposition 3.2

We fix some parameters a > 0 and b ≥ 1. First note that Assumption (2) is always satisfiedby definition of rn. Next, there exists n0 ∈ N such that for any n ≥ n0, Assumption (1) issatisfied because δn → 0 and ω(δn) → 0 as n → +∞. Moreover, n0 can be taken the same for allf ∈ ⋃P∈P(a,b)F(P, ω).

Let εn = dH(X ,Xn). Under the (a, b)-standard assumption, it is well known that (see forinstance Cuevas and Rodrıguez-Casal (2004); Chazal et al. (2015b)):

P (εn ≥ u) ≤ min

1,

4b

aube−a(

u2 )bn

, ∀u > 0. (15)

In particular, regarding the complementary of (3) we have:

P(εn >

δn4

)≤ min

1,

2b

2log(n)n

. (16)

Recall that diam(XP) ≤ L. Let C = ω(L) be a constant that only depends on the parameters ofthe model. Then, for any P ∈ P(a, b), we have:

supf∈F(P,ω)

d∆ (Rf (XP),Mn) ≤ C. (17)

1This is because singletons are open balls in the discrete topology, and also because of the stability theorem forpersistence diagrams Chazal et al. (2016a); Cohen-Steiner et al. (2007)

25

For n ≥ n0, we have :

supf∈F(P,ω)

d∆ (Rf (XP),Mn) = supf∈F(P,ω)

d∆ (Rf (XP),Mn) Iεn>δn/4 + supf∈F(P,ω)

d∆ (Rf (XP),Mn) Iεn≤δn/4

and thus

E

[sup

f∈F(P,ω)d∆ (Rf (XP),Mn)

]≤ CP

(εn >

δn4

)+ rn + 2ω(δn)

≤ C min

1,

2b

2log(n)n

+

(1 + 2g

g

)ω(δn) (18)

where we have used (17), Theorem 2.7 and the fact that Vn(δn)+ can be chosen less or equal toω(δn). For n large enough, the first term in (18) is of the order of δbn, which can be upper boundedby δn and thus by ω(δn) (up to a constant) since ω(δ)/δ is non-increasing. Since 1+2g

g < 6 because13 < g < 1

2 , we get that the risk is bounded by ω(δn) for n ≥ n0 up to a constant that only dependson the parameters of the model. The same inequality is of course valid for any n by taking a largerconstant, because n0 itself only depends on the parameters of the model.

A.6 Proof of Proposition 3.3

The proof follows closely Section B.2 in Chazal et al. (2013). Let X0 = [0, a−1/b] ⊂ RD. Obviously,X0 is a smooth and compact submanifold of RD. Let U(X0) be the uniform measure on X0. LetPa,b,X0 denote the set of (a, b)-standard measures whose support is included in X0. Let x0 = 0 ∈ X0

and xnn∈N∗ ∈ XN0 such that ‖xn − x0‖ = (an)−1/b. Now, let

f0 :

X0 → Rx 7→ ω(‖x− x0‖)

By definition, we have f0 ∈ F(U(X0), ω) because Dg(X0, f0) = (0, ω(a−1/b)) since f0 is increasingby definition of ω. Finally, given any measure P ∈ Pa,b,X0 , we let θ0(P) = Rf0|XP

(XP). Then, wehave:

supP∈Pa,b

E

[sup

f∈F(P,ω)d∆

(Rf (XP), Rn

)]

≥ supP∈Pa,b,X0

E

[sup

f∈F(P,ω)d∆

(Rf (XP), Rn

)]≥ sup

P∈Pa,b,X0E[d∆

(Rf0|XP

(XP), Rn

)]= sup

P∈Pa,b,X0E[ρ(θ0(P), Rn

)],

where ρ = d∆. For any n ∈ N∗, we let P0,n = δx0 be the Dirac measure on x0 and P1,n =(1 − 1

n)P0,n + 1nU([x0, xn]). As a Dirac measure, P0,n is obviously in Pa,b,X0 . We now check that

P1,n ∈ Pa,b,X0 .

• Let us study P1,n(B(x0, r)).

Assume r ≤ (an)−1/b. Then

P1,n(B(x0, r)) = 1− 1

n+

1

n

r

(an)−1/b≥(

1− 1

n+

1

n

)(r

(an)−1/b

)b≥(

1

2+

1

n

)anrb ≥ arb.

26

Assume r > (an)−1/b. Then

P1,n(B(x0, r)) = 1 ≥ minarb.

• Let us study P1,n(B(xn, r)). Assume r ≤ (an)−1/b. Then

P1,n(B(xn, r)) =1

n

r

(an)−1/b≥ 1

n

(r

(an)−1/b

)b= arb.

Assume r > (an)−1/b. Then

P1,n(B(xn, r)) = 1 ≥ minarb.

• Let us study P1,n(B(x, r)), where x ∈ (x0, xn). Assume r ≤ x. Then

P1,n(B(x, r)) ≥ 1

n

r

(ab)−1/b≥ arb (see previous case).

Assume r > x. Then P1,n(B(x, r)) = 1− 1n+ 1

n(x+minr, (an)−1/b−x)

(an)−1/b . If minr, (an)−1/b−x =

r, then we have

P1,n(B(x, r)) ≥ 1− 1

n+

1

n

r

(ab)−1/b≥ arb (see previous case).

Otherwise, we haveP1,n(B(x, r)) = 1 ≥ minarb.

Thus P1,n is in Pa,b,X0 as well. Hence, we apply Le Cam’s Lemma (see Section B) to get:

supP∈Pa,b,X0

E[ρ(θ0(P), Rn

)]≥ 1

8ρ(θ0(P0,n), θ0(P1,n)) [1− TV(P0,n,P1,n)]2n .

By definition, we have:

ρ(θ0(P0,n), θ0(P1,n)) = d∆

(Rf0|x0

(x0),Rf0|[x0,xn](U [x0, xn])

).

Since Dg(

Rf0|x0(x0)

)= (0, 0) and Dg

(Rf0|[x0,xn]

(U [x0, xn]))

= (f(x0), f(xn)) because f0

is increasing by definition of ω, it follows that

ρ(θ0(P0,n), θ0(P1,n)) =1

2|f(xn)− f(x0)| = 1

2ω(

(an)−1/b).

It remains to compute TV(P0,n,P1,n) =∣∣1− (1− 1

n

)∣∣ + 1n(an)−1/b = 1

n + o(

1n

). The Proposition

follows then from the fact that [1− TV(P0,n,P1,n)]2n → e−2.

27

A.7 Proof of Proposition 3.4

Let P ∈ Pa,b and ω a modulus of continuity for f . Using the same notation as in the previoussection, we have

P (δn ≥ u) ≤ P(dH(Xn,XP) ≥ u

2

)+ P

(dH(Xsnn ,XP) ≥ u

2

)≤ P

(εn ≥

u

2

)+ P

(εsn ≥

u

2

). (19)

Note that for any f ∈ F(P, ω), according to (4) and (17)

d∆ (Rf (XP),Mn) ≤ [r + 2ω(δ)] IΩn + C IΩcn (20)

where Ωn is the event defined by

Ωn = 4δn ≤ minκ, ρ ∩ 4εn ≤ δn.

This gives

E

[sup

f∈F(P,ω)d∆ (Mn,Rf (X ))

]≤∫ C

0P(ω(δn) ≥ g

1 + 2gα

)dα︸ ︷︷ ︸

(A)

+ CP(εn ≥

δn4

)︸ ︷︷ ︸

(B)

+ CP(δn ≥ min

κ4,ρ

4

)︸ ︷︷ ︸

(D)

.

Let us bound the three terms (A), (B) and (C).

• Term (C). It can be bounded using (19) then (15).

• Term (B). Let tn = 2(

2log(n)an

)1/band An = εn < tn. We first prove that δn ≥ 4εn on

the event An, for n large enough. We follow the lines of the proof of Theorem 3 in Section 6in Fasy et al. (2014).

Let qn be the tn-packing number of XP, i.e. the maximal number of Euclidean balls B(X, tn)∩XP, where X ∈ XP, that can be packed into XP without overlap. It is known (see for instanceLemma 17 in Fasy et al. (2014)) that qn = Θ(t−dn ), where d is the (intrinsic) dimensionof XP. Let Packn = c1, · · · , cqn be a corresponding packing set, i.e. the set of centersof a family of balls of radius tn whose cardinality achieves the packing number qn. Notethat dH(Packn,XP) ≤ 2tn. Indeed, for any X ∈ XP, there must exist c ∈ Packn such that‖X − c‖ ≤ 2tn, otherwise X could be added to Packn, contradicting the fact that Packn ismaximal. By contradiction, assume εn < tn and δn ≤ 4εn. Then:

dH(Xsnn ,Packn) ≤ dH(Xsnn ,Xn) + dH(Xn,XP) + dH(XP,Packn)

≤ 5dH(Xn,XP) + 2tn ≤ 7tn.

Now, one has snqn

= Θ(

n1−b/d

log(n)1−b/d+β

). Since b ≥ D ≥ d by definition, it follows that sn =

o(qn). In particular, this means that dH(Xsnn ,Packn) > 7tn for n large enough, which yields acontradiction.

28

Hence, one has δn ≥ 4εn on the event An. Thus, one has:

P(εn ≥

δn4

)≤ P

(εn ≥

δn4| An

)︸ ︷︷ ︸

=0

P (An) + P (Acn) = P (Acn) .

Finally, the probability of Acn is bounded with (15):

P (Acn) ≤ 2b

2log(n)n.

• Term (A). This is the dominating term. First, note that since ω is increasing, one has forall u > 0:

P (ω(δn) ≥ u) = P(δn ≥ ω−1(u)

). (21)

Then, using (19) and (21), we have:

(A) ≤∫ C

0P(εn ≥

1

2ω−1

(gα

1 + 2g

))dα+

∫ C

0P(εsn ≥

1

2ω−1

(gα

1 + 2g

))dα.

We only bound the first integral, but the analysis extends verbatim to the second integralwhen replacing n by sn. Let

αn =1 + 2g

gω

[(4blog(n)

an

)1/b].

Since x 7→ ω(x)x is non-increasing, it follows that x 7→ ω−1(x)

x is non-decreasing, and

ω−1(x) ≥ x

yω−1(y), ∀x ≥ y > 0. (22)

Taking inspiration from Section B.2 in Chazal et al. (2013) and using (15), we have thefollowing inequalities:∫ C

0P(εn ≥

1

2ω−1

(gα

1 + 2g

))dα ≤ αn +

8b

a

∫ C

αn

1

ω−1(

gα1+2g

)b exp

[−an

4bω−1

(gα

1 + 2g

)b]dα

≤ αn +8b

a

∫ C

αn

αbn[αω−1

(gαn1+2g

)]b exp

[− anαb

(4αn)bω−1

(gαn

1 + 2g

)b]dα

≤ αn + αn2b4n1−1/b

ba1/bω−1(gαn1+2g

) ∫u≥an

4bω−1

(gαn1+2g

)b u1/b−2e−udu

= αn + αn2bn

blog(n)1/b

∫u≥log(n)

u1/b−2e−udu ≤(

1 +2b

b log(n)2

)αn since b ≥ 1

≤ C(b)αn,

29

where we used (22) with x = gα1+2g and y = gαn

1+2g for the second inequality. The constant C(b)only depends on b.

Hence, since 1+2gg < 6, there exist constants K,K ′ > 0 that depend only of the geometric

parameters of the model such that:

(A) ≤ Kω(K ′log(sn)

sn

)1/b

.

Final bound. Since sn = nlog(n)−(1+β), by gathering all four terms, there exist constantsC,C ′ > 0 such that:

E

[sup

f∈F(P,ω)d∆ (Rf (XP),Mn)

]≤ Cω

(C ′log(n)2+β

n

)1/b

.

A.8 Proof of Proposition 4.1

We have the following bound by using (20) in the proof of Proposition 3.4:

P (d∆(,Rf (XP),Mn) ≥ η)

≤ P(ω(δn) ≥ g

1 + 2gη

)+ P

(εn ≥

δn4

)+ P

(δn ≥ min

κ4,ρ

4

)≤ P

(εn ≥

1

2ω−1

(g

1 + 2gη

))+ P

(εsn ≥

1

2ω−1

(g

1 + 2gη

))+ o

(1

nlog(n)

).

Following the lines of Section 6 in Fasy et al. (2014), subsampling approximations give

P(εn ≥

1

2ω−1

(g

1 + 2gη

))≤ Ln

(1

4ω−1

(g

1 + 2gη

))+ o

(snn

)1/4,

and

P(εsn ≥

1

2ω−1

(g

1 + 2gη

))≤ Fn

(1

4ω−1

(g

1 + 2gη

))+ o

(s2n

sn

)1/4

.

The result follows by taking sn = nlog(n)−(1+β).

B Le Cam’s Lemma

The version of Le Cam’s Lemma given below is from Yu (1997) (see also Genovese et al., 2012b).Recall that the total variation distance between two distributions P0 and P1 on a measured space(X ,B) is defined by

TV(P0,P1) = supB∈B|P0(B)− P1(B)|.

Moreover, if P0 and P1 have densities p0 and p1 for the same measure λ on X , then

TV(P0,P1) =1

2`1(p0, p1) =

∫X|p0 − p1|dλ.

30

Lemma B.1. Let P be a set of distributions. For P ∈ P, let θ(P) take values in a pseudometricspace (X, ρ). Let P0 and P1 in P be any pair of distributions. Let X1, . . . , Xn be drawn i.i.d. fromsome P ∈ P. Let θ = θ(X1, . . . , Xn) be any estimator of θ(P), then

supP∈P

EPn[ρ(θ, θ)

]≥ 1

8ρ (θ(P0), θ(P1)) [1− TV(P0,P1)]2n .

C Extended Persistence

Let f be a real-valued function on a topological space X. The family X(−∞,α]α∈R of sublevelsets of f defines a filtration, that is, it is nested w.r.t. inclusion: X(−∞,α] ⊆ X(−∞,β] for allα ≤ β ∈ R. The family X [α,+∞)α∈R of superlevel sets of f is also nested but in the oppositedirection: X [α,+∞) ⊇ X [β,+∞) for all α ≤ β ∈ R. We can turn it into a filtration by reversing thereal line. Specifically, let Rop = x | x ∈ R, ordered by x ≤ y ⇔ x ≥ y. We index the family of

superlevel sets by Rop, so now we have a filtration: X [α,+∞)α∈Rop , with X [α,+∞) ⊆ X [β,+∞) forall α ≤ β ∈ Rop.

Extended persistence connects the two filtrations at infinity as follows. Replace each superlevelset X [α,+∞) by the pair of spaces (X,X [α,+∞)) in the second filtration. This maintains the filtration

property since we have (X,X [α,+∞)) ⊆ (X,X [β,+∞)) for all α ≤ β ∈ Rop. Then, let RExt =R ∪ +∞ ∪ Rop, where the order is completed by α < +∞ < β for all α ∈ R and β ∈ Rop. Thisposet is isomorphic to (R,≤). Finally, define the extended filtration of f over RExt by:

Fα = X(−∞,α] for α ∈ R

F+∞ = X ≡ (X, ∅)Fα = (X,X [α,+∞)) for α ∈ Rop,

where we have identified the space X with the pair of spaces (X, ∅). This is a well-defined filtration

since we have X(−∞,α] ⊆ X ≡ (X, ∅) ⊆ (X,X [β,+∞)) for all α ∈ R and β ∈ Rop. The subfamilyFαα∈R is called the ordinary part of the filtration, and the subfamily Fαα∈Rop is called therelative part. See Figure 9 for an illustration.

Applying the homology functor H∗ to this filtration gives the so-called extended persistencemodule V of f :

Vα = H∗(Fα) = H∗(X(−∞,α]) for α ∈ R

V+∞ = H∗(F+∞) = H∗(X) ∼= H∗(X, ∅)Vα = H∗(Fα) = H∗(X,X

[α,+∞)) for α ∈ Rop,

and where the linear maps between the spaces are induced by the inclusions in the extendedfiltration.