Semi-automatic foreground/background segmentation of ... · PDF filesegmentation of motion...

Transcript of Semi-automatic foreground/background segmentation of ... · PDF filesegmentation of motion...

Semi-automatic foreground/backgroundsegmentation of motion picture images andimage sequences

P. Hillman, J. Hannah and D. Renshaw

Abstract: Segmentation of images into foreground (an actor) and background is required for manymotion picture special effects. To produce these shots, the unwanted background must be removedso that none of it appears in the final composite shot. The standard approach requires thebackground to be a blue screen. Systems that are capable of segmenting actors from more naturalbackgrounds have been proposed, but many of these are not readily adaptable to the resolutioninvolved in motion picture imaging. An algorithm is presented that requires minimal humaninteraction to segment motion picture resolution images. Results from this algorithm arequantitatively compared with alternative approaches. Adaptations to the algorithm, which enablesegmentation even when the foreground is lit from behind, are described. Segmentation of imagesequences normally requires manual creation of a separate hint image for each frame of a sequence.An algorithm is presented that generates such hint images automatically, so that only a single inputis required for an entire sequence. Results are presented that show that the algorithm successfullygenerates hint images where an alternative approach fails.

1 Introduction

Motion picture special effects often require the segmenta-tion of image sequences into foreground and background.The background and foreground may then be processedseparately and recombined, perhaps with additionalelements in the scene. Special backgrounds such as bluescreens [1] can be used if the original background behind thesubject is not required. The segmentation step (detailed byVlahos [2]) is simple and robust, and the resultant matte canrepresent transparencies by outputting shades of grey in thealpha channel. It is a much more complex task to segment animage where the background is arbitrary and transparentpixels are required.We present an algorithm that requires some human

interaction in the form of a hint image (Fig. 1), butovercomes some of the limitations of blue screen composit-ing, allowing segmentation of actors from images with morenormal backgrounds.

2 Still or single image alpha estimation

2.1 Background

Much work has been undertaken in the investigation ofsegmentation of humans from unwanted backgrounds forareas such as low-bandwidth video compression and facerecognition. Many techniques rely on the background beingdarker or brighter than the person, and threshold the image

intensity (also a common technique in compositing [3]).A similar idea is to produce a reference image of thebackground with no person present (perhaps by averagingthe scene over a long period of time) and taking thedifference between that image and each frame [4].

The slow framerate of motion picture images combinedwith the presence of relatively fast moving subjects oftencauses motion blur, which causes a softening of edges.The high resolution (>4 megapixel, 14 bits per channel) alsoincreases the effect of focal blur, which will also cause asoftening of edges. A single pixel in this edge region willthen be a mixture of foreground and background. Its finalcolour will be some mixture a of a background and ‘clean’foreground colour. A segmenter must find the value of a aswell as the foreground colour for every pixel within theforeground area. Its output will be a clean foreground imageC and a matte or alpha channel a: The image can then becomposited into a new background N using the compositingequation

Rij ¼ Cijaij þ ð1� aijÞNij ð1Þ

for each pixel ij in the image. This scheme is adequate tocompose images where there are blurred edges, but notwhere there is reflection or refraction. Zongker et al. [5]developed a system capable of accurately compositingsurfaces that are truly transparent and also reflectiveand refractive, such as a coloured glass. Their techniquerequires multiple artificial backgrounds and a staticforeground.

Various algorithms [6–10] have been proposed that cangenerate alpha channels from single images with non-uniform backgrounds. These all require a hint image thatmaps which parts of the image are known foreground andbackground, and those unknown parts, which may be amixture of the two. These algorithms sample the image inthe known areas in order to process the unknown areas.

q IEE, 2005

IEE Proceedings online no. 20045048

doi: 10.1049/ip-vis:20045048

The authors are with School of Engineering and Electronics, University ofEdinburgh, King’s Buildings, Mayfield Road, Edinburgh EH9 3JL, UK

E-mail: [email protected]

Paper first received 8th June and in revised form 21st December 2004

IEE Proc.-Vis. Image Signal Process., Vol. 152, No. 4, August 2005 387

2.2 User input for alpha estimation

Figure 1 shows an example hint image. The area that isdefinitely background is marked in black, the foregroundwhite, and the ‘unknown’ area grey. The ‘unknown’ areausually forms a band between the known background andforeground areas, and is the only one that requiresprocessing. Pixels within this area may be part of thebackground, the foreground, or a combination of the two,and must be assigned an alpha value accordingly. This typeof input has two advantages. First, classification can beachieved by comparing parts of the unknown area to thedefinite foreground and background. Secondly, those partsof the image that are marked as definite do not need to beprocessed, saving a massive amount of processing. Only 7%of the 2.7 million pixels in the Gema (Fig. 5a) image aremarked as unknown.

A technique for calculating alpha can be derived from thecompositing equation by inverting it. If a clean foregroundcolour f has been estimated, along with a correspondingclean background colour b (i.e. the colour of the backgroundwith no foreground present), the alpha value can becalculated by solving the compositing equation p ¼af þ ð1� aÞb for a: Since the estimates of f and b arelikely to be inaccurate, the point pmay not lie directly on the

line f b�!

: Alpha can be defined using the nearest point on the

line f b�!

to the point p with the equation

a ¼ ð p� bÞð f � bÞj f � bj2

ð2Þ

and limiting the result to lie on the range (0, 1). Essentially,this is estimating alpha from a point q which is the closestpoint to p on the line bf

!; as shown in Fig. 2.

2.3 Previous work

Published algorithms that estimate alpha channels all workin a similar way, in that they sample nearby knownbackground and foreground pixels (from the areas markedas known foreground and background in the hint image) andderive from these estimated clean foreground and back-ground colours in order to calculate alpha.

The KnockOut algorithm [8] forms the clean colours byforming a weighted average from nearby known back-ground and foreground colours. Ruzon and Tomasi [10] andChuang et al. [9] form a cluster of pixel values from nearbyknown foreground and background pixels and use aGaussian mixture model to find an optimal set of cleanforeground, background and alpha values in a maximumlikelihood approach. Like Chuang et al., Wexler et al. [11]solve the maximum likelihood problem using a Bayesianframework. The problem solved by the system developed byWexler et al. is slightly different from those presented here,in that they assume a static scene with multiple registeredviews of the foreground.

2.4 Alpha estimation

The next three subsections explain, respectively, how thehint image is preprocessed, how clusters of foreground andbackground are extracted and how these clusters are used togenerate estimates of clean foreground and backgroundcolours.

In this work, the RGB colour space is used for alphaestimation. It is common when performing crisp (binary)image segmentation to use a colour space such as the CIE-Lab colour space. Such colour spaces model humanperception and allow the separation of colour and intensity.For alpha estimation, it is essential that results satisfy thecompositing constraint: combining the clean backgroundand foreground images using the alpha channel must exactlyreproduce the input. Choice of colourspace used for thiscompositing step will affect the final composed image.Since packages that perform this operation almost alwayswork in RGB colour space; the constraint must be satisfiedin RGB space. Additionally, most image sensors (includingfilm and CCD sensors) are sensitive to RGB. Implicitly, thiswork assumes that a motion blurred pixel at the edge of theforeground area will be a linear combination a in RGBcolour space of the colours of its foreground and back-ground objects. An alpha value of 0.25 for a pixel wouldimply that the pixel was illuminated by the backgroundobject for 75% of the time and foreground for the remaining25% of the time. Applying processing to the image(e.g. gamma correction or level changes) prior to alphaestimation may invalidate this assumption, but the compo-siting constraint should still be satisfied. For motion picturepost production, it is important only that the alpha channelappears correct. Qualitive analysis of experiments withother colour spaces lead to the conclusion that results inRGB colour space are at least as good as those as in otherspaces.

2.4.1 Hint image preprocessing: Overlargeunknown areas in hint images result in unnecessaryprocessing and inaccurate results. On fairly smooth images,the unknown area can be reduced using a region growingapproach. A region is grown from every pixel onthe boundary of the unknown area. Pixels neighbouring

point p

cleanforeground f

1 − α

α

cleanbackground b

q

Fig. 2 Calculating a when the clean colours are estimates

Where p does not lie on f b, the projection of p onto f b (point q) is used tofind a: The diagram is normalised such that j f bj ¼ 1

Fig. 1 Example ‘hint image’ used to indicate background,foreground and unknown areas

IEE Proc.-Vis. Image Signal Process., Vol. 152, No. 4, August 2005388

the region will be included in it if they pass a Homogeneitycriterion: the colour difference between the candidate pixeland the original boundary pixel must be less than athreshold. This threshold is chosen such that any pixel thatshould be assigned an alpha value other than zero or one willnot be included in the region. In practice, the region growingis terminated well before any such pixel is reached. All thepixels in a region that grew from a background edge seed aremarked as background and all those that were grown from aforeground edge are marked as foreground. Thus theunknown area is reduced in size. Pixels that fail thehomogeneity criterion are placed on a queue E for lateralpha estimation. CIE-Lab colour space is used for thismeasure, since this is a crisp segmentation and we wentresults that better model human perception.

2.4.2 Cluster processing: The pixels now remain-ing in the unknown area must be assigned a cleanforeground and background colour as well as an alphavalue. Pixels are processed from the queue E. As each pixelis processed, any neighbouring unknown pixels areenqueued onto E to ensure that every pixel is processed inturn, starting from the edges and progressing toward thecentre of the unknown area.To classify each pixel s in E the n nearest clean

background and foreground pixels are sampled into twoseparate clusters. Pixels that are spatially too far away(typically about ten pixel widths) from the original unknownarea will not be sampled. This prevents the inclusion ofcolours in areas dissimilar to the unknown area. Pixels arealso collected from the areas of background and foregroundcreated by erosion of the unknown area in the preprocessingstep, as well as from previously estimated clean foregroundand background regions. To reduce errors caused by poorprevious estimates, pixels within the clusters are givenweights according to their reliability. Pixels taken from theknown foreground and background areas are the mostreliable and have weight w ¼ 3; those taken from previousestimates are the least reliable and have weight w ¼ 1:These weightings were chosen arbitrarily but small changeshave little effect on the results.

2.4.3 Computation of alpha: Other algorithmsfollow a similar method to collect clusters of pixels that aredefinitely foreground and background. Whereas Ruzon andTomasi [10], Chuang et al. [9] and Wexler et al. [11] useGaussian mixture models to represent their cluster, we makean assumption about cluster shape to achieve better noisetolerance and faster processing. Observation of manynatural images has shown that pixels taken from sectionsof images tend to form prolates — they are cigar shaped inRGB space, as shown in Fig. 3. Ohta et al. [12] made asimilar observation: they took the Karhunen Loeve trans-form (KLT) of nine images, and measured the variancealong all the transformed axes. They measured a variancealong the major axis of approximately ten times that of thesecond and third axes, suggesting that the clusters areprolates.The following algorithm assumes that the foreground and

background clusters are prolates in order to obtainextremely fast estimates of clean foreground and back-ground colours.The first step of this process finds the principal axis.

The principal axis is given by the parametric linesðtÞ ¼ mþ rt; where m is the mean of the cluster and r isa vector oriented such that the mean squared distance of allpoints in the cluster to the line s(t) is minimised. r canbe found using the Karhunen Loeve transform. Orchard

and Bouman [13] show how to derive the principalcomponents of a distribution in R

3 for colour analysis:Given a cluster C with n members x, the mean m andcovariance matrix �RR of C are found:

Rn ¼Xn

s¼1

ws xs xts ð3Þ

m ¼Xn

s¼1

xs ð4Þ

m ¼ m

nð5Þ

�RR ¼ R1

nPn

s¼1 ws

mmt ð6Þ

where ws is the weight of xs:The vector r is given by the principal eigenvector e0 of �RR;

i.e. that which has the largest corresponding eigenvalue l:The entire matrix of eigenvectors M ¼ ½et

0et1e

t2� (where ei is

the eigenvector with the ith largest eigenvalue) is anorthogonal matrix that forms a transform into KLT space,that is, a new set C0 with members x0i ¼ Mxi is a set rotatedabout the origin with respect to C with the principal axisparallel to the x-axis, and the second and third alignedparallel to the y- and z-axes, respectively.

Once the set C0 has been formed, the range ðrmin; rmaxÞ ofthe transformed set along the principal axis is found.The mean ½m1; m2; m3� in each dimension of KLT space isalso calculated. The transformed end points pmin ¼½rmin; m2; m3� and pmax ¼ ½rmax; m2; m3� are then inversetransformed back into RGB space to form p0 and p1:Figure 3 shows a cluster, and the line p0 p1

��!:The range of the set is used rather than a statistical

measure such as the standard deviation because the clustersare very rarely symmetric and do not fit well to simplestatistical models. The range calculation is performed forboth foreground and background, giving two lines p0 p1

��!f

and p0 p1��!

b; as shown in Fig. 4.It is now assumed that each unknown pixel s is composed

of a clean background colour close to a colour b, and a cleanforeground close to a colour f, where b is a point on the linep0 p1��!

b and f lies on the line p0 p1��!

f : f and b are therefore initialestimates of the clean foreground and background colours.The most appropriate points to choose for b and f are thosepoints closest to s, formed by finding

q ¼ ðs� p0Þ � ð p1 � p0Þj p1 � p0j2

ð7Þ

for foreground qf and background qb: If qf and qb are limitedto the range (0, 1), then

b ¼ p1bqb þ p0b

ð1� qbÞ ð8Þ

f ¼ p1fqf þ p0f

ð1� qf Þ ð9Þ

give points f and b constrained to lie between p0 and p1:The alpha value can then be calculated using (2) and againlimiting the result to lie in the range (0, 1). f 0; equal tof þ s� q; is a better estimate of the clean foregroundcolour. An updated clean background colour b0 is alsocalculated. These values are stored in separate frame buffersand are used in processing of subsequent pixels.

IEE Proc.-Vis. Image Signal Process., Vol. 152, No. 4, August 2005 389

3 Results

Results are presented for bluescreen images as wellas images with more natural backgrounds (Figs. 5–8).The Rachael image — kindly provided by the ComputerFilm Co. — is a 640� 480 crop of a frame from a sequencescanned from 16mm movie film. The Gema image is a1612� 1673 pixel image scanned from a 35mm slide.The Edith image is a 2240� 2024 image acquired from theCorel Stock Collection (original medium unknown).

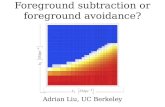

Table 1 compares the root mean squared error (RMSE)difference between the alpha channel produced by eachalgorithm and a hand-generated ground truth alpha channel.This channel was obtained by combining and modifying thechannels produced by CFC’s bluescreen segmentationpackage Keylight, shown in Fig. 7, and that produced byPhotoshop. Since all pixels marked as clean background orforeground in the hint image are automatically marked assuch in the alpha channel, only the unknown pixels areconsidered in the RMSE calculation. Whether the flowers

are considered background or foreground is thereforeignored in the RMSE calculation, and only part of thecurtain is considered, as the hint image only selects theperson in the scene. Three figures are given for each image:one for the errors in the alpha channel corresponding to thepart of the foreground that covers the blue screen, one forthe part that covers the curtain, and one for the whole image.This allows separate analysis of performance in the difficultcurtain area with the blue screen area.

RMSE results are calculated from the 8 bit alpha channelsdirectly. The greatest possible RMSE is therefore 255, andan algorithm producing random alpha values for each pixelwould give an expected RMSE of 127.5.

As expected, the bluescreen alpha channel shows the bestperformance of all in the bluescreen area. Although thealpha channel is very noisy in the background area, theincorrect alpha values are close enough to the correct valueto cause only a small error. The bluescreen algorithm showsvery poor performance in the foreground area. The generalalgorithm with the best performance in the bluescreen areais our principal axis algorithm. Photoshop performsparticularly well in the curtain areas, but mainly becausethe hint area for this technique is much smaller at this pointthan for the other algorithms and the ground truth referenceimage was generated from the Photoshop result for this partof the image.

In order to obtain a numerical analysis for non-bluescreenimages, an image with a white background was taken fromthe Corel Stock Photo Collection (image number 678058).Since the background was white, an alpha channel could beextracted relatively simply. The alpha channel and cleanforeground image were then used to composite a new image.Figure 8a shows the input image, Fig. 8b shows a detail of

Fig. 5 Results of running algorithm on Gema image

a Original imageb Alpha channelc Segmented imaged Composite scene

p1

p0

blue

cha

nnel

14-bit channel intensities

green channel

red channel

700080009000

4000

40006000

1000012000

14000 1600018000

8000

2000

40005000

60007000

80009000

1000011000

300020001000

6000

3000

5000

Fig. 3 Cluster of points in RGB space with line p0 p1

s

p1b

p0b

f

q

back

grou

ndb

fore

grou

nd

p1f

p0ff ′

Fig. 4 Position of points used to classify in colour space

IEE Proc.-Vis. Image Signal Process., Vol. 152, No. 4, August 2005390

Fig. 6 Results of running CFC’s Keylight on Rachael bluescreen test image

a Original imageb Alpha channel

Fig. 7 Results of running algorithm on Rachael bluescreen test image

a Alpha channelb Composite image

Fig. 8 Results of processing Edith Corel Stock image

a Input imageb True alpha channelc Result of running algorithm

Table 1: RMSE performance of algorithms on the Rachaeltest image

RMSE

Algorithm Bluescreen area Curtain area Whole image

Bluescreen 10.45 124.8 62.83

KnockOut 47.96 77.3 56.68

Photoshop 50.88 40.26 48.46

Ruzon=Tomasi 40.30 72.31 50.19

Chuang et al. 46.16 73.50 54.24

Principal axis 37.69 54.22 42.39

Table 2: RMSE accuracy of alpha channels producedfrom composited Edith image

RMSE

Algorithm Top of image T-shirt area Whole image

KnockOut 9.277 17.45 10.83

Photoshop 113.4 109.1 112.9

Ruzon=Tomasi 21.78 31.17 23.35

Chuang et al. 9.077 15.01 10.14

Principal axis 8.599 9.070 8.667

IEE Proc.-Vis. Image Signal Process., Vol. 152, No. 4, August 2005 391

the alpha channel used to create it, and Fig. 8c shows thealpha channel produced by our algorithm. Table 2 shows theaccuracy of alpha channels produced by various algorithms.

Results for both images are shown graphically in Fig. 9.Figure 5 shows the result of running our algorithm on an

image with natural background.

4 Backlit images

Actors in scenes are commonly illuminated from behind(‘backlit’) This causes a highlight along the edge of theforeground, as shown in Fig. 10a, taken from theDragonheart sequence (scanned from a 35mm print of atrailer, 2286� 1224 pixels, 24 bpp, 24 fps). Backlightinghelps the foreground to stand out (and therefore can help acomposite to look more natural) but causes a problem whenestimating alpha. Figure 10c shows the result of composit-ing the alpha channel generated by the algorithm describedin Section 2.4 over a black background. This shows that theestimated alpha channel is too small–the pixels that werehighlighted from the backlight have been classified asbackground rather than foreground and are missing from thecomposite scene.

Figure 11 shows the colour vectors for pixels in a columnof Fig. 10b, with the foreground and background colours

marked as crosses. The line connects adjacent pixelsbetween these two end points. If the compositing equationis to hold, these points should be linear combinations of theforeground and background. Thus the intermediate pointsshould lie on a straight line between foreground andbackground. Instead, the line bends toward a valuecorresponding to white. The intermediate pixels are acombination of not two but three colours: the cleanbackground, the clean foreground, and a ‘clean backlight’colour m.

To produce an accurate matte in this case, an extension ofnormal alpha values is required, which permits the mixtureof three, rather than two colours. Given three clean coloursf, b and m, we wish to know for each pixel p how much ofeach clean colour is required to produce p. Figure 12 showsthe position of these four points.

Rather than using point p directly, the point q is used,which is the nearest point from point p on the plane formedby f, b and m. q is found by calculating the normal n to theplane:

n ¼ ðm� f Þ � ðb� f Þ ð10Þ

qp�! ¼ n

jnjðp� f Þ � n

jnj ð11Þ

00

120

Rachael image

Edith image

RM

SE

ove

r w

hole

imag

e

110100908070605040302010

Keylight KnockOut Photoshop Ruzon andTomasi

Chuang our algorithm

Fig. 9 RMSE error of each algorithm combined for both test images

Keylight bluescreen algorithm was not run on Edith image

Fig. 10 Result of using backlight alpha estimation

a Original imageb Original image – detailc With normal alpha estimationd With backlit alpha estimation

IEE Proc.-Vis. Image Signal Process., Vol. 152, No. 4, August 2005392

q ¼ p� qp�! ð12Þ

The point c must now be found. This is the point where the

line from b through q intersects with fm�!

: This is found bysolving the simultaneous equations of the two lines:

c ¼ f þ xm ð13Þ

c ¼ bþ yq ð14Þ

Writing u ¼ q� b; v ¼ b� f and w ¼ m� f for short, andequating (13) and (14) gives

f þ xw ¼ bþ yu ð15Þ

xw� yu ¼ v ð16Þ

x

w0

w1

w2

24

35� y

u0

u1

u2

24

35 ¼

v0v1v2

24

35 ð17Þ

This is an overspecified system, since it has two unknownsin three equations, and can be solved for x using just two ofthe three equations:

x ¼ u0v1 � u1v0w1u0 � w0u1

ð18Þ

x can then be substituted into (13) to find c. Alpha is thenfound in the normal way

a ¼ jq� bjjc� bj ð19Þ

Clean foreground and background colours f 0 and b0 arefound by adding qp�! to c and b, respectively. Using a toblend these two colours exactly produces p as required.

The backlight colour can be estimated geometrically fromthe pixel values. It will be a point m such that f, b and menclose virtually every point within a small area of thetransition region. Since the clean backlight colour m oftenmay be treated as being uniform in colour and intensityacross the whole image, it can be easily estimated andentered by hand.

Figure 10d shows the result of using the backlight alphaestimation algorithm on a small area of the frame (Fig. 10b).The backlight colour in this case was set to twice theintensity of full brightness in the frame. This clearly shows amore accurate segmentation, as the highlighted area hasbeen correctly detected as foreground.

5 Moving image sequences

For motion picture special effects it is necessary to segmentmoving images rather than single frames. It would bepossible but impractical to segment an entire moving imagesequence by requiring a human operator to generate a hintimage for every separate frame of the sequence andsegmenting them as independent single frames. To segmentsequences with little extra input, a system that generates hintimages for subsequent frames has been developed.

Chuang et al. [14] use optical flow to update their hintimages. Given a frame n for which an alpha channel hasalready been produced, the hint image for frame n þ 1 canbe generated from the motion vectors between the twoframes. If pixel xy in frame n has corresponding motionvector ðdx; dyÞ; then pixel ðx þ dx; y þ dyÞ is marked asbackground if the alpha channel for xy is 0, foreground ifthe alpha channel for xy is 1, and unknown otherwise.By generating this data from two known frames (e.g. fromframe n þ 2 as well as from frame n for frame n þ 1Þ; andfusing together the two results, reliability is improved.Black’s robust optical-flow algorithm [15] was used toestimate the optical flow.

While optical flow is very successful when resolution isrelatively low and interframe movement is small, it is notalways suitable for segmenting motion picture sequences.The high resolution makes processing impractically slow(much slower than modifying the hint images by hand) andlarge interframe movements cause the optical flow to fail.Black’s algorithm employs a multi-resolution technique toallow for a greater interframe movement than with manyother optical-flow techniques, but cannot cope when a smallobject is moving too fast. Even if the bidirectional techniquedescribed by Chuang et al is used it is not always possible togenerate motion vectors in areas that become unoccludedbetween frames. For example, Figs. 13 and 14 show caseswhere the hint image generated by optical flow is incorrect,as discussed in Section 5.1. In these cases an alternativetechnique is required.

Mitsunaga et al. [16] developed a system that works withmoving images. It requires a hand-generated alpha channelfor the first frame. The boundary between foreground andbackground for the first frame is then known. The boundaryin each subsequent frame is found using block matching.Alpha values are generated by calculating the gradient of theimage within the boundary. They assume that, within theboundary, the background and foreground are relativelyconstant so any gradient in the image is due only to atransition between foreground and background. They thusrequire fairly sharp transitions between foreground andbackground, or very smooth images.

Our assumption that the background and foreground aredifferent colours, which is required for the alpha channelestimation described in Section 2.4, can be used to update

intermediate foreground background

20

40

60

80

100

120

140

160

180

200

red

inte

nsiti

es

20 40 60 80 100 120 140 160 180

green intensities

4080

120160200blue intensities

Fig. 11 Graph of pixel colours taken from single column inimage of Fig. 12b, showing progression of colours from theforeground colour (left-hand end) to foreground (right-hand end)

Curve in line is caused by backlighting

f

nm

p

bq

f'b'

c

Fig. 12 Position of points used to classify in presence ofbacklighting

IEE Proc.-Vis. Image Signal Process., Vol. 152, No. 4, August 2005 393

hint images. A probability-based colour classifier can beused to generate a hint image for frame p given an alphachannel for frame n. Frame n is split into three regions:those for which the alpha channel is zero (i.e. background),those for which the alpha channel is one (foreground) and allother values (similar to the unknown area). Blocks of sizem � m pixels (typically 128� 128Þ are then processed and amixture of Gaussians found for the distribution of pixelvalues in each block for each region: the RGB colour valuesare quantised [13] to derive a set of N means m (typicallyabout ten). Each vector is then assigned to the subclusterthat contains the most similar mean. The result is a set ofsubclusters fðn0; m0;S0Þ; ðn1; m1;S1Þ; . . . ; ðnM ;mM;SMÞgwhere n is the number of pixels in the subcluster, m is themean and S the covariance matrix. The sets are cached asthey are used several times during processing.

To create an alpha channel for frame p, blocks C of m �m pixels are processed together. A search aperture isselected which must be large enough to cover all movement.In the results presented here, the aperture was 384� 384;i.e. 3� 3 blocks of 128 pixels. The sets of clusters for eachof the blocks covered are merged. The result is three sets F,B and U for foreground, background and unknown areas,respectively.

For each pixel P in block C, the foreground, backgroundand unknown area probabilities are found:

pf ¼1

ojFjXj2F

nFjS�1

Fj

������e

�ðP�mFjÞTS�1jF

ðP�mFjÞ

2 ð20Þ

where jFj indicates the total number of pixels in the set andP is the colour of the pixel under classification. pb and pu arefound in a similar manner. In the event that one of theregions has no pixels, the probability for that region is set tozero. o is a weighting factor, which ensures that allprobabilities sum to one.

P is assigned to the region that corresponds to the highestprobability measure. Multiple source frames can be used tomake the hint image more accurate. The probabilities foreach reference frame are calculated independently and thepixel assigned to whichever region has the highestprobability across all reference frames.

The resultant hint image can be noisy and often has anunknown area which is slightly too small. This occursbecause the distribution of the unknown area overlaps withthe foreground and background clusters. To rectify thisproblem, small regions are removed and the unknown areadilated slightly. The result is a clean hint image that can beused to segment the frame.

5.1 Results

With the short Teddy sequence (1546� 1155 pixels,captured with a digital stills camera, approx 70 pixelsmotion between frames), a hand-drawn hint image wasgenerated from frame 1 and processed to generate an alphachannel, as shown in Fig. 15. Black’s optical flow algorithmfails for this image sequence, due to the aperture problem:the scene is too uniform for the optical flow to resolve to thecorrect motion in the scene. Figure 13 shows a detail of theincorrect hint image generated for frame 2 of the Teddysequence using optical flow. Clearly, the grey area in thehint image — which should overlap the boundary betweenthe bear and the background — is not correctly positioned.Figure 16 shows the hint image generated using ouralgorithm and the output alpha channel and a compositedimage generated using our estimation algorithm with thishint image. The results for frame 3 are shown in Fig. 17.While these results are not perfect (note the disoccludedtable surface on the right-hand side of the image, which isclassified as foreground in frame 3) they are a significantimprovement over the use of optical flow.

Optical flow also fails on the Dragonheart sequence.Figure 18 shows the result for frame 1 of the sequence,generated using our single image alpha estimation algor-ithm. Figure 18 shows the poor hint image subsequentlygenerated by optical flow for frame 15 of the sequence.

We then generated hint images for this sequence using amultiple source frame approach. First, the hint image for thefinal frame of the sequence (frame 21) was estimated fromframe 1. Since these two frames are so dissimilar, there wereareas that required minor correction by hand, as shown inFig. 19. This was the only frame that required suchmodification and this task is much quicker than generatingan entire hint image by hand. The intermediate frames werethen generated using both frame 1 and frame 21. Theseframes can be processed in parallel since there are nointerdependencies. Figures 20–22 show the results forframes 5, 10 and 15 of the sequence. Backlit alphaestimation was used for alpha estimation in this sequence,since the background is brighter than the foreground andbacklighting is present.

When the new segmented foreground is composited overa new background and the video sequence viewed, somesparkling can be observed: individual pixels appear anddisappear in subsequent frames causing a flickering effect atthe edges. This is partly due to the noise sensitive nature ofthe backlit alpha algorithm. There are also minor

Fig. 13 Detail showing incorrect Hint image for frame 2 ofTeddy sequence when estimated using optical flow

Fig. 14 Detail of incorrect hint image for Frame 15 ofDragonheart sequence generated using optical flow

IEE Proc.-Vis. Image Signal Process., Vol. 152, No. 4, August 2005394

Fig. 16 Results for frame 2 of Teddy sequence

a Hint image automatically generated from frame 1 for frame 2b New composite image

Fig. 17 Results for frame 3 of Teddy sequence

a Hint image automatically generated from frame 1 for frame 3b New composite image

Fig. 18 Results for frame 1 of Dragonheart sequence

a Hand-edited hint imageb New composite image

Fig. 15 Results of running single image algorithm on frame 1 of Teddy sequence

a Original imageb Alpha channelc Composite image

IEE Proc.-Vis. Image Signal Process., Vol. 152, No. 4, August 2005 395

artefacts visible around the horse’s saddle in the intermedi-ate frames. These could easily be corrected by a specialeffects artist.

6 Conclusions and future work

This paper has presented a new algorithm for imagesegmentation and estimation of alpha channels on images.The technique used requires some human interaction, butovercomes the limitations of bluescreen compositing,allowing segmentation of actors from images with more

normal backgrounds. An RMSE-based metric was devel-oped to compare this algorithm to other published methodsand commercially available packages. This metric suggeststhat our algorithm is generally superior to others. Qualitiveassessment also suggests that the results produced by oursystem are at least as good as other solutions, and in manycases are produced much faster.

A technique has also been presented that allows automaticgeneration of hint images of a multiframe image sequence.Using this algorithm, only a small number of hint imagesneed to be generated manually in order to segment an imagesequence, with only two of the 21 frames in the Dragonheart

Fig. 19 Results for frame 21 of Dragonheart sequence

a Hint image automatically generated from frame 1b This hint image hand modifiedc New composite image

Fig. 20 Results for frame 5 of Dragonheart sequence

a Hint image automatically built from frames 1 and 21 for frame 5b New composite image

Fig. 21 Results for frame 10 of Dragonheart sequence

a Hint image automatically built from frames 1 and 21 for frame 10b New composite image

Fig. 22 Results for frame 15 of Dragonheart sequence

a Hint image automatically built from frames 1 and 21 for frame 15b New composite image

IEE Proc.-Vis. Image Signal Process., Vol. 152, No. 4, August 2005396

sequence being created by hand. Since optical flow fails onthis sequence, the only alternative would be to create everyhint image manually. Our technique operates much fasterthan optical flow, since our algorithm is an order ofmagnitude faster and can be run in parallel. Thus, theremay be cases where optical flow succeeds but it is stillpreferable to use our approach. Therefore, it would be avaluable tool in semi-automatic image segmentation formotion picture special effects and post production.Future work will investigate the possibility of simul-

taneous classification of multiple frames in order to reducesparkling by enforcing temporal consistency of alpha values.

7 Acknowledgments

Peter Hillman was supported by EPSRC studentshipnumber 99303086 and Platform Grant GR=S06578=01The Rachael sequence was provided by the ComputerFilm Co., London.

8 References

1 Smith, A.R., and Blinn, J.F.: ‘Blue screen matting’. Proc. ACS Conf. onComputer Graphics, 1996, pp. 259–268

2 Vlahos, P.: ‘Electronic composite photography with colour control’.United States patent number 4,007,487, February 1977

3 Brinkmann, R.: ‘The art and science of digital compositing’ (MorganKaufmann, 1999)

4 Rosin, P.I., and Ellis, T.: ‘Image difference threshold strategiesand shadow detection’. Proc. 6th British Machine Vision Conf., 1995,pp. 347–356

5 Zongker, D.E., Werner, D.M., Curless, B., and Salesin, D.H.:‘Environment matting and compositing’. Proc. Conf. on ComputerGraphics, (SIGGRAPH), 1999, pp. 205–214

6 Hillman, P., Hannah, J., and Renshaw, D.: ‘Alpha estimation in highresolution images and image sequences’. IEEE Computer SocietyConf. on Computer Vision and Pattern Recognition (CVPR), 2001,Vol. 1, pp. 1063–1068

7 Hillman, P., Hannah, J., and Renshaw, D.: ‘Segmentation of motionpicture images’. IEE Int. Conf. on Visual Information Engineering(VIE), 2003, pp. 97–101

8 Berman, A., Dadourian, A., and Vlahos, P.: ‘Comprehensive method forremoving from an image the background surrounding a selected object’.United States patent number 6,134,345, October 17 2000

9 Chuang, Y.-Y., Brian, C., Salesin, D.H., and Szelsiki, R.: ‘A Bayesianapproach to digital matting’. IEEE Computer Society Conf. onComputer Vision and Pattern Recognition (CVPR), 2001, Vol. 2,pp. 264–271

10 Ruzon, M., and Tomasi, C.: ‘Alpha estimation in natural images’.Proc. IEEE Conf. on Computer Vision and Pattern Recognition, 2000,Vol. 1, pp. 18–25

11 Wexler, Y., Fitzgibbon, A., and Zisserman, A.: ‘Bayesian estimation oflayers from multiple images’. Proc. ECCV, 2002, Vol. 3, pp. 487–501

12 Ohta, Y.-I., Kanade, T., and Sakai, T.: ‘Color information for regionsegmentation’, Comput. Graph. Image Process., 1980, 13, pp. 222–241

13 Orchard, M., and Bouman, C.: ‘Color quantization of images’, IEEETrans. Signal Process., 1991, 12, pp. 2677–2690

14 Chuang, Y.-Y., Agarwala, A., Curless, B., Salesin, D.H., and Szeliski,R.: ‘Video matting of complex scenes’, ACM Trans. Graph., 2002, 21,pp. 243–248, Special Issue of the SIGGRAPH 2002 Proceedings

15 Black, M.J., and Anandan, P.: ‘A framework for the robust estimation ofoptical flow’. Proc. Fourth Int. Conf. on Computer Vision, 1993,pp. 231–236

16 Mitsunaga, T., Yokoyama, Y., and Totsuka, T.: ‘Autokey: humanassisted key extraction’. Proc. Conf. on Computer Graphics(SIGGRAPH), 1995, pp. 265–272

IEE Proc.-Vis. Image Signal Process., Vol. 152, No. 4, August 2005 397