
S-Caffe: Co-designing MPI Runtimes and Caffe for Scalable Deep Learning on Modern GPU Clusters

Ammar Ahmad Awan, Khaled Hamidouche, Jahanzeb Maqbool Hashmi, and Dhabaleswar K. Panda

Network-Based Computing Laboratory, Department of Computer Science and Engineering, The Ohio State University, Columbus, OH, U.S.A.

Outline

• Introduction
  – Deep Learning
  – Scale-up and Scale-out
  – CUDA-Aware MPI
• Research Challenges
• Proposed Co-Designs
• Performance Evaluation
• Conclusion and Future Work

PPoPP 2017

Deep Learning Resurgence

• Deep Learning is going through a resurgence
• Excellent accuracy for deep/convolutional neural networks
• Public availability of versatile datasets like MNIST, CIFAR, and ImageNet
• Widespread popularity of accelerators like Nvidia GPUs

Deep Learning - Google Trends

[Figure: Google Trends relative search interest for "Deep Learning", monthly from 2003-12 to 2016-06 (y-axis: Relative Search Interest)]

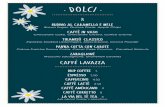

DL Frameworks and Trends

• Caffe, TensorFlow, CNTK, and many more
• Most frameworks are exploiting GPUs to accelerate training
• Diverse applications: Image Recognition, Cancer Detection, Self-Driving Cars, Speech Processing, etc.

https://www.top500.org/news/market-for-artificial-intelligence-projected-to-hit-36-billion-by-2025/

Deep Learning and GPUs

• Nvidia GPUs are the main driving force for faster training of DL models
  – The ImageNet Challenge (ILSVRC)
  – 90% of the ImageNet teams used GPUs in 2014*
  – DL models like AlexNet, GoogLeNet, and VGG are used
  – A natural fit for DL due to their throughput-oriented nature

*https://blogs.nvidia.com/blog/2014/09/07/imagenet/

Deep Learning, GPUs, and HPC

• In the HPC arena
  – 60/500 Top HPC systems use Nvidia GPUs
  – CUDA-Aware Message Passing Interface (MPI)
  – Nvidia Kepler and/or Pascal architecture
  – DGX-1 (a dedicated DL supercomputer)

Source: www.top500.org

Parallel Training: Scale-up and Scale-out

• Scale-up: Intra-node Performance
  – Many improvements like:
    • NVIDIA cuDNN, NVIDIA cuBLAS
    • NVIDIA NCCL and many more
• Scale-out: Inter-node Performance
  – DL frameworks: single-node only
  – Distributed (Parallel) Training: an emerging trend
    • S-Caffe or OSU-Caffe (this paper)
    • Microsoft CNTK (MPI-based)
    • Google TensorFlow (gRPC-based)

[Figure: Scale-up Performance (y-axis) vs. Scale-out Performance (x-axis) for cuDNN, cuBLAS, NCCL, gRPC, Hadoop, and MPI]

CUDA-Aware MPI (Before CUDA 5.0)

• Before CUDA 4.0, lack of a common memory registration mechanism
  – Each device has to pin the host memory
  – Many operating systems do not allow multiple devices to register the same memory pages
  – Previous solution: use a different buffer for each device and copy the data
• After CUDA 4.0, both devices register a common host buffer
  – GPU copies data to this buffer, and the network adapter can directly read from this buffer (or vice-versa)
  – Note that GPU-Direct does not allow you to bypass host memory

[Figure panels: Before CUDA 4.0; After CUDA 4.0]

https://devblogs.nvidia.com/parallelforall/introduction-cuda-aware-mpi/

CUDA-Aware MPI (After CUDA 5.0)

• After CUDA 5.0 (GDR), the network adapter can directly read/write data from/to GPU device memory
• Avoids copies through the host
• Fastest possible communication between GPU and IB HCA
• Allows for better asynchronous communication

[Figure: GPUDirect RDMA data path; components: GPU, GPU Mem., CPU, Chipset, System Memory, InfiniBand]
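The three data paths on these two slides can be summarized as a small model of the sender-side transfers needed before a GPU buffer reaches the wire. This is an illustrative sketch based on the slides and the cited NVIDIA post, not an API: `PATHS` and `host_copies` are hypothetical names. At the application level, the practical effect of a CUDA-aware MPI is that a device pointer can be passed straight to calls such as MPI_Send, and the library picks the path.

```python
from typing import Dict, List, Tuple

# (who performs the transfer, source, destination); an illustrative model only.
Step = Tuple[str, str, str]

PATHS: Dict[str, List[Step]] = {
    # Before CUDA 4.0: CUDA and the IB driver cannot pin the same pages,
    # so data is staged through two separate host buffers.
    "before_cuda4": [("GPU DMA", "GPU mem", "host buf (CUDA-pinned)"),
                     ("CPU memcpy", "host buf (CUDA-pinned)", "host buf (IB-registered)"),
                     ("HCA DMA", "host buf (IB-registered)", "network")],
    # CUDA 4.0+: a single host buffer registered by both drivers.
    "cuda4": [("GPU DMA", "GPU mem", "host buf (shared)"),
              ("HCA DMA", "host buf (shared)", "network")],
    # CUDA 5.0+ with GPUDirect RDMA (GDR): the HCA reads device memory directly.
    "cuda5_gdr": [("HCA DMA", "GPU mem", "network")],
}


def host_copies(mode: str) -> int:
    """Count the transfers whose destination is host memory (staging copies)."""
    return sum(1 for _, _, dst in PATHS[mode] if dst.startswith("host"))


for mode in PATHS:
    print(mode, host_copies(mode))
```

The count drops from two staging copies to one to zero, which is the "avoids copies through the host" point above.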

Outline

• Introduction
• Research Challenges
• Proposed Co-Designs
• Performance Evaluation
• Conclusion and Future Work

Main Idea

How to efficiently scale out a Deep Learning (DL) framework and take advantage of heterogeneous High Performance Computing (HPC) resources?

Research Challenges: Overview

• What are the fundamental bottlenecks in the existing DL frameworks?
  – Why do we need to support distributed training?
• How to design a Scalable and Distributed Framework?
  – Achieve both Scale-up and Scale-out efficiently?
• What are the new requirements and expectations for Communication Runtimes?
  – What are specific challenges for the MPI runtimes?
• Can a Co-design approach help in achieving better training performance?
  – Can a DL framework like Caffe be co-designed with an MPI runtime?
• What is the impact of the co-design?
  – What performance benefits can be observed? At what levels?

Research Challenges: Details

• What are the new requirements and expectations for Communication Runtimes?
  1. Very Large buffer sizes: order of Megabytes
  2. GPU-only buffers
  3. Large Broadcast: model parameters
  4. Large Reductions: gradients

http://arxiv.org/abs/1511.00175

Can we co-design MPI and Caffe?

• cuDNN, cuBLAS --> scale-up performance
• CUDA-Aware MPI --> scale-out performance
  – for small and medium message sizes only!
• Can we co-design the MPI runtime (MVAPICH2-GDR) and the DL framework (Caffe) to achieve both?
  – Efficient Overlap of Computation and Communication
  – Efficient Large-Message Communication (Reductions)
  – What application co-designs are needed to exploit communication-runtime co-designs?

[Figure: Scale-up Performance (y-axis) vs. Scale-out Performance (x-axis) for cuDNN, cuBLAS, NCCL, gRPC, Hadoop, and MPI, together with the Proposed Co-Designs]

Outline

• Introduction
• Research Challenges
• Proposed Co-Designs
  – Basic CUDA-Aware MPI Design (SC-B)
  – Overlapped Data Propagation (SC-OB)
  – Overlapped Gradient Aggregation (SC-OBR)
  – Hierarchical Reductions (HR)
• Performance Evaluation
• Conclusion and Future Work

Caffe Architecture

[Figure: Caffe's training Loop {} across GPU0, GPU1, GPU2, and GPU3, each holding layers L1..Ln with Params and Gradients. 1. Data Propagation: Bcast (GPU0) of packed_comm_buff; 2. Forward Backward Pass: F/B on every GPU; 3. Gradient Aggregation: Reduce (GPU0) of each packed_reduce_buff; then Apply Updates]

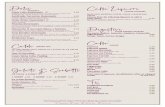
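The three coarse-grain phases in the figure can be written as one synchronous data-parallel step. The sketch below simulates the GPUs as Python lists; `bcast`, `reduce_sum`, `train_step`, and the averaged-gradient update are illustrative assumptions, not Caffe's solver code (Caffe's real update rule depends on the configured solver).

```python
from typing import Callable, List

Vector = List[float]


def bcast(root_buf: Vector, n_ranks: int) -> List[Vector]:
    # Phase 1 (Data Propagation): every simulated GPU gets a copy of the root's parameters.
    return [list(root_buf) for _ in range(n_ranks)]


def reduce_sum(bufs: List[Vector]) -> Vector:
    # Phase 3 (Gradient Aggregation): element-wise sum of all ranks' gradients at the root.
    return [sum(vals) for vals in zip(*bufs)]


def train_step(params: Vector, batches: List[Vector],
               grad_fn: Callable[[Vector, Vector], Vector], lr: float) -> Vector:
    n = len(batches)
    replicas = bcast(params, n)                                    # 1. Data Propagation
    grads = [grad_fn(replicas[r], batches[r]) for r in range(n)]  # 2. Forward/Backward
    total = reduce_sum(grads)                                      # 3. Gradient Aggregation
    # Apply Updates on the root with the averaged gradient (plain SGD, an assumption).
    return [p - lr * g / n for p, g in zip(params, total)]


# Toy gradient of 0.5 * ||w - x||^2 with respect to w.
def toy_grad(w: Vector, x: Vector) -> Vector:
    return [wi - xi for wi, xi in zip(w, x)]


print(train_step([0.0, 0.0], [[1.0, 2.0], [3.0, 4.0]], toy_grad, 1.0))
```

Each phase waits for the previous one to finish, which is exactly the blocking behavior the next slide sets out to break up.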

Proposed Co-Design Approach: Overview

• Caffe has coarse-grain phases: 1) Data Propagation, 2) Forward/Backward, 3) Gradient Aggregation
• Each phase blocks until the completion of the earlier phase
• For S-Caffe, we propose to exploit many fine-grain phases
• Some are blocking due to true data dependencies
• Most work in a non-blocking (overlapping) manner
• At the application (DL framework) level
  – Develop a fine-grain workflow, i.e. layer-wise communication instead of communicating the entire model
• At the runtime (MPI) level
  – Develop support to perform reduction of very large GPU buffers

Basic CUDA-Aware MPI Design (SC-B)

• Challenges in moving from a single-node Caffe to a multi-node S-Caffe design (SC-B)
  – Parallel Data Readers: Training Data/Images need to be read from disks
  – Explicit Communication: unlike pointer-based accesses in Caffe
    • e.g. parent -> gradient
  – Using Collectives instead of Point-to-point ops. for the Data Propagation Phase?
    • cudaMemcpy
    • MPI_Send/MPI_Recv
    • MPI_Bcast
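To see why a collective can beat point-to-point sends for data propagation, compare the number of communication rounds each needs. In the sketch below, a root issuing one send per peer takes P - 1 rounds, while a binomial tree, one classic MPI_Bcast algorithm, takes ceil(log2 P). The schedule functions are hypothetical helpers; real MPI libraries choose among several broadcast algorithms by message size and process count, and this counts latency rounds only, not bandwidth.

```python
from typing import List, Tuple

Round = List[Tuple[int, int]]  # (source rank, destination rank) pairs in one round


def linear_bcast_schedule(n_ranks: int, root: int = 0) -> List[Round]:
    # Point-to-point style: the root posts one send per peer, one after another.
    return [[(root, r)] for r in range(n_ranks) if r != root]


def binomial_bcast_schedule(n_ranks: int, root: int = 0) -> List[Round]:
    # Tree-based collective: every rank that already holds the data forwards it,
    # so the number of informed ranks doubles each round.
    rounds: List[Round] = []
    have = 1  # ranks (relative to root) 0..have-1 already hold the data
    while have < n_ranks:
        step: Round = []
        for rel_src in range(have):
            rel_dst = rel_src + have
            if rel_dst < n_ranks:
                step.append(((rel_src + root) % n_ranks, (rel_dst + root) % n_ranks))
        rounds.append(step)
        have *= 2
    return rounds


print(len(linear_bcast_schedule(16)), len(binomial_bcast_schedule(16)))
```

For 16 GPUs this gives 15 sequential sends versus 4 tree rounds.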

Optimized Data Propagation (SC-OB)

• Exploit Non-Blocking Collective (NBC) operations in MPI-3
  – Divide communication into fine-grain steps
    • Overlap computation of layer "i" with communication of layer "i+1"
    • MPI_Ibcast to post all communication in advance
    • Wait in an on-demand fashion
  – Allow for runtime selection of the data propagation design
    • Based on message (DL model) size, no. of GPUs, no. of nodes, etc.
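The post-everything-then-wait-on-demand pattern can be mimicked in plain Python. In this minimal sketch a thread pool stands in for MPI's progress engine, and `fake_ibcast` is a hypothetical stand-in for a per-layer MPI_Ibcast; in C one would keep one MPI_Request per layer and call MPI_Wait on it just before that layer's forward computation.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Dict, List


def fake_ibcast(layer: str, root_params: Dict[str, List[float]]) -> List[float]:
    # Hypothetical stand-in for a per-layer MPI_Ibcast: "receives" the root's parameters.
    return list(root_params[layer])


def forward_with_overlap(layers: List[str], root_params: Dict[str, List[float]]) -> List[str]:
    log: List[str] = []
    with ThreadPoolExecutor(max_workers=2) as pool:
        # Post every layer's broadcast up front (like issuing MPI_Ibcast for all layers).
        pending: Dict[str, Future] = {l: pool.submit(fake_ibcast, l, root_params) for l in layers}
        for layer in layers:
            # Wait only for this layer's parameters (like MPI_Wait on this layer's request);
            # broadcasts for later layers may still be in flight.
            params = pending[layer].result()
            log.append(f"{layer}:{sum(params)}")  # stand-in for layer i's forward computation
    return log


print(forward_with_overlap(["conv1", "fc1"], {"conv1": [1.0, 2.0], "fc1": [4.0]}))
```

The key design point is that waits follow the order of computation, so layer i can run as soon as its own parameters arrive instead of waiting for the whole model.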

Optimized Gradient Aggregation (SC-OBR)

• Exploit non-blocking MPI operations
  – Lack of an efficient MPI_Ireduce
    • No CUDA-Aware support!
    • Compute on CPU is slow!
• Adopt fine-grain techniques developed for SC-OB?
  – Doesn't work directly!
  – Co-design gradient aggregation at the application level
    • Helper thread based approach to realize a non-blocking MPI_Reduce
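A helper thread can turn a blocking reduction into an effectively non-blocking one: the backward pass hands each layer's gradient to a queue as soon as it exists, and the helper reduces layers one at a time. In this sketch `blocking_reduce` is a hypothetical stand-in for a blocking MPI_Reduce with MPI_SUM. A real MPI program that calls MPI from a helper thread must be initialized with MPI_Init_thread at a sufficient thread level (MPI_THREAD_MULTIPLE if the main thread also makes MPI calls).

```python
import queue
import threading
from typing import Dict, List


def blocking_reduce(per_rank: List[List[float]]) -> List[float]:
    # Hypothetical stand-in for a blocking MPI_Reduce(MPI_SUM) onto the root.
    return [sum(v) for v in zip(*per_rank)]


def backward_with_helper(layer_grads: Dict[str, List[List[float]]]) -> Dict[str, List[float]]:
    work: queue.Queue = queue.Queue()
    reduced: Dict[str, List[float]] = {}

    def helper() -> None:
        # Helper thread: take layers as they become ready and reduce them one by one.
        while True:
            item = work.get()
            if item is None:  # sentinel: backward pass finished
                break
            name, grads = item
            reduced[name] = blocking_reduce(grads)

    t = threading.Thread(target=helper)
    t.start()
    # Backward pass visits layers last-to-first; each gradient is handed off immediately,
    # so the main thread can move on while the helper reduces this layer.
    for name in reversed(list(layer_grads)):
        work.put((name, layer_grads[name]))
    work.put(None)
    t.join()  # all reductions must finish before updates are applied
    return reduced


print(backward_with_helper({"conv1": [[1.0], [2.0]], "fc1": [[3.0, 1.0], [4.0, 1.0]]}))
```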

Pipelined Hierarchical Reduction (HR)

• Can we do better?
  – True CUDA-Aware MPI_Reduce?
    • Doesn't exist in any MPI runtime!
    • Can we co-design for DL-specific requirements?
• Hierarchical Reduction (HR)
  – Efficient, pipelined, chunked chain algorithm
  – Perform reductions on the GPU
  – Exploit hierarchical communicators
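The pipelined, chunked chain idea can be simulated step by step. Ranks form a chain P-1 -> P-2 -> ... -> 0; the buffer is split into C chunks, and at each step every link forwards a different chunk, so all links are busy once the pipeline fills. This is a simulation of the general technique under our own assumptions (list indices as ranks, reduction done in Python rather than on the GPU), not the MVAPICH2-GDR implementation.

```python
from typing import List, Tuple


def chain_reduce(bufs: List[List[float]], n_chunks: int) -> Tuple[List[float], int]:
    """Pipelined chunked chain reduction toward rank 0; returns (result, steps)."""
    n_ranks, length = len(bufs), len(bufs[0])
    size = -(-length // n_chunks)  # ceiling division: elements per chunk
    bounds = [(c * size, min((c + 1) * size, length)) for c in range(n_chunks)]
    partial = [list(b) for b in bufs]  # each rank's running partial sums (inputs untouched)
    n_steps = n_chunks + n_ranks - 2 if n_ranks > 1 else 0
    for s in range(n_steps):
        # Link k carries data from rank k to rank k-1; chunk c enters the chain at
        # step c and needs one extra step per hop.
        for k in range(n_ranks - 1, 0, -1):
            c = s - (n_ranks - 1 - k)
            if 0 <= c < n_chunks:
                lo, hi = bounds[c]
                for i in range(lo, hi):
                    partial[k - 1][i] += partial[k][i]  # receiver adds incoming partial sum
    return partial[0], n_steps


bufs = [[1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0], [100.0, 200.0, 300.0, 400.0]]
print(chain_reduce(bufs, 2))
```

With C chunks the reduction takes C + P - 2 steps, each moving 1/C of the buffer, instead of P - 1 steps each moving the whole buffer; for large buffers and many chunks the cost approaches a single buffer transfer per link.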

Hierarchical Reduction: Details

• A new design for mapping processes onto GPUs
  – To better handle GPU topology and nodes with one or two GPUs
• Usually, MPI has two communicators
  – Intra-node Communicator
  – Inter-node Communicator
• We proposed and implemented a lower-level communicator
  – That may span multiple nodes
  – Enables the pipelined chain to span a larger number of GPUs
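A two-level reduction over such groups can be sketched as follows. The grouping mimics MPI_Comm_split with color = rank // group_size, so consecutive ranks form a lower-level group that may cross node boundaries (a node-local communicator would instead come from MPI_Comm_split_type with MPI_COMM_TYPE_SHARED). `group_size`, `split`, and `hierarchical_reduce` are hypothetical names, and each level here is a plain sum where HR would run its pipelined chain.

```python
from typing import Dict, List


def split(ranks: List[int], group_size: int) -> Dict[int, List[int]]:
    # Mimics MPI_Comm_split(comm, color=rank // group_size, key=rank, ...).
    groups: Dict[int, List[int]] = {}
    for r in ranks:
        groups.setdefault(r // group_size, []).append(r)
    return groups


def hierarchical_reduce(bufs: List[List[float]], group_size: int) -> List[float]:
    groups = split(list(range(len(bufs))), group_size)
    # Level 1: reduce inside each group onto its leader (the lowest rank in the group).
    leader_sums = {members[0]: [sum(v) for v in zip(*(bufs[r] for r in members))]
                   for members in groups.values()}
    # Level 2: reduce across the group leaders onto global rank 0.
    return [sum(v) for v in zip(*leader_sums.values())]


print(split(list(range(6)), 4))
print(hierarchical_reduce([[float(r)] for r in range(6)], 4))
```

Making the group size a free parameter, rather than tying it to the number of GPUs per node, is what lets a chain cover more GPUs on nodes that only have one or two.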

Overview of MVAPICH2 Project

High Performance open-source MPI Library for InfiniBand, Omni-Path, Ethernet/iWARP, and RoCE (http://mvapich.cse.ohio-state.edu)

– MVAPICH (MPI-1), MVAPICH2 (MPI-2.2 and MPI-3.0), Started in 2001, First version available in 2002
– MVAPICH2-X (MPI + PGAS), Available since 2011
– Support for GPGPUs (MVAPICH2-GDR) and MIC (MVAPICH2-MIC), Available since 2014
– Support for Virtualization (MVAPICH2-Virt), Available since 2015
– Support for Energy-Awareness (MVAPICH2-EA), Available since 2015
– Support for InfiniBand Network Analysis and Monitoring (OSU INAM) since 2015
– Used by more than 2,725 organizations in 83 countries
– More than 408,000 (> 0.4 million) downloads from the OSU site directly
– Empowering many TOP500 clusters (Nov '16 ranking)
  • 1st, 10,649,600-core (Sunway TaihuLight) at National Supercomputing Center in Wuxi, China
  • 13th, 241,108-core (Pleiades) at NASA
  • 17th, 462,462-core (Stampede) at TACC
  • 40th, 74,520-core (Tsubame 2.5) at Tokyo Institute of Technology
– Available with software stacks of many vendors and Linux Distros (RedHat and SuSE)
– Empowering Top500 systems for over a decade
  • System-X from Virginia Tech (3rd in Nov 2003, 2,200 processors, 12.25 TFlops) -> Stampede at TACC (17th in Nov '16, 462,462 cores, 5.168 PFlops)

Outline

• Introduction
• Research Challenges
• Proposed Co-Designs
• Performance Evaluation
  – GoogLeNet, CIFAR10
  – Comparison with Microsoft CNTK and Inspur-Caffe
  – Hierarchical Reductions
• Conclusion and Future Work

Performance Evaluation (1/2)

• Cluster-A
  – Cray CS-Storm based GPU cluster called KESCH, located at the Swiss National Supercomputing Center
  – Multi-GPU dense cluster:
    • 12 hybrid nodes
    • 8 NVIDIA K-80 (GK210GL) cards/node
  – 4 K-80 cards are connected per socket
  – 16 CUDA devices (or GPUs) in one node (each K-80 card carries two GK210 GPUs)
  – Connect-IB FDR Interconnect

Performance Evaluation (2/2)

• Cluster-B
  • Cluster at OSU with Intel Xeon (Broadwell) processors
  • IB EDR Interconnect
  • 20 nodes
  • 1 K-80 GPU per node

1. Evaluation of Hierarchical Reduce (HR)
  – Used osu_reduce from the OSU Micro-Benchmarks (OMB) suite
2. Evaluation of S-Caffe
  – Application-level: CIFAR10 and GoogLeNet
  – Comparison: Microsoft CNTK, Inspur-Caffe, and S-Caffe
  – Effect of HR on performance of S-Caffe

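Hierarchical Reduce (160 GPUs)

[Figure: Hierarchical Reduce on 160 GPUs; annotated improvement: 2.5x]

Hierarchical Reduce (160 GPUs)

[Figure: Hierarchical Reduce on 160 GPUs; annotated improvements: >100x and 2.5x]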

S-Caffe: CIFAR10 (64 GPUs)

• CIFAR10 is a good example for medium-sized models
  – Model size is not very big!
  – Achieves good scalability
  – Despite the small compute requirements, an increased batch size can be used to demonstrate scale-out
• S-Caffe: for a single node, better or comparable performance
• S-Caffe allows multi-node training! (33x improvement over single-GPU training)
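A quick way to read the 33x figure is as a parallel efficiency on 64 GPUs. The sketch below assumes the 33x is a plain speedup ratio, and that, as the slide notes, the global batch grows with the GPU count (a weak-scaling setup); the per-GPU batch of 100 is a hypothetical value, not one reported here.

```python
def parallel_efficiency(speedup: float, n_gpus: int) -> float:
    # Fraction of ideal linear speedup achieved.
    return speedup / n_gpus


def global_batch(per_gpu_batch: int, n_gpus: int) -> int:
    # Weak scaling: each GPU keeps its own batch size, so the global batch grows.
    return per_gpu_batch * n_gpus


print(parallel_efficiency(33, 64))   # roughly half of linear scaling
print(global_batch(100, 64))         # hypothetical per-GPU batch of 100
```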

Comparison (AlexNet): S-Caffe, Inspur-Caffe, and CNTK

• AlexNet: the benchmark network for Image recognition challenges
  – Notoriously hard to scale out on multiple nodes
  – Main reason: increasing communication overhead!
• Large number of parameters: ~64 Million
• Buffer Size: ~256 MB
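The buffer size follows directly from the parameter count if each parameter (and each gradient entry) is stored as a 4-byte FP32 value, which is the assumption in this sketch; `buffer_mb` is a hypothetical helper and 1 MB is taken as 10^6 bytes.

```python
def buffer_mb(n_params: int, bytes_per_param: int = 4) -> float:
    # FP32 parameters/gradients take 4 bytes each; 1 MB = 10**6 bytes here.
    return n_params * bytes_per_param / 1_000_000


print(buffer_mb(64_000_000))  # ~64 million parameters -> ~256 MB
```

The same buffer must be broadcast (parameters) and reduced (gradients) every iteration, which is why AlexNet stresses large-message collectives.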

S-Caffe: GoogLeNet (160 GPUs)

• GoogLeNet is another popular DL network
• 13 million parameters
• Comm. Buffer > 50 MB
• S-Caffe provides scale-out to 160 GPUs!!

[Figure annotations: Up to 14% improvement (Scale-up); Impact of HR]
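A rough traffic count shows why the reduction algorithm matters at this scale. Assuming FP32 (13 million parameters, about 52 MB) and, for contrast, a hypothetical flat scheme in which every GPU sends its whole gradient buffer straight to the root, the root must absorb (P - 1) buffers per iteration; in a chain, each GPU receives the buffer only once (in chunks). This is a back-of-the-envelope model that ignores latency and link bandwidth, not the paper's performance model.

```python
def max_inbound_mb(n_gpus: int, buf_mb: float, scheme: str) -> float:
    """Largest amount of data any single GPU receives during one reduction."""
    if n_gpus < 2:
        return 0.0
    if scheme == "flat":   # hypothetical: everyone sends directly to the root
        return (n_gpus - 1) * buf_mb
    if scheme == "chain":  # each rank receives the full buffer once, chunk by chunk
        return buf_mb
    raise ValueError(f"unknown scheme: {scheme}")


buf = 13_000_000 * 4 / 1_000_000  # GoogLeNet gradients in FP32, about 52 MB
print(max_inbound_mb(160, buf, "flat"), max_inbound_mb(160, buf, "chain"))
```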

Outline

• Introduction
• Research Challenges
• Proposed Co-Designs
• Performance Evaluation
• Conclusion and Future Work

Conclusion

• S-Caffe: rigorous co-designs at the application level as well as the runtime level
  – Provides scale-out up to 160 GPUs
  – Exploits CUDA-Aware communication including reductions
  – Achieves speed-ups better than the other distributed frameworks evaluated (Microsoft CNTK and Inspur-Caffe)
• Our work is a fundamental study
• Analyzes the growth of DL frameworks and its impact on comm. runtimes
• Identifies challenges and opportunities for MPI runtimes that deal with next-generation DL frameworks
• Presents co-designs that can be applied/extended to other DL frameworks for efficient distributed training

All designs are publicly available.
http://hidl.cse.ohio-state.edu
http://mvapich.cse.ohio-state.edu/userguide/gdr/2.2/

Thank You!

Ammar Ahmad Awan, Khaled Hamidouche, Jahanzeb Maqbool Hashmi, and Dhabaleswar K. Panda

{awan.10,hamidouche.2,hashmi.29,panda.2}@osu.edu

Network-Based Computing Laboratory: http://nowlab.cse.ohio-state.edu/

HiDL Web Page: http://hidl.cse.ohio-state.edu

MVAPICH Web Page: http://mvapich.cse.ohio-state.edu/