
Behavior Research Methods, Instruments, & Computers 2003, 35 (3), 391-399

In observational research, reliability of data refers to the degree of agreement between sets of observational data collected independently from the same scene by two different observers (interobserver agreement) or by the same observer at different times in the data collection process (intraobserver agreement). Reliable data are a first prerequisite for answering research questions. It is important to determine whether data sets that are collected by different observers or at different times differ so little that one can safely assume that they are equally valid for analysis. Reliability analysis is also used to train new observers in coding schemes and observational data collection, for example, by testing their data against a reference data set, both collected from the same video recording.

Various quantitative measures have been used by researchers for the assessment of the degree of agreement between observers. The most commonly used measures for the consistency of data sets are Cohen's kappa (Cohen, 1960) for nominal data; Pearson's product moment correlation coefficient, commonly known as Pearson's r (Bravais, 1846; Galton, 1888; Pearson, 1920), for interval data; and percentage of agreement and index of concordance when there is no measurement scale. The latter two are merely different expressions of the same quantity. We will not discuss the computational formulas for these measures; we assume that the readers are familiar with them. If not, please refer to statistical textbooks or the Internet.

Cohen's kappa is, in general, the measure of choice (Hollenbeck, 1978). Percentage of agreement does not take into account the rate of chance agreement: the base level of agreement under the assumption that each instance of behavior was linked at random to a behavioral category (throughout this paper, we will use behavioral category instead of behavioral element, which is less generic). This is especially important if one has few behavioral categories, because the fewer the categories, the bigger the chance that two raters will pick the same category, even if they pick the categories randomly (Cohen, 1960).
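As a minimal sketch of the difference between the two measures, the following Python fragment computes percentage of agreement and Cohen's kappa for the seven cases listed in Table 1. The function names are illustrative only and are not part of any software package discussed in this paper.

```python
from collections import Counter

def percentage_agreement(labels1, labels2):
    """Proportion of cases to which both observers assigned the same category."""
    matches = sum(a == b for a, b in zip(labels1, labels2))
    return matches / len(labels1)

def cohens_kappa(labels1, labels2):
    """Cohen's (1960) kappa: observed agreement corrected for chance agreement."""
    n = len(labels1)
    p_observed = percentage_agreement(labels1, labels2)
    counts1, counts2 = Counter(labels1), Counter(labels2)
    # Chance agreement: product of both observers' marginal proportions,
    # summed over all categories.
    p_chance = sum(counts1[c] * counts2[c] for c in counts1) / (n * n)
    # Note: undefined (division by zero) when p_chance equals 1.
    return (p_observed - p_chance) / (1 - p_chance)

# Labels assigned to the seven cases of Table 1.
observer1 = list("ACDACBD")
observer2 = list("ABDCCBD")

print(round(percentage_agreement(observer1, observer2), 3))  # 0.714
print(round(cohens_kappa(observer1, observer2), 3))          # 0.622
```

With only four categories, chance agreement for these data is 12/49 (about 0.24), which is why kappa is noticeably lower than the raw percentage of agreement.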

Pearson's correlation applies only to interval data, for which (formally) normality is assumed. However, an ethogram can generate nominal data as well as interval data, as we will show later. Note that one preserves more information when nominal data are generated than when interval data are generated.

Many programs have been developed that are capable of computing quantitative reliability measures. In fact, there are too many to mention, so we will mention just some of the authors, in chronological order, who have developed programs that are more or less specific to observational data: Watkins and Larimer, 1980; Burns and Cavallaro, 1982; Bloor, 1983; Chan, 1987; Repp, Harman, Felce, van Acker, and Karsh, 1989; Strube, 1989; Valiquette, Lesage, Cyr, and Toupin, 1994; Robinson and Bakeman, 1998. What these programs have in common is that confusion matrices (also known as association matrices or correlation matrices) are used as the starting point for the computations. Confusion matrices are contingency tables in which agreements and disagreements between two observers in the assessment of instances of behavior have been tallied. However, as we will demonstrate below, computing a reliability measure is not really a problem once you have the correct formula; transforming the observational data into a confusion matrix is the real issue.

Copyright 2003 Psychonomic Society, Inc.

The authors thank R. H. Geuze (University of Groningen, Groningen, The Netherlands) for his valuable input to and constructive comments on this paper. Correspondence should be addressed to R. G. Jansen, Noldus Information Technology bv, Costerweg 5, P.O. Box 268, 6700 AG Wageningen, The Netherlands (e-mail: rjansennoldusnl).

Note: The authors have a commercial interest in the software described in this paper.

Reliability analysis of observational data: Problems, solutions, and software implementation

ROLAND G. JANSEN, LEON F. WIERTZ, ERIK S. MEYER, and LUCAS P. J. J. NOLDUS
Noldus Information Technology, Wageningen, The Netherlands

In the reliability analysis literature, little attention has been given to the various possible ways of creating a basis for the comparison required to compute observer agreement. One needs this comparison to turn a sequential list of behavioral records into a confusion matrix. It is shown that the way to do this depends on the research question one needs to answer. Four methods for creating a basis for comparison for the computation of observer agreement in observational data are presented. Guidelines are given for computing observer agreement in a way that fits one's goals. Finally, we discuss how these methods have been implemented in The Observer software. The Observer 5.0 supports all the methods that have been discussed. Most of these methods are not present in any other software package.

392 JANSEN, WIERTZ, MEYER, & NOLDUS

Some literature has addressed specific problems that arise in determining observer agreement because of the time-structured and multivariate nature of the observational data (Haccou & Meelis, 1992; Hollenbeck, 1978; MacLean, Tapp, & Johnson, 1985; Repp et al., 1989; Suen & Ary, 1989; Thomann, 2001). However, no paper has listed all the problems or provided a full and thorough solution to the problems addressed.

In this paper, we will discuss some methodological problems related to the assessment of observer agreement on observational data, how these can be solved, and how these solutions have been implemented in The Observer software. The Observer is a software package for the collection, management, analysis, and presentation of observational data (Noldus, 1991; Noldus, Trienes, Hendriksen, Jansen, & Jansen, 2000). One can use it to record activities, postures, movements, positions, facial expressions, social interactions, or any other aspect of human or animal behavior.

Version 5.0, the latest release at the time of writing, contains a new reliability analysis module with several new functions that will be discussed below. Note that, in this paper, observer agreement will be used to denote both interobserver and intraobserver agreement.

COMPUTING OBSERVER AGREEMENT FOR OBSERVATIONAL DATA

The following three problems with respect to the computation of observer agreement for observational data will be discussed below: what cases should be compared, how to analyze at the right level of precision, and how to determine for which behavioral categories observers agreed the least.

What Cases Should be Compared?

Behavioral instances versus behavioral categories. In general, all measures that are used to assess observer agreement on observational data have been developed to assess the degree of correlation between two independent measurements of a number of independent cases. Cohen's kappa, for instance, was originally developed to assess the degree of agreement between two medical professionals in the diagnosis of the conditions from which patients suffer (Cohen, 1960).

In Cohen's (1960) original setting, each patient represented a case, and each medical condition was a value to be assigned to a case. However, in observational research, it is not clearly defined what the cases and the possible values are. Consequently, one cannot readily interpret any kappa value (or other reliability measure) as long as one does not know exactly which data were used to compute kappa.

We propose two approaches to identifying cases. (1) A case is represented by an instance of behavior, and the behavioral categories represent the possible values (i.e., measurement is done on a nominal scale). One then approaches the process of recording behavior as labeling instances of behavior, that is, linking a behavioral category to each instance of behavior (Table 1). The order of cases equals the chronology of the observed process. (2) A case is represented by a behavioral category, and the sum of the behavioral instances for each behavioral category within the data set represents the values (i.e., measurement is done on an interval scale). The behavior

Table 1
Assigning a Nominal Value or Label (A to D) to Instances of Behavior (Cases)

Case   Observer 1   Observer 2
1      A            A
2      C            B
3      D            D
4      A            C
5      C            C
6      B            B
7      D            D

Table 2
Tallying Instances of Behavior for Each Nominal Category (Case), Thus Creating Interval Data

       Sum of Behavioral Instances
Case   Observer 1   Observer 2
A      2            1
B      1            2
C      2            2
D      2            2

Table 3
Frequency-Based Comparison: Each Behavior Scored Equals a Case

Case   Observer 1   Observer 2
1      A            A
2      C            B
3      D            D
4      A            C
5      C            C
6      B            B
7      D            D

Table 4
Duration-Based Comparison: Each Unit of Time Equals a Case

Case   Observer 1   Observer 2
1      A            A
2      C            A
3      C            B
4      D            D
5      D            C
6      A            C
7      C            C
8      C            C
9      C            C
10     B            C
11     D            B
12     D            D

RELIABILITY ANALYSIS WITH THE OBSERVER 393

recording process is regarded as tallying instances of behavior for a number of behavioral categories (Table 2). The chronology of the observed process cannot be deduced from this table, since there is no temporal order in the list of cases.

In theory, these different approaches could be reflected by different data-logging methods. It is clear that Table 1 contains nominal data, whereas Table 2 contains interval data. However, there have been two developments in the past 15 years that have caused a high degree of standardization in data logging. First of all, the introduction of computer-aided recording of behavior has resulted in a major shift toward logging behaviors as nominal data, even if logging them as interval data would have sufficed to answer the research questions. This is because the computer can record the time stamp automatically and can easily turn the sequential data of the kind presented in Table 1 into the contingency table presented in Table 2. In other words, one can easily transform nominal data into interval data, but not the other way round, so it is always safer to log behaviors as nominal data. Second, thanks to the increased availability and ease of use of applications that allow the coding of video at single-frame accuracy (Noldus et al., 2000), researchers more and more use continuous sampling instead of time sampling (interval sampling). The philosophy behind this development is simple: One gets more information with hardly any extra effort. In continuous sampling, instances of observational data are recorded continuously, which results in the recording of the same instance of behavior at different times for different observers. This makes it difficult to tell which behavioral records from the observers refer to the same instance of behavior, which is no problem at all when time sampling is used. Moreover, since observers may overlook behaviors, accidentally enter a behavior, or record a behavior that they think they saw but that did not occur, just determining the number of instances of behavior is difficult. Because researchers may use continuous sampling when interval sampling, or even old-fashioned tallying of behaviors per category, would have sufficed, one cannot decide upon an approach to reliability analysis on the basis of the data recording method only, and one must take research goals into consideration.
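The one-way transformation from nominal to interval data can be illustrated with a short sketch. The helper name `tally` is hypothetical; the input is the sequence of labels from Table 1, and the output corresponds to the sums in Table 2.

```python
from collections import Counter

def tally(labels, categories):
    """Count how often each behavioral category was scored (nominal -> interval)."""
    counts = Counter(labels)
    return {category: counts[category] for category in categories}

categories = "ABCD"
observer1 = list("ACDACBD")  # Table 1, Observer 1
observer2 = list("ABDCCBD")  # Table 1, Observer 2

print(tally(observer1, categories))  # {'A': 2, 'B': 1, 'C': 2, 'D': 2}
print(tally(observer2, categories))  # {'A': 1, 'B': 2, 'C': 2, 'D': 2}
```

The reverse step is not possible: the tallies no longer contain the order in which the behaviors were scored.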

One chooses the labeling instances of behavior approach if the focus is on qualitative analysis of data, in other words, if it is important that the observers agree on the assessment of each specific instance of behavior (e.g., because one is interested in the temporal and sequential analysis of the data). For instance, in usability studies, the human observer may need to know the exact sequence of events that causes the test participant to make an error in the operation of the computer interface that is being studied. Or, in the study of mother-child interactions, one may be interested in the presence and significance of particular sequential patterns of behaviors, and one thus needs an accurate registration of the sequence of events.

In contrast, the tallying instances of behavior approach is simpler to apply and does not require linking of specific behavioral records. One chooses this approach if the focus is on quantitative analysis of data, in other words, if it is important that the observers agree on the number of behavioral instances for each behavioral category, and the timing and sequence of behavioral instances are not of interest. For instance, if one needs to answer a research question such as "Is there more shouting in the classroom during biology class than during math class?" it is not important who does the shouting, when during the course of the class the shouting is done, or what else is happening in the classroom. Just the count of instances of shouting matters.

Table 5
Example of Two Streams of Data From the Same Scene Recorded by Different Observers

Observer 1          Observer 2
Time   Behavior     Time   Behavior
0      walk         0      walk
3      jog          5      run
8      hold         8      hold
10     walk         12     run
13     run          16     walk
17     end          17     end

Table 6
Tallying Instances of Behavior With a Duration-Based Comparison

Case   Observer 1   Observer 2
Jog    5            0
Walk   6            6
Run    4            7
Hold   2            4

Table 7
Confusion Matrix Resulting From the Data of Table 6

                Observer 1
Observer 2      Jog   Walk   Run   Hold   Disagreement   Total
Jog             0     -      -     -      0              0
Walk            -     6      -     -      0              6
Run             -     -      4     -      3              7
Hold            -     -      -     2      2              4
Disagreement    5     0      0     0      -              5
Total           5     6      4     2      5              22


Both approaches to reliability analysis have been discussed briefly in the literature. For example, Johnson and Bolstad (1973) noted that when you compute observer agreement on the basis of the sum of the instances of behavior per behavioral category per data set, high observer agreement might be misleading, because this agreement may have been based on different instances of behavior. This is indeed true when one is interested in the evaluation of sequence, timing, or duration of the recorded behaviors. We, however, want to point out that if, for analysis, one is not interested in the sequence, timing, and duration of instances of behaviors, but only in overall statistics (count, total duration, or mean duration) for the complete observation, this method is a valid and preferable alternative, because it better fits the analysis goal. Still, it is important that one should always look at the marginal totals in the confusion matrix, and not just at the correlation coefficient, because Pearson's r remains unaffected if one observer consistently overestimates the number of instances of behavior for each behavioral category by a certain factor. For instance, if one observer has exactly twice as many tallies for each behavioral category as a second observer, Pearson's r still equals 1, even though the two observers' counts clearly differ.
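The insensitivity of Pearson's r to a constant overestimation factor can be checked with a few lines of Python. The tallies below are hypothetical and serve only to illustrate the point; the function is a plain textbook implementation of the product moment correlation.

```python
import math

def pearson_r(x, y):
    """Pearson's product moment correlation coefficient."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return covariance / math.sqrt(ss_x * ss_y)

# Hypothetical tallies for four behavioral categories:
# Observer 1 scored exactly twice as many instances as Observer 2.
tallies_observer1 = [4, 2, 6, 8]
tallies_observer2 = [2, 1, 3, 4]

print(pearson_r(tallies_observer1, tallies_observer2))   # 1.0
print(sum(tallies_observer1), sum(tallies_observer2))    # 20 10
```

The correlation is perfect, but the marginal totals (20 versus 10) immediately reveal the discrepancy, which is why the totals should be inspected alongside r.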

Frequencies of behavioral instances versus durations of behavioral instances. Until now, we have used the phrase instance of behavior. However, we have not defined it exactly, because we felt that there were two valid definitions here too, depending on one's research questions. One could simply state that an instance of behavior is the occurrence of (the onset of) a behavioral event (to be referred to as the frequency-based approach; see Table 3), or one could state that it is a unit of time (e.g., 1 sec) for which behavior must be recorded (to be referred to as the duration-based approach; Table 4).

The interpretation of the phrase instance of behavior has a big impact on the way one does reliability analysis. If one defines an instance of behavior as a behavioral event, one has far fewer cases for comparison. Using unit of time as one's definition of an instance of behavior is actually a way to take the duration of the behavioral events into account for reliability analysis, because behavioral events that last longer will result in more instances of behavior and will have more impact on the reliability scores.

The frequency-based and duration-based approaches have been discussed in the literature. Hollenbeck (1978) wrote that for duration-based comparison (or second-by-second comparison, as he named it), it is arguable whether observers actually make a decision about a behavior continuously or only as a change in behavior occurs. MacLean et al. (1985) did not engage in much discussion on this. They simply stated that for event data without duration information, one should choose the frequency-based approach, and for event data with duration information, one should choose the duration-based approach.

In our opinion, the authors of both papers passed over what reliability analysis is all about: verifying whether one's data can be trusted to give a fair answer to the research questions. So, by definition, how one does reliability analysis depends on what research questions one needs to answer eventually. If the question is "Do people eat more cookies when they eat together than when they are alone?" then it is clearly related to frequencies of behavior, and consequently, a frequency-based approach to reliability analysis should be chosen. If, however, the question is "Do people spend a longer time eating when they are together than when they are alone?" then it is related to the durations of behavior, and a duration-based approach to reliability analysis should be chosen. Note that if one needs to answer both research questions, one should do two separate reliability analyses in support of the answers.

Four methods for identifying cases for comparison. The approaches to the problem of identifying the cases for comparison described in the previous two sections result in four methods for identifying cases for comparison.

Tallying instances of behavior with a duration-based comparison (Tables 5, 6, and 7). For each behavioral category, the total duration of instances is computed. If a study were designed to answer a question like "Does the object of study walk for a higher percentage of time in the first situation than in the second?" then this would be

Table 8
Tallying Instances of Behavior With a Frequency-Based Comparison

Case   Observer 1   Observer 2
Jog    1            0
Walk   2            2
Run    1            2
Hold   1            1

Table 9
Confusion Matrix Resulting From the Data of Table 8

                Observer 1
Observer 2      Jog   Walk   Run   Hold   Disagreement   Total
Jog             0     -      -     -      0              0
Walk            -     2      -     -      0              2
Run             -     -      1     -      1              2
Hold            -     -      -     1      0              1
Disagreement    1     0      0     0      -              1
Total           1     2      1     1      1              6


the comparison method of choice, because the researchers need to verify only whether the observers were consistent in measuring the percentage of time walking.

Tallying instances of behaviors with a frequency-based comparison (Tables 5, 8, and 9). For each behavioral category, the number of instances is counted. If a study were designed to answer a question like "Does walk occur more often in the first situation than in the second?" then this would be the comparison method of choice, because the researchers need to verify only whether the observers were consistent in counting the bouts of walking. Most often, this approach is related to the study of point events.
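The two tallying methods can be sketched in Python for the data of Table 5. The function names are hypothetical, and the rule used to split each tally into agreement and disagreement (the smaller of the two tallies counts as agreement, the excess as disagreement) is our reading of the values in Tables 7 and 9, not a documented formula.

```python
from collections import Counter

# Table 5: (time, behavior) records; "end" only closes the last state.
observer1 = [(0, "walk"), (3, "jog"), (8, "hold"), (10, "walk"), (13, "run"), (17, "end")]
observer2 = [(0, "walk"), (5, "run"), (8, "hold"), (12, "run"), (16, "walk"), (17, "end")]
categories = ["jog", "walk", "run", "hold"]

def total_durations(records):
    """Duration-based tally: total time per category (Table 6)."""
    totals = Counter()
    for (start, code), (stop, _) in zip(records, records[1:]):
        totals[code] += stop - start
    return totals

def frequencies(records):
    """Frequency-based tally: number of bouts per category (Table 8)."""
    return Counter(code for _, code in records if code != "end")

def tally_agreement(tally1, tally2):
    """Per category: (agreement, excess of Observer 1, excess of Observer 2)."""
    result = {}
    for category in categories:
        agreed = min(tally1[category], tally2[category])
        result[category] = (agreed, tally1[category] - agreed, tally2[category] - agreed)
    return result

print(tally_agreement(total_durations(observer1), total_durations(observer2)))
# {'jog': (0, 5, 0), 'walk': (6, 0, 0), 'run': (4, 0, 3), 'hold': (2, 0, 2)}
print(tally_agreement(frequencies(observer1), frequencies(observer2)))
# {'jog': (0, 1, 0), 'walk': (2, 0, 0), 'run': (1, 0, 1), 'hold': (1, 0, 0)}
```

The first element of each triple corresponds to the diagonal of Tables 7 and 9; the second and third correspond to the Disagreement row and column, respectively.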

Labeling instances of behavior with a duration-based comparison (Tables 5, 10, and 11). Behavioral instances are compared on a time-by-time basis and are linked directly to the instance recorded by the other observer at the same time. If a study were designed to answer a question like "Does the object of study talk more while walking when he or she is in the first situation than when he or she is in the second?" then this would be the right comparison method, because the researchers need to be sure that the timing of walk and talk bouts was accurate in order to compute the amount of time for which those behaviors occurred at the same time. This would also be the method of choice if the researchers wanted to know whether longer periods of hold occurred after jog bouts than after walk bouts, because the researchers then would need to verify whether the sequence and duration of walk, jog, and hold bouts were recorded accurately.
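A minimal sketch of this method, assuming a time unit of 1 sec and the data of Table 5: each record is expanded into one label per second, and the two label sequences are then cross-tabulated. The helper names are illustrative.

```python
from collections import Counter

observer1 = [(0, "walk"), (3, "jog"), (8, "hold"), (10, "walk"), (13, "run"), (17, "end")]
observer2 = [(0, "walk"), (5, "run"), (8, "hold"), (12, "run"), (16, "walk"), (17, "end")]

def per_second(records):
    """Expand (time, behavior) records into one label per second (Table 10)."""
    labels = []
    for (start, code), (stop, _) in zip(records, records[1:]):
        labels.extend([code] * (stop - start))
    return labels

def confusion_matrix(labels1, labels2):
    """Cell counts keyed by (Observer 2 row, Observer 1 column), as in Table 11."""
    return Counter(zip(labels2, labels1))

matrix = confusion_matrix(per_second(observer1), per_second(observer2))
print(matrix[("walk", "walk")])  # 3
print(matrix[("run", "jog")])    # 3
print(matrix[("hold", "walk")])  # 2
print(sum(matrix.values()))      # 17
```

Because every second is a case, a long bout contributes more cells than a short one, which is exactly how duration enters the reliability score.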

Labeling instances of behavior with a frequency-based comparison (Tables 5, 12, and 13). Each instance of behavior is linked to an instance of behavior recorded by the other observer. Which other instance an instance of behavior is linked to depends on its behavioral category, timing, and sequence. If researchers want to know the answer to a question like "Is there a particular pattern that often precedes a run bout?" then this will be the method of choice, because answering this question requires an accurate registration of the sequence of instances of behavior.

Again, note that when researchers need to answer different questions, they may need to carry out separate reliability analyses and use multiple comparison methods.

Table 5 presents an example to which these methods have been applied (shown in Tables 6-13). In contrast to the previous examples, time stamps have been added to instances of behaviors to illustrate additional problems that are specific to continuously recorded data. Furthermore, the confusion matrices are shown. This illustrates that the resulting confusion matrices, which are used as the basis for computation of reliability measures, are different, depending on the comparison method.

Note that labeling instances of behavior with a frequency-based comparison (Table 12) is not as straightforward as it may sound. For instance, do jog (time = 3) and run (time = 5) refer to the same behavioral event in the real world, or did both observers miss out on a behavioral event? Or, more generally, when should two behavioral records be considered to refer to the same instance of behavior in the real world, and when should they be considered to refer to separate instances of behavior?

This difficulty can be countered by applying a tolerance window that sets the limits for how far off the timing of behavioral records in either data set is allowed to

Table 10
Labeling Instances of Behavior With a Duration-Based Comparison

       Observer 1          Observer 2
Case   Time   Behavior     Time   Behavior
1      0      walk         0      walk
2      1      walk         1      walk
3      2      walk         2      walk
4      3      jog          3      walk
5      4      jog          4      walk
6      5      jog          5      run
7      6      jog          6      run
8      7      jog          7      run
9      8      hold         8      hold
10     9      hold         9      hold
11     10     walk         10     hold
12     11     walk         11     hold
13     12     walk         12     run
14     13     run          13     run
15     14     run          14     run
16     15     run          15     run
17     16     run          16     walk

Table 11
Confusion Matrix Resulting From the Data of Table 10

             Observer 1
Observer 2   Jog   Walk   Run   Hold   Total
Jog          0     0      0     0      0
Walk         2     3      1     0      6
Run          3     1      3     0      7
Hold         0     2      0     2      4
Total        5     6      4     2      17

Table 12
Labeling Instances of Behavior With a Frequency-Based Comparison

       Observer 1          Observer 2
Case   Time   Behavior     Time   Behavior
1      0      walk         0      walk
2      3      jog          3      walk
3      3      jog          5      run
4      8      hold         8      hold
5      10     walk         12     run
6      13     run          16     walk

Table 13
Confusion Matrix Resulting From the Data of Table 12

             Observer 1
Observer 2   Jog   Walk   Run   Hold   Total
Jog          0     0      0     0      0
Walk         1     1      1     0      3
Run          1     1      0     0      2
Hold         0     0      0     1      1
Total        2     2      1     1      6


be (Hollenbeck, 1978; MacLean et al., 1985; Repp et al., 1989). If this tolerance window is exceeded, one may conclude that the records refer to different instances of behavior. Of course, the optimal width of the tolerance window varies from situation to situation. Various factors may affect this width: the research question, the nature of the behavior involved (does it appear suddenly or evolve slowly from another behavior?), and so forth. The Observer 5.0 allows the user to define a tolerance window as part of the reliability analysis settings.

However, what if Data Set 2 does not contain run (at time = 5) but rather jog (at time = 6)? If the tolerance window is 2 sec, should one regard Observer 1's jog (time = 3) and Observer 2's jog (time = 6) as separate behavioral events in the real world, as seems intuitive when these are different codes, or do both records refer to the same instance of behavior, but one which the second observer simply entered too late? The difference is important, because if one makes the former assumption, the comparison will result in two disagreements, whereas if one makes the latter assumption, there will be just one disagreement.

In this example, it seems most probable that jog (time = 3) and jog (time = 6) refer to the same instance of behavior. There are no other obvious candidates to link these records to, and moreover, there is an overlap in time for these records from time = 6 to time = 8.

Still, had there been a record of run (time = 5) in the first data set, one would be inclined to link this record to jog (time = 6), which is supposedly an error of judgment by one of the observers, and in addition, to conclude that the second observer missed out on an instance of behavior that corresponds with jog (time = 3). This means that the interpretation of these records depends on other records. We think that this is exactly how it should work; after all, this method is about using sequential information to identify the instances of behavior.

Finally, another difficulty is illustrated (see Table 14). The two observers agree on walk (time = 0) and hold (time = 10), but what about the other behavioral records? For instance, should leap (time = 3) be linked to run (time = 3) and walk (time = 6) to jump (time = 5)? Or should jog (time = 2) be linked to run (time = 3), leap (time = 3) to jump (time = 5), and walk (time = 6) to walk (time = 9)? How should one set criteria for linking when multiple behavioral records are present in either data set between matching pairs?

As a solution to these linking problems, The Observer 5.0 uses an algorithm that finds agreements by first linking behavioral records that overlap in time (these links are agreements per definition) and then linking identical records within the tolerance window. Then the algorithm tries to identify disagreements. It first links any still unlinked record to the nearest unlinked record in the other data set within the tolerance window. If there is none, it tries to link this record to any event within the tolerance window. And if that does not work, it will link this record to the nearest record in the other data set. This algorithm is a refinement of the algorithm described by Haccou and Meelis (1992). Its advantage is that it applies to all behavioral records, whether they contain states or point events. Moreover, the procedure is symmetric, meaning that it does not matter with which data set you start the comparison.
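The general idea of linking with a tolerance window can be illustrated with a deliberately simplified sketch. This is not the algorithm implemented in The Observer: it omits the overlap-in-time step, it processes Observer 1's records in time order and is therefore not guaranteed to be symmetric, and it silently drops any of Observer 2's records that remain unlinked. The function name `link_records` and its two-pass structure are assumptions made for illustration only.

```python
def link_records(records1, records2, tolerance):
    """Link (time, code) records of two observers; returns (rec1, rec2, result) triples."""
    unlinked2 = list(range(len(records2)))
    links = {}

    # Pass 1: identical codes whose time stamps differ by at most the
    # tolerance window are linked as agreements (nearest in time first).
    for i, (time1, code1) in enumerate(records1):
        candidates = [j for j in unlinked2
                      if records2[j][1] == code1
                      and abs(records2[j][0] - time1) <= tolerance]
        if candidates:
            j = min(candidates, key=lambda k: abs(records2[k][0] - time1))
            links[i] = (j, "agreement")
            unlinked2.remove(j)

    # Pass 2: every remaining record is linked to the nearest still unlinked
    # record of the other observer and counted as a disagreement.
    for i, (time1, _) in enumerate(records1):
        if i in links or not unlinked2:
            continue
        j = min(unlinked2, key=lambda k: abs(records2[k][0] - time1))
        links[i] = (j, "disagreement")
        unlinked2.remove(j)

    return [(records1[i], records2[links[i][0]], links[i][1]) if i in links
            else (records1[i], None, "disagreement")
            for i in range(len(records1))]

# Data of Table 14.
observer1 = [(0, "walk"), (2, "jog"), (3, "leap"), (6, "walk"), (10, "hold")]
observer2 = [(0, "walk"), (3, "run"), (5, "jump"), (9, "walk"), (10, "hold")]

for row in link_records(observer1, observer2, tolerance=2):
    print(row)
```

For the data of Table 14 and a tolerance window of 2 sec, this sketch links walk (time = 6) to walk (time = 9) as a disagreement, because the time stamps lie 3 sec apart and thus outside the window.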

So, if the tolerance window were set to 2 sec, Table 14 would render the results presented in Table 15.

How to Analyze at the Right Level of Precision

The second major problem in reliability analysis of observational data is getting the right level of precision for computing observer agreement. Hollenbeck (1978) mentioned that both relatively broad units of behavior (molar behaviors), because of their ambiguous nature, and relatively minute units of behavior (molecular behaviors), because of their subtle differences, may give rise to unreliable recording of behavior, suggesting that there is an optimal granularity of the definition of units of behavior. So the paradox in reliability analysis is that the more precisely one tries to record behavior, the more likely it is that one will get disagreements, and the lower one's values of reliability measures will become. This is especially true in observational research, since behavioral categories are of a composite nature and typically consist of a subject identifier, a behavior identifier, and possibly some behavior modifiers. Quite often, one does not need such a high level of precision for all research questions. The obvious remedy is that one combines (lumps) related elements at the analysis stage. If one does not do this, one will be doing an overly harsh assessment of reliability, which may lead one to think that the conclusions pertaining to one's research questions are invalid.
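The effect of lumping can be shown with a small sketch based on the linked records of Table 12. The decision to treat jog and run as one category is purely hypothetical and serves only to illustrate how agreement changes once related elements are combined.

```python
# Linked pairs (Observer 1, Observer 2) from Table 12.
pairs = [("walk", "walk"), ("jog", "walk"), ("jog", "run"),
         ("hold", "hold"), ("walk", "run"), ("run", "walk")]

# Hypothetical lumping scheme: jog and run are treated as one category.
lumping = {"jog": "run"}

def lump(code):
    """Map a code to its lumped category; unlisted codes stay as they are."""
    return lumping.get(code, code)

def proportion_agreement(linked_pairs):
    """Proportion of linked pairs carrying identical codes."""
    return sum(a == b for a, b in linked_pairs) / len(linked_pairs)

lumped_pairs = [(lump(a), lump(b)) for a, b in pairs]

print(round(proportion_agreement(pairs), 3))         # 0.333
print(round(proportion_agreement(lumped_pairs), 3))  # 0.5
```

Only the jog/run pair turns from a disagreement into an agreement; the disagreements that involve walk are unaffected by this lumping scheme.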

Table 14
Example of Two Streams of Data From the Same Scene Recorded by Different Observers

Observer 1          Observer 2
Time   Behavior     Time   Behavior
0      walk         0      walk
2      jog          3      run
3      leap         5      jump
6      walk         9      walk
10     hold         10     hold

Table 15
Results of Comparison When Instances of Behavior (Drawn From Table 14) are Labeled With a Frequency-Based Comparison

Observer 1          Observer 2
Time   Behavior     Time   Behavior     Result
0      walk         0      walk         agreement
2      jog          3      run          disagreement
3      leap         5      jump         disagreement
6      walk         9      walk         disagreement
10     hold         10     hold         agreement


How to Determine for Which Behavioral Categories Observers Agreed the Least

Simply by looking at the literature references in this paper, one may conclude that much has been written about reliability measures. However, one overall value of a reliability measure does not provide one with any insight as to where exactly two observers failed to interpret the behavior the same way. Very little has been written about how one can find out for which behavioral categories observers agreed the least.

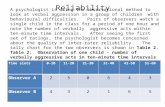

Figure 1. The Methods tab of the Reliability Analysis Settings dialog box of The Observer 5.0, showing all four comparison methods discussed in this paper, as well as the tolerance window option, which is specific for the frequency/sequence-based comparison method.

Figure 2. An example of a list of compared behavioral records. Each row shows the two records that are linked and the result of comparison (agreement or disagreement).


Of course, one may take the straightforward approach described by van der Vlugt, Kruk, van Erp, and Geuze (1992). They evaluated where and when a trainee failed with a simple procedure in which the trainee and an experienced observer first independently annotated a videotape. They then replayed the videotape with a synchronized display of the recorded behaviors for both observers. However, this method is quite time consuming and is of no use for evaluating discrepancies once the data have been collected.

In order to understand the actual areas of disagreement, the confusion matrix is more useful than the values of the reliability measures. A particular value, for example, a kappa of 0.5, does not tell one which behavioral categories disagree the most. One really needs to study the confusion matrix to find that out. Unfortunately, the topic of how to construct a confusion matrix has been completely overlooked in the reliability analysis literature, which traditionally has focused on the choice of the most suitable reliability measure and its computation. Indeed, it is fairly obvious how to construct a confusion matrix from data that are not time structured or data that are sampled at fixed intervals. However, it is far from obvious how to construct a confusion matrix from continuously recorded observational data. Such data typically consist of a sequential list of (nonequidistant) time-stamped behavioral records. Transformation from such a list into a confusion matrix (with intermediate results such as those presented in Tables 6-9) cannot be done in any data acquisition and analysis programs for observational research available so far, with the exception of GSEQ (Bakeman & Quera, 1995). GSEQ, however, supports only the duration-based comparison method in which instances of behavior are labeled, and none of the other comparison methods.
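One way to read a confusion matrix for the least reliable categories is to compute, per category, the share of cases on which both observers agreed out of all cases in which either observer used that category. This per-category index is our own illustrative choice and is not a measure proposed in this paper; the matrix is that of Table 11.

```python
categories = ["jog", "walk", "run", "hold"]

# Table 11: rows are Observer 2, columns are Observer 1.
matrix = [
    [0, 0, 0, 0],  # jog
    [2, 3, 1, 0],  # walk
    [3, 1, 3, 0],  # run
    [0, 2, 0, 2],  # hold
]

def category_agreement(matrix, categories):
    """Per category: diagonal cell / (row total + column total - diagonal cell)."""
    scores = {}
    for k, category in enumerate(categories):
        agreed = matrix[k][k]
        row_total = sum(matrix[k])
        column_total = sum(row[k] for row in matrix)
        involved = row_total + column_total - agreed
        scores[category] = agreed / involved if involved else float("nan")
    return scores

scores = category_agreement(matrix, categories)
for category, score in scores.items():
    print(category, round(score, 3))
# jog 0.0
# walk 0.333
# run 0.375
# hold 0.5

print(min(scores, key=scores.get))  # jog
```

For these data, jog is the category on which the observers agreed the least: Observer 1 scored it for 5 sec, and Observer 2 never scored it at all.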

RELIABILITY ANALYSIS IN THE OBSERVER 5.0

The Observer 5.0 offers a new set of reliability functions that tackle all the problems discussed in the previous sections. Note that The Observer 3.0 already supported one comparison method: labeling instances of behavior with a frequency-based comparison. However, back then, the method was implemented in a less sophisticated way.

The Observer 5.0 supports all four comparison methods described above that have been used to identify cases. These have been given the following names: duration-based comparison (tallying instances of behavior with a duration-based comparison), frequency-based comparison (tallying instances of behavior with a frequency-based comparison), duration/sequence-based comparison (labeling instances of behavior with a duration-based comparison), and frequency/sequence-based comparison (labeling instances of behavior with a frequency-based comparison). In addition, the tolerance window option and the advanced algorithms for labeling instances of behavior when frequency-based comparison is used are applied (Figure 1).

Figure 3. An example of a confusion matrix. The values on the diagonal represent the records that are in agreement. Off-diagonal values represent the disagreements.

Figure 4. An example of the sheet in a reliability analysis report that shows the values computed for several reliability measures.

Also, one can tailor the precision of behavioral records to the level that is sufficient in relation to the research questions. This can be done by ignoring specific segments (subject, behavior, modifier) of each behavioral record or by grouping categories defined within segments.

Finally, outcomes are presented at different levels: a list of coupled records and their assessment (agreement or disagreement) for a detailed analysis of reliability per case (Figure 2), which is shown only when instances of behavior are labeled with a frequency-based comparison; a confusion matrix that lists agreements on the diagonal and disagreements in off-diagonal cells (Figure 3); and an overview of reliability statistics, including percentage of agreement, index of concordance, Pearson's r, and Cohen's kappa (Figure 4).

AVAILABILITY

The Observer is commercially available from Noldus Information Technology and various international distributors. Readers can contact the first author for more information or visit the product homepage on the Internet (http://www.noldus.com/products/observer).

REFERENCES

Bakeman, R., & Quera, V. (1995). Analyzing interaction: Sequential analysis with SDIS and GSEQ. New York: Cambridge University Press.

Bloor, R. N. (1983). A computer program to determine interrater reliability for dichotomous-ordinal rating scales. Behavior Research Methods & Instrumentation, 15, 615.

Bravais, A. (1846). Analyse mathématique sur les probabilités des erreurs de situation d'un point. Mémoires présentés par divers savants à l'Académie royale des sciences de l'Institut de France, 9, 255-332.

Burns, E., & Cavallaro, C. (1982). A computer program to determine interobserver reliability statistics. Behavior Research Methods & Instrumentation, 14, 42.

Chan, T. S. C. (1987). A DBASE III program that performs significance testing for the Kappa coefficient. Behavior Research Methods, Instruments, & Computers, 19, 53-54.

Cohen, J. (1960). A coefficient of agreement for nominal scales. Educational & Psychological Measurement, 20, 37-46.

Galton, F. (1888). Co-relations and their measurement, chiefly from anthropometric data. Proceedings of the Royal Society of London, 45, 135-145.

Haccou, P., & Meelis, E. (1992). Statistical analysis of behavioural data: An approach based on time-structured models. Oxford: Oxford University Press.

Hollenbeck, A. R. (1978). Problems of reliability in observational data. In G. P. Sackett (Ed.), Observing behavior: Vol. 2. Data collection and analysis methods (pp. 79-98). Baltimore: University Park Press.

Johnson, S. M., & Bolstad, O. D. (1973). Methodological issues in naturalistic observation: Some problems and solutions for field research. In L. A. Hamerlynck, L. C. Handy, & E. J. Mash (Eds.), Behavior change: Methodology, concepts, and practice (pp. 7-67). Champaign, IL: Research Press.

MacLean, W. E., Tapp, J. T., & Johnson, W. L. (1985). Alternate methods and software for calculating interobserver agreement for continuous observation data. Journal of Psychopathology & Behavioral Assessment, 7, 65-73.

Noldus, L. P. J. J. (1991). The Observer: A software system for collection and analysis of observational data. Behavior Research Methods, Instruments, & Computers, 23, 415-429.

Noldus, L. P. J. J., Trienes, R. J. H., Hendriksen, A. H. M., Jansen, H., & Jansen, R. G. (2000). The Observer Video-Pro: New software for the collection, management, and presentation of time-structured data from videotapes and digital media files. Behavior Research Methods, Instruments, & Computers, 32, 197-206.

Pearson, K. (1920). Notes on the history of correlation. Biometrika, 13, 25-45.

Repp, A. C., Harman, M. L., Felce, D., van Acker, R., & Karsh, K. G. (1989). Conducting behavioral assessments on computer-collected data. Behavioral Assessment, 11, 249-268.

Robinson, B. F., & Bakeman, R. (1998). ComKappa: A Windows '95 program for calculating kappa and related statistics. Behavior Research Methods, Instruments, & Computers, 30, 731-734.

Strube, M. J. (1989). A general program for the calculation of the kappa coefficient. Behavior Research Methods, Instruments, & Computers, 21, 643-644.

Suen, H. K., & Ary, D. (1989). Analyzing quantitative behavioral observation data. Hillsdale, NJ: Erlbaum.

Thomann, B. (2001). Observation and judgment in psychology: Assessing agreement among markings of behavioral events. Behavior Research Methods, Instruments, & Computers, 33, 339-348.

Valiquette, C. A. M., Lesage, A. D., Cyr, M., & Toupin, J. (1994). Computing Cohen's kappa coefficients using SPSS MATRIX. Behavior Research Methods, Instruments, & Computers, 26, 60-61.

van der Vlugt, M. J., Kruk, M. R., van Erp, A. M. M., & Geuze, R. H. (1992). CAMERA: A system for fast and reliable acquisition of multiple ethological records. Behavior Research Methods, Instruments, & Computers, 24, 147-149.

Watkins, M. W., & Larimer, L. D. (1980). Interrater agreement statistics with the microcomputer. Behavior Research Methods & Instrumentation, 12, 466.

(Manuscript received November 12, 2002; revision accepted for publication May 18, 2003.)


Some literature has addressed specific problems that arise in determining observer agreement because of the time-structured and multivariate nature of the observational data (Haccou & Meelis, 1992; Hollenbeck, 1978; MacLean, Tapp, & Johnson, 1985; Repp et al., 1989; Suen & Ary, 1989; Thomann, 2001). However, no paper has listed all problems or provided a full and thorough solution to the problems addressed.

In this paper, we will discuss some methodological problems related to the assessment of observer agreement on observational data, how these can be solved, and how these solutions have been implemented in The Observer software. The Observer is a software package for the collection, management, analysis, and presentation of observational data (Noldus, 1991; Noldus, Trienes, Hendriksen, Jansen, & Jansen, 2000). One can use it to record activities, postures, movements, positions, facial expressions, social interactions, or any other aspect of human or animal behavior.

Version 5.0, the latest release at the time of writing, contains a new reliability analysis module with several new functions that will be discussed below. Note that in this paper, observer agreement will be used to denote both interobserver and intraobserver agreement.

COMPUTING OBSERVER AGREEMENT FOR OBSERVATIONAL DATA

The following three problems with respect to the computation of observer agreement for observational data will be discussed below: what cases should be compared, how to analyze at the right level of precision, and how to determine for which behavioral categories observers agreed the least.

What Cases Should Be Compared

Behavioral instances versus behavioral categories. In general, all measures that are used to assess observer agreement on observational data have been developed to assess the degree of correlation between two independent measurements of a number of independent cases. Cohen's kappa, for instance, was originally developed to assess the degree of agreement between two medical professionals in the diagnosis of the conditions from which patients suffer (Cohen, 1960).

In Cohen's (1960) original setting, each patient represented a case, and each medical condition was a value to be assigned to a case. However, in observational research, it is not clearly defined what the cases and the possible values are. Consequently, one cannot readily interpret any kappa value (or other reliability measure) as long as one does not know exactly which data were used to compute kappa.

We propose two approaches to identifying cases. (1) A case is represented by an instance of behavior, and the behavioral categories represent the possible values (i.e., measurement is done on a nominal scale). One then approaches the process of recording behavior as labeling instances of behavior, that is, linking a behavioral category to each instance of behavior (Table 1). The order of cases equals the chronology of the observed process. (2) A case is represented by a behavioral category, and the sum of the behavioral instances for each behavioral category within the data set represents the values (i.e., measurement is done on an interval scale). The behavior recording process is regarded as tallying instances of behavior for a number of behavioral categories (Table 2). The chronology of the observed process cannot be deduced from this table, since there is no temporal order in the list of cases.

Table 1
Assigning a Nominal Value or Label (A to D) to Instances of Behavior (Cases)

Case   Observer 1   Observer 2
1      A            A
2      C            B
3      D            D
4      A            C
5      C            C
6      B            B
7      D            D

Table 2
Tallying Instances of Behavior for Each Nominal Category (Case), Thus Creating Interval Data

       Sum of Behavioral Instances
Case   Observer 1   Observer 2
A      2            1
B      1            2
C      2            2
D      2            2

Table 3
Frequency-Based Comparison: Each Behavior Scored Equals a Case

Case   Observer 1   Observer 2
1      A            A
2      C            B
3      D            D
4      A            C
5      C            C
6      B            B
7      D            D

Table 4
Duration-Based Comparison: Each Unit of Time Equals a Case

Case   Observer 1   Observer 2
1      A            A
2      C            A
3      C            B
4      D            D
5      D            C
6      A            C
7      C            C
8      C            C
9      C            C
10     B            C
11     D            B
12     D            D

In theory, these different approaches could be reflected by different data-logging methods. It is clear that Table 1 contains nominal data, whereas Table 2 contains interval data. However, there have been two developments in the past 15 years that have caused a high degree of standardization in data logging. First of all, the introduction of computer-aided recording of behavior has resulted in a major shift toward logging behaviors as nominal data, even if logging them as interval data would have sufficed to answer the research questions. This is because the computer can record the time stamp automatically and can easily turn the sequential data of the kind presented in Table 1 into the contingency table presented in Table 2. In other words, one can easily transform nominal data into interval data, but not the other way round, so it is always safer to log behaviors as nominal data. Second, thanks to the increased availability and ease of use of applications that allow the coding of video at single-frame accuracy (Noldus et al., 2000), researchers more and more use continuous sampling instead of time sampling (interval sampling). The philosophy behind this development is simple: One gets more information with hardly any extra effort. In continuous sampling, instances of observational data are recorded continuously, which results in the recording of the same instance of behavior at different times for different observers. This makes it difficult to tell which behavioral records from the observers refer to the same instance of behavior, which is no problem at all when time sampling is used. Moreover, since observers may overlook behaviors, accidentally enter a behavior, or record a behavior that they think they saw but that did not occur, just determining the number of instances of behavior is difficult. Because researchers may use continuous sampling when interval sampling, or even old-fashioned tallying of behaviors per category, would have sufficed, one cannot decide upon an approach to reliability analysis on the basis of the data recording method only, and one must take research goals into consideration.
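The one-way transformation from sequential nominal data (Table 1) to tallies per category (Table 2) can be illustrated with a minimal Python sketch. It is not part of The Observer; the function name `tally` and the variable names are chosen here for illustration only.

```python
from collections import Counter

# Sequential (nominal) records from Table 1: one label per case, in chronological order.
observer_1 = ["A", "C", "D", "A", "C", "B", "D"]
observer_2 = ["A", "B", "D", "C", "C", "B", "D"]
categories = ["A", "B", "C", "D"]


def tally(labels, categories):
    """Count how often each category occurs; the temporal order is discarded."""
    counts = Counter(labels)
    return {category: counts[category] for category in categories}


tally_1 = tally(observer_1, categories)
tally_2 = tally(observer_2, categories)

# Print the tallies in the layout of Table 2: category, Observer 1, Observer 2.
for category in categories:
    print(category, tally_1[category], tally_2[category])
```

The tallies cannot be turned back into the original sequences, which is the reason given above for logging behaviors as nominal data.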

One chooses the labeling instances of behavior approach if the focus is on qualitative analysis of data; in other words, if it is important that the observers agree on the assessment of each specific instance of behavior (e.g., because one is interested in the temporal and sequential analysis of the data). For instance, in usability studies, the human observer may need to know the exact sequence of events that causes the test participant to make an error in the operation of the computer interface that is being studied. Or, in the study of mother-child interactions, one may be interested in the presence and significance of particular sequential patterns of behaviors, and one thus needs an accurate registration of the sequence of events.

In contrast, the tallying instances of behavior approach is simpler to apply and does not require linking of specific behavioral records. One chooses this approach if the focus is on quantitative analysis of data; in other words, if it is important that the observers agree on the number of behavioral instances for each behavioral category, and the timing and sequence of behavioral instances are not of interest. For instance, if one needs to answer a research question such as "Is there more shouting in the classroom during biology class than during math class?" it is not important who does the shouting, when during the course of the class the shouting is done, or what else is happening in the classroom. Just the count of instances of shouting matters.

Table 5
Example of Two Streams of Data From the Same Scene Recorded by Different Observers

Observer 1          Observer 2
Time   Behavior     Time   Behavior
0      walk         0      walk
3      jog          5      run
8      hold         8      hold
10     walk         12     run
13     run          16     walk
17     end          17     end

Table 6
Tallying Instances of Behavior With a Duration-Based Comparison

Case   Observer 1   Observer 2
Jog    5            0
Walk   6            6
Run    4            7
Hold   2            4

Table 7
Confusion Matrix Resulting From the Data of Table 6

                          Observer 1
Observer 2     Jog   Walk   Run   Hold   Disagreement   Total
Jog            0     -      -     -      0              0
Walk           -     6      -     -      0              6
Run            -     -      4     -      3              7
Hold           -     -      -     2      2              4
Disagreement   5     0      0     0      -              5
Total          5     6      4     2      5              22

Both approaches to reliability analysis have been discussed briefly in the literature. For example, Johnson and Bolstad (1973) noted that when you compute observer agreement on the basis of the sum of the instances of behavior per behavioral category per data set, high observer agreement might be misleading, because this agreement may have been based on different instances of behavior. This is indeed true when one is interested in the evaluation of sequence, timing, or duration of the recorded behaviors. We, however, want to point out that if, for analysis, one is not interested in the sequence, timing, and duration of instances of behaviors, but only in overall statistics (count, total duration, or mean duration) for the complete observation, this method is a valid and preferable alternative, because it better fits the analysis goal. Still, it is important that one should always look at the marginal totals in the confusion matrix, and not just at the correlation coefficient, because Pearson's r remains unaffected if one observer consistently overestimates the number of instances of behavior for each behavioral category by a certain factor. For instance, if one observer has exactly twice as many tallies for each behavioral category as a second observer, Pearson's r still indicates a perfect correlation (r = 1), although the two observers disagree on every count.
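The insensitivity of Pearson's r to a constant factor can be checked with a small Python sketch. The tallies below are hypothetical and do not come from any table in this paper; `pearson_r` is a plain implementation of the product-moment formula written for this illustration.

```python
import math


def pearson_r(x, y):
    """Pearson's product-moment correlation of two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return covariance / math.sqrt(var_x * var_y)


# Hypothetical tallies per behavioral category (illustrative values only).
observer_1 = [4, 7, 2, 9]
# The second observer records exactly twice as many instances in every category.
observer_2 = [2 * count for count in observer_1]

r = pearson_r(observer_1, observer_2)
total_1, total_2 = sum(observer_1), sum(observer_2)

print("r =", round(r, 6))
print("marginal totals:", total_1, total_2)
```

The correlation is perfect while the marginal totals differ by a factor of two, which is why the totals need to be inspected alongside the coefficient.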

Frequencies of behavioral instances versus durations of behavioral instances. Until now, we have used the phrase instance of behavior. However, we have not defined it exactly, because we felt that there were two valid definitions here too, depending on one's research questions. One could simply state that an instance of behavior is the occurrence of (the onset of) a behavioral event (to be referred to as the frequency-based approach; see Table 3), or one could state that it is a unit of time (e.g., 1 sec) for which behavior must be recorded (to be referred to as the duration-based approach; see Table 4).

The interpretation of the phrase instance of behavior has a big impact on the way one does reliability analysis. If one defines an instance of behavior as a behavioral event, one has far fewer cases for comparison. Using unit of time as one's definition of an instance of behavior is actually a way to take the duration of the behavioral events into account for reliability analysis, because behavioral events that last longer will result in more instances of behavior and will have more impact on the reliability scores.

The frequency-based and duration-based approaches have been discussed in the literature. Hollenbeck (1978) wrote that for duration-based comparison (or second-by-second comparison, as he named it), it is arguable whether observers actually make a decision about a behavior continuously or only as a change in behavior occurs. MacLean et al. (1985) did not engage in much discussion on this. They simply stated that for event data without duration information, one should choose the frequency-based approach, and for event data with duration information, one should choose the duration-based approach.

In our opinion, the authors of both papers passed over what reliability analysis is all about: verifying whether one's data can be trusted to give a fair answer to the research questions. So, by definition, how one does reliability analysis depends on what research questions one needs to answer eventually. If the question is "Do people eat more cookies when they eat together than when they are alone?" then it is clearly related to frequencies of behavior, and consequently, a frequency-based approach to reliability analysis should be chosen. If, however, the question is "Do people spend a longer time eating when they are together than when they are alone?" then it is related to the durations of behavior, and a duration-based approach to reliability analysis should be chosen. Note that if one needs to answer both research questions, one should do two separate reliability analyses in support of the answers.

Four methods for identifying cases for comparison. The approaches to the problem of identifying the cases for comparison described in the previous two sections result in four methods for identifying cases for comparison.

Tallying instances of behavior with a duration-based comparison (Tables 5, 6, and 7). For each behavioral category, the total duration of instances is computed. If a study were designed to answer a question like "Does the object of study walk for a higher percentage of time in the first situation than in the second?" then this would be the comparison method of choice, because the researchers need to verify only whether the observers were consistent in measuring the percentage of time walking.

Table 8
Tallying Instances of Behavior With a Frequency-Based Comparison

Case   Observer 1   Observer 2
Jog    1            0
Walk   2            2
Run    1            2
Hold   1            1

Table 9
Confusion Matrix Resulting From the Data of Table 8

                          Observer 1
Observer 2     Jog   Walk   Run   Hold   Disagreement   Total
Jog            0     -      -     -      0              0
Walk           -     2      -     -      0              2
Run            -     -      1     -      1              2
Hold           -     -      -     1      0              1
Disagreement   1     0      0     0      -              1
Total          1     2      1     1      1              6

Tallying instances of behaviors with a frequency-based comparison (Tables 5, 8, and 9). For each behavioral category, the number of instances is counted. If a study were designed to answer a question like "Does walk occur more often in the first situation than in the second?" then this would be the comparison method of choice, because the researchers need to verify only whether the observers were consistent in counting the bouts of walking. Most often, this approach is related to the study of point events.
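Both tallying methods can be sketched in a few lines of Python, using the event streams of Table 5. This is an illustration under stated assumptions, not the implementation in The Observer: the names `tally` and `compare_tallies` are invented here, and the reading of Tables 7 and 9 (agreement is the smaller of the two tallies, disagreement is the surplus of either observer) is inferred from the values in those tables.

```python
# Event streams from Table 5: (onset time in seconds, behavior); "end" closes the observation.
observer_1 = [(0, "walk"), (3, "jog"), (8, "hold"), (10, "walk"), (13, "run"), (17, "end")]
observer_2 = [(0, "walk"), (5, "run"), (8, "hold"), (12, "run"), (16, "walk"), (17, "end")]
CATEGORIES = ["jog", "walk", "run", "hold"]


def tally(stream, by_duration):
    """Sum durations (by_duration=True) or count onsets (by_duration=False) per category."""
    totals = {category: 0 for category in CATEGORIES}
    # Each record lasts until the onset of the next record in the same stream.
    for (start, behavior), (stop, _next_behavior) in zip(stream, stream[1:]):
        totals[behavior] += (stop - start) if by_duration else 1
    return totals


def compare_tallies(tally_1, tally_2):
    """Per category: (agreement, surplus of Observer 1, surplus of Observer 2)."""
    return {
        category: (
            min(tally_1[category], tally_2[category]),
            max(tally_1[category] - tally_2[category], 0),
            max(tally_2[category] - tally_1[category], 0),
        )
        for category in CATEGORIES
    }


durations_1, durations_2 = tally(observer_1, True), tally(observer_2, True)
counts_1, counts_2 = tally(observer_1, False), tally(observer_2, False)

print(durations_1, durations_2)   # compare with Table 6
print(counts_1, counts_2)         # compare with Table 8
print(compare_tallies(durations_1, durations_2))  # compare with Table 7
print(compare_tallies(counts_1, counts_2))        # compare with Table 9
```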

Labeling instances of behavior with a duration-based comparison (Tables 5, 10, and 11). Behavioral instances are compared on a time-by-time basis and are linked directly to the instance recorded by the other observer at the same time. If a study were designed to answer a question like "Does the object of study talk more while walking when he or she is in the first situation than when he or she is in the second?" then this would be the right comparison method, because the researchers need to be sure that the timing of walk and talk bouts was accurate in order to compute the amount of time for which those behaviors occurred at the same time. This would also be the method of choice if the researchers wanted to know whether longer periods of hold occurred after jog bouts than after walk bouts, because the researchers then would need to verify whether the sequence and duration of walk, jog, and hold bouts were recorded accurately.
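The time-by-time comparison can be sketched as follows in Python, again using the streams of Table 5 and a time unit of 1 sec. The function name `expand` is an assumption made for this illustration; the sketch only shows the general technique of expanding both streams to one label per second and cross-tabulating them.

```python
from collections import Counter

# Event streams from Table 5: (onset time in seconds, behavior); "end" closes the observation.
observer_1 = [(0, "walk"), (3, "jog"), (8, "hold"), (10, "walk"), (13, "run"), (17, "end")]
observer_2 = [(0, "walk"), (5, "run"), (8, "hold"), (12, "run"), (16, "walk"), (17, "end")]


def expand(stream):
    """Turn an event stream into one label per second (the layout of Table 10)."""
    labels = []
    for (start, behavior), (stop, _next_behavior) in zip(stream, stream[1:]):
        labels.extend([behavior] * (stop - start))
    return labels


labels_1 = expand(observer_1)
labels_2 = expand(observer_2)

# Cross-tabulate the two label sequences; keys are (Observer 2 label, Observer 1 label),
# matching the rows and columns of Table 11.
confusion = Counter(zip(labels_2, labels_1))

for (label_2, label_1), seconds in sorted(confusion.items()):
    print(f"Observer 2: {label_2:5s} Observer 1: {label_1:5s} seconds: {seconds}")
```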

Labeling instances of behavior with a frequency-based comparison (Tables 5, 12, and 13). Each instance of behavior is linked to an instance of behavior recorded by the other observer. Which other instance an instance of behavior is linked to depends on its behavioral category, timing, and sequence. If researchers want to know the answer to a question like "Is there a particular pattern that often precedes a run bout?" then this will be the method of choice, because answering this question requires an accurate registration of the sequence of instances of behavior.

Again, note that when researchers need to answer different questions, they may need to run separate reliability analyses and use multiple comparison methods.

Table 5 presents an example to which these methods have been applied (shown in Tables 6-13). Contrary to the previous examples, time stamps have been added to instances of behaviors, to illustrate additional problems that are specific to continuously recorded data. Furthermore, the confusion matrices are shown. This illustrates that the resulting confusion matrices, which are used as the basis for computation of reliability measures, are different depending on the comparison method.
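To make the dependence on the comparison method concrete, the following Python sketch computes the proportion of agreement and Cohen's kappa from the cell counts of Table 11 (duration-based labeling) and Table 13 (frequency-based labeling). It uses the standard kappa formula (Cohen, 1960); the function name `agreement_statistics` is chosen for this illustration and does not refer to any function of The Observer.

```python
# Cell counts of the confusion matrices; rows are Observer 2, columns are Observer 1,
# both in the order jog, walk, run, hold.
table_11 = [
    [0, 0, 0, 0],  # jog
    [2, 3, 1, 0],  # walk
    [3, 1, 3, 0],  # run
    [0, 2, 0, 2],  # hold
]
table_13 = [
    [0, 0, 0, 0],  # jog
    [1, 1, 1, 0],  # walk
    [1, 1, 0, 0],  # run
    [0, 0, 0, 1],  # hold
]


def agreement_statistics(matrix):
    """Return (observed agreement, chance agreement, Cohen's kappa) for a square matrix."""
    n = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    column_totals = [sum(column) for column in zip(*matrix)]
    observed = sum(matrix[i][i] for i in range(len(matrix))) / n
    expected = sum(r * c for r, c in zip(row_totals, column_totals)) / n ** 2
    kappa = (observed - expected) / (1 - expected)
    return observed, expected, kappa


for name, matrix in (("Table 11", table_11), ("Table 13", table_13)):
    observed, expected, kappa = agreement_statistics(matrix)
    print(f"{name}: agreement = {observed:.3f}, chance = {expected:.3f}, kappa = {kappa:.3f}")
```

The same pair of data streams thus yields different reliability values under the two labeling methods, because the cases being compared are different.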

Note that labeling instances of behavior with a frequency-based comparison (Table 12) is not as straightforward as it may sound. For instance, do jog (time = 3) and run (time = 5) refer to the same behavioral event in the real world, or did both observers miss out on a behavioral event? Or, more generally, when should two behavioral records be considered to refer to the same instance of behavior in the real world, and when should they be considered to refer to separate instances of behavior?

This difficulty can be countered by applying a tolerance window that sets the limits for how far off the timing of behavioral records in either data set is allowed to be (Hollenbeck, 1978; MacLean et al., 1985; Repp et al., 1989). If this tolerance window is exceeded, one may conclude that the records refer to different instances of behavior. Of course, the optimal width of the tolerance window varies from situation to situation. Various factors may affect this width: the research question, the nature of the behavior involved (does it appear suddenly or evolve slowly from another behavior?), and so forth. The Observer 5.0 allows the user to define a tolerance window as part of the reliability analysis settings.

Table 10
Labeling Instances of Behavior With a Duration-Based Comparison

       Observer 1          Observer 2
Case   Time   Behavior     Time   Behavior
1      0      walk         0      walk
2      1      walk         1      walk
3      2      walk         2      walk
4      3      jog          3      walk
5      4      jog          4      walk
6      5      jog          5      run
7      6      jog          6      run
8      7      jog          7      run
9      8      hold         8      hold
10     9      hold         9      hold
11     10     walk         10     hold
12     11     walk         11     hold
13     12     walk         12     run
14     13     run          13     run
15     14     run          14     run
16     15     run          15     run
17     16     run          16     walk

Table 11
Confusion Matrix Resulting From the Data of Table 10

                    Observer 1
Observer 2   Jog   Walk   Run   Hold   Total
Jog          0     0      0     0      0
Walk         2     3      1     0      6
Run          3     1      3     0      7
Hold         0     2      0     2      4
Total        5     6      4     2      17

Table 12
Labeling Instances of Behavior With a Frequency-Based Comparison

       Observer 1          Observer 2
Case   Time   Behavior     Time   Behavior
1      0      walk         0      walk
2      3      jog          3      walk
3      3      jog          5      run
4      8      hold         8      hold
5      10     walk         12     run
6      13     run          16     walk

Table 13
Confusion Matrix Resulting From the Data of Table 12

                    Observer 1
Observer 2   Jog   Walk   Run   Hold   Total
Jog          0     0      0     0      0
Walk         1     1      1     0      3
Run          1     1      0     0      2
Hold         0     0      0     1      1
Total        2     2      1     1      6

However, what if Data Set 2 does not contain run (at time = 5), but rather jog (at time = 6)? If the tolerance window is 2 sec, should one regard Observer 1's jog (time = 3) and Observer 2's jog (time = 6) as separate behavioral events in the real world, as seems intuitive when these are different codes, or do both records refer to the same instance of behavior, but one which the second observer simply entered too late? The difference is important, because if one makes the former assumption, the comparison will result in two disagreements, whereas if one makes the latter assumption, there will be just one disagreement.

In this example, it seems most probable that jog (time = 3) and jog (time = 6) refer to the same instance of behavior. There are no other obvious candidates to link these records to, and moreover, there is an overlap in time for these records from time = 6 to time = 8.

Still, had there been a record of run (time = 5) in the first data set, one would be inclined to link this record to jog (time = 6), which is supposedly an error of judgment by one of the observers, and in addition, to conclude that the second observer missed out on an instance of behavior that corresponds with jog (time = 3). This means that the interpretation of these records depends on other records. We think that this is exactly how it should work; after all, this method is about using sequential information to identify the instances of behavior.

Finally, another difficulty is illustrated (see Table 14). The two observers agree on walk (time = 0) and hold (time = 10), but what about the other behavioral records? For instance, should leap (time = 3) be linked to run (time = 3), and walk (time = 6) to jump (time = 5)? Or should jog (time = 2) be linked to run (time = 3), leap (time = 3) to jump (time = 5), and walk (time = 6) to walk (time = 9)? How should one set criteria for linking when multiple behavioral records are present in either data set between matching pairs?

As a solution to these linking problems, The Observer 5.0 uses an algorithm that finds agreements by first linking behavioral records that overlap in time (these links are agreements per definition) and then linking identical records within the tolerance window. Then the algorithm tries to identify disagreements. It first links any still unlinked record to the nearest unlinked record in the other data set within the tolerance window. If there is none, it tries to link this record to any event within the tolerance window. And if that does not work, it will link this record to the nearest record in the other data set. This algorithm is a refinement of the algorithm described by Haccou and Meelis (1992). Its advantage is that it applies to all behavioral records, whether they contain states or point events. Moreover, the procedure is symmetric, meaning that it does not matter with which data set you start the comparison.

So, if the tolerance window were set to 2 sec, Table 14 would render the results presented in Table 15.
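The role of the tolerance window in the assessment step can be illustrated with a deliberately simplified Python sketch. It does not reproduce the linking algorithm of The Observer: the pairing of records is taken as given from Table 15, and the rule in `assess` (same code and onset times no more than the tolerance apart) is an assumption made here that ignores the overlap-in-time step described above.

```python
TOLERANCE = 2  # seconds, as in the example in the text

# Linked record pairs as listed in Table 15:
# ((time, behavior) of Observer 1, (time, behavior) of Observer 2).
linked_pairs = [
    ((0, "walk"), (0, "walk")),
    ((2, "jog"), (3, "run")),
    ((3, "leap"), (5, "jump")),
    ((6, "walk"), (9, "walk")),
    ((10, "hold"), (10, "hold")),
]


def assess(record_1, record_2, tolerance):
    """Simplified rule: agreement requires the same code and onsets within the tolerance."""
    (time_1, behavior_1), (time_2, behavior_2) = record_1, record_2
    same_code = behavior_1 == behavior_2
    within_window = abs(time_1 - time_2) <= tolerance
    return "agreement" if same_code and within_window else "disagreement"


results = [assess(first, second, TOLERANCE) for first, second in linked_pairs]

for (first, second), result in zip(linked_pairs, results):
    print(first, second, result)
```

Under this simplified rule, the two walk records at time = 6 and time = 9 count as a disagreement because their onsets are 3 sec apart, which exceeds the 2-sec window.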

How to Analyze at the Right Level of Precision

The second major problem in reliability analysis of observational data is getting the right level of precision for computing observer agreement. Hollenbeck (1978) mentioned that both relatively broad units of behavior (molar behaviors), because of their ambiguous nature, and relatively minute units of behavior (molecular behaviors), because of their subtle differences, may give rise to unreliable recording of behavior, suggesting that there is an optimal granularity of the definition of units of behavior. So, the paradox in reliability analysis is that the more precisely one tries to record behavior, the more likely it is that one will get disagreements, and the lower one's values of reliability measures will become. This is especially true in observational research, since behavioral categories are of a composite nature and typically consist of a subject identifier, a behavior identifier, and possibly some behavior modifiers. Quite often, one does not need such a high level of precision for all research questions. The obvious remedy is that one combines (lumps) related elements at the analysis stage. If one does not do this, one will be doing an overly harsh assessment of reliability, which may lead one to think that the conclusions pertaining to one's research questions are invalid.
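The effect of lumping can be illustrated with a short Python sketch based on the second-by-second labels of Table 10. The grouping of jog and run into one category is a hypothetical choice made for this example, and the names `lump` and `proportion_agreement` are invented here.

```python
# One label per second for each observer, following Table 10.
observer_1 = ["walk"] * 3 + ["jog"] * 5 + ["hold"] * 2 + ["walk"] * 3 + ["run"] * 4
observer_2 = ["walk"] * 5 + ["run"] * 3 + ["hold"] * 4 + ["run"] * 4 + ["walk"] * 1

# Hypothetical grouping: jog and run are treated as one broader category.
LUMP = {"jog": "run"}


def lump(labels, mapping):
    """Replace each label by its broader category; unmapped labels are kept as they are."""
    return [mapping.get(label, label) for label in labels]


def proportion_agreement(labels_1, labels_2):
    """Share of time units on which both observers recorded the same label."""
    matches = sum(a == b for a, b in zip(labels_1, labels_2))
    return matches / len(labels_1)


fine = proportion_agreement(observer_1, observer_2)
coarse = proportion_agreement(lump(observer_1, LUMP), lump(observer_2, LUMP))

print(f"separate categories: {fine:.3f}")
print(f"jog and run lumped:  {coarse:.3f}")
```

Whether such a grouping is justified depends on the research question; if the distinction between jog and run matters for the analysis, the finer categories must be kept.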

Table 14
Example of Two Streams of Data From the Same Scene Recorded by Different Observers

Observer 1          Observer 2
Time   Behavior     Time   Behavior
0      walk         0      walk
2      jog          3      run
3      leap         5      jump
6      walk         9      walk
10     hold         10     hold

Table 15
Results of Comparison When Instances of Behavior (Drawn From Table 14) Are Labeled With a Frequency-Based Comparison

Observer 1          Observer 2
Time   Behavior     Time   Behavior     Result
0      walk         0      walk         agreement
2      jog          3      run          disagreement
3      leap         5      jump         disagreement
6      walk         9      walk         disagreement
10     hold         10     hold         agreement

How to Determine for Which Behavioral Categories Observers Agreed the Least

Simply by looking at the literature references in this paper, one may conclude that much has been written about reliability measures. However, one overall value of a reliability measure does not provide one with any insight as to where exactly two observers failed to interpret the behavior the same way. Very little has been written about how one can find out for which behavioral categories observers agreed the least.

Figure 1. The Methods tab of the Reliability Analysis Settings dialog box of The Observer 5.0, showing all four comparison methods discussed in this paper, as well as the tolerance window option, which is specific for the frequency/sequence-based comparison method.

Figure 2. An example of a list of compared behavioral records. Each row shows the two records that are linked and the result of comparison (agreement or disagreement).

Of course, one may take the straightforward approach described by van der Vlugt, Kruk, van Erp, and Geuze (1992). They evaluated where and when a trainee failed with a simple procedure in which the trainee and an experienced observer first independently annotated a videotape. They then replayed the videotape with a synchronized display of the recorded behaviors for both observers. However, this method is quite time consuming and is of no use for evaluating discrepancies once the data have been collected.

In order to understand the actual areas of disagree-ment the confusion matrix is more useful than the val-ues of the reliability measures A particular valuemdashforexample a kappa of 05mdashdoes not tell one which be-havioral categories disagree the most One really needsto study the confusion matrix to find that out Unfortu-nately the topic of how to construct a confusion matrixhas been completely overlooked in the reliability analy-sis literature which traditionally has focused on thechoice of the most suitable reliability measure and itscomputation Indeed it is fairly obvious how to con-struct a confusion matrix from data that are not timestructured or data that are sampled at fixed intervalsHowever it is far from obvious how to construct a con-fusion matrix from continuously recorded observationaldata Such data typically consist of a sequential list of(nonequidistant) time-stamped behavioral records Trans-formation from such a list into a confusion matrix (withintermediate results such as those presented in Tables 6ndash9)cannot be done in any data acquisition and analysis pro-grams for observational research available so far withthe exception of GSEQ (Bakeman amp Quera 1995) GSEQ

however supports only the duration-based comparisonmethod in which instances of behavior are labeled andnone of the other comparison methods

RELIABILITY ANALYSIS IN THEOBSERVER 41

The Observer 50 offers a new set of reliability func-tions that tackle all the problems discussed in the previ-ous sections Note that The Observer 30 already hassupported one comparison method labeling instances ofbehavior with a frequency-based comparison Howeverback then the method was implemented in a less so-phisticated way

The Observer 50 supports all four comparison methodsdescribed above that have been used to identify casesThese have been given the following names duration-

Figure 4 An example of the sheet in a reliability analysis reportthat shows the values computed for several reliability measures

Figure 3 An example of a confusion matrix The values on the diagonal rep-resent the records that are in agreement Off-diagonal values represent the dis-agreements

RELIABILITY ANALYSIS WITH THE OBSERVER 399

based comparison tallying instances of behavior with aduration-based comparison frequency-based comparisontallying instances of behaviors with a frequency-basedcomparison durationsequence-based comparison label-ing instances of behavior with a duration-based compari-son and frequencysequence-based comparison labelinginstances of behavior with a frequency-based compari-son In addition the tolerance window option and the ad-vanced algorithms for labeling instances of behaviorwhen frequency-based comparison is used are applied(Figure 1)

Also, one can tailor the precision of behavioral records to the level that is sufficient in relation to the research questions. This can be done by ignoring specific segments (subject, behavior, modifier) of each behavioral record or by grouping categories defined within segments.
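As a rough illustration of what tailoring the precision of records could look like, the Python sketch below blanks ignored segments and maps grouped categories onto a common name before two records are compared. This is an assumption-laden sketch, not the implementation used in The Observer: the `Record` type, the `reduce_precision` function, and the example categories are all hypothetical.

```python
from typing import Dict, Iterable, NamedTuple, Optional


class Record(NamedTuple):
    """Hypothetical behavioral record with the three segments named in the text."""
    time: float
    subject: str
    behavior: str
    modifier: str


def reduce_precision(
    record: Record,
    ignore: Iterable[str] = (),
    groups: Optional[Dict[str, Dict[str, str]]] = None,
) -> Record:
    """Blank the ignored segments and replace grouped categories by their group name."""
    groups = groups or {}
    ignored = set(ignore)
    values = record._asdict()
    for segment in ("subject", "behavior", "modifier"):
        if segment in ignored:
            values[segment] = ""
        else:
            mapping = groups.get(segment, {})
            values[segment] = mapping.get(values[segment], values[segment])
    return Record(**values)


# Hypothetical grouping: treat walk, jog, and run as one category.
GROUPS = {"behavior": {"walk": "locomotion", "jog": "locomotion", "run": "locomotion"}}

rec_a = Record(3.0, "mother", "jog", "fast")
rec_b = Record(3.2, "mother", "run", "slow")

reduced_a = reduce_precision(rec_a, ignore=["modifier"], groups=GROUPS)
reduced_b = reduce_precision(rec_b, ignore=["modifier"], groups=GROUPS)

# At full precision the records disagree; at the reduced level they agree.
same_at_full_precision = rec_a[1:] == rec_b[1:]
same_at_reduced_precision = reduced_a[1:] == reduced_b[1:]
print(same_at_full_precision, same_at_reduced_precision)
```

The design choice here is to reduce precision before comparison rather than during it, so that the same comparison routine can be reused at every level of detail.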

Finally, outcomes are presented at different levels: a list of coupled records and their assessment (agreement or disagreement) for a detailed analysis of reliability per case (Figure 2), which is shown only when instances of behavior are labeled with a frequency-based comparison; a confusion matrix that lists agreements on the diagonal and disagreements in off-diagonal cells (Figure 3); and an overview of reliability statistics, including percentage of agreement, index of concordance, Pearson's r, and Cohen's kappa (Figure 4).

AVAILABILITY

The Observer is commercially available from Noldus Information Technology and various international distributors. Readers can contact the first author for more information or visit the product homepage on the Internet (http://www.noldus.com/products/observer).

REFERENCES

Bakeman, R., & Quera, V. (1995). Analyzing interaction: Sequential analysis with SDIS and GSEQ. New York: Cambridge University Press.

Bloor, R. N. (1983). A computer program to determine interrater reliability for dichotomous-ordinal rating scales. Behavior Research Methods & Instrumentation, 15, 615.

Bravais, A. (1846). Analyse mathématique sur les probabilités des erreurs de situation d'un point. Mémoires présentés par divers savants à l'Académie royale des sciences de l'Institut de France, 9, 255-332.

Burns, E., & Cavallaro, C. (1982). A computer program to determine interobserver reliability statistics. Behavior Research Methods & Instrumentation, 14, 42.

Chan, T. S. C. (1987). A DBASE III program that performs significance testing for the Kappa coefficient. Behavior Research Methods, Instruments, & Computers, 19, 53-54.

Cohen, J. (1960). A coefficient of agreement for nominal scales. Educational & Psychological Measurement, 20, 37-46.

Galton, F. (1888). Co-relations and their measurement, chiefly from anthropometric data. Proceedings of the Royal Society of London, 45, 135-145.

Haccou, P., & Meelis, E. (1992). Statistical analysis of behavioural data: An approach based on time-structured models. Oxford: Oxford University Press.

Hollenbeck, A. R. (1978). Problems of reliability in observational data. In G. P. Sackett (Ed.), Observing behavior: Vol. 2. Data collection and analysis methods (pp. 79-98). Baltimore: University Park Press.

Johnson, S. M., & Bolstad, O. D. (1973). Methodological issues in naturalistic observation: Some problems and solutions for field research. In L. A. Hamerlynck, L. C. Handy, & E. J. Mash (Eds.), Behavior change: Methodology, concepts, and practice (pp. 7-67). Champaign, IL: Research Press.

MacLean, W. E., Tapp, J. T., & Johnson, W. L. (1985). Alternate methods and software for calculating interobserver agreement for continuous observation data. Journal of Psychopathology & Behavioral Assessment, 7, 65-73.

Noldus, L. P. J. J. (1991). The Observer: A software system for collection and analysis of observational data. Behavior Research Methods, Instruments, & Computers, 23, 415-429.

Noldus, L. P. J. J., Trienes, R. J. H., Hendriksen, A. H. M., Jansen, H., & Jansen, R. G. (2000). The Observer Video-Pro: New software for the collection, management, and presentation of time-structured data from videotapes and digital media files. Behavior Research Methods, Instruments, & Computers, 32, 197-206.

Pearson, K. (1920). Notes on the history of correlation. Biometrika, 13, 25-45.

Repp, A. C., Harman, M. L., Felce, D., van Acker, R., & Karsh, K. G. (1989). Conducting behavioral assessments on computer-collected data. Behavioral Assessment, 11, 249-268.

Robinson, B. F., & Bakeman, R. (1998). ComKappa: A Windows '95 program for calculating kappa and related statistics. Behavior Research Methods, Instruments, & Computers, 30, 731-734.

Strube, M. J. (1989). A general program for the calculation of the kappa coefficient. Behavior Research Methods, Instruments, & Computers, 21, 643-644.

Suen, H. K., & Ary, D. (1989). Analyzing quantitative behavioral observation data. Hillsdale, NJ: Erlbaum.

Thomann, B. (2001). Observation and judgment in psychology: Assessing agreement among markings of behavioral events. Behavior Research Methods, Instruments, & Computers, 33, 339-348.

Valiquette, C. A. M., Lesage, A. D., Cyr, M., & Toupin, J. (1994). Computing Cohen's kappa coefficients using SPSS MATRIX. Behavior Research Methods, Instruments, & Computers, 26, 60-61.

van der Vlugt, M. J., Kruk, M. R., van Erp, A. M. M., & Geuze, R. H. (1992). CAMERA: A system for fast and reliable acquisition of multiple ethological records. Behavior Research Methods, Instruments, & Computers, 24, 147-149.

Watkins, M. W., & Larimer, L. D. (1980). Interrater agreement statistics with the microcomputer. Behavior Research Methods & Instrumentation, 12, 466.

(Manuscript received November 12, 2002; revision accepted for publication May 18, 2003.)


recording process is regarded as tallying instances of behavior for a number of behavioral categories (Table 2). The chronology of the observed process cannot be deduced from this table, since there is no temporal order in the list of cases.

In theory, these different approaches could be reflected by different data-logging methods. It is clear that Table 1 contains nominal data, whereas Table 2 contains interval data. However, there have been two developments in the past 15 years that have caused a high degree of standardization in data logging. First of all, the introduction of computer-aided recording of behavior has resulted in a major shift toward logging behaviors as nominal data, even if logging them as interval data would have sufficed to answer the research questions. This is because the computer can record the time stamp automatically and can easily turn the sequential data of the kind presented in Table 1 into the contingency table presented in Table 2. In other words, one can easily transform nominal data into interval data but not the other way round, so it is always safer to log behaviors as nominal data. Second, thanks to the increased availability and ease of use of applications that allow the coding of video at single-frame accuracy (Noldus et al., 2000), researchers more and more use continuous sampling instead of time sampling (interval sampling). The philosophy behind this development is simple: One gets more information with hardly any extra effort. In continuous sampling, instances of observational data are recorded continuously, which results in the recording of the same instance of behavior at different times for different observers. This makes it difficult to tell which behavioral records from the observers refer to the same instance of behavior, which is no problem at all when time sampling is used. Moreover, since observers may overlook behaviors, accidentally enter a behavior, or record a behavior that they think they saw but that did not occur, just determining the number of instances of behavior is difficult. Because researchers may use continuous sampling when interval sampling or even old-fashioned tallying of behaviors per category would have sufficed, one cannot decide upon an approach to reliability analysis on the basis of the data recording method only, and one must take research goals into consideration.

One chooses the labeling instances of behavior approach if the focus is on qualitative analysis of data; in other words, if it is important that the observers agree on the assessment of each specific instance of behavior (e.g., because one is interested in the temporal and sequential analysis of the data). For instance, in usability studies, the human observer may need to know the exact sequence of events that causes the test participant to make an error in the operation of the computer interface that is being studied. Or, in the study of mother–child interactions, one may be interested in the presence and significance of particular sequential patterns of behaviors, and one thus needs an accurate registration of the sequence of events.

In contrast, the tallying instances of behavior approach is simpler to apply and does not require linking of specific behavioral records. One chooses this approach if the focus is on quantitative analysis of data; in other words, if it is important that the observers agree on the number of behavioral instances for each behavioral category, and the timing and sequence of behavioral instances are not of interest. For instance, if one needs to answer a research question such as "Is there more shouting in the classroom during biology class than during math class?" it is not important who does the shouting, when during the course of the class the shouting is done, or what else is happening in the classroom. Just the count of instances of shouting matters.

Table 5
Example of Two Streams of Data From the Same Scene Recorded by Different Observers

    Observer 1            Observer 2
Time    Behavior      Time    Behavior
 0      walk           0      walk
 3      jog            5      run
 8      hold           8      hold
10      walk          12      run
13      run           16      walk
17      end           17      end

Table 6
Tallying Instances of Behavior With a Duration-Based Comparison

Case    Observer 1    Observer 2
Jog         5             0
Walk        6             6
Run         4             7
Hold        2             4

Table 7
Confusion Matrix Resulting From the Data of Table 6

                              Observer 1
Observer 2      Jog   Walk   Run   Hold   Disagreement   Total
Jog              0     –      –     –          0            0
Walk             –     6      –     –          0            6
Run              –     –      4     –          3            7
Hold             –     –      –     2          2            4
Disagreement     5     0      0     0          –            5
Total            5     6      4     2          5           22
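The step from the time-stamped records of Table 5 to the tallies of Table 6 and the cells of Table 7 can be sketched in a few lines of Python. This is an illustrative sketch only, not the algorithm implemented in The Observer. The function names are hypothetical, and the rule used for the confusion matrix (agreement is the smaller of the two tallies, and the surplus of either observer goes into a disagreement cell) is an assumption inferred from the numbers in Table 7.

```python
from collections import defaultdict

# Records from Table 5 as (time, behavior); "end" only closes the last behavior.
OBSERVER_1 = [(0, "walk"), (3, "jog"), (8, "hold"), (10, "walk"), (13, "run"), (17, "end")]
OBSERVER_2 = [(0, "walk"), (5, "run"), (8, "hold"), (12, "run"), (16, "walk"), (17, "end")]
CATEGORIES = ["jog", "walk", "run", "hold"]


def duration_tallies(records):
    """Sum the time spent in each behavior (duration-based tallying)."""
    totals = defaultdict(int)
    for (start, behavior), (stop, _next_behavior) in zip(records, records[1:]):
        totals[behavior] += stop - start
    return dict(totals)


def tally_confusion(tallies_1, tallies_2, categories):
    """Per category: agreed amount plus the surplus recorded by either observer."""
    cells = {}
    for category in categories:
        a = tallies_1.get(category, 0)
        b = tallies_2.get(category, 0)
        cells[category] = {
            "agreement": min(a, b),
            "surplus_observer_1": max(a - b, 0),
            "surplus_observer_2": max(b - a, 0),
        }
    return cells


tallies_1 = duration_tallies(OBSERVER_1)
tallies_2 = duration_tallies(OBSERVER_2)
cells = tally_confusion(tallies_1, tallies_2, CATEGORIES)

for category in CATEGORIES:
    print(category, tallies_1.get(category, 0), tallies_2.get(category, 0), cells[category])
```

Under these assumptions, the agreement values correspond to the diagonal of Table 7, the surplus of Observer 1 to the Disagreement row, and the surplus of Observer 2 to the Disagreement column.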

394 JANSEN, WIERTZ, MEYER, & NOLDUS

Both approaches to reliability analysis have been discussed briefly in the literature. For example, Johnson and Bolstad (1973) noted that when you compute observer agreement on the basis of the sum of the instances of behavior per behavioral category per data set, high observer agreement might be misleading, because this agreement may have been based on different instances of behavior. This is indeed true when one is interested in the evaluation of sequence, timing, or duration of the recorded behaviors. We, however, want to point out that if, for analysis, one is not interested in the sequence, timing, and duration of instances of behaviors, but only in overall statistics (count, total duration, or mean duration) for the complete observation, this method is a valid and preferable alternative, because it better fits the analysis goal. Still, it is important that one should always look at the marginal totals in the confusion matrix, and not just at the correlation coefficient, because Pearson's r remains unaffected if one observer consistently overestimates the number of instances of behavior for each behavioral category by a certain factor. For instance, if one observer has exactly twice as many tallies for each behavioral category as a second observer, Pearson's r is the same as it would be if the two observers had identical tallies.
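The insensitivity of Pearson's r to a constant overestimation factor is easy to check numerically. The following Python sketch computes r by hand for a set of tallies and for the same tallies doubled. The `pearson_r` function is a plain textbook implementation written for this illustration, and the second observer's tallies are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation of two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return covariance / sqrt(var_x * var_y)


# Tallies per category for one observer, and a hypothetical second observer
# who records exactly twice as many tallies in every category.
observer_a = [5, 6, 4, 2]
observer_b = [2 * tally for tally in observer_a]

r = pearson_r(observer_a, observer_b)
total_a, total_b = sum(observer_a), sum(observer_b)
print(f"r={r:.3f}, marginal totals: {total_a} versus {total_b}")
```

The correlation is perfect even though the second observer's marginal total is twice that of the first, which is why the marginal totals need to be inspected alongside the coefficient.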

Frequencies of behavioral instances versus durations of behavioral instances. Until now, we have used the phrase instance of behavior. However, we have not defined it exactly, because we felt that there were two valid definitions here too, depending on one's research questions. One could simply state that an instance of behavior is the occurrence of (the onset of) a behavioral event (to be referred to as the frequency-based approach; see Table 3), or one could state that it is a unit of time (e.g., 1 sec) for which behavior must be recorded (to be referred to as the duration-based approach; Table 4).

The interpretation of the phrase instance of behavior has a big impact on the way one does reliability analysis. If one defines an instance of behavior as a behavioral event, one has far fewer cases for comparison. Using unit of time as one's definition of an instance of behavior is actually a way to take the duration of the behavioral events into account for reliability analysis, because behavioral events that last longer will result in more instances of behavior and will have more impact on the reliability scores.
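The difference between the two definitions can be made concrete with the records of Table 5. In the Python sketch below, which is only an illustration with hypothetical function names and an assumed unit of 1 sec, the frequency-based definition yields one instance per behavioral event, whereas the duration-based definition yields one instance per second, so that longer events contribute more cases.

```python
# Records from Table 5 as (time in seconds, behavior); "end" closes the observation.
OBSERVER_1 = [(0, "walk"), (3, "jog"), (8, "hold"), (10, "walk"), (13, "run"), (17, "end")]
OBSERVER_2 = [(0, "walk"), (5, "run"), (8, "hold"), (12, "run"), (16, "walk"), (17, "end")]


def event_instances(records):
    """Frequency-based: one instance per behavioral event (onset)."""
    return [behavior for _time, behavior in records if behavior != "end"]


def unit_instances(records, unit=1):
    """Duration-based: one instance per unit of time (assumed here to be 1 sec)."""
    units = []
    for (start, behavior), (stop, _next_behavior) in zip(records, records[1:]):
        units.extend([behavior] * ((stop - start) // unit))
    return units


events_1 = event_instances(OBSERVER_1)
units_1 = unit_instances(OBSERVER_1)
units_2 = unit_instances(OBSERVER_2)

# Second-by-second comparison: count the units on which both observers agree.
matching_units = sum(1 for a, b in zip(units_1, units_2) if a == b)

print(len(events_1), "events versus", len(units_1), "units of time")
print(matching_units, "of", len(units_1), "units scored identically")
```

With a smaller unit of time, the number of duration-based cases grows accordingly, while the number of frequency-based cases stays the same.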

The frequency-based and duration-based approaches have been discussed in the literature. Hollenbeck (1978) wrote that for duration-based comparison (or second-by-second comparison, as he named it), it is arguable whether observers actually make a decision about a behavior continuously or only as a change in behavior occurs. MacLean et al. (1985) did not engage in much discussion on this. They simply stated that for event data without duration information, one should choose the frequency-based approach, and for event data with duration information, one should choose the duration-based approach.

In our opinion, the authors of both papers passed over what reliability analysis is all about: verifying whether one's data can be trusted to give a fair answer to the research questions. So, by definition, how one does reliability analysis depends on what research questions one needs to answer eventually. If the question is "Do people eat more cookies when they eat together than when they are alone?" then it is clearly related to frequencies of behavior, and consequently, a frequency-based approach to reliability analysis should be chosen. If, however, the question is "Do people spend a longer time eating when they are together than when they are alone?" then it is related to the durations of behavior, and a duration-based approach to reliability analysis should be chosen. Note that if one needs to answer both research questions, one should do two separate reliability analyses in support of the answers.