R r vv r m R r v v v v r r v m V v r v v r v Oblique FAUST Clustering P R = P (X dot d)a D m R m V...

-

Upload

gillian-gwen-gordon -

Category

Documents

-

view

235 -

download

0

description

Transcript of R r vv r m R r v v v v r r v m V v r v v r v Oblique FAUST Clustering P R = P (X dot d)a D m R m V...

r r vv r mR r v v v v r r v mV v r v v r v

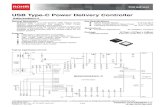

Oblique FAUST ClusteringOblique FAUST Clustering

PR = P(X dot d)<a

D≡ mRmV = oblique vector. d=D/|D|

Separate classR, classV using midpoints of means (mom)midpoints of means (mom) method: calc a

a

View mR, mV as vectors (mR≡vector from origin to pt_mR), a = (mR+(mV-mR)/2)od = (mR+mV)/2 o d (Very same formula works when D=mVmR, i.e., points to left)

d

Training ≡ choosing "cut-hyper-plane" (CHP), which is always an (n-1)-dimensionl hyperplane (which cuts space in two). Classifying is one horizontal program (AND/OR) across pTrees to get a mask pTree for each entire class (bulk classification)Improve accuracy? e.g., by considering the dispersion within classes when placing the CHP. Use1. the vector_of_median, vom, to represent each class, rather than mV, vomV ≡ ( median{v1|vV},2. project each class onto the d-line (e.g., the R-class below); then calculate the std (one horizontal formula per class; using Md's method); then use the std ratio to place CHP (No longer at the midpoint between mr [vomr] and mv [vomv] )

median{v2|vV}, ... )

vomV

v1

v2

vomR

std of these distances from origin

along the d-line

dim 2

dim 1

d-line

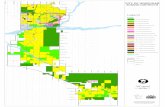

We search (p,d,r) for large gaps in the ypdr and ypd SPTSs.We pick a gap width threshold (GWT) and search for (p,d,r) for which Gap(ypdr)>GWT2 and Gap(ypd)>GWT.

So we need a pTree-based [log(n) time] Gap Finder for these cylindrical gaps

Note that the ypd gap situation changes when you change r and the ypdr gap situation changes when you change d or p, so we can't just search for ypd gaps and then search for ypdr gaps or vice versa either.

Question? Are gaps in these SPTSs independent of p? I.e., can we simplify and always take p=origin? (y-p)o(y-p)=yoy-pop is a shift of yoy by constant, pop, and (y-p)od=yod-pod is a shift of yp≡yod by constant pod.

A pTree-based Cylindrical Gap Finder (CGF):1. Choose a small initial radius, r0, (a*global_density/(n-1) for some a?)

2. Create an r0 cylinder mask about pd-line (Round cluster thru which

pd-line runs should reveal ypd gaps even if it doesn't enclose the cluster).3. Identify gaps in the ypdr SPTS after it is masked to the space between ypd gap1 and gap2

On a dataset,Y, we use 2 real valued functionals (or SPTSs) to define cylindrical gaps, ypd=(y-p)od (for planar gaps) and ypdr=(y-p)o(y-p)-((y-p)od)2 (for cylinder gaps) d=unit_vector

y

ypd gap > GWT

ypdr gap>GWT2

d

y pdr

y pd

p

But, (y-p)o(y-p) - ((y-p)od)2 =yoy-pop - (yod-pod)2

=yoy-pop - yod2 + (2pod)yod - pod2

is not a constant shift of yoy - yod2 So the answer seems to be. NO?We find yp,d gaps using yod but need p (and d) when finding ypdr gaps?

ypd

gap1

dr0 cylinder

p

ypd

gap2

ypd,r

gap

ypd gap1,gap2 mask

ypd,r

gap

Are there problems here? Yes, what if the p,d-line does not pierce our cluster near its center? Next slide.

Clustering with Oblique FAUST using cylindrical gaps (Building cylindrical gaps around round clusters)

What if clusters cannot be isolated by oblique hyperplanes? We make gaps local by adding to the d-line planar gaps, cylindrical gaps around the d-line.

ypd,,r

gap

What if the pd-line doesn't pierce the cluster at its widest?yp,d gaps are still revealed but yp,d,r gaps may not be!

yp.d

gap1

yp,d

gap2

ypd gap1,gap2 mask

2. Identify gaps in the yp,d,r SPTS after it's masked to the space between yp,d gap1 and gap2 CFG: Cylindrical Gap Finder: 1. Create a small radius (r0 = a*global_density/(n-1) for some a?) cylinder about the pd-line

dr0 cylinder

p

Solution ideas?1. Before cylinder masking (step 1) move p using gradient descent of xp,d gap width?

3. Identify dense cylinders that get pieced together later?4. Maximize each dense cylinder before finding the next?

5. We know we are in a cluster (by virtue of the fact that there are yp,d substantial gap1 and gap2) so we then move to neighboring (touching) cylinders with similar density (since they are touching there is no gap and we are confident that we are in the same cluster).

6. If we are clustering to identify outliers (anomaly detection), then the clusters we want to identify are singleton [or doubleton] sets. We can simply test each "cluster" between two gaps (and especially the end ones) for outliership (Note that we will always pierce outlier cluster at their widest).

Clustering with Oblique FAUST using cylindrical gaps 2

2. Before cylinder masking (step 1) move (p,d) using gradient descent and line search to minimize the Variance of ypd

7. For (p,d,r), r very small, do a 2Dplanar search on t=(tp,td) to maximize the variance of ypd inside the r-cylinder (this is not a gradient search but a heuristic search. The variance may not be continuously differentiable in p and d since the set of y changes as you change p or d (keeping r fixed). Also, the SPTS, ypd must be recalculated every time you change p or d).

8. For (p,d), do a 2Dplanar search on t=(tp,td) to maximize the variance of ypd over the entire space, Y. Then gradient descend to maximize the variance, then 2Dplanar search, ...

X x1 x2p1 1 1p2 3 1p3 2 2p4 3 3p5 6 2p6 9 3p7 15 1p8 14 2p9 15 3pa 13 4pb 10 9pc 11 10pd 9 11pe 11 11pf 7 8

xofM 11 27 23 34 53 80118114125114110121109125 83

No zero counts yet (=gaps)

p6 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1

p5 0 0 0 1 1 0 1 1 1 1 1 1 1 1 0

p4 0 1 1 0 1 1 1 1 1 1 0 1 0 1 1

p3 1 1 0 0 0 0 0 0 1 0 1 1 1 1 0

p2 0 0 1 0 1 0 1 0 1 0 1 0 1 1 0

p1 1 1 1 1 0 0 1 1 0 1 1 0 0 0 1

p0 1 1 1 0 1 0 0 0 1 0 0 1 1 1 1

p6' 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0

p5' 1 1 1 0 0 1 0 0 0 0 0 0 0 0 1

p4' 1 0 0 1 0 0 0 0 0 0 1 0 1 0 0

p3' 0 0 1 1 1 1 1 1 0 1 0 0 0 0 1

p2' 1 1 0 1 0 1 0 1 0 1 0 1 0 0 1

p1' 0 0 0 0 1 1 0 0 1 0 0 1 1 1 0

p0' 0 0 0 1 0 1 1 1 0 1 1 0 0 0 0

p3' 0 0 1 1 1 1 1 1 0 1 0 0 0 0 1

p3 1 1 0 0 0 0 0 0 1 0 1 1 1 1 0

p3' 0 0 1 1 1 1 1 1 0 1 0 0 0 0 1

p3 1 1 0 0 0 0 0 0 1 0 1 1 1 1 0

p3' 0 0 1 1 1 1 1 1 0 1 0 0 0 0 1

p3 1 1 0 0 0 0 0 0 1 0 1 1 1 1 0

p3' 0 0 1 1 1 1 1 1 0 1 0 0 0 0 1

p3 1 1 0 0 0 0 0 0 1 0 1 1 1 1 0

p3' 0 0 1 1 1 1 1 1 0 1 0 0 0 0 1

p3 1 1 0 0 0 0 0 0 1 0 1 1 1 1 0

p3' 0 0 1 1 1 1 1 1 0 1 0 0 0 0 1

p3 1 1 0 0 0 0 0 0 1 0 1 1 1 1 0

p3' 0 0 1 1 1 1 1 1 0 1 0 0 0 0 1

p3 1 1 0 0 0 0 0 0 1 0 1 1 1 1 0

p3' 0 0 1 1 1 1 1 1 0 1 0 0 0 0 1

p3 1 1 0 0 0 0 0 0 1 0 1 1 1 1 0

p4' 1 0 0 1 0 0 0 0 0 0 1 0 1 0 0

p4' 1 0 0 1 0 0 0 0 0 0 1 0 1 0 0

p4 0 1 1 0 1 1 1 1 1 1 0 1 0 1 1

p4 0 1 1 0 1 1 1 1 1 1 0 1 0 1 1

p4' 1 0 0 1 0 0 0 0 0 0 1 0 1 0 0

p4' 1 0 0 1 0 0 0 0 0 0 1 0 1 0 0

p4 0 1 1 0 1 1 1 1 1 1 0 1 0 1 1

p4 0 1 1 0 1 1 1 1 1 1 0 1 0 1 1

p4' 1 0 0 1 0 0 0 0 0 0 1 0 1 0 0

p4' 1 0 0 1 0 0 0 0 0 0 1 0 1 0 0

p4 0 1 1 0 1 1 1 1 1 1 0 1 0 1 1

p4 0 1 1 0 1 1 1 1 1 1 0 1 0 1 1

p4' 1 0 0 1 0 0 0 0 0 0 1 0 1 0 0

p4' 1 0 0 1 0 0 0 0 0 0 1 0 1 0 0

p4 0 1 1 0 1 1 1 1 1 1 0 1 0 1 1

p4 0 1 1 0 1 1 1 1 1 1 0 1 0 1 1

p5' 1 1 1 0 0 1 0 0 0 0 0 0 0 0 1

p5' 1 1 1 0 0 1 0 0 0 0 0 0 0 0 1

p5' 1 1 1 0 0 1 0 0 0 0 0 0 0 0 1

p5' 1 1 1 0 0 1 0 0 0 0 0 0 0 0 1

p5' 1 1 1 0 0 1 0 0 0 0 0 0 0 0 1

p5' 1 1 1 0 0 1 0 0 0 0 0 0 0 0 1

p5' 1 1 1 0 0 1 0 0 0 0 0 0 0 0 1

p5' 1 1 1 0 0 1 0 0 0 0 0 0 0 0 1

p5 0 0 0 1 1 0 1 1 1 1 1 1 1 1 0

p5 0 0 0 1 1 0 1 1 1 1 1 1 1 1 0

p5 0 0 0 1 1 0 1 1 1 1 1 1 1 1 0

p5 0 0 0 1 1 0 1 1 1 1 1 1 1 1 0

p5 0 0 0 1 1 0 1 1 1 1 1 1 1 1 0

p5 0 0 0 1 1 0 1 1 1 1 1 1 1 1 0

p5 0 0 0 1 1 0 1 1 1 1 1 1 1 1 0

p5 0 0 0 1 1 0 1 1 1 1 1 1 1 1 0

p6' 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0

p6' 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0

p6' 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0

p6' 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0

p6' 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0

p6' 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0

p6' 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0

p6' 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0

p6 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1

p6 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1

p6 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1

p6 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1

p6 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1

p6 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1

p6 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1

p6 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1

f=p1 and xofM-GT=23. First round of finding Lp gaps

width = 24 =16 gap: [100 0000, 100 1111]= [64,80)

width=23 =8 gap:[010 1000, 010 1111]=[40,48)

width=23 =8 gap:[011 1000, 011 1111]=[56,64)

width= 24 =16 gap: [101 1000, 110 0111]=[88,104)

width=23=8 gap:[000 0000, 000 0111]=[0,8)

OR between gap 1 & 2 for cluster C1={p1,p3,p2,p4}

OR between gap 2 and 3 for cluster C2={p5}

between 3,4 cluster C3={p6,pf} Or for cluster C4={p7,p8,p9,pa,pb,pc,pd,pe}

f=FAUST CLUSTER-fmg: O(logn)

pTree method for finding P-gaps: P ≡ ScalarPTreeSet( c o fM )

DSR

Mining Communications data prediction and anomaly detection on emails, tweets, phone, text

fS0 1 0 1

fD0 1 0 1

fT

0100

fT0 1 0 1

FU

0100

U

23

45

D

23

45

54

3

T

2

fD

0100

TD

UT

We do pTrees conversions and train F in the CLOUD; then download the resulting F to user's personal devices for predictions, anomaly detections.The same setup should work for phone record Documents, tweet Documents (in the US Library of Congress) and text Documents, etc.

fR

0000

fD

0 01 00 00 1

fD

0 0 0 10 1 0 1

fT

0 01 00 00 1

fT

0 0 0 10 1 0 1

fU

0 01 00 00 1

fS

f1,S

f2,S0 0 0 10 1 0 1

fR

0000

0000

DSR

U

23

45

TD

1 0 0 10 1 1 11 0 0 01 1 0 0

D

23

45

54

3

T

2

UT

0 0 0 10 0 1 00 0 0 10 1 0 0

0 0 0 11 0 1 00 0 0 10 1 0 1

0 0 0 11 0 1 00 0 0 10 1 0 1

0 0 0 11 0 1 00 0 0 10 1 0 1

0 0 0 11 0 1 00 0 0 10 1 0 1

sender rec

DSR is binary (1 means doc was sent by Sender to Reciever). Or, Sender can be attr. of Doc(typ,Nm,Sz,HsTg,Sndr)

The pSVD trick is to replace these massive relationship matrixes with small feature matrixes.

Use GradientDescent+LineSearch to minimize sum of square errors, sse, where sse is the sum over all nonblanks in TD, UT and DSR.Should we train User feature segments separately (train fU with UT only and train fS and fR with DSR only?) or train U with UT and DSR, then let

fS = fR = fU , so f = This will be called 3D f.<----fD---->

0 1 0 1 0 1 0 1

<----fT---->0 1 0 1

<----fU---->0 1 1 0

<----fS---->1 0 0 1

<----fR---->

Or training User the feature segment just once, f = This will be called 3DTU f

<----fD---->0 1 0 1 0 1 0 1

<----fT---->0 1 0 1

<fU=fS=fR>

Replace UT with fU and fT feature matrixes (2 features)

Replace TD with fT and fD

Replace DSR with fD, fS, fR

Using just one feature, replace with vectors, f=fDfTfUfSfR or f=fDfTfU

DSR

fSDSR

0 1 0 1

fDTD

0 1 0 1

fTTD

0100

fTUT

0 1 0 1

fUUT

0100

U

23

45

D

23

45

54

3

T

2

fDDSR

0100

TD

UT

fRDSR0000

T

fDTD

0 1 0 1

fTTD

0 1 0 0

fTUT

0 1 0 1

fUUT

0 1 0 0

fSDSR

0 1 0 1

fDDSR

0 1 0 0

fRDSR

0 0 0 0

Train f as follows: Train w 2D matrix, TD Train w 2D matrix UT Train over the 3D matrix, DSR pSVD for

Communication Analytics, f =

sse=nbDSR(dsr-DSRdsr)2sse=nbTD(td-TDtd)2 sse=nbUT(ut-UTut)2

ssed=2nbTD(td-TDtd)t

sset=2nbTD(td-TDtd)d

sseu=2nbUT(ut-UTtd)t

sset=2nbUT(ut-UTtd)u

ssed=2nbDSR(dsr-DSRdsr)sr

sses=2nbDSR(dsr-DSRdsr)drsser=2nbDSR(dsr-DSRdssr)ds

pSVD classification predicts blank cell values.

pSVD FAUST Cluster: Use pSVD to speed up FAUST cluster by looking for gaps in TD rather than TD (i.e., using SVD predicted values rather than actual given TD values). The same goes for DT, UT, TU, DSR, SDR, RDS.

pSVD FAUST Classification: Use pSVD to speed up FAUST Classification by finding optimal cutpoints in TD rather than TD (i.e., using SVD predicted values rather than actual given TD values). Same goes for DT, UT, TU, DSR, SDR, RDS.

E.g., on the T(d1,...,dn) table, the tth row is pSVD estimated as

(ft*d1,...,ft*dn) and the dot product vot is pSVD estimated as k=1..n vk*ft*dk So we analyze gaps in this column of values taken over all rows, t.

A 2-entity matrix can be viewed as a vector space 2 ways.

where f=(fRfC) is a F(N+n) matrix trained to minimize sse=Tij nonblank(fTij-Tij)2.

A real valued vector space, T(C1..Cn) is a 2-entity (R=row entity, C=column entity) labeled relationship over rows R1..RN, columns C1..Cn Let fTi,j= fRi

ofC be the approximation to T

E.g., Document entity: We meld the Document table with the DSR matrix and the DT matrix to form an ultrawide Universal Doc Tbl, UD(Name,Time,Sender,Length,Term1,...,TermN,Receiver1,...,Receivern) where N=~10.000 and n=~1,000,000,000. We train 2 feature vectors to approximate UD, fD and fC where fC=(fST,fS,fL,FT1,...,fTN,fR1,...,fRn).

We have found it best to train with a minimum of matrixes, which means that there will be a distinct fD vectors for each matrix.)

How many bitslices in the PTreeSet for UD? Assuming an average of bitwidth=8 for its columns, that would be 8,000,080,0024 bitslices. That may be too many to be useful (e.g., for download onto an Iphone).

Therefore we can appoximate PTreeSetUD with fUD as above. Whenever we need a Scalar PTreeSet representing a column, Ck, of UD (from PTreeSetUD) we can download that fCk

value plus fD and

multiply the SPTS, fD, by the constant, fCk to get a "good" approximation to the actual SPTS needed.

fTcolj=fRtrfCj

=fR1 fCj

: fRN

= fR1fCj

:

fRNfCj

fTrowi=fRifC=fRi

fC1...fCn

= fRifCn

... fRifCn

One forms each SPTS by multiplying a SPTS by a number (Md's alg) So we only need the two feature SPTSs. to get the entire PTS(fT) which approximates PTS(T)

Assuming one feature (i.e., F=1):

We note that the concept of the join (equijoin) which is so central to the relational model, is not necessary when we use the rolodex model and focus on entities (each entity, as a join attribute is pre-joined.)

A vector space is closed under addition (adding one vector componentwise to and multiplication by a scalar (real multiplication or multiplication of a vector by a real number producing another vector).

We also need component-wise multiplication (vector multiplication) (the 1st half of dot product) but is not a required vector space operation. Md and Arjun, do you have code these?Some thoughts on scalar multiplication. It's just shifts and additions? e.g., Take v=(7,1,6,6,1,2,2,4)TR and scalar mult by 3=(0 1 1)

10110001

10110110

11001000

the leftmost 1 bit in 3 shifts each bitslice 1 to the left and those get added to the unshifted bitslices (due to the units 1 bit.

The results bitslices are:r3 r2 r1 r0v2 v1 v0 due to the 1x21 in 3

v2 v2 v1 v0 due to the 1x21 in 3

v2 v2+v1 v1+v0 v0

Note vi + vj = vi XOR vj with carry vi AND vj

Customer

1

2

3

4

Item

6

5

4

3

Gene

111

Doc

1

2

3

4

Gene

113

Exp

11 11 11 11

1 2 3 4 Author

1 2 3 4 G 5 6term 7

5 6 7People

11

11

11

3

2

1

Doc

2 3 4 5PI

People

cust item card

authordoc card

termdoc card

docdoc

expgene card

genegene card (ppi)

expPI card

genegene card (ppi)

mov

ie

0 0 0 0

0 2

0 0

3 0 0 0

1 0 0

5 0

0

0

0

5

1

2

3

4

4 0 0

0 0 0

5

0

0

1

0

3

0

0

customer rates movie card

0 0 0 0

0 0

0 0

0 0 0 0

0 0 0

1 0 0

0

0

0

1

0 0 0

0 0 0

1

0

0

0

0

0

customer rates movie as 5 card

4

3

2

1

Course

Enrollments

76

54

32

t

1

termterm card (share stem?)

2 3 4 5PI

UT

0 0 0 10 0 1 00 0 0 10 1 0 0

DSR

0 0 0 11 0 1 00 0 0 10 1 0 1

0 0 0 11 0 1 00 0 0 10 1 0 1

0 0 0 11 0 1 00 0 0 10 1 0 1

0 0 0 11 0 1 00 0 0 10 1 0 1

sender rec

Recalling the massive interconnection of relationships between entities, any analysis we do on this we can do after estimating each matrix using pSVD trained feature vectors for the entities.

On the next slide we display the pSVD1 (one feature) replacement by a feature vector which approximates the non-blank cell values and predicts the blanks.

On this slide we display the pSVD1 (one feature) replacement by a feature vector which approximates the non-blank cell values and predicts the blanks.

DocSenderReceiver

ExpPI

GG2

fG1

TD

DD

ExpG

GG1

UserMovie ratings

Enroll

1

2

3

4

Gene2

1234

Item

111

Doc

543

G3

113

Expe

rime

nt

1 2 3 4 G1 5 6 T1 7

=Customer=users Author 1 2 3 4 5 62 3 4 52 3 4 5 7 3 4 5 62 3 4 5 3 42 3People =

mov

ie

1

2

3

4

3

2

1

Course

32

T2

1TermTerm

UT

fUT,T

fDSR,D

111

f DSR,R

fCI,C

CI

fTT,T1fG3

fG5

fG4

32

fTT,T2

1

AD

11

f E2

fE,S

fE,C11

f D2

1 2 3 4 5 62 3 4 52 3 4 5 7 3 4 5 62 3 4 5 3 42 3

fUM,M

fDSR,S

fTD,T

111

f TD,D fTT,T1

fE

11

f E1

fG2

fCI,I

fUT,U

3

2

1

fD1

111

f TD,D

fUM,M

Train the following feature vector thru gradient descent of sse, but that each set of matrix feature vectors be trained on only the sse over the nonblank cells of that matrix.

/ train these 2 on GG1 \ /train these 2 on EG\ / train on GG2 \

And the same for the rest of them.Any data mining we can do with the matrixes, we can do

(estimate) with the feature vectors (e.g., netflix like recommenders, prediction of blank cell values, FAUST gap based classification and clustering including anomaly detection).

fG1 fG2 fG3fG5

1 1

fE2 fG4

RC C1 C2 ... Cn

R1R2

.

.

.

RN

A n-dim vector space, RC(C1,...,Cn) is a matrix or TwoEntityRelationship (with row entity instances R1...RN and column entity instances C1...Cn.) ARC will denote the pSVD approximation of RC:

0 0 0 10 0 1 00 0 0 10 1 0 0

0 0 0 10 0 1 00 0 0 10 1 0 00 0 0 10 0 1 00 0 0 10 1 0 0

fC 4 1 ... 3A N+n vector, f=(fR, fC) defines prediction, pi,j=fRifCj

, error, ei,j=pi,j-RCi,j then ARCf,i,j≡fRifCj

and ARCf,row_i= fRifC= fRi

(fC1...fCn

)= (fRifC1

...fRifCn

). Use sse gradient descent to train f.

Compute fCodt=k=1..nfCkdk form constant SPTS with it, and multiply that SPTS by SPTS, fR.

Any datamining that can be done on RC can be done using this pSVD approximation of RC, ARCe.g., FAUST Oblique (because ARCodt should show us the large gaps quite faithfully).

Given any K(N+n) feature matrix, F=[FR FC], FRi=(f1Ri

...fKRi), FCj

=(f1Cj...fKCj

) pi,j=fRiofCj

=k=1..KfkRifkCj

d 1 0 ... 0

fR

1::6

42351522

FC=f1C

f2C

2 4 ... 5

1::2

42331521

f1R f2R

fR1(fCodt)

Once f is trained and if d=unit n-vector, the SPTS, ARCfodt, is:

k=1..n fR2fCk

dk : k=1..n fRN

fCkdk

fR1k=1..n fCk

dk = fR2

k=1..n fCkdk

: fRN

k=1..n fCkdk

k=1..n fR1fCk

dk = (fR1fC)odt =

(fR2fC)odt

: (fRN

fC)odt

fR2(fCodt)

fRN

(fCodt)

Once F is trained and if d=unit n-vector, the SPTS, ARCodt, is:

(FR1oFC)odt = (FR2

oFC)odt

: (FRN

oFC)odt

k=1..n(f1R1f1Ck

+..+fKR1fKCk

)dk = k=1..n(f1R2

f1Ck+..+fKR2

fKCk)dk

: k=1..n(f1RN

f1Ck+..+fKRN

fKCk)dk

FR1o(FCodt)

FR2o(FCodt)

: FRN

o(FCodt) Keeping in mind that we have decided (tentatively) to approach all matrixes as rotatable tables, this then is a

universal method of approximation. The big question is, how good is the approximation for data mining? It is known to be good for Netflix type recommender matrixes but what about others?

1 2 3 4 5 6 7 8 9 a b1 12 53 2 3456 37 28 9 4 310 111 3 41213 114 515 2

t sse Rnd 1.67 24.357 1 0.1 4.2030 2 0.124 1.8173 3 0.085 1.0415 4 0.16 0.7040 5 0.08 0.5115 6 0.24 0.3659 7 0.074 0.2741 8 0.32 0.2022 9 0.072 0.1561 10 0.4 0.1230 11 0.07 0.0935 12 0.42 0.0741 13 0.05 0.0599 14 0.07 0.0586 15 0.062 0.0553 16 0.062 0.0523 17 0.062 0.0495 18 0.063 0.0468 19 2.1 0.0014 20 0.1 0.0005 21 0.2 0.0000 22

e 1 2 3 4 5 6 7 8 9 a b1 2 3 456 7 8 9 a b c d e f

-0.23-2.54 0.078 -4.52 -2.22 -3.56 -3.56 -3.56 -3.02 -2.22 -3.08 -3.850.213 -3.46 -0.18 -1.56 -2.50 -2.50 -2.50 -2.12 -1.56 -2.17 -2.71-1.99 -3.85 -3.54 -1.74 -2.78 -2.78 -2.78 -2.36 -1.74 -2.41 -3.02-1.99 -3.85 -3.54 -1.74 -2.78 -2.78 -2.78 -2.36 -1.74 -2.41 -3.02-1.52 0.047 -2.71 -1.33 -2.13 -2.13 -2.13 -1.81 -1.33 -1.85 -2.31-1.68 -3.26 -3.00 -1.47 -2.36 -2.36 -2.36 -0.00 -1.47 -2.04 -2.55-1.99 -3.85 -3.54 -1.74 -2.78 -2.78 -2.78 -2.36 -1.74 -2.41 -3.02-2.12 -4.11 0.215 -1.86 -2.97 -2.97 -2.97 -2.52 -1.86 -2.58 -0.22-1.20 -2.32 -2.14 -1.05 -1.68 -1.68 -1.68 -1.42 -0.05 -1.46 -1.82-2.53 -4.90 -4.50 -2.21 -3.54 -3.54 -3.54 -3.00 -2.21 -0.07 0.156-1.99 -3.85 -3.54 -1.74 -2.78 -2.78 -2.78 -2.36 -1.74 -2.41 -3.02-1.20 -2.32 -2.14 -0.05 -1.68 -1.68 -1.68 -1.42 -1.05 -1.46 -1.82-2.54 0.078 -4.52 -2.22 -3.56 -3.56 -3.56 -3.02 -2.22 -3.08 -3.850.008 -3.85 -3.54 -1.74 -2.79 -2.79 -2.79 -2.36 -1.74 -2.41 -3.02

Of course if we take the previous data (all nonblanks=1. and we only count errors in those nonblarnks, then f=pure1 has sse=0. But of course, if it is a fax-type image (of 0/1s) then there are no blank (=0 positions must be assessed error too). So we change the data.

1 2 3 4 5 6 7 8 9 a b 1.19 2.30 2.12 1.04 1.67 1.67 1.67 1.41 1.04 1.44 1.81

1 2 3 4 5 6 7 8 9 a b1.03 2.13 1.49 1.67 1.67 1.27 1.41 1.67 1.78 1.00 2.12

t sse 0.25 13.128 1.0411.057 0.410.633 0.610.436 0.410.349 0.610.298 0.410.266 0.610.241 0.510.223 0.410.209 110.193 0.410.182 0.510.176 0.510,171 0.5 10.167 0.510.164 0.510.161 0.510.159 0.510.158 0.510.157 0.510.156 0.510.155 0.510.154 0.510.154

1 2 3 4 5 6 7 8 9 a b c d e f 0.06 -0.0 0.02 -0.0 -0. -0. -0. -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0

1 2 3 4 5 6 7 8 9 a b c d e f 0.01 -0.0 0.04 -0. -0. -0.0 -0.0 -0. 0.05 -0.0 0.04 -0.0 -0.0 -0.0 0.01

1 2 3 4 5 6 7 8 9 a b1 12 13 1 14 5 6 17 189 1 110 111 1 11213 114 115 1

Next, consider a fax-type image dataset (blanks=zeros. sse summed over all cells).e 1 2 3 4 5 6 7 8 9 a b c d e f1 2 3 456 7 8 9 a b c d e f

0.605 -0.16 -0.35 -0.00 -0.00 -0.00 -0.00 -0.00 -0.00 -0.09 -0.26 -0.00-0.19 0.920 -0.17 -0.00 -0.00 -0.00 -0.00 -0.00 -0.00 -0.04 -0.12 -0.000.255 -0.30 0.327 -0.00 -0.00 -0.00 -0.00 -0.00 -0.00 -0.18 -0.49 -0.00-0.00 -0.00 -0.00 -0.00 ******************-0.00 -0.00 -0.00 -0.00 *****-0.00 -0.00 -0.00 -0.00 ******************-0.00 -0.00 -0.00 -0.00 *****-0.19 0.920 -0.17 -0.00 -0.00 -0.00 -0.00 -0.00 -0.00 -0.04 -0.12 -0.00-0.00 -0.00 -0.00 -0.00 ******************0.999 -0.00 -0.00 -0.00 *****-0.00 -0.00 -0.00 -0.00 ******************-0.00 -0.00 -0.00 -0.00 *****-0.60 -0.24 0.453 -0.00 -0.00 -0.00 -0.00 -0.00 -0.00 -0.15 0.596 -0.00-0.00 -0.00 -0.00 -0.00 ******************-0.00 0.999 -0.00 -0.00 *****-0.35 -0.14 -0.31 -0.00 -0.00 -0.00 -0.00 -0.00 -0.00 0.911 0.765 -0.00-0.00 -0.00 -0.00 -0.00 ******************-0.00 -0.00 -0.00 -0.00 *****-0.00 -0.00 -0.00 0.999 ******************-0.00 -0.00 -0.00 -0.00 *****-0.19 0.920 -0.17 -0.00 -0.00 -0.00 -0.00 -0.00 -0.00 -0.04 -0.12 -0.000.605 -0.16 -0.35 -0.00 -0.00 -0.00 -0.00 -0.00 -0.00 -0.09 -0.26 -0.00

-0.00 -0.00 -0.00-0.00 -0.00 -0.00-0.00 -0.00 -0.00**********************************-0.00 -0.00 -0.00**********************************-0.00 -0.00 -0.00*****************-0.00 -0.00 -0.00**********************************-0.00 -0.00 -0.00-0.00 -0.00 -0.00

Without any gradient descent rounds we can knock down column 1 with T=t+(tr1...tcf) but sse=11.017 (can't go below its min=10.154)

6t=1 2 3 4 5 6 7 8 9 a b c d e3.88 0.78 0.52 0.26 0.00 0.00 0.00 0.26 0.26 0.26 0.52 0.00 0.00 0.00 1 1 1 1

1 1

1 1 1 1 1

1 1 1

-0.4 -17 -1.0 .01 .01 -17 -17 .02 -1.9 -17 -1.9 .01 -17 f 1 2 3 4 5 6 7 8 9 a b c d0.00 0.26 0.00 0.25 0.00 0.00 0.00 0.00 0.00 -0.0 0.00 0.00 0.00 0.00 -0.0 -0.2 -0.1 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.1 -0.0 -0.0 -0.0 0.99 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.2 0.86 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.1 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 0.99 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 0.99 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 0.00 0.00 1.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 1.00 0.00 0.00 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 0.99 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 0.99 0.99 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 0.99 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 0.99 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.2 -0.1 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.0 -0.1 -0.0 -0.0

-17 -0.5 e f0.00 0.25-0.0 -0.0-0.0 -0.0-0.0 -0.0-0.0 -0.0-0.0 -0.0-0.0 -0.0-0.0 -0.0-0.0 -0.00.00 0.00-0.0 -0.0-0.0 -0.0-0.0 -0.0-0.0 -0.0-0.0 -0.0-0.0 -0.0

tr1 tr2 tr3 tr4 tr5 tr6 tr7 tr8 tr9 tra trb trc trd tre trf tc1 tc2 tc3 tc4 tc5 tc6 tc7 tc8 tc9 tca tcb tcc tcd tce tcf

Minimum sse=10.154

![( Z } } ( Z : } ] v d l & } } v / r í í DD^ } o } P v U ' u v Ç î ì r î î & µ ... · ï ï X d v ] ] } v W o v } D ] v v v } ( / r í í DD^ ï í ï ð X d v ] ] } v }](https://static.fdocuments.us/doc/165x107/5ee0df91ad6a402d666bf443/-z-z-v-d-l-v-r-dd-o-p-v-u-u-v-r-.jpg)

![Z ] v P K ] } v ( Ks/ r í õ > } l r } Á v d Z d } Ç } t Ç ... Toyota Restart Manual 10-4-2020.pdfZ ] v P K ] } v ( Ks/ r í õ > } l r } Á v d Z d } Ç } t Ç ...](https://static.fdocuments.us/doc/165x107/61224a522ac9e35f3d2c7089/z-v-p-k-v-ks-r-l-r-v-d-z-d-t-toyota-restart.jpg)

![d o r í Z } v } Z µ ] } ( W r ] D ] v P Z o } v í ì r ì ò ...€¦ · z } v } z µ ] } ( w r ] d ] v p z o } v í ì r ì ò r î ì î ì p ] v d v p o } ] w l p e/d z ( x](https://static.fdocuments.us/doc/165x107/5f8fa81bc650b204ff09bf85/d-o-r-z-v-z-w-r-d-v-p-z-o-v-r-z-v-z-.jpg)

![dzW r Zd/&/ d d ^, d · dzW r Zd/&/ d d ^, d EK X ^ X/D X X ì ñ ï ( } v î ì ò ^ ] ~^ ] } v ] d Ç ] ( ] , } o W d Æ } v À ] ] } v / v X K v v } µ o À](https://static.fdocuments.us/doc/165x107/5e684541e0166763be5fc0c6/dzw-r-zd-d-d-d-dzw-r-zd-d-d-d-ek-x-xd-x-x-v.jpg)

![hE/d E d/KE^ W } } } o v > ] ] } v ^ À ] , ^ K& ^d d , ^ K ......} ( } ] v u v î õ rK r ì ð î ñ rE } À r õ ô ì õ r r í ò KhEdZz , K& ^d d , K& 'Ks ZED Ed D/E/^d Z &KZ](https://static.fdocuments.us/doc/165x107/61349030dfd10f4dd73bcf52/hed-e-dke-w-o-v-v-k-d-d-k-.jpg)