Probability, Random Processes, and Statistical Analysis

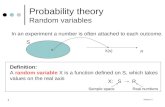

Transcript of Probability, Random Processes, and Statistical Analysis