Probabilistic Model-Agnostic Meta-Learning

Chelsea Finn*, Kelvin Xu*, Sergey Levine

*equal contribution

Deep learning requires a lot of data.

Few-shot learning / meta-learning: incorporate prior knowledge from previous tasks for fast learning of new tasks

Given 1 example of each of 5 classes, classify new examples

few-shot image classification

Ambiguity in Few-Shot Learning

Important for:

- learning to actively learn

- safety-critical few-shot learning (e.g. medical imaging)

- learning to explore in meta-RL

Can we learn to generate hypotheses about the underlying function?

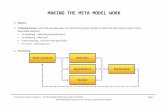

Key idea: Train over many tasks to learn a parameter vector θ that transfers

Meta-test time:

Background: Model-Agnostic Meta-Learning

Optimize for pretrained parameters

MAML:

Finn, Abbeel, Levine ICML ’17
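The equations on this slide did not survive extraction. As a rough reminder, MAML (Finn et al. '17) adapts at meta-test time with a gradient step, φ = θ − α ∇θ L(θ, D_train), and meta-trains θ so that the adapted parameters φ do well on held-out data from the same task. The sketch below illustrates this on a toy problem and is not the authors' code. The linear model, the task family `sample_task`, and the step sizes `alpha` and `beta` are all assumptions chosen so that the gradient through the inner step can be written exactly in NumPy.

```python
import numpy as np

def mse(w, X, y):
    """Mean squared error of the linear model X @ w."""
    return float(np.mean((X @ w - y) ** 2))

def mse_grad(w, X, y):
    """Gradient of the mean squared error with respect to w."""
    return 2.0 * X.T @ (X @ w - y) / len(y)

def adapt(theta, X_tr, y_tr, alpha):
    """Inner loop: one gradient step from the shared initialization theta."""
    return theta - alpha * mse_grad(theta, X_tr, y_tr)

def maml_meta_grad(theta, X_tr, y_tr, X_te, y_te, alpha):
    """Gradient of the post-adaptation test loss with respect to theta.

    For a linear model with squared loss the training Hessian H is constant,
    so d(phi)/d(theta) = I - alpha * H and the chain rule is exact.
    """
    phi = adapt(theta, X_tr, y_tr, alpha)
    H = 2.0 * X_tr.T @ X_tr / len(y_tr)
    return (np.eye(len(theta)) - alpha * H) @ mse_grad(phi, X_te, y_te)

def sample_task(rng, n=5):
    """Hypothetical task family: y = a * x + b with task-specific (a, b)."""
    a, b = rng.normal(2.0, 0.5), rng.normal(-1.0, 0.5)
    def draw():
        x = rng.uniform(-1.0, 1.0, size=n)
        X = np.stack([x, np.ones(n)], axis=1)  # columns: x, constant 1
        return X, a * x + b
    return draw(), draw()  # (train split, test split)

def meta_train(steps=500, alpha=0.1, beta=0.05, seed=0):
    """Outer loop: stochastic gradient descent on the post-adaptation loss."""
    rng = np.random.default_rng(seed)
    theta = np.zeros(2)
    for _ in range(steps):
        (X_tr, y_tr), (X_te, y_te) = sample_task(rng)
        theta = theta - beta * maml_meta_grad(theta, X_tr, y_tr, X_te, y_te, alpha)
    return theta

theta = meta_train()
print("meta-learned initialization:", theta)
```

With a neural network the factor `I - alpha * H` is not available in closed form and would normally come from automatic differentiation through the inner step.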

How to reason about ambiguity?

Modeling ambiguity

Can we sample classifiers?

Intuition of our approach: learn a prior where a random kick can put us in different modes

smiling, hat

smiling, young

Meta-learning with ambiguity

Sampling parameter vectors

approximate with MAP

this is extremely crude

but extremely convenient!
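A minimal sketch of the sampling procedure described above, under stated assumptions: draw θ from a learned Gaussian prior (the "random kick"), then take a few gradient steps on the task's training data as the crude MAP approximation. The linear model, the prior parameters `mu` and `log_sigma`, and the toy task are hypothetical and only illustrate how different kicks lead to different adapted models when the data are ambiguous.

```python
import numpy as np

def mse_grad(w, X, y):
    """Gradient of the mean squared error of the linear model X @ w."""
    return 2.0 * X.T @ (X @ w - y) / len(y)

def sample_adapted_params(mu, log_sigma, X_tr, y_tr, alpha, n_samples, rng, n_steps=1):
    """Sample theta ~ N(mu, diag(sigma^2)), then adapt each sample by gradient descent."""
    sigma = np.exp(log_sigma)
    adapted = []
    for _ in range(n_samples):
        theta = mu + sigma * rng.standard_normal(mu.shape)  # the random kick
        phi = theta
        for _ in range(n_steps):
            # Gradient steps on the training loss stand in for MAP inference.
            phi = phi - alpha * mse_grad(phi, X_tr, y_tr)
        adapted.append(phi)
    return np.stack(adapted)

# Ambiguous task: a single training point at x = 0 informs the intercept
# of y = a * x + b but says nothing about the slope.
X_tr = np.array([[0.0, 1.0]])  # columns: x, constant 1
y_tr = np.array([1.0])
rng = np.random.default_rng(0)
phis = sample_adapted_params(np.zeros(2), np.zeros(2), X_tr, y_tr,
                             alpha=0.25, n_samples=200, rng=rng)
print("slope spread:", phis[:, 0].std(), "intercept spread:", phis[:, 1].std())
```

In this toy case the adapted samples agree more on the intercept, which the data constrain, than on the slope, which stays as uncertain as the prior.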

Training is harder. We use amortized variational inference.

(Santos ’92, Grant et al. ICLR ’18)
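The slides do not spell out the variational objective, so the following is only a generic sketch of amortized variational inference in this setting, not the PLATIPUS objective itself. The approximate posterior q over θ is computed directly from the task data rather than optimized per task, a reparameterized sample is adapted with a gradient step, and the loss adds a KL term against the prior. The gradient-based form of the posterior mean, the squared-error stand-in for the negative log-likelihood, and the names `gamma`, `log_var_q`, and `log_var_p` are assumptions.

```python
import numpy as np

def mse(w, X, y):
    """Mean squared error of the linear model X @ w."""
    return float(np.mean((X @ w - y) ** 2))

def mse_grad(w, X, y):
    """Gradient of the mean squared error with respect to w."""
    return 2.0 * X.T @ (X @ w - y) / len(y)

def kl_diag_gaussians(mu_q, log_var_q, mu_p, log_var_p):
    """KL(q || p) between diagonal Gaussians, summed over dimensions."""
    var_q, var_p = np.exp(log_var_q), np.exp(log_var_p)
    terms = log_var_p - log_var_q + (var_q + (mu_q - mu_p) ** 2) / var_p - 1.0
    return 0.5 * float(np.sum(terms))

def task_objective(mu, log_var_p, log_var_q, gamma, alpha, train, test, rng):
    """Single-sample estimate of a negative-ELBO-style loss for one task."""
    (X_tr, y_tr), (X_te, y_te) = train, test
    # Amortized posterior: its mean is a cheap function of the task data
    # (assumed here to be one gradient step on the test loss).
    mu_q = mu - gamma * mse_grad(mu, X_te, y_te)
    # Reparameterized sample, so the estimate is a smooth function of mu_q and log_var_q.
    theta = mu_q + np.exp(0.5 * log_var_q) * rng.standard_normal(mu.shape)
    # Adapt the sample on the training data, as at meta-test time.
    phi = theta - alpha * mse_grad(theta, X_tr, y_tr)
    return mse(phi, X_te, y_te) + kl_diag_gaussians(mu_q, log_var_q, mu, log_var_p)

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=10)
X = np.stack([x, np.ones(10)], axis=1)
y = 2.0 * x - 1.0
loss = task_objective(np.zeros(2), np.zeros(2), np.full(2, -2.0), 0.1, 0.1,
                      (X[:5], y[:5]), (X[5:], y[5:]), rng)
print("single-sample objective:", loss)
```

In a full implementation this loss would be differentiated with respect to the prior and posterior parameters with an automatic differentiation library. The sketch only evaluates it.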

PLATIPUS: Probabilistic LATent model for Incorporating Priors and Uncertainty in few-Shot learning

Ambiguous 5-shot regression:

Ambiguous 1-shot classification:

Collaborators

Sergey Levine, Kelvin Xu

Questions?

Takeaways

Can use hybrid inference in a hierarchical Bayesian model for scalable, uncertainty-aware meta-learning.

Handling ambiguity is particularly relevant in few-shot learning.

Related concurrent work

Kim et al. “Bayesian Model-Agnostic Meta-Learning”: uses Stein variational gradient descent for sampling parameters

Gordon et al. “Decision-Theoretic Meta-Learning”: unifies a number of meta-learning algorithms under a variational inference framework
