[Figure: y (0 to 150) against x (60 to 90), shown with the legend entries polynomial reg, LOESS, and Smoothing spline, and in three panels titled Polynomial Regression (degree=2), LOESS, and Smoothing Spline.]

# Prepare the spam data: classify Y based on 57 X's
> spam=read.table("spam.txt");
> spam=data.frame(spam);
> spam.name=read.table("spam_name.txt", as.is=TRUE);
> for(i in 1:57) names(spam)[i]=as.character(spam.name[i,]);
> names(spam)[58]="Y";
> spam$Y=as.factor(spam$Y);
# Split data into training and testing
> load("spam_ID.Rdata");
> spam.training = spam[ train.id, ];
> spam.test = spam[ test.id, ];
> p = dim(spam)[2] - 1;

> library(randomForest);
> rfModel = randomForest(Y~., data = spam.training, importance = T, mtry = 7, ntree=400 );
#mtry is, by default, sqrt(p) for classification
> yhat.train = predict(rfModel, spam.training )
> table(spam.training$Y, yhat.train);
   yhat.train
      0   1
  0 364   0
  1   1 235
> 1/(365+235)
[1] 0.001666667
> table(rfModel$predicted, spam.training$Y)
      0   1
  0 349  30
  1  15 206

> rfModel$confusion
    0   1 class.error
0 349  15  0.04120879
1  30 206  0.12711864
> (45)/(45+349+206)
[1] 0.075
> yhat.test = predict(rfModel, spam.test)
> table(spam.test$Y, yhat.test);
   yhat.test
      0   1
  0 236   6
  1  19 139
> (25)/(236+6+19+139)
[1] 0.0625
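Each error rate above is the off-diagonal count divided by the total count. A minimal sketch of that arithmetic in base R, using only the counts printed above; `misclass_rate` is a hypothetical helper name and is not part of randomForest:

```r
# Hypothetical helper: share of off-diagonal entries in a square confusion table.
misclass_rate <- function(tab) {
  1 - sum(diag(tab)) / sum(tab)
}

# OOB confusion counts from rfModel$confusion (rows = true class, columns = predicted).
oob_tab <- matrix(c(349, 15,
                    30, 206),
                  nrow = 2, byrow = TRUE,
                  dimnames = list(truth = c("0", "1"), predicted = c("0", "1")))

# Test-set counts from table(spam.test$Y, yhat.test).
test_tab <- matrix(c(236, 6,
                     19, 139),
                   nrow = 2, byrow = TRUE,
                   dimnames = list(truth = c("0", "1"), predicted = c("0", "1")))

oob_err  <- misclass_rate(oob_tab)   # (15 + 30) / 600
test_err <- misclass_rate(test_tab)  # (6 + 19) / 400
print(c(oob = oob_err, test = test_err))
```

The OOB rate (0.075) is much closer to the test rate (0.0625) than the resubstitution rate of 1/600, which is why the OOB figure is the more useful estimate here.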

# Check the components returned by randomForest
> names(rfModel)
> ?randomForest
> rfModel$oob.times
[1] 148 145 144 143 …..
> tmp=rfModel$err.rate
> tmp[c(1:5,397:400),]
            OOB          0         1
[1,] 0.17592593 0.15447154 0.2043011
[2,] 0.19726027 0.17061611 0.2337662
[3,] 0.19325843 0.17110266 0.2252747
[4,] 0.16566866 0.15384615 0.1831683
[5,] 0.16141002 0.14814815 0.1813953
[6,] 0.07833333 0.04120879 0.1355932
[7,] 0.07666667 0.04120879 0.1313559
[8,] 0.07666667 0.04120879 0.1313559
[9,] 0.07833333 0.04120879 0.1355932
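The `oob.times` values near 145 are about what bootstrap sampling predicts. Each tree draws n rows with replacement, so a given row is left out of a tree with probability (1 - 1/n)^n, close to exp(-1), or about 0.368. A rough check in base R, assuming n = 600 training rows (the total of the training confusion table) and the ntree = 400 used in the fit; the simulation only illustrates the sampling and does not call randomForest:

```r
n <- 600      # assumed training size: 364 + 0 + 1 + 235 from the table above
ntree <- 400  # number of trees used in the fit

# Probability that one row is out-of-bag for a single tree.
p_oob <- (1 - 1 / n)^n
expected_oob_times <- ntree * p_oob
print(round(c(p_oob = p_oob, expected = expected_oob_times), 3))

# Simulate the bootstrap draws and count how often each row is left out.
set.seed(1)
oob_counts <- integer(n)
for (b in seq_len(ntree)) {
  in_bag <- unique(sample.int(n, n, replace = TRUE))
  oob_counts[-in_bag] <- oob_counts[-in_bag] + 1L
}
print(summary(oob_counts))
```

The expected count comes out near 147, in line with the 148, 145, 144, 143 printed by `rfModel$oob.times`.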

[Figure: "rfModel", Error (0.05 to 0.25) against trees (0 to 400).]

[Figure: range(tmp) (0.05 to 0.25) against c(0, rfModel$ntree) (0 to 400).]

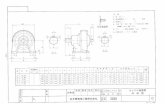

> round(rfModel$importance, dig=3)
               0     1 MeanDecreaseAccuracy MeanDecreaseGini
Wmake      0.001 0.001                0.001            2.481
Waddress   0.001 0.000                0.001            1.541
Wall       0.001 0.003                0.002            3.137
Cdollar    0.017 0.029                0.022           21.712
Cpound     0.001 0.000                0.000            1.293
CAPave     0.023 0.018                0.021           14.539
CAPlongest 0.032 0.026                0.029           15.064
CAPtotal   0.022 0.032                0.026           15.368
> sortedImpt_scaled = sort(importance(rfModel, scale = T)[,3], decreasing = T )
> sortedImp_gini = sort(rfModel$importance[,4], decreasing=T);
> par( mfrow = c(2,1) );
> plot(sortedImpt_scaled, ylim=c(-.1, max(sortedImpt_scaled)+.1), type="h");
> text(1:p, sortedImpt_scaled, substr(names(sortedImpt_scaled), 2, 5), col=3);
> title("importance measured by accuracy - scaled");
> grid(20);
> plot(sortedImp_gini, ylim=c(-1, max(sortedImp_gini)+1), type="h");
> text(1:p, sortedImp_gini, substr(names(sortedImp_gini), 2, 5), col=3);
> title("importance measured by gini");
> grid(20);
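The plot labels come from `substr(names(...), 2, 5)`, which drops the first character of each variable name and keeps the next four. A small base R sketch of the sort-and-label step, using only the eight MeanDecreaseGini values printed above (the model has 57 predictors, so this ordering covers just that subset):

```r
# MeanDecreaseGini for the eight variables shown in the printed importance table.
gini <- c(Wmake = 2.481, Waddress = 1.541, Wall = 3.137, Cdollar = 21.712,
          Cpound = 1.293, CAPave = 14.539, CAPlongest = 15.064, CAPtotal = 15.368)

# Same steps as the plotting code: sort descending, then shorten the names.
sorted_gini <- sort(gini, decreasing = TRUE)
labels <- substr(names(sorted_gini), 2, 5)
print(data.frame(label = labels, gini = unname(sorted_gini)))
```

Because the first character is dropped, CAPlongest, CAPtotal, and CAPave are labelled APlo, APto, and APav, and Cdollar becomes doll.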

[Figure, two panels. Top: "importance measured by accuracy - scaled", sortedImpt_scaled against Index (0 to 50), with remo, excl, free, and doll among the leading labels. Bottom: "importance measured by gini", sortedImp_gini against Index (0 to 50), with excl, remo, doll, and free among the leading labels.]