
Personalized Recommendation on Dynamic Content

Using Predictive Bilinear Models

Wei Chu, Seung-Taek Park

WWW 2009

Audience Science

Yahoo! Labs.

Outline

• Dynamic content

– Yahoo! Front Page Today Module

– Difficulties with new users and new items

• Personalized recommendation

– Global level: “one-size-fits-all” / “most popular”

– Segmented level: “segmentation”

– Individual level: “personalization”

• Methodology

– Predictive bilinear models

• Findings in the case study

• Conclusions


Dynamic Content

Today Module

• By default, the article at F1 is highlighted in the Story position.

• Articles are selected from an hourly refreshed article pool.

• Out-of-date articles are replaced every few hours.

• GOAL: select the most attractive article for the Story position to draw users’ attention and thereby increase user retention.

Dynamic Content


Today Module

a) Click-through rate (CTR) decays over time, e.g. for breaking news.

b) About 40% of clickers are first-time clickers.

c) The lifetime of an article is usually short, only a few hours.

d) The content pool is dynamic.

[Figure: 9 days of data]

Difficulties with Dynamic Content

• Collaborative filtering provides a good solution for “a closed world”

– Overlap in feedback across users is relatively high

– The universe of content items is almost static

• CTR decays over time

– It is difficult to compare users’ feedback on the same article received in different time slots

• The lifetime of an article is usually short, only a few hours

– This reduces overlap in feedback across users

• The content pool is dynamic

– We have to wait for clicks on new items (content-based filtering helps)

– Storing and retrieving historical ratings of retired items is demanding

• About 40% of clickers are first-time clickers

– This is hard on new users without historical ratings (“most popular” is the baseline)

Cold-Start Recommendation

                 existing items            new items
existing users   Collaborative filtering   Content-based filtering
new users        “most popular”            WAIT

Solution: Feature-based modeling


• Users with open profiles

– Demographic information: age, gender, location

– Property usage across the Yahoo! network

– Search logs, purchase history, etc.

• Content profile management

– Static descriptors: categories, title, bag-of-words representation of the textual content, etc.

– Temporal characteristics: popularity, CTR, freshness, etc.

• Feature-based regression models for personalization at the individual level

– New items: initialize popularity based on static meta features

– New users: estimate preferences on items based on user features

Methodology

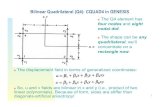

WWW 2009

• Data representation

– User features (D features per user)

– Content features (C features per article)

– Historical feedback (“story click” or not)

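• Predictive bilinear models

– Bilinear score for a user/article pair:

s_{ij} = \sum_{a=1}^{C} \sum_{b=1}^{D} x_{i,a} \, z_{j,b} \, w_{ab}

where z_{j,b} is the b-th feature of user j, x_{i,a} is the a-th feature of item i, and w_{ab} is the affinity between x_{i,a} and z_{j,b}.

Example affinities:

affinity   sports   finance
age 50     0.5      0.8
age 20     0.9      0.2
male       0.6      0.5

Example scores (sum of the affinities of the user's active features for each article): age 20 + male: sports 0.9 + 0.6 = 1.5, finance 0.2 + 0.5 = 0.7; age 50 + male: sports 0.5 + 0.6 = 1.1, finance 0.8 + 0.5 = 1.3. Full model size annotated on the slide: C100 × D1000.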
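The bilinear score can be sketched in a few lines. This is a minimal illustration, assuming one-hot (0/1) features and reusing the toy affinity table above; the helper names `bilinear_score` and `one_hot` and the feature lists are hypothetical, not taken from the paper.

```python
# Minimal sketch of the bilinear score s_ij = sum_a sum_b x_{i,a} z_{j,b} w_{ab}.
# Feature names and affinities come from the toy table above; helper names are hypothetical.

USER_FEATURES = ["age 50", "age 20", "male"]   # D = 3 user features
ITEM_FEATURES = ["sports", "finance"]          # C = 2 item features

# w[b][a]: affinity w_ab between user feature b and item feature a
# (rows = user features, columns = item features, as in the table above)
W = [
    [0.5, 0.8],  # age 50
    [0.9, 0.2],  # age 20
    [0.6, 0.5],  # male
]


def bilinear_score(z, x, w):
    """Score one user/item pair; z has D entries, x has C entries."""
    return sum(
        x[a] * z[b] * w[b][a]
        for b in range(len(z))
        for a in range(len(x))
    )


def one_hot(active, names):
    """Encode a set of active feature names as a 0/1 vector."""
    return [1.0 if name in active else 0.0 for name in names]


young_male = one_hot({"age 20", "male"}, USER_FEATURES)
older_male = one_hot({"age 50", "male"}, USER_FEATURES)
sports = one_hot({"sports"}, ITEM_FEATURES)
finance = one_hot({"finance"}, ITEM_FEATURES)

for user_name, z in [("age 20, male", young_male), ("age 50, male", older_male)]:
    for item_name, x in [("sports", sports), ("finance", finance)]:
        print(f"{user_name} / {item_name}: {bilinear_score(z, x, W):.2f}")
# Prints:
# age 20, male / sports: 1.50
# age 20, male / finance: 0.70
# age 50, male / sports: 1.10
# age 50, male / finance: 1.30
```

Because the score depends only on features, a new user or a new article can be scored as soon as its features are known, which is how feature-based modeling sidesteps the cold-start cases in the table above.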

• Model fitting on historical feedback

– Regression on continuous targets

– Logistic regression on binary targets

– Probabilistic framework

• Optimal affinities at the maximum a posteriori (MAP) estimate

• Prediction

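Offline Optimization

Before fitting, the affinities are unknown:

affinity   sports   finance
age 50     ?        ?
age 20     ?        ?
male       ?        ?

After fitting on historical feedback:

affinity   sports   finance
age 50     0.5      0.8
age 20     0.9      0.2
male       0.6      0.5

Predicted scores for a user with features age 20 + male: sports 0.9 + 0.6 = 1.5, finance 0.2 + 0.5 = 0.7 (full model size annotated on the slide: C100 × D1000).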
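A minimal sketch of the logistic variant, assuming an independent Gaussian prior on each affinity (so the MAP estimate is L2-regularized logistic regression on the products z_{j,b} x_{i,a}) and fitting by plain batch gradient ascent. The optimizer, prior variance, learning rate, and the synthetic click data are illustrative assumptions, not the paper's settings; `fit_map` is a hypothetical name.

```python
import math

# Hypothetical sketch: logistic bilinear regression at the MAP estimate,
# assuming a N(0, prior_var) prior on every affinity w_ab and batch gradient ascent.


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def bilinear_score(z, x, w):
    return sum(x[a] * z[b] * w[b][a] for b in range(len(z)) for a in range(len(x)))


def fit_map(events, d, c, prior_var=1.0, lr=0.5, epochs=300):
    """events: list of (z, x, y) with y = 1 for a story click, 0 otherwise.

    Maximizes (1/N) * [sum log p(y | z, x, w) - sum w_ab^2 / (2 * prior_var)].
    """
    w = [[0.0] * c for _ in range(d)]
    n = len(events)
    for _ in range(epochs):
        # Gradient of the Gaussian log-prior.
        grad = [[-w[b][a] / prior_var for a in range(c)] for b in range(d)]
        # Gradient of the Bernoulli log-likelihood: (y - p) * z_b * x_a.
        for z, x, y in events:
            err = y - sigmoid(bilinear_score(z, x, w))
            for b in range(d):
                for a in range(c):
                    grad[b][a] += err * z[b] * x[a]
        for b in range(d):
            for a in range(c):
                w[b][a] += lr * grad[b][a] / n
    return w


# Synthetic feedback (hypothetical); features [age 50, age 20, male] x [sports, finance].
young, older = [0.0, 1.0, 1.0], [1.0, 0.0, 1.0]
sports, finance = [1.0, 0.0], [0.0, 1.0]
events = [(young, sports, 1), (young, finance, 0),
          (older, sports, 0), (older, finance, 1)] * 5

W = fit_map(events, d=3, c=2)

# Cold start: a new user known only by "age 20", with no click history.
new_user = [0.0, 1.0, 0.0]
p_sports = sigmoid(bilinear_score(new_user, sports, W))
p_finance = sigmoid(bilinear_score(new_user, finance, W))
print(p_sports > p_finance)  # True
```

The last lines show the cold-start case: the new user has no feedback of their own, yet the learned affinities for the “age 20” feature still rank sports above finance.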

Case Study

Application: Front Page Today Module

• Data collection

– Random serving policy

– Temporal partition

– About 40 million events for training

– About 5 million distinct users

– Test events (about 0.6 million “story click”s)

• Offline performance metric

– “Click Portion”: the fraction of test clicks at rank position r
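The temporal partition can be sketched as follows. This is a minimal illustration, assuming each logged event carries a timestamp and a click flag (the field names and `temporal_partition` are hypothetical): events before a cutoff time are used for training, and only story clicks at or after the cutoff are kept as test events, so a model is never evaluated on feedback that precedes its training data.

```python
# Hypothetical sketch of a temporal train/test partition.
# Event fields ("time", "user", "article", "clicked") are illustrative assumptions.


def temporal_partition(events, cutoff):
    """Train on events before `cutoff`; test on story clicks at or after it."""
    train = [e for e in events if e["time"] < cutoff]
    test_clicks = [e for e in events if e["time"] >= cutoff and e["clicked"]]
    return train, test_clicks


events = [
    {"time": 1, "user": "u1", "article": "a1", "clicked": True},
    {"time": 2, "user": "u2", "article": "a2", "clicked": False},
    {"time": 5, "user": "u1", "article": "a3", "clicked": True},
    {"time": 6, "user": "u3", "article": "a3", "clicked": False},
]
train, test_clicks = temporal_partition(events, cutoff=5)
print(len(train), len(test_clicks))  # 2 1
```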


[Figure: a test click event in which the clicked article has Click Rank 2 at the moment of the click]


[Figure: a test click event in which the clicked article has Click Rank 1 at the moment of the click]
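One way to compute the offline metric: at the moment of each test click, rank all articles live in the pool by model score, record the rank of the article that was actually clicked, and take the fraction of test clicks at each rank. This is a sketch under assumptions: the input layout, the function names, and the tie-breaking rule (ties counted in favor of the clicked article) are illustrative choices, not taken from the paper.

```python
from collections import Counter

# Hypothetical sketch of the "Click Portion" metric. Each test click is a
# (scores, clicked) pair: `scores` maps every article live at the moment of
# the click to its model score; `clicked` is the article the user clicked.


def click_rank(scores, clicked):
    """1-based rank of the clicked article; ties are resolved in its favor."""
    s = scores[clicked]
    return 1 + sum(1 for art, v in scores.items() if art != clicked and v > s)


def click_portion(test_clicks, max_rank):
    """Fraction of test clicks whose click rank is r, for r = 1..max_rank."""
    counts = Counter(click_rank(scores, clicked) for scores, clicked in test_clicks)
    n = len(test_clicks)
    return [counts[r] / n for r in range(1, max_rank + 1)]


test_clicks = [
    ({"a1": 0.9, "a2": 0.4, "a3": 0.7}, "a3"),  # click rank 2
    ({"a1": 0.2, "a2": 0.8, "a3": 0.5}, "a2"),  # click rank 1
    ({"a1": 0.6, "a2": 0.1}, "a1"),             # click rank 1
    ({"a1": 0.3, "a2": 0.5, "a3": 0.9}, "a1"),  # click rank 3
]
print(click_portion(test_clicks, max_rank=3))  # [0.5, 0.25, 0.25]
```

A better model moves more of the click portion to rank 1, since it places the article the user actually clicked nearer the top.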

Case Study


• Baseline: select the article with the highest CTR (EMP)

– “One-size-fits-all” approach via online CTR tracking (Agarwal et al. NIPS 2009; Agarwal et al. WWW 2009)

• Segmentation

– Age/gender-based segmentation with 6 clusters (GM)

– Conjoint analysis with 5 clusters (Chu et al. KDD 2009) (SEG5)

• Collaborative filtering

– Item-based collaborative filtering (IBCF)

– Content-based filtering (CB)

– Hybrid of CB with CTR (CB+EMP)

• Feature-based personalized models

– Bilinear regression (RG)

– Logistic bilinear regression (LRG)

– LRG without the article CTR feature (LRG-CTR)

Matchbox: Large Scale Bayesian Recommendations

Stern, Herbrich and Graepel (WWW 2009), Microsoft Research

Thursday, XL-2, Statistical Methods
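The EMP baseline can be approximated by tracking each article's CTR online and serving the article with the highest estimate. The sketch below is a simplification, assuming exponentially decayed click and view counts (to follow the temporal CTR decay described earlier) plus a pseudo-count prior so that a brand-new article starts at a prior CTR. It is not the estimator of Agarwal et al., and the class name and parameter values are hypothetical.

```python
# Hypothetical simplification of a "most popular" (EMP-style) baseline:
# exponentially decayed click/view counts per article, with a pseudo-count
# prior so that a brand-new article starts at prior_ctr.


class DecayedCTRTracker:
    def __init__(self, decay=0.8, prior_ctr=0.05, prior_views=20.0):
        self.decay = decay  # fraction of past counts kept per interval
        self.prior_clicks = prior_ctr * prior_views
        self.prior_views = prior_views
        self.views = {}
        self.clicks = {}

    def step(self, batch):
        """Fold in one serving interval; batch maps article -> (views, clicks)."""
        # Down-weight older intervals so that recent CTR dominates.
        for art in self.views:
            self.views[art] *= self.decay
            self.clicks[art] *= self.decay
        for art, (v, c) in batch.items():
            self.views[art] = self.views.get(art, 0.0) + v
            self.clicks[art] = self.clicks.get(art, 0.0) + c

    def ctr(self, art):
        """Smoothed CTR estimate; unseen articles get prior_ctr."""
        return (self.clicks.get(art, 0.0) + self.prior_clicks) / (
            self.views.get(art, 0.0) + self.prior_views
        )

    def most_popular(self, pool):
        """One-size-fits-all choice: the article with the highest estimated CTR."""
        return max(pool, key=self.ctr)


tracker = DecayedCTRTracker()
tracker.step({"a": (1000, 80), "b": (1000, 40)})
print(tracker.most_popular(["a", "b"]))  # a
tracker.step({"a": (1000, 10), "b": (1000, 60)})
print(tracker.most_popular(["a", "b"]))  # b
```

Every user receives the same article, which is what makes this a global-level baseline; the decay lets the choice switch quickly when an article's CTR falls off.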

Case Study


• Lift over the baseline EMP (“one-size-fits-all”)

• SEG5: tensor conjoint analysis with 5 clusters

• CB+EMP: hybrid of content-based filtering with CTR

• Logistic Bilinear Models
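Lift here is presumably relative to the baseline, which matches the bucket-test slides reporting a lift of 0.08 as 8%. A minimal sketch under that assumption; the click-portion numbers below are invented for illustration and `lift` is a hypothetical helper.

```python
# Hypothetical sketch: relative lift of a model over the EMP baseline on the
# click portion at each rank. The click-portion values are made up.


def lift(model_portions, baseline_portions):
    """Relative lift per rank: (model - baseline) / baseline."""
    return [(m - b) / b for m, b in zip(model_portions, baseline_portions)]


emp = [0.25, 0.20, 0.15]    # hypothetical click portion of EMP at ranks 1..3
model = [0.27, 0.21, 0.15]  # hypothetical click portion of a personalized model
print([round(v, 3) for v in lift(model, emp)])  # [0.08, 0.05, 0.0]
```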

Case Study


• A utility function summarizing overall performance at the top 4 positions, computed from the “Click Portion” at each rank r

Bucket Test


• Lift on the offline metric (click portion) for three segmentation models

• Gender:

– male, female, unknown

• AgeGender:

– 11 segments

• Tensor-5 (SEG5):

– 5 clusters

Method: Tensor-5 > AgeGender > Gender

Lift at rank 1: 0.08 > 0.065 > 0.055

Bucket Test


Method: Tensor-5 > AgeGender > Gender

Lift on offline metric: 8% > 6.5% > 5.5%

Lift in online bucket test: 3.24% > 2.45% > 1.49%
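One simple reading of this validation is to check that the offline metric orders the models the same way as the online bucket test. The sketch below counts concordant pairs (the quantity behind Kendall's tau) using the lifts reported above; treating pairwise ordering agreement as the validation criterion is an assumption, not a procedure stated in the talk, and with only three models it is a weak check.

```python
from itertools import combinations

# Lifts (in %) reported on the slide for the three segmentation models.
offline = {"Tensor-5": 8.0, "AgeGender": 6.5, "Gender": 5.5}
online = {"Tensor-5": 3.24, "AgeGender": 2.45, "Gender": 1.49}


def concordant_fraction(m1, m2):
    """Fraction of model pairs that the two metrics order the same way."""
    pairs = list(combinations(m1, 2))
    agree = sum(1 for p, q in pairs if (m1[p] - m1[q]) * (m2[p] - m2[q]) > 0)
    return agree / len(pairs)


print(concordant_fraction(offline, online))  # 1.0
```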

Summary


• Feature-based bilinear regression models for personalized recommendation in cold-start situations on dynamic content.

• The affinities between user attributes and content features are optimized by learning from historical user feedback.

• The models alleviate cold-start difficulties by leveraging information available on both the user and item sides.

• They significantly outperform six competitive approaches at the global, segmented, and individual levels on an offline metric.

• We validated our offline metric with a bucket test on segmentation models.

Acknowledgment


We thank our colleagues:

• Raghu Ramakrishnan

• Scott Roy

• Deepak Agarwal

• Bee-Chung Chen

• Pradheep Elango

• Ajoy Sojan

• Todd Beaupre

• Nitin Motgi

• Amit Phadke

• Seinjuti Chakraborty

• Joe Zachariah