Parallel Contour Path Planning for Complicated Cavity Part ...

Parallel, Probabilistic Path Planning

-

Upload

kadeem-cooke -

Category

Documents

-

view

14 -

download

3

description

Transcript of Parallel, Probabilistic Path Planning

Parallel, Probabilistic Path Planning

Nathan IckesMay 19, 2004

6.846 Parallel Processing: Architecture and Applications

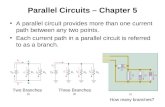

Rapidly-Exploring Random Trees

• RRTs are good at quickly finding a workable path

Rapidly-Exploring Random Trees

Pick a random point a in space

Find the node b in the tree which is closest to a

Drive robot towards a from b

If path to c is collision-free,

add c to the tree

Building an RRT:

Biasing an RRT towards a goal• On some iterations, pick the goal postion as a, instead of a random point

a a

b

a

b

ca

b

41 32

Parallelizing with OpenMP

• RRT has one major, global data structure

• Easily parallelized on a shared-memory machine

intplanner_run(int max_iter){#pragma omp parallel private(i)#pragma omp for schedule(dynamic) nowait for (i=0; i<max_iter; i++) { #pragma omp atomic planner_iterations++; a = planner_pick_random_point(); b = planner_find_closest_point(a); c = planner_drive_towards(a, b); if (c) { #pragma omp critical tree_append_node(c); if (c == goal) return 0; } } return 1;}

OpenMP Results

Time to execute 100,000 iterations:

0

10

20

30

40

50

60

70

80

GCC ICC OpenMP(1)

OpenMP(2)

OpenMP(3)

OpenMP(4)

OpenMP(8)

Tim

e (s

)

Parallelizing with MPICan’t use pointers! Network latency is huge!

Master-slave architecture Cooperative Architecture

Master process maintains tree

Slave processes iterate algorithm

Tree updates

New nodes

Slaves generate new nodes, but wait for master to put them in the tree

Can’t update tree fast enough

Every process generates new nodes and adds them to its own tree

New nodes are broadcast to other processes

Processes work largely independently due to network latency

New nodes

Conclusions

• RRT works well on a shared-memory machine

• OpenMP makes it easy to parallelize RRT– provides significant performance increase

• RRT is harder to implement with MPI, and doesn’t work as well– Global data structure– Can’t divide task into large chunks