Optimization and uncertainty assessment of strongly nonlinear groundwater models with high parameter dimensionality

Elizabeth H. Keating,1 John Doherty,2,3 Jasper A. Vrugt,1,4,5 and Qinjun Kang1

Received 28 August 2009; revised 12 May 2010; accepted 28 May 2010; published 12 October 2010.

[1] Highly parameterized and CPU‐intensive groundwater models are increasingly being used to understand and predict flow and transport through aquifers. Despite their frequent use, these models pose significant challenges for parameter estimation and predictive uncertainty analysis algorithms, particularly global methods which usually require very large numbers of forward runs. Here we present a general methodology for parameter estimation and uncertainty analysis that can be utilized in these situations. Our proposed method includes extraction of a surrogate model that mimics key characteristics of a full process model, followed by testing and implementation of a pragmatic uncertainty analysis technique, called null‐space Monte Carlo (NSMC), that merges the strengths of gradient‐based search and parameter dimensionality reduction. As part of the surrogate model analysis, the results of NSMC are compared with a formal Bayesian approach using the DiffeRential Evolution Adaptive Metropolis (DREAM) algorithm. Such a comparison has never been accomplished before, especially in the context of high parameter dimensionality. Despite the highly nonlinear nature of the inverse problem, the existence of multiple local minima, and the relatively large parameter dimensionality, both methods performed well and results compare favorably with each other. Experiences gained from the surrogate model analysis are then transferred to calibrate the full highly parameterized and CPU‐intensive groundwater model and to explore predictive uncertainty of predictions made by that model. The methodology presented here is generally applicable to any highly parameterized and CPU‐intensive environmental model, where efficient methods such as NSMC provide the only practical means for conducting predictive uncertainty analysis.

Citation: Keating, E. H., J. Doherty, J. A. Vrugt, and Q. Kang (2010), Optimization and uncertainty assessment of strongly nonlinear groundwater models with high parameter dimensionality, Water Resour. Res., 46, W10517, doi:10.1029/2009WR008584.

1. Introduction

[2] Uncertainty quantification is currently receiving a surge of attention in environmental modeling as researchers try to understand which parts of our models are well resolved, and which parts of our process knowledge and theories are subject to considerable uncertainty, and as decision‐makers push to better quantify accuracy and precision of model predictions. In the past decades, a variety of different approaches have appeared in the scientific literature to address parameter, model, and measurement uncertainty, and to provide theories and algorithms for its treatment. See, for example, Carrera and Neuman [1986a, 1986b], Kitanidis [1997], Woodbury and Ulrych [2000], Jiang and Woodbury [2006], Ronayne et al. [2008], Fu and Gomez‐Hernandez [2009], Cooley and Christensen [2006], Tiedeman et al. [2004], Hill [1998], Vrugt et al. [2005], Vrugt and Robinson [2007], and Tonkin et al. [2007], to name just a few works on this subject. However, despite the variety of methods available for this purpose, uncertainty analysis is rarely undertaken as an adjunct to model‐based decision‐making in applied hydrologic analysis [Pappenberger and Beven, 2006].

[3] For groundwater modeling in particular, this is an outcome of several practical challenges. First and foremost, many thousands of model evaluations are typically required for calibration, and to search parameter space for other feasible parameter sets that collectively allow appraisal of parameter uncertainty. Yet groundwater models, especially those considering processes such as reactive geochemistry and geomechanical or thermal effects, may require significant CPU time to complete a single forward run. Second, widely available and efficient gradient‐based parameter estimation/optimization algorithms may perform very poorly when parameter dimensionality is large, local optima in the objective function surface are numerous, parameter sensitivities are low, and model input/output relationships

1Earth and Environmental Sciences Division, Los Alamos National Laboratory, Los Alamos, New Mexico, USA.

2National Centre for Groundwater Research and Training, Flinders University, Adelaide, South Australia, Australia.

3Watermark Numerical Computing, Brisbane, Queensland, Australia.

4Department of Civil and Environmental Engineering, University of California, Irvine, California, USA.

5Institute for Biodiversity and Ecosystem Dynamics, University of Amsterdam, Amsterdam, Netherlands.

Copyright 2010 by the American Geophysical Union. 0043‐1397/10/2009WR008584

WATER RESOURCES RESEARCH, VOL. 46, W10517, doi:10.1029/2009WR008584, 2010


are highly nonlinear. Third, inverse problems involving groundwater models often violate key assumptions that underpin rigorous statistical analysis of parameter and predictive uncertainty. For example, the assumption that measurement/structural noise is uncorrelated, or even possesses a known and/or stationary covariance matrix, is almost never met [Cooley, 2004; Cooley and Christensen, 2006; Doherty and Welter, 2010]. Unfortunately, model structural error is typically significant and much larger than measurement error [Vrugt et al., 2005, 2008b]; yet there are no clear criteria or inference methods to determine its size, statistical properties, and its effect on the uncertainty associated with values assigned to parameters through the calibration process. Each of these issues can create significant problems in analyzing the uncertainty associated with predictions made by complex groundwater models.

[4] Recent developments in inverse algorithms have produced more robust and efficient tools for uncertainty analysis. As stated above, gradient‐based methods are extremely efficient, but have historically been hampered by problems associated with local minima and insensitive parameters. A number of methodologies constituting improvements of traditional gradient‐based methods have been developed to address these limitations and efficiently compute the uncertainty associated with predictions made by highly parameterized groundwater models. These include the “self‐calibration method” [Hendricks Franssen et al., 2004; Gomez‐Hernandez et al., 2003] and dimensionality reduction techniques such as those described by Tonkin and Doherty [2005] and Tonkin et al. [2007]. On the other hand, global search methods using population‐based evolutionary strategies are especially designed to provide robust uncertainty analysis in difficult numerical contexts, and can thus overcome many of the difficulties encountered by gradient‐based methods. However, these typically require that a large number of model runs be carried out, and hence cannot be routinely applied in typical groundwater modeling applications. Meanwhile, another line of research has focused on the development of simultaneous, multimethod, global search algorithms whose synergy and interaction of constituent methods significantly increases search efficiency when compared to other methods of this type. The AMALGAM evolutionary search approach developed by Vrugt and Robinson [2007] and Vrugt et al. [2009a] provides an example of such a method, and has been shown to outperform existing calibration algorithms when confronted with a range of excessively difficult response surfaces. Unfortunately, AMALGAM is designed to provide only estimates of optimum parameter values, without recourse to estimating parameter and prediction uncertainty. The recently developed DiffeRential Evolution Adaptive Metropolis (DREAM) algorithm [Vrugt et al., 2008a, 2009a, 2009b], which searches for objective function minima while simultaneously providing estimates of parameter uncertainty, overcomes this limitation while maintaining relatively high levels of model run efficiency in the context of Markov chain Monte Carlo (MCMC) simulation. Nevertheless, the number of model runs required for sampling of the posterior probability density function is often prohibitively large. For instance, a simple multivariate normal distribution with dimensionality of about 100 would require at least 300,000–500,000 function evaluations to provide a sample set of the posterior distribution [Vrugt et al., 2009b].

[5] Here, we present a novel strategy for obtaining robust estimates of the uncertainties associated with highly parameterized, field‐scale process models. This strategy should be widely applicable for complex model analysis where, due to CPU limitations, standard sequential MCMC approaches that rely on detailed balance are impractical. Our strategy consists of several steps. First, a surrogate model is abstracted from the process model. The surrogate model must have a similar level of parameterization to the process model, and be calibratable to the same data set as the process model. The surrogate model is then used to do the vital work of appropriately posing the inverse problem through which parameters are estimated, including designing the objective function to be minimized through the calibration process, refinement of understanding of environmental processes operative in the study area, evaluation of the magnitude and nature of irreducible model structural error, and so on. This iterative process of model evaluation and calibration/uncertainty analysis continues until a reasonable result is achieved. During this process, a pragmatic methodology for uncertainty analysis, to be later transferred to the process model, can be developed for the surrogate model. Importantly, as is done in the present paper, this method can be tested against more statistically based and theoretically rigorous MCMC methods if this is judged to be necessary. Finally, the efficient method is transferred to predictive uncertainty analysis with the full process model, for which the high computational burden of running the model requires that parameter and predictive analysis be undertaken using as few model runs as possible.

[6] We demonstrate the utility of this strategy by applying it to an ongoing modeling study at Yucca Flat, Nevada Test Site, USA. Although the particulars of the site are unique, this application is representative of many real‐world scenarios where management decisions are based, in part, on predictions derived from models, yet where the models are too CPU‐intensive to benefit from standard Bayesian uncertainty analysis approaches. The model has 252 parameters, fewer than would normally be accommodated by highly parameterized methods such as those described by (among others) Gomez‐Hernandez et al. [2003], Woodbury and Ulrych [2000], and Kitanidis [1999], which rely on the use of sophisticated and often model‐specific software for computation of numerical derivatives for analysis of parameter and predictive uncertainty, yet many more than are often employed in groundwater modeling applications. The model has long run‐times and would therefore be unusable in conjunction with non‐gradient uncertainty analysis methods.

[7] In the course of estimating the uncertainty associated with predictions made by this model, we compare two powerful yet very different methodologies using the simpler surrogate model: null‐space Monte Carlo (NSMC) analysis and Markov chain Monte Carlo (MCMC) analysis. Because of the recent development of the former of these methods, they have not been compared before. The outcome of this comparison should be of great interest to practitioners who use either of the two methodologies in the face of significant parameter dimensionality. Finally, the understandings gained

KEATING ET AL.: ASSESSMENT OF STRONGLY NONLINEAR GROUNDWATER MODELS W10517W10517


and refinements made in application of the uncertainty analysis methodology to the surrogate model are applied to the CPU‐intensive process model. The end product of this procedure is a population of calibration‐constrained parameter sets, together with predictions made by the model on the basis of these parameter sets that are of practical interest to site managers and stakeholder groups.

[8] This paper is organized as follows. First we briefly describe the general methodology, as well as details of the two algorithms used for uncertainty quantification. Then we describe the site‐specific practical application of the methodology. This begins with a description of the process model and the surrogate model used as a simple approximation to it. Next, a description is provided of the iterative process of objective function design, calibration, model evaluation, and finally parameter uncertainty estimation using two very different algorithms. Results from the two algorithms are compared. Then we apply the more efficient of the two algorithms to the CPU‐intensive process model. The process model is calibrated, and then subjected to post‐calibration parameter and prediction uncertainty analysis. The outcomes of this analysis are then discussed and conclusions are drawn.

2. Uncertainty Estimation Methodology

[9] When faced with a practical groundwater application where a process model is developed which is detailed, highly parameterized, and CPU‐intensive, it can be very difficult to do the work necessary to evaluate and calibrate the model, and to derive meaningful estimates of parameter and predictive uncertainty. To assist in this process, we propose the sequence of steps shown in Figure 1. The first step is to develop a fast surrogate model. The best strategy for accomplishing this will be highly problem‐specific. Ideally, the surrogate model will be characterized by a nearly identical level of parameterization detail as the process model; furthermore the relationships between model parameters and the model‐generated counterparts to calibration targets should be similar. However, as will be shown below, the mathematical relationship between surrogate and process model parameters need not be exactly known.

[10] Step 2 involves an iterative process of model calibration, evaluation, and parameter estimation (with uncertainty). An important task here is to establish a quantitative criterion for model and parameter adequacy. The weighted sum‐of‐squared‐residuals statistic, F, is often used for this purpose, where F is defined as

$$ F = \sum_{i=1}^{m} \left[ w_i \left( c_i - c_{o,i} \right) \right]^2 \qquad (1) $$

where $c_i$ are model outputs, $c_{o,i}$ are corresponding field or laboratory measurements, and $w_i$ are weighting factors. If measurements are statistically independent and the dominant source of error is measurement error, weights (as defined in the above equation) are normally assigned as inversely proportional to assumed measurement error standard deviation. However, if structural error dominates model‐to‐measurement misfit, which is usually the case in groundwater model calibration, assignment of weights is less straightforward. Since structural error and its contribution to parameter and prediction error is so difficult to quantify, iterative calibration and model improvement must often be implemented in order to reduce structural error, thus minimizing its contribution to parameter and predictive error.

[11] Seen in this light, the calibration process serves two, somewhat distinct, purposes. First, by identifying the parameter vector g corresponding to $F_{min}$, important information is gained about likely parameter values. This is the standard inverse problem of model calibration. Second, by identifying the magnitude of $F_{min}$, and the nature and spatial relationships of residuals which constitute it, information is gained about model adequacy. If $F_{min}$ is unacceptably large, or if posterior parameter estimates are unreasonable, an inference may be made that structural error is too large and that the model should therefore be improved. This iterative process of optimization, model evaluation, and model improvement continues until model structural error is considered to be acceptably low.

[12] In Step 3 an assessment is made of the level of parameter uncertainty associated with the surrogate model, this being a function of prior knowledge of parameter uncertainty and additional constraints on parameters exercised through the calibration process. In the present case we employ two very different methods to assess parameter uncertainty using the surrogate model. The reason for using two methods is that only one of the two, NSMC, is efficient enough to later be applied to the process model (Step 4). However, to gain confidence in the validity of the results obtained with NSMC analysis we compare its performance to a statistically rigorous MCMC‐based approach, DREAM. As we will show, in our case parameter uncertainties computed by the two compare reasonably well. In the general case, however, if significant discrepancies arise, these should be investigated and understood before proceeding.

Figure 1. Outline of proposed methodology.

[13] The fourth and final step is to apply the NSMC methodology, tuned to the surrogate model in Steps 2 and 3, to the process model. This step goes beyond model calibration and parameter uncertainty estimation; ultimately a set of calibration‐constrained predictions are provided.

[14] The two uncertainty estimation methodologies employed in this general methodology are now briefly described. The first and most efficient (in terms of its model run requirements) method is embedded in the PEST suite of model calibration and uncertainty analysis tools [Doherty, 2009]. The NSMC methodology implemented by PEST (see Tonkin and Doherty [2009] for a description of this methodology) is used to generate a population of parameter sets, all meeting the criterion F ≤ F*, where F* is a user‐specified threshold for “calibration adequacy,” given knowledge of measurement noise and the expected degree of model structural error. The method is pragmatic, using a highly efficient subspace approach to generate a collection of calibration‐constrained parameter sets. The first step in the process is model calibration, this yielding (if implemented correctly) something approaching a minimum error variance estimate, g, of the unknown parameter set. Of necessity, this will be a simplified and smoothed parameter set, exhibiting only as much heterogeneity as can be supported by the calibration data set, this being an outcome of the regularization necessary to achieve uniqueness of an ill‐posed inverse problem, as Moore and Doherty [2006] describe. The PEST suite of tools includes a number of algorithms for achieving this parameter set, including gradient‐based methods such as Levenberg‐Marquardt and global methods such as the Covariance Matrix Adaptation Evolution Strategy (CMAES), the latter being a population‐based stochastic global optimizer [Hansen and Ostermeier, 2001; Hansen et al., 2003]. Note that appropriate regularization constraints must be employed with these methods to achieve something approaching the minimum error variance parameter set g instead of any of the infinite number of other parameter sets that are also capable of minimizing F and/or of reducing it to a sufficiently low value.

[15] Through singular value decomposition (SVD) of the linearized model operator, the parameter set g is then decomposed into two orthogonal vectors, these being its projections onto the “calibration solution space” (comprising p linear combinations of parameters which are informed by the calibration data set) and the “calibration null space” (comprising d − p calibration‐insensitive parameter combinations, where d is the total number of parameters). Demarcation between these two subspaces is deemed to occur at that singular value at which attempted estimation of orthogonal linear parameter combinations obtained through the SVD process on the basis of the calibration data set results in a gain rather than diminution of post‐calibration uncertainty of either that linear combination of parameters, or of a prediction of interest (see Doherty and Hunt [2009] for details).
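As an illustration of the subspace split described above, the following NumPy sketch decomposes a calibrated parameter vector into its solution-space and null-space components. It is a sketch under stated assumptions and not the PEST implementation: the Jacobian `J`, the weights `w`, the function name, and the toy dimensions are all hypothetical, and the truncation index `p` is simply supplied by hand, whereas the text selects it from a post-calibration uncertainty criterion.

```python
import numpy as np

def split_parameter_vector(J, w, g, p):
    """Split g into solution-space and null-space components.

    J : (m, d) sensitivity (Jacobian) matrix of the linearized model (assumed given)
    w : (m,) observation weights
    g : (d,) calibrated parameter vector
    p : assumed dimension of the calibration solution space
    """
    # SVD of the weighted Jacobian; rows of Vt are orthonormal parameter combinations.
    _, _, Vt = np.linalg.svd(w[:, None] * J, full_matrices=True)
    V1 = Vt[:p].T            # (d, p) basis of the calibration solution space
    V2 = Vt[p:].T            # (d, d - p) basis of the calibration null space
    g_sol = V1 @ (V1.T @ g)  # projection onto the solution space
    g_null = V2 @ (V2.T @ g) # projection onto the null space
    return g_sol, g_null, V1, V2

# Toy problem (hypothetical sizes): 5 observations, 8 parameters, p = 3.
rng = np.random.default_rng(0)
m, d, p = 5, 8, 3
J = rng.normal(size=(m, d))
w = np.ones(m)
g = rng.normal(size=d)
g_sol, g_null, V1, V2 = split_parameter_vector(J, w, g, p)
```

The two components are orthogonal and sum to the original vector, which is the property that the subsequent Monte Carlo step relies on.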

[16] Uncertainty analysis then proceeds with the generation of a set of random parameter realizations, $g_m$. Each parameter value within each set is drawn from a random distribution (uniform or lognormal) within specified bounds, with bounds and distributions chosen to ensure that all parameter values are physically reasonable. For each $g_m$, g is subtracted; the difference is projected onto the calibration null space, then g is added back in, resulting in a transformed parameter vector $g_m'$. If the inverse model were perfectly linear, each $g_m'$ would represent a calibrated parameter set. Because of nonlinearity, however, each $g_m'$ must be adjusted through re‐calibration. To achieve this, each $g_m'$ is used as a starting point for further optimization. Collectively this results in a set of S parameter sets, $g_i$, i = 1, …, S, all of which realize objective functions which are low enough (F < F*) for these parameter sets to be considered to “calibrate” the model. The computational demands of this pragmatic process are relatively light due to the use of the null‐space projection operation described above in generating nearly calibrated random parameter fields, and due to the fact that re‐calibration of these parameter fields can make use of pre‐calculated sensitivities applied only to parameter solution space components. Subsequent adjustment of these solution space components so that the objective function falls below a specified value (that reflects the degree of measurement/structural noise associated with model‐to‐measurement misfit) ensures that variability of these components is introduced in accordance with the necessity to reflect post‐calibration variability that they inherit from measurement/structural noise. It should be noted that, even though methods used to subdivide parameter space into solution and null subspaces are inherently linear, the methodology imposes no constraints on parameter variability and does not presume model linearity.
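The projection step described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the PEST implementation: the toy linear model, the uniform bounds, the threshold `F_star`, and the names `g_hat`, `V2`, `objective`, and `null_space_project` are all hypothetical, and the re-calibration that a nonlinear model would need is only flagged in a comment, not performed.

```python
import numpy as np

def objective(g, forward, c_obs, w):
    """Weighted sum of squared residuals between model outputs and observations."""
    return float(np.sum((w * (forward(g) - c_obs)) ** 2))

def null_space_project(g_m, g_hat, V2):
    """Return g_m' = g_hat + V2 V2^T (g_m - g_hat)."""
    return g_hat + V2 @ (V2.T @ (g_m - g_hat))

rng = np.random.default_rng(1)
m, d = 4, 10                        # toy sizes: 4 observations, 10 parameters
J = rng.normal(size=(m, d))         # toy linear model: c = J g
forward = lambda g: J @ g
w = np.ones(m)
c_obs = forward(rng.uniform(-1.0, 1.0, size=d))

# Calibrated parameter set: the minimum-norm least-squares solution is used here
# as an assumed stand-in for a regularized calibration.
g_hat = np.linalg.pinv(J) @ c_obs

# Null-space basis from the SVD of the weighted Jacobian (here p = m).
_, _, Vt = np.linalg.svd(w[:, None] * J, full_matrices=True)
V2 = Vt[m:].T

F_star = 1e-12                      # hypothetical calibration-adequacy threshold
realizations = []
for _ in range(50):
    g_m = rng.uniform(-1.0, 1.0, size=d)          # random draw within bounds
    g_proj = null_space_project(g_m, g_hat, V2)
    F = objective(g_proj, forward, c_obs, w)
    # For a nonlinear model F would generally exceed F_star at this point, and
    # g_proj would serve as the starting point for re-calibration.
    if F < F_star:
        realizations.append(g_proj)
realizations = np.array(realizations)
```

Because the toy model is exactly linear, every projected realization already satisfies the threshold; the spread among the rows of `realizations` lies entirely in the null space.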
The higher the parameter variability and model nonlinearity, the more model runs must be invested in post‐null‐space‐projection model re‐calibration for each parameter realization.

[17] A disadvantage of the NSMC method is that, in difficult parameter estimation contexts, parameter sets achieved through application of the method do not necessarily constitute a sample of the posterior probability density function of the parameters in a strictly Bayesian sense. Therefore, for comparison purposes we also apply a second method in Step 3, which is implemented in the DREAM package (see references below). DREAM is a Markov chain Monte Carlo (MCMC) scheme adapted from the Differential Evolution–Markov Chain (DE‐MC) method of ter Braak [2006]. While not particularly developed for efficiency in highly parameterized contexts, it is capable of producing posterior probability density functions of the parameters that are exactly Bayesian, even in highly parameterized environments characterized by multiple objective function minima and multimodal posterior distributions, where it is able to continually update the orientation and scale of the proposal distribution employed by its underlying Metropolis algorithm while maintaining detailed balance and ergodicity [Vrugt et al., 2008a, 2008b, 2009b]. Contrary to the PEST approach to uncertainty assessment described above, DREAM combines calibration and uncertainty analysis within a single algorithm in which it samples the post‐calibration probability density function of the parameters. In summary, DREAM runs N different Markov chains simultaneously in parallel that are typically initialized from a uniform prior distribution with bounds of the parameters that are chosen based on physical reasoning. The states of the N chains are denoted by the d‐dimensional parameter vectors $g_1, …, g_N$. At each time, the members of the individual chains form a population, stored as an N × d matrix $G_t$. DREAM evolves the initial population ($G_t$; t = 0) into a posterior population using a Metropolis selection rule to decide whether to accept the offspring or not. With this approach, a Markov chain is obtained, the stationary distribution of which is the posterior parameter probability distribution. The proof of this is presented by ter Braak and Vrugt [2008] and Vrugt et al. [2009b]. After a so‐called burn‐in period, the convergence of a DREAM run can be monitored using the statistic of Gelman and Rubin [1992], which compares the variance within and between the chains. The use of a formal convergence criterion defines a significant difference between the MCMC approach of DREAM and the NSMC technique implemented by PEST. Prior to the present investigation, DREAM had not been tested in highly parameterized contexts; nor had PEST’s performance been compared with the theoretically more rigorous, though computationally more demanding, DREAM approach in difficult applications such as that described here. Note that DREAM uses explicit boundary handling to force the parameters to stay within their prior defined bounds. This approach is essential to avoid sampling out of bounds and is especially designed to maintain detailed balance.
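To make the population-based sampling and the convergence check concrete, the sketch below implements a plain differential evolution Markov chain update in the spirit of ter Braak [2006], together with the Gelman and Rubin [1992] statistic. It is a simplified stand-in and not DREAM itself: the adaptive features and the boundary handling of DREAM are omitted, and the toy target density, the noise scale `eps`, the chain count, and all function names are assumptions chosen for the example.

```python
import numpy as np

def log_post(g):
    """Toy log-posterior: standard normal in d dimensions (assumption)."""
    return -0.5 * float(g @ g)

def demc_sample(log_post, G0, n_gen, rng, eps=1e-6):
    """Evolve an (N, d) population with differential-evolution proposals."""
    N, d = G0.shape
    gamma = 2.38 / np.sqrt(2 * d)       # commonly used default jump scale
    G = G0.copy()
    logp = np.array([log_post(g) for g in G])
    history = np.empty((n_gen, N, d))
    for t in range(n_gen):
        for i in range(N):
            # Pick two other chains and jump along their difference vector.
            others = [j for j in range(N) if j != i]
            a, b = rng.choice(others, size=2, replace=False)
            proposal = G[i] + gamma * (G[a] - G[b]) + eps * rng.normal(size=d)
            logp_new = log_post(proposal)
            # Metropolis selection rule: accept with probability min(1, ratio).
            if np.log(rng.uniform()) < logp_new - logp[i]:
                G[i], logp[i] = proposal, logp_new
        history[t] = G
    return history

def gelman_rubin(chains):
    """R-hat for samples of shape (n, N, d): n draws, N chains, d parameters."""
    n = chains.shape[0]
    W = chains.var(axis=0, ddof=1).mean(axis=0)       # within-chain variance
    B = n * chains.mean(axis=0).var(axis=0, ddof=1)   # between-chain variance
    return np.sqrt(((n - 1) / n * W + B / n) / W)

rng = np.random.default_rng(42)
N, d = 10, 3
G0 = rng.uniform(-5.0, 5.0, size=(N, d))   # initialize from a uniform prior
history = demc_sample(log_post, G0, n_gen=3000, rng=rng)
samples = history[1000:]                   # discard an assumed burn-in period
r_hat = gelman_rubin(samples)
```

Values of `r_hat` close to 1 for every parameter indicate that the within-chain and between-chain variances agree; a threshold such as 1.2 is a common rule of thumb rather than something prescribed by the text.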

3. Example Application

[18] Here we demonstrate the sequence of steps outlined above using a real‐world example. The context is the modeling of groundwater flow and transport within saturated volcanic rocks in Yucca Flat, Nevada Test Site, USA (Figure 2), where a total of 659 underground nuclear tests were conducted between 1951 and 1992 [Fenelon, 2005]. For the purposes of this paper, we focus on one particular prediction required of the model: the enhanced flux from the volcanic rocks into the lower aquifer due to underground nuclear testing. This enhanced flux has obvious implications for radionuclide transport. Quantification of the uncertainty associated with this prediction is a very important outcome of the analysis. Here we will demonstrate a method for quantifying uncertainty and then test its effectiveness using a post‐audit (section 4.5.1).

3.1. Process Model Development

[19] The complex process model simulates groundwater flow in a shallow portion of the saturated zone in Yucca Flat which is characterized by a complex layering of volcanic rocks, dissected by numerous faults, some quite large and hydrologically significant. A unique aspect of the hydrology of this site is the large and abrupt changes in pore pressures and rock properties that occur subsequent to each nuclear test [Laczniak et al., 1996]. A cavity is formed around the working point of each test due to vaporization and melting of rock, which later fills with rubble as the rocks above the cavity collapse, forming a chimney and surface crater. Each of these features has very different hydrologic properties from the pre‐testing rocks which form the host material. Upon detonation of a nuclear device, pore pressures are instantaneously increased within tens of meters of the cavity due to compressive stresses associated with the explosion and pore‐space compaction. Hydrofracturing occurs in places where stresses reach very high levels. At some locations, elevated pore pressures (and consequently enhanced hydraulic gradients) have persisted for decades [Fenelon, 2005].

[20] To appropriately model groundwater flow in this highly dynamic aquifer system, a complex process model was constructed, which sequentially couples a “testing‐effects” module (to simulate abrupt head alterations) with a 3‐D transient groundwater flow module that simulates long‐term groundwater movement in the highly heterogeneous system.

3.1.1. Numerical Details

[21] The first of the two submodels that are coupled to form the process model is a 3‐D, transient groundwater flow model developed specifically for the central portion of Yucca Flat. The dimensions of this model are roughly 11 km × 30 km × 1 km; its outline is shown in Figure 2. Several model grids have been developed for different purposes using the grid generation package LaGrit [Trease et al., 1996]; the medium‐resolution grid used for the present uncertainty study has 247,464 nodes. The standard transient groundwater flow equation (simplified here, for brevity):

$$ S_s \frac{\partial h}{\partial t} = \frac{\rho g}{\mu} \left[ \frac{\partial}{\partial x}\left( \kappa_x \frac{\partial h}{\partial x} \right) + \frac{\partial}{\partial y}\left( \kappa_y \frac{\partial h}{\partial y} \right) + \frac{\partial}{\partial z}\left( \kappa_z \frac{\partial h}{\partial z} \right) \right] + Q \qquad (2) $$

is solved using the Finite Element Heat and Mass Transfer (FEHM) [Zyvoloski et al., 1997] porous flow simulator. Here, h is hydraulic head, t is time, κ denotes intrinsic permeability (which can be anisotropic), ρ is density, g signifies the gravitational constant, μ is the viscosity, $S_s$ represents the specific storage, and Q is a source or sink term. In the present case the only specified sink or source is a spatially uniform recharge rate applied at the water table; water flows from the bottom of the model domain (into the aquifer below) through specified head nodes. Rock properties ($S_s$, κ) are spatially distributed according to a deterministic hydrogeologic framework model (HFM) [Drellack, 2006] which defines the geometry of 11 units and more than 100 faults within the flow model domain. Faults are lumped into seven categories for the purpose of parameter value assignment.

[22] The second model component is the testing‐effects module referred to above, which simulates instantaneous changes in permeability and porosity in the vicinity of a test, as well as increased pore pressures due to elastic and inelastic rock deformation [Davis, 1971; Garber and Wollitz, 1969; Halford et al., 2005; Hawkins et al., 1989; Knox et al., 1965; Reed, 1970]. The model also simulates the creation, immediately following each underground nuclear test, of a high permeability cavity and chimney with radius $r_c$. Within the region $r < r_c$ hydraulic heads are assumed to be hydrostatic, whereas for larger radial distances $r_c < r < R$ hydraulic head increases instantaneously according to the following relationship, from Halford et al. [2005]:

dh rð Þ ¼ Ho 1� r0=Rð Þ ð3Þ

where Ho is a test‐specific value. In accordance with his-torical observations that test‐effects are often more pro-nounced laterally than vertically because of rock anisotropy,we introduce a modified distance parameter, r′ = r(1 − dz

� ),where dz is the vertical distance to the working point of thetest and g is an unknown scaling parameter subject to cali-

KEATING ET AL.: ASSESSMENT OF STRONGLY NONLINEAR GROUNDWATER MODELS W10517W10517

5 of 18

bration. The smaller the value of g, the more anisotropic isthe effect of an underground nuclear test.[23] Local rock permeability can be enhanced or reduced

by the testing‐effects model, this depending on the magni-tude of the head increase and on its relationship to lithostaticload. Out to a distance of r

rc≤ F, a permeability reduction

factor, x, is applied if dh > H′. If the pressure at any locationexceeds lithostatic pressure by more than a factor l, the rockbreaks and the porosity and permeability are increased. In

total, this testing‐effects module has k+6 parameters, wherek represents the total number of underground tests within themodel domain; this is equal to 212 in the present study.[24] The FEHM and testing‐effects models are iteratively

coupled to form the overall process model which can thenbe used to simulate transient and long‐term groundwaterprocesses during and after the period of testing (1952–1992). The combined FEHM and testing‐effects model iscomputationally very demanding, requiring between 1 and3 h to complete a single run on a 3.6 GHz processor. Theexact run time depends on the time discretization used tosolve the pertinent partial differential equations in FEHM.The combined model has 242 model parameters; these aresummarized in Table 1.3.1.2. Calibration Objectives[25] A rich and complex data set of hydrographs is

available for this site. By reviewing the extensive database developed by Fenelon [2005], we were able to filter out unreliable measurements and use only head measurements that are trustworthy and unaffected by wellbore and/or drilling effects. A total of 583 head measurements collected at 60 different wells were selected for inclusion in this calibration data set, spanning the time period from 1958 to 2005. Large variations exist in the frequency of data collection in the various wells. One example of a well with head measurements spanning a relatively long time is TW-7 (see Figure 2); measurement data from this well are depicted in Figure 3. Sharp increases in measured head immediately following underground nuclear tests are apparent in Figure 3; each increase is followed by a period of relaxation. An additional complicating factor is that many wells were sampled only once or very few times. Implications of this for the design of the calibration objective function are discussed below.

[26] Due to the spatial and temporal complexities of this

data set, it is impossible to determine a priori which of the 242 model parameters may, to any extent, be constrained by model calibration. However, even if the number of parameters constrained by the calibration data set is small, the information pertaining to those parameters acquired through the calibration process could be quite valuable in reducing the uncertainty of key model predictions. Additionally, calibration to the hydrologic data set may reveal important model inadequacies that should be addressed. Meanwhile,

Figure 2. Yucca Flat, Nevada Test Site, Nevada. Circles indicate locations of underground nuclear tests detonated within the model domain. Green line shows outline of numerical model. Black lines show faults. The red square is TW-7; the yellow square is UE-4t. The horizontal light blue line is the location of the cross section indicated in Figure 15.

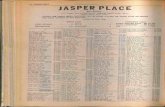

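Table 1. Summary of Parameters^a

| Parameter Type | Surrogate | Process | Free |
|---|---|---|---|
| Testing effects | | | |
| H′ | 1 | 1 | 1 |
| fmax | 1 | 1 | 1 |
| Vad1, 2, 3 | 3 | | |
| x | 1 | 1 | 1 |
| g | 1 | 1 | 1 |
| a | 1 | | |
| R | 1 | 1 | 1 |
| Ho | 212 | 212 | 28 |
| Rock properties | | | |
| κ | 10 | 20 | 9 |
| Ss | | 2 | 2 |
| Kz | | 2 | 2 |
| Recharge | | 1 | |
| His | 10 | | |
| Total | 241 | 242 | |

^a Free parameters are described in section 3.5.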


our high level of parameterization respects the philosophy that by keeping the level of parameterization commensurate with the complexity of the physical problem, rather than commensurate with the (relatively low) information content of the calibration data set, we will ultimately obtain the best estimates of those parameters that are available to us (provided that appropriate regularization mechanisms are introduced to the solution process of the resulting ill-posed inverse problem). This philosophy also grants us the ability to quantify the uncertainty associated with these parameter estimates and with predictions which are sensitive to them [Hunt et al., 2007].
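For reference, the instantaneous head increase of equation (3), on which the surrogate model of section 3.2 builds, can be sketched in a few lines of Python. This is an illustrative sketch only, not the testing-effects module itself. The function names are hypothetical; the form of the modified distance, r′ = r(1 − dz/g), and the choice to return zero outside the interval rc < r < R are assumptions of the sketch.

```python
def modified_distance(r, dz, g):
    """Modified distance r' (assumed form: r' = r * (1 - dz / g))."""
    return r * (1.0 - dz / g)


def head_increase(r, dz, H_o, R, g, r_c):
    """Instantaneous head increase dh at radial distance r, after equation (3).

    H_o is the test-specific head increase, R the outer radius of influence,
    r_c the cavity/chimney radius, dz the vertical distance to the working
    point, and g the anisotropy scaling parameter.
    """
    if not (r_c < r < R):
        # Assumption of this sketch: no perturbation is returned inside the
        # cavity (where heads are taken as hydrostatic) or beyond R.
        return 0.0
    return H_o * (1.0 - modified_distance(r, dz, g) / R)
```

With H_o = 100, R = 1000, and r = 500, the sketch returns 50 when dz = 0, and 75 when dz = 100 and g = 200; a very large g leaves r′ ≈ r, so that the vertical offset has no effect.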

3.2. Surrogate Model Development and Analysis

[27] To implement the process depicted in Figure 1, we developed a fast-running surrogate model of the complex and CPU-intensive process model. Design of the surrogate model is based on the very simple assumption that for a specific head increase at the location of an explosion, Ho, the immediate head perturbation imposed at a nearby well will be determined by only three factors: 1) distance of the well from the test, 2) time elapsed since the test, and 3) properties of the rock at the point of measurement. The head perturbation is calculated using equation (3). To approximate the transient hydrologic process of depressurization due to groundwater flow away from the pressurized zone, this effect is assumed to decay over time at an exponential rate (κs/a), where κs is specific to each rock type, s. The principle of superposition is used to calculate the cumulative impact of k tests, where k = 212. The resulting equation is as follows:

$$h_i(x, y, z, t) = h_{i(s)} + \sum_{n=1}^{k} dh_n(r)\, e^{-\kappa_s (t - t_n)/a} \qquad (4)$$

where hi is the head at the ith well and hi(s) is the initial (pre-1950) head in this respective well.

[28] To test the efficacy of this equation in simulating

observed groundwater behavior, a number of very simple homogeneous radially symmetric models of groundwater flow based on equation (2) were used to simulate instantaneous pressurization due to a single test (equation (3)) and subsequent relaxation over time. Simulation results were in

excellent agreement with equation (4); furthermore, a direct relation was evident between parameter κs of equation (4) and permeability κ featured in equation (2). However, this simple relationship quickly degraded where more complex hydrostratigraphic conditions were represented in these radial simulation models. For this reason, we do not expect there to be a simple relationship between parameters featured in equation (4) and parameters of the full process model, which incorporates very complex hydrostratigraphy.

[29] Computation of head using equation (4) does not

require an iterative procedure and variable time stepping, and can be used to generate all 60 hydrographs represented in the calibration data set in less than one second on a 3.6 GHz processor. The model has a similar number of parameters to those employed by the process model, as summarized in Table 1. One difference is that there are fewer rock-specific values of permeability (κ). This is due to the fact that the surrogate model only considers the rock type at the observation point, whereas the process model considers all rock types within the model domain. This is only one of many simplifications of the surrogate model which prevent an explicit mapping of parameters from the surrogate to the process model. The relationship between parameters and outputs of the surrogate model is highly nonlinear, as Figure 4 demonstrates for one example parameter/observation pair; hence, as for the process model, parameters employed by the surrogate model may be difficult to estimate through calibration. Meanwhile, because of its computational efficiency and similarity (to first order) to the process model, use of the surrogate model provides an ideal opportunity for developing strategies for effective calibration and uncertainty estimation that can ultimately be applied to the process model.

3.2.1. Objective Function Definition and Model Assessment

[30] Using the surrogate model, we now implement Step

2 depicted in Figure 1. In implementing this step, our goal was to design the objective function such that the maximum amount of information could be gleaned from the calibration data set through forcing the model, if possible, to reproduce key features in the data set through minimization of this objective function. Beginning with the simplest approach, we used the objective function defined in equation (1), with

Figure 3. Measured heads at Test Well 7, showing response of well to nearby tests and subsequent relaxation.


all 563 head observations given equal weight, reflecting a presumed uniform measurement error of 0.1 m. We then iterated between parameter estimation, objective function refinement, and model improvement, using the PEST suite of calibration tools to minimize F in each case. Early in this process PEST quickly found a solution that explained over 90% of the variance in the head data (which is remarkable considering the simplicity of the surrogate model and the complexity of the data set), but was unable to further improve the correspondence between measured and modeled heads. Detailed inspection of PEST outcomes revealed that the goodness-of-fit achieved for the mean head at any particular well was strongly controlled by the number of observations made at that well. Similarly, goodness-of-fit within a particular subregion of the model domain was strongly affected by the density of observations in that subregion. Furthermore, temporal trends at any given well were generally poorly reproduced, since in many cases the temporal variance at a given well was much smaller than the overall spatial variance between wells, and so PEST placed much more focus on capturing the latter. This is undesirable, since rock properties of interest, such as permeability, can often be much more reliably deduced from stress-induced head changes (such as those in a pump test) than from static head measurements over space that are also a function of uncertain recharge and even more uncertain boundary conditions. As Doherty and Welter [2010] demonstrate, for maximum efficacy of the calibration process in providing reliable estimates of these parameters, the objective function must be formulated in such a way as to accentuate these head changes induced by known stresses.

[31] We therefore experimented with several modifications to the objective function. Best results were achieved by making the following adjustments. First, weighting was adjusted to accommodate variations in spatial observation density (and, by inference, density of observations in different hydrostratigraphic units), so that approximate equality of weighting normalized by area was obtained. Then hydrographs were filtered so that data representing time periods in which heads changed linearly with time (or not at all) were replaced by two numbers: 1) an initial head for that time period, and 2) dh/dt for that time period. In some cases, where multiple measurements were taken during a linear-trending time period, this substitution greatly reduced the

total number of observations. Finally, weights were adjusted to apportion approximately equal weighting to derivatives (dh/dt) and to absolute heads. The resulting objective function comprised 361 observations, constituting a blend of absolute head values and derivatives.

[32] In a similar iterative fashion, we tested and improved

conceptual model elements common to both the surrogate and process models. For example, we experimented with allowing the parameter R (equation (3)) to be spatially distributed. This change led to lower values of F but produced clearly nonphysical simulations of system behavior. As a consequence, we removed spatial dependency in R. We also found that the anisotropic test-effects parameter, g, could be used to great advantage for enhancing model-to-measurement fit over portions of the model domain. Unfortunately, however, a large value was estimated for this parameter (meaning no vertical anisotropy) when attempts were made to fit heads over the entirety of the model domain. It is thus apparent that a simple model of anisotropy has no value; it either must be made much more complex or ignored completely. We adopted the latter option. On other occasions we experimented with various alternative strategies to that of estimating Ho independently for each test. For example, we tried to express Ho as a function of maximum announced yield of each test [Fenelon, 2005]. We found no advantage in this approach.

[33] Another outcome of this iterative process was that we

began to appreciate the sensitivity of the objective function value to the quality of the result, as assessed by visual comparison of measured and modeled hydrographs. It was clear that objective function values ranging from a few hundred to approximately 1,000 gave rise to only subtle visual differences in model-to-measurement misfit. However, misfit noticeably deteriorated above a value of about 1,000. This qualitative relationship between objective function value and parameter field acceptability was employed later in null-space Monte Carlo analysis. It should be noted that use of a subjective likelihood function in this fashion is not uncommon practice in environmental model calibration, where model-to-measurement misfit is dominated by structural noise. It is embraced by the GLUE methodology [Beven, 2009] and is given justification by Beven [2005], Beven et al. [2008], and Doherty and Welter [2010].

3.2.2. Parameter Estimation and Uncertainty Analysis

[34] It is at Step 3 of the procedure illustrated in Figure 1

that we applied our two uncertainty analysis methodologies. First, we assigned reasonable a priori bounds to all 242 parameters based on physical arguments and data from previous studies [Halford et al., 2005; Kwicklis et al., 2006; Stoller-Navarro Joint Venture, 2006]. All prior parameter probability distributions were assumed to be uniform. We then applied methodologies provided by both the PEST and DREAM packages to parameter estimation and uncertainty analysis of the surrogate model.

[35] Because of the large dimensionality and high degree of

nonlinearity of this model, use of PEST was slightly more complicated than use of PEST in simpler calibration contexts. To ensure avoidance of local minima, the calibration process was repeated with 60 random starting values of g. In each case several optimization algorithms were employed. These were applied sequentially, each beginning with the result from the previous step. These were 1) CMAES, 2) truncated

Figure 4. An example of nonlinearity between model parameters and outputs (dimensionless parameter a and calibration target, dh/dt at well U-3kx).


singular value decomposition (SVD), and 3) “automatic user intervention.” CMAES is a global optimization algorithm described in detail by Hansen and Ostermeier [2001] and Hansen et al. [2003]; a version of the CMAES algorithm is provided with the PEST suite. Truncated SVD as a mechanism for stable solution of inverse problems is described in many texts, including Menke [1984] and Aster et al. [2005]. The last of these, “automatic user intervention,” is described by Skahill and Doherty [2006]; this achieves numerical stability through sequential, temporary fixing of insensitive parameters while promoting continual parameter movement in difficult objective function terrains through attempting objective function improvement in a number of orthogonal directions. (Note that in many cases this last step was either unnecessary or achieved very little objective function improvement.) The numbers of forward runs required for each of these steps are listed in Tables 2a and 2b. In total, the entire process required about 400,000 runs and produced 60 parameter sets (gi) with F values ranging from 203 to 13,000. Of these, those corresponding to the five lowest values of F (ranging from 203 to 410) were carried forward into the uncertainty analysis.

[36] For each of the five gi parameter sets the following

steps were taken. Calibration solution and null spaces were defined using singular value decomposition according to the method established by Doherty and Hunt [2009]; in all cases the dimensionality of the solution space was calculated to be about 24. Three hundred random parameter sets were then generated and subjected to null-space projection in the manner described previously. Recalibration of each parameter set was then effected. As already discussed, this recalibration step was very efficient because parameter sensitivities calculated during the previous calibration process were employed for the first (and often only) iteration of parameter adjustment. If after two iterations an objective function of 1,000 had not been attained, the parameter estimation exercise was aborted and the parameter set rejected.

The numbers of forward runs required by this process are listed in Tables 2a and 2b; in total, this entire process required approximately 200,000 model runs. From one seed (g, corresponding to F = 404), 172 parameter sets (gi) were produced, each meeting the criterion F < 1,000. We compared these results to those generated using the four other seeds and found that they produced very similar results. Obviously, a larger ensemble of parameter sets could have been produced by combining all sets produced by each of the five gi seeds and/or by continuing the NSMC process for any one seed. However, we used only the results from one seed in order that our analysis would be comparable to that undertaken using the process model (presented later) where, due to the computational demands of using that model, only one seed could be utilized.

[37] The DREAM algorithm described in section 3 was

not customized for this particular application, other than to specify the value for the number of Markov chains, N. Numerical results presented by Vrugt et al. [2009b] demonstrate that N > d/2 is appropriate. For all the calculations presented herein, we set N = 250 and used 50 different computational nodes of the LISA cluster at the SARA computing center at the University of Amsterdam, The Netherlands, for posterior inference. DREAM required about 50 million model evaluations to generate 100,000 parameter sets that represent samples from the posterior probability density function. As will be shown below, this approach did not find the lowest attainable value of the objective function as found by PEST (presumably the global minimum). This demonstrates that this best solution is associated with a small, perhaps negligible probability mass, and that DREAM is inclined to converge to the larger area of attraction encompassing the full range of posterior parameter likelihood. Here we will present the results of DREAM with N = 250 parallel chains. Increasing the value of N did not alter these results.

3.2.3. Comparison of Results From Surrogate Model Analysis

[38] Objective functions attained through use of the two

methods are compared in Figure 5. Median values of F are similar; however, variation in the PEST results was much broader. Interestingly, the PEST method identified the lowest value of F (186). Considering the difficulties associated with this problem, it is noteworthy that both methods were able to reduce F from values of 1 × 10^6, typically associated with randomly generated parameter sets, to values as low as several hundred.

Table 2b. NSMC Analyses Based on Five Calibrated Models^a

| Calibrated model | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Number of random seeds | 300 | 300 | 300 | 300 | 300 |
| Number of forward runs required | | | | | |
| Definition of null and solution spaces | 242 | 242 | 242 | 242 | 242 |
| Recalibration | 37328 | 42864 | 45231 | 32943 | 41423 |
| Total | 37570 | 43106 | 45473 | 33185 | 41665 |
| Resulting number of parameter sets meeting criterion F < 1000 | 172 | 162 | 203 | 184 | 151 |

^a In all cases 242 runs were undertaken on the basis of the optimized parameters which were an outcome of one of the previous calibration exercises as described in the text. These were required for calculation of a Jacobian matrix on which basis subdivision of parameter space into orthogonal complementary solution and null spaces was undertaken.

Figure 5. Distribution of F values for the two methods.
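The subdivision of parameter space into solution and null spaces noted in the footnote to Table 2b, and the null-space projection applied to each random parameter set, can be illustrated with a short NumPy sketch. It is a minimal illustration and not the PEST implementation: the function name and arguments are hypothetical, observation weights are omitted (an unweighted Jacobian is assumed), and the number of solution-space dimensions is supplied by the user.

```python
import numpy as np


def null_space_project(p_cal, p_rand, jacobian, n_solution):
    """Return p_cal plus the null-space component of (p_rand - p_cal).

    p_cal      : calibrated parameter vector, shape (n_par,)
    p_rand     : random parameter vector, shape (n_par,)
    jacobian   : sensitivities at p_cal, shape (n_obs, n_par)
    n_solution : number of singular vectors assigned to the solution space
    """
    # Rows of vt are right singular vectors, ordered by decreasing singular
    # value; with full_matrices=True they span all of parameter space.
    _, _, vt = np.linalg.svd(jacobian, full_matrices=True)
    v_null = vt[n_solution:].T  # columns span the (approximate) null space
    # Discard the solution-space part of the difference from p_cal.
    return p_cal + v_null @ (v_null.T @ (p_rand - p_cal))
```

For a strictly linear model whose Jacobian has rank no greater than n_solution, the projected parameter set reproduces the calibrated model outputs exactly. For a nonlinear model, or where small but nonzero singular values are truncated, the fit degrades somewhat, which is why each projected set is subsequently recalibrated.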

Table 2a. Summary of Calibration and Uncertainty Analysis Forward Run Requirements: Calibration^a

| Step | Number of Model Runs |
|---|---|
| CMAES | 152542 |
| SVD | 206942 |
| AUI | 43102 |
| Total | 402586 |

^a Total for 60 inversions.


[39] It was expected that the calibration process would constrain only a small fraction of model parameters; by comparing the posterior-to-prior ranges of all parameters obtained using both methods we see confirmation of this. As shown in Figure 6, both methods demonstrate that for 70% of the parameters, the posterior range is not significantly smaller than the prior range. Detailed examination of posterior distributions generated by each method revealed both similarities and differences, as shown in Figure 7, which illustrates frequency distributions for two parameters. For each parameter shown here, both methods yield a posterior parameter range that is significantly narrower than its prior range (the range of the x axis in each of these plots reflects prior parameter bounds), and in Figure 7a it is evident that the characters of the two posterior distributions are remarkably similar. However, as shown in Figure 7b, for the a parameter the lower end of the distribution is marked by the parameter bound in the case of the DREAM distribution, and by a drop in likelihood in the case of the PEST distribution; the reason for this is unknown. In Figure 8 an example is provided where the two results differ significantly. In this case the DREAM posterior distribution is commensurate with the prior. In contrast, PEST considers this parameter to be strongly skewed toward its lower bound; once again, reasons for these differences are unknown. However, it should be noted that because the prior parameter bounds were constructed so as to ensure that any estimated parameter would be physically reasonable, no physical arguments can be made in favor of either PEST or DREAM producing more “realistic” parameter estimates.

[40] The median value of the posterior distributions generated by the two methods was compared for each parameter. To facilitate comparisons among parameters with very different magnitudes, the posterior values were first normalized with respect to the prior parameter ranges. As a measure of the degree to which the posterior median value is constrained by the calibration process, the ratio (s*) of the posterior standard deviation to the prior was also calculated. Ratios approaching 1.0 indicate parameters poorly constrained by calibration. In Figure 9 a comparison of the median values forthcoming from the two methods is shown, with symbols colored according to the s* calculated by PEST (Figure 9a) and DREAM (Figure 9b). The red and black symbols indicate values with significantly reduced standard deviations. Most symbols cluster near the center of Figure 9, and have light blue color. These are parameters that the method did not constrain, implying therefore that the prior and posterior standard deviations are similar, and that posterior medians therefore coincide with prior medians in the center of the

Figure 7. Comparison of posterior distributions for (a) a and (b) Ho (Aleman test). The x axis range reflects the prior range.

Figure 8. Comparison of posterior distributions for κ, Timber Mountain Volcanic Tuff Aquifer. The x axis ranges are defined by prior distributions.

Figure 6. Histogram of the computed ratio of posterior to prior range for all 263 parameters. For over 70% of the parameters, there was no range reduction (ratio = 1.0).


prior range. There are some light blue symbols, however, with normalized median values significantly different from 0.5. The reasons for this are not completely understood. One plausible explanation is that the sampling of parameter space is insufficiently dense (due to the large number of parameters) and that if the sampling density had been much larger, these poorly constrained parameters would possess normalized median values of 0.5. Clearly, in a high-dimensional problem with numerous local minima such as this, the normalized median values estimated by these methods may not be reliable for poorly constrained parameters. This may be particularly the case for the DREAM method, which has a tendency to yield greater spread in the normalized median of poorly constrained parameters.

[41] Most of the significant departures from the 1:1 line

depicted in Figure 9 are for parameters that are not considered well-constrained by the calibration data set by one or both methods; for the reasons described above in these

cases the discrepancy is not important. Table 3 lists those parameters considered well-constrained by calibration (s* < 0.5) by both methods. As shown in Figure 10, the median values compare moderately well; however, there is a tendency for DREAM medians to be lower than those of PEST. We expect that those parameters listed in Table 3 which are shared by the process model will be important in the process model calibration and uncertainty analysis as well. This type of parameter estimability information is a very useful outcome of analysis based on the surrogate model.

[42] Overall, there are some interesting differences in the

degree to which each method was able to reduce parameter standard deviations. Figure 11 compares PEST and DREAM results in this regard. The standard deviation ratio for both methods clusters near 1.0 (i.e., no reduction). For parameters with s* < 1.0 according to one or both methods, in most cases DREAM reduced the standard deviation less than PEST. One reason for this may be that the PEST

Figure 9. A comparison of median normalized parameter values estimated by PEST and DREAM. Color indicates the ratio of posterior to prior variance according to (a) PEST and (b) DREAM.


methodology is explicit in exploring the “null space,” this being that portion of the parameter space which is not constrained, or is only partially constrained, by the calibration data set. In contrast, MCMC methods only exploit the unconstrained variability offered by the null space in the natural course of sampling full parameter space. Where there are many insensitive parameters and/or insensitive parameter combinations, an extremely large number of model runs is required for exhaustive sampling of the null space in this way. MCMC methods, which are not specifically focused on doing so, may thereby overestimate the uncertainty associated with parameters whose projections onto the null space are large.

3.2.4. Discussion

[43] The surrogate model allowed facile iteration between

objective function definition, model evaluation, and calibration. It also allowed us to apply and compare two techniques for estimating parameter uncertainty. Generally, the parameter estimation and uncertainty analysis results forthcoming from these two very different methods are remarkably similar. Nevertheless, there are some notable differences between the two. For this highly parameterized, highly nonlinear, ill-posed problem, neither method can provide an unequivocal guarantee that it has sampled the true posterior parameter distribution, or has identified the global minimum of F. This exercise has, however, demonstrated that each method is capable of providing consistent estimates of parameter uncertainty and of providing samples from posterior parameter distributions that, in the main, are consistent with each other. As expected, the MCMC method encapsulated in DREAM was much less efficient, requiring about 50 million forward runs to converge to a limiting posterior distribution. The method was not customized for this particular problem, and perhaps could have been made more efficient if it had been. For instance, significant efficiency improvements could have been made by generating candidate points in each individual chain using an archive of past states. This would require far fewer chains to be run in parallel (N ≪ 250), which significantly speeds up convergence to a limiting distribution. Furthermore, for high parameter dimensions, it would certainly be desirable to use dimensionality reduction sampling in DREAM. This, in combination with multiple-try Metropolis search and Metropolis adjusted Langevin sampling, which uses the gradient of the likelihood function to sample preferentially in the direction of largest objective function improvement (violating detailed balance), should significantly increase the computational efficiency of DREAM for highly parameterized models. Conceptually, such an approach could be implemented in a way that is automatic and therefore requires no user intervention, in harmony with the DREAM design philosophy. Such a method is presently under development; results will be reported in due course.

[44] PEST-based analysis was relatively run-efficient.

There are several reasons for this. Where objective function surfaces are reasonably concave and model outputs are differentiable with respect to model parameters, gradient-based

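Table 3. Parameters for Which Both Methods Reduced Standard Deviation by at Least 50%

| Parameter | Normalized Median (PEST) | Normalized Median (DREAM) | Standard Deviation Reduction (PEST) | Standard Deviation Reduction (DREAM) | Ratio of Posterior to Prior Range (PEST) | Ratio of Posterior to Prior Range (DREAM) |
|---|---|---|---|---|---|---|
| Test effects | 0.64 | 0.15 | 0.10 | 0.06 | 0.14 | 0.07 |
| Permeability | 0.08 | 0.15 | 0.10 | 0.06 | 0.14 | 0.07 |
| Test effects | 0.16 | 0.04 | 0.01 | 0.15 | 0.03 | 0.23 |
| Permeability | 0.16 | 0.05 | 0.12 | 0.17 | 0.30 | 0.20 |
| Permeability | 1.00 | 0.88 | 0.06 | 0.17 | 0.12 | 0.21 |
| Ho | 0.32 | 0.07 | 0.18 | 0.14 | 0.24 | 0.17 |
| Permeability | 0.16 | 0.07 | 0.16 | 0.19 | 0.42 | 0.24 |
| Hi(s) | 1.00 | 0.94 | 0.03 | 0.20 | 0.12 | 0.28 |
| Permeability | 0.28 | 0.15 | 0.25 | 0.23 | 0.80 | 0.31 |
| Ho | 0.35 | 0.17 | 0.22 | 0.31 | 0.46 | 0.36 |
| Hi(s) | 1.00 | 0.95 | 0.34 | 0.19 | 1.00 | 0.26 |
| Ho | 0.45 | 0.46 | 0.44 | 0.21 | 0.53 | 0.31 |
| Ho | 0.87 | 0.93 | 0.46 | 0.32 | 0.66 | 1.00 |
| Ho | 0.48 | 0.29 | 0.44 | 0.47 | 0.52 | 0.60 |
| Ho | 0.59 | 0.41 | 0.39 | 0.49 | 0.53 | 0.57 |
| Hi(s) | 0.47 | 0.16 | 0.43 | 0.50 | 1.00 | 0.78 |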

Figure 10. Comparison of normalized median estimates for parameters for which both methods reduced standard deviation by at least 50% (Table 3).

Figure 11. Comparison of the standard deviation ratio for each parameter.


methods provide faster attainment of an objective function minimum than global methods. A problem, of course, is that they may all too easily find a local rather than global minimum. In the present case use of the CMAES method as a “starter” for each SVD-based parameter estimation process increased PEST’s ability to locate minima with low objective function values, this contributing to overall optimization efficiency. Model run efficiency of the ensuing uncertainty analysis was attained by focusing null-space Monte Carlo analysis on exploring variability associated with nonuniqueness as it exists around each of several minima located in previous calibration exercises. This stepwise approach (first calibrate, then explore uncertainty) appears to have brought significant computational efficiency to the process.

[45] Some of the differences in the results between the

two methods may be an artifact of the relatively small number of calibrated parameter sets generated by PEST (172) through use of its null-space Monte Carlo methodology compared to those generated by DREAM (100,000). Theoretically, further null-space Monte Carlo generation of new, and widely different, calibration-constrained parameter sets could have been undertaken by simply continuing the null-space Monte Carlo process described above; the cost would have been about 150 model runs per additional calibrated parameter field. It was for reasons of computational expediency that the process was halted at 172. The similarity of the statistical measures obtained from 172 parameter sets to those obtained using the more rigorous (in a Bayesian sense) DREAM results based on 100,000 parameter sets engendered optimism that use of PEST in conjunction with the CPU-intensive process model would yield results which are representative of the parameter uncertainty associated with that model. We also note some differences between the results of NSMC and DREAM, yet argue that those differences appear rather marginal within the context of the current modeling approach.
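The statistical measures compared between the two ensembles (normalized median, the ratio s* of posterior to prior standard deviation, and the ratio of posterior to prior range) can be computed for a single parameter as in the sketch below. It is illustrative only. The function and key names are hypothetical, and the use of the sample standard deviation is an assumption, since the estimator is not specified in the text. The prior standard deviation follows from the uniform priors adopted in section 3.2.2: a uniform distribution on [lower, upper] has standard deviation (upper − lower)/√12.

```python
import math
import statistics


def posterior_summary(samples, lower, upper):
    """Summarize posterior samples of one parameter against a uniform prior.

    samples      : posterior values of the parameter (at least two)
    lower, upper : bounds of the uniform prior
    """
    width = upper - lower
    prior_std = width / math.sqrt(12.0)  # standard deviation of U(lower, upper)
    return {
        # 0.5 corresponds to the center of the prior range
        "normalized_median": (statistics.median(samples) - lower) / width,
        # s*: values near 1.0 indicate a poorly constrained parameter
        "std_ratio": statistics.stdev(samples) / prior_std,
        # 1.0 means the samples span the full prior range
        "range_ratio": (max(samples) - min(samples)) / width,
    }
```

Applying the same function to the 172 NSMC parameter sets and to the 100,000 DREAM samples would give per-parameter quantities of the kind compared in Figures 6, 9, and 11, keeping in mind that medians and ranges estimated from the smaller ensemble are subject to greater sampling variability.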

3.3. Prediction Uncertainty Using the Process Model

[46] The ultimate purpose of the analysis is to generate useful predictions, together with the uncertainty associated with those predictions (Step 4). The high parameter dimensionality and long run‐times associated with the process model demand that the NSMC, rather than MCMC, method be used for this purpose. Most of the methodological details developed in Steps 2 and 3 can be directly applied to the process model. We applied the design of the objective function as attained and refined through the surrogate model analysis directly to estimation of parameters for the process model. Likewise, as will be described below, we were able to directly apply the NSMC approach used with the surrogate model to the process model. For the initial calibration step, however, it was necessary to deviate from the method used on the surrogate model. We do not expect this adjustment to affect the validity of our results since the final NSMC analysis should not be sensitive to the g obtained in the initial calibration. This follows from the observation that the nature of the NSMC methodology is such that it is relatively independent of the particular method used to achieve the initial calibration which then provides the basis for post‐calibration exploration of calibration‐constrained parameter space.

[47] Specifically, in contrast to the surrogate model calibration, in calibration of the process model, CPU requirements prevented us from beginning the calibration process with 60 random starting points, or from applying truncated SVD in conjunction with a full adjustable parameter set. This is an outcome of the large computational expense of calculating a full Jacobian matrix at every iteration of the inversion process. In principle, the computational burden could be lessened by taking advantage of model‐computed adjoints. However, FEHM does not provide adjoints, and perhaps more importantly, the sequential coupling of the testing‐effects and groundwater flow models would make computing adjoints very difficult. Instead, we used physical arguments to limit the number of model parameters varied in the calibration step (but not the uncertainty analysis step). Only 46 parameters were allowed to vary, as indicated in Table 1 (column labeled “free”). These included 18 of the 29 material property parameters, emphasizing rock units in which calibration wells were completed, as well as a subset of 28 Ho values, specifically for those tests located within approximately 1000 m of calibration wells. As an additional efficiency measure for calibration, we used the “SVD‐Assist” technique described by Tonkin and Doherty [2005] to minimize the objective function; this gains efficiency through directly estimating linear combinations of parameters spanning the calibration solution space, thereby eliminating the need to compute sensitivities with respect to all adjustable parameters. We found that by using knowledge of the spatial relation between the calibration data set and model parameters to reduce the parameter dimensionality a priori, followed by the use of the “SVD‐Assist” methodology to estimate values for the reduced parameter set, we were able to achieve a reasonable calibration outcome (an objective function of 682) using a small fraction of the model runs that were necessary to calibrate the surrogate model using the full parameter space.
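The idea of estimating linear combinations of parameters ("super parameters") can be illustrated on a toy linear problem. The sketch below is a simplified illustration, not the PEST implementation: the matrix `A` standing in for the model, the number of retained singular vectors `k`, the unweighted residuals, and all function names are assumptions made for this example. The full Jacobian is used once to define the basis; each subsequent iteration needs only `k` perturbed model runs rather than one per base parameter.

```python
import numpy as np

def super_parameter_basis(J, k):
    """Return the k leading right singular vectors of J as columns (n_par x k)."""
    _, _, Vt = np.linalg.svd(J, full_matrices=False)
    return Vt[:k].T

def estimate_super_parameters(forward, p0, obs, V1, eps=1e-6, n_iter=5):
    """Gauss-Newton iterations on super parameters s, with p = p0 + V1 @ s.

    Sensitivities are taken by finite differences along the columns of V1,
    so each iteration costs one base run plus k perturbed runs.
    """
    k = V1.shape[1]
    s = np.zeros(k)
    for _ in range(n_iter):
        p = p0 + V1 @ s
        f0 = forward(p)                      # base run
        residual = obs - f0
        Js = np.column_stack(
            [(forward(p + eps * V1[:, j]) - f0) / eps for j in range(k)]
        )                                    # k perturbed runs
        ds, *_ = np.linalg.lstsq(Js, residual, rcond=None)
        s = s + ds
    return p0 + V1 @ s

# Hypothetical toy problem: 3 observations, 8 base parameters.
rng = np.random.default_rng(0)
A = rng.normal(size=(3, 8))
forward = lambda p: A @ p                    # stand-in for the process model
obs = forward(rng.normal(size=8))
p0 = np.zeros(8)

V1 = super_parameter_basis(A, k=3)           # full Jacobian used once
p_est = estimate_super_parameters(forward, p0, obs, V1)
```

In this toy case three super parameters suffice to reproduce the observations, even though eight base parameters are adjustable; components of the base parameters outside the span of `V1` are simply left at their initial values.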
The calibrated model explains over 98% of measured variance in head (see Figure 12), compared to less than 5% based on prior information alone. The range of residuals (simulated − measured) is rather large (77 to −59 m); however, most (∼60%) are less than 10 m.

Figure 12. Comparison of measured and simulated heads (m), 583 observations.

[48] Subsequent NSMC analysis used the same methodology as for the surrogate model. First, a Jacobian matrix was calculated for all 242 parameters, in this case at great computational expense. This was only done once, at the g described above. We do not expect that our results would change if we had repeated this procedure multiple times at estimates of g corresponding to other possible local objective function minima since, as described earlier, we found the results of NSMC analysis to be fairly insensitive to starting g when using the surrogate model. The NSMC methodology was then employed to generate a suite of parameter sets that all met the criterion (F < 1100), for this was heuristically considered to be the objective function value below which the model could be deemed to be “calibrated.” (Note: a slightly larger cutoff value was used for the process model than for the surrogate model due to the difficulty of obtaining lower values given the relatively small number of forward runs at our disposal.) Because of the computationally demanding nature of this process, we were able to generate only 62 such calibration‐constrained random parameter sets. An example hydrograph generated from one of these parameter sets is shown in Figure 13. With enormous additional computational effort, a larger set of calibration‐constrained parameter sets could have been obtained. Although this sample size is too small to derive “true” posterior distributions characterizing parameter and predictive uncertainty, it can nevertheless be used to provide some indication of the magnitude of these. The distributions of objective function and prediction (enhanced flux) values, b, arising from this process are shown in Figure 14.

[49] The range of prediction values arising from the NSMC analysis described above is remarkably small, varying by less than one order of magnitude, and does not include 0. In contrast, an ensemble of predictions calculated on the basis of 62 randomly generated parameter sets ranges from 0 to 1462 × 10^6 m^3. It is possible, of course, that the range of the calibration‐constrained posterior prediction as calculated using NSMC would increase (even to include 0) if more calibration‐constrained parameter sets were generated. But if the present result were to hold with a larger number of parameter sets, it would imply that there is no question that testing did create some degree of enhanced flux to the lower aquifer.
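The null‐space projection at the core of the NSMC procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the PEST implementation: the toy linear model `A`, the truncation level `k`, the prior sampler, the unit weights, the cutoff `phi_max` (playing the role of the F < 1100 criterion), and all function names are hypothetical. The re‐calibration that a nonlinear model requires after projection is omitted, because for a linear model the projected sets already reproduce the calibrated fit.

```python
import numpy as np

def null_space_projector(J, k):
    """Projector onto the span of the right singular vectors beyond the first k."""
    _, _, Vt = np.linalg.svd(J, full_matrices=True)
    V2 = Vt[k:].T                        # n_par x (n_par - k) null-space basis
    return V2 @ V2.T

def nsmc_samples(p_cal, J, k, draw_prior, n_sets):
    """Generate parameter sets that differ from p_cal only in the null space."""
    P_null = null_space_projector(J, k)
    return np.array(
        [p_cal + P_null @ (draw_prior() - p_cal) for _ in range(n_sets)]
    )

def objective(forward, p, obs, weights):
    """Weighted sum of squared residuals (an assumed form of the objective)."""
    r = weights * (obs - forward(p))
    return float(r @ r)

# Hypothetical toy problem: 4 observations, 10 parameters.
rng = np.random.default_rng(1)
A = rng.normal(size=(4, 10))
forward = lambda p: A @ p                # stand-in for the process model
obs = forward(rng.normal(size=10))
weights = np.ones(4)

p_cal = np.linalg.pinv(A) @ obs          # stand-in for the calibrated parameter set
sets = nsmc_samples(p_cal, A, k=4,
                    draw_prior=lambda: rng.normal(size=10), n_sets=20)

# Keep only sets whose objective function falls below the calibration cutoff.
phi = np.array([objective(forward, p, obs, weights) for p in sets])
phi_max = 1e-8
accepted = sets[phi < phi_max]
```

Each accepted set fits the observations as well as `p_cal` does, yet the sets differ widely from one another; running the prediction of interest with every accepted set then gives the calibration‐constrained range of that prediction.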

3.4. Post Audit

[50] It is unfortunately often the case in applied groundwater modeling that predictions must be made in the absence of any practical means for testing their veracity. In the present case, however, the availability of a data set of a different type from any discussed previously affords us an opportunity to evaluate the ability of the process model to make predictions, and to quantify the uncertainty associated with those predictions. This data set comprises measurements of ground surface subsidence (made using airborne synthetic aperture radar) caused by post‐testing depressurization of the aquifer [Vincent et al., 2003]. Subsidence was measured over three time periods during the 1990s at a spatial resolution of 30 m. Accuracy of the method was estimated to be of the order of 1 cm. As testing‐induced over‐pressurization relaxes over time, pore spaces compress due to release of water from elastic storage; as a consequence the ground surface subsides. Simulations based on the same parameters used to make the flux predictions can be used to predict surface subsidence over time. If the simplifying assumption is made that all volume change is accommodated in the vertical direction, the porosity changes due to changes in elastic storage simulated in each column of cells at each time step can be directly converted to a 2‐D map of ground surface elevation changes over time.

Figure 13. Comparison of measured and simulated heads at well Ue‐4t. The abrupt rise in simulated heads around 1987 is due to a nearby underground nuclear test.

[51] We compared simulated to observed subsidence for the time period 1992–1993 at each point in the model domain that overlaps with the subsidence data (this comprising the northern half of the model domain). Within this overlapping area, 10,000 comparisons between simulated and observed subsidence could be made. Of these, 85.6% of observations (±1 cm) fell between the minimum and maximum predictions as defined by the model runs based on the 64 sets of NSMC‐generated parameters. In Figure 15 a comparison between observed subsidence and model‐generated uncertainty bounds of predicted subsidence is shown for a representative east–west cross‐section (located on Figure 1). The comparison, though not perfect, shows that predictive uncertainty bounds computed using the process model are able to span a range that largely includes observations that were not used in the model calibration process. This is quite remarkable and inspires confidence that the process model has been calibrated appropriately, and provides estimates of uncertainty that appear reasonable when making the prediction of most interest to the present study (Figure 13), as this prediction is dependent on similar aspects of the system as those which lead to ground subsidence.

[52] It should be noted that both the enhanced flux and the ground surface subsidence predictions discussed here are primarily sensitive to relatively large‐scale structural variations. Other types of model predictions might be more sensitive to local features, which may not be accurately represented in this model. The potential impact of unresolved local features would have to be considered in any uncertainty analysis of model predictions sensitive to small‐scale, localized effects.
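The two calculations underlying the post audit can be sketched in a few lines. This is an illustrative sketch only: the function names and toy numbers are hypothetical, the conversion assumes that elevation change in a column equals the sum of porosity change times cell thickness (one reading of the all‐vertical assumption stated above), and the coverage test interprets "(±1 cm)" as widening the ensemble envelope by the measurement accuracy. Negative values denote subsidence.

```python
def column_elevation_change(porosity_changes, thicknesses):
    """Elevation change (m) of one column of cells.

    Assumes all pore-volume change is accommodated vertically, so each cell
    contributes (change in porosity) x (cell thickness in m).
    """
    return sum(dphi * b for dphi, b in zip(porosity_changes, thicknesses))

def coverage_fraction(observed, ensemble, tol=0.01):
    """Fraction of observations lying within the ensemble envelope.

    observed: list of measured elevation changes (m), one per location
    ensemble: list of prediction lists, one per parameter set, each aligned
              with `observed`
    tol:      measurement accuracy (m) added to both sides of the envelope
    """
    hits = 0
    for i, obs in enumerate(observed):
        predictions = [member[i] for member in ensemble]
        if min(predictions) - tol <= obs <= max(predictions) + tol:
            hits += 1
    return hits / len(observed)

# Hypothetical toy data: 4 locations, 3 parameter sets.
observed = [-0.020, -0.035, -0.005, -0.060]
ensemble = [
    [-0.015, -0.030, -0.010, -0.020],
    [-0.025, -0.045, -0.020, -0.030],
    [-0.018, -0.040, -0.012, -0.025],
]
fraction = coverage_fraction(observed, ensemble)

# One column of two cells, 100 m and 50 m thick.
dz = column_elevation_change([-1e-5, -2e-5], [100.0, 50.0])
```

In the toy data the first three observations fall inside the widened envelope and the fourth does not, so `fraction` is 0.75; the study reports the analogous figure of 85.6% over 10,000 comparison points.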

4. Conclusions

[53] The methodology we propose here is of practical value to assist in the development and analysis of complex, CPU‐intensive groundwater models. Our example application has characteristics that are typical of many practical problems. Because the model is developed for a complex field setting rather than for a synthetic test problem, “true” model structure, parameters, and predictions are unknown. Parameters must be estimated based on limited prior knowledge and using a calibration data set of variable quality whose spatial and temporal sampling is far from optimal. Furthermore, the ability of the model to extract information from that data set is compromised by the fact that the model, like all models, is an imperfect simulator of system behavior. The statistical character of the resulting structural noise, including its spatial and temporal correlation structure, is unknown. As a result of this, special attention needed to be given to formulation of an objective function whose minimization allowed maximum receptivity of information within the calibration data set by the model. An added problem is the computationally demanding nature of the model, and the complex nature of the processes that it simulates and of the hydraulic properties of the host environment in which those processes operate.

[54] As is often the case, in the process of model development, calibration, and predictive uncertainty analysis, many qualitative decisions needed to be made. These were, of necessity, based on expert knowledge of the site, as well as on the strengths and weaknesses of the model components and calibration data set. These decisions included 1) choice of optimal formulation of the objective function, 2) choice of a weighting scheme to use in conjunction with this objective function, 3) value of the objective function for which model‐to‐measurement fit is deemed to be satisfactory, and 4) choice of the number of calibration‐constrained parameter fields that is considered “adequate” for sufficient characterization of predictive uncertainty. It is the authors’ opinion that careful model‐based hydrogeologic analyses will always require subjective inputs such as these. We also argue that subjectivity does not detract from the value of information yielded by analyses such as those documented herein. In fact, as we have tried to demonstrate, without the proper use of informed subjectivity, based in part on experimentation allowed by use of a simple surrogate model, the use of a complex model as a basis for environmental decision‐making would be seriously compromised.

[55] To our knowledge, the study documented herein represents the first occasion on which the NSMC and MCMC methods have been formally compared. It also constitutes the first demonstration of the use of the DREAM package on a high‐dimensional parameter estimation problem, with low sensitivities for most parameters and a high‐dimensional null‐space. The high dimensionality and nonlinearity of the inverse problem and the low sensitivity of most parameters provided a challenge to which both the NSMC and MCMC algorithms were able to rise. Given the fact that these two uncertainty analysis techniques are based on such different theoretical foundations, the degree of similarity between the outcomes of their analyses was both pleasing and remarkable. Given the sound theoretical basis of DREAM on the one hand, and the computational efficiency of NSMC on the other hand, this is an important finding, for it suggests that use of the NSMC method in conjunction with the large complex models that often form the basis for environmental decision‐making will be of widespread worth. Furthermore, our work suggests that it would be advantageous to combine the strengths of the NSMC and MCMC algorithms to enhance the efficiency of MCMC methods in highly parameterized contexts while providing more theoretical rigor to NSMC analysis. Development of a Metropolis MCMC sampling algorithm that incorporates a proposal density function that accommodates the null‐space may provide the means through which this can be achieved. This study has stimulated the development of a hybrid parallel MCMC scheme that combines multiple‐try Metropolis search, adjusted Langevin sampling, and sampling from a past archive of states to speed up finding the posterior distribution for highly parameterized problems.

Figure 14. Null‐space Monte Carlo results: (a) objective function distribution and (b) prediction distribution.

[56] In the study documented herein, not all of the information gained, and techniques developed, for optimal calibration and uncertainty analysis using the surrogate model were directly transferable to the process model. For example, the method used to initially calibrate the surrogate model had to be modified to reduce the number of forward model runs required for objective function minimization to take place. Such differences in methodologies through which pre‐uncertainty analysis calibration is achieved should not impair the ability of the NSMC methodology to obtain a wide range of parameter sets which satisfy calibration constraints, and hence should not affect the ability of the methodology to properly represent parameter and predictive uncertainty. This is demonstrated in the present study where our final NSMC analysis achieved a suite of widely different parameter fields which could be used to make any kind of prediction required of the model. Our post‐audit analysis, based on predictions of recovery from subsidence, demonstrated that the range of uncertainty established through use of the NSMC methodology was indeed able to encapsulate the true behavior of the system.

[57] We finish this paper with a summary of our conclusions in point form.

[58] 1. Use of an appropriately simplified surrogate model in conjunction with a highly complex CPU‐intensive process model provides a user with considerable freedom in testing different model concepts and data processing alternatives. This, in turn, promotes optimal use of the pro-

Figure 15. Measured ground‐surface subsidence for an EW cross‐section through northern Yucca Flat, 1992–1993. The range of simulated values for the 64 cases generated by the NSMC analysis is shown for comparison.
