
Machine Learning 10-601

Online Learning. Ensemble Learning.

Geoff Gordon, Miroslav Dudík [partly based on slides of Rob Schapire and Carlos Guestrin]

http://www.cs.cmu.edu/~ggordon/10601/
November 4, 2009

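Last time: Generalization Error in Continuous Hypothesis Spaces

• find h consistent with data:

$$\mathrm{err}_{true}(h) \le O\!\left(\frac{\text{complexity of } H}{m}\right) \quad \text{w/ high prob}$$

• find h with few mistakes:

$$\mathrm{err}_{true}(h) \le \mathrm{err}_{train}(h) + O\!\left(\sqrt{\frac{\text{complexity of } H}{m}}\right) \quad \text{w/ high prob}$$

complexity of H = ln |H| (finite H)
complexity of H = ln(max # dichotomies on any m instances) = ln Dichot(H, m) (general H)

Dichot(H, m) ≤ |H|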


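Last time: VC dimension

VC(H) = max # samples on which H can induce an arbitrary labeling

$$\mathrm{VC}(H) = \max\{m : \mathrm{Dichot}(H, m) = 2^m\} \le \log_2 |H|$$

(shattering m samples requires $2^m$ distinct hypotheses, so $2^m \le |H|$)

$$\mathrm{Dichot}(H, m) = \begin{cases} 2^m \text{ for all } m & \text{(if VC} = \infty) \\ O(m^d) & \text{(if VC} = d) \end{cases}$$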


[Vapnik‐Chervonenkis]
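To make Dichot(H, m) and the VC dimension concrete, the sketch below brute-forces one hypothesis class that is not on the slides and is chosen here purely for illustration: closed intervals on the real line (label 1 inside the interval, 0 outside). It counts the labelings intervals can realize on m points, finds the largest m on which all 2^m labelings occur, and compares the count with Sauer's lemma, the standard result behind the O(m^d) growth when VC = d.

```python
from math import comb

def interval_dichotomies(m):
    """Labelings of m points on a line (placed at positions 0..m-1) that the
    class of closed intervals [a, b] can produce; 1 = inside, 0 = outside."""
    labelings = {tuple([0] * m)}              # empty interval: all points negative
    for a in range(m):
        for b in range(a, m):                 # interval covering points a..b
            labelings.add(tuple(1 if a <= i <= b else 0 for i in range(m)))
    return labelings

def vc_dimension(max_m):
    """Largest m <= max_m on which intervals realize all 2**m labelings."""
    shattered = [m for m in range(1, max_m + 1)
                 if len(interval_dichotomies(m)) == 2 ** m]
    return max(shattered, default=0)

def sauer_bound(m, d):
    """Sauer's lemma: Dichot(H, m) <= sum_{i=0}^{d} C(m, i), which is O(m^d)."""
    return sum(comb(m, i) for i in range(d + 1))

for m in range(1, 7):
    print(m, len(interval_dichotomies(m)), sauer_bound(m, 2), 2 ** m)
```

For intervals the count is 1 + m(m+1)/2, which meets Sauer's bound with d = 2 exactly, while 2^m pulls away from m = 3 onward: the VC dimension is 2 and the growth is quadratic, not exponential.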

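Generalization Bounds using VC dimension (say VC = d)

• consistent case:

$$\mathrm{err}_{true}(h) \le O\!\left(\frac{d}{m}\right) \quad \text{w/ high prob}$$

• inconsistent case:

$$\mathrm{err}_{true}(h) \le \mathrm{err}_{train}(h) + O\!\left(\sqrt{\frac{d}{m}}\right) \quad \text{w/ high prob}$$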
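Ignoring the constants and log factors hidden in the O(·) (an assumption made only for illustration; the real constants depend on the confidence level and the exact theorem), the two rates can be solved for the number of samples m needed to reach a target error gap ε. The VC dimension d = 10 below is a hypothetical value, and exact fractions are used so that the rounding up is not disturbed by floating-point error.

```python
import math
from fractions import Fraction

def samples_consistent(d, eps):
    """Smallest m with d/m <= eps (consistent-case rate, constants and logs dropped)."""
    return math.ceil(d / eps)

def samples_inconsistent(d, eps):
    """Smallest m with sqrt(d/m) <= eps, i.e. m >= d/eps**2 (inconsistent-case rate)."""
    return math.ceil(d / eps ** 2)

d = 10  # hypothetical VC dimension, for illustration only
for eps in (Fraction(1, 10), Fraction(1, 20), Fraction(1, 100)):
    print(f"eps={float(eps)}: consistent m ~ {samples_consistent(d, eps)}, "
          f"inconsistent m ~ {samples_inconsistent(d, eps)}")
```

Halving ε doubles m in the consistent case but quadruples it in the inconsistent case.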

Online learning setup:

• see one example at a time
• predict a label
• see true label

Goal: minimize # mistakes over time

Example: Learning with Expert Advice

• consistent case = perfect expert case: Halving Algorithm

$$\frac{\#\text{mistakes}}{T} \le \frac{\log_2 N}{T}$$

Drop 'perfect expert' assumption: Weighted Majority Algorithm

• initialize: $w_j \leftarrow 1$ for $j = 1, \dots, N$
• for $t = 1, \dots, T$
  – expert $j$ predicts $\hat y_j$
  – take a weighted vote
  – predict $\hat y_t$ = result of vote
  – observe $y_t$
  – for each expert who made a mistake: $w_j \leftarrow \beta w_j$

parameter $0 < \beta < 1$; setting $\beta = 0$ gives the halving algorithm
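A minimal Python sketch of the expert-advice loop above, assuming ±1 labels and breaking vote ties toward +1 (both choices are ours, not the slides'). With beta = 0 it is the halving algorithm; with beta = 0.5 it is the weighted majority variant, whose worst-case guarantee is #mistakes ≤ (m* + log2 N) / log2(4/3) ≈ 2.41(m* + log2 N), where m* is the best expert's mistake count. The expert predictions are synthetic and hypothetical.

```python
import math
import random

def weighted_majority(expert_preds, labels, beta=0.5):
    """Weighted majority over N experts (beta = 0 gives the halving algorithm).

    expert_preds[t][j] in {-1, +1}: prediction of expert j at round t.
    labels[t] in {-1, +1}: true label, revealed after the learner predicts.
    Returns (number of learner mistakes, final weights).
    """
    w = [1.0] * len(expert_preds[0])             # initialize: w_j <- 1
    mistakes = 0
    for preds, y in zip(expert_preds, labels):
        vote = sum(wj * pj for wj, pj in zip(w, preds))
        y_hat = 1 if vote >= 0 else -1           # ties broken toward +1 (arbitrary)
        if y_hat != y:
            mistakes += 1
        # every expert that erred has its weight multiplied by beta
        w = [wj * beta if pj != y else wj for wj, pj in zip(w, preds)]
    return mistakes, w

# Hypothetical data: expert j flips the true label with probability j / (2N),
# so expert 0 is a perfect expert.
rng = random.Random(0)
N, T = 16, 200
labels = [rng.choice((-1, 1)) for _ in range(T)]
expert_preds = [[y if rng.random() >= j / (2 * N) else -y for j in range(N)]
                for y in labels]
best = min(sum(p[j] != y for p, y in zip(expert_preds, labels)) for j in range(N))

halving_mistakes, _ = weighted_majority(expert_preds, labels, beta=0.0)
wm_mistakes, _ = weighted_majority(expert_preds, labels, beta=0.5)
print(halving_mistakes, "<=", math.log2(N))
print(wm_mistakes, "<=", (best + math.log2(N)) / math.log2(4 / 3))
```

Both printed comparisons are worst-case guarantees, so they hold for any sequence of expert predictions with a perfect expert (halving) or any sequence at all (weighted majority), not just for this synthetic data.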


Mistake bound for weighted majority algorithm with $\beta = 0.5$:

$$\#\text{mistakes} \le 2.4\,(\log_2 N + \#\text{mistakes of best expert})$$

Problem: What if the best expert makes mistakes 25% of the time?
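Where the constant 2.4 comes from: a short potential argument, standard for weighted majority with β = 1/2. The symbols are introduced here: $W_t$ is the total weight before round $t$, $M$ the algorithm's mistakes, and $m^*$ the best expert's mistakes.

```latex
\begin{aligned}
&\text{at each mistake, at least half of } W_t \text{ is on wrong experts, and that part is halved:}
  \quad W_{t+1} \le \tfrac{3}{4}\,W_t,\\
&\left(\tfrac{1}{2}\right)^{m^*} \;\le\; W_{T+1} \;\le\; N\left(\tfrac{3}{4}\right)^{M}
  \quad\Longrightarrow\quad
  M \;\le\; \frac{m^* + \log_2 N}{\log_2(4/3)} \;\approx\; 2.41\,\bigl(m^* + \log_2 N\bigr).
\end{aligned}
```

With $m^* = T/4$ the right-hand side is about $0.6\,T + 2.41 \log_2 N$, so the guarantee permits a mistake rate above 60%, worse than guessing at random; that is the problem raised on the slide.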

Fixing Weighted Majority

Fix #1: if experts are not certain, randomize according to current tally
Fix #2: optimize $\beta$

Resulting mistake bound:

$$\frac{E[\#\text{mistakes}]}{T} \le \frac{\#\text{mistakes of best expert}}{T} + O\!\left(\sqrt{\frac{\ln N}{T}}\right)$$
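A sketch of both fixes, under stated assumptions: Fix #1 is implemented as randomized weighted majority (predict +1 with probability equal to the fraction of weight on experts saying +1), and for Fix #2 we pick β = 1 - η with η = sqrt(ln N / T), capped at 1/2, which is one common tuning when T is known in advance (not necessarily the one used in lecture). Instead of sampling, the code sums the per-round error probabilities, which gives E[#mistakes] exactly. The expert data is synthetic.

```python
import math
import random

def rwm_expected_mistakes(expert_preds, labels, beta):
    """Expected mistakes of randomized weighted majority (Fix #1).

    Each round the learner predicts +1 with probability (weight on experts
    saying +1) / (total weight), so it errs with probability equal to the
    fraction of weight on wrong experts; summing these gives E[#mistakes].
    """
    w = [1.0] * len(expert_preds[0])
    expected = 0.0
    for preds, y in zip(expert_preds, labels):
        total = sum(w)
        expected += sum(wj for wj, pj in zip(w, preds) if pj != y) / total
        w = [wj * beta if pj != y else wj for wj, pj in zip(w, preds)]  # penalize errors
    return expected

# Hypothetical data: expert j errs with probability 0.25 + 0.025 * j,
# so the best expert makes mistakes roughly 25% of the time.
rng = random.Random(1)
N, T = 10, 400
labels = [rng.choice((-1, 1)) for _ in range(T)]
expert_preds = [[-y if rng.random() < 0.25 + 0.025 * j else y for j in range(N)]
                for y in labels]
best = min(sum(p[j] != y for p, y in zip(expert_preds, labels)) for j in range(N))

# Fix #2 (assumed tuning): beta = 1 - eta with eta = sqrt(ln N / T), capped at 1/2
eta = min(0.5, math.sqrt(math.log(N) / T))
beta = 1 - eta
expected = rwm_expected_mistakes(expert_preds, labels, beta)
print(f"best expert: {best / T:.3f} per round, RWM: {expected / T:.3f} per round")
```

The guarantee behind the slide's bound is E[#mistakes] ≤ (ln N + m* ln(1/β)) / (1 - β); with the tuned β (and η ≤ 1/2) it gives E[#mistakes] ≤ m* + 2 sqrt(T ln N), i.e. an O(sqrt(ln N / T)) excess per round over the best expert.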

What you should know about online learning

• no statistical assumptions, yet we can get similar guarantees as in the i.i.d. setting
• can be easily adapted to the batch setting (we haven't talked about this in lecture)
• learning with expert advice
  – experts can be anything!
  – halving algorithm
  – weighted majority algorithm

Back to batch setting...

Which classifiers are the best?

An Empirical Comparison of Supervised Learning Algorithms

Table 4. Bootstrap Analysis of Overall Rank by Mean Performance Across Problems and Metrics

model     1st    2nd    3rd    4th    5th    6th    7th    8th    9th    10th
bst-dt    0.580  0.228  0.160  0.023  0.009  0.000  0.000  0.000  0.000  0.000
rf        0.390  0.525  0.084  0.001  0.000  0.000  0.000  0.000  0.000  0.000
bag-dt    0.030  0.232  0.571  0.150  0.017  0.000  0.000  0.000  0.000  0.000
svm       0.000  0.008  0.148  0.574  0.240  0.029  0.001  0.000  0.000  0.000
ann       0.000  0.007  0.035  0.230  0.606  0.122  0.000  0.000  0.000  0.000
knn       0.000  0.000  0.000  0.009  0.114  0.592  0.245  0.038  0.002  0.000
bst-stmp  0.000  0.000  0.002  0.013  0.014  0.257  0.710  0.004  0.000  0.000
dt        0.000  0.000  0.000  0.000  0.000  0.000  0.004  0.616  0.291  0.089
logreg    0.000  0.000  0.000  0.000  0.000  0.000  0.040  0.312  0.423  0.225
nb        0.000  0.000  0.000  0.000  0.000  0.000  0.000  0.030  0.284  0.686

overall, and only a 4.2% chance of seeing them rank lower than 3rd place. Random forests would come in 1st place 39% of the time, 2nd place 53% of the time, with little chance (0.1%) of ranking below third place.

There is less than a 20% chance that a method other than boosted trees, random forests, and bagged trees would rank in the top three, and no chance (0.0%) that another method would rank 1st; it appears to be a clean sweep for ensembles of trees. SVMs probably would rank 4th, and neural nets probably would rank 5th, but there is a 1 in 3 chance that SVMs would rank after neural nets. The bootstrap analysis clearly shows that MBL, boosted 1-level stumps, plain decision trees, logistic regression, and naive bayes are not competitive on average with the top five models on these problems and metrics when trained on 5k samples.

6. Related Work

STATLOG is perhaps the best known study (King et al., 1995). STATLOG was a very comprehensive study when it was performed, but since then important new learning algorithms have been introduced such as bagging, boosting, SVMs, and random forests. LeCun et al. (1995) present a study that compares several learning algorithms (including SVMs) on a handwriting recognition problem using three performance criteria: accuracy, rejection rate, and computational cost. Cooper et al. (1997) present results from a study that evaluates nearly a dozen learning methods on a real medical data set using both accuracy and an ROC-like metric. Lim et al. (2000) perform an empirical comparison of decision trees and other classification methods using accuracy as the main criterion. Bauer and Kohavi (1999) present an impressive empirical analysis of ensemble methods such as bagging and boosting. Perlich et al. (2003) conduct an empirical comparison between decision trees and logistic regression. Provost and Domingos (2003) examine the issue of predicting probabilities with decision trees, including smoothed and bagged trees. Provost and Fawcett (1997) discuss the importance of evaluating learning algorithms on metrics other than accuracy such as ROC.

7. Conclusions

The field has made substantial progress in the last decade. Learning methods such as boosting, random forests, bagging, and SVMs achieve excellent performance that would have been difficult to obtain just 15 years ago. Of the earlier learning methods, feedforward neural nets have the best performance and are competitive with some of the newer methods, particularly if models will not be calibrated after training.

Calibration with either Platt's method or Isotonic Regression is remarkably effective at obtaining excellent performance on the probability metrics from learning algorithms that performed well on the ordering metrics. Calibration dramatically improves the performance of boosted trees, SVMs, boosted stumps, and Naive Bayes, and provides a small but noticeable improvement for random forests. Neural nets, bagged trees, memory based methods, and logistic regression are not significantly improved by calibration.

With excellent performance on all eight metrics, calibrated boosted trees were the best learning algorithm overall. Random forests are a close second, followed by uncalibrated bagged trees, calibrated SVMs, and uncalibrated neural nets. The models that performed poorest were naive bayes, logistic regression, decision trees, and boosted stumps. Although some methods clearly perform better or worse than other methods on average, there is significant variability across the problems and metrics. Even the best models sometimes perform poorly, and models with poor average

[Caruana & Niculescu-Mizil 2006]

BEWARE RANKINGS... ...which college is the best?


Top three techniques: ensembles of trees

• boosted decision trees
• random forests
• bagged decision trees

The good and the bad of decision trees

• small trees: SIMPLE
  – GOOD: less prone to overfitting
  – BAD: cannot represent complex concepts; high bias
• big trees: EXPRESSIVE
  – GOOD: can represent complex concepts
  – BAD: overfitting; sensitive to small changes in data; high variance

Overcoming limitations of decision trees: ensembles of trees

Idea:

• learn multiple hypotheses (trees)
• combine them using voting (possibly weighted)

Ensembles of trees

BAGGING and RANDOM FORESTS

• learn many big trees
• each tree aims to fit the same target concept
• voting ≈ averaging: DECREASE in VARIANCE

BOOSTING

• learn many small trees (weak or base hypotheses)
• each tree 'specializes' to a different part of target concept
• voting increases expressivity: DECREASE in BIAS

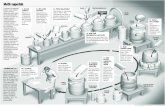

Bagging: learn many trees for the same concept

Input: $m$ examples $(x_1, y_1), \dots, (x_m, y_m)$

For $t = 1, \dots, T$
• choose a random training set by drawing $m$ examples with replacement (a bootstrap sample)
• learn a tree $h_t$

Output: $H$ such that $H(x)$ = majority among $h_1(x), \dots, h_T(x)$

[Hastie-Tibshirani-Friedman, 2nd edition: Section 8.7]
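A small, self-contained Python sketch of bagging as written above. The tree learner is a hypothetical stand-in for a real CART implementation: unpruned, axis-aligned splits scored by Gini impurity, growing until a node is pure or has fewer than `min_size` examples. The diagonal grid data is made up for illustration.

```python
import random
from collections import Counter

def majority(labels):
    """Most common label (ties broken by first occurrence)."""
    return Counter(labels).most_common(1)[0][0]

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def grow_tree(X, y, min_size=2):
    """Unpruned classification tree with axis-aligned splits chosen by Gini impurity."""
    if len(set(y)) == 1 or len(y) < min_size:       # pure or too few examples: leaf
        return ("leaf", majority(y))
    best = None
    for f in range(len(X[0])):
        for thr in sorted({x[f] for x in X}):
            left = [i for i, x in enumerate(X) if x[f] <= thr]
            right = [i for i, x in enumerate(X) if x[f] > thr]
            if not left or not right:
                continue
            score = (len(left) * gini([y[i] for i in left])
                     + len(right) * gini([y[i] for i in right]))
            if best is None or score < best[0]:
                best = (score, f, thr, left, right)
    if best is None:                                 # no feature can split this node
        return ("leaf", majority(y))
    _, f, thr, left, right = best
    return ("node", f, thr,
            grow_tree([X[i] for i in left], [y[i] for i in left], min_size),
            grow_tree([X[i] for i in right], [y[i] for i in right], min_size))

def predict_tree(tree, x):
    while tree[0] == "node":
        _, f, thr, left, right = tree
        tree = left if x[f] <= thr else right
    return tree[1]

def bagging(X, y, T=25, seed=0):
    """For t = 1..T: draw a bootstrap sample (m draws with replacement), learn a tree h_t."""
    rng = random.Random(seed)
    m = len(X)
    trees = []
    for _ in range(T):
        idx = [rng.randrange(m) for _ in range(m)]   # bootstrap sample
        trees.append(grow_tree([X[i] for i in idx], [y[i] for i in idx]))
    return trees

def predict_bagged(trees, x):
    """H(x) = majority among h_1(x), ..., h_T(x)."""
    return majority([predict_tree(t, x) for t in trees])

# Hypothetical data: an 11 x 11 grid with a diagonal concept x0 + x1 > 10.
X = [(a, b) for a in range(11) for b in range(11)]
y = [1 if a + b > 10 else 0 for a, b in X]
single_tree = grow_tree(X, y)
trees = bagging(X, y, T=25, seed=0)
single_acc = sum(predict_tree(single_tree, x) == yi for x, yi in zip(X, y)) / len(X)
train_acc = sum(predict_bagged(trees, x) == yi for x, yi in zip(X, y)) / len(X)
print(f"single unpruned tree: {single_acc:.3f}, bagged vote: {train_acc:.3f}")
```

The single unpruned tree fits the training grid exactly, which is the "big tree" behavior from the earlier slide. Each bagged tree sees a different bootstrap sample, so the trees err in different places, and the majority vote averages those idiosyncrasies away.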

Bagging is better than single trees

[Figure 8.10: test error (0.20 to 0.50) vs. number of bootstrap samples (0 to 200); curves: Original Tree, Bagged Trees (Consensus and Probability), Bayes]

FIGURE 8.10. Error curves for the bagging example of Figure 8.9. Shown is the test error of the original tree and bagged trees as a function of the number of bootstrap samples. The orange points correspond to the consensus vote, while the green points average the probabilities.

bagging helps under squared-error loss, in short because averaging reducesvariance and leaves bias unchanged.

Assume our training observations $(x_i, y_i)$, $i = 1, \dots, N$ are independently drawn from a distribution $\mathcal{P}$, and consider the ideal aggregate estimator $f_{ag}(x) = E_{\mathcal{P}} \hat f^*(x)$. Here $x$ is fixed and the bootstrap dataset $Z^*$ consists of observations $x_i^*, y_i^*$, $i = 1, 2, \dots, N$ sampled from $\mathcal{P}$. Note that $f_{ag}(x)$ is a bagging estimate, drawing bootstrap samples from the actual population $\mathcal{P}$ rather than the data. It is not an estimate that we can use in practice, but is convenient for analysis. We can write

$$\begin{aligned} E_{\mathcal{P}}[Y - \hat f^*(x)]^2 &= E_{\mathcal{P}}[Y - f_{ag}(x) + f_{ag}(x) - \hat f^*(x)]^2 \\ &= E_{\mathcal{P}}[Y - f_{ag}(x)]^2 + E_{\mathcal{P}}[\hat f^*(x) - f_{ag}(x)]^2 \\ &\ge E_{\mathcal{P}}[Y - f_{ag}(x)]^2. \end{aligned} \tag{8.52}$$

The extra error on the right-hand side comes from the variance of $\hat f^*(x)$ around its mean $f_{ag}(x)$. Therefore true population aggregation never increases mean squared error. This suggests that bagging (drawing samples from the training data) will often decrease mean-squared error.
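The cross term that disappears between the first and second lines of (8.52), written out. This assumes, as the argument implicitly does, that the response $Y$ at $x$ is independent of the bootstrap set $Z^*$; $f_{ag}(x)$ is a fixed number, and by definition $E_{\mathcal{P}} \hat f^*(x) = f_{ag}(x)$:

```latex
\begin{aligned}
E_{\mathcal{P}}\bigl[(Y - f_{ag}(x))\,(f_{ag}(x) - \hat f^*(x))\bigr]
  &= E_{\mathcal{P}}\bigl[Y - f_{ag}(x)\bigr]\; E_{\mathcal{P}}\bigl[f_{ag}(x) - \hat f^*(x)\bigr] \\
  &= E_{\mathcal{P}}\bigl[Y - f_{ag}(x)\bigr]\,\bigl(f_{ag}(x) - E_{\mathcal{P}}\hat f^*(x)\bigr) \;=\; 0 .
\end{aligned}
```

Only the two squared terms survive, and the second one is the variance of $\hat f^*(x)$, which is never negative.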

The above argument does not hold for classification under 0-1 loss, because of the nonadditivity of bias and variance. In that setting, bagging a

[Figure from Hastie-Tibshirani-Friedman, 2nd edition]

Random Forests: bagged trees with randomized tree growth

Bagging with a specific tree learner:

• no pruning step
  – stop growing when a node contains too few examples
• don't consider all possible attributes for a split
  – choose a small subset of attributes at random (a constant fraction or a square root of all attributes)
  – pick the best split among those attributes

[Hastie-Tibshirani-Friedman, 2nd edition: Sections 15.1-15.3]
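The randomized split step of a random forest, sketched in Python: sample k attributes without replacement (k = floor(sqrt(p)) by default, one of the choices on the slide), then pick the best split among them. The function name `random_forest_split` and the Gini criterion are our assumptions; using this step inside an unpruned tree learner and bagging the resulting trees gives a random forest.

```python
import math
import random
from collections import Counter

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def random_forest_split(X, y, rng, k=None):
    """Best (feature, threshold) split among k randomly chosen features.

    k defaults to floor(sqrt(p)). Returns None if no chosen feature can split.
    """
    p = len(X[0])
    k = k or max(1, math.isqrt(p))
    features = rng.sample(range(p), k)        # random subset of attributes, no repeats
    best = None
    for f in features:
        for thr in sorted({x[f] for x in X}):
            left = [yi for x, yi in zip(X, y) if x[f] <= thr]
            right = [yi for x, yi in zip(X, y) if x[f] > thr]
            if not left or not right:
                continue
            score = len(left) * gini(left) + len(right) * gini(right)
            if best is None or score < best[0]:
                best = (score, f, thr)
    return None if best is None else best[1:]

# Hypothetical data: 4 attributes, only attribute 0 varies and determines the label.
X = [(i, 0, 0, 0) for i in range(10)]
y = [1 if i >= 5 else 0 for i in range(10)]
print(random_forest_split(X, y, random.Random(0)))        # k = isqrt(4) = 2 attributes
print(random_forest_split(X, y, random.Random(0), k=4))   # all attributes considered
```

With k = p the function reduces to the ordinary split used by bagged trees; with small k a node may not even get to see the informative attribute, which is exactly what decorrelates the trees.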

Random Forests outperform bagging

[Figure from Hastie-Tibshirani-Friedman, 2nd edition]

Typically values for m (the number of input variables considered at each split, out of p) are $\sqrt{p}$ or even as low as 1.

After B such trees $\{T(x; \Theta_b)\}_1^B$ are grown, the random forest (regression) predictor is

$$\hat f_{rf}^{B}(x) = \frac{1}{B} \sum_{b=1}^{B} T(x; \Theta_b). \tag{15.2}$$

As in Section 10.9 (page 356), $\Theta_b$ characterizes the bth random forest tree in terms of split variables, cutpoints at each node, and terminal-node values. Intuitively, reducing m will reduce the correlation between any pair of trees in the ensemble, and hence by (15.1) reduce the variance of the average.
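The correlation argument can be checked numerically. For B identically distributed predictions with variance σ² and pairwise correlation ρ, the variance of their average is ρσ² + (1 - ρ)σ²/B (the identity the excerpt cites as (15.1)). The sketch compares that formula with a Monte Carlo estimate, building correlated unit-variance variables from a shared Gaussian component, a construction chosen here for illustration.

```python
import random
import statistics

def average_variance(rho, sigma2, B):
    """Variance of the mean of B identically distributed variables with
    variance sigma2 and pairwise correlation rho."""
    return rho * sigma2 + (1 - rho) * sigma2 / B

def simulate(rho, B, trials=20000, seed=0):
    """Monte Carlo estimate with Z_b = sqrt(rho)*S + sqrt(1-rho)*E_b (unit variance);
    the shared component S gives every pair correlation rho."""
    rng = random.Random(seed)
    means = []
    for _ in range(trials):
        s = rng.gauss(0, 1)
        zs = [rho ** 0.5 * s + (1 - rho) ** 0.5 * rng.gauss(0, 1) for _ in range(B)]
        means.append(sum(zs) / B)
    return statistics.pvariance(means)

for rho in (0.0, 0.05, 0.5):
    print(rho, round(average_variance(rho, 1.0, 10), 4), round(simulate(rho, 10), 4))
```

As B grows the second term vanishes but the ρσ² floor remains, which is why random forests lower ρ by randomizing the splits instead of only adding more trees.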

[Figure 15.1, Spam Data: test error (0.040 to 0.070) vs. number of trees (0 to 2500); curves: Bagging, Random Forest, Gradient Boosting (5 Node)]

FIGURE 15.1. Bagging, random forest, and gradient boosting, applied to the spam data. For boosting, 5-node trees were used, and the number of trees was chosen by 10-fold cross-validation (2500 trees). Each “step” in the figure corresponds to a change in a single misclassification (in a test set of 1536).

Not all estimators can be improved by shaking up the data like this. It seems that highly nonlinear estimators, such as trees, benefit the most. For bootstrapped trees, $\rho$ is typically small (0.05 or lower is typical; see Figure 15.9), while $\sigma^2$ is not much larger than the variance for the original tree. On the other hand, bagging does not change linear estimates, such as the sample mean (hence its variance either); the pairwise correlation between bootstrapped means is about 50% (Exercise 15.4).

Classification: spam vs. non-spam

Features: relative frequencies of 57 of the most commonly occurring words and punctuation marks in the email message

BOOSTING

Boosting idea

• learn many small trees (weak or base hypotheses)
• each tree 'specializes' to a different part of target concept
• voting increases expressivity: DECREASE in BIAS

[Figure 8.12: two scatter-plot panels of two-class data, Bagged Decision Rule (left) and Boosted Decision Rule (right)]

FIGURE 8.12. Data with two features and two classes, separated by a linear boundary. (Left panel:) Decision boundary estimated from bagging the decision rule from a single split, axis-oriented classifier. (Right panel:) Decision boundary from boosting the decision rule of the same classifier. The test error rates are 0.166 and 0.065, respectively. Boosting is described in Chapter 10.

and is described in Chapter 10. The decision boundary in the right panel is the result of the boosting procedure, and it roughly captures the diagonal boundary.

8.8 Model Averaging and Stacking

In Section 8.4 we viewed bootstrap values of an estimator as approximate posterior values of a corresponding parameter, from a kind of nonparametric Bayesian analysis. Viewed in this way, the bagged estimate (8.51) is an approximate posterior Bayesian mean. In contrast, the training sample estimate $\hat f(x)$ corresponds to the mode of the posterior. Since the posterior mean (not mode) minimizes squared-error loss, it is not surprising that bagging can often reduce mean squared-error.

Here we discuss Bayesian model averaging more generally. We have a set of candidate models $\mathcal{M}_m$, $m = 1, \dots, M$ for our training set $\mathbf{Z}$. These models may be of the same type with different parameter values (e.g., subsets in linear regression), or different models for the same task (e.g., neural networks and regression trees).

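Suppose $\zeta$ is some quantity of interest, for example, a prediction $f(x)$ at some fixed feature value $x$. The posterior distribution of $\zeta$ is

$$\Pr(\zeta \mid \mathbf{Z}) = \sum_{m=1}^{M} \Pr(\zeta \mid \mathcal{M}_m, \mathbf{Z})\,\Pr(\mathcal{M}_m \mid \mathbf{Z}), \tag{8.53}$$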

Boosted decision stumps can learn a diagonal decision boundary

[Figure from Hastie-Tibshirani-Friedman, 2nd edition]

Q: how do I focus on different 'parts' of the target concept?
A: train on specific subsets of the training data!

Q: which subsets?
A: where your current ensemble still makes mistakes!

Small modification of the above:
– train on a weighted training set
– weights depend on the current error

Boosting

• boosting = general method of converting rough rules of thumb (e.g., decision stumps) into a highly accurate prediction rule
• technically:
  – assume a given "weak" learning algorithm that can consistently find classifiers ("rules of thumb") at least slightly better than random, say, accuracy ≥ 55% (in the two-class setting)
  – given sufficient data, a boosting algorithm can provably construct a single classifier with very high accuracy, say, 99%

A Formal Description of Boosting

• given training set $(x_1, y_1), \dots, (x_m, y_m)$
• $y_i \in \{-1, +1\}$ correct label of instance $x_i \in X$
• for $t = 1, \dots, T$:
  – construct distribution $D_t$ on $\{1, \dots, m\}$
  – find weak classifier ("rule of thumb") $h_t : X \to \{-1, +1\}$ with small error $\epsilon_t$ on $D_t$:

$$\epsilon_t = \Pr_{i \sim D_t}[h_t(x_i) \neq y_i]$$

• output final classifier $H_{final}$

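AdaBoost [with Freund]

• constructing $D_t$:
  – $D_1(i) = 1/m$
  – given $D_t$ and $h_t$:

$$D_{t+1}(i) = \frac{D_t(i)}{Z_t} \times \begin{cases} e^{-\alpha_t} & \text{if } y_i = h_t(x_i) \\ e^{\alpha_t} & \text{if } y_i \neq h_t(x_i) \end{cases} \;=\; \frac{D_t(i)}{Z_t}\exp(-\alpha_t\, y_i\, h_t(x_i))$$

where $Z_t$ = normalization constant and

$$\alpha_t = \frac{1}{2}\ln\left(\frac{1 - \epsilon_t}{\epsilon_t}\right) > 0 \quad (\text{for } \epsilon_t < 1/2)$$

• final classifier:

$$H_{final}(x) = \mathrm{sign}\left(\sum_t \alpha_t h_t(x)\right)$$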
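A compact Python sketch of AdaBoost as defined above. The weak learner (decision stumps found by exhaustive search over axis-aligned thresholds), the clipping of ε_t away from 0 and 1, and the diagonal toy data are our assumptions, not part of the slides. The sketch records each normalization constant Z_t so the product of the Z_t can be compared with the training error.

```python
import math

def stump_predict(f, thr, s, x):
    """Decision stump: s if x[f] > thr, else -s."""
    return s if x[f] > thr else -s

def best_stump(X, y, D):
    """Weak learner: exhaustive search over axis-aligned stumps, minimizing
    the weighted error eps = sum of D(i) over misclassified examples."""
    best = None
    for f in range(len(X[0])):
        values = sorted({x[f] for x in X})
        for thr in [values[0] - 1] + values:           # first threshold = constant classifier
            for s in (1, -1):
                err = sum(d for x, yi, d in zip(X, y, D) if stump_predict(f, thr, s, x) != yi)
                if best is None or err < best[0]:
                    best = (err, f, thr, s)
    return best

def adaboost(X, y, T=20):
    m = len(X)
    D = [1.0 / m] * m                                  # D_1(i) = 1/m
    ensemble, Zs = [], []
    for _ in range(T):
        eps, f, thr, s = best_stump(X, y, D)
        eps = min(max(eps, 1e-10), 1 - 1e-10)          # keep the log finite if eps hits 0
        alpha = 0.5 * math.log((1 - eps) / eps)
        w = [d * math.exp(-alpha * yi * stump_predict(f, thr, s, x))
             for x, yi, d in zip(X, y, D)]
        Z = sum(w)                                     # normalization constant Z_t
        D = [wi / Z for wi in w]                       # D_{t+1}
        ensemble.append((alpha, f, thr, s))
        Zs.append(Z)
    return ensemble, Zs

def H_final(ensemble, x):
    """sign(sum_t alpha_t h_t(x)), with sign(0) taken as +1."""
    score = sum(a * stump_predict(f, thr, s, x) for a, f, thr, s in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical data: 10 x 10 grid, label +1 above the diagonal x0 + x1 > 9.
X = [(a, b) for a in range(10) for b in range(10)]
y = [1 if a + b > 9 else -1 for a, b in X]
ensemble, Zs = adaboost(X, y, T=20)
train_err = sum(H_final(ensemble, x) != yi for x, yi in zip(X, y)) / len(X)
print(f"training error {train_err:.3f} <= product of Z_t = {math.prod(Zs):.3f}")
```

No single axis-aligned stump can represent the diagonal boundary, but a weighted vote of many stumps can approximate it, as in the boosted-stumps figure.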


Toy example

weak hypotheses = decision stumps (vertical or horizontal half-planes)

Starting distribution: $D_1$

Round 1: $h_1$, $\epsilon_1 = 0.30$, $\alpha_1 = 0.42$, giving $D_2$
Round 2: $h_2$, $\epsilon_2 = 0.21$, $\alpha_2 = 0.65$, giving $D_3$
Round 3: $h_3$, $\epsilon_3 = 0.14$, $\alpha_3 = 0.92$

Final classifier:

$$H_{final} = \mathrm{sign}(0.42\,h_1 + 0.65\,h_2 + 0.92\,h_3)$$
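Recomputing the toy example's vote weights from the errors shown on the slides, with α_t = ½ ln((1 - ε_t)/ε_t). Rounds 2 and 3 come out about 0.01 off (0.66 and 0.91), presumably because the displayed ε_t are rounded to two decimals. The second function checks a property of these particular weights: each α_t is smaller than the sum of the other two, so the weighted vote of the three stumps agrees with their plain majority vote everywhere.

```python
import math
from itertools import product

def alpha(eps):
    """AdaBoost vote weight: alpha_t = 0.5 * ln((1 - eps_t) / eps_t)."""
    return 0.5 * math.log((1 - eps) / eps)

toy_rounds = [(0.30, 0.42), (0.21, 0.65), (0.14, 0.92)]   # (eps_t, alpha_t) from the slides
for eps, a_slide in toy_rounds:
    print(f"eps={eps:.2f}  recomputed alpha={alpha(eps):.3f}  slide alpha={a_slide:.2f}")

def weighted_equals_majority(alphas):
    """True if sign(sum_t alpha_t h_t) equals the unweighted majority of the h_t
    for every combination of +-1 votes (assumes an odd number of voters)."""
    for votes in product((-1, 1), repeat=len(alphas)):
        weighted = 1 if sum(a * h for a, h in zip(alphas, votes)) > 0 else -1
        plain = 1 if sum(votes) > 0 else -1
        if weighted != plain:
            return False
    return True

print(weighted_equals_majority([0.42, 0.65, 0.92]))
```

With a larger third weight, for example 1.2 > 0.42 + 0.65, the third stump alone would decide every point where it disagrees with the other two, and the equivalence breaks.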

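Bounding training error

Training error of the final classifier is bounded by:

$$\mathrm{err}_{train}(H) = \frac{1}{m}\sum_{i=1}^{m} \mathbb{1}[H(x_i) \neq y_i] \;\le\; \frac{1}{m}\sum_{i=1}^{m} \exp(-y_i f(x_i)) \;=\; \prod_{t=1}^{T} Z_t$$

where $f(x) = \sum_{t=1}^{T} \alpha_t h_t(x)$ and $H(x) = \mathrm{sign}(f(x))$. The inequality holds term by term: if $H(x_i) \neq y_i$ then $y_i f(x_i) \le 0$, so $\exp(-y_i f(x_i)) \ge 1$.

Last step can be proved by unraveling the definition of $D_t(i)$:

$$D_{t+1}(i) = \frac{D_t(i)\exp(-y_i \alpha_t h_t(x_i))}{Z_t} \quad\Longrightarrow\quad D_{T+1}(i) = \frac{1}{m}\,\frac{\exp(-y_i f(x_i))}{\prod_{t=1}^{T} Z_t}$$

Since $D_{T+1}$ is a distribution, summing over $i$ gives $1 = \frac{1}{m}\sum_i \exp(-y_i f(x_i)) \big/ \prod_t Z_t$, which is the claimed equality.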

Optimizing $\alpha_t$

By minimizing $\prod_t Z_t$, we minimize this upper bound on the training error.

We can tighten this bound greedily, by choosing $\alpha_t$ on each round to minimize $Z_t$:

$$Z_t = \sum_{i=1}^{m} D_t(i)\exp(-y_i \alpha_t h_t(x_i))$$

Since $h_t(x_i) \in \{-1, 1\}$, we can find this explicitly:

$$\alpha_t = \frac{1}{2}\ln\left(\frac{1 - \epsilon_t}{\epsilon_t}\right)$$

Intuition: in each round, we adjust example weights so that the accuracy of the last rule of thumb $h_t$ drops to 50%.
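The explicit minimization, written out: split $Z_t$ over correctly and incorrectly classified examples ($D_t$ puts total mass $\epsilon_t$ on the latter), set the derivative to zero ($Z_t$ is convex in $\alpha$, so this is the minimum), and check the intuition that $h_t$'s weighted error under $D_{t+1}$ is exactly 1/2. The last line rewrites the optimal $Z_t$ with $\gamma_t = \tfrac12 - \epsilon_t$, the step behind the exponential bound on training error.

```latex
\begin{aligned}
Z_t(\alpha) &= \sum_{i:\,h_t(x_i) = y_i} D_t(i)\,e^{-\alpha} + \sum_{i:\,h_t(x_i) \ne y_i} D_t(i)\,e^{\alpha}
             = (1 - \epsilon_t)\,e^{-\alpha} + \epsilon_t\,e^{\alpha}, \\
\frac{dZ_t}{d\alpha} &= -(1 - \epsilon_t)\,e^{-\alpha} + \epsilon_t\,e^{\alpha} = 0
  \;\Longrightarrow\; \alpha_t = \frac{1}{2}\ln\frac{1 - \epsilon_t}{\epsilon_t},
  \qquad Z_t = 2\sqrt{\epsilon_t(1 - \epsilon_t)}, \\
\sum_{i:\,h_t(x_i) \ne y_i} D_{t+1}(i) &= \frac{\epsilon_t\,e^{\alpha_t}}{Z_t}
  = \frac{\sqrt{\epsilon_t(1 - \epsilon_t)}}{2\sqrt{\epsilon_t(1 - \epsilon_t)}} = \frac{1}{2}, \\
Z_t &= 2\sqrt{\left(\tfrac{1}{2} - \gamma_t\right)\left(\tfrac{1}{2} + \gamma_t\right)}
     = \sqrt{1 - 4\gamma_t^2} \;\le\; e^{-2\gamma_t^2}
     \qquad \left(\gamma_t = \tfrac{1}{2} - \epsilon_t,\ \text{using } 1 - x \le e^{-x}\right).
\end{aligned}
```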

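Weak learners to strong learners

Plugging the optimal $\alpha_t$ into the error bound:

$$\frac{1}{m}\sum_{i=1}^{m} \mathbb{1}[H(x_i) \neq y_i] \;\le\; \prod_{t=1}^{T} Z_t \;\le\; \exp\left(-2\sum_{t=1}^{T} (0.5 - \epsilon_t)^2\right)$$

Now, if each rule of thumb is (at least slightly) better than random:

$$\epsilon_t \le 0.5 - \gamma \quad \text{for } \gamma > 0,$$

then the training error drops exponentially fast:

$$\frac{1}{m}\sum_{i=1}^{m} \mathbb{1}[H(x_i) \neq y_i] \;\le\; \prod_{t=1}^{T} Z_t \;\le\; \exp\left(-2\sum_{t=1}^{T} (0.5 - \epsilon_t)^2\right) \;\le\; e^{-2T\gamma^2}$$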
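Since the training error is a multiple of 1/m, it must be exactly zero once $e^{-2T\gamma^2} < 1/m$. The sketch solves for the number of rounds; γ = 0.05 corresponds to the 55%-accurate weak learner from the boosting overview, and m = 1000 is a hypothetical training-set size.

```python
import math

def rounds_for_zero_training_error(m, gamma):
    """Smallest T with exp(-2 * T * gamma**2) < 1/m.

    Training error takes values in {0, 1/m, 2/m, ...}, so once the bound
    e^{-2 T gamma^2} falls below 1/m the training error must be exactly 0.
    """
    return math.floor(math.log(m) / (2 * gamma ** 2)) + 1

T = rounds_for_zero_training_error(m=1000, gamma=0.05)
print(T, math.exp(-2 * T * 0.05 ** 2))
```

So roughly 1,400 rounds of a 55%-accurate weak learner suffice to fit 1,000 training examples perfectly. This is a statement about training error only, not about generalization.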