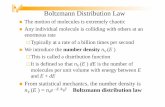

Notes on the Boltzmann distribution

Chemistry 422/522, Spring 2021

1 Recalling some simple thermodynamics

In many ways, the most basic thermodynamic quantity is the equilibrium constant, which in its simplest form is a ratio of concentrations, or probabilities:

$$K_{AB} = \frac{[B]}{[A]} = \frac{p_B}{p_A} \tag{1}$$

We use here the fact that concentrations are proportional to the probability of being in a certain state. A fundamental conclusion from thermodynamics relates the equilibrium constant to the free energy difference between A and B:

$$\Delta G^{\circ}_{AB} = -RT \ln K_{AB} \tag{2}$$

By itself, this is not of much use, since it just substitutes one variable for another. But thermodynamics adds an additional relation:

$$G = H - TS; \qquad \left(\frac{\partial G}{\partial T}\right)_p = -S \tag{3}$$

where the enthalpy $H$ is related to heat exchange and the entropy $S$ always increases for spontaneous processes (if we consider the entropy of everything, both the system we are studying and its surroundings).
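As a quick numerical illustration of Eqs. 1 and 2, the sketch below converts a pair of state probabilities into an equilibrium constant and a standard free energy difference. The populations (10% in A, 90% in B), the temperature, and the function name are made up for illustration, and the constant is taken as the ratio $p_B/p_A$ for the direction A → B.

```python
import math

R = 8.314462618  # gas constant, J/(mol K)

def delta_g_standard(p_a, p_b, temperature):
    """Free energy difference (J/mol) for A -> B from Eq. 2, using K = p_B / p_A (Eq. 1)."""
    k_eq = p_b / p_a
    return -R * temperature * math.log(k_eq)

# Hypothetical populations: 10% in state A and 90% in state B at 298.15 K.
dg = delta_g_standard(0.10, 0.90, 298.15)
print(f"K = {0.90 / 0.10:.1f}, dG = {dg / 1000:.2f} kJ/mol")
```

A nine-fold preference for B corresponds to only about 5 kJ/mol, which is why modest free energy differences can shift populations substantially.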

2 Molecular interpretation of entropy

Suppose we have a system with a set of possible states $\{i\}$, such that the probability of finding a particular state is $p_i$. Now we postulate that the entropy of such a system is:

$$S = -k \sum_i p_i \ln p_i \tag{4}$$

We can show that $S$ is a measure of randomness. For example, if only one state is populated, and all the rest have zero probability, then $S = 0$ for this very non-random state. Similarly, if there are a total of $W$ possible states, and each has equal probability $p_i = 1/W$, then $S = k \ln W$ (Eq. 5.1 in your text), and this is the largest entropy one can get. We can show this by considering the change in entropy upon changing the populations:

$$\frac{dS}{dp_i} = -k \sum_i \left( p_i \frac{d \ln p_i}{dp_i} + \ln p_i \right) \tag{5}$$

or

$$dS = -k \sum_i (1 + \ln p_i)\, dp_i \tag{6}$$

Now we know that probability must be conserved, so that $\sum_i p_i = 1$, or

$$\sum_i dp_i = 0 \tag{7}$$

Hence the first term in Eq. 6 (with the “1”) vanishes; furthermore, if you set $\ln p_i$ to be independent of $i$, it can be taken outside of the sum, and you can use Eq. 7 again to show that $dS = 0$, and hence that the entropy is maximized by a uniform distribution.
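The limiting cases discussed above can be checked numerically. The following is a minimal sketch of Eq. 4 with $k$ set to 1; the function name and the three example distributions over four states are illustrative choices, not part of the original notes.

```python
import math

def entropy(probs, k=1.0):
    """Eq. 4: S = -k * sum_i p_i ln p_i, using the convention 0 * ln 0 = 0."""
    return -k * sum(p * math.log(p) for p in probs if p > 0.0)

W = 4
single_state = [1.0, 0.0, 0.0, 0.0]   # only one state populated
uniform = [1.0 / W] * W               # every state equally likely
skewed = [0.7, 0.1, 0.1, 0.1]         # something in between

print("single state:", entropy(single_state))
print("uniform:     ", entropy(uniform), "vs ln W =", math.log(W))
print("skewed:      ", entropy(skewed))
```

The single-state case gives zero entropy, the uniform case reproduces $k \ln W$, and the skewed distribution falls between the two.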

3 The canonical distribution

Now, instead of having a single, isolated system (where the maximum entropy comes from a uniform distribution), we want to consider a large collection (“ensemble”) of identical subsystems, which can transfer energy amongst themselves. Now, in addition to the constraint on conservation of probability (Eq. 7), there is a constraint that the total internal energy must be conserved: $\sum_i p_i E_i = U$ (see Eq. 5.1 in Chap. III of the Slater handout or Eq. 5.12 in MDF), or

$$\sum_i E_i\, dp_i = 0 \tag{8}$$

Now, we want to maximize the entropy (find the most random state), subject to both conservation equations 7 and 8. We already saw that we can drop the first term in Eq. 6, and we can set $dS = 0$ for a maximum:

$$dS = -k \sum_i \ln p_i\, dp_i = 0 \tag{9}$$

Here, think of $\ln p_i$ as the coefficient of the $dp_i$ terms which are to be varied, and note that all three equations, 7, 8 and 9, have a similar form, with a sum where the $dp_i$ terms are multiplied by different coefficients. The only way to satisfy all three equations for arbitrary values of the $dp_i$ variables is if $\ln p_i$ is a linear combination of the coefficients in the constraint equations:

$$\ln p_i = \alpha - \beta E_i \tag{10}$$

(Proof: just substitute Eq. 10 into Eq. 9, and simplify using Eqs. 7 and 8.)

Some notes:

1. The minus sign in Eq. 10 is for convenience: $\beta$ can be any constant, and using a minus sign here rather than a plus sign will make $\beta$ positive later on. Also note that $\alpha$ and $\beta$ are (initially) unknown constants, often called Lagrange multipliers. There is an elegant discussion of these on pp. 68-72 of MDF.

2. You can also come to a similar conclusion starting from Eq. 4, by noting that entropy is additive (or “extensive”). Consider two uncorrelated systems that have total numbers of states $W_1$ and $W_2$. The total number of possibilities for the combined system is $W_1 W_2$. Then:

$$S = k \ln(W_1 W_2) = k \ln W_1 + k \ln W_2 = S_1 + S_2 \tag{11}$$

Basically, the logarithm function is the only one that combines addition and multiplication in this fashion. For a more detailed discussion, see Appendix E of MDF.

3. Think a little more about variations in the internal energy, $U = \sum_i p_i E_i$: then the full differential is:

$$dU = \sum_i E_i\, dp_i + \sum_i p_i\, dE_i \tag{12}$$

In the second term, the $p_i$ are unchanged; hence the entropy is unchanged; hence there is no heat transfer; hence this corresponds to work performed, say by an external potential that changes the individual $E_i$ values. The first term (rearrangement of probabilities among the various possible states) then corresponds to heat exchange. Since the probability changes $dp_i$ are infinitesimal, this must be a reversible heat exchange.
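Returning to Eq. 10, one way to convince yourself that the exponential form really is the constrained maximum is to perturb it along a direction that respects both constraints and watch the entropy drop. The sketch below assumes a hypothetical three-level system with equally spaced energies 0, 1, 2 (in units where $k = 1$) and $\beta = 1$; for these levels the direction $(1, -2, 1)$ satisfies both $\sum_i dp_i = 0$ and $\sum_i E_i\, dp_i = 0$.

```python
import math

def entropy(probs):
    """Entropy in units of k (Eq. 4)."""
    return -sum(p * math.log(p) for p in probs)

def boltzmann(energies, beta):
    """Distribution of the form ln p_i = alpha - beta * E_i, normalized to sum to 1."""
    weights = [math.exp(-beta * e) for e in energies]
    total = sum(weights)
    return [w / total for w in weights]

energies = [0.0, 1.0, 2.0]       # hypothetical, equally spaced levels
p0 = boltzmann(energies, beta=1.0)
direction = [1.0, -2.0, 1.0]     # keeps both sum(p) and sum(p * E) fixed

def perturbed(eps):
    """Move probability along the constraint-preserving direction."""
    return [p + eps * d for p, d in zip(p0, direction)]

for eps in (-0.02, -0.01, 0.0, 0.01, 0.02):
    p = perturbed(eps)
    u = sum(pi * e for pi, e in zip(p, energies))
    print(f"eps={eps:+.2f}  sum(p)={sum(p):.6f}  U={u:.6f}  S/k={entropy(p):.6f}")
```

The total probability and the mean energy stay fixed for every value of `eps`, while the entropy is largest at `eps = 0`.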

4 The connection to classical thermodynamics

All that remains is to figure out what $\alpha$ and $\beta$ must be. Getting $\alpha$ is easy by the conservation of total probability:

$$e^{\alpha} = 1/Z \quad \text{or} \quad \alpha = -\ln Z \tag{13}$$

where $Z$ (often also denoted by $Q$) is the partition function:

$$Z = \sum_j e^{-\beta E_j} \tag{14}$$

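There are a variety of ways to determine $\beta$. One way depends on some additional results from thermodynamics. Substitute Eq. 10 into the expression for $dS$ in Eq. 9 (no longer setting it to zero, since the system may now exchange heat):

$$dS = -k \sum_i dp_i\, (\alpha - \beta E_i) = k\beta \sum_i dp_i\, E_i = k\beta\, dq_{rev} \tag{15}$$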

Here we have removed the term involving $\alpha$ by our usual arguments involving conservation of probability; next we note that $\sum_i dp_i\, E_i$ is the amount of heat energy exchanged when the probabilities are changed by $dp_i$; since these are infinitesimal changes in probability, the heat exchanged is also infinitesimal, and hence must be reversible. Since $dS = dq_{rev}/T$, we find that $\beta = 1/kT$, and hence:

$$p_i = \frac{e^{-\beta E_i}}{Z} \tag{16}$$

What we have shown is that this Boltzmann distribution maximizes the entropy of a system in thermal equilibrium with other systems kept at a temperature $T$.
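In practice, Eqs. 14 and 16 amount to a few lines of code. The following is a minimal sketch, assuming energies in joules and a hypothetical two-level system whose gap equals $kT$ at 300 K; the function name and the energy shift used for numerical stability are choices made here, not something prescribed by the notes.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K

def boltzmann_populations(energies, temperature):
    """Return p_i = exp(-beta * E_i) / Z for a list of energies in joules."""
    beta = 1.0 / (K_B * temperature)
    e_min = min(energies)  # shifting all energies cancels in p_i but avoids overflow
    weights = [math.exp(-beta * (e - e_min)) for e in energies]
    z = sum(weights)       # partition function relative to the lowest level
    return [w / z for w in weights]

# Hypothetical two-level system with an energy gap of exactly kT at 300 K.
gap = K_B * 300.0
pops = boltzmann_populations([0.0, gap], 300.0)
print(pops, "ratio:", pops[1] / pops[0])
```

For a gap of $kT$ the upper level is populated by a factor of $e^{-1}$ relative to the lower one, whatever the absolute energies are.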


5 Some more connections to thermodynamics

We have introduced the Gibbs free energy, $G = H - TS$, which is useful for the most common task of interpreting experiments at constant pressure. There is an analogous “constant volume” free energy $A$ (usually called the Helmholtz free energy), which is defined as $A = U - TS$. It is instructive to use Eq. 16 to compute $A$:

$$\begin{aligned}
A &= U - TS \\
  &= \sum_i p_i E_i + kT \sum_i p_i \ln p_i \\
  &= \sum_i \frac{e^{-\beta E_i}}{Z} E_i + kT \sum_i \frac{e^{-\beta E_i}}{Z} \left( -\beta E_i - \ln Z \right) \\
  &= \sum_i \frac{e^{-\beta E_i}}{Z} \left( E_i - E_i - kT \ln Z \right)
\end{aligned}$$

or

$$A = -kT \ln Z \tag{17}$$

(Note that $H = U + pV$, and that for (nearly) incompressible liquids there is (almost) no pressure-volume work. Hence, in liquids $G \simeq A$, just like $H \simeq U$.)
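The result of Eq. 17 is easy to verify numerically: compute the Helmholtz free energy once from its definition (internal energy minus temperature times entropy, using Boltzmann populations) and once from the logarithm of the partition function. The sketch below uses a hypothetical set of four levels and works in units where $k = 1$, so that `kT` is simply a number.

```python
import math

def helmholtz_two_ways(energies, kT):
    """Return (U - T*S, -kT ln Z) for a discrete set of energy levels."""
    beta = 1.0 / kT
    weights = [math.exp(-beta * e) for e in energies]
    z = sum(weights)                                   # partition function, Eq. 14
    probs = [w / z for w in weights]                   # Boltzmann populations, Eq. 16
    u = sum(p * e for p, e in zip(probs, energies))    # internal energy
    s_over_k = -sum(p * math.log(p) for p in probs)    # entropy divided by k, Eq. 4
    a_from_definition = u - kT * s_over_k
    a_from_partition = -kT * math.log(z)               # Eq. 17
    return a_from_definition, a_from_partition

levels = [0.0, 0.4, 1.0, 2.5]   # hypothetical energy levels
a_def, a_z = helmholtz_two_ways(levels, kT=0.6)
print(a_def, a_z)
```

The two numbers agree to rounding error for any choice of levels and temperature, as the derivation requires.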

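The free energy is an extremely important quantity, and hence the partition function $Z$ is also extremely important. Other thermodynamic formulas follow:

$$\begin{aligned}
A &= U - TS = -kT \ln Z \\
S &= -(\partial A/\partial T)_V = k \ln Z + kT\, (\partial \ln Z/\partial T)_V \\
U &= -(\partial \ln Z/\partial \beta); \qquad C_V = T \left( \frac{\partial^2 (kT \ln Z)}{\partial T^2} \right)
\end{aligned} \tag{18}$$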
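The relation between the internal energy and the derivative of $\ln Z$ with respect to $\beta$ can be checked with a finite difference. This is only a sketch: the energy levels, the value of $\beta$, and the step size are arbitrary choices, and the units are such that $k = 1$.

```python
import math

def ln_z(energies, beta):
    """Logarithm of the partition function for a discrete set of levels."""
    return math.log(sum(math.exp(-beta * e) for e in energies))

def mean_energy_direct(energies, beta):
    """U = sum_i p_i E_i with Boltzmann populations."""
    weights = [math.exp(-beta * e) for e in energies]
    z = sum(weights)
    return sum(w * e for w, e in zip(weights, energies)) / z

def mean_energy_from_ln_z(energies, beta, step=1e-5):
    """U = -d(ln Z)/d(beta), estimated with a central finite difference."""
    return -(ln_z(energies, beta + step) - ln_z(energies, beta - step)) / (2.0 * step)

levels = [0.0, 0.5, 1.3, 2.0]   # hypothetical energy levels
beta = 1.2
print(mean_energy_direct(levels, beta), mean_energy_from_ln_z(levels, beta))
```

The same finite-difference idea applies to the entropy and heat capacity expressions, with temperature rather than $\beta$ as the variable.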

6 Connections to classical mechanics

We have implicitly been considering a discrete set of (quantum) states, $E_i$, and the dimensionless partition function that sums over all states:

$$Z_Q = \sum_i e^{-\beta E_i} \tag{19}$$

How does this relate to what must be the classical quantity, integrating over all phase space:

$$Z_C = \int e^{-\beta H(p,q)}\, dp\, dq \tag{20}$$

$Z_C$ has units of $(\text{energy} \cdot \text{time})^{3N}$ for $N$ atoms. The Heisenberg principle states (roughly): $\Delta p\, \Delta q \simeq h$, and it turns out that we should “count” classical phase space in units of $h$:

$$Z_Q \simeq Z_C / h^{3N} \tag{21}$$

For $M$ indistinguishable particles, we also need to divide by $M!$. This leads to a discussion of Fermi, Bose and Boltzmann statistics....
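The idea of counting phase space in units of $h$ can be tested on a one-dimensional harmonic oscillator, chosen here only as a convenient example. Its quantum levels are $(n + 1/2)\hbar\omega$, and its classical phase-space integral divided by $h$ works out to $kT/\hbar\omega$. The sketch below compares the two as a function of the dimensionless ratio $u = \hbar\omega/kT$; the function names and the number of levels summed are illustrative.

```python
import math

def z_quantum(u, n_levels=20000):
    """Sum over harmonic-oscillator levels E_n = (n + 1/2) hbar*omega, with u = hbar*omega / kT."""
    return sum(math.exp(-u * (n + 0.5)) for n in range(n_levels))

def z_classical_over_h(u):
    """Classical phase-space integral divided by h; for this oscillator it equals 1/u."""
    return 1.0 / u

for u in (0.01, 0.1, 1.0, 5.0):
    ratio = z_quantum(u) / z_classical_over_h(u)
    print(f"u = {u:5.2f}   quantum / (classical / h) = {ratio:.6f}")
```

When the level spacing is small compared with $kT$ the ratio approaches 1, and it falls well below 1 once the spacing exceeds $kT$.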


7 Separation of coordinates and momenta

In classical mechanics, with ordinary potentials, the momentum integrals always factor out:

$$Z = h^{-3N} \int e^{-\beta p^2/2m}\, dp \int e^{-\beta V(q)}\, dq \tag{22}$$

The momentum integral can be done analytically, but will always cancel in a thermodynamic cycle; the coordinate integral is often called the configuration integral, $Q$. The momentum terms just give ideal gas behavior, and the excess free energy (beyond the ideal gas) is just

$$A = -kT \ln Q \tag{23}$$

The momentum integrals can be done analytically:

$$Z = Q \prod_{i=1}^{N} \Lambda_i^{-3}; \qquad \Lambda_i = h/(2\pi m_i k_B T)^{1/2} \tag{24}$$
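To get a feel for the size of the thermal wavelength, the sketch below evaluates Planck's constant divided by the square root of $2\pi m k_B T$ for a single atom. Argon at 300 K is an arbitrary example chosen here, and the function name is hypothetical.

```python
import math

H_PLANCK = 6.62607015e-34   # Planck constant, J s
K_B = 1.380649e-23          # Boltzmann constant, J/K
AMU = 1.66053906660e-27     # atomic mass unit, kg

def thermal_wavelength(mass_kg, temperature):
    """Thermal de Broglie wavelength h / sqrt(2 pi m k_B T), in meters."""
    return H_PLANCK / math.sqrt(2.0 * math.pi * mass_kg * K_B * temperature)

mass_argon = 39.948 * AMU   # argon is used only as an illustration
lam = thermal_wavelength(mass_argon, 300.0)
print(f"Lambda(Ar, 300 K) = {lam * 1e10:.3f} Angstrom")
```

The result is about 0.16 Å, far smaller than typical interatomic distances, which is why the classical treatment of translational motion works so well at room temperature.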

8 Molecular partition functions

Ideas in this section come from D.R. Herschbach, H.S. Johnston and D. Rapp, Molecular Partition Functions in Terms of Local Properties, J. Chem. Phys. 31, 1652-1661 (1959).

$$Q = \prod_{i=1}^{N} V_i \tag{25}$$

• Overall translation and rotation: Since there is no potential for translation or rotation, the integration over the “first five” degrees of freedom always gives $8\pi^2 V$ (for non-linear molecules).

• Harmonic vibrations: Consider a non-linear triatomic where $U = \frac{1}{2} k_r (\Delta r)^2 + \frac{1}{2} k_{r'} (\Delta r')^2 + \frac{1}{2} k_\theta (\Delta\theta)^2$. Then we get:

$$V_1 = V; \quad V_2 = 4\pi r^2 (2\pi k_B T/k_r)^{1/2}; \quad V_3 = 2\pi r' \sin\theta\, (2\pi k_B T/k_{r'})^{1/2} (2\pi k_B T/k_\theta)^{1/2}$$

$V_2$ is a spherical shell centered on atom 1; its thickness is a measure of the average vibrational amplitude of 1-2 stretching. $V_3$ is a torus with axis along the extension of the 1-2 bond, and a cross section that is the product of a 2-3 stretch amplitude and a 1-2-3 bond bend.

• Building up molecules one atom at a time


• Quantum corrections: The classical expressions for $V_i$ will fail if a dimension becomes comparable to or less than $\Lambda_i$. For a harmonic oscillator, let $u_i = \hbar\omega/k_B T$; then the quantum corrections will be:

$$Q_q/Q_c = \prod_{i=1}^{3N-6} \Gamma(u_i) \tag{26}$$

$$\Gamma(u) = u \exp(-u/2)\, (1 - e^{-u})^{-1} \tag{27}$$

For frequencies less than 300 cm$^{-1}$ (at room temperature), the error is less than 10%, but can become substantial at higher frequencies. Furthermore, $u$ is mass dependent, whereas $Q_c$ is not: hence isotope effects are quantum dynamical effects.

• An equilibrium constant involves the difference of two free energies, or the ratio of two partition functions:

$$\frac{Q(\text{products})}{Q(\text{reactants})} = \prod_i \frac{V_i(\text{products})}{V_i(\text{reactants})} \tag{28}$$
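Returning to the quantum correction factor of Eq. 27, the sketch below tabulates it for a few vibrational wavenumbers. It assumes a temperature of 300 K and converts a wavenumber in cm$^{-1}$ to the dimensionless ratio of vibrational quantum to thermal energy using the second radiation constant $hc/k_B$; the chosen wavenumbers and function names are illustrative.

```python
import math

HC_OVER_K = 1.438776877  # second radiation constant hc/k_B, in cm K

def gamma_correction(u):
    """Quantum correction factor: u * exp(-u/2) / (1 - exp(-u))."""
    return u * math.exp(-u / 2.0) / (1.0 - math.exp(-u))

def u_from_wavenumber(wavenumber_cm, temperature):
    """Dimensionless u = h c nu / (k_B T) for a vibrational wavenumber in cm^-1."""
    return HC_OVER_K * wavenumber_cm / temperature

T = 300.0  # assumed temperature, K
for wavenumber in (100.0, 300.0, 1000.0, 3000.0):
    u = u_from_wavenumber(wavenumber, T)
    print(f"{wavenumber:7.0f} cm^-1   u = {u:6.3f}   Gamma = {gamma_correction(u):.4f}")
```

At 300 K the factor stays within about 10% of unity up to roughly 300 cm$^{-1}$ and drops sharply for stiffer modes such as bond stretches.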
