New VISUAL SERVO OF TOKAMAK RELEVANT REMOTE HANDLING … · 2018. 9. 27. · the implementation of...


Pramit Dutta et al.


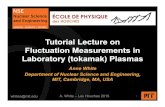

VISUAL SERVO OF TOKAMAK RELEVANT REMOTE HANDLING SYSTEMS USING NEURAL NETWORK ARCHITECTURE

P. DUTTA, N. RASTOGI, R. R. KUMAR, J. CHAUHAN, MANOAHSTEPHEN M., K. K. GOTEWAL Institute for Plasma Research Ahmedabad, India Email: [email protected]

Abstract

Tokamak inspection and maintenance require different Remote Handling (RH) systems, such as long-reach planar manipulators and multi-DOF hyper-redundant arms. As no structural support can be provided inside the tokamak, these RH systems are usually cantilevered and have a number of articulations to traverse the toroidal geometry of the tokamak. The kinematic configuration is thus different from that of conventional manipulators. Due to the long cantilevered length, heavy payload handling, structural deformations, gearbox backlash and control system inaccuracies, the final pose of the end effector may vary from the desired pose when only a servo feedback loop is used. Such inaccuracies can only be eliminated by using a Visual Servo (VS) technique, where the inverse kinematics and trajectory planning are done based on visual feedback from cameras mounted on the RH system.

The paper gives a fresh approach to visual servo for tokamak RH systems using an artificial neural network (NN) architecture. A multi-layered feed-forward NN is trained using the joint angle vector as input and the corresponding feature vector(s) of markers in a sample tile as output. The trained NN can thus predict the joint configurations for given feature vectors. This eliminates the requirement of a closed-form inverse kinematic solution of the manipulator and of camera calibration. The NN architecture and the proposed controller are validated and presented using simulation on a 5-DOF remote handling manipulator. A real-time implementation methodology for the NN based controller is also discussed.

1. INTRODUCTION

Visual servo relates to a field in robotics where computer vision data is used along with the servo loop to control the robot motion. A camera mounted on the robot end-effector feeds visual data of the robot environment and target to the controller. Classical visual servo control techniques use image processing algorithms to generate an error vector, based on image features such as edges, blobs or key-points, between the current view of the target and the desired view [1], [2]. A suitable control law can then compute a trajectory, and subsequently the joint velocity matrix, to attain the desired final pose of the end-effector with respect to the target. Such a vision based control architecture is effective when the robot needs to interact with a dynamic and unstructured environment, and is important in applications like aerial manipulators, space robotics, collaborative robots, and humanoids.

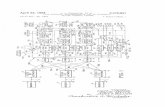

A tokamak is used to produce magnetically controlled high temperature plasma inside a toroidal chamber. The tokamak in-vessel components, such as tiles, divertors, control coils, diagnostics, shielding blankets and tritium breeding blankets, are subjected to the harsh plasma environment during tokamak operation and degrade over the lifetime of the tokamak. Scheduled maintenance is therefore needed, involving crucial operations like periodic visual inspections, replacement of tiles and diagnostics, and upgrades of in-vessel coil systems. These inspection and maintenance activities inside the tokamak are carried out remotely using specialized robotic equipment controlled by one or more operators from a control room located far away from the reactor building. Such robotic equipment is collectively known as Remote Handling (RH) systems [3]–[6]. Due to the long cantilevered length, structural deformations, gearbox backlash and control system inaccuracies, the final pose of the tool center point (TCP) may vary from the desired pose when only a servo feedback loop is used. This is illustrated in Fig. 1. A standard servo controller will assume the pre-determined location of the TCP as per feedback from the position sensors. A remote operator can rectify this inaccuracy manually by relying on self-judgment and the camera view. An alternative and more robust mechanism is to integrate a visual servo mechanism into the servo controller, which removes such positional errors by using active visual feedback from a camera mounted on the manipulator TCP and continuously guiding the TCP till the final desired pose is achieved.

IAEA-CN-123/45 [Right hand page running head is the paper number in Times New Roman 8 point bold capitals, centred]

Fig. 1. Illustration of Difference of Actual Configuration to Expected Configuration of RH system

In recent years, a lot of research has been conducted to improve visual servo techniques using machine learning approaches. Machine learning can improve visual servo by providing it with flexibility and adaptability in unstructured environments. In this paper, an assessment of visual servo control is presented for a tokamak in-vessel inspection system using deep neural networks. Section II of this paper reviews previous work on the implementation of visual servomechanisms using neural networks. Section III introduces the kinematics of the ARIA manipulator. Section IV presents the proposed neural network based visual servo strategy, the training data set generation, the sensitivity study and the implementation of a CNN-regression architecture. Results of visual servo using the neural network architecture on the ARIA system are presented in Section V. Section VI provides a conclusion and the future scope of the work conducted.

2. REVIEW OF NEURAL NETWORK BASED VISUAL SERVO

Visual servo, in a broader sense, refers to the field of robotics that uses computer vision data in the servo control loop to manipulate the motion of a robot [7]. The goal of a visual servo system is to minimize the features error defined by,

$$
e(t) = s(m(t), a) - s^* \tag{1}
$$

Here, the term $s^*$ contains the final values of the image features. The vector $s(m(t), a)$ provides the visual features computed during the task using the image measurement $m(t)$ and additional knowledge about the system $a$. The choice of the vectors in equation (1) is defined by the type of visual servomechanism being considered. Visual servomechanisms are classified in two ways. Firstly, the mounting of the camera with respect to the manipulator defines the basis of the visual features available for processing. The camera can either be mounted on the manipulator, referred to as ‘eye-in-hand’, or outside the manipulator body in the workspace of the system, referred to as ‘eye-to-hand’ [7], [8]. A second classification of visual servomechanisms is based upon the features used in the control law, as introduced by Sanderson and Weiss [9]. A Position Based Visual Servo (PBVS) uses the features extracted from the image along with the geometric data of the target to estimate the pose of the target with respect to the camera or robot TCP. PBVS is more suited to applications wherein the target has a 3D orientation with respect to the camera. In Image Based Visual Servo (IBVS), the error in image features between the current and final images is used to generate the joint velocity skew matrix and no pose estimation is done. The IBVS approach is faster than PBVS as the computing time for pose estimation is eliminated. The controller design for IBVS is, however, complicated as the process is non-linear and highly coupled [2], [10]. Since a camera mounted on a robot entering the tokamak will only see the front surface of the tiles, IBVS is the more suitable scheme for visual servo of tokamak RH systems.

For classical IBVS schemes, the vector $s^*$ of equation (1) is a list of image plane coordinates of a certain set of visual features extracted from the image seen by the camera at the final pose of the TCP. These features can be the centers of mass of predefined blobs, QR markers, or edges on a surface [11]–[13]. The vector $s(t)$ represents the list of image plane coordinates of the same features as acquired from the current view. As described in detail in [7], [8], for a fixed target and a moving camera mounted on the TCP, the relation between the time variation of the vector $s$ and the spatial velocity of the camera $v_c$ leads to the control law

$$
v_c = -\lambda L_s^{+} (s - s^*) \tag{2}
$$

Here, $L_s^{+}$ is the pseudo-inverse of the feature Jacobian that links the temporal variation of the visual features to the camera velocity. This classical closed-form IBVS mechanism requires image feature extraction and tracking of these features while the camera is moving, which is an error-prone task [14]. In addition, the interaction matrix needs to be updated at each iteration of the camera movement, which is computationally costly.
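To make the cost of the classical scheme concrete, the following is a minimal sketch of equation (2) for point features. It assumes the standard point-feature interaction matrix from the visual servoing literature [7], normalized image coordinates, and known feature depths; the function names, gain and sample values are illustrative and are not taken from the paper.

```python
import numpy as np

def interaction_matrix(points, depths):
    """Stack the 2x6 interaction matrices of normalized image points (x, y) at depth Z."""
    rows = []
    for (x, y), Z in zip(points, depths):
        rows.append([-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y])
        rows.append([0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x])
    return np.array(rows)

def ibvs_velocity(s, s_star, depths, lam=0.5):
    """Camera twist (vx, vy, vz, wx, wy, wz) from equation (2): v = -lam * pinv(L) @ (s - s*)."""
    s = np.asarray(s, dtype=float)
    s_star = np.asarray(s_star, dtype=float)
    # The matrix is rebuilt from the current features at every call,
    # which is the per-iteration update mentioned in the text.
    L = interaction_matrix(s.reshape(-1, 2), depths)
    return -lam * np.linalg.pinv(L) @ (s - s_star)

# Illustrative values (assumed): four features at 1 m depth, slightly offset from the goal.
s_goal = np.array([0.1, 0.1, -0.1, 0.1, -0.1, -0.1, 0.1, -0.1])
s_now = s_goal + 0.02
twist = ibvs_velocity(s_now, s_goal, depths=[1.0] * 4)
```

Every iteration needs the tracked feature coordinates and their depths, which is the dependency the neural network approaches reviewed next try to remove.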

An alternative method for mapping the TCP pose to the view of the target as seen by the camera is direct visual servo using neural networks. This approach was introduced in [15], where a CMACS neural network was used to map the change in joint angles required to achieve the desired pose from visual cues in the camera image. The methodology has since been extended using convolutional neural networks to directly map the entire image to the pose of the robot. This eliminates the image processing and tracking of features, and thus removes the computation of the feature Jacobian. The work presented in [16] uses the FlowNet architecture [17] to predict the relative pose between the desired and the current images using a Euclidean loss function, given by:

$$
\mathrm{loss}(I, I^*) = \lVert \hat{x} - x \rVert_2 + \beta \lVert \hat{q} - q \rVert_2 \tag{3}
$$

The authors have also developed a 3D positioning visual servo scene dataset. Similar work has been carried out in [18], where a four-layer feed-forward artificial neural network is used to map the target Cartesian position, the current arm joint position and the one-step-back rate of change of joint position to the next set of joint positions. The validation of the developed neural network is provided on a PUMA560 robotic manipulator. Bateux et al. [14] have proposed a model to map the raw image of a target to the relative pose between the current and reference camera frames. The authors show that it is possible to repurpose a pre-trained deep convolutional neural network for object classification, such as AlexNet [19], for visual servo by adding a regression layer. A novel method of developing a new dataset for pose training is also presented. Simulated effects of target illumination and perturbations are also incorporated in the dataset. The authors show that by using a CNN-regression model with a Euclidean cost function such as equation (3), the standard IBVS optimization problem of minimizing the image feature error can be changed to minimizing the pose error of the TCP, which has better convergence, similar to PBVS.
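As a small worked illustration of the Euclidean loss in equation (3), the sketch below evaluates it for a single predicted pose. It assumes the first term acts on a position vector and the second on a rotation vector, with $\beta$ weighting the rotation part; the function name and sample numbers are illustrative only.

```python
import numpy as np

def pose_loss(x_pred, x_true, q_pred, q_true, beta=1.0):
    """Euclidean pose loss: ||x_pred - x_true||_2 + beta * ||q_pred - q_true||_2."""
    pos_err = np.linalg.norm(np.asarray(x_pred, dtype=float) - np.asarray(x_true, dtype=float))
    rot_err = np.linalg.norm(np.asarray(q_pred, dtype=float) - np.asarray(q_true, dtype=float))
    return pos_err + beta * rot_err

# Position error of norm 5 and rotation error of norm 2, weighted by beta = 0.5.
example = pose_loss([3.0, 4.0, 0.0], [0.0, 0.0, 0.0],
                    [0.0, 0.0, 2.0], [0.0, 0.0, 0.0], beta=0.5)
```

Because translation and rotation have different units and ranges, the choice of $\beta$ decides how much each term contributes to the gradient during training.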

3. DESIGN OF CNN BASED VISUAL SERVO SCHEME

3.1. Data Set Generation and Network Model

A neural network based visual servo scheme, as described in Section II, can eliminate the requirement of the feature Jacobian and map the TCP pose error directly to the visual feedback from the camera. A CNN architecture can further improve the mapping by linking the entire scene of the camera feedback to the robot pose error, thus eliminating the error-prone image processing requirements. CNN models are mostly used for object classification problems, where the network uses a ‘Softmax’ objective function such that the output of the network is a probability distribution over the concerned classes [17], [19], [22]–[24]. In the case of visual servo, however, the output of the CNN is expected to be the difference between the desired pose of the TCP and the current pose of the TCP. This can be achieved by using a Euclidean cost function as defined in equation (3) instead of the ‘Softmax’ function. Further, as pose is a continuous entity, the optimization problem is one of regression instead of classification. Thus, the output of the CNN needs to be fed to a final regression layer for fitting the relative pose of the TCP.

Fig. 2 shows the controller architecture for implementing a visual servomechanism with a neural network. The visual feedback from the camera is directly fed to the neural network for estimation of the pose error between the current and desired location of the TCP. The error is used in the visual servo control law to predict the joint velocities, using equations (2) and (5), to be applied to the servo controller. The new image from the camera at the updated pose is fed back to the model.

Fig. 3 shows the strategy adopted for developing the training data set. The training dataset is created starting from a single image $I_0$ at the reference pose $q_0^0$ such that,

$$
q_i^0 = (t_i^0, \theta u_i^0) = [t_{x,i}^0, t_{y,i}^0, t_{z,i}^0, \theta u_{x,i}^0, \theta u_{y,i}^0, \theta u_{z,i}^0] \tag{6}
$$

Here, $q_i^0$ is the relative pose vector between the current pose of the TCP and the desired pose. $t_i^0$ represents the vector form of the translation matrix and $\theta u_i^0$ is the angle/axis representation of the rotation matrix [14]. The vector $q_i^0$ is used as the label for the image during training. For accurate functioning of the proposed deep neural network, it is important to train the network with sufficient image-label pairs so that the regression layer can fit the pose for any new image. The image of the tile at the reference pose is for a camera placed at a height of 0.3 m from the tile surface. To generate subsequent pose images, the camera is incrementally oriented as per the table below, and the image file, file path and $q_i^0$ are recorded for each pose. A total of ~30000 image-label pairs are generated. For training the network, 29000 pairs are used, 500 pairs are used for validation during training, and another 500 randomly selected image-label pairs are kept for testing of the trained model after the training.
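The label construction and the data split described above can be sketched as follows. This is an assumed implementation, not the authors' code: the six-element label is built from a translation vector and the angle/axis vector of a rotation matrix, and the split sizes follow the 29000/500/500 figures in the text. All function names are hypothetical.

```python
import numpy as np

def theta_u_from_rotation(R):
    """Angle/axis vector theta*u of a rotation matrix (valid for angles below pi)."""
    R = np.asarray(R, dtype=float)
    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    if theta < 1e-9:
        return np.zeros(3)  # no rotation: the axis is undefined, the vector is zero
    axis = np.array([R[2, 1] - R[1, 2],
                     R[0, 2] - R[2, 0],
                     R[1, 0] - R[0, 1]]) / (2.0 * np.sin(theta))
    return theta * axis

def pose_label(t, R):
    """Six-element label [tx, ty, tz, theta*ux, theta*uy, theta*uz]."""
    return np.concatenate([np.asarray(t, dtype=float), theta_u_from_rotation(R)])

def split_indices(n_total, n_train, n_val, n_test, seed=0):
    """Random, non-overlapping train/validation/test index sets."""
    if n_train + n_val + n_test > n_total:
        raise ValueError("split sizes exceed the number of samples")
    order = np.random.default_rng(seed).permutation(n_total)
    return (order[:n_train],
            order[n_train:n_train + n_val],
            order[n_train + n_val:n_train + n_val + n_test])

# Example: camera displaced by 0.05 along x and rotated 0.3 rad about the optical (z) axis.
c, s = np.cos(0.3), np.sin(0.3)
label = pose_label([0.05, 0.0, 0.0], [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
train_idx, val_idx, test_idx = split_indices(30000, 29000, 500, 500)
```

The angle/axis form keeps the rotation part of the label to three numbers with no unit-norm constraint, which suits a plain regression output.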

Fig. 2. CNN-Regression based Visual Servo Mechanism for Tokamak Invessel Inspection System

The implemented CNN-regression model is as shown in Fig. 4. The CNN-regression model is implemented using ‘TFlearn’[25].

Fig. 3. Dataset Generation for Network Training

The network consists of five convolution layers with kernel size, number of filters and activations as shown in Table 2. The output of the last convolution layer is provided to a fully connected (FC) layer with 1024 neurons. The regression layer (RL) is implemented to give six outputs for $q_i^0$ with a Euclidean norm objective function. A dropout is used between the FC and RL layers to reduce overfitting.
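The tail of the network (FC layer, dropout, six-output regression layer) can be illustrated with a framework-free sketch. This is not the TFLearn model used in the paper: the ReLU activation, the inverted-dropout formulation, the random weights and the flattened feature size of 256 are all assumptions made for illustration.

```python
import numpy as np

def regression_head(features, w_fc, b_fc, w_out, b_out, keep_prob=1.0, rng=None):
    """FC layer -> dropout -> linear regression layer producing a 6-element pose."""
    hidden = np.maximum(features @ w_fc + b_fc, 0.0)  # FC layer with ReLU (assumed activation)
    if keep_prob < 1.0:
        rng = np.random.default_rng() if rng is None else rng
        mask = rng.random(hidden.shape) < keep_prob
        hidden = hidden * mask / keep_prob            # inverted dropout, training time only
    return hidden @ w_out + b_out                     # linear output, no Softmax

# Hypothetical sizes: 256 flattened convolution features, 1024 FC neurons, 6 outputs.
rng = np.random.default_rng(0)
w_fc, b_fc = rng.normal(scale=0.01, size=(256, 1024)), np.zeros(1024)
w_out, b_out = rng.normal(scale=0.01, size=(1024, 6)), np.zeros(6)
batch = rng.normal(size=(4, 256))
poses = regression_head(batch, w_fc, b_fc, w_out, b_out)
```

The output layer is linear because the six pose components are unbounded continuous values, unlike the class probabilities produced by a Softmax layer.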

Fig. 4. CNN Regression Model Implemented for Visual Servo

3.2. Sensitivity Study and Network Training

As designing a CNN model requires selecting the number of layers and setting the hyper-parameters, it is important to carry out a sensitivity analysis to understand the impact of varying the hyper-parameters on the accuracy and loss of the final network. This study trains the network only once per case, for a small number of epochs (twenty in this study), to understand the response of the network. The parameters selected here are the input image size, $\beta$ (the harmonizing factor of the Euclidean norm in the objective function), the learning rate, the dropout and the batch size. The results of the sensitivity study are shown in Fig. 5.
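One way to organise such a study is to vary a single hyper-parameter at a time around a baseline and run each case once for twenty epochs. The sketch below only generates the list of cases. The one-at-a-time scheme and all candidate values are assumptions for illustration, since the text does not list the values that were swept; the baseline uses the final training parameters reported for this network.

```python
def one_at_a_time(baseline, candidates):
    """Return (name, value, config) cases that each change one hyper-parameter."""
    runs = []
    for name, values in candidates.items():
        for value in values:
            config = dict(baseline)  # copy so every case starts from the baseline
            config[name] = value
            runs.append((name, value, config))
    return runs

baseline = {"image_size": 250, "beta": 1.0, "learning_rate": 1e-4,
            "dropout": 0.8, "batch_size": 150, "epochs": 20}

# Hypothetical candidate values, not taken from the paper.
candidates = {
    "image_size": [100, 150, 250],
    "beta": [0.1, 1.0, 10.0],
    "learning_rate": [1e-3, 1e-4, 1e-5],
    "dropout": [0.5, 0.8],
    "batch_size": [50, 150],
}

cases = one_at_a_time(baseline, candidates)
```

Each case would then be passed to the training routine and its final accuracy and loss recorded against the parameter that was changed.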

Fig. 5. Sensitivity Study Results

As seen from the figure, the dropout and batch size have little effect on the accuracy or loss. Image size, however, greatly affects the accuracy and loss of the network. The accuracy of the network increases with the image size, but so does the training time for a given number of epochs. The harmonizing factor $\beta$ has more impact on the loss of the network: a lower $\beta$ reduces the network loss. The learning rate affects both the accuracy and the loss. A learning rate of 1e-4 increases the accuracy with low network loss. Based on the above study, the final CNN-regression network is trained with the parameters shown in Table 3. The graphs for accuracy and validation are shown in Fig. 6. The trained network reaches a validation accuracy of 90% and a loss of ~0.5.

TABLE 3. PARAMETERS FOR FINAL NETWORK TRAINING

| No. | Parameter | Value |
|---|---|---|
| 1 | Training samples | 29000 |
| 2 | Validation samples | 500 |
| 3 | Batch size | 150 |
| 4 | Learning rate | 1e-4 |
| 5 | Dropout at layer 7 (FC layer) | 0.8 |
| 6 | Image size | 250x250 |
| 7 | Maximum number of training epochs | 500 |
| 8 | $\beta$, the harmonizing factor | 1.0 |
| 9 | Optimizer | ADAM |

Fig. 6. Final CNN-Regression Model Training

The final trained CNN-regression network is separately tested for Euclidean loss using the remaining image-label pairs. The output of the testing is as shown in Fig. 7. The X-axis gives the image sample number and the Y-axis gives the error in Euclidean norm between the predicted pose from the model and actual pose from the label. As shown, the maximum error is less than 0.2 for the given sample. The network can be thus considered sufficiently trained for implementation of visual servo.


Fig. 7. Testing the Network with Separate Test Data

3.3. Visual Servo Results on In-vessel Inspection Arm for Tokamak

The manipulator used in the present activity is called the Articulated Robotic Inspection Arm (ARIA). The system is shown in Fig. 8. The manipulator is developed for carrying out inspection and maintenance tasks inside SST-1 like tokamak systems. The manipulator has six degrees of freedom (DOF), which include three yaw joints (Joint 1-3), one rotational joint (Joint 4) and one yaw/pitch joint (Joint 5). The system is mounted on a linear drive mechanism of ~2 m stroke length to deploy the system inside the tokamak geometry.

Fig. 8. ARIA Articulated Arm

The ARIA manipulator has a design payload of 25 kg at a cantilevered distance of ~1.8 m and can be remotely controlled using a virtual reality interface [6], [20], [21]. To implement the visual servomechanism on the system, a camera is mounted at the end-flange of Joint-5. The kinematic model and joint coordinate frames are shown in Fig. 4. The DH parameters of the ARIA manipulator are given in Table 3.

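TABLE III. DH PARAMETERS OF THE ARIA ARM

| Link | $\alpha_i$ (deg) | $a_i$ (mm) | $d_i$ (mm) | $\theta_i$ (deg) |
|---|---|---|---|---|
| L1 | 0 | 0 | $d_1$ | 90 |
| L2 | 90 | 425.0 | 0 | $\theta_2 + 90$ |
| L3 | 0 | 402.0 | 0 | $\theta_3$ |
| L4 | -90 | 0 | 0 | $\theta_4 - 90$ |
| L5 | 90 | 0 | 865.0 | $\theta_5 - 90$ |
| L6 | 0 | 85.0 | 0 | $\theta_6 + 90$ |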
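The DH table can be turned into a forward kinematics routine as sketched below. The sketch assumes the standard (distal) DH convention, angles in degrees and lengths in millimetres; the paper does not state the convention or the units explicitly, so these are assumptions, and the function names are hypothetical.

```python
import numpy as np

def dh_transform(alpha_deg, a, d, theta_deg):
    """Homogeneous transform of one link in the standard (distal) DH convention."""
    al, th = np.radians(alpha_deg), np.radians(theta_deg)
    ca, sa, ct, st = np.cos(al), np.sin(al), np.cos(th), np.sin(th)
    return np.array([
        [ct, -st * ca,  st * sa, a * ct],
        [st,  ct * ca, -ct * sa, a * st],
        [0.0,      sa,       ca,      d],
        [0.0,     0.0,      0.0,    1.0],
    ])

def aria_forward_kinematics(d1, thetas_deg):
    """Base-to-flange transform; thetas_deg holds (theta2, ..., theta6) in degrees."""
    t2, t3, t4, t5, t6 = thetas_deg
    rows = [  # (alpha, a, d, theta) per link, copied from the DH table
        (0.0, 0.0, d1, 90.0),
        (90.0, 425.0, 0.0, t2 + 90.0),
        (0.0, 402.0, 0.0, t3),
        (-90.0, 0.0, 0.0, t4 - 90.0),
        (90.0, 0.0, 865.0, t5 - 90.0),
        (0.0, 85.0, 0.0, t6 + 90.0),
    ]
    T = np.eye(4)
    for row in rows:
        T = T @ dh_transform(*row)
    return T

T_home = aria_forward_kinematics(0.0, (0.0, 0.0, 0.0, 0.0, 0.0))
```

The intermediate products of this chain also give the frame origins and z-axes needed to build a geometric Jacobian.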

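The wall-to-wall distance inside the tokamak is very limited, thus only joints 4, 5 and 6 can be used for the visual servo. For the present problem, the Jacobian of the end effector therefore needs to be solved only up to joint 4, as given in equation (4).

$$
J =
\begin{bmatrix}
z_3 \times (O_6 - O_3) & z_4 \times (O_6 - O_4) & z_5 \times (O_6 - O_5) \\
z_3 & z_4 & z_5
\end{bmatrix}
=
\begin{bmatrix}
J_{11} & J_{12} & J_{13} \\
J_{21} & J_{22} & J_{23} \\
J_{31} & J_{32} & J_{33} \\
J_{41} & J_{42} & J_{43} \\
J_{51} & J_{52} & J_{53} \\
J_{61} & J_{62} & J_{63}
\end{bmatrix}
\tag{4}
$$

where, with $s(\cdot)$ and $c(\cdot)$ denoting sine and cosine,

$$
\begin{aligned}
J_{11} &= -865\,s(\theta_4) - 85\,c(\theta_6)\,s(\theta_4) - 85\,c(\theta_4)\,s(\theta_5)\,s(\theta_6) \\
J_{21} &= 865\,c(\theta_4) + 85\,c(\theta_4)\,c(\theta_6) - 85\,s(\theta_4)\,s(\theta_5)\,s(\theta_6) \\
J_{12} &= -85\,c(\theta_5)\,c\!\left(\theta_4 - \tfrac{\pi}{2}\right) s(\theta_6) \\
J_{22} &= -85\,c(\theta_5)\,s(\theta_6)\,s\!\left(\theta_4 - \tfrac{\pi}{2}\right) \\
J_{32} &= -c\!\left(\theta_4 - \tfrac{\pi}{2}\right)\left[865\,c(\theta_4) + 85\,c(\theta_4)\,c(\theta_6) - 85\,s(\theta_4)\,s(\theta_5)\,s(\theta_6)\right] \\
       &\quad - s\!\left(\theta_4 - \tfrac{\pi}{2}\right)\left[865\,s(\theta_4) + 85\,c(\theta_6)\,s(\theta_4) + 85\,c(\theta_4)\,s(\theta_5)\,s(\theta_6)\right] \\
J_{42} &= -s\!\left(\theta_4 - \tfrac{\pi}{2}\right) \\
J_{52} &= c\!\left(\theta_4 - \tfrac{\pi}{2}\right) \\
J_{13} &= -s(\theta_5)\left[85\,c(\theta_6)\,s(\theta_4) + 85\,c(\theta_4)\,s(\theta_5)\,s(\theta_6)\right] - 85\,c(\theta_4)\,c(\theta_5)^2\,s(\theta_6) \\
J_{23} &= s(\theta_5)\left[85\,c(\theta_4)\,c(\theta_6) - 85\,s(\theta_4)\,s(\theta_5)\,s(\theta_6)\right] - 85\,c(\theta_5)^2\,s(\theta_4)\,s(\theta_6) \\
J_{33} &= -c(\theta_4)\,c(\theta_5)\left[85\,c(\theta_4)\,c(\theta_6) - 85\,s(\theta_4)\,s(\theta_5)\,s(\theta_6)\right] \\
       &\quad - c(\theta_5)\,s(\theta_4)\left[85\,c(\theta_6)\,s(\theta_4) + 85\,c(\theta_4)\,s(\theta_5)\,s(\theta_6)\right] \\
J_{43} &= -c(\theta_5)\,s(\theta_4) \\
J_{53} &= c(\theta_4)\,c(\theta_5) \\
J_{63} &= s(\theta_5) \\
J_{31} &= J_{41} = J_{51} = J_{61} = J_{62} = 0
\end{aligned}
$$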
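The column structure of the Jacobian above, $z_i \times (O_n - O_i)$ stacked over $z_i$, can be checked numerically with a generic routine such as the sketch below. It assumes revolute joints and that the joint axes and frame origins are already expressed in a common frame; the planar two-link arm used as input is an illustrative stand-in, not the ARIA geometry. Because the Jacobian here is not square, the sketch uses the Moore-Penrose pseudo-inverse to recover joint velocities, which is an assumption about how the inversion is carried out.

```python
import numpy as np

def geometric_jacobian(z_axes, origins, o_end):
    """6xN Jacobian for revolute joints: column i is [z_i x (o_end - o_i); z_i]."""
    o_end = np.asarray(o_end, dtype=float)
    cols = []
    for z, o in zip(z_axes, origins):
        z = np.asarray(z, dtype=float)
        linear = np.cross(z, o_end - np.asarray(o, dtype=float))
        cols.append(np.concatenate([linear, z]))
    return np.array(cols).T

def joint_velocities(J, twist):
    """Least-squares joint rates for a desired end-effector twist (v, w)."""
    return np.linalg.pinv(J) @ np.asarray(twist, dtype=float)

# Illustrative planar arm: two unit-length links along x, both joints rotating about z.
z_axes = [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]
origins = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
J_demo = geometric_jacobian(z_axes, origins, o_end=(2.0, 0.0, 0.0))
q_dot = joint_velocities(J_demo, J_demo @ np.array([0.1, -0.2]))
```

For the ARIA arm, the axes and origins of frames 3, 4 and 5 and the origin of frame 6 would be taken from the forward kinematics chain.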

The inverse of the above Jacobian matrix is used to calculate the joint velocities from the camera or TCP velocity when implementing the visual servo control law. The results for the visual servo are shown in Fig. 9. Two separate programs are created to implement the visual servo from an unknown camera pose to the desired pose. The first program reads the image file and the network model and subsequently predicts the current pose. This pose is written to an output csv file. The second program implements the visual servo control law defined by eq. (5).

$$
\begin{bmatrix} v_c \\ \omega_c \end{bmatrix} = -\lambda \begin{bmatrix} R_i^0 \, t_i^0 \\ \theta u \end{bmatrix} \tag{5}
$$

The λ value is chosen to be 0.01. The new pose of the camera is calculated by applying the displacement towards the desired pose from the present predicted pose with a sampling time of 0.1 s. A new image for the updated camera pose is retrieved and stored. The new image is fed back to the first program and the process is repeated till the desired position is achieved.
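The iteration described above can be sketched as a simple discrete loop. The sketch replaces the trained network with an ideal pose predictor and takes the rotation matrix in the control law as the identity, so translation and rotation errors decay independently; both are simplifying assumptions, as are the function names and the initial error. The gain of 0.01 and the sampling time of 0.1 s follow the text.

```python
import numpy as np

def servo_step(t, theta_u, R, lam=0.01):
    """Camera velocity command from the relative pose: v = -lam * R @ t, w = -lam * theta_u."""
    v = -lam * (np.asarray(R, dtype=float) @ np.asarray(t, dtype=float))
    w = -lam * np.asarray(theta_u, dtype=float)
    return v, w

def simulate(t0, theta_u0, lam=0.01, dt=0.1, steps=1000):
    """Integrate the control law, assuming the predictor returns the true pose error."""
    t = np.asarray(t0, dtype=float).copy()
    theta_u = np.asarray(theta_u0, dtype=float).copy()
    history = []
    for _ in range(steps):
        v, w = servo_step(t, theta_u, np.eye(3), lam)  # identity rotation (assumption)
        t = t + dt * v                                 # displacement over one sample
        theta_u = theta_u + dt * w
        history.append(np.linalg.norm(np.concatenate([t, theta_u])))
    return t, theta_u, history

# Illustrative initial error: 50 mm offset along x and 0.2 rad about z.
t_end, tu_end, err_history = simulate([50.0, 0.0, 0.0], [0.0, 0.0, 0.2])
```

With these values each step shrinks the error by a factor of (1 - 0.01 × 0.1) = 0.999, so convergence is smooth but slow; a larger gain trades smoothness for speed.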

Fig. 9. Visual Servo from Initial Pose to Final Pose

The fairly good convergence of the camera velocities, expressed in the TCP frame of reference, and of the corresponding joint velocities of the ARIA during the servo action is shown in Figs. 10 and 11.

Fig. 10. Camera Velocity during Visual Servo

Fig. 11. ARIA Joint Velocity during Visual Servo


4. CONCLUSION

The paper presents a novel approach to visual servo using machine-learning techniques. The classical visual servo control law, which uses errors in image features, is changed to direct visual servo using the entire image. A convolutional neural network model is trained on an image-label data set wherein the label represents the error in the current pose of the camera with respect to the desired pose. The model is implemented using the ‘TF-Learn’ library, which is built on the ‘TensorFlow’ API. The model uses 5 convolution layers and one fully connected layer. A regression layer at the end of the CNN model fits the pose data. A database of image-label pairs is generated for training the model, for model validation during training and for implementation of the visual servo. The sensitivity study carried out on the model helps to properly select the model hyper-parameters and achieve a validation accuracy of ~90% during training. A Euclidean loss function is defined instead of using the ‘Softmax’ classification function. The final trained model gives a loss of less than 0.2 on the testing dataset. The visual servo is implemented using the control law, and the results show a good convergence of the camera velocities and joint velocities. As part of future work, the developed code will be integrated into the ARIA control system. This will enable the visual servomechanism to be used natively within the controller platform.

REFERENCES [1] F. Chaumette and S. Hutchinson, “Visual servoing and visual tracking,” Handb. Robot., pp. 563–583, 2008.

[2] S. Hutchinson, G. D. Hager, and P. I. Corke, “A tutorial on visual servo control,” IEEE Trans. Robot. Autom., vol. 12, no. 5, pp. 651–670, 1996.

[3] S. Collins, J. Wilkinson, and J. Thomas, “Remote Handling Operator Training at JET,” preprint Proc. ISFNT, no. 13, 2013.

[4] R. Buckingham and A. Loving, “Remote-handling challenges in fusion research and beyond,” Nat. Phys., vol. 12, no. 5, pp. 391–393, 2016.

[5] P. Dutta, N. Rastogi, and K. K. Gotewal, “Virtual reality applications in remote handling development for tokamaks in India,” Fusion Eng. Des., vol. 118, pp. 73–80, May 2017.

[6] N. Rastogi, V. Krishna, P. Dutta, M. Stephen, K. Kumar, D. Hamilton, and J. K. Mukherjee, “Development of a prototype work-cell for validation of ITER remote handling control system standards,” Fusion Eng. Des., 2016.

[7] F. Chaumette and S. Hutchinson, “Visual servo control. I. Basic approaches,” IEEE Robot. Autom. Mag., vol. 13, no. 4, pp. 82–90, 2006.

[8] E. Marchand, F. Spindler, and F. Chaumette, “ViSP for Visual Servoing,” IEEE Robot. Autom. Mag., no. December, pp. 40–52, 2005.

[9] A. C. Sanderson and L. E. Weiss, “Image based visual servo control of robots,” Robot. INDUST. Insp., vol. 360, pp. 164–169, 1983.

[10] P. I. Corke, VISUAL CONTROL OF ROBOT MANIPULATORS -- A REVIEW. World Scientific, 1994.

[11] E. Marchand and F. Chaumette, “Feature tracking for visual servoing purposes,” Rob. Auton. Syst., vol. 52, no. 1, pp. 53–70, 2009.

[12] C. Lopez-Franco, J. Gomez-Avila, A. Alanis, N. Arana-Daniel, and C. Villaseñor, “Visual Servoing for an Autonomous Hexarotor Using a Neural Network Based PID Controller,” Sensors, vol. 17, no. 8, p. 1865, 2017.

[13] “Visual_Servoing_with_Oriented_Blobs.pdf.” .

[14] Q. Bateux, E. Marchand, J. Leitner, F. Chaumette, and P. Corke, “Visual Servoing from Deep Neural Networks,” 2017.

[15] H. Hashimoto, T. Kubota, M. Sato, and F. Harashima, “Visual control of robotic manipulator based on neural networks,” IEEE Trans. Ind. Electron., vol. 39, no. 6, pp. 490–496, 1992.

[16] A. Saxena, H. Pandya, G. Kumar, A. Gaud, and K. M. Krishna, “Exploring convolutional networks for end-to-end visual servoing,” Proc. - IEEE Int. Conf. Robot. Autom., pp. 3817–3823, 2017.

[17] A. Dosovitskiy, P. Fischer, E. Ilg, P. Hausser, C. Hazirbas, V. Golkov, P. Van Der Smagt, D. Cremers, and T. Brox, “FlowNet: Learning optical flow with convolutional networks,” Proc. IEEE Int. Conf. Comput. Vis., vol. 2015 Inter, pp. 2758–2766, 2015.

[18] H. Al-Junaid, “ANN based robotic arm visual servoing nonlinear system,” Procedia Comput. Sci., vol. 62, no. Scse, pp. 23–30, 2015.

[19] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet Classification with Deep Convolutional Neural Networks,” Adv. Neural Inf. Process. Syst., pp. 1–9, 2012.

[20] P. Dutta, N. Rastogi, and K. K. Gotewal, “Virtual reality applications in remote handling development for tokamaks in India,” Fusion Eng. Des., vol. 118, pp. 73–80, 2017.

[21] N. Rastogi, “Virtual Reality based Monitoring and Control System for Articulated In-Vessel Inspection Arm,” pp. 2–5, 2017.

[22] D. Mishkin, N. Sergievskiy, and J. Matas, “Systematic evaluation of CNN advances on the ImageNet,” 2016.

[23] R. Socher, B. Huval, B. Bhat, C. D. Manning, and A. Y. Ng, “Convolutional-Recursive Deep Learning for 3D Object Classification.