Neuropsychological studies of auditory-visual fusion illusions. Four case studies and their...

-

Upload

ruth-campbell -

Category

Documents

-

view

212 -

download

0

Transcript of Neuropsychological studies of auditory-visual fusion illusions. Four case studies and their...

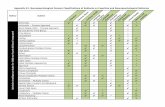

Neuropsychologia, Vol. 28, No. 8, pp. 787-802, 1990. Printed in Great Britain.

NEUROPSYCHOLOGICAL STUDIES OF AUDITORY-VISUAL FUSION ILLUSIONS. FOUR CASE STUDIES AND THEIR IMPLICATIONS

RUTH CAMPBELL,* JEANETTE GARWOOD,* SUE FRANKLIN,† DAVID HOWARD,‡ THEODORE LANDIS§ and MARIANNE REGARD§

*University of Oxford, U.K.; †University of York, U.K.; ‡University College, London, U.K.; and §Universitaetsspital, Zurich, Switzerland

(Received 23 September 1988; accepted 15 November 1989)

Abstract: A heard speech sound which is not the same as the synchronized seen speech sound can sometimes give rise to an illusory phonological percept. Typically, a heard /ba/ combines with a seen /ga/ to give the impression that /da/ has been heard (MCGURK, H. and MACDONALD, J. Nature, Lond. 264, 746-748, 1976). We report the susceptibility to this illusion of four individuals with localized brain lesions affecting perceptual function. We compare their performance to that of ten control subjects and relate these findings to the efficiency of processing seen and heard speech in separate and combined modalities. The pattern of performance strongly suggests LH specialization for the phonological integration of seen and heard speech. The putative site of such integration can be effectively isolated from unilateral and from bilateral inputs and may be driven by either modality.

INTRODUCTION

WHILE THE localization of input modality-specific cognitive processes (hearing speech, reading, inspecting faces) is relatively well understood, the nature and course of intermodal processing is much less secure. Nevertheless it is one of the central areas of theoretical concern for modern neuropsychology. Luria, in particular, proposed that cerebral sites adjacent to and effectively connected with the modality-specific primary sensory areas would be likely to subserve cross-modal integration. For audition and vision, parieto-temporal regions which are connected both to visual (occipital) and auditory sites such as the superior temporal gyrus would be prime candidates for such cross-modal integration. Luria’s discussions leave unclear the precise functional (information processing) mechanisms of such secondary association areas and the extent to which such associations are obligatory in normal function.

One phenomenon which strikingly demonstrates the obligatory nature of some cross-modal integration is the auditory-visual fusion illusion. MCGURK and MACDONALD [14] reported that when a seen speech syllable is coincident with a different heard speech sound (by audio-dubbing onto videotape), a perceptual illusion can arise whereby the place of articulation of the seen speech sound affects the perceived place of articulation of the heard speech gesture. One seems to hear a speech sound that can reflect properties of what is seen as well as what is registered “by ear”. This illusion is circumscribed in terms of the constraints on the phonetic patterning of heard sounds and the stimulus properties of the seen sound that produce it. It is sensitive to lexical expectations and can be language-specific [16, 17, 19].

Typically, a fusion illusion occurs when the seen sound could be any one of a number of heard sounds. For example, seeing a speaker silently say “go”, “doe”, “no” or “oh” does not permit the viewer to distinguish reliably between these possibilities, for the critical articulator (the tongue) is being placed in “invisible” positions (alveolar/velar) and manner of speech articulation (e.g. nasalization, voicing of stops) cannot be readily perceived by seeing the speaker. Hearing any of these words being spoken at the same time as one sees the speaker will then determine what has been said. But the fusion illusion occurs when what is heard is not one of these seen possibilities: when “bow” is heard at the same time as “go” is seen, “doe” is often reported to be the spoken word. When the contingencies of sight and sound are reversed, so “go” is heard and “bow” is seen, the perceived sound may be “gbo”, “bdo” or “bgo” and not the simple fusion “doe”. That is, one perceives the seen but unheard “b” as if it has been heard, but in a different temporal relationship to the speech sound registered “by ear” and not as a fused percept. These are sometimes called blends: see MASSARO [16].

Vision also affects heard speech in the perception of vowels. These effects are less categorical than those for stop consonants; they produce biases towards reports of some rather than other vowels [20]. They may be described in terms of a shift in the auditory vowel space of the perceiver. In a similar way, knowledge of the spoken dialect of the speaker shifts vowel judgement [12]. For this reason, cross-modal vowel effects may be thought of as contextual rather than categorical.

As well as these effects of seen speech on what is heard, EASTON and BASALA [6] showed that when subjects are asked to say what they see being spoken, their reports are sensitive to what they hear, and show fusion-like effects when the conditions (see above) are right.

Thus the effect of the other modality may not readily be ignored when seen and heard speech are coincident, when the conditions for fusion are met and when one is asked to report from one or other input. The perception of speech that can be seen-and-heard for phonological categorization and report can be effectively integrated. Such percepts, then, are a prime candidate for the inspection of obligatory cross-modal integration processes in the brain.

The present study examines the extent to which fusion illusions occur in the presentation of audio-visual words and syllables in a sample of brain-lesioned people, with a view to elucidating the localization and power of such integration mechanisms.

Four case studies are presented, with details of the functional deficits these people showed, to clarify possible functional routes to integrated speech processing. The performance of these individuals is compared with that for 10 unselected control subjects matched by age to the patients. The conditions were: (1) the report of monosyllabic concrete words; (2) of VCV syllables where the consonant could be a fusion target; and (3) of VCV syllables where the second vowel could be a fusion target. Audio-visual, vision-only and audition-only presentations of each list were made (as far as possible) for each subject.

In the word and the consonant target conditions the fusion target predicted from vision and audition together was always the alveolar stop /t/ or /d/, generated, respectively, from seen /k/ and heard /p/ and from seen /g/ and heard /b/. At least ten such target combinations were presented in each condition. For vowels the fusion targets were a range of combinations of seen and heard /ɜː/ (“fur”), /iː/ (“bean”), /uː/ (“boot”) and /ɑː/ (“bar”). Again these were mixed in with congruent combinations of seen and heard vowels.

These three list types, examining separately possible fusions for simple words, for stop-consonants in syllables and for vowels in syllables, allow a start to be made in understanding possible distinctions within fusion phenomena within clinical groups.

METHOD

One speaker (NW) was recorded speaking all stimuli. The visual (lipread) material was spoken to camera by NW and recorded, with sound, by U-matic videorecording. Sound was recorded on one of the two available audio channels. At the same time a second speaker (JG) counted to an electronic metronome set at 1 sec intervals, with a 2 sec interval between each cycle of four counts: this was sound-recorded onto the other audio channel. This gave NW timing cues for speech production and a time code for overdubbing. NW then audio-dubbed his own voice by listening over headphones to the original sound-track and speaking the new auditory list in synchrony with the original material using the heard timing cues. This process was repeated as often as necessary in order to obtain close item-synchronized sound. Closeness of synchrony was judged by JG and NW. Further editing added head (“WORDS”, “CONSONANTS”, “VOWELS”) and end titles between each list type. In addition, a blank screen of 5 sec duration was edited into the tape between each list item. A spoken “start” signal, synchronized with a printed “START” title, was edited to the beginning of the tape. This enabled volume, contrast and brightness settings to be adjusted at the start of each test session.

A copy of the U-matic videotape (visual channel with the dubbed auditory channel for all list types) was made onto VHS videotape for use in testing.

Appendix 1 shows the critical (target) stimuli for each tested condition. Within each list, however, these were interspersed with the same congruent stimuli, where audition and vision gave the same item. Every stimulus item, whether congruent or non-congruent with the original visual channel, was overdubbed.

The critical consonants in the word and consonant lists were always those in which the place of articulation for the target (fused) phoneme was different from that of either the seen or heard item. Within words the critical fusion target position varied, being either the first or the last stop uttered.

In this study we examine, specifically, voiced and voiceless plosive consonants which have been shown to generate reliable McGurk effects: in particular heard bilabial sounds (b, m, p) accompanying seen velars (g, k), which we predict should generate reports of “t” or “d” (alveolars).

For vowels, all combinations of seen and heard /ɑː/, /iː/, /uː/ and /ɜː/ were examined.

Control subjects were tested individually in a university laboratory which was quiet, but not sound-proofed, and which was artificially lit. Instructions required that the subject “repeat what the young man said”. It was also emphasized that experiences might vary across conditions, and that there were no wrong answers in this task. The experimenter was in the same room as the subject throughout the experiment, recording responses by writing. Responses were also audio-recorded for off-line analysis.

For the neurological cases testing was done in the testing room with which they were familiar (hospital, university). Test rooms were quiet and relatively free of noise. Three of the testing sessions were videorecorded for off-line checking, while two experimenters wrote down each subject’s response as it was made. As far as possible, instruction conditions were the same for all subjects, including the clinical ones. Presentation by modality was always in the fixed order: audiovisual, followed by auditory, followed by visual alone. The order of presentation of list type (words, consonants, vowels) was also fixed. For control subjects uncued verbal recall of each item was required as it was presented. In the word condition, subjects were instructed that they were to repeat the word that they thought the speaker had said. In the consonant and the vowel condition subjects were first asked to read aloud a set of printed syllables corresponding to the stimulus set used. This was in order to be able to correct for dialectal differences in subject pronunciations as well as to familiarize subjects with the possible stimuli.

The audition alone condition was run by masking the TV screen; the vision only condition by switching off the volume on the TV monitor. Subjects were not informed that the same videotape was used for all conditions.

The control data reported here comprise reports from ten subjects (six female), aged from 42 to 59 years (mean age 51 years; S.D. = 5.6) who were recruited through the Oxford University Subject panel. None had any reported hearing loss (see below), nor problems in viewing with corrected vision. All were able to detect accurately minimal contrasts in spoken pairs of syllables (ptr hu: IW her: t/l! W. qtr ,u) presented on audio-cassette. It should be noted that the four clinical cases, whose full details are given below, were all similarly unimpaired in these simple tests of hearing and in corrected television viewing. Because one of the clinical subjects was only 27 years old a separate group of five control subjects of similar age (mean age 23 years) was run following the main study. The results from that group were indistinguishable from those of the older controls.

RESULTS

Normal performance patterns

The spoken reports of the ten control subjects to lists of the three types of fusion stimuli (words, consonant targets and vowel targets) were recorded, as were their reports of (cued) vision-only material and audition-only conditions.

Figure 1 shows the distribution of fusion-based, audition-based and vision-based responses as a function of the modality (audition-only, vision-only and audio-visual) of input. “Other” responses, which include omissions and “unlikely” reports (that is, those we had not anticipated), are also shown.

Scoring varied with the condition under test: for the WORDS condition the whole word was the scored unit; for the CONSONANT and VOWEL conditions the target consonant or vowel was the scored unit. Furthermore, under vision-only viewing conditions any vision-compatible response was scored correct, so any bilabial for a presented /b/, /m/ or /p/ was scored correct and, for /g/, /d/, /t/ and /k/, any velar or alveolar consonant. We scored as fusion responses only those predicted to result from audio-visual presentation (i.e. /t/ or /d/ from seen /k/ or /g/ with heard /p/ or /b/ respectively). Thus the proportion of fusion responses obtained in the vision-only condition is a subset of possible vision-compatible responses and gives a base-line measure for the incidence of such responses in the absence of hearing.
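The consonant scoring rule just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the scoring procedure actually used: the function names are hypothetical, an auditory-compatible response is assumed to mean an exact match to the heard consonant, and the categories are assumed to be checked in the order auditory, fusion, visual, other, so that a predicted fusion is counted as a fusion even though it is also vision-compatible.

```python
# Visible place-of-articulation classes named in the scoring rules.
BILABIALS = {"b", "m", "p"}
VELAR_OR_ALVEOLAR = {"g", "d", "t", "k"}

# Predicted fusion for each (seen, heard) pair, as stated in the text.
PREDICTED_FUSION = {("k", "p"): "t", ("g", "b"): "d"}


def visually_compatible(seen, response):
    """True if the response falls in the same visible class as the seen consonant."""
    for visible_class in (BILABIALS, VELAR_OR_ALVEOLAR):
        if seen in visible_class:
            return response in visible_class
    return False


def score_response(response, seen, heard):
    """Assign a reported consonant to 'auditory', 'fusion', 'visual' or 'other'.

    Assumption (not from the paper): exact match to the heard consonant
    counts as auditory, and the checks run in the order shown below.
    """
    if response == heard:
        return "auditory"
    if response == PREDICTED_FUSION.get((seen, heard)):
        return "fusion"
    if visually_compatible(seen, response):
        return "visual"
    return "other"  # omissions and unanticipated reports
```

For a target item with seen /g/ and heard /b/, `score_response("d", seen="g", heard="b")` returns `"fusion"`, while a reported `"k"` is scored `"visual"`. The sketch is meant for the incongruent target items only; on a congruent item every correct report would simply be labelled auditory.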

Did these stimuli generate fusions? The statistical significance of the difference between the audio-visual and each of the unimodal conditions was tested for each type of response using paired non-parametric tests (Wilcoxon). This was the preferred test since it is based on rank scores: whereas the proportionate (percentage) scores within each report condition are non-independent (they must add up to unity), the rank orderings of the four possible report-modes within each condition are independent of each other. Subjects and stimuli were tested separately to indicate the generality of any effects.
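For readers unfamiliar with the paired Wilcoxon test, the following Python sketch shows how such a comparison could be computed for one response type across subjects. It is an illustration only, not the analysis code used in the study: the function names are hypothetical, zero differences are assumed to be dropped, tied differences are assumed to receive average ranks, and the two-tailed p-value is obtained by enumerating every sign assignment, which is practical for ten subjects.

```python
from itertools import product


def average_ranks(values):
    """Rank values from 1 upwards, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for position in range(start, end + 1):
            ranks[order[position]] = mean_rank
        start = end + 1
    return ranks


def wilcoxon_signed_rank(first, second):
    """Return (T, exact two-tailed p) for paired scores.

    T is the smaller of the positive and negative rank sums. The p-value
    is the share of all 2**n sign assignments that give a T at least as
    small as the observed one.
    """
    diffs = [a - b for a, b in zip(first, second) if a != b]
    if not diffs:
        return 0.0, 1.0
    ranks = average_ranks([abs(d) for d in diffs])
    total = sum(ranks)
    positive = sum(r for r, d in zip(ranks, diffs) if d > 0)
    statistic = min(positive, total - positive)
    as_extreme = 0
    for signs in product((0, 1), repeat=len(ranks)):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if min(w, total - w) <= statistic:
            as_extreme += 1
    return statistic, as_extreme / 2 ** len(ranks)


# Hypothetical per-subject fusion percentages, for illustration only.
audio_visual = [12, 25, 33, 47, 52, 66, 71, 88, 93, 110]
audition_only = [11, 23, 30, 43, 47, 60, 64, 80, 84, 100]
t_value, p_value = wilcoxon_signed_rank(audio_visual, audition_only)
```

Because the test uses only the ranks of the paired differences, it does not depend on the percentage scores themselves being independent across response categories.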

The table of significance (Table 1) for the subject-analysis (stimulus analysis produced similarly significant results) indicates the following points.

(1) For stop consonants in words and in syllables, audio-visual presentation elicits significantly more vision and fusion based responses than audition alone.

(2) For vowels, audio-visual presentation does not increase the number of vision-based responses.

(3) Comparing audio-visual and vision-only conditions, audio-visual presentation significantly increases the proportion of audition-based responses for all three types of stimulus: this is at the cost of vision-based reports, which drop significantly for all three list types. The proportion of fusions rises significantly for consonant targets.

It can therefore be concluded that these stimuli have succeeded in eliciting fusions for stop-consonants in words and in monosyllables. In reporting audio-visual vowels, however, subjects do not integrate the vision component effectively; instead, they continue to report the auditory vowel.

One reason why auditory dominance characterizes vowel but not stop-consonant report in this experiment is clearly shown in Fig. 1: auditory vowel report is more accurate than either type of stop-consonant report in this study. This difference is statistically reliable on Wilcoxon tests and generalizes across subjects and stimuli.

Fig. 1. Mean percent responses by ten elderly control subjects as a function of presentation condition (S.D. in parentheses). Response categories: auditory-compatible, visual-compatible, fusion-compatible, others. Presentation conditions: auditory (A), visual (V), audio-visual (AV).

| Stimulus set | Presentation | Auditory compatible | Visual compatible | Fusions | Others |
|---|---|---|---|---|---|
| Concrete words | auditory | 78 (17) | 14 (12) | 6 (6) | 2 (0.4) |
| Concrete words | visual | 5 (0.4) | 75 (12) | 19 (5) | 1 (0.3) |
| Concrete words | audio-visual | 15 (7) | 47 (17) | 33 (12) | 5 (0.7) |
| Consonants in syllables | auditory | 87 (12) | 6 (4) | 7 (4) | 0 |
| Consonants in syllables | visual | 5 (1) | 75 (23) | 16 (14) | 4 (0.7) |
| Consonants in syllables | audio-visual | 21 (17) | 33 (11) | 43 (20) | 3 (0.1) |
| Vowels | auditory | 100 | 0 | 0 | 0 |
| Vowels | visual | 18 (15) | 68 (21) | 14 (12) | 0 |
| Vowels | audio-visual | 0 | 95 (10) | 5 (0.9) | 0 |

Table 1. Significance of paired comparisons between bimodal and unimodal reports for each stimulus set

Columns: Audition vs audio-visual; Vision vs audio-visual.

Rows (each with response types auditory, visual, fusion, other): Words; Consonants in syllables; Vowels in syllables.

** **

Wilcoxon matched-pairs t-tests. **Significant differences at P<0.01; *P<0.05; all to two-tailed levels of significance.

Vowels carry greater acoustic energy than stop-consonants and usually show clearer acoustic discrimination patterns (for example, in noise) so it is unsurprising that this is occurring in the present study. But vowels also in this study seem to be less well “lipread” than the other stimuli, for in the vision-alone condition a higher proportion of auditory-compatible items is reported than is the case for consonants in words or syllables.

This might suggest that the extent of fusion reported for stop-consonants in words and syllables may itself be related to slightly impaired acoustic registration of these stimuli. However, there was no significant inverse correlation between subjects’ scores for auditory (“correct”) responses in the auditory condition and fusion responses in the audio-visual condition (r = +0.07; combined word and consonant scores). Furthermore, these subjects were completely accurate at auditory minimal-contrast detection (,lhn,‘. /pa,‘. ,‘+I,‘) when the auditory tokens were presented over low quality audio-cassette. It is unlikely that the observed fusions for stop-consonants in this study are simply a reflection of relatively poorer auditory classification of consonantal-stop than vowel tokens.

Given this pattern of performance in normal, elderly subjects, we now turn to clinical patients with neurological lesions.

Four cases are presented; Cases 1 and 2 present as LH, 3 and 4 as RH lesions. More important than the localization of their lesions is the pattern of performance in these cases and the relationship between performance on the fusion task and other aspects of cognitive impairment in these cases. Anatomical speculation will be confined to its functional significance in the light of these cases.

D.R.B. is a right-handed male, born in England in 1931. He is educated to secondary-school level and worked as a travel agent. He had a medical history of hypertension, diabetes and coronary thrombosis. In January 1985 he had a CVA in the territory of the left middle cerebral artery which was marked by the sudden onset of aphasia and no other neurological signs. (There is no indication of bilateral damage in D.R.B.)

At the time of test, in January 1988, D.R.B.’s speech output was fluent, grammatical and comprehensible. His main complaint was that speech “sounded funny”. He could follow written instruction and was able to read aloud, but was slightly surface dyslexic [19]. He was unable to match spoken to written words reliably. His ability to perform such tasks depended on their concreteness: abstract words were worse affected than concrete ones. Thus he can reliably point to named pictures. Repetition of spoken words was also sensitive to this dimension: he was unable to repeat a single spoken nonword or abstract word but was 80-90% accurate at the single repetition of concrete spoken words.

Despite these profound difficulties in processing heard words and non-words for comprehension and repetition, D.R.B. showed some effective auditory speech analysis. He distinguished heard minimally contrastive phonemic pairs accurately although he was unable to repeat even one of them. He was also normal at auditory lexical decision despite his inability to further process the word for repetition or comprehension. This is a reliable and robust aspect of D.R.B.’s performance: for instance, at the time of writing, a total of 156/160 items, including abstract words, and words of single phonemic contrast from non-words, were correctly discriminated for lexical decision.

On casual examination, lipreading affects D.R.B.’s comprehension and repetition skills for auditory material. When D.R.B. can see the speaker, he can occasionally repeat non-words and abstract words that he fails to utter when he simply hears them. D.R.B. can lipread silently spoken numbers and repeat them, one at a time.

Lipreading may therefore help D.R.B. by boosting the phonological and lexical maintenance systems, rather than by affecting discrimination skills. But how could it do this? One possibility is that seeing the speaker, just like oral repetition of the stimulus item, provides a useful second record of the speech event. A different possibility is that lipreading provides a specific boost in the form of complementary, supportive phonetic features derived from the face rather than the heard voice. On the first account we might expect that, under conditions of reporting vision alone, D.R.B. may be poor, just as he is in reporting heard speech. On the second account we might expect his performance on silently lipread material to be normal. When, as in the present experiment, seen lips and heard speech provide discrepant information it will be possible to see (a) whether D.R.B. is susceptible to fusions (despite being impaired at reporting heard speech sounds) and (b) to what extent the normal patterns of report for heard, seen and av-sounds, both consonants and vowels, occur in his performance.

Because D.R.B. found it difficult to report single heard words, a visual cued report was used as well as uncued testing. In the cued condition, he was provided with sets of written words and syllables (and for the words, simple line drawings) before the videotapes were shown and during the videotape presentations. He used these actively to guide his responses (pointing and speaking were allowed as scored responses). His performance for each condition was summed across both cued and uncued tests and means derived. Despite the use of cues, D.R.B. found the task frustrating and tiring and unimodal testing of some of the non-word (syllable) targets was not completed.

Figure 2 summarizes D.R.B.’s performance. Despite extensive help from picture and written word cues, D.R.B. was significantly worse than normal at reporting heard words and syllables, whether or not cues were available. On the first vision-only run, without cues to help him, he failed to report 6 out of 10 seen words; on a second run where picture cues were available he responded, verbally, to every word. Thus, D.R.B. falls within normal range for lipreading using vision alone, as long as he can use cues to guide his report. In this respect D.R.B.’s visual performance is good, while his auditory performance is bad.

On audio-visual presentation, his pattern of fusion effects for words and consonant targets falls within normal range. Nevertheless, it would not be possible to claim with certainty that he was subject to fusions. While, over ten normal subjects, there is a distinction between vision-alone and audio-visual inputs in terms of whether fusion or other visual targets are reported, this effect is significant only for the group and not reliable for all subjects or stimuli within the group. D.R.B. seems to use all possible visual responses, including fused ones, in the audio-visual report.

However, his performance on vowel targets is quite unambiguous. Vowel targets are as likely to be reported in terms of their visual as their auditory components in the audio-visual condition. In this respect his performance is out of range of the normal performance pattern. D.R.B. does not use heard information effectively in reporting audio-visual vowels. We can surmise that this is also the case when he reports words and consonants, though we cannot be sure.

Fig. 2. Response type as a function of presentation condition: mean percent responses, D.R.B. (LH lesion). D.R.B. was tested twice on each audio-visual condition (cued and uncued) and these are means over both tests. He was not tested on the conditions left blank. His responses in the “other” category were all omissions.

We conclude that D.R.B. shows visual dominance in his report of audio-visual material. This confirms that although acoustic information may be sufficiently well processed to enable lexical decisions to be made, it does not seem to be effectively used for report; in particular, when D.R.B. can see and hear the speaker he reports what he sees, rather than what he hears. Since he can make accurate same/different judgements on auditorily presented pairs of syllables containing minimally contrastive phonemes this cannot be a sensory deafness of cortical location but has its origins in impaired central processes for analysing heard speech.

Lipreading, for D.R.B., provides useful information of a specific sort; it appears to improve his repetition performance by boosting the maintenance of phonological forms. He shows fusion-like effects with audio-visual presentation, though this is not conclusive.

Case 2: Mrs T.: pure alexia

Mrs T., a right-handed 68 year-old farmer, has been alexic since a cerebro-vascular incident in the territory of the left posterior cerebral artery 4 years ago. This caused damage, medially, to temporo-occipital regions. At the time of testing, Mrs T.’s reading was typically letter-by-letter, and she traced letters with her fingers in reading. Her spelling, and her ability to say a word when she was given the letter names one at a time, were perfect. Mrs T.’s only other cognitive impairment was a mild anomia for (some) colours. She had a persistent right homonymous hemianopia.

In previous tests, CAMPBELL et al. [3] found Mrs T. to be impaired at extracting speech information from lips under a range of testing conditions. The present study, conducted three years after that reported earlier, specifically examines her fusion skills.

It should be pointed out that Mrs T. is a native Swiss-German speaker, with little English. Nevertheless, the stimuli presented to her are phonologically acceptable in her native tongue.

Fig. 3. Response type as a function of presentation condition: mean percent responses, Mrs T. (LH lesion). Mrs T. was not an English speaker, so the “word” scores represent not words but consonant scores; hence her errors in the word-visual condition were all omissions of consonants and distortions of vowels. However, she did respond to each stimulus and parts of each word were often correctly reported. In no case, though, was a word correctly reported from vision alone, though many were from audition alone.

Figure 3 shows Mrs T.’s pattern of performance. In striking contrast to D.R.B., Mrs T. was excellent at repetition of auditory lists, but produced bizarre responses to visual ones and showed few fusion illusions for any of the presentation conditions. For vision-compatible responses to visual stimuli (over all list types) and for vision or fusion responses to audio-visual stimuli (word and consonant stimuli) her performance falls more than two standard deviations outside the control range. Unlike control subjects, Mrs T. often offered, under vision only conditions, speech sounds that were not compatible with what was seen (saying “sh” for seen “ba”, for example). This does not occur even in normal poor lipreaders [11]. These responses are recorded as “other” responses in the figure. This confirms not only that Mrs T. is impaired at strategic aspects of extracting speech from faces but also that, even when audio-visual presentation allows a number of possible percepts, lipreading still fails to access systems responsible for heard speech repetition. Mrs T. is extremely impaired at extracting speech from seen or seen-and-heard faces. Our earlier studies [3] showed her to be unable even to sort face photographs making speech sounds from face photographs where the face seen was making a nonspeech gesture (such as extruding the tongue).
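The "two standard deviations" criterion used here can be written out as a one-line check. The sketch below is illustrative only: the function name is hypothetical, and the patient scores in the usage note are invented for the example, since Mrs T.'s individual percentages are shown only graphically in Fig. 3. The control values (mean 75, S.D. 12) are the vision-compatible responses to vision-only concrete words reported with Fig. 1.

```python
def outside_control_range(score, control_mean, control_sd, n_sd=2.0):
    """True if a single case's score lies more than n_sd control S.D.s from the control mean."""
    return abs(score - control_mean) > n_sd * control_sd


# Control mean and S.D. for vision-compatible reports of vision-only words (Fig. 1).
CONTROL_MEAN = 75
CONTROL_SD = 12
```

With these control values the normal band runs from 51% to 99%, so a hypothetical case scoring 40% would be flagged by `outside_control_range(40, CONTROL_MEAN, CONTROL_SD)`, whereas a hypothetical score of 60% would not.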

We would further note that the association between colour naming impairments and pure alexia has been familiar for many years and gave rise to GESCHWIND’S comments [7, 8] on disconnection anomias. It has been remarked upon in several reviews (Poetzl’s Syndrome: [9, 10]). The inability to lipread or to show fusion susceptibility in audio-visual speech processing may possibly be added to the functional constellation of impairments in Poetzl’s syndrome.

Anatomical speculation: the LH in lipreading

D.R.B. lipreads and shows visual dominance in audio-visual speech presentation: Mrs T. is poor at lipreading and fails to show normal visual categorization of speech or any effective integration of seen and heard speech in audio-visual presentation. At the very least this suggests important and separable functions in the LH. One possibility is as follows: there may be a phonological processor in the LH that can accept seen and heard inputs. In D.R.B. connections between auditory inputs and this processor are partially ineffective. The phonetic processor is itself unimpaired. In Mrs T. the processor is disconnected from visual inputs; since her lesion is medially placed this disconnection may isolate visual speech inputs from both the LH and the RH. (This suggestion reflects that advanced by Geschwind to account for reading and naming problems in patients like Mrs T.) D.R.B. shows complete left-ear dominance for dichotic speech. While this may simply reflect a disconnection of analysis from right ear inputs, it allows the possibility that he may also be processing seen speech in his RH. Cases 3 and 4, where RH damage is proved or suspected, should illuminate this issue further.

A.B. was born in 1960 in England. She is right-handed. She was previously tested and described by MACCONACHIE [13] as a developmental prosopagnosic. On first testing, at the age of 13, she was found to be poor at recognizing famous or familiar people by sight alone.

Neurological assessment at that time found no obvious neurological signs of motor impairment, but some EEG abnormality over right posterior regions. This was the only hard sign of disorder and it is on this basis that it is suggested that she be identified provisionally as a “RH-developmental disorder”. There was no evidence or history of traumatic brain lesion, but there was a family history of “poor face recognition”. In addition to face processing deficits, A.B. was poor at drawing maps and buildings. Her verbal IQ was 144, and all tasks (including visuo-perceptual ones) that loaded to any degree on verbal ability were performed extremely well. There was no indication in the published reports of any general visual deficits (colour, stereo, movement perception were reported to be normal). Visual perimetry was reported to be normal in follow-up neurological tests. A.B. is not a clinical patient and is currently following post-doctoral research full-time in an academic institution.

In 1986 we performed further perceptual tests. Contrast sensitivity function, assessed by the Cambridge low frequency gratings test, was normal at all spatial frequencies tested.

We confirmed her difficulties in recognizing faces, both famous and familiar, live and through photographs and recordings. She performed at chance on WARRINGTON'S old/new unfamiliar face recognition memory test [21]. She was also poor at sorting faces by age, expression or gender. Her score on BENTON et al.'s [1] test of unfamiliar face identification and recognition was 38 (“moderately clinically impaired”) and she performed very slowly. Slow, somewhat impaired (but not abolished) performance on the Benton Facial Recognition test characterizes most reported cases of acquired prosopagnosia. Her topographic memory was poor and she was unable to give a correct description of her route (which she had taken for 10 years) from home to her place of work. Her speech and reading were at a level commensurate with her proven academic ability, though her writing was “untidy” (as MCCONACHIE had noted). Her performance on the fusion tasks is summarized in Fig. 4.

[Figure 4: bar charts of response types (Auditory-compatible, Visual-compatible, Fusion-compatible, Others) under A, V and AV presentation; panels include Concrete words and Vowels.]

Fig. 4. Response-type as a function of presentation condition: mean percent responses, A.B. (presumed RH impairment). “Other” responses for vision conditions were all of the type “it could be . . .” (and the correct range of syllable types was offered). See text.

A.B. showed normal repetition of auditory material under audition-alone conditions. Her ability to report material under vision-alone conditions also falls within the normal range, but with one anomaly. A.B. often refused to report consonant targets in syllables (she would say, of a seen “ga”, “well, that could be anything really . . .”); hence her abnormally high score in the “other” category. We do not know how to interpret this abnormal phenomenon (it may relate to the observation that she made pragmatic errors in some other aspects of language performance), but note that unlike Mrs T. (Case 2), A.B. did not produce vision-incongruent responses in this condition.

With audio-visual presentation, A.B. failed to show characteristic fusion responses for words or for consonant targets. While her performance for consonant targets is within range of controls, that for words was 1.8 standard deviations outside range. For audio-visual stimuli she consistently reported auditory, not fused or visual stimuli. While this was normal for vowel targets, it was not for the other target types.

At this point it could be argued that A.B., who is much younger than the other clinical subjects, may have had better hearing, hence there was less room for vision to play a role in the report of audio-visual stimuli. To check this, five young control subjects (mean age 23 years) performed the experiment. Their scores were statistically indistinguishable from those of the elderly controls in the fusion condition. It is therefore unlikely that A.B.'s anomalous performance was due to better hearing than that of older controls.

Since she could perform the task with vision alone, it is clear that A.B. can lipread, but does not use seen speech normally in audio-visual presentation. She forms a contrast both to Mrs T. who cannot lipread and to D.R.B. who lipreads when others do not.

Case 4: Mrs D.: acquired prosopagnosia

Mrs D. is a 66-year-old, right-handed secretary who became densely prosopagnosic following a lesion of the right posterior cerebral artery seven years ago. She is described in detail by CHRISTEN et al. [4]. Mrs D. was unable to judge gender, age or expression from a live or photographed face. In 1986 we reported [3] that, in complete contrast to all her other debilities with faces, Mrs D. could imitate face-speech movements; could accurately repeat aloud lip-spoken numbers; could discriminate between photographs of speaking faces and “pulling” faces (“gurning” faces where unnatural facial movements are made); could correctly discriminate faces by the speech sound they were making. Finally, she was susceptible to the MCGURK illusion using a short videotape with consonant targets.

The present study extended this last investigation. In 1988 we confirmed that Mrs D. was extremely impaired at identifying emotional expression from faces (but not from voice or auditory content), at identification of a known individual from the face and at judging sex, age or race from face photographs. In these respects she resembles A.B. But Fig. 5 shows that Mrs D.'s pattern of lipreading is entirely normal. She was susceptible to fusion illusions in precisely the same way as our control subjects and for the same material. Despite her dense problems in processing faces, Mrs D. could lipread effectively and was susceptible to fusion illusions. Her performance on both unimodal and bimodal inputs can also be used to make the negative point that tests of susceptibility to the fusion illusion are not simply sensitive to any form of cerebral disorder.

Mrs D. shows that lipreading problems need not co-occur with general problems in interpreting face stimuli. With A.B. this possibility is open.

Anatomical and functional speculation: Cases 3 and 4

These two cases have reported (unilateral) RH damage and prosopagnosia with associated face processing problems. They show a dissociation in fusion sensitivity; Case 4 (Mrs D) showed fusions in audio-visual report while Case 3 (A.B.) did not. Both of these subjects were able to lipread reliably, in contrast to Mrs T. (Case 2).

A.B.'s developmental aetiology or a generalized RH disorder may have contributed, independently or together, to her failure to show effects of “vision on audition” in audio-visual presentation. For example, a developmental difficulty in processing seen faces may lead to the development of effective strategies for ignoring faces in naturalistic contexts. So, in audio-visual presentation she may be able selectively to ignore the seen component of the signal.

[Figure 5: bar charts of response types (Auditory-compatible, Visual-compatible, Fusion-compatible, Others) under A, V and AV presentation; panels include Vowels.]

Fig. 5. Response-type as a function of presentation condition: mean percent responses, Mrs D. (RH lesion). Mrs D. spoke English well. Her scores fall well within normal range on all conditions.

But there are other aspects of A.B.'s speech processing which may be abnormal. Her prosodic abilities, in production and perception, are compromised, with vision relatively more affected than audition. Thus Garwood (in preparation) found that A.B. was unable reliably to indicate lexical stress produced by a seen, unheard speaker reading a sentence aloud. This task was performed accurately by control subjects. A generalized RH disorder may contribute both to problems in processing faces “naturally” and to problems in handling the non-segmental aspects of speech. Both of these may affect her audio-visual speech processing.

The general point, however, is that these two cases together show that a failure to recognize faces, to categorize expression, ethnicity, age or sex from the face, can dissociate quite clearly from the ability to extract speech from the face, and suggest that while face-processing problems commonly involve right hemisphere structures, lipreading may be an exception to this principle.

GENERAL DISCUSSION

We examined susceptibility to auditory-visual speech fusion illusions in a group of elderly control subjects and in four neuropsychological cases with presumed posterior lesions but with normal hearing and vision. In controls, fusion effects were reliably reported for words and syllables containing stop consonants (i.e. “da” was reported for seen “ga” and heard “ba”). But where vowels were different in the seen and heard channels, auditory report, rather than any sort of blend, was the preferred response.

Of the two left-lesioned cases, D.R.B. (Case 1; word-meaning-deafness) used lipreading reliably. Vision dominated his audio-visual responses, which led to abnormal reports of seen-and-heard vowels where the two modalities were not congruent. Case 2, the alexic Mrs T., was impaired at reporting seen speech and was not susceptible to fusions, but had good auditory speech processing. Here is evidence that auditory and seen (lipread) speech processing dissociate in a fashion that suggests disconnexion between primary sensory areas and central (i.e. amodal) phonological processes within the left hemisphere.

Cases 3 and 4 lipread normally despite their other visuo-spatial cognitive deficits. However, Case 3 (A.B.), with a suspected generalized RH disorder, showed no sign of using vision in processing speech which is seen-and-heard. This may be part of a more general pragmatic linguistic disorder. Case 4 (Mrs D.), where damage is specific to medio-temporo-occipital structures in the right hemisphere, was normal on all aspects of the seen and heard speech task.

Thus, the left hemisphere appears to have a special role in the integration of seen and heard speech. This inference is supported by some developmental findings. MCKAIN et al. [15] report that infants turn their heads to examine a speaker, but only rightwards. Right-head-turning is taken to be a sign of left hemisphere activation. At this stage we can say little about how this integration occurs, but a “natural” site would be an amodal phonological processor (sited in the LH) which is (relatively) indifferent to whether its inputs are heard or lipread. Such a system could be “driven” by eye or by ear. A phonetic system of this sort would be particularly able to cope with seen-and-heard specifications for stop consonants, which are often hard to discriminate. The system would be integral to the maintenance of phonetic forms, enabling verbal repetition to take place.

Just such a system has been proposed by investigators in speech perception and memory; in particular by CROWDER [5] who stressed the continuity between speech perception and memory processes in terms of phonetic equivalences in ostensive “perception” and “immediate memory” tasks.

What is the role of RH processes in processing speech that is seen to be spoken? It is possible that as far as lipreading is concerned the phonological processor is more effectively driven from RH than LH vision reception areas. CAMPBELL [2] has reported that normal LVF (RH) performance was significantly faster than RVF (LH) performance in matching a heard speech sound to a seen still photograph of mouth-shape.

Such RH inputs may be functionally disconnected from the LH speech-sites in Mrs T., but not in D.R.B. In Mrs D. they may be spared in contrast to associative areas concerned with remembering and interpreting faces in non-speech terms. A.B.'s pattern of performance could be interpreted to indicate that the LH can lipread, but RH processes may usefully drive the phonological processor when audition and vision provide complementary information.

Finally, we are not suggesting that the only support that can be gleaned from seeing the speaker is at the phonological level. But the performance of the subjects in this experiment suggests that there is an identifiable component of seen speech that is processed phonologically. Stop consonants benefit from being seen and heard, even when heard quite “clearly” in people with no clinical or sub-clinical hearing loss. The extent to which they do depends on the integrity of phonological processing (in the LH) and the access routes to such a processor.

Acknowledgements-We are grateful to Nigel Woodger and to the Auditory-Visual Department of the University of Surrey for their help in the production of the test material. This work was supported by an Oxford University Pump-priming grant (Campbell and Garwood); by a twinning grant from the European Training Program in Brain and Behaviour Research (Landis, Campbell, Regard) and by the Medical Research Council (U.K.) (Howard and Franklin). Parts of this paper were presented to a special session of a meeting of the Experimental Psychology Society, Edinburgh, Scotland in July 1988, organized by Dr V. Bruce.

REFERENCES

1. BENTON, A. L., HAMSHER, K., VARNEY, N. R. and SPREEN, O. Contributions to Neuropsychological Assessment. Oxford University Press, 1983.
2. CAMPBELL, R. The lateralization of lipread sounds: a first look. Brain and Cognit. 5, 1-22, 1985.
3. CAMPBELL, R., LANDIS, T. and REGARD, M. Face recognition and lipreading: a neurological dissociation. Brain 88, 287-294, 1986.
4. CHRISTEN, L., LANDIS, T. and REGARD, M. Left hemispheric functional compensation in prosopagnosia? A tachistoscopic study with unilaterally lesioned patients. Hum. Neurobiol. 111. 1985.
5. CROWDER, R. G. The purity of auditory memory. Phil. Trans. R. Soc. Lond. B 302, 251-265, 1983.
6. EASTON, R. D. and BASALA, M. Perceptual dominance during lipreading. Percept. Psychophys. 32, 564-570, 1982.
7. GESCHWIND, N. The varieties of naming error. Cortex 3, 97-112, 1967.
8. GESCHWIND, N. and FUSILLO, M. Colour-naming deficits in association with alexia. Arch. Neurol. 15, 137-146, 1966.
9. GLONING, I., GLONING, K. and HOFF, H. Neuropsychological Symptoms and Syndromes in Lesions of the Occipital Lobes and the Adjacent Areas. Gauthier-Villars, Paris, France, 1968.
10. HÉCAEN, H. Introduction à la Neuropsychologie. Larousse, Paris, France, 1972.
11. JEFFERS, J. and BARLEY, M. Speechreading. Charles C. Thomas, Springfield, Illinois.
12. LADEFOGED, P. and BROADBENT, D. Information conveyed by vowels. J. Acoust. Soc. Am. 29, 1957.
13. MCCONACHIE, H. R. Developmental prosopagnosia: a single case report. Cortex 12, 76-82, 1976.
14. MCGURK, H. and MACDONALD, J. Hearing lips and seeing voices. Nature, Lond. 264, 746-748, 1976.
15. MCKAIN, K., STUDDERT-KENNEDY, M., SPIEKER, M. and STERN, D. Infant intermodal speech perception is a left hemisphere function. Science 219, 1347-1349, 1983.
16. MASSARO, D. W. Speech Perception by Ear and Eye: a Paradigm for Psychological Inquiry. Lawrence Erlbaum Associates, Hillsdale, New Jersey, U.S.A., 1987.
17. MILLS, A. and THIEM, R. Auditory visual fusion illusions and illusions in speech perception. Linguistische Berichte 68/80, 85-108, 1980.
18. PATTERSON, K. E., MARSHALL, J. C. and COLTHEART, M. Surface Dyslexia: Neuropsychological and Cognitive Studies of Phonological Reading. Lawrence Erlbaum Associates, London, U.K., 1985.
19. SUMMERFIELD, Q. Some preliminaries to a comprehensive account of audio-visual speech perception. In Hearing by Eye: The Psychology of Lipreading, B. DODD and R. CAMPBELL (Editors), pp. 3-52. Lawrence Erlbaum Associates, London, 1987.
20. SUMMERFIELD, Q. and MCGRATH, M. Detection and resolution of audio-visual incompatibility in the perception of vowels. Q. J. exp. Psychol. 36A, 51-74, 1984.
21. WARRINGTON, E. K. Recognition Memory Test. N.F.E.R.-Nelson Publishing Co., Windsor, Bucks, U.K., 1984.


APPENDIX 1

Stimuli used in testing

Word targets

| Seen | Heard | Fusion target |
|---|---|---|
| Corn | Pawn | Torn (horn) |
| Rogue | Robe | Road |
| Gate | Bait | Date |
| Cart | Part | Tart |
| Pick | Pip | Pit |
| Coast | Post | Toast |
| Goal | Bowl | Dole |
| Rack | Wrap | Rat |
| Kick | Pick | Tick |
| Gear | Beer | Dear |

Consonant targets

| Seen | Heard | Fusion target |
|---|---|---|
| Aga | Aba | Ada |
| Aka | Apa | Ata |

There were five examples of each of these monosyllable tokens in this combination in a total test set of 30 items comprising seen and heard aba, ata, aka, apa, ama, aga in other combinations (including same vision and audition) tokens.

Vowel targets Seen Heard

Erber Erbee Erber Erboo Erber Erbee Erboo Erbee Erbee Erber