Network Address Translation (NAT) Behaviour: Final report · 2014-05-26 · IP addresses, source...


Network Address Translation (NAT) Behaviour: Final report

Prepared by: A. Nur Zincir-Heywood, Yasemin Gokcen, Vahid Aghaevi, Faculty of Computer Science, Dalhousie University

Scientific Authority: Rodney Howes, DRDC Centre for Security Science

The scientific or technical validity of this Contract Report is entirely the responsibility of the Contractor and the contents do not necessarily have the approval or endorsement of the Department of National Defence of Canada.

Defence R&D Canada – Centre for Security Science DRDC-RDDC-2014-C May 2014

IMPORTANT INFORMATIVE STATEMENTS CSSP-2012-CD-1029 Network Address Translation (NAT) Behaviour was supported by the Canadian Safety and Security Program (CSSP) which is led by Defence Research and Development Canada’s Centre for Security Science, in partnership with Public Safety Canada. Partners in the project include Public Safety Canada and Dalhousie University. CSSP is a federally-funded program to strengthen Canada’s ability to anticipate, prevent/mitigate, prepare for, respond to, and recover from natural disasters, serious accidents, crime and terrorism through the convergence of science and technology with policy, operations and intelligence

© Her Majesty the Queen in Right of Canada, as represented by the Minister of National Defence, 2014

© Sa Majesté la Reine (en droit du Canada), telle que représentée par le ministre de la Défense nationale, 2014

Network Address Translation (NAT) Behaviour: Final report

Contract Number: 78820-12-0024 Project Leader: A. Nur Zincir-Heywood

Graduate Students: Yasemin Gokcen Vahid Aghaevi

Date: March 28th, 2013

Network Information Management and Security Group

Faculty of Computer Science Dalhousie University

6050 University Avenue

Halifax, NS B3H 1W5


Contents

Table of Figures and Tables

Acronyms

Abstract

1. Introduction

2. Literature Review

3. NAT Overview

3.1 Translation of the Endpoint

3.2 Visibility of NAT Operations

4. Data Mining Techniques Employed

4.1 C4.5

4.2 Naive Bayes

4.3 Support Vector Machine (SVM)

5. Methodology and Evaluation

5.1 Data Sets Employed

5.2 Passive Fingerprinting Approach

5.2.1 Packet Header Based Features - Time to Live (TTL) and Arrival Time

5.2.2 Packet Payload Based Features - Http User Agent String

5.3 Data Mining Based Approach

5.3.1 No Payload, IP Addresses and Port Numbers – Flow Based Features

5.4 Ground Truth – Labeling of the Data Sets

6. Empirical Evaluation

6.1 Evaluation Results Based On The First Labeling Scheme

6.2 Evaluation Results Based On The Second Labeling Scheme

8. Conclusion and Future Work

References


Table of Figures and Tables

Figure 1 NAT trace collection scenario

Figure 2: Home-Side trace: Consider the Source IP/Port and Destination IP/Port for the “HTTP GET” and the “200 OK HTTP” messages

Figure 3 Home-Side trace: Consider the Source IP/Port and Destination IP/port for the three-way SYN/ACK handshake

Figure 4 ISP-Side trace: Consider the Source IP/Port and Destination IP/port for the “HTTP GET” and the “200 OK HTTP” messages

Figure 5 Home-Side trace: Consider the “Time To Live” and “Checksum” fields for “HTTP GET” message

Figure 6 ISP-Side trace: Consider the “Time To Live” and “Checksum” fields for “HTTP GET” message

Figure 7 ISP-Side trace: Consider the Source IP/Port and Destination IP/port for the three-way SYN/ACK handshake

Figure 8: Construction of a classification tree

Figure 9: An example of Naive Bayes

Figure 10: Maximum margin hyperplanes for a SVM trained with samples from two classes

Figure 11: Propagation Behavior of TTL between IP Header and MPLS Labels

Table 1 Packet Header based features employed, * Normalized by log

Table 2: Packet Header based features employed, * Normalized by log

Table 3: Packet Header based features employed, * Normalized by log

Table 4: Packet Header based features employed, * Normalized by log

Table 5: Flow Based Features Employed

Table 6: Results for the flow-based feature set using the passive fingerprinting approach – Labeling Scheme 1

Table 7: Training Results for the flow-based feature set using the data mining approach – Labeling Scheme 1

Table 8: Test Results on the unseen dataset for the flow-based feature set using the data mining approach – Labeling Scheme 1

Table 9: Results for the flow-based feature set using the passive fingerprinting approach – Labeling Scheme 2

Table 10: Training Results for the flow-based feature set using the data mining approach – Labeling Scheme 2

Table 11: Test Results on the unseen dataset for the flow-based feature set using the data mining approach – Labeling Scheme 2


Acronyms

ACK Acknowledge

Dal Dalhousie University

DNS Domain Name Server

DPI Deep Packet Inspection

DR Detection Rate

DSL Digital Subscriber Line

eAddr External Address

ePort External Port

FN False Negative

FP False Positive

FPR False Positive Rate

FTP File Transfer Protocol

HTTP Hyper Text Transfer Protocol

HTTPS Hyper Text Transfer Protocol Secure

iAddr Internal Address

ID3 Iterative Dichotomiser 3

IP Internet Protocol

iPort Internal Port

ISP Internet Service Provider

LAN Local Area Network

NAPT Network Address and Port Translation

NAT Network Address Translation

OS Operating System

PC Personal Computer

QoS Quality of Service

RFC 1918 A standard; Address Allocation for Private Internets

RFC 2663 A standard; IP Network Address Translator (NAT) Terminology and Considerations

ROC Receiver Operating Characteristic

SRM Structural Risk Minimization

SVM Support Vector Machine

SYN Synchronize

TCP Transmission Control Protocol

TN True Negative

TP True Positive

TTL Time to Live

UDP User Datagram Protocol

WiFi Wireless Fidelity


Abstract

Network Address Translation (NAT) is the mechanism used to modify a packet's IP address information while it is in transit across a network routing device. Because NAT can hide a computer’s or even a network's IP address, identifying the presence of NAT in network traffic is an important task for network management and security. The aim of this work is to identify the presence of NAT in network traffic using different approaches and to evaluate the performance of these approaches under different network environments, represented by the availability of different data fields. To this end, passive fingerprinting and data mining based approaches are used and evaluated under different test conditions. In these experiments, packet header and flow based features are employed without using source and destination IP addresses, source and destination port numbers, or payload information; payload information is also analyzed separately to understand how much performance is gained when it is available. Finally, experiments are performed to identify NAT devices in encrypted as well as non-encrypted traffic.


1. Introduction

Network Address Translation (NAT) devices are very common wherever networked devices such as computers, laptops and mobile phones connect to the Internet. While NAT devices are generally used in local area networks (LAN), which include small groups of computers, they can also be used for just one computer. In home networks, most Internet Service Providers (ISP) give WiFi-enabled NAT home gateways to their users. Thus, when users connect their devices to the Internet, the private IP addresses are hidden from the Internet because the gateway translates them to a public IP address. NAT gateways modify IP address information in IP packet headers in transit. Basically, NAT allows a single device, such as a router, to act as an agent between the Internet and a private network. This means that only a single unique IP address is required to represent an entire group of computers to anything outside their private network.

NATs are used for many reasons, such as the shortage of IPv4 addresses. Since an address is

4 bytes, the total number of available addresses is 2 to the power of 32, i.e. 4,294,967,296. This

represents the number of computers that can be directly connected to the Internet. In practice, the

real limit is much smaller for several reasons. Each physical network has to have a unique

Network Number comprising some of the bits of the IP address. The rest of the bits are used as a

Host Number to uniquely identify each computer on that network. The number of unique

Network Numbers that can be assigned on the Internet is therefore much smaller than 4 billion,

and it is very unlikely that all of the possible Host Numbers in each Network Number are fully

assigned. NAT usage provides one single public IP address for a group of computers and

therefore helps to solve some of the addressing related problems.
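The address arithmetic above can be checked with a short sketch. The helper names and the example network `192.0.2.0/24` (a documentation range) are illustrative assumptions and do not come from the report.

```python
import ipaddress

ADDRESS_BITS = 32  # an IPv4 address is 4 bytes


def total_addresses() -> int:
    """Size of the whole IPv4 address space."""
    return 2 ** ADDRESS_BITS


def host_numbers(prefix_len: int) -> int:
    """Count of Host Number values when prefix_len bits form the Network Number."""
    return 2 ** (ADDRESS_BITS - prefix_len)


# Hypothetical example network, used only to cross-check host_numbers().
example = ipaddress.ip_network("192.0.2.0/24")

print(total_addresses())      # 4294967296
print(host_numbers(24))       # 256
print(example.num_addresses)  # 256
```

A network that assigns only a handful of its 256 Host Numbers still consumes the whole Network Number, which is one reason the practical limit is well below the theoretical total.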


Being represented by a single public IP address on the Internet is advantageous for users. Since their private IP addresses are not seen on the Internet, it is easier for them to keep their systems secure. For home users, personal information such as emails and financial details such as credit card or cheque numbers can be stolen. For business users, the risk is greater, because essential company information such as marketing strategies is at stake. If this kind of information is stolen or accessed in any way, it may cause major privacy and security problems. For these reasons, companies can use firewall technologies to keep their systems safe. Firewalls are placed between the user and the Internet and verify all traffic before allowing it to pass through, so that no unauthorized user is allowed to access the company's file or email server. The problem with firewall solutions is that they are expensive and difficult to set up and maintain for home and small business users.

In this case, NAT becomes a viable alternative. A NAT device is also placed between the

user and the Internet and it automatically protects the systems without any special set-up because

it only allows connections that are originated on the inside network. For instance, an internal

client can connect to an outside FTP server, but an outside client will not be able to connect to an

internal FTP server because it would have to originate the connection, and NAT will not allow

that. It is still possible to make some internal servers available to the outside world by opening

inbound ports, which are well known Transmission Control Protocol (TCP) ports (e.g. 21 for

FTP) to specific internal addresses, thus making services such as FTP or Web available in a

controlled way.

Moreover, NATs make large networks easier for network administrators to manage, because a NAT divides a large network into smaller ones. Groups of computers sit behind NAT devices and, however many computers there are, each group is represented to the outside by one IP address. Therefore, if any change happens within these groups, such as adding or removing IP addresses, it does not affect the outside network.

All these advantages promote NAT usage. However, for the same reasons, NAT technology is also useful for attackers and users who want to hide their real identities. Hence, NAT usage increases in both legitimate and illegitimate environments. Thus, it becomes important to detect the number of devices behind a NAT gateway in order to understand the anomalies in a given system's traffic and usage. Furthermore, it is not possible to obtain information such as the private IP addresses of the devices behind a NAT gateway just by inspecting the traffic. In other words, identifying NAT machines and the devices behind such machines is a very challenging problem.

A NAT gateway has at least two interfaces and it has two different IP addresses for these

two interfaces; namely internal and external IP addresses. The internal IP address is for

communicating with hosts behind the NAT, while the external one is for communicating with the

outside network. Therefore, if anyone from outside of this network wants to analyze the network

traffic, the only information he/she will see will be the traffic between the NAT and the outside

networks rather than the hosts behind the NAT on the internal network. On the internal network,

there might be many devices, which are connected to the Internet behind the NAT and these

devices might have different services installed. However, these will all be opaque when standard

techniques (such as IP address or port number analysis or deep packet inspection) are used to

visualize or analyze such traffic (data). Thus, it is necessary both to understand whether there is a

NAT gateway and to determine the number of hosts behind it to support any quality of service or

security related analysis of network or system traffic.


Therefore, in this research, we study different approaches and evaluate them on different types of data sets to understand their pros and cons. To this end, one approach we investigated is based on the analysis of packet level traces and HTTP user agent information, as studied in [2]. Such an approach is useful when HTTP user agent information is present. For the cases where such information is not available or not accessible, we also analyzed the flow level traffic using data mining techniques. We investigated all these approaches under both encrypted and non-encrypted traffic conditions.


2. Literature Review

Although they are few, some works in the literature focus on the identification of NAT gateways and the number of end users behind such gateways. Different algorithms were proposed, but generally, researchers used passive operating system fingerprinting by analyzing certain parameters within the TCP protocol and evaluated performance in their experimental systems with synthetic NAT data sets.

Bellovin [8] observed that consecutive packets from a host carry sequential values in the IP ID field, which is part of the IP header and is generally implemented as a counter. Therefore, he argued that it is possible to count the strings (runs) of consecutive IP ID values to find the number of hosts. However, he noted that there may be complications, such as packets with zero IP IDs and packets using byte-swapped counters. Also, he did not take recent versions of OpenBSD and FreeBSD into consideration, because they use a pseudo-random number generator for the IP ID field. He proposed an algorithm and tested it with synthetic NAT data.
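The idea of counting IP ID sequences can be illustrated with a deliberately simplified sketch. This is not the algorithm from [8]: it is a greedy grouping that ignores zero IDs, byte-swapped counters and randomized IDs, and the `max_gap` threshold and the function name are assumptions made for illustration.

```python
from typing import Iterable, List

ID_SPACE = 65536  # the IP ID field is 16 bits wide


def count_id_sequences(ip_ids: Iterable[int], max_gap: int = 64) -> int:
    """Greedily group observed IP IDs into increasing sequences.

    Each ID joins the sequence whose last value is closest below it
    (modulo 2**16) within max_gap; otherwise it starts a new sequence.
    The number of sequences is a rough estimate of the number of hosts.
    """
    last_values: List[int] = []  # last IP ID seen in each sequence
    for ip_id in ip_ids:
        best_index, best_gap = None, None
        for index, last in enumerate(last_values):
            gap = (ip_id - last) % ID_SPACE  # handles counter wrap-around
            if 0 < gap <= max_gap and (best_gap is None or gap < best_gap):
                best_index, best_gap = index, gap
        if best_index is None:
            last_values.append(ip_id)
        else:
            last_values[best_index] = ip_id
    return len(last_values)


# Two interleaved counters, as two hosts behind one NAT might produce.
print(count_id_sequences([100, 5000, 101, 5001, 102, 5003]))  # 2
```

A threshold that is too small splits one busy host into several sequences, while one that is too large merges hosts whose counters happen to be close, so any real use would need to tune it against labeled traffic.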

Miller [6] and Phaal [5] both used passive OS fingerprinting. Phaal [5] took advantage of the IP Time to Live (TTL) field, while Miller [6] analyzed tcpdump packets to check certain fields in the TCP/IP header.

Beverly [7] proposed a classifier to infer the operating system passively and find the number of hosts behind a NAT. He used the TTL, Don't Fragment (DF), window size and SYN size parameters. He also took advantage of Bellovin's IP ID approach. According to the results of his evaluations, Bellovin's method performed better at counting hosts behind NAT devices.

On the other hand, Murakami et al. [3] focused on the Medium Access Control (MAC) address of a device and proposed a FreeBSD-based NAT router that relays the MAC addresses of PCs. A NAT has no information about the data link layer because it translates IP addresses in the network layer. So they used two functions: obtaining the source MAC address and overwriting the Ethernet header. They added another mechanism that uses pcap both to obtain MAC addresses and to overwrite Ethernet headers. According to their evaluation, their MAC address relaying NAT router confirmed that a LAN could identify, from outside, PCs that are behind a NAT. However, this requires the use of their specific relaying system.

Ishikava et al. [1] proposed an alternate method to identify PCs behind a NAT router with

proxy authentication on a proxy server. Their target application was WWW. They used the

“realm” attribute in the authentication header for identifying client PCs using their MAC

addresses. In this case, the “realm” attribute is shown to the user as a prompt message. Therefore

their proposed system requires Java Runtime Environment (JRE) on each client PC. They

assumed that a web browser always adds the authentication header to its request message when

authentication has succeeded.

Rui et al. [4] proposed an algorithm based on the Support Vector Machine (SVM)

learning algorithm just to detect the presence of a NAT. Their traces were limited to eight

features and activity values (activeness of a host). They labeled their traffic data as ordinary

hosts and hosts behind a NAT. Then, they applied binary classification.

Maier et al. [2] focused on detecting DSL lines that use NAT to connect to the Internet.

They first aimed to find whether there was a NAT device, and then they aimed to find the

number of users behind that NAT. Additionally, they tried to find how many of those users

connected to the Internet at the same time. Their approach is based on IP TTL and HTTP user

agent strings. They extract the operating system and the browser family and versions from HTTP

user agent strings. Indeed, this necessitates deep packet inspection (DPI) into the payload of a

packet. They analyzed the user agent strings just from typical browsers and they ignored the ones


which came from mobile devices and gaming consoles. They employed two approaches to

predict the minimum number of users behind a NAT. In the first approach, they counted different

<TTL, OS> combinations as distinct hosts. In the second approach, for each <TTL, OS>

combination, they counted the number of different browser versions of the same browser family

as distinct hosts. They did not consider each different browser family as a distinct host. They

found 10% of DSL lines have more than one user active at the same time, and that 20% of the

lines have multiple hosts that are active within one hour of each other.
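The two counting rules described above can be sketched as follows. The observation tuples are made-up sample data, and taking the maximum number of versions over browser families in the second rule is one reading of the description, not a confirmed detail of [2].

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# (TTL, OS, browser family, browser version): hypothetical sample observations
Observation = Tuple[int, str, str, str]

observations: List[Observation] = [
    (127, "Windows", "Firefox", "3.6"),
    (127, "Windows", "Firefox", "4.0"),
    (127, "Windows", "IE", "8.0"),
    (63, "Linux", "Firefox", "3.6"),
]


def count_hosts_ttl_os(obs: List[Observation]) -> int:
    """First approach: every distinct <TTL, OS> combination is one host."""
    return len({(ttl, os_name) for ttl, os_name, _, _ in obs})


def count_hosts_with_versions(obs: List[Observation]) -> int:
    """Second approach: within a <TTL, OS> combination, different versions of
    the same browser family count as distinct hosts; different families do not."""
    versions: Dict[Tuple[int, str], Dict[str, Set[str]]] = defaultdict(
        lambda: defaultdict(set)
    )
    for ttl, os_name, family, version in obs:
        versions[(ttl, os_name)][family].add(version)
    # Assumption: a combination contributes its largest per-family version count.
    return sum(max(len(v) for v in families.values()) for families in versions.values())


print(count_hosts_ttl_os(observations))         # 2
print(count_hosts_with_versions(observations))  # 3
```

Both functions give a lower bound: two identical machines running the same browser version behind one NAT are indistinguishable under either rule.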


In summary, in our research, we reengineered the approach in [2], which seems to be the

best technique in the literature notwithstanding its requirement for payload information, to

understand its pros and cons better. In addition, for the cases where payload information is not

available or opaque (such as encrypted traffic), we use flow based attributes via a classification

system in order to study whether one can learn general enough patterns to represent the behavior

of NAT devices in a given network/system under analysis. This second approach is in some ways

similar to the work in [4]. However, we employ flow features rather than

packet features and we aim to understand NAT behavior to identify its presence in a given traffic

trace and the number of hosts behind such a NAT device.


3. NAT Overview

In computer networking, network address translation (NAT) is the process of modifying

IP address information in IP packet headers while in transit across a traffic routing device. It is

common to hide an entire IP address space, usually consisting of private IP addresses, behind a

single IP address in another (usually public) address space. To avoid ambiguity in the handling

of returned packets, a one-to-many NAT must alter higher-level information such as TCP/UDP

ports in outgoing communications and must maintain a translation table so that return packets

can be correctly translated back. RFC 2663 uses the term NAPT (network address and port

translation) for this type of NAT. Since this is the most common type of NAT, it is often referred

to simply as NAT.

The majority of NATs map multiple private hosts to one publicly exposed IP address. In

a typical configuration, a local network uses one of the designated "private" IP address subnets

(RFC 1918) [24]. A router on that network has a private address in that address space. The router

is also connected to the Internet with a "public" address assigned by an ISP. As traffic passes

from the local network to the Internet, the source address in each packet is translated on the fly

from a private address to the public address. The router tracks basic data about each active

connection (particularly the destination address and port). When a reply returns to the router, it

uses the connection tracking data that it stored during the outbound phase to determine the

private address on the internal network to which to forward the packet.
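As a small illustration of the private address space, the following sketch tests whether an address falls in one of the three RFC 1918 blocks. The function name is an assumption; the two sample addresses are the client and server addresses that appear in the traces of Section 3.1.

```python
import ipaddress

# The three private IPv4 blocks defined by RFC 1918.
RFC1918_BLOCKS = [
    ipaddress.ip_network(block)
    for block in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def is_rfc1918(address: str) -> bool:
    """Return True if the address lies in one of the RFC 1918 private blocks."""
    ip = ipaddress.ip_address(address)
    return any(ip in block for block in RFC1918_BLOCKS)


print(is_rfc1918("192.168.137.2"))   # True: the client behind the home router
print(is_rfc1918("129.173.21.171"))  # False: the public faculty web server
```

A source address of this kind should not appear on the public side of a correctly configured NAT, which is why the translation step is needed before packets leave the local network.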

All Internet packets have a source IP address and a destination IP address. Typically

packets passing from the private network to the public network will have their source addresses

modified while packets passing from the public network back to the private network will have

their destination addresses modified. To avoid ambiguity in how to translate returned packets,


further modifications to the packets are required. The vast bulk of Internet traffic is TCP and

UDP packets, and for these protocols the port numbers are changed so that the combination of IP

and port information on the returned packet can be unambiguously mapped to the corresponding

private address and port information. Once an internal address (iAddr:iPort) is mapped to an

external address (eAddr:ePort), any packets from iAddr:iPort will be sent through eAddr:ePort.

Any external host can send packets to iAddr:iPort by sending packets to eAddr:ePort.

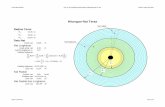

For the purpose of this research, first of all, we observed the behavior of the NAT

protocol in practice. In this case, we captured packets at both the input and the output sides of a

NAT device. To this end, we sent and captured packets from a client PC (at a home network) to

the web server at our faculty, namely www.dal.ca. Within the home network, the home network

router provides a NAT service. Figure 1 shows our Wireshark trace-collection scenario. We have

collected a Wireshark trace on the client PC in our home network. We call it the Home-Side

trace. Because we are also interested in the packets being sent by the NAT router into the ISP,

we have collected a second trace file at a PC on the ISP network, as shown in Figure 1. Client-to-

server packets captured by Wireshark at this point will have undergone NAT translation. The

Wireshark trace captured on the ISP side of the home router is called the ISP-Side trace.

Figure 1 NAT trace collection scenario


3.1 Translation of the Endpoint

NAT ensures that all communications sent to external hosts actually

contain the external IP address and port information of the NAT device instead of the internal

hosts' IP addresses or port numbers.

Figure 2 shows the HTTP GET sent from the client to the faculty server (whose IP

address is 129.173.21.171) at time 0.004374.

Figure 2: Home-Side trace: Consider the Source IP/Port and Destination IP/Port for the “HTTP GET” and the “200 OK HTTP” messages

The following are the source and destination IP addresses and TCP source and destination ports

on the IP datagram carrying this HTTP GET request.

Source IP: 192.168.137.2, Source Port: 1268

Destination IP: 129.173.21.171, Destination Port: 80

At time 0.013089, the corresponding 200 OK HTTP message was received from the faculty server.

The following are the source and destination IP addresses and TCP source and destination ports

on the IP datagram carrying this HTTP 200 OK message.

Source IP: 129.173.21.171, Source Port: 80

Destination IP: 192.168.137.2, Destination Port: 1268

Recall that before a GET command can be sent to an HTTP server, TCP must first set up

a connection using the three-way SYN/ACK handshake. Considering Figure 3, you can find the

following information for SYN and ACK messages:

SYN Time: 0.002799

SYN Source IP: 192.168.137.2, Source Port: 1268

SYN Destination IP: 129.173.21.171, Destination Port: 80

ACK Time: 0.004032

ACK Source IP: 129.173.21.171, Source Port: 80

ACK Destination IP: 192.168.137.2, Destination Port: 1268


Figure 3 Home-Side trace: Consider the Source IP/Port and Destination IP/port for the three-way SYN/ACK handshake

In the following, we will focus on the two HTTP messages (GET and 200 OK) and the

TCP SYN and ACK segments identified above in the ISP-Side trace captured on the ISP

network. Because these captured frames have already been forwarded through the NAT router,

we are going to show that some of the IP addresses and port numbers have been changed as a

result of the NAT translation.

Note that the time stamps in ISP-Side and Home-Side traces are not synchronized since

the packet captures at the two locations shown in Figure 1 were not started simultaneously.

(Indeed, you can find that the timestamp of a packet captured at the ISP network is actually

larger than the timestamp of the same packet captured at the client PC.)

Consider Figure 4, the NAT ISP-Side trace. The HTTP GET message has been sent from

the client to the faculty server at time 0.495146. The source and the destination IP addresses and

TCP source and destination ports on the IP datagram carrying this HTTP GET are as

follows:

Source IP: 129.173.67.98, Source Port: 61947


Destination IP: 129.173.21.171, Destination Port: 80

As you can see, in comparison to Figure 2, the destination IP and port have not been

changed, but the source IP and port have been translated by the NAT router.
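The comparison between the two traces can be expressed as a short sketch. The endpoint values are the ones reported above for the HTTP GET; the `Endpoints` type and the `changed_fields` helper are illustrative names, not part of any capture tool.

```python
from typing import List, NamedTuple


class Endpoints(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


# HTTP GET as seen before and after the NAT router (values from the two traces).
home_side = Endpoints("192.168.137.2", 1268, "129.173.21.171", 80)
isp_side = Endpoints("129.173.67.98", 61947, "129.173.21.171", 80)


def changed_fields(before: Endpoints, after: Endpoints) -> List[str]:
    """List the endpoint fields that differ between two captures of a packet."""
    return [
        name
        for name in before._fields
        if getattr(before, name) != getattr(after, name)
    ]


print(changed_fields(home_side, isp_side))  # ['src_ip', 'src_port']
```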

Figure 4 ISP-Side trace: Consider the Source IP/Port and Destination IP/port for the “HTTP GET” and the “200 OK HTTP” messages

Our observations show that when a computer on the private (internal) network sends a

packet to the external network, the NAT device replaces the internal IP address in the source

field of the packet header (sender's address) with the external IP address of the NAT device.

NAT may then assign the connection a port number from a pool of available ports, inserting this

port number in the source port field (much like the post office box number), and forwards the

packet to the external network. The NAT device then makes an entry in a translation table

containing the internal IP address, original source port, and the translated source port.

Subsequent packets from the same connection are translated to the same port number. The

computer receiving a packet that has undergone the NAT device translation establishes a


connection to the port and IP address specified in the altered packet, oblivious to the fact that the

supplied address is being translated (analogous to using a post office box number).

A packet coming from the external network is mapped to a corresponding internal IP

address and the port number from the translation table, replacing the external IP address and the

port number in the incoming packet header. The packet is then forwarded over the inside

network. Otherwise, if the destination port number of the incoming packet is not found in the

translation table, the packet is dropped or rejected because the NAT device does not know where

to send it.
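The outbound and inbound translation steps described above can be sketched with a minimal translation table. This is an illustration under simplifying assumptions: the class and method names are hypothetical, ports are handed out sequentially from an arbitrary starting value, entries never expire, and pool exhaustion is not handled. The addresses and ports reuse the values observed in the traces.

```python
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


class NaptTable:
    """Toy NAPT translation table mapping iAddr:iPort to eAddr:ePort."""

    def __init__(self, external_ip: str, first_port: int = 61947) -> None:
        self.external_ip = external_ip
        self.next_port = first_port  # assumption: sequential port allocation
        self.outbound: Dict[Endpoint, int] = {}
        self.inbound: Dict[int, Endpoint] = {}

    def translate_outbound(self, source: Endpoint) -> Endpoint:
        """Rewrite an internal source endpoint, creating a table entry if needed."""
        if source not in self.outbound:
            port = self.next_port
            self.next_port += 1
            self.outbound[source] = port
            self.inbound[port] = source
        return (self.external_ip, self.outbound[source])

    def translate_inbound(self, destination_port: int) -> Optional[Endpoint]:
        """Look up the internal endpoint; None means the packet is dropped."""
        return self.inbound.get(destination_port)


nat = NaptTable("129.173.67.98")
print(nat.translate_outbound(("192.168.137.2", 1268)))  # ('129.173.67.98', 61947)
print(nat.translate_outbound(("192.168.137.2", 1268)))  # same mapping is reused
print(nat.translate_inbound(61947))                     # ('192.168.137.2', 1268)
print(nat.translate_inbound(40000))                     # None: no entry, so dropped
```

Keeping two dictionaries makes both directions a constant-time lookup, at the cost of having to update them together whenever an entry is added or removed.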

Most importantly, in addition to the IP address and the Port fields, two more fields in the

IP datagram have also been changed, Time to Live (TTL) and Checksum. Consider Figure 5 and

Figure 6 to compare the TTL and Checksum fields of the HTTP GET message in the Home-Side

and the ISP-Side traces.

Figure 5 Home-Side trace: Consider the “Time To Live” and “Checksum” fields for “HTTP GET” message


Figure 6 ISP-Side trace: Consider the “Time To Live” and “Checksum” fields for “HTTP GET” message

The TTL value can be thought of as an upper bound on the time that an IP datagram can
exist in an Internet system. The TTL field is set by the sender of the datagram and reduced by
every router on the route to its destination. The purpose of the TTL field is to avoid a situation in
which an undeliverable datagram keeps circulating on an Internet system until the system is
eventually swamped by such "immortals". Under IPv4, every host that passes the
datagram must reduce the TTL by one unit. In practice, the TTL field is reduced by one at every
hop (router/gateway), including NAT devices (most of the time). In this case, we also
observed that the TTL has been decreased by one in Figure 6. It should be noted that a NAT
device, like any other network device, can be configured not to decrease the TTL value.
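Both header changes can be reproduced with a small sketch. The IPv4 header checksum covers the TTL and the address fields, so decrementing the TTL and rewriting the source address necessarily yields a different checksum. The header field values and the internal address 192.168.1.10 below are illustrative assumptions, not values read from the traces.

```python
import struct


def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement sum of 16-bit words, as used for the IPv4 header."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_header(ttl: int, src: bytes, dst: bytes) -> bytes:
    """Build a minimal 20-byte IPv4 header (assumed field values) with a valid checksum."""
    # version/IHL, TOS, total length, id, flags/fragment, TTL, protocol (6 = TCP), checksum = 0
    fields = [0x45, 0, 40, 0, 0, ttl, 6, 0]
    header = struct.pack("!BBHHHBBH4s4s", *fields, src, dst)
    checksum = internet_checksum(header)
    return header[:10] + struct.pack("!H", checksum) + header[12:]


server = bytes([129, 173, 21, 171])
home_side = build_header(ttl=128, src=bytes([192, 168, 1, 10]), dst=server)  # before the NAT
isp_side = build_header(ttl=127, src=bytes([129, 173, 67, 98]), dst=server)  # after the NAT

print(home_side[10:12].hex(), isp_side[10:12].hex())  # the two checksums differ
print(internet_checksum(isp_side) == 0)               # a valid header sums to zero
```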

Regarding the checksum value, the major transport layer protocols, TCP and UDP, have a

checksum that covers all the data they carry, as well as the TCP/UDP header, plus a "pseudo-

header" that contains the source and destination IP addresses of the packet carrying the


TCP/UDP header. For an originating NAT to pass TCP or UDP successfully, it must re-compute

the TCP/UDP header checksum based on the translated IP addresses, not the original ones, and

put that checksum into the TCP/UDP header of the first packet of the fragmented set of packets.

The receiving NAT must re-compute the IP checksum on every packet it passes to the

destination host, and also recognize and re-compute the TCP/UDP header using the retranslated

addresses. In the ISP-Side trace file, Figure 7, you can find the following information for the

three-way SYN/ACK handshake:

SYN Time: 0.493603

SYN Source IP: 129.173.67.98, Source Port: 61947

SYN Destination IP: 129.173.21.171, Destination Port: 80

ACK Time: 0.494453

ACK Source IP: 129.173.21.171, Source Port: 80

ACK Destination IP: 129.173.67.98, Destination Port: 61947

Comparing Figure 7 and Figure 3, you can see that the source IP and the port in the SYN message
(direction from the home side to the ISP side) and the destination IP and the port in the ACK
message (direction from the ISP side to the home side) have been translated by the NAT router.


Figure 7 ISP-Side trace: Consider the Source IP/Port and Destination IP/port for the three-way SYN/ACK handshake

3.2 Visibility of NAT Operations

Typically the internal host is aware of the true IP address and the TCP/UDP port of the

external host. The NAT device may function as the default gateway for the internal host.

However, the external host is only aware of the public IP address for the NAT device and the

particular port being used to communicate on behalf of a specific internal host.

As discussed above, NAT only translates the IP addresses and ports of its internal hosts,
possibly decreasing the TTL value by one, and re-computes the checksum. This translation
hides the true endpoint of an internal host on a private network. Because the internal addresses
are all disguised behind one publicly accessible address, it is impossible for the external hosts to
differentiate between traffic that originated from a network behind a NAT and traffic that did
not. As a result, these networks are ideal for attackers to hide their identities. If an attacker hides
his/her identity behind a NAT device, it is very difficult to find the exact attacker node.

Thus, a mechanism for identifying NAT traffic is needed, as attackers behind NAT
devices can easily violate network security. This is the main purpose of this study.
However, achieving this purpose is not possible using typical network traffic analysis
techniques, because NAT only translates the IP addresses and ports of its internal hosts, possibly
decreasing the TTL value, and re-computes the checksum. In short, we use a combination of
techniques including machine learning (data mining) algorithms to achieve this goal.


4. Data Mining Techniques Employed

In this research, we have employed a data mining (machine learning) based approach to
find whether a NAT box exists in a network or not. To this end, we have employed a decision
tree system, namely C4.5, a function system, namely Support Vector Machine (SVM), and a
probabilistic system, namely Naive Bayes. The following subsections summarize these techniques.

4.1 C4.5

C4.5 is a decision tree based classification algorithm developed by Ross Quinlan that is
an extension of the basic ID3 algorithm [9]. C4.5 is designed to address issues that are not dealt
with in ID3, such as choosing the appropriate attribute (based on information gain), pruning the
tree to reduce error, and handling various attribute types (continuous, numeric, string).

A decision tree is a hierarchical data structure for implementing a divide-and-conquer
strategy. It is an efficient non-parametric method that can be used for both classification and
regression. C4.5 constructs decision trees from a set of training data using the concept of
information entropy. The training data is a set, S, of already classified samples. Each sample
(record) in the set is represented as a vector, and each entry in the vector is an attribute (feature)
of the sample. The training data is accompanied by a vector whose entries give the class to which
each sample belongs. C4.5 splits the data into smaller subsets using the fact that each attribute of
the data can be used to make a decision; the attribute with the highest information gain is used to
make the split. As a result, the input space is divided into local regions defined by a distance
metric. In a decision tree, the local region is identified in a sequence of recursive splits in a small
number of steps. A decision tree is composed of internal decision nodes and terminal leaves.
Each node, m, implements a test function f_m(x) with discrete outcomes labeling the branches.
Given an input, the test is applied at each node and one of the branches is taken depending on
the outcome. This process starts at the root and is repeated until a leaf node is hit. The value of a
leaf node constitutes the output. In the case of a decision tree for classification, the goodness of a
split is quantified by an impurity measure. A split is pure if, for every branch, all instances
choosing that branch belong to the same class after the split. One possible function to measure
impurity is entropy, Eq. (1) [10].

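$$ I_m = -\sum_{i=1}^{K} p_m^i \log_2 p_m^i \qquad (1) $$

where p_m^i is the fraction of the training instances reaching node m that belong to class i, and K is the number of classes.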

If the split is not pure, then the instances should be split to decrease impurity, and there

are multiple possible attributes on which a split can be done. Indeed, this is locally optimal;

hence, there is no guarantee of finding the smallest decision tree. In this case, the total impurity

after the split can be measured by Eq. (2) [10]. In other words, when a tree is constructed, at each

step the split that results in the largest decrease in impurity is chosen. This is the difference

between the impurity of data reaching node m, Eq. (1), and the total entropy of data reaching its

branches after the split, Eq. (2). Figure 8 presents the construction of a classification tree. A more

detailed explanation of C4.5 algorithm can be found in [10].

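$$ I'_m = -\sum_{j=1}^{n} \frac{N_{mj}}{N_m} \sum_{i=1}^{K} p_{mj}^i \log_2 p_{mj}^i \qquad (2) $$

where N_m instances reach node m, N_{mj} of them take branch j of the n branches, and p_{mj}^i is the fraction of those N_{mj} instances that belong to class i of the K classes.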
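The impurity measures referred to as Eq. (1) and Eq. (2) can be illustrated with a short sketch. It computes the entropy of the class labels reaching a node, the size-weighted entropy after a candidate split, and their difference (the decrease in impurity). The function names and the toy labels are assumptions for illustration only; this is not the C4.5 implementation used in the experiments.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Impurity of the class labels reaching a node (entropy)."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def split_impurity(branches):
    """Total impurity after a split: branch entropies weighted by branch size."""
    n = sum(len(b) for b in branches)
    return sum(len(b) / n * entropy(b) for b in branches if b)


def impurity_decrease(labels, branches):
    """Difference between the impurity before and after the split."""
    return entropy(labels) - split_impurity(branches)


labels = ["NAT", "NAT", "OTHER", "OTHER"]
pure = [["NAT", "NAT"], ["OTHER", "OTHER"]]    # each branch holds a single class
mixed = [["NAT", "OTHER"], ["NAT", "OTHER"]]   # each branch is as impure as the parent

print(impurity_decrease(labels, pure))    # the pure split removes all impurity
print(impurity_decrease(labels, mixed))   # the uninformative split removes none
```

At each step a tree builder would evaluate candidate splits this way and keep the one with the largest decrease.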


Figure 8: Construction of a classification tree

4.2 Naive Bayes

A Naive Bayes classifier is a simple probabilistic classifier based on applying Bayes'

theorem (from Bayesian statistics) with strong (naive) independence assumptions. In simple

terms, a naive Bayes classifier assumes that the presence (or absence) of a particular feature of a

class is unrelated to the presence (or absence) of any other feature. Depending on the precise

nature of the probability model, Naive Bayes classifiers can be trained efficiently in a supervised

learning approach. In many practical applications, parameter estimation for Naive Bayes models

uses the method of maximum likelihood [11]. A simple Naive Bayes probabilistic model can be

expressed as Eq. (3) in the following:

$$ p(C \mid F_1, F_2, \ldots, F_n) = \frac{1}{Z}\, p(C) \prod_{i=1}^{n} p(F_i \mid C), \qquad (3) $$

where p(C | F_1, F_2, …, F_n) is the probabilistic model over the dependent class variable C with a small
number of outcomes or classes, conditional on several feature variables F_1 through F_n; Z is a
scaling factor dependent only on F_1, F_2, …, F_n, i.e., a constant if the values of the feature variables
are known. A Naive Bayes classifier combines the probabilistic model with a decision rule that
aims to maximize the posterior; thus the classifier can be defined using Eq. (4) as follows:

$$ \mathrm{classify}(f_1, f_2, \ldots, f_n) = \arg\max_{c}\; p(C = c) \prod_{i=1}^{n} p(F_i = f_i \mid C = c) \qquad (4) $$

An advantage of the naive Bayes classifier is that it only requires a small amount of training data

to estimate the parameters (means and variances of the variables) necessary for classification.

Because independent variables are assumed, only the variances of the variables for each class

need to be determined and not the entire covariance matrix.
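The decision rule of Eq. (4) can be sketched for categorical features as follows. Probabilities are estimated by relative frequencies (maximum likelihood), and the scaling factor Z is omitted because it is the same for every class. The feature names and the toy training set are hypothetical, and this sketch omits smoothing and the Gaussian (mean and variance) estimates mentioned above.

```python
from collections import Counter, defaultdict


def train(samples, labels):
    """Estimate class counts and per-class feature value counts."""
    class_counts = Counter(labels)
    feature_counts = defaultdict(Counter)  # (class, feature index) -> value counts
    for features, label in zip(samples, labels):
        for i, value in enumerate(features):
            feature_counts[(label, i)][value] += 1
    return class_counts, feature_counts


def classify(model, features):
    """Pick the class c maximizing p(C=c) * prod_i p(F_i=f_i | C=c)."""
    class_counts, feature_counts = model
    total = sum(class_counts.values())

    def score(c):
        p = class_counts[c] / total
        for i, value in enumerate(features):
            p *= feature_counts[(c, i)][value] / class_counts[c]
        return p

    return max(class_counts, key=score)


# Hypothetical toy data: (TTL bucket, OS family) -> label
samples = [("low", "win"), ("low", "mac"), ("high", "win"), ("high", "win")]
labels = ["NAT", "NAT", "OTHER", "OTHER"]
model = train(samples, labels)

print(classify(model, ("low", "win")))
print(classify(model, ("high", "win")))
```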

Figure 9: An example of Naive Bayes

Figure 9 shows an example of Naive Bayes. Naive Bayes is the simplest form of

Bayesian network. All attributes are independent given the value of class variable. This is called

conditional independence. The conditional independence assumption is not often true in the real

world problems.

The authors of [12] aimed to show that the Naive Bayes classifier could have been
used successfully to choose who would reply to the mailing in the 1998 KDD Cup data. They
evaluated their tests and explained the time and space complexities of Naive Bayes with
graphs. The time complexity for learning a Naive Bayes classifier is O(Np), where N is the number
of training examples and p is the number of features. The space complexity of the Naive Bayes
algorithm is O(pqr), where p is the number of features, q is the number of values for each feature,
and r is the number of alternative values for the class.

4.3 Support Vector Machine (SVM)

The original SVM algorithm was invented by Vladimir N. Vapnik and the current

standard incarnation (soft margin) was proposed by Vapnik and Corinna Cortes in 1995 [15].

Classifying data is a common task in machine learning. Suppose some given data points each

belong to one of two classes, and the goal is to decide which class a new data point will be in. In

the case of support vector machines, a data point is viewed as a p-dimensional vector (a list

of p numbers), and we want to know whether we can separate such points with a (p − 1)-dimensional hyperplane. This is called a linear classifier. There are many hyperplanes that might

classify the data. One reasonable choice as the best hyperplane is the one that represents the

largest separation, or margin, between the two classes. So we choose the hyperplane so that the

distance from it to the nearest data point on each side is maximized. If such a hyperplane exists,

it is known as the maximum-margin hyperplane and the linear classifier it defines is known as

a maximum margin classifier; or equivalently, the perceptron of optimal stability [13].

We consider data points of the following form:

$$ \{(x_1, y_1), (x_2, y_2), (x_3, y_3), (x_4, y_4), \ldots, (x_n, y_n)\}, \qquad (5) $$

where y_i is either 1 or −1, a constant denoting the class to which the point x_i belongs, and n is the
number of samples. Each x_i is a p-dimensional real vector. Scaling is important to guard
against variables (attributes) with larger variance. We can view this training data by means of
the dividing hyperplane, which takes the form

$$ w \cdot x + b = 0, \qquad (6) $$

where b is a scalar and w is a p-dimensional vector. The vector w points perpendicular to the
separating hyperplane. Adding the offset parameter b allows the margin to be increased. In the
absence of b, the hyperplane is forced to pass through the origin, restricting the solution. As we
are interested in the maximum margin, we are interested in the SVM and the parallel hyperplanes.
The parallel hyperplanes can be described by Eq. (7) [13]:

$$ w \cdot x + b = 1 $$

$$ w \cdot x + b = -1 \qquad (7) $$

A special property of SVM is that it simultaneously minimizes the empirical
classification error and maximizes the geometric margin. So SVM can be considered a
Maximum Margin Classifier. SVM is based on Structural Risk Minimization (SRM). SVM
maps an input vector to a higher dimensional space where a maximally separating hyperplane is
constructed. Two parallel hyperplanes are constructed, one on each side of the hyperplane that
separates the data. The separating hyperplane is the hyperplane that maximizes the distance
between the two parallel hyperplanes. An assumption is made that the larger the margin or
distance between these parallel hyperplanes, the better the generalization error of the classifier
will be [14].
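The geometry of the separating and parallel hyperplanes can be made concrete with a small sketch. For a given w and b, a point is classified by the sign of w.x + b, and the distance between the two parallel hyperplanes w.x + b = 1 and w.x + b = -1 is 2/||w||. The values of w and b below are hand-picked for illustration; they are assumptions, not the output of an SVM trained on the report's data.

```python
from math import sqrt


def decision_value(w, b, x):
    """Value of w.x + b; zero on the separating hyperplane."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    """Class label in {1, -1} given by the side of the hyperplane."""
    return 1 if decision_value(w, b, x) >= 0 else -1


def margin_width(w):
    """Distance between the hyperplanes w.x + b = 1 and w.x + b = -1."""
    return 2 / sqrt(sum(wi * wi for wi in w))


w, b = (1.0, 1.0), -3.0               # hypothetical hyperplane x1 + x2 - 3 = 0
on_plus = (3.0, 1.0)                  # lies on w.x + b = 1
on_minus = (1.0, 1.0)                 # lies on w.x + b = -1

print(decision_value(w, b, on_plus), decision_value(w, b, on_minus))
print(predict(w, b, (4.0, 4.0)), predict(w, b, (0.0, 0.0)))
print(margin_width(w))
```

Because the margin is 2/||w||, maximizing the margin is equivalent to minimizing the norm of w subject to the training points lying on or outside the two parallel hyperplanes.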


Figure 10: Maximum margin hyperplanes for a SVM trained with samples from two classes


5. Methodology and Evaluation

As discussed earlier, in this work, we considered and evaluated two different approaches,

namely a data mining approach and a passive operating system fingerprinting approach that analyzes

specific parameters within the TCP protocol. For the data mining approach, we employed the

classification models, C4.5, Naïve Bayes and SVM learning techniques introduced in section 4.

As for the passive fingerprinting approach, we re-engineer and employ the algorithm introduced

by Maier et al. [2]. For both approaches we used exactly the same data sets including both the

encrypted and the non-encrypted traffic. In doing so, we aim to understand the behavior of NAT

both with and without the payload information. Moreover, for the first approach, we analyzed the

use of both the packet-only and the flow-only features to detect the presence of NAT devices.

The following describes the data sets and experiments performed in this work.

5.1 Data Sets Employed

In this research, we employed traffic data sets from our faculty’s web server in the form

of TCP dump files, which did not have any payload, as well as the corresponding web server

access log files, which have the payload. The data was collected over a week in November 2012

and data sets have both encrypted and non-encrypted traffic.

In total, there are 533647 flows in our dataset. All these flows were matched with the web

access log data files which are encrypted (HTTPS) and non-encrypted (HTTP). We divided

(detailed in section 5.4) these flows into two categories: (i) NAT flows; and (ii) OTHER flows.

However, some flows did not have valid user agent strings. This means that some user agent

strings did not include OS information and some of them did not include browser related

information. We consider the flows that have these types of user agents in the OTHER category.


In the whole dataset, 95 different OSs and 105 different browser families with their versions

exist.

Furthermore we know that in our own faculty we have a NAT device for some of the

labs. Indeed, computers from these labs (behind the NAT device) access the web server (where

we collected the data sets). Thus, the choice of these data sets enables us to derive some ground

truth information about the presence of NAT devices in the data sets, too.

5.2 Passive Fingerprinting Approach

In this case, we first introduced the features used in the passive fingerprinting approach

that we adopted from Maier et al. [2] and re-engineered to use in our work. In applying this

approach certain features are used to identify a NAT device. Some of these features require the

payload to be known otherwise they cannot be extracted from the data set. Other features do not

have that requirement. We detail these features below.

5.2.1 Packet Header Based Features - Time to Live (TTL) and Arrival Time

It is known that networking stacks of operating systems use well-defined initial IP TTL

values (ttlinit) in outgoing packets. For instance, Windows uses 128, MacOS uses 64 and Debian

based systems use 64, too. The TTL field of the IP header is defined to be a timer limiting the

lifetime of a datagram. It is an 8-bit field and the units are in seconds. Each router (or other

modules) that handles a packet must decrement the TTL by at least one, even if the elapsed time

was much less than a second. Since this is very often the case, in effect, the TTL value serves as a hop count limit on how far a datagram can propagate through the Internet, as shown in Figure 11 [18]. When a router forwards a packet, it must reduce the TTL by at least one. If it

holds a packet for more than one second, it may decrement the TTL by one for each second.


Therefore, it is expected that if there is a NAT box routing in the network, it will decrement the

TTL value of each packet that passes through it. However, it is possible for a NAT box not

to decrement TTL values. Moreover, users could reconfigure their systems to use a different

TTL. In this case, we cannot detect accurately whether there is a NAT box or not by solely

relying on TTL counts.

However, assuming that TTL values are not modified or hidden, these TTL values in the

packets enable us to infer the presence of NAT. If the TTL is ttlinit-1, this means that the sending

host is directly connected to the Internet, so the monitoring point is one hop away from the host.

If the TTL is ttlinit-2 then there is a routing device such as a NAT gateway.
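The TTL rule above can be sketched as a small function. It guesses ttlinit as the smallest common initial value that is not below the observed TTL and derives the hop count from the difference. The values 64 and 128 come from the text; 255 is an added assumption, as is treating any difference of two or more as evidence of a routing device. As noted above, the inference fails if TTL values are modified.

```python
# 64 and 128 are named in the text; 255 is an assumption added for completeness.
COMMON_INITIAL_TTLS = (64, 128, 255)


def infer_hops(observed_ttl):
    """Return (guessed ttlinit, number of hops the packet has traversed)."""
    ttl_init = min(t for t in COMMON_INITIAL_TTLS if t >= observed_ttl)
    return ttl_init, ttl_init - observed_ttl


def behind_routing_device(observed_ttl):
    """ttlinit-1: host is directly connected; ttlinit-2 or lower: a router/NAT is in the path."""
    _, hops = infer_hops(observed_ttl)
    return hops >= 2


for ttl in (127, 126, 54):
    print(ttl, infer_hops(ttl), behind_routing_device(ttl))
```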

A NAT gateway can be a dedicated gateway such as a home router or it can be a regular

desktop or notebook. A dedicated NAT gateway will often directly interact with the Internet

services, e.g., by serving as DNS resolver for the local network or for synchronizing its time with

NTP servers. Moreover they generally do not use web (HTTP). It should be noted here that in

our datasets we cannot see any DNS records originated by the known NAT devices.

Figure 11: Propagation Behavior of TTL between IP Header and MPLS Labels


In addition to the TTL feature, the arrival time feature is also used. The "Arrival Time" feature reflects the timestamp recorded by the station that captures the traffic when the packet arrives. This field is only as accurate as the clock on the receiving station. Packet

captures from Windows systems are only represented with accuracy in seconds. In this work, we

use arrival times to match packets with access log data and flow based data. We will explain this

process in more detail in section 5.3.

In short, TTL and arrival time features embody the two features of the passive

fingerprinting approach that do not require any payload information. In other words, no deep

packet inspection is necessary to extract these features from the traffic.

5.2.2 Packet Payload Based Features - HTTP User Agent String

The user agent string identifies the browser that the user uses to access the web. When a

user visits a webpage, his/her browser sends the user-agent string to the server hosting the site

that is visited. This string indicates which browser the user is using, its version number, and

details about the user’s system, such as the operating system and its version.

We parsed the HTTP log files (access log files of the web server) and analyzed the user

agent strings. We extracted the OS and browser information from these strings to estimate a

lower bound for the number of hosts behind a NAT gateway. Maier et al. [2] limited their

analysis to user agent strings from typical browsers such as Firefox, Internet Explorer, Safari and

Opera.

However, we did not limit ourselves to the typical browsers, because in our data sets,

we also observed many user agent strings from Android based devices, iPhones and iPads.

Therefore, we took them into consideration in our study, too. We will discuss this in more detail

in the following sub-sections.


5.2.2.1 User Agent String - OS Only

As we mentioned in section 5.2.1, it is possible to detect whether there is a NAT gateway

or not by analyzing the TTL values (assuming TTL values are not modified). For instance, if the

TTL value of a packet is 125, which belongs to a Windows system with an initial TTL of 128,

we can say that this computer is behind a routing device such as a NAT gateway.

Furthermore, in this case, in order to predict (calculate) the number of users behind a

NAT, we need to utilize the OS information, which belongs to the hosts. Thus, we can use a

heuristic as: Different <TTL, OS> combinations represent distinct hosts. Then, we can calculate

the number of different combinations and use this number to predict the number of users behind

a NAT. An example for this is shown in Table 1. In this example, this approach predicts a NAT

device’s presence with three users behind it. However, there might be many users using the same

combination, too. In this approach, they will be counted as one.

Table 1 Packet Header based features employed, * Normalized by log

From Packet Header: TTL, Proto. From HTTP User Agent: OS, Family, Version.

TTL | Proto | OS | Family | Version
54 | 80/HTTP | Intel Mac OS X 10.5 | Firefox | 3.05
54 | 80/HTTP | Windows NT 5.1 | Internet Explorer | 6.0
54 | 80/HTTP | Windows NT 5.1 | Firefox | 2.0
54 | 80/HTTP | Windows NT 5.1 | Firefox | 3.0.3
54 | 80/HTTP | Windows NT 6.0 | Internet Explorer | 7.0
54 | 80/HTTP | Windows NT 6.0 | Firefox | 3.0.5
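The <TTL, OS> heuristic of this section amounts to counting distinct pairs. A minimal sketch using the rows of Table 1 follows; the tuple layout and the function name are illustrative assumptions.

```python
# Rows of Table 1 as (TTL, OS, browser family, browser version).
table1 = [
    (54, "Intel Mac OS X 10.5", "Firefox", "3.05"),
    (54, "Windows NT 5.1", "Internet Explorer", "6.0"),
    (54, "Windows NT 5.1", "Firefox", "2.0"),
    (54, "Windows NT 5.1", "Firefox", "3.0.3"),
    (54, "Windows NT 6.0", "Internet Explorer", "7.0"),
    (54, "Windows NT 6.0", "Firefox", "3.0.5"),
]


def count_hosts_ttl_os(rows):
    """Lower bound on the number of hosts: distinct <TTL, OS> combinations."""
    return len({(ttl, os_name) for ttl, os_name, _family, _version in rows})


print(count_hosts_ttl_os(table1))  # 3
```

Because hosts that share the same TTL and OS collapse into one pair, the result is a lower bound rather than an exact count.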

5.2.2.2 User Agent String - OS and Browser Version

In this case, in addition to the OS information, we can also extract and count the number
of different browser versions to predict the number of users behind a NAT device. This time, we
use the following heuristic: if the OSs are the same and the browser families are the same but the
browser versions are different, then we count these as two distinct hosts. The rationale for this is
that it is not possible to install different versions of a browser family on the same computer. For
example, in Table 1, the TTL values are the same for each packet, while there are different OSs
such as Windows NT 5.1, Windows NT 6.0 and Intel Mac OS X 10.5. Two of the packets with
the Windows NT 5.1 OS employ browsers from the same family, Firefox. Their TTLs, OSs and
browser families are the same. According to the previous approach, we would count these two
packets as belonging to one host. However, if we take the browser versions into consideration,
then the conclusion could be different. In this example, they use different Firefox versions, so
using our heuristic above, we can conclude that these packets belong to two distinct hosts.

In summary, if we take all combinations in Table 1 into consideration, there are three
Windows NT 5.1 packets with the same TTL value but two different browser families (types) and
different versions. The two Windows NT 6.0 packets with the same TTL value have different
browser families. This can mean two different users or one user with two different browsers.
Finally, the packet with the Intel Mac OS X 10.5 OS might belong to a different host (user) or to
the same host (user) with dual OSs. In this case, we assume that two different versions of the
Windows OS (NT 5.1 and NT 6.0) would not be installed on the same machine, and that two
different versions of the same browser would not be installed on the same machine either.
Therefore, we count three hosts by analyzing these packets using our heuristics presented above.
However, if the TTL values were different, then we could say that those packets belong to
different hosts. For instance, in Table 2 there are three hosts, and in Table 3 we count two
distinct hosts.


Table 2: Packet Header based features employed, * Normalized by log

From Packet Header: TTL, Proto. From HTTP User Agent: OS, Family, Version.

TTL | Proto | OS | Family | Version
56 | 80/HTTP | Intel Mac OS X 10_8_2 | Safari | 6.0.2
119 | 80/HTTP | Windows NT 6.2 | Google Chrome | 22.0
120 | 80/HTTP | Windows NT 6.2 | Google Chrome | 22.0

Table 3: Packet Header based features employed, * Normalized by log

From Packet Header: TTL, Proto. From HTTP User Agent: OS, Family, Version.

TTL | Proto | OS | Family | Version
56 | 80/HTTP | Intel Mac OS X 10_7_5 | Safari | 6.0.1
119 | 80/HTTP | Windows NT 6.1 | Firefox | 11.0
119 | 80/HTTP | Windows NT 6.1 | Internet Explorer | 9.0

Table 4: Packet Header based features employed, * Normalized by log

From Packet Header: TTL, Proto. From HTTP User Agent: OS, Family, Version.

TTL | Proto | OS | Family | Version
48 | 80/HTTP | Android 2.2.x Froyo | Safari | 6.0.1
48 | 80/HTTP | Android 2.0/1 Eclair | Firefox | 11.0
48 | 80/HTTP | Windows NT 6.1 | Internet Explorer | 7.0

In this work, we also use the packets that belong to Android and other mobile devices,
whereas mobile devices were not taken into consideration in previous works. In our data sets
(network traces), we observed many records that belonged to mobile devices, including a large
number of Android devices, iPhones and iPads. When we analyze their user agent strings, we
can also see the device models, such as Nexus One and Samsung SGH-1896. As we show in
Table 4, in this case, we count three different mobile hosts (users).

5.3 Data Mining Based Approach

In this section, we introduce the features applied in the data mining based approach. The
learning techniques themselves were introduced in section 4. In this approach, we only employ
features (attributes) that are based on flow statistics. We do not use payload information, IP
addresses and/or port numbers as features. The reasons behind this are as follows: one may not
have access to payload information, or the payload may be encrypted and therefore opaque.
Moreover, IP addresses can be spoofed and port numbers can be dynamically assigned. In short,
such features can be biased. In some ways, one can say that NATs and proxies are already doing
this for free. Thus, our aim here is to generate fingerprints (in other words, signatures)
automatically without using any biased features. We detail the features of this approach below.

5.3.1 No Payload, IP Addresses and Port Numbers – Flow Based Features

As a different approach, we converted our packet-based dataset to a flow-based dataset.

To this end, NetMate [16] was employed to generate flows and compute features as shown in

Table 5 below. Flows are bidirectional and the first packet seen by the tool determines the

forward direction. We consider only UDP and TCP flows. UDP flows are terminated by a flow

timeout, whereas TCP flows are terminated upon proper connection teardown or by a flow

timeout, whichever occurs first. The flow timeout value employed in this work is 600 seconds as

recommended by the IETF [17].
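The flow construction rules above can be sketched as follows. This is a simplified illustration and not NetMate's implementation: packets are grouped by a direction-independent key, the first packet seen sets the forward direction, and a gap longer than the 600 second timeout starts a new flow. Treating the timeout as an idle timeout and ignoring TCP connection teardown are assumptions of this sketch, and the addresses are hypothetical.

```python
FLOW_TIMEOUT = 600.0  # seconds, the timeout value used in this work


def assign_flows(packets):
    """Group (time, src, sport, dst, dport, proto) packets into bidirectional flows."""
    active = {}   # direction-independent key -> index of the currently open flow
    flows = []    # each flow: {"forward": (src, sport, dst, dport), "last": t, "packets": [...]}
    for pkt in sorted(packets):
        t, src, sport, dst, dport, proto = pkt
        key = (proto,) + tuple(sorted([(src, sport), (dst, dport)]))
        idx = active.get(key)
        if idx is None or t - flows[idx]["last"] > FLOW_TIMEOUT:
            # The first packet seen determines the forward direction.
            flows.append({"forward": (src, sport, dst, dport), "last": t, "packets": []})
            idx = active[key] = len(flows) - 1
        flows[idx]["last"] = t
        flows[idx]["packets"].append(pkt)
    return flows


packets = [
    (0.0, "10.0.0.1", 50000, "10.0.0.2", 80, "tcp"),
    (0.1, "10.0.0.2", 80, "10.0.0.1", 50000, "tcp"),    # reply joins the same flow
    (700.2, "10.0.0.1", 50000, "10.0.0.2", 80, "tcp"),  # after the timeout: new flow
]
flows = assign_flows(packets)
print(len(flows), [len(f["packets"]) for f in flows])
```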


5.4 Ground Truth – Labeling of the Data Sets

For evaluating the performance of each approach employed, we need to know how many NAT devices actually exist in our data sets so that we can measure the detection rate for

each approach. We also need to know this information to be able to prepare training data sets for

the data mining approach. In this research, we do not know every NAT device (from the Internet)

that accesses the web server where we captured traffic. We only know for sure the ones (NAT

devices) that are in our own faculty and access the web server. So we decided to label the data

we captured using two different methods in order to see their effect on the performance: (i)

Assuming the only NAT devices are the ones we know; and (ii) Assuming the number of NATs

is more than the ones in (i) so predicting the number using the heuristics presented in the passive

fingerprinting approach. These are detailed below.

5.4.1 Labeling Scheme-1 – NAT Devices of the Faculty Only

As discussed earlier, we use datasets, which were captured on our faculty’s web server

over a week in November 2012. In this case, NetMate as an open source tool is used to convert

the packet-based data set into a flow-based data set.

As our first ground truth, the IP Address of the faculty’s NAT device is 129.173.13.94.

Therefore, the flows and packets with this IP address were all labeled as NAT. The remainder

was labeled as OTHER (meaning non-NAT). Hereafter, we will refer to those flows as NAT

flows and OTHER flows. As a result of this labeling process, there are 12168 NAT flows among

all the flows (out of a total 533647 flows) in the data set. It means that about 2.3% of our data set

is NAT traffic based on this labeling scheme. Please note that for the data mining approach

where we evaluated different learning techniques (C4.5, Naïve Bayes and SVM) to automatically


generate fingerprints, we removed the IP addresses and the port numbers (flows do not have

payloads) from the flows.

5.4.2 Labeling Scheme-2 – Potential NATs and Encrypted / Unencrypted Traffic

In this case, even though we do not have any ground truth information regarding NAT

devices other than the ones in our faculty, by using the fingerprints introduced in the first

approach discussed earlier, we can potentially label more traffic as NAT if it matches any one of those fingerprints.

Among our data sets we have not only TCP dump traffic collected on the web server but

its corresponding web server access log files, too. To this end, we have two log files: (i) HTTP web access logs; (ii) HTTPS web access logs. The first one represents the non-encrypted and the

second one represents the encrypted traffic.

However, to be able to use this information, first we need to match the traffic logs (packet

or flow) to the records in the access log files. Then we can label the flows without payload

information accurately in terms of encrypted NAT or non-encrypted NAT traffic. To achieve this,
we checked the “arrival time” feature. Since we are dealing with flows, we did not have an
arrival time feature for each flow, but we do have it in the packet-based access log files.
Therefore, we had to add a time attribute to each flow in NetMate. After that modification, the
time attributes of the flows were compared to the time attributes of the packets in the HTTP and
HTTPS files.

As a result, we have 517 Encrypted-NAT flows among the 12168 NAT flows. The
remaining 11651 NAT flows are labeled Unencrypted-NAT. Therefore, of the 533647 flows in
total, 517 are Encrypted-NAT flows, 11651 are Unencrypted-NAT flows, and 521479 are
non-NAT flows, which are labeled as OTHER.
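The label counts quoted above are mutually consistent, which a short arithmetic check makes explicit. The variable names are illustrative; the numbers are those reported in the text.

```python
total_flows = 533647
nat_flows = 12168
encrypted_nat = 517

unencrypted_nat = nat_flows - encrypted_nat   # NAT flows not matched to HTTPS logs
other_flows = total_flows - nat_flows         # everything labeled OTHER
nat_share_percent = 100 * nat_flows / total_flows

print(unencrypted_nat)                 # 11651
print(other_flows)                     # 521479
print(round(nat_share_percent, 2))     # about 2.3% of the data set
```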


Table 5: Flow Based Features Employed


6. Empirical Evaluation

In traffic classification, two metrics are used in order to quantify the performance of the

classifier: Detection Rate (DR) and False Positive Rate (FP). We adopted these metrics to

evaluate the performance of the different approaches. Hereafter, DR reflects the proportion of NAT flows correctly classified. It is calculated using DR = TP / (TP + FN), whereas the FP rate (FPR) reflects the proportion of non-NAT flows, referred to as OTHER flows, incorrectly classified as NAT, using FPR = FP / (FP + TN). Naturally, a high DR and a low FPR are the desirable outcomes. In addition to these rates, False Negative (FN) implies that NAT traffic is classified as OTHER. We

also measured the time it takes to train/test a data mining algorithm as well as its complexity.
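The two metrics can be computed directly from the confusion counts. The sketch below treats NAT as the positive class; the function names and the toy label lists are hypothetical and are not results from this study.

```python
def confusion_counts(actual, predicted, positive="NAT"):
    """Return (TP, FN, FP, TN), treating `positive` as the class of interest."""
    tp = fn = fp = tn = 0
    for a, p in zip(actual, predicted):
        if a == positive:
            if p == positive:
                tp += 1
            else:
                fn += 1
        elif p == positive:
            fp += 1
        else:
            tn += 1
    return tp, fn, fp, tn


def detection_rate(tp, fn):
    """DR = TP / (TP + FN)."""
    return tp / (tp + fn)


def false_positive_rate(fp, tn):
    """FPR = FP / (FP + TN)."""
    return fp / (fp + tn)


actual = ["NAT", "NAT", "NAT", "OTHER", "OTHER", "OTHER", "OTHER"]
predicted = ["NAT", "NAT", "OTHER", "NAT", "OTHER", "OTHER", "OTHER"]
tp, fn, fp, tn = confusion_counts(actual, predicted)
print(detection_rate(tp, fn), false_positive_rate(fp, tn))
```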

For the complexity analysis, we take the complexity of the C4.5 algorithm to be the number of rules in the tree that the algorithm generates at the end of the training phase. The theoretical time complexity for learning a Naive Bayes classifier is O(Np), where N is the number of training examples and p is the number of features. For linear SVMs, at the training phase, one must estimate the vector w and bias b by solving a quadratic problem, and at the test phase, prediction is linear in the number of features and constant in the size of the training data. For kernel SVMs, at the training phase, one must select the support vectors, and at the test phase, complexity is linear in the number of support vectors (which can be lower bounded by training set size * training set error rate) and linear in the number of features (since most kernels only compute a dot product; this will vary for graph kernels, string kernels, etc.). In our work, we considered the SVM's complexity as the number of kernel evaluations. The kernel cache size affects training time [22]. As for the time analysis, during the training phase the time value shows the time taken to run the algorithm on the training set; during the testing phase, it shows the time taken to test the learned model on the given test data set.
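The complexity and timing measures described above can be illustrated with a short Python sketch. The helper names are hypothetical, and the timing wrapper is only one plausible way to take such a measurement; it is not the tooling used to produce the reported times. The example uses 7200 training examples and 41 features, matching the O(7200*41) entry reported for Naive Bayes in Table 7.

```python
import time


def naive_bayes_training_cost(n_examples: int, n_features: int) -> int:
    """Order-of-magnitude cost N * p: every feature of every example is visited once."""
    return n_examples * n_features


def support_vector_lower_bound(n_examples: int, training_error_rate: float) -> float:
    """Lower bound on the number of support vectors: set size * error rate."""
    return n_examples * training_error_rate


def timed(fn, *args):
    """Return (result, elapsed seconds) for one call, as for a train or test run."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


cost, seconds = timed(naive_bayes_training_cost, 7200, 41)
print(cost)  # 295200
```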


6.1 Evaluation Results Based On The First Labeling Scheme

When labeling scheme-1 is used, there are 12168 NAT flows (just the ones we know for

sure) among the 533647 (total number of) flows in our data sets. This is approximately 2% of our

data set. On this data set, we evaluated both the passive fingerprinting approach and the data

mining approach (automatic fingerprinting). For the passive fingerprinting approach, our results

are shown in Table 6.

Table 6: Results for the flow-based feature set using the passive fingerprinting approach – Labeling Scheme 1

Heuristic                    Class   DR      FPR
TTL                          NAT     0.939   0.19
                             OTHER   0.395   0.608
TTL + OS                     NAT     0.939   0.060
                             OTHER   0.597   0.4023
TTL + OS + Browser Version   NAT     0.947   0.052
                             OTHER   0.378   0.621

We also ran traceroute and IP lookups for the IP addresses that were labeled as NAT by this approach, so that we could see where those IP addresses originally belong.

For the data mining approach, we generated a training data set by randomly sampling flows (with uniform probability) from the two classes, NAT and OTHER. Our first training data set is balanced: the number of NAT flows (instances) is the same as the number of OTHER flows (instances). Testing is then performed on the flows that were not used in the training phase. In this case, 7200 flows were chosen randomly for the training data set, 3600 from each class, and the rest of the data set was used for testing. Results of these training and testing experiments are shown in Tables 7 and 8, respectively.
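The balanced sampling step described above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the procedure used to build our data sets: the function name, the (features, label) representation of a flow, and the toy data are all hypothetical.

```python
import random


def balanced_split(flows, per_class, seed=0):
    """Draw `per_class` flows uniformly at random from each class for training.

    `flows` is a list of (features, label) pairs. Every flow not drawn
    for training is returned as test data.
    """
    rng = random.Random(seed)
    by_label = {}
    for index, (_, label) in enumerate(flows):
        by_label.setdefault(label, []).append(index)

    train_idx = set()
    for label, indices in by_label.items():
        # Sampling without replacement; raises ValueError if a class is too small.
        train_idx.update(rng.sample(indices, per_class))

    train = [flows[i] for i in sorted(train_idx)]
    test = [flow for i, flow in enumerate(flows) if i not in train_idx]
    return train, test


# Toy data: 20 NAT flows and 200 OTHER flows with placeholder features.
flows = [((i,), "NAT") for i in range(20)] + [((i,), "OTHER") for i in range(200)]
train, test = balanced_split(flows, per_class=10)
print(len(train), len(test))  # 20 200
```

With per_class=3600 on the two-class data, the same call would yield a 7200-flow training set and leave the remaining flows for testing.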

Table 7: Training Results for the flow-based feature set using the data mining approach – Labeling Scheme 1

Classifier    Class   DR      FPR     Complexity   Time (sec)
C4.5          NAT     0.990   0.015   122          0.01
              OTHER   0.985   0.010
SVM           NAT     0.912   0.079   5074542      0.01
              OTHER   0.921   0.088
NAIVE BAYES   NAT     0.833   0.136   O(7200*41)   0.06
              OTHER   0.864   0.167

Table 8: Test Results on the unseen dataset for the flow-based feature set using the data mining approach – Labeling Scheme 1

Classifier    Class   DR                FPR               Complexity              Time (sec)
C4.5          NAT     0.910             0.001             864                     4.63
              OTHER   0.999             0.090
SVM           NAT     0.000 (crashed)   0.000 (crashed)   -1869722033 (crashed)   998.4 (crashed)
              OTHER   1.000 (crashed)   1.000 (crashed)
NAIVE BAYES   NAT     0.826             0.146             O(526447*41)            8.05
              OTHER   0.854             0.174

Table 7 shows that the C4.5 based classifier gives the best performance among all the machine learning algorithms evaluated on this data set. In this case, the C4.5 classifier correctly identifies 99% of the flows that originate from “behind a NAT device” with a false alarm rate of only around 2%. When this trained C4.5 model (trained on just 7200 flows) is tested on the remaining data set (approximately half a million flows), the detection rate drops to 91% but with almost no false alarms (Table 8). Given that even the passive fingerprinting approach, which employs payload information, reaches a detection rate of at most 94% with XX false alarms, the data mining approach, which uses no payload information via the C4.5 classifier, is very promising for identifying whether or not traffic originates from behind a NAT device on our data sets.

Moreover, decision tree algorithms such as C4.5 are very successful at finding the most informative features (attributes) among those given to them during the training phase. The first node in the resulting decision tree shows which feature has the most predictive power. In addition, WEKA's Explorer utility offers purpose-built options, found under the Select Attributes tab, for finding the most useful attributes in a data set. In our work, we use the statistical flow features shown in Table 5 for all the data mining algorithms. Among these, C4.5 selected the following as the five most predictive features: min_fpktl, min_fiat, mean_fpktl, max_fpktl and duration. From these features, it seems that the main predictions can be made based on the mean, minimum and maximum forward packet length as well as the duration of the flow.
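To make the selected features concrete, the following Python sketch computes them for a single flow. It rests on assumptions: we read fpktl as forward packet length and fiat as forward inter-arrival time, the function name and input format are hypothetical, and the duration is taken over forward packets only for simplicity. The example flow is made up.

```python
from statistics import mean


def forward_flow_features(packets):
    """Compute a few per-flow statistics from forward-direction packets.

    `packets` is a non-empty list of (timestamp_seconds, length_bytes)
    tuples sorted by timestamp. Feature names follow the report; their
    exact definitions here are assumptions.
    """
    times = [t for t, _ in packets]
    lengths = [n for _, n in packets]
    # Inter-arrival times between consecutive forward packets.
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return {
        "min_fpktl": min(lengths),
        "mean_fpktl": mean(lengths),
        "max_fpktl": max(lengths),
        "min_fiat": min(gaps) if gaps else 0.0,
        "duration": times[-1] - times[0],
    }


# Hypothetical flow of four forward packets.
features = forward_flow_features([(0.0, 60), (0.5, 1500), (0.75, 40), (2.0, 400)])
print(features)
```

None of these values requires IP addresses, port numbers or payload content, which is why such features remain available when the traffic is encrypted.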

6.2 Evaluation Results Based On The Second Labeling Scheme

When labeling scheme-2 is used, there are 75297 NAT flows (the ones we know for sure plus the potential ones) among the 533647 (total number of) flows in our data sets. This is approximately 14% of our data set. We can label them as encrypted and non-encrypted NAT flows. After labeling, there are 74677 non-encrypted NAT flows and 620 encrypted NAT flows among the 75297 NAT flows in total. From this data set, we formed a new training set for the data mining approaches. In this case, our training data set consists of 400 encrypted NAT flows, 400 non-encrypted NAT flows and 400 OTHER flows, all randomly chosen. Again, we evaluated both the passive fingerprinting approach and the data mining approach on the unseen flows. For the passive fingerprinting approach, our results are shown in Table 9.

Table 9: Results for the flow-based feature set using the passive fingerprinting approach – Labeling Scheme 2

Heuristic                    Class             DR      FPR
TTL                          Encrypted-NAT     1.0     0.9509
                             Unencrypted-NAT   0.616   0.383
                             OTHER             0.11    0.883
TTL + OS                     Encrypted-NAT     1.0     0.9536
                             Unencrypted-NAT   0.68    0.311
                             OTHER             0.115   0.884
TTL + OS + Browser Version   Encrypted-NAT     1.0     0.9505
                             Unencrypted-NAT   0.57    0.424
                             OTHER             0.104   0.895

These results show that the passive fingerprinting approach cannot perform well when the traffic is encrypted: its false alarm rate is very high, at about 95%. On the other hand, it has a detection rate of around 57% to 68% for non-encrypted NAT flows, depending on the fingerprints used. As for the data mining approach under this labeling scheme, the results of training and testing are given in Tables 10 and 11, respectively.
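With three classes, DR and FPR are reported per class. One common way to obtain such per-class values, assumed here for illustration, is a one-vs-rest reading of the confusion matrix. In the Python sketch below, the function name and all counts are hypothetical and do not come from our experiments.

```python
def per_class_rates(confusion, classes):
    """One-vs-rest (DR, FPR) for each class.

    `confusion[actual][predicted]` holds flow counts. Every class is
    assumed to have at least one flow, so no denominator is zero.
    """
    rates = {}
    for target in classes:
        tp = confusion[target][target]
        fn = sum(confusion[target][p] for p in classes if p != target)
        fp = sum(confusion[a][target] for a in classes if a != target)
        tn = sum(
            confusion[a][p]
            for a in classes if a != target
            for p in classes if p != target
        )
        rates[target] = (tp / (tp + fn), fp / (fp + tn))
    return rates


classes = ["Encrypted-NAT", "Unencrypted-NAT", "OTHER"]
# Made-up counts, for illustration only.
confusion = {
    "Encrypted-NAT": {"Encrypted-NAT": 8, "Unencrypted-NAT": 1, "OTHER": 1},
    "Unencrypted-NAT": {"Encrypted-NAT": 2, "Unencrypted-NAT": 6, "OTHER": 2},
    "OTHER": {"Encrypted-NAT": 4, "Unencrypted-NAT": 4, "OTHER": 12},
}
rates = per_class_rates(confusion, classes)
print(rates["Encrypted-NAT"])  # (0.8, 0.2)
```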


Table 10: Training Results for the flow-based feature set using the data mining approach – Labeling Scheme 2

Classifier    Class             DR      FPR     Complexity   Time (sec)
C4.5          Encrypted-NAT     0.988   0.079   93           0.03
              Unencrypted-NAT   0.803   0.043
              OTHER             0.898   0.035
SVM           Encrypted-NAT     0.985   0.244   83789        0.01
              Unencrypted-NAT   0.528   0.160
              OTHER             0.498   0.091
NAIVE BAYES   Encrypted-NAT     0.878   0.171   O(41*1200)   0.01
              Unencrypted-NAT   0.693   0.205
              OTHER             0.698   0.151

Table 11: Test Results on the unseen dataset for the flow-based feature set using the data mining approach – Labeling Scheme 2

Classifier    Class             DR      FPR     Complexity   Time (sec)
C4.5          Encrypted-NAT     0.677   0.015   326          4.62
              Unencrypted-NAT   0.793   0.197
              OTHER             0.791   0.170
SVM           Encrypted-NAT     0.000   0.000   238687       4.73
              Unencrypted-NAT   0.777   0.417
              OTHER             0.583   0.223
NAIVE BAYES   Encrypted-NAT     0.841   0.109   O(41*4620)   7.04
              Unencrypted-NAT   0.686   0.325
              OTHER             0.580   0.115

According to the results, the C4.5 classifier seems to have the best performance in detecting both encrypted and non-encrypted NAT flows during the training experiments (Table 10). However, even though its performance during testing is better than that of the passive fingerprinting approach, the Naïve Bayes based classifier achieves a much better detection rate on encrypted NAT flows (84% vs. 68%), albeit with a false alarm rate of 11% (Table 11). In our opinion, this requires further experimentation and analysis. One reason might be the nature of the training data (very different from the test data); another might be that a different set of features is more indicative of the behavior of encrypted NAT flows.

When we analyzed the solution of the C4.5 classifier after training for this set of experiments, we found that it uses min_fpktl, bpsh_cnt, mean_biat, mean_fiat, total_fhlen and min_biat as the most predictive features. Of these, only min_fpktl also appears among the top features listed under the first labeling scheme; the other five of the six are different from the ones we have seen before. Hence, a potentially new set of features might be the way to improve the detection rate under this labeling scheme.
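To illustrate how a trained tree's solution can be read as a set of rules over such features, the following Python sketch encodes a toy decision tree and walks it for one flow. Everything in it is hypothetical: the tree shape, the thresholds and the example flow are made up for illustration and are not taken from our trained C4.5 models. Counting root-to-leaf paths is one plausible reading of "number of rules in the tree".

```python
# A toy decision tree as nested tuples: (feature, threshold, left, right)
# for internal nodes and a plain string label for leaves. All thresholds
# are made up for illustration and are NOT taken from the trained models.
TOY_TREE = (
    "min_fpktl", 52,
    ("mean_fiat", 1.0, "OTHER", "NAT"),
    ("bpsh_cnt", 3, "NAT", "OTHER"),
)


def classify(tree, flow):
    """Walk the tree: go left when the feature value is <= threshold."""
    while not isinstance(tree, str):
        feature, threshold, left, right = tree
        tree = left if flow[feature] <= threshold else right
    return tree


def count_rules(tree):
    """Number of root-to-leaf paths, i.e. rules the tree encodes."""
    if isinstance(tree, str):
        return 1
    _, _, left, right = tree
    return count_rules(left) + count_rules(right)


flow = {"min_fpktl": 40, "mean_fiat": 2.5, "bpsh_cnt": 0}
print(classify(TOY_TREE, flow), count_rules(TOY_TREE))  # NAT 4
```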

8. Conclusion and Future Work

In this work, we have evaluated two different approaches, namely (i) passive fingerprinting and (ii) data mining (automatic fingerprinting), to identify and analyze NAT behavior. For the passive fingerprinting approach, we have used three different heuristics based on information extracted from packet headers, specifically TTL and arrival time attributes, as well as from the payload, specifically the Web user agent string. Note that the payload information requires the presence of web traffic for this approach to be applied. The data mining approach, on the other hand, consists of the evaluation of three machine learning algorithms, namely C4.5, SVM and Naive Bayes. Since our aim is to identify traffic that originates from behind a NAT device, we have employed and evaluated all techniques on the same data sets to measure their performance. To this end, we have used two different labeling schemes: the first distinguishes between flows labeled as NAT traffic and OTHER, and the second further distinguishes between NAT flows that are encrypted and those that are not.

All in all, the data mining approach using the C4.5 decision tree classifier seems to be the most reliable performer. Using this approach, we can achieve high detection rates with low false alarm rates even on encrypted traffic that originates from a network behind a NAT device. Moreover, we can achieve this without using IP addresses, port numbers or payload information. This is not the case for the passive fingerprinting approach, where the complexity is much lower but a certain type of payload information is required to apply it. Furthermore, the solution trees of the models trained on our data sets can be used as automatically generated “fingerprints” to identify NAT devices.

As next steps, we want to experiment with different sets of features for the data mining model to see if we can decrease the false alarm rate on encrypted NAT traffic. We also wish to conduct tests on different data sets for generalization purposes. Last but not least, once the best model is chosen after this next set of experiments, we want to see if we can access a given machine behind a NAT device that has been identified by this method. Another interesting direction would be to compare the traffic behind a NAT device to the traffic behind a proxy device. Even though the two kinds of device have similar purposes, they do not work in exactly the same way, so it would be worth comparing the features and fingerprints found under these different conditions.


References:

[1] Ishikawa, Y.; Yamai, N.; Okayama, K.; Nakamura, M., "An Identification Method of PCs behind NAT Router with Proxy Authentication on HTTP Communication," Applications and the Internet (SAINT), 2011 IEEE/IPSJ 11th International Symposium on, pp. 445-450, 18-21 July 2011.

[2] G. Maier, F. Schneider, and A. Feldmann. NAT Usage in Residential Broadband Networks.

Proceedings of the 12th International Conference on Passive and Active Network Measurement (PAM

2011), Atlanta, Georgia, March 2011.

[3] Murakami, R.; Yamai, N.; Okayama, K., "A MAC-address Relaying NAT Router for PC