Memory Organization

Date posted: 21-Dec-2015


Data Organization

• Big endian – the most significant byte is stored in the first memory location; each additional n bytes are stored in the next n locations

• Little endian – the least significant byte is stored in the first memory location; each additional n bytes are stored in the next n locations

• Alignment
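As a minimal sketch of the two byte orders above, the following Python snippet lays out one 32-bit value both ways. The value 0x12345678 is an arbitrary illustration, not taken from the slides.

```python
# Hypothetical 32-bit value chosen only for illustration.
value = 0x12345678

# int.to_bytes returns the bytes in storage order (index 0 = first location).
big = value.to_bytes(4, byteorder="big")
little = value.to_bytes(4, byteorder="little")

print([hex(b) for b in big])     # most significant byte (0x12) comes first
print([hex(b) for b in little])  # least significant byte (0x78) comes first
```

The same four bytes appear in both layouts; only their order in memory differs.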

Memory Configuration

• Single chip – address bus, data bus, and control bus are connected to the memory chip

• Multiple chips
  – Address bus and control bus are connected to the chips
  – Different bits of the data bus are connected to the data pins

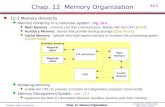

Computer Architectures

• von Neumann – instructions and data are stored in the same memory module

• Harvard – separate memory modules for instructions and for data

• Modern PCs – von Neumann overall, with a Harvard organization used in cache memory

Memory Hierarchy Terminology

• Hit – requested data resides in a given level of memory

• Miss – requested data not found in the given level of memory

• Hit rate – percentage of memory accesses found in a given level of memory

• Miss rate – percentage of memory accesses not found in a given level of memory (1 - hit rate)

• Hit time – time required to access the requested data in a given level of memory

• Miss penalty – time required to process a miss
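The slides define these terms but do not show how they combine. A commonly used relation is: average access time = hit time + miss rate × miss penalty. The sketch below applies it; the function name and all numbers are hypothetical.

```python
def average_access_time(hit_time, miss_rate, miss_penalty):
    """Every access pays hit_time; a miss additionally pays miss_penalty."""
    return hit_time + miss_rate * miss_penalty

# Assumed figures for one cache level, in nanoseconds.
hit_rate = 0.95
miss_rate = 1 - hit_rate  # miss rate = 1 - hit rate
amat = average_access_time(hit_time=2, miss_rate=miss_rate, miss_penalty=100)
print(f"{amat:.1f} ns")
```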

Memory Hierarchy

(Figure: hierarchy levels, ordered from less costly to more costly: Magnetic Tape, Optical Disk, Fixed Disk, Main Memory, L2 Cache, L1 Cache, Registers)

Locality of Reference

• Temporal locality – recently accessed items tend to be accessed again in the near future

• Spatial locality – accesses tend to be clustered in the address space (arrays or loops)

• Sequential locality – instructions tend to be accessed sequentially

Cache

• Small

• High speed

• Temporarily stores data from frequently used memory locations

• Connected to main memory

Cache Mapping Schemes

• The mapping scheme determines where the data is placed when it is originally copied into cache and provides a method for the CPU to find previously copied data when searching cache
  – Direct mapped cache
  – Fully associative cache
  – Set associative cache

Direct Mapped Cache

• Modular approach

• Block X of main memory is mapped to block Y of cache, where Y = X mod N and N is the total number of blocks in cache.

• In direct mapping the binary main memory address is partitioned into the fields shown:

Tag Block Word

Example

Small system with 16 words of main memory divided into 8 blocks (each block has 2 words). Assume cache is 4 blocks in size (total of 8 words).

Main memory address has 4 bits ($2^4 = 16$ words)

4-bit address is divided into three fields

word field: 1 bit, block field: 2 bits, tag field: 1 bit

Mapping:

| Main memory block | Addresses | Maps to cache block |
|---|---|---|
| Block 0 | 0, 1 | Block 0 |
| Block 1 | 2, 3 | Block 1 |
| Block 2 | 4, 5 | Block 2 |
| Block 3 | 6, 7 | Block 3 |
| Block 4 | 8, 9 | Block 0 |
| Block 5 | 10, 11 | Block 1 |
| Block 6 | 12, 13 | Block 2 |
| Block 7 | 14, 15 | Block 3 |

Main memory address 9 = $1001_2$

Split into fields:

• tag = 1 (1 bit)

• block = 00 (2 bits)

• word = 1 (1 bit)
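The field split and the block mapping in this example can be reproduced with a few bit operations. This is a sketch under the example's parameters (1 word bit, 2 block bits, 1 tag bit); the function and constant names are hypothetical.

```python
WORD_BITS = 1                       # 2 words per block
BLOCK_BITS = 2                      # 4 cache blocks
NUM_CACHE_BLOCKS = 1 << BLOCK_BITS

def split_address(addr):
    """Split a 4-bit main memory address into (tag, block, word)."""
    word = addr & ((1 << WORD_BITS) - 1)
    block = (addr >> WORD_BITS) & (NUM_CACHE_BLOCKS - 1)
    tag = addr >> (WORD_BITS + BLOCK_BITS)
    return tag, block, word

print(split_address(9))  # (1, 0, 1): tag = 1, block = 00, word = 1

# Main memory block X maps to cache block X mod N.
for mem_block in range(8):
    print(f"Block {mem_block} -> cache block {mem_block % NUM_CACHE_BLOCKS}")
```

The tag is what distinguishes main memory blocks 0 and 4, which both land in cache block 0.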

Fully Associative Cache

• Built from associative memory so it can be searched in parallel.
  – A single search must compare the requested tag to all tags in cache to determine if the block is present.

• Special hardware required to allow associative searching (expensive).
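A software sketch of the tag search is shown below. Hardware compares all tags at once; this loop checks them one at a time and only models the logic. It assumes 2 words per block and that the tag is the full main memory block number (there is no block field); the cache contents are hypothetical.

```python
WORD_BITS = 1  # assumed: 2 words per block

def lookup(cache_tags, addr):
    """Return the index of the cache block holding addr, or None on a miss."""
    tag = addr >> WORD_BITS  # no block field, so the tag is the block number
    for index, stored_tag in enumerate(cache_tags):
        if stored_tag == tag:
            return index
    return None

cache_tags = [4, 0, 7, None]  # hypothetical contents; None marks an empty block
print(lookup(cache_tags, 9))  # address 9 is in memory block 4 -> index 0
print(lookup(cache_tags, 6))  # memory block 3 is not cached -> None
```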

Set Associative Cache

• N-way set associative cache mapping
  – Combination of direct mapped and fully associative

• The address maps the block to a set of cache blocks

• Address is partitioned into three fields: tag, set, and word.
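As an illustration of the tag, set, and word fields, the sketch below assumes a 2-way set associative cache with 4 blocks (so 2 sets) and 2 words per block, on a 4-bit address. These parameters and the function name are assumptions made for the example, not values from the slides.

```python
WORD_BITS = 1  # assumed: 2 words per block
SET_BITS = 1   # assumed: 4 cache blocks, 2 blocks per set -> 2 sets

def split_set_address(addr):
    """Split a 4-bit address into (tag, set, word)."""
    word = addr & ((1 << WORD_BITS) - 1)
    set_index = (addr >> WORD_BITS) & ((1 << SET_BITS) - 1)
    tag = addr >> (WORD_BITS + SET_BITS)
    return tag, set_index, word

print(split_set_address(9))  # (2, 0, 1): tag = 10, set = 0, word = 1
```

The set field selects a set directly, as in direct mapping; the tag is then compared against every block in that set, as in a fully associative search.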

Virtual Memory

• Virtual Address

• Physical Address

• Mapping

• Page Frames

• Pages

• Paging

• Fragmentation

• Page Fault

Paging

• Allocate physical memory to processes in fixed size chunks

• Page table
  – in main memory (typically)
  – N rows (N = number of virtual pages in the process)
  – valid bit
    • 0: page is not in main memory
    • 1: page is in main memory

How Paging Works

• Extract the page number

• Extract the offset

• Translate page number into physical page frame number using page table

How Paging Works cont’d

• Look up the page number in the page table

• Check the valid bit
  – valid bit = 0
    • system generates a page fault and the OS must intervene
    • locate the page on disk
    • find a free page frame
    • copy the page into the free page frame
    • update the page table
    • resume execution of the process

How Paging Works cont’d

  – valid bit = 1
    • page is in memory
    • replace the virtual page number with the actual frame number
    • access data at the offset in the physical page frame
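The translation steps can be sketched as a single function. This is a simplified model, not an OS implementation: the disk read is omitted, a free frame is assumed to be available, and the table layout and names are hypothetical.

```python
def access(vaddr, page_table, free_frames, offset_bits):
    """Translate a virtual address, servicing a page fault if needed."""
    page = vaddr >> offset_bits                 # extract the page number
    offset = vaddr & ((1 << offset_bits) - 1)   # extract the offset
    entry = page_table[page]
    if not entry["valid"]:
        # Page fault: the OS would locate the page on disk and copy it in
        # (not modelled). Assumes a free frame exists; no replacement policy.
        entry["frame"] = free_frames.pop()
        entry["valid"] = True                   # update the page table
    # Replace the page number with the frame number and keep the offset.
    return (entry["frame"] << offset_bits) | offset

# Hypothetical two-page process with 32-word pages (5 offset bits).
page_table = [{"valid": True, "frame": 2}, {"valid": False, "frame": None}]
free_frames = [3]
print(access(13, page_table, free_frames, 5))  # page 0 is resident
print(access(40, page_table, free_frames, 5))  # page 1 faults, then loads
```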

Example

• process has a virtual address space of $2^8$ words

• physical memory of 4 page frames, each 32 words in length

• Virtual address is 8 bits

• Physical address is 7 bits

Example cont’d

• 2 fields of virtual address
  – page – 3 bits
  – offset – 5 bits

• system generates virtual address 13 (00001101 in binary)
  – page 000
  – offset 01101

• Physical address = 1001101 (2-bit frame number 10 followed by the 5-bit offset 01101, so page 0 resides in frame 2)
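The arithmetic of this example can be checked with a short sketch. The page table is not reproduced in the transcript; the mapping of page 0 to frame 2 is inferred from the stated result 1001101 and should be read as an assumption.

```python
OFFSET_BITS = 5      # 32-word pages
page_table = {0: 2}  # assumed entry: page 0 resides in frame 2

vaddr = 13
page = vaddr >> OFFSET_BITS                  # upper 3 bits -> 0
offset = vaddr & ((1 << OFFSET_BITS) - 1)    # lower 5 bits -> 13
frame = page_table[page]
paddr = (frame << OFFSET_BITS) | offset

print(format(vaddr, "08b"))  # 00001101
print(format(paddr, "07b"))  # 1001101
```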

Access Time

• Time penalty associated with virtual memory
  – two physical memory accesses for each memory access the processor generates: one to read the page table entry and one to access the data

Disadvantages

• extra resource consumption – memory overhead for storing page tables

• special hardware and OS support required

Advantages

• Programs are no longer restricted by the amount of physical memory available

• Easier to write programs
  – don’t need to worry about physical address space limitations

• Allows multitasking

Segmentation

• virtual address space divided into logical variable length units (segments)

• To copy a segment into memory OS looks for a chunk of free memory large enough

• Segment
  – base address – where it is located in memory
  – bounds limit – indicates its size

• Segment table – base/bounds pairs
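A minimal sketch of translation through base/bounds pairs follows. It assumes a virtual address is given as a segment number plus an offset and that an offset beyond the bounds limit is rejected; the table contents and names are hypothetical.

```python
def translate(segment, offset, segment_table):
    """Map (segment, offset) to a physical address using base/bounds pairs."""
    base, bounds = segment_table[segment]
    if offset >= bounds:
        # The offset falls outside the segment's size.
        raise IndexError("offset outside segment bounds")
    return base + offset

# Hypothetical table: (base address, bounds limit) for each segment.
segment_table = [(1000, 200), (4000, 50)]
print(translate(0, 150, segment_table))  # 1150
```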

• External fragmentation
  – as segments are copied into and out of memory, free chunks of memory are broken up
  – eventually there are many small chunks, none big enough for any segment

• Garbage collection combats external fragmentation
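The situation can be illustrated with a toy free list. The chunk sizes are hypothetical, and the final step assumes that garbage collection here means coalescing the free chunks into one contiguous chunk; the slides do not spell out the mechanism.

```python
free_chunks = [30, 20, 25]  # hypothetical sizes of scattered free chunks
segment_size = 60           # hypothetical segment waiting to be loaded

# Enough memory is free in total, but no single chunk is large enough.
total_free = sum(free_chunks)
fits_before = any(size >= segment_size for size in free_chunks)
print(total_free, fits_before)  # 75 False

# Assumed effect of garbage collection: free space merged into one chunk.
compacted = [total_free]
fits_after = any(size >= segment_size for size in compacted)
print(fits_after)  # True
```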

Paging and Segmentation

• Systems can use a combination

• Virtual address space is divided into segments of variable length

• Segments are divided into fixed-size pages

• Main memory is divided into the same size frames

• Each segment has a page table

• Physical address divided into 3 fields: segment, page number, offset
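The three-field split can be sketched as below, assuming the fields are taken from the address the program issues and that each segment's page table maps page numbers to frame numbers. The field widths (2, 2, and 4 bits) and the table contents are hypothetical.

```python
SEG_BITS, PAGE_BITS, OFFSET_BITS = 2, 2, 4  # assumed widths, 8-bit address

def split_address(addr):
    """Split an address into (segment, page, offset)."""
    offset = addr & ((1 << OFFSET_BITS) - 1)
    page = (addr >> OFFSET_BITS) & ((1 << PAGE_BITS) - 1)
    segment = addr >> (OFFSET_BITS + PAGE_BITS)
    return segment, page, offset

# One page table per segment: page number -> frame number (hypothetical).
page_tables = {0: {0: 5, 1: 1}, 1: {0: 3}}

def translate(addr):
    segment, page, offset = split_address(addr)
    frame = page_tables[segment][page]  # segment field selects the page table
    return (frame << OFFSET_BITS) | offset

print(split_address(0b01_00_1010))  # (1, 0, 10)
print(translate(0b01_00_1010))      # frame 3 -> 3 * 16 + 10 = 58
```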