Matrices KL

description

Transcript of Matrices KL

-

Matrices kLFrom Wikipedia, the free encyclopedia

-

Contents

1 Kernel (linear algebra) 11.1 Properties of the Kernel . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11.2 Application to modules . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21.3 The kernel in functional analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21.4 Representation as matrix multiplication . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.4.1 Subspace properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31.4.2 The Row Space of a Matrix . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31.4.3 Left null space . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 31.4.4 Nonhomogeneous systems of linear equations . . . . . . . . . . . . . . . . . . . . . . . . 3

1.5 Illustration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41.6 Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51.7 Computation by Gaussian elimination . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61.8 Numerical computation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

1.8.1 Exact coefficients . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71.8.2 Floating point computation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

1.9 See also . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71.10 Notes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81.11 References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81.12 External links . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

2 Krawtchouk matrices 92.1 See also . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92.2 References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92.3 External links . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

3 Laplacian matrix 103.1 Definition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103.2 Example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113.3 Properties . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113.4 Incidence matrix . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 113.5 Deformed Laplacian . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123.6 Symmetric normalized Laplacian . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123.7 Random walk normalized Laplacian . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

i

-

ii CONTENTS

3.7.1 Graphs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 133.8 Interpretation as the discrete Laplace operator . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

3.8.1 Equilibrium Behavior . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 153.8.2 Example of the Operator on a Grid . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

3.9 Approximation to the negative continuous Laplacian . . . . . . . . . . . . . . . . . . . . . . . . . 173.10 In Directed Multigraphs . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173.11 See also . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173.12 References . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 173.13 Text and image sources, contributors, and licenses . . . . . . . . . . . . . . . . . . . . . . . . . . 18

3.13.1 Text . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183.13.2 Images . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 183.13.3 Content license . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 18

-

Chapter 1

Kernel (linear algebra)

In linear algebra and functional analysis, the kernel (also null space or nullspace) of a linear map L :VW betweentwo vector spaces V andW, is the set of all elements v of V for which L(v) = 0, where 0 denotes the zero vector inW. That is, in set-builder notation,

ker(L) = {v V |L(v) = 0} .

1.1 Properties of the Kernel

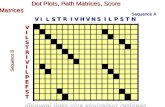

Kernel and image of a map L.

The kernel of L is a linear subspace of the domain V.[1] In the linear map L : V W, two elements of V have thesame image inW if and only if their difference lies in the kernel of L:

L(v1) = L(v2) L(v1 v2) = 0.

1

https://en.wikipedia.org/wiki/Linear_algebrahttps://en.wikipedia.org/wiki/Functional_analysishttps://en.wikipedia.org/wiki/Linear_maphttps://en.wikipedia.org/wiki/Vector_spacehttps://en.wikipedia.org/wiki/Zero_vectorhttps://en.wikipedia.org/wiki/Set-builder_notationhttps://en.wikipedia.org/wiki/Linear_subspacehttps://en.wikipedia.org/wiki/Domain_of_a_functionhttps://en.wikipedia.org/wiki/Image_(mathematics) -

2 CHAPTER 1. KERNEL (LINEAR ALGEBRA)

It follows that the image of L is isomorphic to the quotient of V by the kernel:

im(L) = V / ker(L).

This implies the ranknullity theorem:

dim(kerL) + dim(imL) = dim(V ).

where, by rank we mean the dimension of the image of L, and by nullity that of the kernel of L.When V is an inner product space, the quotient V / ker(L) can be identified with the orthogonal complement in V ofker(L). This is the generalization to linear operators of the row space, or coimage, of a matrix.

1.2 Application to modules

Main article: module (mathematics)

The notion of kernel applies to the homomorphisms of modules, the latter being a generalization of the vector spaceover a field to that over a ring. The domain of the mapping is a "right free module", and the kernel constitutes a"submodule". Here, the concepts of rank and nullity do not necessarily apply.

1.3 The kernel in functional analysis

Main article: topological vector space

If V andW are topological vector spaces (andW is finite-dimensional) then a linear operator L: V W is continuousif and only if the kernel of L is a closed subspace of V.

1.4 Representation as matrix multiplication

Consider a linear map represented as a m n matrix A with coefficients in a field K (typically the field of the realnumbers or of the complex numbers) and operating on column vectors x with n components over K. The kernel ofthis linear map is the set of solutions to the equation A x = 0, where 0 is understood as the zero vector. The dimensionof the kernel of A is called the nullity of A. In set-builder notation,

N(A) = Null(A) = ker(A) = {x Kn|Ax = 0} .

The matrix equation is equivalent to a homogeneous system of linear equations:

Ax = 0

a11x1 + a12x2 + + a1nxn = 0a21x1 + a22x2 + + a2nxn = 0

......

......

am1x1 + am2x2 + + amnxn = 0.

Thus the kernel of A is the same as the solution set to the above homogeneous equations.

https://en.wikipedia.org/wiki/Isomorphismhttps://en.wikipedia.org/wiki/Quotient_space_(linear_algebra)https://en.wikipedia.org/wiki/Rank%E2%80%93nullity_theoremhttps://en.wikipedia.org/wiki/Inner_product_spacehttps://en.wikipedia.org/wiki/Orthogonal_complementhttps://en.wikipedia.org/wiki/Row_spacehttps://en.wikipedia.org/wiki/Module_(mathematics)https://en.wikipedia.org/wiki/Homomorphismhttps://en.wikipedia.org/wiki/Module_(mathematics)https://en.wikipedia.org/wiki/Vector_spacehttps://en.wikipedia.org/wiki/Field_(mathematics)https://en.wikipedia.org/wiki/Ring_(mathematics)https://en.wikipedia.org/wiki/Left_and_right_(algebra)https://en.wikipedia.org/wiki/Free_modulehttps://en.wikipedia.org/wiki/Submodulehttps://en.wikipedia.org/wiki/Topological_vector_spacehttps://en.wikipedia.org/wiki/Topological_vector_spacehttps://en.wikipedia.org/wiki/Continuous_linear_operatorhttps://en.wikipedia.org/wiki/Closed_sethttps://en.wikipedia.org/wiki/Field_(mathematics)https://en.wikipedia.org/wiki/Real_numberhttps://en.wikipedia.org/wiki/Real_numberhttps://en.wikipedia.org/wiki/Complex_numberhttps://en.wikipedia.org/wiki/Zero_vectorhttps://en.wikipedia.org/wiki/Dimension_(vector_space)https://en.wikipedia.org/wiki/Set-builder_notationhttps://en.wikipedia.org/wiki/System_of_linear_equations -

1.4. REPRESENTATION AS MATRIX MULTIPLICATION 3

1.4.1 Subspace properties

The kernel of an m n matrix A over a field K is a linear subspace of Kn. That is, the kernel of A, the set Null(A),has the following three properties:

1. Null(A) always contains the zero vector, since A0 = 0.

2. If x Null(A) and y Null(A), then x + y Null(A). This follows from the distributivity ofmatrixmultiplicationover addition.

3. If x Null(A) and c is a scalar c K, then cx Null(A), since A(cx) = c(Ax) = c0 = 0.

1.4.2 The Row Space of a Matrix

Main article: Ranknullity theorem

The product Ax can be written in terms of the dot product of vectors as follows:

Ax =

a1 xa2 x...

am x

.Here a1, ... , am denote the rows of the matrix A. It follows that x is in the kernel of A if and only if x is orthogonal(or perpendicular) to each of the row vectors of A (because when the dot product of two vectors is equal to zero, theyare by definition orthogonal).The row space, or coimage, of a matrix A is the span of the row vectors of A. By the above reasoning, the kernelof A is the orthogonal complement to the row space. That is, a vector x lies in the kernel of A if and only if it isperpendicular to every vector in the row space of A.The dimension of the row space of A is called the rank of A, and the dimension of the kernel of A is called the nullityof A. These quantities are related by the ranknullity theorem

rank(A) + nullity(A) = n.

1.4.3 Left null space

The left null space, or cokernel, of a matrix A consists of all vectors x such that xTA = 0T, where T denotes thetranspose of a column vector. The left null space of A is the same as the kernel of AT. The left null space of A is theorthogonal complement to the column space of A, and is dual to the cokernel of the associated linear transformation.The kernel, the row space, the column space, and the left null space ofA are the four fundamental subspaces associatedto the matrix A.

1.4.4 Nonhomogeneous systems of linear equations

The kernel also plays a role in the solution to a nonhomogeneous system of linear equations:

Ax = b or

a11x1 + a12x2 + + a1nxn = b1a21x1 + a22x2 + + a2nxn = b2

......

......

am1x1 + am2x2 + + amnxn = bm

https://en.wikipedia.org/wiki/Linear_subspacehttps://en.wikipedia.org/wiki/Zero_vectorhttps://en.wikipedia.org/wiki/Scalar_(mathematics)https://en.wikipedia.org/wiki/Rank%E2%80%93nullity_theoremhttps://en.wikipedia.org/wiki/Dot_producthttps://en.wikipedia.org/wiki/Orthogonalityhttps://en.wikipedia.org/wiki/Row_spacehttps://en.wikipedia.org/wiki/Linear_spanhttps://en.wikipedia.org/wiki/Orthogonal_complementhttps://en.wikipedia.org/wiki/Rank_(linear_algebra)https://en.wikipedia.org/wiki/Rank%E2%80%93nullity_theoremhttps://en.wikipedia.org/wiki/Cokernelhttps://en.wikipedia.org/wiki/Transposehttps://en.wikipedia.org/wiki/Column_spacehttps://en.wikipedia.org/wiki/Cokernelhttps://en.wikipedia.org/wiki/Fundamental_theorem_of_linear_algebra -

4 CHAPTER 1. KERNEL (LINEAR ALGEBRA)

If u and v are two possible solutions to the above equation, then

A(u v) = AuAv = b b = 0

Thus, the difference of any two solutions to the equation Ax = b lies in the kernel of A.It follows that any solution to the equation Ax = b can be expressed as the sum of a fixed solution v and an arbitraryelement of the kernel. That is, the solution set to the equation Ax = b is

{v+ x|Av = b x Null(A)} ,

Geometrically, this says that the solution set to Ax = b is the translation of the kernel of A by the vector v. See alsoFredholm alternative and flat (geometry).

1.5 Illustration

We give here a simple illustration of computing the kernel of a matrix (see the section Basis below for methods bettersuited to more complex calculations.) We also touch on the row space and its relation to the kernel.Consider the matrix

A =

[2 3 5

4 2 3

].

The kernel of this matrix consists of all vectors (x, y, z) R3 for which

[2 3 5

4 2 3

]xyz

= [00

],

which can be expressed as a homogeneous system of linear equations involving x, y, and z:

2x + 3y + 5z = 0,

4x + 2y + 3z = 0,

which can be written in matrix form as:

[2 3 5 04 2 3 0

].

GaussJordan elimination reduces this to:

[1 0 1/16 00 1 13/8 0

].

Rewriting yields:

x = 116

z

y = 138z.

https://en.wikipedia.org/wiki/Translation_(geometry)https://en.wikipedia.org/wiki/Fredholm_alternativehttps://en.wikipedia.org/wiki/Flat_(geometry)https://en.wikipedia.org/wiki/Kernel_(linear_algebra)#Basishttps://en.wikipedia.org/wiki/Real_coordinate_spacehttps://en.wikipedia.org/wiki/System_of_linear_equationshttps://en.wikipedia.org/wiki/Gauss%E2%80%93Jordan_elimination -

1.6. EXAMPLES 5

Now we can express an element of the kernel:

xyz

= c1/1613/8

1

.for c a scalar.Since c is a free variable, this can be expressed equally well as,

xyz

= c 126

16

.The kernel of A is precisely the solution set to these equations (in this case, a line through the origin inR3); the vector(1,26,16)T constitutes a basis of the kernel of A. Thus, the nullity of A is 1.Note also that the following dot products are zero:

[2 3 5

]

12616

= 0 and [ 4 2 3 ] 126

16

= 0,which illustrates that vectors in the kernel of A are orthogonal to each of the row vectors of A.These two (linearly independent) row vectors span the row space of A, a plane orthogonal to the vector (1,26,16)T.With the rank of A 2, the nullity of A 1, and the dimension of A 3, we have an illustration of the rank-nullity theorem.

1.6 Examples If L: Rm Rn, then the kernel of L is the solution set to a homogeneous system of linear equations. As in theabove illustration, if L is the operator:

L(x1, x2, x3) = (2x1 + 3x2 + 5x3, 4x1 + 2x2 + 3x3)

then the kernel of L is the set of solutions to the equations2x1 + 3x2 + 5x3 = 0

4x1 + 2x2 + 3x3 = 0

Let C[0,1] denote the vector space of all continuous real-valued functions on the interval [0,1], and define L:C[0,1] R by the rule

L(f) = f(0.3).

Then the kernel of L consists of all functions f C[0,1] for which f(0.3) = 0.

Let C(R) be the vector space of all infinitely differentiable functions R R, and let D: C(R) C(R) bethe differentiation operator:

D(f) =df

dx.

Then the kernel of D consists of all functions in C(R) whose derivatives are zero, i.e. the set of allconstant functions.

https://en.wikipedia.org/wiki/Scalar_(mathematics)https://en.wikipedia.org/wiki/Free_variablehttps://en.wikipedia.org/wiki/Line_(geometry)https://en.wikipedia.org/wiki/Basis_(linear_algebra)https://en.wikipedia.org/wiki/System_of_linear_equationshttps://en.wikipedia.org/wiki/Vector_spacehttps://en.wikipedia.org/wiki/Differential_operatorhttps://en.wikipedia.org/wiki/Constant_function -

6 CHAPTER 1. KERNEL (LINEAR ALGEBRA)

Let R be the direct product of infinitely many copies of R, and let s: R R be the shift operator

s(x1, x2, x3, x4, . . .) = (x2, x3, x4, . . .).

Then the kernel of s is the one-dimensional subspace consisting of all vectors (x1, 0, 0, ...).

If V is an inner product space and W is a subspace, the kernel of the orthogonal projection V W is theorthogonal complement toW in V.

1.7 Computation by Gaussian elimination

A basis of the kernel of a matrix may be computed by Gaussian elimination.

For this purpose, given an m n matrix A, we construct first the row augmented matrix[

AI

], where I is the n n

identity matrix.

Computing its column echelon form by Gaussian elimination (or any other suitable method), we get a matrix[

BC

].

A basis of the kernel of A consists in the non-zero columns of C such that the corresponding column of B is a zerocolumn.In fact, the computation may be stopped as soon as the upper matrix is in column echelon form: the remainder of thecomputation consists in changing the basis of the vector space generated by the columns whose upper part is zero.For example, suppose that

A =

1 0 3 0 2 80 1 5 0 1 40 0 0 1 7 90 0 0 0 0 0

.Then

[AI

]=

1 0 3 0 2 80 1 5 0 1 40 0 0 1 7 90 0 0 0 0 01 0 0 0 0 00 1 0 0 0 00 0 1 0 0 00 0 0 1 0 00 0 0 0 1 00 0 0 0 0 1

.

Putting the upper part in column echelon form by column operations on the whole matrix gives

[BC

]=

1 0 0 0 0 00 1 0 0 0 00 0 1 0 0 00 0 0 0 0 01 0 0 3 2 80 1 0 5 1 40 0 0 1 0 00 0 1 0 7 90 0 0 0 1 00 0 0 0 0 1

.

https://en.wikipedia.org/wiki/Direct_producthttps://en.wikipedia.org/wiki/Shift_operatorhttps://en.wikipedia.org/wiki/Inner_product_spacehttps://en.wikipedia.org/wiki/Projection_(linear_algebra)https://en.wikipedia.org/wiki/Orthogonal_complementhttps://en.wikipedia.org/wiki/Basis_(linear_algebra)https://en.wikipedia.org/wiki/Gaussian_eliminationhttps://en.wikipedia.org/wiki/Augmented_matrixhttps://en.wikipedia.org/wiki/Identity_matrixhttps://en.wikipedia.org/wiki/Column_echelon_formhttps://en.wikipedia.org/wiki/Zero_matrixhttps://en.wikipedia.org/wiki/Zero_matrix -

1.8. NUMERICAL COMPUTATION 7

The last three columns of B are zero columns. Therefore, the three last vectors of C,

3

51000

,210

710

,

840901

are a basis of the kernel of A.

Since column operations correspond to pre-multiplication by invertible matrices, the fact that[

AI

]reduces to[

BC

]tells us that AC = B . That is, the action ofA via (the columns of) C corresponds to the action ofB . Since

B is in column-echelon form, it acts trivially on only the elementary basis elements corresponding to zero columns inB . Since the action of B corresponds to the action of A via columns of C , the corresponding columns of C mustthen be null columns for A , and must form a basis of the null space of A by the rank-nullity theorem.

1.8 Numerical computation

The problem of computing the kernel on a computer depends on the nature of the coefficients.

1.8.1 Exact coefficients

If the coefficients of thematrix are exactly given numbers, the column echelon form of thematrixmay be computed byBareiss algorithm more efficiently than with Gaussian elimination. It is even more efficient to use modular arithmetic,which reduces the problem to a similar one over a finite field.For coefficients in a finite field, Gaussian elimination works well, but for the large matrices that occur in cryptographyandGrbner basis computation, better algorithms are known, which have roughly the same computational complexity,but are faster and behave better with modern computer hardware.

1.8.2 Floating point computation

For matrices whose entries are floating-point numbers, the problem of computing the kernel makes sense only formatrices such that the number of rows is equal to their rank: because of the rounding errors, a floating-point matrixhas almost always a full rank, even when it is an approximation of a matrix of a much smaller rank. Even for afull-rank matrix, it is possible to compute its kernel only if it is well conditioned, i.e. it has a low condition number.[2]

Even for a well conditioned full rank matrix, Gaussian elimination does not behave correctly: it introduces roundingerrors that are too large for getting a significant result. As the computation of the kernel of a matrix is a specialinstance of solving a homogeneous system of linear equations, the kernel may be computed by any of the variousalgorithms designed to solve homogeneous systems. A state of the art software for this purpose is the Lapack library.

1.9 See also

System of linear equations

Row and column spaces

Row reduction

Four fundamental subspaces

Vector space

https://en.wikipedia.org/wiki/Column_echelon_formhttps://en.wikipedia.org/wiki/Bareiss_algorithmhttps://en.wikipedia.org/wiki/Modular_arithmetichttps://en.wikipedia.org/wiki/Finite_fieldhttps://en.wikipedia.org/wiki/Cryptographyhttps://en.wikipedia.org/wiki/Gr%C3%B6bner_basishttps://en.wikipedia.org/wiki/Analysis_of_algorithmshttps://en.wikipedia.org/wiki/Computer_hardwarehttps://en.wikipedia.org/wiki/Floating-point_numberhttps://en.wikipedia.org/wiki/Rounding_errorhttps://en.wikipedia.org/wiki/Full_rankhttps://en.wikipedia.org/wiki/Well-conditioned_problemhttps://en.wikipedia.org/wiki/Condition_numberhttps://en.wikipedia.org/wiki/Lapackhttps://en.wikipedia.org/wiki/System_of_linear_equationshttps://en.wikipedia.org/wiki/Row_and_column_spaceshttps://en.wikipedia.org/wiki/Row_reductionhttps://en.wikipedia.org/wiki/Four_fundamental_subspaceshttps://en.wikipedia.org/wiki/Vector_space -

8 CHAPTER 1. KERNEL (LINEAR ALGEBRA)

Linear subspace

Linear operator

Function space

Fredholm alternative

1.10 Notes[1] Linear algebra, as discussed in this article, is a very well established mathematical discipline for which there are many

sources. Almost all of the material in this article can be found in Lay 2005, Meyer 2001, and Strang 2005.

[2] https://www.math.ohiou.edu/courses/math3600/lecture11.pdf

1.11 References

See also: Linear algebra Further reading

Axler, Sheldon Jay (1997), Linear Algebra Done Right (2nd ed.), Springer-Verlag, ISBN 0-387-98259-0

Lay, David C. (August 22, 2005), Linear Algebra and Its Applications (3rd ed.), Addison Wesley, ISBN 978-0-321-28713-7

Meyer, Carl D. (February 15, 2001), Matrix Analysis and Applied Linear Algebra, Society for Industrial andApplied Mathematics (SIAM), ISBN 978-0-89871-454-8

Poole, David (2006), Linear Algebra: A Modern Introduction (2nd ed.), Brooks/Cole, ISBN 0-534-99845-3

Anton, Howard (2005), Elementary Linear Algebra (Applications Version) (9th ed.), Wiley International

Leon, Steven J. (2006), Linear Algebra With Applications (7th ed.), Pearson Prentice Hall

Serge Lang (1987). Linear Algebra. Springer. p. 59. ISBN 9780387964126.

Lloyd N. Trefethen and David Bau, III, Numerical Linear Algebra, SIAM 1997, ISBN 978-0-89871-361-9online version

1.12 External links Hazewinkel, Michiel, ed. (2001), Kernel of a matrix, Encyclopedia of Mathematics, Springer, ISBN 978-1-55608-010-4

Gilbert Strang, MIT Linear Algebra Lecture on the Four Fundamental Subspaces at Google Video, from MITOpenCourseWare

Khan Academy, Introduction to the Null Space of a Matrix

https://en.wikipedia.org/wiki/Linear_subspacehttps://en.wikipedia.org/wiki/Linear_operatorhttps://en.wikipedia.org/wiki/Function_spacehttps://en.wikipedia.org/wiki/Fredholm_alternativehttps://www.math.ohiou.edu/courses/math3600/lecture11.pdfhttps://en.wikipedia.org/wiki/Linear_algebra#Further_readinghttps://en.wikipedia.org/wiki/International_Standard_Book_Numberhttps://en.wikipedia.org/wiki/Special:BookSources/0-387-98259-0https://en.wikipedia.org/wiki/International_Standard_Book_Numberhttps://en.wikipedia.org/wiki/Special:BookSources/978-0-321-28713-7https://en.wikipedia.org/wiki/Special:BookSources/978-0-321-28713-7http://www.matrixanalysis.com/DownloadChapters.htmlhttps://en.wikipedia.org/wiki/International_Standard_Book_Numberhttps://en.wikipedia.org/wiki/Special:BookSources/978-0-89871-454-8https://en.wikipedia.org/wiki/International_Standard_Book_Numberhttps://en.wikipedia.org/wiki/Special:BookSources/0-534-99845-3https://en.wikipedia.org/wiki/International_Standard_Book_Numberhttps://en.wikipedia.org/wiki/Special:BookSources/9780387964126https://en.wikipedia.org/wiki/Special:BookSources/9780898713619http://web.comlab.ox.ac.uk/oucl/work/nick.trefethen/text.htmlhttp://www.encyclopediaofmath.org/index.php?title=p/k110090https://en.wikipedia.org/wiki/Encyclopedia_of_Mathematicshttps://en.wikipedia.org/wiki/Springer_Science+Business_Mediahttps://en.wikipedia.org/wiki/International_Standard_Book_Numberhttps://en.wikipedia.org/wiki/Special:BookSources/978-1-55608-010-4https://en.wikipedia.org/wiki/Special:BookSources/978-1-55608-010-4https://en.wikipedia.org/wiki/Gilbert_Stranghttp://ocw.mit.edu/OcwWeb/Mathematics/18-06Spring-2005/VideoLectures/detail/lecture10.htmhttps://en.wikipedia.org/wiki/MIT_OpenCourseWarehttps://en.wikipedia.org/wiki/MIT_OpenCourseWarehttps://en.wikipedia.org/wiki/Khan_Academyhttp://www.khanacademy.org/video/introduction-to-the-null-space-of-a-matrix -

Chapter 2

Krawtchouk matrices

In mathematics,Krawtchouk matrices are matrices whose entries are values of Krawtchouk polynomials at nonneg-ative integer points.[1] [2] The Krawtchouk matrix K(N) is an (N+1)(N+1) matrix. Here are the first few examples:

K(0) =[1]

K(1) =

[1 11 1

]K(2) =

1 1 12 0 21 1 1

K(3) =

1 1 1 13 1 1 33 1 1 31 1 1 1

K(4) =

1 1 1 1 14 2 0 2 46 0 2 0 64 2 0 2 41 1 1 1 1

K(5) =

1 1 1 1 1 15 3 1 1 3 510 2 2 2 2 1010 2 2 2 2 105 3 1 1 3 51 1 1 1 1 1

.

In general, for positive integer N , the entriesK(N)ij are given via the generating function

(1 + v)Nj (1 v)j =i

viK(N)ij

where the row and column indices i and j run from 0 to N .These Krawtchouk polynomials are orthogonal with respect to symmetric binomial distributions, p = 1/2 .[3]

2.1 See also Square matrix

2.2 References[1] N. Bose, Digital Filters: Theory and Applications [North-Holland Elsevier, N.Y., 1985]

[2] P. Feinsilver, J. Kocik: Krawtchouk polynomials andKrawtchoukmatrices,Recent advances in applied probability, Springer-Verlag, October, 2004

[3] Hahn Class: Definitions

2.3 External links Krawtchouk encyclopedia

9

https://en.wikipedia.org/wiki/Mathematicshttps://en.wikipedia.org/wiki/Matrix_(mathematics)https://en.wikipedia.org/wiki/Krawtchouk_polynomialshttps://en.wikipedia.org/wiki/Matrix_(mathematics)#Square_matriceshttp://arxiv.org/abs/quant-ph/0702073http://arxiv.org/abs/quant-ph/0702073http://dlmf.nist.gov/18.19http://chanoir.math.siu.edu/kravchyk -

Chapter 3

Laplacian matrix

In the mathematical field of graph theory, the Laplacian matrix, sometimes called admittance matrix, Kirchhoffmatrix or discrete Laplacian, is a matrix representation of a graph. Together with Kirchhoffs theorem, it can beused to calculate the number of spanning trees for a given graph. The Laplacian matrix can be used to find manyother properties of the graph. Cheegers inequality from Riemannian geometry has a discrete analogue involving theLaplacian matrix; this is perhaps the most important theorem in spectral graph theory and one of the most usefulfacts in algorithmic applications. It approximates the sparsest cut of a graph through the second eigenvalue of itsLaplacian.

3.1 Definition

Given a simple graph G with n vertices, its Laplacian matrix Lnn is defined as:[1]

L = D A,

where D is the degree matrix and A is the adjacency matrix of the graph. In the case of directed graphs, either theindegree or outdegree might be used, depending on the application.The elements of L are given by

Li,j :=

deg(vi) if i = j1 if i = j and vi is adjacent to vj0 otherwise

where deg(vi) is degree of the vertex i.The symmetric normalized Laplacian matrix is defined as:[1]

Lsym := D1/2LD1/2 = I D1/2AD1/2

The elements of Lsym are given by

Lsymi,j :=

1 if i = j and deg(vi) = 0 1

deg(vi) deg(vj)if i = j and vi is adjacent to vj

0 otherwise.

The random-walk normalized Laplacian matrix is defined as:

10

https://en.wikipedia.org/wiki/Mathematicshttps://en.wikipedia.org/wiki/Graph_theoryhttps://en.wikipedia.org/wiki/Matrix_(mathematics)https://en.wikipedia.org/wiki/Graph_(mathematics)https://en.wikipedia.org/wiki/Kirchhoff%2527s_theoremhttps://en.wikipedia.org/wiki/Spanning_tree_(mathematics)https://en.wikipedia.org/wiki/Cheeger_constant#Cheeger.27s_inequalityhttps://en.wikipedia.org/wiki/Riemannian_geometryhttps://en.wikipedia.org/wiki/Spectral_graph_theoryhttps://en.wikipedia.org/wiki/Simple_graphhttps://en.wikipedia.org/wiki/Degree_matrixhttps://en.wikipedia.org/wiki/Adjacency_matrixhttps://en.wikipedia.org/wiki/Directed_graphhttps://en.wikipedia.org/wiki/Degree_(graph_theory)https://en.wikipedia.org/wiki/Laplacian_matrix#Symmetric_normalized_Laplacianhttps://en.wikipedia.org/wiki/Laplacian_matrix#Random_walk_normalized_Laplacian -

3.2. EXAMPLE 11

Lrw := D1L = I D1A

The elements of Lrw are given by

Lrwi,j :=

1 if i = j and deg(vi) = 0 1deg(vi) if i = j and vi is adjacent to vj0 otherwise.

3.2 Example

Here is a simple example of a labeled graph and its Laplacian matrix.

3.3 Properties

For an (undirected) graph G and its Laplacian matrix L with eigenvalues 0 1 n1 :

L is symmetric.

L is positive-semidefinite (that is i 0 for all i). This is verified in the incidence matrix section (below). Thiscan also be seen from the fact that the Laplacian is symmetric and diagonally dominant.

L is an M-matrix (its off-diagonal entries are nonpositive, yet the real parts of its eigenvalues are nonnegative).

Every row sum and column sum of L is zero. Indeed, in the sum, the degree of the vertex is summed with a"1 for each neighbor.

In consequence, 0 = 0 , because the vector v0 = (1, 1, . . . , 1) satisfies Lv0 = 0.

The number of times 0 appears as an eigenvalue in the Laplacian is the number of connected components inthe graph.

The smallest non-zero eigenvalue of L is called the spectral gap.

The second smallest eigenvalue of L is the algebraic connectivity (or Fiedler value) of G.

The Laplacian is an operator on the n-dimensional vector space of functions f : V R , where V is the vertexset of G, and n = |V|.

When G is k-regular, the normalized Laplacian is: L = 1kL = I 1kA , where A is the adjacency matrix andI is an identity matrix.

For a graph with multiple connected components, L is a block diagonal matrix, where each block is the respec-tive Laplacian matrix for each component, possibly after reordering the vertices (i.e. L is permutation-similarto a block diagonal matrix).

3.4 Incidence matrix

Define an |e| x |v| oriented incidence matrix M with element Mev for edge e (connecting vertex i and j, with i > j)and vertex v given by

Mev =

1, if v = i1, if v = j0, otherwise.

https://en.wikipedia.org/wiki/Eigenvalueshttps://en.wikipedia.org/wiki/Positive-definite_matrixhttps://en.wikipedia.org/wiki/Laplacian_matrix#Incidence_matrixhttps://en.wikipedia.org/wiki/Diagonally_dominant_matrix#Applications_and_propertieshttps://en.wikipedia.org/wiki/M-matrixhttps://en.wikipedia.org/wiki/Connected_component_(graph_theory)https://en.wikipedia.org/wiki/Spectral_gaphttps://en.wikipedia.org/wiki/Algebraic_connectivityhttps://en.wikipedia.org/wiki/Fiedler_valuehttps://en.wikipedia.org/wiki/Laplacianhttps://en.wikipedia.org/wiki/Connected_component_(graph_theory)https://en.wikipedia.org/wiki/Block_matrix#Block_diagonal_matriceshttps://en.wikipedia.org/wiki/Incidence_matrix -

12 CHAPTER 3. LAPLACIAN MATRIX

Then the Laplacian matrix L satisfies

L = MTM ,

whereMT is the matrix transpose of M.Now consider an eigendecomposition of L , with unit-norm eigenvectors vi and corresponding eigenvalues i :

i = vTi Lvi= vTi MTMvi= (Mvi)T (Mvi).

Because i can be written as the inner product of the vector Mvi with itself, this shows that i 0 and so theeigenvalues of L are all non-negative.

3.5 Deformed Laplacian

The deformed Laplacian is commonly defined as

(s) = I sA+ s2(D I)

where I is the unit matrix, A is the adjacency matrix, and D is the degree matrix, and s is a (complex-valued) number.Note that the standard Laplacian is just(1) .[2]

3.6 Symmetric normalized Laplacian

The (symmetric) normalized Laplacian is defined as

Lsym := D1/2LD1/2 = I D1/2AD1/2

where L is the (unnormalized) Laplacian, A is the adjacency matrix and D is the degree matrix. Since the degreematrix D is diagonal and positive, its reciprocal square rootD1/2 is just the diagonal matrix whose diagonal entriesare the reciprocals of the positive square roots of the diagonal entries of D. The symmetric normalized Laplacian isa symmetric matrix.One has: Lsym = SS , where S is the matrix whose rows are indexed by the vertices and whose columns areindexed by the edges of G such that each column corresponding to an edge e = {u, v} has an entry 1

duin the row

corresponding to u, an entry 1dv

in the row corresponding to v, and has 0 entries elsewhere. (Note: S denotesthe transpose of S).All eigenvalues of the normalized Laplacian are real and non-negative. We can see this as follows. Since Lsym issymmetric, its eigenvalues are real. They are also non-negative: consider an eigenvector g of Lsym with eigenvalue and suppose g = D1/2f . (We can consider g and f as real functions on the vertices v.) Then:

=g, Lsymgg, g

=g,D1/2LD1/2g

g, g=

f, LfD1/2f,D1/2f

=

uv(f(u) f(v))2

v f(v)2dv

> 0,

where we use the inner product f, g =

v f(v)g(v) , a sum over all vertices v, and

uv denotes the sum overall unordered pairs of adjacent vertices {u,v}. The quantity

u,v(f(u) f(v))2 is called the Dirichlet sum of f,

whereas the expression g,Lsymg

g,g is called the Rayleigh quotient of g.

https://en.wikipedia.org/wiki/Transpose -

3.7. RANDOM WALK NORMALIZED LAPLACIAN 13

Let 1 be the function which assumes the value 1 on each vertex. Then D1/21 is an eigenfunction of Lsym witheigenvalue 0.[3]

In fact, the eigenvalues of the normalized symmetric Laplacian satisfy 0 = 0... - 2. These eigenvalues (knownas the spectrum of the normalized Laplacian) relate well to other graph invariants for general graphs.[4]

3.7 Random walk normalized Laplacian

The random walk normalized Laplacian is defined as

Lrw := D1A

where A is the Adjacency matrix and D is the degree matrix. Since the degree matrix D is diagonal, its inverseD1is simply defined as a diagonal matrix, having diagonal entries which are the reciprocals of the corresponding positivediagonal entries of D. For the isolated vertices (those with degree 0), a common choice is to set the correspondingelement Lrwi,i to 0. This convention results in a nice property that the multiplicity of the eigenvalue 0 is equal to thenumber of connected components in the graph. The matrix elements of Lrw are given by

Lrwi,j :=

1 if i = j and deg(vi) = 0 1deg(vi) if i = j and vi is adjacent to vj0 otherwise.

The name of the random-walk normalized Laplacian comes from the fact that this matrix is simply the transitionmatrix of a random walker on the graph. For example let ei denote the i-th standard basis vector, then x = eiLrwis a probability vector representing the distribution of a random-walkers locations after taking a single step fromvertex i . i.e. xj = P(vi vj) . More generally if the vector x is a probability distribution of the location of arandom-walker on the vertices of the graph then x = x(Lrw)t is the probability distribution of the walker after tsteps.One can check that

Lrw = D12 (I Lsym)D 12

i.e., Lrw is similar to the normalized Laplacian Lsym . For this reason, even if Lrw is in general not hermitian, it hasreal eigenvalues. Indeed, its eigenvalues agree with those of Lsym (which is hermitian) up to a reflection about 1/2.In some of the literature, the matrix I D1A is also referred to as the random-walk Laplacian since its propertiesapproximate those of the standard discrete Laplacian from numerical analysis.

3.7.1 Graphs

As an aside about random walks on graphs, consider a simple undirected graph. Consider the probability that thewalker is at the vertex i at time t, given the probability distribution that he was at vertex j at time t-1 (assuming auniform chance of taking a step along any of the edges attached to a given vertex):

pi(t) =j

Aijdeg(vj)

pj(t 1),

or in matrix-vector notation:

p(t) = AD1p(t 1).

(Equilibrium, which sets in as t , is defined by p = AD1p .)

https://en.wikipedia.org/wiki/Standard_basishttps://en.wikipedia.org/wiki/Probability_vectorhttps://en.wikipedia.org/wiki/Random_walk#Random_walk_on_graphshttps://en.wikipedia.org/wiki/Graph_(mathematics)#Undirected_graph -

14 CHAPTER 3. LAPLACIAN MATRIX

We can rewrite this relation as

D12 p(t) =

[D

12AD

12

]D

12 p(t 1).

Areduced D12AD

12 is a symmetric matrix called the reduced adjacency matrix. So, taking steps on this

random walk requires taking powers of Areduced , which is a simple operation because Areduced is symmetric.

3.8 Interpretation as the discrete Laplace operator

The Laplacian matrix can be interpreted as a matrix representation of a particular case of the discrete Laplace oper-ator. Such an interpretation allows one, e.g., to generalise the Laplacian matrix to the case of graphs with an infinitenumber of vertices and edges, leading to a Laplacian matrix of an infinite size.To expand upon this, we can describe the change of some element i (with some constant k) as

didt

= kj

Aij(i j)

= kij

Aij + kj

Aijj

= ki deg(vi) + kj

Aijj

= kj

(ij deg(vi)Aij)j

= kj

(ij)j .

In matrix-vector notation,

d

dt= k(D A)

= kL,

which gives

d

dt+ kL = 0.

Notice that this equation takes the same form as the heat equation, where the matrix L is replacing the Laplacianoperator2 ; hence, the graph Laplacian.To find a solution to this differential equation, apply standard techniques for solving a first-order matrix differentialequation. That is, write as a linear combination of eigenvectors vi of L (so that Lvi = ivi ), with time-dependent =

i

civi.

Plugging into the original expression (note that we will use the fact that because L is a symmetric matrix, its unit-normeigenvectors vi are orthogonal):

https://en.wikipedia.org/wiki/Discrete_Laplace_operatorhttps://en.wikipedia.org/wiki/Discrete_Laplace_operatorhttps://en.wikipedia.org/wiki/Heat_equationhttps://en.wikipedia.org/wiki/Matrix_differential_equationhttps://en.wikipedia.org/wiki/Matrix_differential_equation -

3.8. INTERPRETATION AS THE DISCRETE LAPLACE OPERATOR 15

d(

i civi)dt

+ kL(i

civi) = 0

i

[dcidt

vi + kciLvi]=

i

[dcidt

vi + kciivi]=

dcidt

+ kici = 0,

whose solution is

ci(t) = ci(0) exp(kit).

As shown before, the eigenvalues i of L are non-negative, showing that the solution to the diffusion equation ap-proaches an equilibrium, because it only exponentially decays or remains constant. This also shows that given i andthe initial condition ci(0) , the solution at any time t can be found.[5]

To find ci(0) for each i in terms of the overall initial condition (0) , simply project (0) onto the unit-norm eigen-vectors vi ;ci(0) = (0), vi .In the case of undirected graphs, this works because L is symmetric, and by the spectral theorem, its eigenvectorsare all orthogonal. So the projection onto the eigenvectors of L is simply an orthogonal coordinate transformation ofthe initial condition to a set of coordinates which decay exponentially and independently of each other.

3.8.1 Equilibrium Behavior

To understand limt (t) , note that the only terms ci(t) = ci(0) exp(kit) that remain are those where i = 0, since

limt exp(kit) ={

0 if i > 01 if i = 0

}In other words, the equilibrium state of the system is determined completely by the kernel of L . Since by definition,

j Lij = 0 , the vector v1 of all ones is in the kernel. Note also that if there are k disjoint connected componentsin the graph, then this vector of all ones can be split into the sum of k independent = 0 eigenvectors of ones andzeros, where each connected component corresponds to an eigenvector with ones at the elements in the connectedcomponent and zeros elsewhere.The consequence of this is that for a given initial condition c(0) for a graph with N verticeslimt (t) = c(0), v1v1

wherev1 = 1

N[1, 1, ..., 1]

For each element j of , i.e. for each vertex j in the graph, it can be rewritten as

limt j(t) = 1NN

i=1 ci(0) .In other words, at steady state, the value of converges to the same value at each of the vertices of the graph, whichis the average of the initial values at all of the vertices. Since this is the solution to the heat diffusion equation, thismakes perfect sense intuitively. We expect that neighboring elements in the graph will exchange energy until thatenergy is spread out evenly throughout all of the elements that are connected to each other.

3.8.2 Example of the Operator on a Grid

This section shows an example of a function diffusing over time through a graph. The graph in this example isconstructed on a 2D discrete grid, with points on the grid connected to their eight neighbors. Three initial points are

https://en.wikipedia.org/wiki/Spectral_theoremhttps://en.wikipedia.org/wiki/Kernel_(linear_algebra)https://en.wikipedia.org/wiki/Connected_component_(graph_theory) -

16 CHAPTER 3. LAPLACIAN MATRIX

This GIF shows the progression of diffusion, as solved by the graph laplacian technique. A graph is constructed over a grid, whereeach pixel in the graph is connected to its 8 bordering pixels. Values in the image then diffuse smoothly to their neighbors over timevia these connections. This particular image starts off with three strong point values which spill over to their neighbors slowly. Thewhole system eventually settles out to the same value at equilibrium.

specified to have a positive value, while the rest of the values in the grid are zero. Over time, the exponential decayacts to distribute the values at these points evenly throughout the entire grid.The complete Matlab source code that was used to generate this animation is provided below. It shows the processof specifying initial conditions, projecting these initial conditions onto the eigenvalues of the Laplacian Matrix, andsimulating the exponential decay of these projected initial conditions.N = 20;%The number of pixels along a dimension of the image A = zeros(N, N);%The image Adj = zeros(N*N,N*N);%The adjacency matrix %Use 8 neighbors, and fill in the adjacency matrix dx = [1, 0, 1, 1, 1, 1, 0, 1];dy = [1, 1, 1, 0, 0, 1, 1, 1]; for x = 1:N for y = 1:N index = (x-1)*N + y; for ne = 1:length(dx) newx = x +dx(ne); newy = y + dy(ne); if newx > 0 && newx 0 && newy

-

3.9. APPROXIMATION TO THE NEGATIVE CONTINUOUS LAPLACIAN 17

3.9 Approximation to the negative continuous Laplacian

The graph Laplacian matrix can be further viewed as a matrix form of an approximation to the (positive semi-definite)Laplacian operator obtained by the finite difference method.[6] In this interpretation, every graph vertex is treated asa grid point; the local connectivity of the vertex determines the finite difference approximation stencil at this gridpoint, the grid size is always one for every edge, and there are no constraints on any grid points, which correspondsto the case of the homogeneous Neumann boundary condition, i.e., free boundary.

3.10 In Directed Multigraphs

An analogue of the Laplacian matrix can be defined for directed multigraphs.[7] In this case the Laplacian matrix Lis defined as

L = D A

whereD is a diagonal matrix withDi,i equal to the outdegree of vertex i and A is a matix with Ai,j equal to the numberof edges from i to j (including loops).

3.11 See also Stiffness matrix

Resistance distance

3.12 References[1] Weisstein, Eric W., Laplacian Matrix, MathWorld.

[2] The Deformed Consensus Protocol, F. Morbidi, Automatica, vol. 49, n. 10, pp. 3049-3055, October 2013.

[3] Chung, Fan R.K. (1997). Spectral graph theory (Repr. with corr., 2. [pr.] ed.). Providence, RI: American Math. Soc.ISBN 0-8218-0315-8.

[4] Chung, Fan (1997) [1992]. Spectral Graph Theory. American Mathematical Society. ISBN 0821803158.

[5] Newman, Mark (2010). Networks: An Introduction. Oxford University Press. ISBN 0199206651.

[6] Smola, Alexander J.; Kondor, Risi (2003), Kernels and regularization on graphs, Learning Theory and Kernel Machines:16th Annual Conference on Learning Theory and 7th Kernel Workshop, COLT/Kernel 2003, Washington, DC, USA, August24-27, 2003, Proceedings, Lecture Notes in Computer Science 2777, Springer, pp. 144158, doi:10.1007/978-3-540-45167-9_12.

[7] Chaiken, S. and Kleitman, D. (1978). Matrix Tree Theorems. Journal of Combinatorial Theory, Series A 24 (3): 377 381. ISSN 0097-3165.

T. Sunada, Discrete geometric analysis, Proceedings of Symposia in Pure Mathematics, (ed. by P. Exner, J. P.Keating, P. Kuchment, T. Sunada, A. Teplyaev), 77 (2008), 51-86.

B. Bollobas, Modern Graph Theory, Springer-Verlag (1998, corrected ed. 2013), ISBN 0-387-98488-7,Chapters II.3 (Vector Spaces and Matrices Associated with Graphs), VIII.2 (The Adjacency Matrix and theLaplacian), IX.2 (Electrical Networks and Random Walks).

https://en.wikipedia.org/wiki/Laplacianhttps://en.wikipedia.org/wiki/Finite_difference_methodhttps://en.wikipedia.org/wiki/Stencil_(numerical_analysis)https://en.wikipedia.org/wiki/Neumann_boundary_conditionhttps://en.wikipedia.org/wiki/Stiffness_matrixhttps://en.wikipedia.org/wiki/Resistance_distancehttps://en.wikipedia.org/wiki/Eric_W._Weissteinhttp://mathworld.wolfram.com/LaplacianMatrix.htmlhttps://en.wikipedia.org/wiki/MathWorldhttps://en.wikipedia.org/wiki/International_Standard_Book_Numberhttps://en.wikipedia.org/wiki/Special:BookSources/0-8218-0315-8http://www.math.ucsd.edu/~fan/research/revised.htmlhttps://en.wikipedia.org/wiki/International_Standard_Book_Numberhttps://en.wikipedia.org/wiki/Special:BookSources/0821803158https://en.wikipedia.org/wiki/International_Standard_Book_Numberhttps://en.wikipedia.org/wiki/Special:BookSources/0199206651https://en.wikipedia.org/wiki/Digital_object_identifierhttps://dx.doi.org/10.1007%252F978-3-540-45167-9_12https://dx.doi.org/10.1007%252F978-3-540-45167-9_12http://www.sciencedirect.com/science/article/pii/0097316578900675https://en.wikipedia.org/wiki/International_Standard_Serial_Numberhttps://www.worldcat.org/issn/0097-3165https://en.wikipedia.org/wiki/Special:BookSources/0387984887 -

18 CHAPTER 3. LAPLACIAN MATRIX

3.13 Text and image sources, contributors, and licenses

3.13.1 Text Kernel (linear algebra) Source: https://en.wikipedia.org/wiki/Kernel_(linear_algebra)?oldid=672741591Contributors: Dysprosia, Joriki,

BD2412, SmackBot, Incnis Mrsi, Saippuakauppias, Jim.belk, Thijs!bot, RobHar, Magioladitis, Franp9am, R'n'B, Squids and Chips,LokiClock, Jmath666, ArthurOgawa, Alexbot, WikHead, Guy1890, AnomieBOT, Isheden, WaysToEscape, Erik9bot, FrescoBot, Bill-smithaustin, Quondum, D.Lazard, MerlIwBot, BG19bot, Kephir, Tomasz59 and Anonymous: 21

Krawtchouk matrices Source: https://en.wikipedia.org/wiki/Krawtchouk_matrices?oldid=632979006 Contributors: XJaM, Giftlite,Bender235, CBM, Magioladitis, Bearian, Addbot, Tkasprow, Jkocik, Zigli Lucien, EmausBot, ZroBot and Qetuth

Laplacian matrix Source: https://en.wikipedia.org/wiki/Laplacian_matrix?oldid=672818609 Contributors: SimonP, Tomo, MichaelHardy, Meekohi, Doradus, Zero0000, MathMartin, David Gerard, Giftlite, BenFrantzDale, Dratman, Andreas Kaufmann, Jrme, Linas,Jfr26, Snichols15, Arbor, Mathbot, Maxal, YurikBot, Michael Slone, SmackBot, Taxipom, Juffi, Georgevulov, Hiiiiiiiiiiiiiiiiiiiii, Gensdei,Amit Moscovich, Thijs!bot, David Eppstein, Robin S, Quantling, Epistemenical, TXiKiBoT, Mild Bill Hiccup, Yifeng zhou, Mleconte,IMneme, Addbot, Gtgith, Luckas-bot, Yobot, AnomieBOT, Megatang, Aminrahimian, Daniel.noland, Pnzrusher, Delio.mugnolo, 2an-drewknyazev, Dhirsbrunner, Bazuz, Helpful Pixie Bot, Svebert, Dlituiev, BattyBot, Daniel.Soudry, Altroware, Boxseat, More than onehalf, Coal scuttle, Ctralie, Ako90, Mcallara, Mattatk and Anonymous: 46

3.13.2 Images File:6n-graf.svg Source: https://upload.wikimedia.org/wikipedia/commons/5/5b/6n-graf.svgLicense: Public domainContributors: Image:

6n-graf.png simlar input data Original artist: User:AzaToth File:Edit-clear.svg Source: https://upload.wikimedia.org/wikipedia/en/f/f2/Edit-clear.svg License: Public domain Contributors: TheTango! Desktop Project. Original artist:The people from the Tango! project. And according to themeta-data in the file, specifically: Andreas Nilsson, and Jakub Steiner (althoughminimally).

File:Graph_Laplacian_Diffusion_Example.gif Source: https://upload.wikimedia.org/wikipedia/commons/e/e1/Graph_Laplacian_Diffusion_Example.gif License: CC BY-SA 3.0 Contributors: Own work Original artist: Ctralie

File:KerIm_2015Joz_L2.png Source: https://upload.wikimedia.org/wikipedia/commons/4/4c/KerIm_2015Joz_L2.png License: CCBY-SA 4.0 Contributors: Own work Original artist: Tomasz59

File:Rubik{}s_cube_v3.svg Source: https://upload.wikimedia.org/wikipedia/commons/b/b6/Rubik%27s_cube_v3.svg License: CC-BY-SA-3.0 Contributors: Image:Rubik{}s cube v2.svg Original artist: User:Booyabazooka, User:Meph666 modified by User:Niabot

File:Wikibooks-logo-en-noslogan.svg Source: https://upload.wikimedia.org/wikipedia/commons/d/df/Wikibooks-logo-en-noslogan.svg License: CC BY-SA 3.0 Contributors: Own work Original artist: User:Bastique, User:Ramac et al.

3.13.3 Content license Creative Commons Attribution-Share Alike 3.0

https://en.wikipedia.org/wiki/Kernel_(linear_algebra)?oldid=672741591https://en.wikipedia.org/wiki/Krawtchouk_matrices?oldid=632979006https://en.wikipedia.org/wiki/Laplacian_matrix?oldid=672818609https://upload.wikimedia.org/wikipedia/commons/5/5b/6n-graf.svg//commons.wikimedia.org/wiki/File:6n-graf.png//commons.wikimedia.org/wiki/File:6n-graf.png//commons.wikimedia.org/wiki/User:AzaTothhttps://upload.wikimedia.org/wikipedia/en/f/f2/Edit-clear.svghttp://tango.freedesktop.org/Tango_Desktop_Projecthttp://tango.freedesktop.org/The_Peoplehttps://upload.wikimedia.org/wikipedia/commons/e/e1/Graph_Laplacian_Diffusion_Example.gifhttps://upload.wikimedia.org/wikipedia/commons/e/e1/Graph_Laplacian_Diffusion_Example.gif//commons.wikimedia.org/w/index.php?title=User:Ctralie&action=edit&redlink=1https://upload.wikimedia.org/wikipedia/commons/4/4c/KerIm_2015Joz_L2.png//commons.wikimedia.org/w/index.php?title=User:Tomasz59&action=edit&redlink=1https://upload.wikimedia.org/wikipedia/commons/b/b6/Rubik%2527s_cube_v3.svg//commons.wikimedia.org/wiki/File:Rubik%2527s_cube_v2.svg//commons.wikimedia.org/wiki/User:Booyabazooka//commons.wikimedia.org/w/index.php?title=User:Meph666&action=edit&redlink=1//commons.wikimedia.org/wiki/User:Niabothttps://upload.wikimedia.org/wikipedia/commons/d/df/Wikibooks-logo-en-noslogan.svghttps://upload.wikimedia.org/wikipedia/commons/d/df/Wikibooks-logo-en-noslogan.svg//commons.wikimedia.org/wiki/User:Bastique//commons.wikimedia.org/wiki/User:Ramachttps://creativecommons.org/licenses/by-sa/3.0/Kernel (linear algebra)Properties of the Kernel Application to modulesThe kernel in functional analysisRepresentation as matrix multiplication Subspace properties The Row Space of a MatrixLeft null spaceNonhomogeneous systems of linear equationsIllustration Examples Computation by Gaussian eliminationNumerical computation Exact coefficientsFloating point computationSee alsoNotesReferencesExternal linksKrawtchouk matricesSee alsoReferencesExternal linksLaplacian matrixDefinitionExamplePropertiesIncidence matrix Deformed Laplacian Symmetric normalized Laplacian Random walk normalized Laplacian Graphs Interpretation as the discrete Laplace operator Equilibrium Behavior Example of the Operator on a Grid Approximation to the negative continuous Laplacian In Directed Multigraphs See also References Text and image sources, contributors, and licensesTextImagesContent license