MAC Fall Symposium: Learning What You Want to Know and Implementing Change

Elizabeth Yakel, Ph.D.
October 22, 2010

Outline

• Asking good research questions
• Identifying the right method to get the answers
• Operationalizing your concepts
• Piloting your instruments
• Implementing the study
  – Identifying subjects
  – Sample size
• Human subjects issues

Asking Good Research Questions

• What do you want to know?

• Selecting the right method for what you want to answer

Implications of Questions

• Reference
  – Satisfaction levels; user needs
• Collections usage
  – Measuring use; citation analysis
• Website or finding aids
  – Ease of use; usage patterns
• Instruction
  – Evaluation of teaching; impact of primary sources on learning

Reference

• User-based evaluation
  – How satisfied are researchers with public services in my repository?
  – Getting researchers’ opinions about operations to improve service
• User needs
  – How do researchers’ questions evolve during an archives visit, and how can I better support their resource discovery?
  – Understanding general information-seeking patterns and research questions

Collections Usage

• Measuring use
  – What are the patterns in collection requests, and do my retrieval schedule and reading room staffing support this level of use?
  – Assess workflow around collection retrieval (rate and times for retrieval, time from request to
  – Assess levels of staffing in the reading room
• Quality / Nature of use
  – How do researchers actually use collections in my repository?
  – Citation patterns / rates
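One way to start on the request-pattern question above is to tally call-slip requests by hour of day and by weekday, then compare the peaks with the retrieval schedule and reading room staffing. This is a minimal sketch that assumes call slips have been transcribed into timestamp strings; the sample data and the helper names (`requests_by_hour`, `requests_by_weekday`) are hypothetical.

```python
from collections import Counter
from datetime import datetime

# Hypothetical call-slip data: the time each request was submitted.
call_slips = [
    "2010-09-13 09:15",
    "2010-09-13 09:40",
    "2010-09-13 13:05",
    "2010-09-14 09:55",
    "2010-09-14 14:20",
]

FMT = "%Y-%m-%d %H:%M"

def requests_by_hour(timestamps):
    """Count requests by hour of day (0-23)."""
    return Counter(datetime.strptime(ts, FMT).hour for ts in timestamps)

def requests_by_weekday(timestamps):
    """Count requests by weekday name (e.g. 'Monday')."""
    return Counter(datetime.strptime(ts, FMT).strftime("%A") for ts in timestamps)

by_hour = requests_by_hour(call_slips)
for hour in sorted(by_hour):
    print(f"{hour:02d}:00  {by_hour[hour]} request(s)")

for day, count in requests_by_weekday(call_slips).most_common():
    print(f"{day:10s} {count} request(s)")
```

With a full year of call slips, a cluster of requests just before a retrieval run or a staffing gap would stand out in these counts.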

Website

• Ease of use
  – What problems do users encounter on my website?
  – Make changes to the website to improve the user experience
• Patterns of use
  – How do visitors find my website, and what do the committed visitors do when they are there?

Instruction

• Evaluation of teaching
  – How can I improve my lecture on using the archives?
• Assessment of learning
  – What is the impact of using the archives on students’ critical thinking skills?

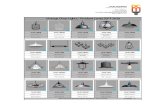

METHODS: Non-invasive and Invasive

• Reference
  – Non-invasive: observation; statistics (# of readers, registrants, questions); content analysis (online chat or email reference)
  – Invasive: surveys; focus groups; interviews
• Web site
  – Non-invasive: web analytics; content analysis of search terms
  – Invasive: surveys; usability tests; focus groups; interviews
• Collections usage
  – Non-invasive: statistics (circulation); call slips; citation analysis
  – Invasive: surveys; focus groups; interviews
• Finding aids
  – Non-invasive: web analytics; content analysis of search terms; observation; Web 2.0 (analysis of comments/tags)
  – Invasive: surveys; focus groups; interviews; usability tests
• Instruction
  – Non-invasive: statistics (# of classes taught, # of participants); # of archives visits after session
  – Invasive: grades / student assessment; surveys; focus groups; interviews; field experiment
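Content analysis of search terms can begin with a simple frequency count before any manual coding into categories. The sketch below assumes the search log has been exported as a list of strings; the log contents and the helpers `normalize` and `top_terms` are hypothetical, and a real analysis would go further (grouping synonyms, coding terms by topic).

```python
from collections import Counter

# Hypothetical search log exported from a website or finding-aid system.
search_log = [
    "Civil War letters",
    "civil war  letters",
    "hours",
    "Parking",
    "hours ",
    "family history",
]

def normalize(term):
    """Lowercase and collapse whitespace so trivial variants count together."""
    return " ".join(term.lower().split())

def top_terms(log, n=3):
    """Return the n most frequent normalized search terms with their counts."""
    return Counter(normalize(t) for t in log).most_common(n)

for term, count in top_terms(search_log):
    print(f"{count:3d}  {term}")
```

Frequent searches for "hours" or "parking" would suggest that basic visitor information is hard to find on the site.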

Operationalizing Concepts

• Reference
  – Satisfaction with services
    • Which services?
    • What is the nature of the satisfaction?
  – Friendliness of the staff
  – Time to retrieve materials
  – Reference process or procedures
  – Did you find what you were looking for?

Operationalizing Concepts (2)

• Ease of use
  – Ability to use online finding aids
  – Ability to locate key information (hours, parking) in under 1 minute
  – Specific tasks that you want researchers to do on the website
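Operationalizing "locate key information in under 1 minute" yields a measurable success rate: the share of usability-test participants who complete the task under the threshold. This is a minimal sketch; the task, the timings, and the `success_rate` helper are hypothetical, and participants who gave up are recorded as `None` and counted as failures.

```python
# Hypothetical usability-test results for one task ("find the reading room hours"):
# seconds each participant took, or None if they gave up.
task_times = [22.5, 48.0, 75.0, None, 31.0]

THRESHOLD_SECONDS = 60  # "locate key information in under 1 minute"

def success_rate(times, threshold=THRESHOLD_SECONDS):
    """Fraction of participants who finished the task in under `threshold` seconds."""
    if not times:
        return 0.0
    successes = sum(1 for t in times if t is not None and t < threshold)
    return successes / len(times)

print(f"{success_rate(task_times):.0%} found the hours in under a minute")
```

Running the same task before and after a website change gives a simple way to check whether the change helped.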

Operationalizing Concepts (3)

• Instruction: How does archives use contribute to critical thinking skills?
  – Confidence
  – Use of skills learned in the archives
  – Explain archives to a peer
  – Assessment

Measuring Your Concepts

• LibQUAL+
  – Gap measurement
  – Minimum service level, desired service level, and perceived service performance
  – Service superiority = perceived minus desired service
  – Service adequacy = perceived minus minimum
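The two gap scores above are simple differences, computed per respondent and then averaged. This sketch assumes each respondent rates one item on a 1-9 scale for minimum, desired, and perceived service; the ratings and the `gap_scores` helper are hypothetical.

```python
from statistics import mean

# Hypothetical ratings for one item, one tuple per respondent:
# (minimum acceptable, desired, perceived), each on a 1-9 scale.
ratings = [
    (5, 8, 7),
    (6, 9, 6),
    (4, 7, 7),
]

def gap_scores(minimum, desired, perceived):
    """Return (adequacy, superiority) gaps for one respondent."""
    adequacy = perceived - minimum      # negative means below the minimum acceptable level
    superiority = perceived - desired   # positive means the desired level was exceeded
    return adequacy, superiority

adequacy, superiority = zip(*(gap_scores(*r) for r in ratings))
print(f"mean adequacy gap:    {mean(adequacy):+.2f}")
print(f"mean superiority gap: {mean(superiority):+.2f}")
```

A positive adequacy gap with a negative superiority gap, as in this made-up data, means service clears the minimum but falls short of what researchers want.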

Measuring Your Concepts (2)

• Explore your options
• Qualitative measures
  – Did you find what you were looking for?
• Quantitative measures
  – Approachability of the reference staff
    • Yes / no
    • Scale

Concepts and Instruments

• Reference
  – Concepts: satisfaction with services; work processes
  – Instruments: survey; focus groups
• Web site
  – Concept: ease of use
  – Instrument: surveys
• Collections usage
  – Concept: nature of use
  – Instruments: interviews / focus groups
• Finding aids
  – Concept: ease of use (search, navigation, locating important information)
  – Instrument: usability tests
• Instruction
  – Concepts: evaluation of orientation; learning
  – Instrument: survey

Administration Issues

• Paper
  – Pros: immediate; size readily recognizable; allows for a wider set of data
  – Cons: have to enter data
• Online
  – Pros: data is already entered; allows for skip logic; gives people constraints
  – Cons: hard to assess length; need more contextual cues

Pre-Implementation

• Pilot testing
  – Staff
  – Targeted participants
    • Test with 1-2, then make changes
    • Test with 1-2 more, then make more changes

Population / Sample

• Reference
  – Population: all researchers using the archives / special collections
  – Sample: researchers in the past month
• Collections usage
  – Population: all call slips
  – Sample: the past year of call slips
• Website
  – Population: all search terms used
  – Sample: the last 1,000 searches
• Instruction
  – Population: students in the classes to which you gave talks
  – Sample: students with a requirement to use the archives

Administration Instructions

• Getting staff on board
• Creating a script / email message
• System for recruiting
  – Date range
  – Type of user
  – Random sampling
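A recruiting system that combines a date range, a type of user, and random sampling can be sketched as a filter followed by a random draw. The registration records, user types, and the `draw_sample` helper below are hypothetical; a fixed `seed` lets staff reproduce and document the draw.

```python
import random
from datetime import date

# Hypothetical registration records: (researcher_id, visit_date, user_type)
registrations = [
    ("r01", date(2010, 9, 2), "genealogist"),
    ("r02", date(2010, 9, 15), "faculty"),
    ("r03", date(2010, 9, 20), "student"),
    ("r04", date(2010, 10, 1), "genealogist"),
    ("r05", date(2010, 10, 5), "student"),
    ("r06", date(2010, 8, 1), "faculty"),  # outside the date range used below
]

def draw_sample(records, start, end, user_type=None, k=3, seed=None):
    """Randomly pick up to k researchers who visited between start and end
    (inclusive), optionally limited to one user type."""
    eligible = [
        rid for rid, visited, kind in records
        if start <= visited <= end and (user_type is None or kind == user_type)
    ]
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(eligible, min(k, len(eligible)))

print(draw_sample(registrations, date(2010, 9, 1), date(2010, 10, 15), k=3, seed=42))
```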

Common Issues in User-based Research

• Memory
  – Recency of contact: 3-6 months
  – Frequency of contact: several times a year
• Knowledge of archives
  – What prompts do you need to provide?

Recruiting

• Website survey
  – Link on website
  – Send link to recent email reference requestors and recent onsite researchers
  – In-house researchers
  – Stakeholders
  – Partner groups (genealogical societies)
• Focus groups
  – Composition

Recruiting (2)

• Usability testing
  – Single individuals representing specific user types
  – “Friendly dyads”
• Interviews
  – Single researchers
  – Researchers working together

Representativeness

• Does the sample mirror or approximate the population?

• Does the sample truly represent the group you are targeting?

Response Rate

• Surveys
  – Large surveys: 15%-20%
  – Archives surveys (n=50)
  – Does the percentage of respondents of each type mirror the percentage of like users?
  – Responses per question
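The response rate and the "respondents mirror like users" check are both proportions. This sketch assumes you know how many researchers of each type were invited and how many responded; the counts and the helpers `response_rate` and `composition` are hypothetical.

```python
# Hypothetical counts by user type: researchers invited and surveys returned.
invited = {"genealogist": 40, "faculty": 20, "student": 40}
responded = {"genealogist": 12, "faculty": 2, "student": 6}

def response_rate(responded, invited):
    """Overall response rate: total responses divided by total invitations."""
    return sum(responded.values()) / sum(invited.values())

def composition(counts):
    """Share of the total contributed by each user type."""
    total = sum(counts.values())
    return {kind: n / total for kind, n in counts.items()}

print(f"response rate: {response_rate(responded, invited):.0%}")

pop_share, resp_share = composition(invited), composition(responded)
for kind in invited:
    print(f"{kind:12s} users {pop_share[kind]:.0%}  respondents {resp_share.get(kind, 0):.0%}")
```

Here genealogists make up 40% of those invited but 60% of respondents, so results would over-represent their views unless weighted or interpreted with care.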