Load Shedding in Network Monitoring...

Introduction Load Shedding Fairness and NE Custom Load Shedding Conclusions

Load Shedding in Network Monitoring Applications

Pere Barlet Ros

Advisor: Dr. Gianluca Iannaccone

Co-advisor: Prof. Josep Solé Pareta

Universitat Politècnica de Catalunya (UPC), Departament d’Arquitectura de Computadors

December 15, 2008


Outline

1 Introduction

2 Prediction and Load Shedding Scheme

3 Fairness of Service and Nash Equilibrium

4 Custom Load Shedding

5 Conclusions and Future Work


Motivation

Network monitoring is crucial for operating data networks

Traffic engineering, network troubleshooting, anomaly detection . . .

Monitoring systems are prone to dramatic overload situations

Link speeds, anomalous traffic, bursty traffic nature . . .

Complexity of traffic analysis methods

Overload situations lead to uncontrolled packet loss

Severe and unpredictable impact on the accuracy of applications, precisely when results are most valuable

Load Shedding Scheme

Efficiently handle extreme overload situations

Over-provisioning is not feasible


Related Work

Load shedding in data stream management systems (DSMS)

Examples: Aurora, STREAM, TelegraphCQ, Borealis, . . .

Based on declarative query languages (e.g., CQL)

Small set of operators with known (and constant) cost

Maximize an aggregate performance metric (utility or throughput)

Limitations

Restrict the type of metrics and possible uses

Assume explicit knowledge of operators’ cost and selectivity

Not suitable for non-cooperative environments

Resource management in network monitoring systems

Restricted to a pre-defined set of metrics

Limit the amount of allocated resources in advance


Contributions

1 Prediction method

Operates without knowledge of application cost and implementation

Does not rely on a specific model for the incoming traffic

2 Load shedding scheme

Anticipates overload situations and avoids packet loss

Relies on packet and flow sampling (equal sampling rates)

Extensions:

3 Packet-based scheduler

Applies different sampling rates to different queries

Ensures fairness of service with non-cooperative applications

4 Support for custom-defined load shedding methods

Safely delegates load shedding to non-cooperative applications

Still ensures robustness and fairness of service


Case Study: Intel CoMo

CoMo (Continuous Monitoring) [1]

Open-source passive monitoring system

Framework to develop and execute network monitoring applications

Open (shared) network monitoring platform

Traffic queries are defined as plug-in modules written in C

Contain complex computations

Traffic queries are black boxes

Arbitrary computations and data structures

Load shedding cannot use knowledge of the queries

[1] http://como.sourceforge.net


Load Shedding Approach

Working Scenario

Monitoring system supporting multiple arbitrary queries

Single resource: CPU cycles

Approach: Real-time modeling of the queries’ CPU usage

1 Find correlation between traffic features and CPU usage

Features are query agnostic with deterministic worst case cost

2 Exploit the correlation to predict CPU load

3 Use the prediction to decide the sampling rate


System Overview

Figure: Prediction and Load Shedding Subsystem


Traffic Features vs CPU Usage

Figure: CPU usage compared to the number of packets, bytes and flows (four panels plotted against time in seconds: CPU cycles, packets, bytes, 5-tuple flows)


Traffic Features vs CPU Usage

Figure: CPU usage versus the number of packets and flows (x-axis: packets/batch; y-axis: CPU cycles; series grouped by new_5tuple_hashes: < 500, 500 to 700, 700 to 1000, >= 1000)


Prediction Methodology [2]

Multiple Linear Regression (MLR)

$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \cdots + \beta_p X_{pi} + \varepsilon_i, \quad i = 1, 2, \ldots, n$

$Y_i$ = n observations of the response variable (measured cycles)

$X_{ji}$ = n observations of the p predictors (traffic features)

$\beta_j$ = p regression coefficients (unknown parameters to estimate)

$\varepsilon_i$ = n residuals (OLS minimizes SSE)

Feature Selection

Variant of the Fast Correlation-Based Filter (FCBF)

Removes irrelevant and redundant predictors

Significantly reduces the cost and improves the accuracy of the MLR

[2] P. Barlet-Ros et al. "Load Shedding in Network Monitoring Applications", Proc. of USENIX Annual Technical Conference, 2007.
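The regression on this slide can be illustrated with a minimal sketch. It is not the CoMo implementation: the function names `fit_ols` and `predict` are hypothetical, the history values are synthetic, and the fit solves the normal equations directly in pure Python, which is only reasonable for the handful of predictors that feature selection leaves.

```python
from typing import List, Sequence


def fit_ols(features: Sequence[Sequence[float]],
            cycles: Sequence[float]) -> List[float]:
    """Return [b0, b1, ..., bp] minimizing the sum of squared errors."""
    rows = [[1.0, *x] for x in features]  # prepend the intercept column
    k = len(rows[0])
    # Normal equations (X^T X) b = X^T y, stored as an augmented matrix.
    a = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * y for r, y in zip(rows, cycles))]
         for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors are collinear")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [v - factor * w for v, w in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def predict(beta: Sequence[float], x: Sequence[float]) -> float:
    """Predicted cycles for one batch described by feature vector x."""
    return beta[0] + sum(b * v for b, v in zip(beta[1:], x))


# Synthetic history: (packets, bytes) per batch and the cycles measured.
history_x = [(10, 100), (20, 150), (30, 500), (40, 300), (50, 900)]
history_y = [1000 + 50 * p + 2 * b for p, b in history_x]
beta = fit_ols(history_x, history_y)
next_batch_cycles = predict(beta, (60, 400))
```

The collinearity check is one reason to remove redundant predictors first: highly correlated features make the normal equations ill-conditioned.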


Load Shedding Scheme [3]

Prediction and Load Shedding subsystem

1 Each 100 ms of traffic is grouped into a batch of packets

2 The traffic features are efficiently extracted from the batch (multi-resolution bitmaps)

3 The most relevant features are selected (using FCBF) to be used by the MLR

4 MLR predicts the CPU cycles required by each query to run

5 Load shedding is performed to discard a portion of the batch

6 CPU usage is measured (using TSC) and fed back to the prediction system

[3] P. Barlet-Ros et al. "On-line Predictive Load Shedding for Network Monitoring", Proc. of IFIP-TC6 Networking, 2007.


Results: Load Shedding Performance

Figure: Stacked CPU usage (Predictive Load Shedding) (x-axis: time, 09 am to 05 pm; y-axis: CPU usage [cycles/sec]; series: CoMo cycles, Load shedding cycles, Query cycles, Predicted cycles, CPU limit)


Results: Load Shedding Performance

Figure: CDF of the CPU usage per batch (x-axis: CPU usage [cycles/batch]; y-axis: F(CPU usage); series: Predictive, Original, Reactive; reference line: CPU limit per batch)


Results: Packet Loss

Figure: Link load and packet drops (panels: (a) Original CoMo, (b) Reactive Load Shedding, (c) Predictive Load Shedding; x-axis: time, 09 am to 05 pm; y-axis: packets; series: Total, DAG drops, and Unsampled in panels (b) and (c))


Results: Error of the Queries

Query                 original          reactive          predictive
application (pkts)    55.38% ±11.80     10.61% ±7.78      1.03% ±0.65
application (bytes)   55.39% ±11.80     11.90% ±8.22      1.17% ±0.76
counter (pkts)        55.03% ±11.45     9.71% ±8.41       0.54% ±0.50
counter (bytes)       55.06% ±11.45     10.24% ±8.39      0.66% ±0.60
flows                 38.48% ±902.13    12.46% ±7.28      2.88% ±3.34
high-watermark        8.68% ±8.13       8.94% ±9.46       2.19% ±2.30
top-k destinations    21.63 ±31.94      41.86 ±44.64      1.41 ±3.32

Table: Errors in the query results (mean ± stdev)

[Plot: Error (%) for the Original, Reactive and Predictive systems]


Results: Load Shedding Overhead

Figure: Stacked CPU usage (Predictive Load Shedding) (x-axis: time, 09 am to 05 pm; y-axis: CPU usage [cycles/sec]; series: CoMo cycles, Load shedding cycles, Query cycles, Predicted cycles, CPU limit)


Fairness with Non-Cooperative Users [4]

Previous scheme does not differentiate among queries

An equal sampling rate is applied to all queries

Better results can be obtained applying different sampling rates

Considering the tolerance to sampling of each query

Challenges

Queries are black boxes (i.e., external information is needed)

Users are non-cooperative (selfish) in nature

Game theory-based strategy

Variant of max-min fair share allocation policy

Users provide a minimum sampling rate

Single Nash Equilibrium when users provide correct information

[4] P. Barlet-Ros et al. "Robust Network Monitoring in the presence of Non-Cooperative Traffic Queries", Computer Networks, 2008.


Load Shedding Strategy (I)

Load shedding strategy

1 If (1), (2) are not satisfied, disable queries with greatest $m_q \times d_q$

2 Compute $c_q$'s that satisfy (1) and (2):

mmfs_cpu: Maximize minimum allocation of cycles ($c_q$)

mmfs_pkt: Maximize minimum sampling rate ($p_q$)

Constraints

$\forall q \in Q: \quad m_q \times d_q \le c_q \le d_q \qquad (1)$

$\sum_{q \in Q} c_q \le C \qquad (2)$

Notation

$d_q$ = Cycles demanded by query $q \in Q$ (prediction)

$m_q$ = Minimum sampling rate of query $q \in Q$

$c_q$ = Cycles allocated to query $q \in Q$

$C$ = System capacity in CPU cycles
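The two steps of the strategy can be sketched as a generic water-filling allocation under constraints (1) and (2). This is an illustration of the mmfs_cpu variant only, under stated assumptions: the function name `mmfs_cpu`, the dictionary-based interface and the bisection search are hypothetical choices, not the authors' implementation, and mmfs_pkt (which equalizes sampling rates instead of cycles) is not shown.

```python
from typing import Dict


def mmfs_cpu(demand: Dict[str, float],
             min_rate: Dict[str, float],
             capacity: float) -> Dict[str, float]:
    """Allocate cycles c_q with m_q*d_q <= c_q <= d_q and sum(c_q) <= C.

    Disabled queries receive 0 cycles.
    """
    active = dict(demand)
    # Step 1: while the minimum demands do not fit, disable the query
    # with the greatest m_q * d_q.
    while active and sum(min_rate[q] * d for q, d in active.items()) > capacity:
        worst = max(active, key=lambda q: min_rate[q] * active[q])
        del active[worst]

    alloc = {q: 0.0 for q in demand}
    if not active:
        return alloc
    if sum(active.values()) <= capacity:
        alloc.update(active)  # no overload: every query gets its full demand
        return alloc

    floor = {q: min_rate[q] * d for q, d in active.items()}

    def used(level: float) -> float:
        # Cycles consumed if every query is raised to `level`,
        # clamped to its own [m_q * d_q, d_q] interval.
        return sum(min(max(level, floor[q]), active[q]) for q in active)

    # Step 2: bisection on the water level; used() is non-decreasing.
    low, high = 0.0, max(active.values())
    for _ in range(100):
        mid = (low + high) / 2
        if used(mid) > capacity:
            high = mid
        else:
            low = mid
    for q in active:
        alloc[q] = min(max(low, floor[q]), active[q])
    return alloc


shares = mmfs_cpu(
    demand={"A": 100.0, "B": 400.0, "C": 900.0},
    min_rate={"A": 0.1, "B": 0.5, "C": 0.2},
    capacity=600.0,
)
```

In this example query A is fully served (its demand is below the water level of 250 cycles), while B and C are both capped at 250. A query that declares a large minimum gains nothing unless its minimum exceeds the level, and in that case it is also the first candidate to be disabled.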


Load Shedding Strategy (II)

Advantages

1 Single Nash Equilibrium (NE) at $C / |Q|$ (fair share of C)

2 Encourages users to provide small $m_q$ constraints

3 Non-cooperative users have no incentive to lie

4 Optimal solution can be computed in polynomial time

Limitations of previous approaches

Maximize an aggregate performance metric (utility, throughput)

Difficult to compute (assumptions on cost, selectivity, utility func.)

NE when all players ask for the maximum possible allocation

Not suitable for non-cooperative environments


Results: Performance Evaluation

Figure: Average (left) and minimum (right) accuracy with fixed $m_q$ constraints (x-axis: overload level (K); y-axis: accuracy; series: no_lshed, reactive, eq_srates, mmfs_cpu, mmfs_pkt)


Custom Load Shedding [5]

Not all monitoring applications are robust against sampling

Previous scheme penalizes these queries (mq = 1)

Queries can compute more accurate results with other methods

Examples: sample-and-hold, compute summaries, . . .

Providing multiple built-in mechanisms is not viable

The monitoring system supports arbitrary queries

Custom Load Shedding

Delegates the task of shedding load to end users

Queries know their own implementation (to the system they are black boxes)

Queries can compete under fair conditions for shared resources

Requires an enforcement mechanism

[5] P. Barlet-Ros et al. "Custom Load Shedding for Non-Cooperative Network Monitoring Applications", submitted to IEEE Infocom 2009.


Example: P2P detector query

Figure: Accuracy error of a signature-based P2P detector query (x-axis: load shedding rate; y-axis: error; series: flow sampling, packet sampling, custom load shedding)


Enforcement Policy

Figure: Actual vs predicted CPU usage (p2p-detector) (x-axis: load shedding rate ($r_q$), from 1 down to 0; y-axis: CPU cycles (log scale); series: selfish prediction, selfish actual, custom prediction, custom actual)

MLR update

$\text{history\_value} = \dfrac{d_q}{1 - r_q}$
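A small worked example may help read the MLR update rule. It assumes, and this is an interpretation rather than something the slide states, that $d_q$ here is the number of cycles the query consumed in a batch during which it was asked to shed a fraction $r_q$ of its load. The function name `history_value` mirrors the slide; the numbers are invented.

```python
def history_value(consumed_cycles: float, shed_rate: float) -> float:
    """Scale the consumed cycles back to a full-load equivalent.

    consumed_cycles: cycles the query used in the batch (d_q, assumed).
    shed_rate: fraction of load the query was asked to shed (r_q).
    """
    if not 0.0 <= shed_rate < 1.0:
        raise ValueError("shed_rate must be in [0, 1)")
    return consumed_cycles / (1.0 - shed_rate)


# A query asked to shed half of its load (r_q = 0.5):
compliant = history_value(4e8, 0.5)  # really halved its work
selfish = history_value(8e8, 0.5)    # ignored the request
```

Under this reading, a query that ignores the request inflates its own predicted demand (1.6e9 instead of 8e8 cycles here), which is the gap between the selfish and custom curves in the figure and gives the system grounds to penalize it.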


Conclusions

Effective load shedding methods are now a basic requirement

Increasing data rates, number of users and application complexity

Robustness against traffic anomalies and attacks

Predictive load shedding scheme

Operates without knowledge of the traffic queries

Does not rely on a specific model for the input traffic

Anticipates overload situations avoiding uncontrolled packet loss

Graceful performance degradation (sampling & custom methods)

Suitable for non-cooperative environments

Results in two operational networks show that:

The system is robust against severe overload

The impact on the accuracy of the results is minimized


Future Work

Study other system resources

Examples: memory, disk bandwidth, storage space, . . .

Multi-dimensional load shedding schemes

Extend the prediction model

Study queries with non-linear relationships with traffic features

Include payload-related and entropy-based features

Address resource management problem in a distributed platform

Load balancing and distribution techniques

Other metrics: bandwidth between nodes, query delays, . . .


Main Publications

P. Barlet-Ros, D. Amores-López, G. Iannaccone, J. Sanjuàs-Cuxart, and J. Solé-Pareta. On-line Predictive Load Shedding for Network Monitoring. In Proc. of IFIP-TC6 Networking, Atlanta, USA, May 2007.

P. Barlet-Ros, G. Iannaccone, J. Sanjuàs-Cuxart, D. Amores-López, and J. Solé-Pareta. Load Shedding in Network Monitoring Applications. In Proc. of USENIX Annual Technical Conf., Santa Clara, USA, June 2007.

P. Barlet-Ros, J. Sanjuàs-Cuxart, J. Solé-Pareta, and G. Iannaccone. Robust Resource Allocation for Online Network Monitoring. In Proc. of Intl. Telecommunications Network Workshop on QoS in Multiservice IP Networks (ITNEWS-QoSIP), Venice, Italy, Feb. 2008.

P. Barlet-Ros, G. Iannaccone, J. Sanjuàs-Cuxart, and J. Solé-Pareta. Robust Network Monitoring in the presence of Non-Cooperative Traffic Queries. Computer Networks, Oct. 2008 (in press).

Under submission:

P. Barlet-Ros, G. Iannaccone, J. Sanjuàs-Cuxart, and J. Solé-Pareta. Custom Load Shedding for Non-Cooperative Monitoring Applications. Submitted to IEEE INFOCOM, Aug. 2008.


Availability

The source code of the load shedding system is publicly available at http://loadshedding.ccaba.upc.edu

The CoMo monitoring system is available at http://como.sourceforge.net

Acknowledgments

This work was funded by a University Research Grant awarded by the Intel Research Council and the Spanish Ministry of Education under contracts TSI2005-07520-C03-02 (CEPOS) and TEC2005-08051-C03-01 (CATARO)

We thank CESCA and UPCnet for allowing us to evaluate the load shedding prototype in the Catalan NREN and UPC network respectively.


Appendix Backup Slides *

Outline

6 Backup Slides

Testbed Scenario

Introduction

CoMo System

Prediction Method

Prediction Validation and Evaluation

Load Shedding Scheme

Robustness Against Attacks

Nash Equilibrium

Custom Load Shedding

Publications


Testbed Scenario

Figure: CESCA and UPCnet scenarios (diagram labels: CESCA scenario and UPC scenario, each with optical splitters on a 1 Gbps link; PC-1: online CoMo (2 x DAG 4.3GE); PC-2: trace collection (2 x DAG 4.3GE); clock synchronization; networks: INTERNET, RedIRIS, UPC network, Scientific Ring (~70 institutions))


Datasets

Name       Date/Time                Trace (GB)   Pkts (M)   Bytes (GB)   Load (Mbps) avg/max/min
ABILENE    14/Aug/02 09:00-11:00    34.1         532.4      370.6        411.9/623.8/286.2
CENIC      17/Mar/05 15:50-16:20    3.8          59.5       56.0         249.7/936.9/079.1
CESCA-I    02/Nov/05 16:30-17:00    8.3          103.7      81.1         360.5/483.3/197.3
CESCA-II   11/Apr/06 08:00-08:30    30.9         49.4       29.9         133.0/212.2/096.2
UPC-I      07/Nov/07 18:00-18:30    54.7         95.2       53.0         253.5/399.0/177.8

Table: Traces in our dataset

Name       Date/Time                Trace (GB)   Pkts (M)   Bytes (GB)   Load (Mbps) avg/max/min
CESCA-III  24/Oct/06 09:00-17:00    155.5        2908.2     2764.8       750.4/973.6/129.0
CESCA-IV   25/Oct/06 09:00-17:00    152.5        2867.2     2652.2       719.9/967.5/218.0
CESCA-V    05/Dec/06 09:00-17:00    138.6        2037.8     1484.8       403.3/771.6/131.0
UPC-II     24/Apr/08 09:00-09:30    47.6         61.3       46.5         222.2/282.1/176.9

Table: Online executions


Monitoring Applications (Traffic Queries)

Query            Description                                         Method   Cost
application      Port-based application classification               packet   low
autofocus        High volume traffic clusters per subnet             packet   med
counter          Traffic load in packets and bytes                   packet   low
flows            Per-flow classification and number of active flows  flow     low
high-watermark   High watermark of link utilization over time        packet   low
p2p-detector     Signature-based P2P detector                        custom   high
pattern-search   Identifies sequences of bytes in the payload        packet   high
super-sources    Detection of sources with largest fan-out           flow     med
top-k            Ranking of the top-k destination IP addresses       packet   low
trace            Full-payload packet collection                      custom   med

[Bar chart: CPU cost (cycles/s, x10^8, broken y-axis) per query: application, autofocus, counter, flows, high-watermark, p2p-detector, pattern-search, super-sources, top-k, trace]


Challenges

1 Monitoring applications operate in real-time with live traffic

Load shedding scheme must be lightweight

Quickly adapt to overload to prevent packet loss

2 Monitoring applications are non-cooperative

They will try to obtain the maximum resource share

The system must ensure fairness of service and avoid starvation

3 Applications are arbitrary with unknown resource demands

Input data is continuous, highly variable and unpredictable

Applications developed by 3rd parties using imperative languages

No assumptions about the input traffic or application cost


Problem Space

          Static (offline)           Dynamic (online)
Local     Static query assignment    Load shedding
          Resource provisioning      Query scheduling
Global    Placement of monitors      Dissemination of queries
          Placement of queries       Load distribution

Table: Resource management problem space


General-Purpose Network Monitoring

Developing and deploying monitoring applications is complex

Continuous streams, high-speed sources, highly variable rates, . . .

Different network devices, transport tech., system configs

E.g., NICs, DAG cards, NetFlow, NPs, SNMP, . . .

Most application code deals with aspects peripheral to the analysis itself

Increased application complexity

Long development times

High probability of introducing undesired errors

Open network monitoring infrastructures

Abstract away the inner workings of the infrastructure

Not tailor-made for a single specific application

Examples: Scriptroute, FLAME, Intel CoMo, . . .

Research community demands open monitoring infrastructures

Share measurement data from multiple network viewpoints

Quickly prototype applications to evaluate novel analysis methods

Study properties of network traffic and protocols


CoMo Architecture

Callback   Description                        Process
check()    Stateful filters                   capture
update()   Packet processing                  capture
export()   Processes tuples sent by capture   export
action()   Decides what to do with a record   export
store()    Stores records to disk             export
load()     Loads records from disk            query
print()    Formats records                    query


Work Hypothesis

Our thesis

The cost of maintaining the data structures needed to execute a query can be modeled by looking at a set of traffic features

Empirical observation

Basic operations on the state incur different overheads while processing incoming traffic

E.g., creating or updating entries, looking for a valid match, etc.

Cost is dominated by the overhead of some of these operations

Our method

Models queries’ cost by considering the right set of traffic features


Traffic Features

No. Traffic aggregate

1 src-ip

2 dst-ip

3 protocol

4 <src-ip, dst-ip>

5 <src-port, proto>

6 <dst-port, proto>

7 <src-ip, src-port, proto>

8 <dst-ip, dst-port, proto>

9 <src-port, dst-port, proto>

10 <src-ip, dst-ip, src-port, dst-port, proto>

Counters per traffic aggregate

1 Number of unique items in a batch

2 Number of new items in a measurement interval

3 Number of repeated items in a batch

4 Number of repeated items in a measurement interval
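The four counters can be illustrated for a single traffic aggregate. This sketch makes two assumptions that the slide does not spell out: "repeated" is taken to mean packets in the batch minus unique (or new) items, and exact Python sets stand in for the multi-resolution bitmaps the system uses for efficiency. The name `batch_counters` and the packet dictionaries are hypothetical.

```python
from typing import Dict, Iterable, Sequence, Set, Tuple


def batch_counters(batch: Iterable[Dict[str, object]],
                   key_fields: Sequence[str],
                   seen_in_interval: Set[Tuple[object, ...]]) -> Dict[str, int]:
    """Compute the four counters of one aggregate for one batch.

    seen_in_interval holds the keys observed so far in the current
    measurement interval and is updated in place.
    """
    keys = [tuple(pkt[f] for f in key_fields) for pkt in batch]
    unique = set(keys)
    new = unique - seen_in_interval
    seen_in_interval |= unique
    return {
        "unique": len(unique),                       # distinct items in batch
        "new": len(new),                             # first seen in interval
        "repeated_batch": len(keys) - len(unique),
        "repeated_interval": len(keys) - len(new),
    }


# Aggregate no. 1 (src-ip) over two consecutive batches of one interval.
seen: Set[Tuple[object, ...]] = set()
first = batch_counters(
    [{"src_ip": "a"}, {"src_ip": "a"}, {"src_ip": "b"}, {"src_ip": "c"}],
    ("src_ip",), seen)
second = batch_counters(
    [{"src_ip": "a"}, {"src_ip": "d"}, {"src_ip": "d"}],
    ("src_ip",), seen)
```

Resetting `seen` at the start of each measurement interval, and repeating the call with the key fields of each of the ten aggregates, yields the candidate predictors for the regression.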


Multiple Linear Regression

Multiple Linear Regression (MLR)

$Y_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \cdots + \beta_p X_{pi} + \varepsilon_i, \quad i = 1, 2, \ldots, n$

$Y_i$ = n observations of the response variable (measured cycles)

$X_{ji}$ = n observations of the p predictors (traffic features)

$\beta_j$ = p regression coefficients (unknown parameters to estimate)

$\varepsilon_i$ = n residuals (OLS minimizes SSE)

Cost = $O(\min(np^2, n^2 p))$

OLS assumptions

X variables are independent (no multicollinearity)

$\varepsilon_i$ is normally distributed and the expected value of the $\varepsilon$ vector is 0

Residuals are uncorrelated and exhibit constant variance

Covariance between predictors and residuals is 0


Fast Correlation-Based Filter [6]

FCBF Algorithm

1 Selecting relevant predictors

Correlation of each predictor (X ) and response variable (Y ):

$r = \dfrac{\sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y})}{\sqrt{\sum_{i=1}^{n} (X_i - \bar{X})^2}\,\sqrt{\sum_{i=1}^{n} (Y_i - \bar{Y})^2}}$

Predictors with r < FCBF threshold are discarded

2 Removing redundant predictors

Sort predictors according to r

Iteratively compute r ′ among each pair of predictors

If r ′ > r , the predictor lower in the list is removed

Cost

O(n p log p)

[6] L. Yu and H. Liu. Feature Selection for High-Dimensional Data: A Fast Correlation-Based Filter Solution, Proc. of ICML, 2003.
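The two phases of the filter can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the names `pearson` and `select_features` are hypothetical, absolute values of r are used throughout, and the redundancy test reads "r′ > r" as comparing the correlation between two predictors with the lower-ranked predictor's correlation with the response.

```python
import math
from typing import Dict, List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Linear correlation coefficient r between two equally long series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant series carries no information
    return sxy / math.sqrt(sxx * syy)


def select_features(predictors: Dict[str, Sequence[float]],
                    response: Sequence[float],
                    threshold: float) -> List[str]:
    # Phase 1: keep predictors whose |r| with the response reaches the
    # threshold, ranked from most to least relevant.
    relevance = {name: abs(pearson(col, response))
                 for name, col in predictors.items()}
    ranked = sorted((n for n in relevance if relevance[n] >= threshold),
                    key=lambda n: relevance[n], reverse=True)
    # Phase 2: drop a predictor when an already selected (higher ranked)
    # one correlates with it more strongly than it does with the response.
    selected: List[str] = []
    for name in ranked:
        redundant = any(
            abs(pearson(predictors[name], predictors[kept])) > relevance[name]
            for kept in selected)
        if not redundant:
            selected.append(name)
    return selected


cycles = [10, 22, 29, 41, 50, 62]
candidates = {
    "packets": [10, 20, 30, 40, 50, 60],
    "bytes": [11, 19, 31, 39, 51, 59],  # nearly a copy of packets
    "noise": [3, 7, 1, 9, 4, 2],        # unrelated to the response
}
chosen = select_features(candidates, cycles, threshold=0.6)
```

Here `noise` fails the relevance threshold and `bytes` is dropped as redundant with `packets`, so the regression would be fitted on a single predictor.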


Resource Usage Measurements

Time-stamp counter (TSC)

64-bit counter incremented by the processor at each clock cycle

rdtsc: Reads TSC counter

Sources of measurement noise

1 Instruction reordering: avoided with a serializing instruction (e.g., cpuid)

2 Context switches: the affected sample is discarded from the MLR history (rusage structure, sched_setscheduler to SCHED_FIFO)

3 Disk accesses: query memory accesses compete with CoMo disk accesses


Prediction parameters (n and FCBF threshold)

Figure: Prediction error versus cost as a function of the amount of history used to compute the Multiple Linear Regression (left) and as a function of the Fast Correlation-Based Filter threshold (right) (panel titles: MLR error vs. cost (100 executions), FCBF error vs. cost (100 executions); x-axes: History (s), FCBF threshold; y-axes: Relative error, Cost (CPU cycles); series: average error, average cost)


Prediction parameters (broken down by query)

Figure: Prediction error broken down by query as a function of the amount of history used to compute the Multiple Linear Regression (left) and as a function of the Fast Correlation-Based Filter threshold (right) (panel titles: MLR error (100 executions), FCBF error (100 executions); x-axes: History (s), FCBF threshold; y-axis: Relative error; series: application, counter, pattern-search, top-k, trace, flows, high-watermark)


Prediction Accuracy (CESCA-II trace)

[Plot: Prediction error (5 executions, 7 queries); x-axis: Time (s); y-axis: Relative error; series: average, max; average error: 0.012364; max error: 0.13867]

Query                Mean     Stdev    Selected features
application          0.0110   0.0095   packets, bytes
counter              0.0048   0.0066   packets
flows                0.0319   0.0302   new dst-ip, dst-port, proto
high-watermark       0.0064   0.0077   packets
pattern-search       0.0198   0.0169   bytes
top-k destinations   0.0169   0.0267   packets
trace                0.0090   0.0137   bytes, packets


Prediction Accuracy (CESCA-I trace)

[Plot: Prediction error (5 executions, 7 queries); x-axis: Time (s); y-axis: Relative error; series: average, max; average error: 0.0065407; max error: 0.19061]

Query                Mean     Stdev    Selected features
application          0.0068   0.0060   repeated 5-tuple, packets
counter              0.0046   0.0053   packets
flows                0.0252   0.0203   new dst-ip, dst-port, proto
high-watermark       0.0059   0.0063   packets
pattern-search       0.0098   0.0093   packets
top-k destinations   0.0136   0.0183   new 5-tuple, packets
trace                0.0092   0.0132   packets


Prediction Overhead

Prediction phase     Overhead
Feature extraction   9.070%
FCBF                 1.702%
MLR                  0.201%
TOTAL                10.973%

Table: Prediction overhead (5 executions)

14 / 33

Appendix Backup Slides *

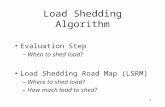

Load Shedding Scheme [7]

When to shed load

When the prediction exceeds the available cycles

avail_cycles = (0.1 × CPU frequency) − overhead

Overhead is measured using the time-stamp counter (TSC)

Corrected according to prediction error and buffer space

How and where to shed load

Packet and Flow sampling (hash based)

The same sampling rate is applied to all queries

How much load to shed

Maximum sampling rate that keeps CPU usage < avail_cycles

srate = avail_cycles / pred_cycles
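The two formulas on this slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the factor 0.1 is read as a 0.1-second batch interval, the correction for prediction error and buffer space is left out, and the function names (`available_cycles`, `sampling_rate`, `keep_flow`) are hypothetical. The hash-based flow sampler uses CRC32 as a stand-in hash; the slide does not say which hash function is used.

```python
import zlib


def available_cycles(cpu_hz: float, overhead_cycles: float,
                     batch_seconds: float = 0.1) -> float:
    """Cycles left for the queries in one batch (never negative)."""
    return max(0.0, batch_seconds * cpu_hz - overhead_cycles)


def sampling_rate(avail_cycles: float, pred_cycles: float) -> float:
    """Largest sampling rate whose expected cost fits in avail_cycles."""
    if pred_cycles <= avail_cycles:
        return 1.0  # no overload predicted, keep every packet
    return avail_cycles / pred_cycles


def keep_flow(flow_key: bytes, srate: float) -> bool:
    """Hash-based flow sampling: the same key always gets the same
    decision for a given srate (CRC32 is an assumed stand-in hash)."""
    return zlib.crc32(flow_key) < srate * 2**32


avail = available_cycles(cpu_hz=3.0e9, overhead_cycles=3.0e7)
srate = sampling_rate(avail, pred_cycles=5.4e8)
print(avail, srate, keep_flow(b"10.0.0.1:80->10.0.0.2:443/tcp", srate))
```

Hashing the flow key rather than drawing a random number per packet keeps all packets of a selected flow together, which is what flow sampling requires.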

[7] P. Barlet-Ros et al. "On-line Predictive Load Shedding for Network Monitoring", Proc. of IFIP-TC6 Networking, 2007.


Load Shedding Algorithm

Load shedding algorithm (simplified version)

pred_cycles = 0;
foreach q_i in Q do
    f_i = feature_extraction(b_i);
    s_i = feature_selection(f_i, h_i);
    pred_cycles += mlr(f_i, s_i, h_i);

if avail_cycles < pred_cycles × (1 + error) then
    foreach q_i in Q do
        b_i = sampling(b_i, q_i, srate);
        f_i = feature_extraction(b_i);

foreach q_i in Q do
    query_cycles_i = run_query(b_i, q_i, srate);
    h_i = update_mlr_history(h_i, f_i, query_cycles_i);
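The control flow of the simplified algorithm can be sketched in Python as follows. Everything here is a toy under stated assumptions: `ToyQuery` is a hypothetical query whose cost is linear in the number of packets, its `predict` method stands in for feature extraction, feature selection and MLR, uniform random packet sampling replaces the hash-based sampling of the slides, and the sampling rate is taken as `avail_cycles / (pred_cycles * (1 + error))`, which the slide does not spell out.

```python
import random
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToyQuery:
    """Hypothetical query whose cost is linear in the packets it sees."""
    name: str
    cycles_per_packet: float
    history: List[tuple] = field(default_factory=list)

    def predict(self, batch: list) -> float:
        # Stand-in for feature extraction + feature selection + MLR.
        return self.cycles_per_packet * len(batch)

    def run(self, batch: list) -> float:
        # Stand-in for run_query(); returns the cycles "consumed".
        return self.cycles_per_packet * len(batch)


def process_batch(batch, queries, avail_cycles, error, rng):
    """One iteration: predict, shed load if needed, run, update history."""
    pred_cycles = sum(q.predict(batch) for q in queries)
    srate = 1.0
    if avail_cycles < pred_cycles * (1 + error):
        # Assumption: inflate the prediction by `error` before dividing.
        srate = avail_cycles / (pred_cycles * (1 + error))
        batch = [pkt for pkt in batch if rng.random() < srate]
    for q in queries:
        used = q.run(batch)
        q.history.append((len(batch), used))  # would feed the MLR history
    return srate, len(batch)


queries = [ToyQuery("counter", 10.0), ToyQuery("flows", 10.0)]
srate, kept = process_batch(list(range(100)), queries, 1000.0, 0.25,
                            random.Random(0))
print(srate, kept)
```

The same sampling rate is applied to every query, as in the scheme described on the slides.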


Reactive Load Shedding

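Sampling rate at time $t$:

$$\mathit{srate}_t = \min\left(1,\ \max\left(\alpha,\ \mathit{srate}_{t-1} \times \frac{\mathit{avail\_cycles}_t - \mathit{delay}}{\mathit{consumed\_cycles}_{t-1}}\right)\right)$$

$\mathit{consumed\_cycles}_{t-1}$: cycles used in the previous batch

$\mathit{srate}_{t-1}$: sampling rate applied to the previous batch

$\mathit{delay}$: cycles by which $\mathit{avail\_cycles}_{t-1}$ was exceeded

$\alpha$: minimum sampling rate to be applied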
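The reactive update rule translates directly into code. The sketch below is a minimal illustration: the function name, the default value of `alpha` and the handling of a previous batch that consumed no cycles are assumptions, not taken from the slides.

```python
def reactive_srate(prev_srate: float, avail_cycles: float, delay: float,
                   consumed_prev: float, alpha: float = 0.01) -> float:
    """Sampling rate for the current batch, from the previous batch's cost.

    prev_srate     sampling rate applied to the previous batch
    avail_cycles   cycles available for the current batch
    delay          cycles by which the previous budget was exceeded
    consumed_prev  cycles used in the previous batch
    alpha          minimum sampling rate (default value is an assumption)
    """
    if consumed_prev <= 0:
        return 1.0  # assumption: nothing was consumed, so nothing to shed
    scaled = prev_srate * (avail_cycles - delay) / consumed_prev
    return min(1.0, max(alpha, scaled))


# Previous batch ran unsampled and used twice the budget: halve the rate.
print(reactive_srate(prev_srate=1.0, avail_cycles=100.0, delay=0.0,
                     consumed_prev=200.0, alpha=0.05))
```

Because the rule only looks at the previous batch, it reacts one batch late to a sudden change in traffic, which is the behaviour the predictive scheme is meant to avoid.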


Alternative Prediction Approaches (DDoS attacks) [8]

[Plots omitted. Panels (a) EWMA, (b) SLR and (c) MLR each show actual vs. predicted CPU cycles (×10^6) over time (s), together with the relative error over time (s).]

Figure: Prediction accuracy under DDoS attacks (flows query)

[8] P. Barlet-Ros et al. "Robust Resource Allocation for Online Network Monitoring", Proc. of IT-NEWS (QoSIP), 2008.


Impact of DDoS Attacks [9]

[Plots omitted. Panel (a) CPU usage: CPU cycles (×10^6) vs. time (s); curves: no load shedding, load shedding, CPU threshold, 5% bounds. Panel (b) Error in the query results: relative error vs. time (s); curves: no load shedding, packet sampling, flow sampling.]

Figure: CPU usage and accuracy under DDoS attacks (flows query)

[9] P. Barlet-Ros et al. "Robust Resource Allocation for Online Network Monitoring", Proc. of IT-NEWS (QoSIP), 2008.


Notation and Definitions

Symbol        Definition
$Q$           Set of $q$ continuous traffic queries
$C$           System capacity in CPU cycles
$\hat{d}_q$   Cycles demanded by the query $q \in Q$ (prediction)
$m_q$         Minimum sampling rate constraint of the query $q \in Q$
$c$           Vector of allocated cycles
$c_q$         Cycles allocated to the query $q \in Q$
$p$           Vector of sampling rates
$p_q$         Sampling rate applied to the query $q \in Q$
$a_q$         Action of the query $q \in Q$
$a_{-q}$      Actions of all queries in $Q$ except $a_q$
$u_q$         Payoff function of the query $q \in Q$

Max-min fair share allocation policy

A vector of allocated cycles $c$ is max-min fair if it is feasible, and for each $q \in Q$ and feasible $\bar{c}$ for which $c_q < \bar{c}_q$, there is some $q'$ where $c_q \ge c_{q'}$ and $c_{q'} > \bar{c}_{q'}$.
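A max-min fair vector can be computed with the textbook progressive-filling procedure. The sketch below is a generic version: it ignores the minimum sampling rate constraints, and the function name and the dictionary interface are assumptions made for illustration.

```python
from typing import Dict


def max_min_fair(capacity: float, demands: Dict[str, float]) -> Dict[str, float]:
    """Progressive filling: satisfy the smallest demands first, then split
    what is left equally among the queries that cannot be fully served."""
    alloc = {q: 0.0 for q in demands}
    remaining = capacity
    pending = sorted(demands, key=demands.get)  # smallest demand first
    while pending:
        share = remaining / len(pending)
        q = pending[0]
        if demands[q] <= share:
            # The smallest pending demand fits within an equal share.
            alloc[q] = demands[q]
            remaining -= demands[q]
            pending.pop(0)
        else:
            # No pending demand fits: everyone left gets the equal share.
            for r in pending:
                alloc[r] = share
            break
    return alloc


print(max_min_fair(10.0, {"counter": 2.0, "flows": 4.0, "trace": 10.0}))
```

In the example, the two small demands are met in full and the largest query receives the remaining 4 cycles, so no query can gain cycles without taking them from a query that already has the same amount or less.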


Nash Equilibrium

Payoff function

$$u_q(a_q, a_{-q}) = \begin{cases} a_q + \mathit{mmfs}_q\left(C - \sum_{i:\,u_i>0} a_i\right), & \text{if } \sum_{i:\,a_i \le a_q} a_i \le C \\ 0, & \text{if } \sum_{i:\,a_i \le a_q} a_i > C \end{cases}$$

Definition: Nash Equilibrium (NE)

A NE is an action profile $a^*$ with the property that no player $i$ can do better by choosing an action different from $a^*_i$, given that every other player $j$ adheres to $a^*_j$.

Theorem

Our resource allocation game has a single NE when all players demand $C/|Q|$ cycles.
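The payoff function and the equilibrium claim can be explored numerically with a small sketch. It rests on one loud assumption: the slide does not define $\mathit{mmfs}_q$, so the code simply splits the spare cycles equally among the queries with a non-zero payoff. The function names and the grid of candidate actions are also hypothetical.

```python
from typing import List, Sequence


def payoffs(actions: Sequence[float], capacity: float) -> List[float]:
    """Payoff of every query for a given action profile."""
    # A query is served if all demands no larger than its own fit in C.
    served = [sum(a for a in actions if a <= aq) <= capacity
              for aq in actions]
    spare = capacity - sum(a for a, ok in zip(actions, served) if ok)
    n_served = sum(served)
    # ASSUMPTION: mmfs_q splits the spare cycles equally among served queries.
    bonus = spare / n_served if n_served else 0.0
    return [aq + bonus if ok else 0.0 for aq, ok in zip(actions, served)]


def is_nash(profile: Sequence[float], capacity: float,
            candidates: Sequence[float]) -> bool:
    """True if no single player gains by switching to a candidate action."""
    base = payoffs(profile, capacity)
    for i in range(len(profile)):
        for a in candidates:
            trial = list(profile)
            trial[i] = a
            if payoffs(trial, capacity)[i] > base[i] + 1e-9:
                return False
    return True


grid = [0.5 * x for x in range(25)]        # actions 0, 0.5, ..., 12
print(is_nash([4.0, 4.0, 4.0], 12.0, grid))  # every player demands C/|Q|
print(is_nash([2.0, 4.0, 4.0], 12.0, grid))  # total demand below C
print(is_nash([6.0, 6.0, 6.0], 12.0, grid))  # total demand above C
```

A grid search like this only checks unilateral deviations to the listed candidate actions, so it illustrates the theorem for one small instance rather than proving it.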


Proof

Proof: $a^*$ is a NE

$a^*$ is a NE if $u_i(a^*) \ge u_i(a_i, a^*_{-i})$ for every player $i$ and action $a_i$, if all other players keep their actions fixed to $C/|Q|$

$a_i > C/|Q|$: $u_i(a_i, a^*_{-i}) = 0$

$a_i < C/|Q|$: $u_i(a_i, a^*_{-i}) = a_i + \mathit{mmfs}_i(C/|Q| - a_i) \le C/|Q|$ (since $\mathit{mmfs}_i(x) \le x$)

Proof: $a^*$ is the only NE

For any profile other than $a^*$ there is at least one query that has an incentive to change

$\sum_i a_i > C$: queries with largest demands have incentive to obtain a non-zero payoff

$\sum_i a_i < C$: queries have incentive to capture spare cycles

$\sum_i a_i = C$ and $\exists i : a_i \ne C/|Q|$: incentive to disable the query with largest demand


Accuracy Function

[Plot omitted: accuracy vs. sampling rate, with $m_q$ marked on the sampling rate axis and $1 - \epsilon_q$ marked on the accuracy axis.]

Figure: Accuracy of a generic query


Accuracy with Minimum Constraints

Accuracy columns: mean ±stdev, K = 0.5

Query           m_q   no_lshed    reactive    eq_srates   mmfs_cpu    mmfs_pkt
application     0.03  0.57 ±0.50  0.81 ±0.40  0.99 ±0.04  1.00 ±0.00  1.00 ±0.03
autofocus       0.69  0.00 ±0.00  0.00 ±0.00  0.05 ±0.12  0.97 ±0.06  0.98 ±0.04
counter         0.03  0.00 ±0.00  0.02 ±0.12  1.00 ±0.00  1.00 ±0.00  0.99 ±0.01
flows           0.05  0.00 ±0.00  0.66 ±0.46  0.99 ±0.01  0.95 ±0.07  0.95 ±0.06
high-watermark  0.15  0.62 ±0.48  0.98 ±0.01  0.98 ±0.01  1.00 ±0.01  0.97 ±0.02
pattern-search  0.10  0.66 ±0.08  0.63 ±0.18  0.69 ±0.07  0.20 ±0.08  0.41 ±0.08
super-sources   0.93  0.00 ±0.00  0.00 ±0.00  0.00 ±0.00  0.95 ±0.04  0.95 ±0.04
top-k           0.57  0.42 ±0.50  0.67 ±0.47  0.96 ±0.09  0.99 ±0.03  0.96 ±0.07
trace           0.10  0.66 ±0.08  0.63 ±0.18  0.68 ±0.01  0.64 ±0.17  0.41 ±0.08

Table: Sampling rate constraints (mq) and average accuracy with K = 0.5


Example of Minimum Sampling Rates

[Plot omitted: accuracy ($1 - \epsilon_q$) vs. sampling rate ($p_q$); curves: high-watermark, top-k, p2p-detector.]

Figure: Accuracy as a function of the sampling rate (high-watermark, top-k and p2p-detector queries using packet sampling)


Results: Performance Evaluation

[Plots omitted. Left: accuracy (mean) vs. overload level (K). Right: accuracy (min) vs. overload level (K). Curves in both panels: no_lshed, reactive, eq_srates, mmfs_cpu, mmfs_pkt.]

Figure: Average (left) and minimum (right) accuracy with fixed mq constraints

Overload level (K)

$$C' = C \times (1 - K), \qquad K = 1 - \frac{C}{\sum_{q \in Q} \hat{d}_q}$$
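As a small worked example of the two expressions for the overload level, the sketch below evaluates them for made-up numbers. The function names and the values are assumptions chosen only to make the arithmetic easy to follow.

```python
from typing import Sequence


def overload_level(capacity: float, demands: Sequence[float]) -> float:
    """K = 1 - C / (sum of the predicted demands of all queries)."""
    return 1.0 - capacity / sum(demands)


def reduced_capacity(capacity: float, k: float) -> float:
    """C' = C * (1 - K): capacity left at overload level K."""
    return capacity * (1.0 - k)


# Two queries demanding 40 and 60 cycles on a system with 50 cycles:
print(overload_level(50.0, [40.0, 60.0]))
# A capacity of 100 cycles at overload level K = 0.5:
print(reduced_capacity(100.0, 0.5))
```

With these numbers, K = 0.5 means the queries demand twice the cycles the system can provide.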


Enforcement Policy

[Plot omitted: CPU cycles (log scale) vs. load shedding rate; curves: selfish prediction, selfish actual, custom prediction, custom actual, custom-corrected prediction, custom-corrected actual.]

Figure: Actual vs predicted CPU usage

$k_q$ parameter

$$k_q = 1 - \frac{d_{q,\,r_q=0}}{d_{q,\,r_q=1}}$$

[Plot omitted: CPU cycles (×10^9) vs. load shedding rate; curves: actual CPU usage, expected CPU usage; annotation: $k_q = 0.82$.]

Figure: Actual vs expected CPU usage

Corrected load shedding rate

$$r_q = \min\left(1, \frac{r'_q}{k_q}\right)$$
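The correction of the load shedding rate is a one-line computation once $k_q$ is known. The sketch below takes $k_q$ as a given number (0.82 is the value annotated in the figure) rather than deriving it; the function name and the range check on `k_q` are assumptions.

```python
def corrected_rate(requested_rate: float, k_q: float) -> float:
    """r_q = min(1, r'_q / k_q), with r'_q the uncorrected rate."""
    if not 0.0 < k_q <= 1.0:
        # Assumption: k_q is expected to lie in (0, 1].
        raise ValueError("k_q must be in (0, 1]")
    return min(1.0, requested_rate / k_q)


print(corrected_rate(0.41, 0.82))  # scaled up by 1 / k_q
print(corrected_rate(0.90, 0.82))  # capped at 1
```

With `k_q = 1` the rate is left unchanged, and any request above `k_q` saturates at a load shedding rate of 1.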


Performance Evaluation

[Plots omitted. Two panels of accuracy vs. overload level (K); curves in both panels: no_lshed, eq_srates, mmfs_pkt, custom.]

Figure: Average (left) and minimum (right) accuracy at increasing K levels


Performance Evaluation

[Plots omitted. Five stacked panels over time (s): cycles (×10^9), with curves for cycles available to CoMo, prediction and actual (after sampling); prediction error (relative error); delay compared to real time (delay in s); system accuracy; load shedding overhead.]

Figure: Performance of a system that supports custom load shedding


Performance Evaluation

[Plots omitted. Four stacked panels over time (s): cycles (log scale), with curves for cycles available to CoMo, prediction and actual (after sampling); prediction error (relative error); delay compared to real time (delay in s); system accuracy.]

Figure: Performance with a selfish query (every 3 minutes)


Online Performance

[Plots omitted. Left: CPU usage [cycles/sec] (×10^9) vs. time [hh:mm], 09:00 to 09:30; series: CoMo + load_shedding overhead, Query cycles, Predicted cycles, CPU limit. Right: values (×10^4) vs. time [hh:mm], 09:00 to 09:30; series: total packets, buffer occupation (KB), DAG drops.]

Figure: Stacked CPU usage (left) and buffer occupation (right)


Publication Statistics

USENIX Annual Technical Conference

Estimated impact: 2.64 (rank: 7 of 1221) [10]

Acceptance ratio: 26.5% (31/117)

Computer Networks Journal

Impact factor: 0.829 (25/66) [11]

IFIP-TC6 Networking

Acceptance ratio: 21.8% (96/440)

ITNEWS-QoSIP

Acceptance ratio: 46.3% (44/95)

IEEE INFOCOM (under submission)

Estimated impact: 1.39 (rank: 133 of 1221) [10]

Acceptance ratio: 20.3% (236/1160)

[10] According to CiteSeer: http://citeseer.ist.psu.edu/impact.html

[11] Journal Citation Reports (JCR)
