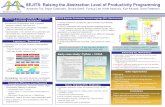

Parallel Computing Stanford CS348K, Spring 2020

Lecture 8:

Raising the level of abstraction for ML

Stanford CS348K, Spring 2020

Note

▪ Most of this class involved in-class discussion of the Ludwig and Overton papers

▪ I am posting these slides as some were used during parts of the discussion


Services provided by ML “frameworks”

▪ Functionality:

- Implementations of a wide range of useful operators: conv, dilated conv, relu, softmax, pooling, separable conv, etc.
- Implementations of various optimizers: basic SGD, SGD with momentum, Adagrad, etc.
- Ability to compose operators into large graphs to create models
- Carry out back-propagation

▪ Performance:

- High-performance implementations of operators (layer types)
- Scheduling onto multiple GPUs, parallel CPUs (and sometimes multiple machines)
- Automatic sparsification and pruning

▪ Meta-optimization:

- Hyper-parameter search
- More recently: neural architecture search


TensorFlow/MXNet data-flow graphs

▪ Key abstraction: a program is a DAG of (large-granularity) operations that consume and produce N-D tensors
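To make the data-flow abstraction concrete, here is a minimal sketch of a graph of tensor operations and an evaluator for it. It does not use TensorFlow or MXNet; the `Graph` class, the node names, and the nested-list tensor representation are assumptions chosen only for illustration.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Tensor = List[List[float]]  # a 2-D tensor as nested lists, for illustration only


def matmul(a: Tensor, b: Tensor) -> Tensor:
    """Matrix product of two 2-D tensors."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a: Tensor, b: Tensor) -> Tensor:
    """Element-wise sum of two tensors with the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def relu(a: Tensor) -> Tensor:
    """Element-wise max(x, 0)."""
    return [[max(x, 0.0) for x in row] for row in a]


class Graph:
    """A DAG whose nodes are named operations that consume and produce tensors."""

    def __init__(self) -> None:
        # node name -> (operation, names of the nodes it consumes)
        self.nodes: Dict[str, Tuple[Callable[..., Tensor], Tuple[str, ...]]] = {}

    def op(self, name: str, fn: Callable[..., Tensor], inputs: Sequence[str]) -> str:
        self.nodes[name] = (fn, tuple(inputs))
        return name

    def run(self, target: str, feeds: Dict[str, Tensor]) -> Tensor:
        """Evaluate `target`, computing each node at most once."""
        cache: Dict[str, Tensor] = dict(feeds)

        def evaluate(name: str) -> Tensor:
            if name not in cache:
                fn, inputs = self.nodes[name]
                cache[name] = fn(*(evaluate(i) for i in inputs))
            return cache[name]

        return evaluate(target)


# Build y = relu(x @ W + b) as a three-node graph.
g = Graph()
g.op("xw", matmul, ["x", "W"])
g.op("xw_plus_b", add, ["xw", "b"])
g.op("y", relu, ["xw_plus_b"])

y = g.run("y", {
    "x": [[1.0, -2.0]],
    "W": [[1.0, 0.0], [0.0, 1.0]],
    "b": [[0.5, 0.5]],
})
print(y)  # [[1.5, 0.0]]
```

Because the whole program is visible as a graph before it runs, a framework can schedule nodes across devices or differentiate through them, which is the point of the abstraction.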


Leveraging domain knowledge: more efficient topologies (aka better algorithm design)

▪ Original DNNs for image recognition were over-provisioned

- Large filters, many filters

▪ Modern DNN designs are hand-designed to be sparser

• A linear layer with softmax loss as the classifier (predicting the same 1000 classes as the main classifier, but removed at inference time).

A schematic view of the resulting network is depicted in Figure 3.

6. Training Methodology

GoogLeNet networks were trained using the DistBelief [4] distributed machine learning system using modest amount of model and data-parallelism. Although we used a CPU based implementation only, a rough estimate suggests that the GoogLeNet network could be trained to convergence using few high-end GPUs within a week, the main limitation being the memory usage. Our training used asynchronous stochastic gradient descent with 0.9 momentum [17], fixed learning rate schedule (decreasing the learning rate by 4% every 8 epochs). Polyak averaging [13] was used to create the final model used at inference time.

Image sampling methods have changed substantially over the months leading to the competition, and already converged models were trained on with other options, sometimes in conjunction with changed hyperparameters, such as dropout and the learning rate. Therefore, it is hard to give a definitive guidance to the most effective single way to train these networks. To complicate matters further, some of the models were mainly trained on smaller relative crops, others on larger ones, inspired by [8]. Still, one prescription that was verified to work very well after the competition, includes sampling of various sized patches of the image whose size is distributed evenly between 8% and 100% of the image area with aspect ratio constrained to the interval [3/4, 4/3]. Also, we found that the photometric distortions of Andrew Howard [8] were useful to combat overfitting to the imaging conditions of training data.

7. ILSVRC 2014 Classification Challenge Setup and Results

The ILSVRC 2014 classification challenge involves the task of classifying the image into one of 1000 leaf-node categories in the Imagenet hierarchy. There are about 1.2 million images for training, 50,000 for validation and 100,000 images for testing. Each image is associated with one ground truth category, and performance is measured based on the highest scoring classifier predictions. Two numbers are usually reported: the top-1 accuracy rate, which compares the ground truth against the first predicted class, and the top-5 error rate, which compares the ground truth against the first 5 predicted classes: an image is deemed correctly classified if the ground truth is among the top-5, regardless of its rank in them. The challenge uses the top-5 error rate for ranking purposes.
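The training recipe in the GoogLeNet excerpt can be sketched in a few lines. This is an illustrative reading of the text, not the authors' code: the function names, the uniform sampling of the aspect ratio, and the retry budget are assumptions; only the 4% decay every 8 epochs, the 8% to 100% area range, and the [3/4, 4/3] aspect-ratio interval come from the excerpt.

```python
import math
import random
from typing import Tuple


def learning_rate(base_lr: float, epoch: int) -> float:
    """Fixed schedule: decrease the learning rate by 4% every 8 epochs."""
    return base_lr * 0.96 ** (epoch // 8)


def sample_crop(width: int, height: int, rng: random.Random) -> Tuple[int, int, int, int]:
    """Sample a patch covering 8%-100% of the image area with aspect ratio
    (crop_w / crop_h) in [3/4, 4/3]. Returns (left, top, crop_w, crop_h)."""
    for _ in range(10):  # retry budget: an assumption, not from the excerpt
        area = rng.uniform(0.08, 1.0) * width * height
        aspect = rng.uniform(3 / 4, 4 / 3)  # uniform choice is an assumption
        crop_w = int(round(math.sqrt(area * aspect)))
        crop_h = int(round(math.sqrt(area / aspect)))
        if 0 < crop_w <= width and 0 < crop_h <= height:
            left = rng.randint(0, width - crop_w)
            top = rng.randint(0, height - crop_h)
            return left, top, crop_w, crop_h
    return 0, 0, width, height  # fall back to the full image


rng = random.Random(0)
print(sample_crop(640, 480, rng))
print(learning_rate(0.1, 16))  # two decay steps have been applied by epoch 16
```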


Figure 3: GoogLeNet network with all the bells and whistles.

Figure 3. Example network architectures for ImageNet. Left: the VGG-19 model [41] (19.6 billion FLOPs) as a reference. Middle: a plain network with 34 parameter layers (3.6 billion FLOPs). Right: a residual network with 34 parameter layers (3.6 billion FLOPs). The dotted shortcuts increase dimensions. Table 1 shows more details and other variants.

ResNet (34 layer version)

Inception v1 (GoogLeNet): 27 total layers, 7M parameters

SqueezeNet: [Iandola 2017] Reduced number of parameters in AlexNet by 50x, with similar performance on image classification


Modular network designs

Figure 9. The overall schema of the Inception-v4 network. For the detailed modules, please refer to Figures 3, 4, 5, 6, 7 and 8 for the detailed structure of the various components.

Schema (top to bottom): Input (299x299x3) → Stem → 4 x Inception-A → Reduction-A → 7 x Inception-B → Reduction-B → 3 x Inception-C → Average Pooling → Dropout (keep 0.8) → Softmax (Output: 1000)

Figure 4. The schema for 35 × 35 grid modules of the pure Inception-v4 network. This is the Inception-A block of Figure 9.

Figure 5. The schema for 17 × 17 grid modules of the pure Inception-v4 network. This is the Inception-B block of Figure 9.

Figure 6. The schema for 8 × 8 grid modules of the pure Inception-v4 network. This is the Inception-C block of Figure 9.

Figure 7. The schema for 35 × 35 to 17 × 17 reduction module. Different variants of this blocks (with various number of filters) are used in Figure 9, and 15 in each of the new Inception(-v4, -ResNet-v1, -ResNet-v2) variants presented in this paper. The k, l, m, n numbers represent filter bank sizes which can be looked up in Table 1.

Figure 8. The schema for 17 × 17 to 8 × 8 grid-reduction module. This is the reduction module used by the pure Inception-v4 network in Figure 9.

Inception v4

A block

B block


Inception stem

tation to reduce the number of such tensors. Historically, we have been relatively conservative about changing the architectural choices and restricted our experiments to varying isolated network components while keeping the rest of the network stable. Not simplifying earlier choices resulted in networks that looked more complicated than they needed to be. In our newer experiments, for Inception-v4 we decided to shed this unnecessary baggage and made uniform choices for the Inception blocks for each grid size. Please refer to Figure 9 for the large scale structure of the Inception-v4 network and Figures 3, 4, 5, 6, 7 and 8 for the detailed structure of its components. All the convolutions not marked with “V” in the figures are same-padded, meaning that their output grid matches the size of their input. Convolutions marked with “V” are valid padded, meaning that the input patch of each unit is fully contained in the previous layer and the grid size of the output activation map is reduced accordingly.

3.2. Residual Inception Blocks

For the residual versions of the Inception networks, we use cheaper Inception blocks than the original Inception. Each Inception block is followed by a filter-expansion layer (1 × 1 convolution without activation) which is used for scaling up the dimensionality of the filter bank before the addition to match the depth of the input. This is needed to compensate for the dimensionality reduction induced by the Inception block.

We tried several versions of the residual version of Inception. Only two of them are detailed here. The first one, “Inception-ResNet-v1”, roughly matches the computational cost of Inception-v3, while “Inception-ResNet-v2” matches the raw cost of the newly introduced Inception-v4 network. See Figure 15 for the large scale structure of both variants. (However, the step time of Inception-v4 proved to be significantly slower in practice, probably due to the larger number of layers.)

Another small technical difference between our residual and non-residual Inception variants is that in the case of Inception-ResNet, we used batch-normalization only on top of the traditional layers, but not on top of the summations. It is reasonable to expect that a thorough use of batch-normalization should be advantageous, but we wanted to keep each model replica trainable on a single GPU. It turned out that the memory footprint of layers with large activation size was consuming a disproportionate amount of GPU memory. By omitting the batch-normalization on top of those layers, we were able to increase the overall number of Inception blocks substantially. We hope that with better utilization of computing resources, making this trade-off will become unnecessary.

Figure 3. The schema for stem of the pure Inception-v4 and Inception-ResNet-v2 networks. This is the input part of those networks. Cf. Figures 9 and 15.
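The difference between same-padded and valid-padded ("V") convolutions described in the excerpt comes down to how the output grid size is computed. The helper below is a sketch under the usual convention that same padding yields `ceil(input / stride)` outputs; the function name and the example layer sequence (a 3 × 3 stride-2 valid convolution, a 3 × 3 valid convolution, then a 3 × 3 same-padded convolution on a 299-pixel input) are chosen for illustration.

```python
import math


def conv_output_size(input_size: int, kernel: int, stride: int, padding: str) -> int:
    """Spatial output size of a convolution along one dimension."""
    if padding == "same":
        # Input is zero-padded so that, at stride 1, output size == input size.
        return math.ceil(input_size / stride)
    if padding == "valid":
        # Every kernel window must lie fully inside the input.
        return (input_size - kernel) // stride + 1
    raise ValueError(f"unknown padding mode: {padding!r}")


size = 299
size = conv_output_size(size, kernel=3, stride=2, padding="valid")
print(size)  # 149
size = conv_output_size(size, kernel=3, stride=1, padding="valid")
print(size)  # 147
size = conv_output_size(size, kernel=3, stride=1, padding="same")
print(size)  # 147
```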


ResNet

Figure 3. Example network architectures for ImageNet. Left: the VGG-19 model [41] (19.6 billion FLOPs) as a reference. Middle: a plain network with 34 parameter layers (3.6 billion FLOPs). Right: a residual network with 34 parameter layers (3.6 billion FLOPs). The dotted shortcuts increase dimensions. Table 1 shows more details and other variants.

Residual Network. Based on the above plain network, we insert shortcut connections (Fig. 3, right) which turn the network into its counterpart residual version. The identity shortcuts (Eqn.(1)) can be directly used when the input and output are of the same dimensions (solid line shortcuts in Fig. 3). When the dimensions increase (dotted line shortcuts in Fig. 3), we consider two options: (A) The shortcut still performs identity mapping, with extra zero entries padded for increasing dimensions. This option introduces no extra parameter; (B) The projection shortcut in Eqn.(2) is used to match dimensions (done by 1×1 convolutions). For both options, when the shortcuts go across feature maps of two sizes, they are performed with a stride of 2.
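The two shortcut options can be sketched on a tiny feature map. This is an illustration of the idea rather than the paper's implementation: the nested-list layout `[row][col][channel]`, the function names, and the example weights are assumptions.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed [row][col][channel]


def subsample(x: FeatureMap, stride: int) -> FeatureMap:
    """Keep every `stride`-th spatial position (stride 2 halves the grid)."""
    return [row[::stride] for row in x[::stride]]


def shortcut_zero_pad(x: FeatureMap, out_channels: int, stride: int = 1) -> FeatureMap:
    """Option A: identity mapping; extra channels are zeros, so no parameters."""
    return [[px + [0.0] * (out_channels - len(px)) for px in row]
            for row in subsample(x, stride)]


def shortcut_projection(x: FeatureMap, weights: List[List[float]],
                        stride: int = 1) -> FeatureMap:
    """Option B: 1x1 convolution; `weights` has shape [out_channels][in_channels]."""
    return [[[sum(w * v for w, v in zip(w_row, px)) for w_row in weights]
             for px in row]
            for row in subsample(x, stride)]


def residual_add(fx: FeatureMap, shortcut: FeatureMap) -> FeatureMap:
    """y = F(x) + shortcut(x), element-wise."""
    return [[[a + b for a, b in zip(pf, ps)] for pf, ps in zip(rf, rs)]
            for rf, rs in zip(fx, shortcut)]


x = [[[1.0], [2.0]], [[3.0], [4.0]]]          # 2x2 grid, 1 channel
a = shortcut_zero_pad(x, out_channels=2, stride=2)
b = shortcut_projection(x, weights=[[2.0], [3.0]], stride=2)
print(a)  # [[[1.0, 0.0]]]
print(b)  # [[[2.0, 3.0]]]
print(residual_add([[[0.5, 0.5]]], a))  # [[[1.5, 0.5]]]
```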

3.4. Implementation

Our implementation for ImageNet follows the practice in [21, 41]. The image is resized with its shorter side randomly sampled in [256, 480] for scale augmentation [41]. A 224×224 crop is randomly sampled from an image or its horizontal flip, with the per-pixel mean subtracted [21]. The standard color augmentation in [21] is used. We adopt batch normalization (BN) [16] right after each convolution and before activation, following [16]. We initialize the weights as in [13] and train all plain/residual nets from scratch. We use SGD with a mini-batch size of 256. The learning rate starts from 0.1 and is divided by 10 when the error plateaus, and the models are trained for up to 60 × 10^4 iterations. We use a weight decay of 0.0001 and a momentum of 0.9. We do not use dropout [14], following the practice in [16].
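The optimizer settings in this paragraph (momentum 0.9, weight decay 0.0001, learning rate 0.1 divided by 10 on plateau) can be sketched as follows. The excerpt does not say how a plateau is detected or which momentum formulation is used, so the `patience` rule and the update form below are assumptions.

```python
from typing import List, Tuple


def sgd_step(w: List[float], grad: List[float], velocity: List[float], lr: float,
             momentum: float = 0.9, weight_decay: float = 1e-4
             ) -> Tuple[List[float], List[float]]:
    """One SGD update with momentum; weight decay is added to the gradient."""
    new_velocity = [momentum * v - lr * (g + weight_decay * wi)
                    for wi, g, v in zip(w, grad, velocity)]
    new_w = [wi + v for wi, v in zip(w, new_velocity)]
    return new_w, new_velocity


class PlateauSchedule:
    """Divide the learning rate by 10 when the validation error has not improved
    for `patience` consecutive evaluations (the patience rule is an assumption)."""

    def __init__(self, lr: float = 0.1, patience: int = 3) -> None:
        self.lr = lr
        self.patience = patience
        self.best = float("inf")
        self.stale = 0

    def update(self, val_error: float) -> float:
        if val_error < self.best:
            self.best = val_error
            self.stale = 0
        else:
            self.stale += 1
            if self.stale >= self.patience:
                self.lr /= 10
                self.stale = 0
        return self.lr


schedule = PlateauSchedule(lr=0.1, patience=2)
for err in [0.40, 0.35, 0.35, 0.36]:
    lr = schedule.update(err)
print(lr)  # dropped by 10x after two evaluations without improvement
```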

In testing, for comparison studies we adopt the standard 10-crop testing [21]. For best results, we adopt the fully-convolutional form as in [41, 13], and average the scores at multiple scales (images are resized such that the shorter side is in {224, 256, 384, 480, 640}).

4. Experiments

4.1. ImageNet Classification

We evaluate our method on the ImageNet 2012 classification dataset [36] that consists of 1000 classes. The models are trained on the 1.28 million training images, and evaluated on the 50k validation images. We also obtain a final result on the 100k test images, reported by the test server. We evaluate both top-1 and top-5 error rates.
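Top-1 and top-5 error rates are simple to compute from per-class scores. The sketch below uses a three-class toy example; the function name and the data are made up for illustration.

```python
from typing import List, Sequence


def top_k_error(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of examples whose true label is not among the k highest-scoring classes."""
    wrong = 0
    for class_scores, label in zip(scores, labels):
        ranked = sorted(range(len(class_scores)),
                        key=lambda c: class_scores[c], reverse=True)
        if label not in ranked[:k]:
            wrong += 1
    return wrong / len(labels)


scores: List[List[float]] = [
    [0.1, 0.7, 0.2],  # predicted class 1, true class 1: correct at top-1
    [0.5, 0.3, 0.2],  # predicted class 0, true class 1: correct only at top-2
    [0.2, 0.3, 0.5],  # predicted class 2, true class 0: wrong even at top-2
]
labels = [1, 1, 0]

print(top_k_error(scores, labels, k=1))  # 2 of 3 wrong
print(top_k_error(scores, labels, k=2))  # 1 of 3 wrong
```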

Plain Networks. We first evaluate 18-layer and 34-layer plain nets. The 34-layer plain net is in Fig. 3 (middle). The 18-layer plain net is of a similar form. See Table 1 for detailed architectures.

The results in Table 2 show that the deeper 34-layer plain net has higher validation error than the shallower 18-layer plain net. To reveal the reasons, in Fig. 4 (left) we compare their training/validation errors during the training procedure. We have observed the degradation problem - the

Figure 10. The schema for 35 × 35 grid (Inception-ResNet-A) module of Inception-ResNet-v1 network.

Figure 11. The schema for 17 × 17 grid (Inception-ResNet-B) module of Inception-ResNet-v1 network.

Figure 12. “Reduction-B” 17 × 17 to 8 × 8 grid-reduction module. This module is used by the smaller Inception-ResNet-v1 network in Figure 15.


Depthwise separable convolution

Convolution Layer

- NUM_CHANNELS inputs
- Kw x Kh x NUM_CHANNELS weights (for each filter)
- Kw x Kh x NUM_CHANNELS work per output pixel (per filter)

Depthwise Separable Conv Layer

- NUM_CHANNELS inputs
- Kw x Kh weights (for each channel)
- Results of filtering each of NUM_CHANNELS independently
- NUM_CHANNELS weights (for each filter)
- NUM_CHANNELS work per output pixel (per filter)

Main idea: factor NUM_FILTERS 3x3xNUM_CHANNELS convolutions into:

- NUM_CHANNELS 3x3x1 convolutions for each input channel
- And NUM_FILTERS 1x1xNUM_CHANNELS convolutions to combine the results

Image credit: Eli Bendersky https://eli.thegreenplace.net/2018/depthwise-separable-convolutions-for-machine-learning/
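The savings from the factorization follow directly from the counts on this slide. The sketch below tallies weights and multiply-adds per output pixel for both layer types; the function names and the 128-channel, 128-filter example are assumptions for illustration, and biases are ignored.

```python
from typing import Tuple


def standard_conv_cost(kw: int, kh: int, num_channels: int, num_filters: int) -> Tuple[int, int]:
    """(weights, multiply-adds per output pixel) for a standard convolution layer."""
    weights = kw * kh * num_channels * num_filters
    return weights, weights  # each weight is used once per output pixel


def depthwise_separable_cost(kw: int, kh: int, num_channels: int,
                             num_filters: int) -> Tuple[int, int]:
    """(weights, multiply-adds per output pixel) for depthwise + 1x1 pointwise."""
    depthwise = kw * kh * num_channels      # one Kw x Kh filter per input channel
    pointwise = num_channels * num_filters  # NUM_FILTERS 1x1xNUM_CHANNELS filters
    total = depthwise + pointwise
    return total, total


std_weights, std_work = standard_conv_cost(3, 3, 128, 128)
sep_weights, sep_work = depthwise_separable_cost(3, 3, 128, 128)
print(std_weights)  # 147456
print(sep_weights)  # 17536
print(round(std_work / sep_work, 2))  # 8.41
```

For 3x3 kernels the reduction approaches 9x as NUM_FILTERS grows, since the 1x1 stage dominates the separable cost.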


How to improve system support for ML?

List of papers at MLSys 2020 Conference

Hardware/software for… faster inference? faster training?

Compilers for fusing layers, performing code optimizations?


But as a user wanting to create a model, where does most of my time really go?


ML model development is an iterative process

Figure 2: Illustration of key actions performed during model development. Boxes are actions, and arrows between the boxes are outputs between different steps. Our three thrusts support various parts of the development pipeline, highlighted with section labels and box colors.

- Actions (boxes): Task Inputs, Data Selection, Generate Supervision, Train Model, Validate Model, Identify Important Data, Increase Supervision, Change Training Process
- Outputs (arrows): Task Spec, Data, Training Points, Training Labels, Model, Outputs, New Architectures, Augmentations, Training Procedure, New Supervision Sources
- Thrust 1: Converting SME Knowledge Into Large-Scale Supervision
- Thrust 2: Rapid Model Improvement Through Discovery of Critical Data

Throughout all aspects of the project, we seek to define new abstractions and interfaces for key ML development tasks. These abstractions should be sufficiently high to enable advanced optimizations and provide opportunities to automate key actions, but we wish to keep the SME in control of the overall model design process so as to leverage the SME’s unique problem domain intuition and insight as necessary.

3 System Support for Iterative Model Development

An example workflow. To illustrate the complexity of a modern ML model development process and highlight opportunities for scaling, consider the example of a health care scientist (the SME) seeking to create a model for detecting a rare pathology in medical imaging data. The scientist starts with an initial definition of the problem, has access to a large collection of image data (e.g., CT scans from a large population), and has taken the time to manually identify a small number of examples of the pathology in this collection.

Typically, an ML engineer would then work with the SME to translate the problem description into an ML task (e.g., classification), select an appropriate model architecture for the task, and train the model using the SME’s labeled examples. In the future platforms we envision, the actions of choosing the right model architecture, data augmentations, and training hyperparameters can be carried out by an automated model search process which removes the need to interface with an ML engineer, but incurs the high computational cost of exploring many possibilities. While “autoML” platforms provide similar functionality today [1, 11], in practice this is only a first step toward how additional large-scale computation can accelerate the model design process.

For example, the model may perform poorly due to insufficient training data. In a traditional workflow, the SME might be tasked to manually browse through large databases of images to find additional instances of the rare pathology. The systems we envision (Thrust 2) will help the SME “mine” for these valuable examples by querying the image dataset for examples with similar (but not too similar) appearance to images known to feature the pathology. These systems could also help the SME identify modes in the dataset to obtain good sample diversity, and help the SME identify subsets of the data that are particularly critical for the model to have good accuracy (e.g., specific forms of the pathology that are treatable if caught early). These systems will run continuously on large datasets throughout the development process, continuously identifying potentially useful examples in these collections, saving the SME time previously used for manual data exploration.
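One way to read "similar (but not too similar)" is as a band-pass filter on embedding similarity. The sketch below is hypothetical: it assumes each image already has an embedding vector, and the cosine-similarity thresholds `low` and `high`, the function names, and the toy data are illustrative choices, not part of the proposal.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def mine_candidates(positives: List[Sequence[float]],
                    unlabeled: Dict[str, Sequence[float]],
                    low: float = 0.5, high: float = 0.95) -> List[str]:
    """Return ids of unlabeled items whose best similarity to any known positive
    lies in [low, high): related enough to be relevant, but not near-duplicates."""
    hits = []
    for item_id, embedding in unlabeled.items():
        best = max(cosine(embedding, p) for p in positives)
        if low <= best < high:
            hits.append((best, item_id))
    # Most similar candidates first, so the SME reviews the likeliest ones early.
    return [item_id for _, item_id in sorted(hits, reverse=True)]


positives = [[1.0, 0.0]]
unlabeled = {
    "near_duplicate": [1.0, 0.01],
    "related": [1.0, 0.6],
    "unrelated": [0.0, 1.0],
}
print(mine_candidates(positives, unlabeled))  # ['related']
```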


Example: how does TensorFlow help with data curation?

“We cannot stress strongly enough the importance of good training data for this segmentation task: choosing a wide enough variety of poses, discarding poor training images, cleaning up inaccurate [ground truth] polygon masks, etc. With each improvement we made over a 9-month period in our training data, we observed the quality of our defocused portraits to improve commensurately.”


Thought experiment: I ask you to train a car or person detector for a specific intersection

ECCV-18 submission ID 2250

Fig. 1: Images from three video streams captured by stationary cameras over a span of several days (each row is one stream). There is significant intra-stream variance due to time of day, changing weather conditions, and dynamic objects.

the context of fixed-viewpoint video streams, is challenging due to distribution shift in the images observed over time. Busy urban scenes, such as those shown in Fig. 1, constantly evolve with time of day, changing weather conditions, and as different subjects move through the scene. Therefore, an accurate camera-specialized model must still learn to capture a range of visual events. We make two salient observations: each camera sees a tiny fraction of this general set of images, and this set can be made even smaller by limiting the temporal window considered. This allows one to learn dramatically smaller and more efficient models, but at the cost of modeling the non-stationary distribution of imagery observed by each camera. Fortunately, in real-world settings, scene evolution occurs at a sufficiently slow rate (seconds to minutes) to provide opportunity for online adaptation.

Our fundamental approach is based on widely-used techniques for model distillation, whereby a lightweight “student” model is trained to output the predictions of a larger, high-capacity “teacher” model. However, we demonstrate that naive approaches for distillation do not work well for camera specialization because of the underlying non-stationarity of the data stream. We propose a simple, but surprisingly effective, strategy of online distillation. Rather than learning a student model on offline data that has been labeled with teacher predictions, train the student in an online fashion on the live data stream, intermittently running the teacher to provide a target for learning. This requires a judicious schedule for running the teacher as well as an online adaption algorithm that can process correlated streaming data. We demonstrate that existing offline solvers when carefully tuned and used in the online setting are as accurate as the best offline model trained in hindsight (e.g., with low regret [3]). Our final approach learns compact students that perform comparably to high capacity models trained offline but offers predictably low-latency inference (sub 20 ms) and an overall 7.8× reduction in computation time.
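The online distillation loop can be illustrated with a toy stream. Everything here is a stand-in: the "teacher" is a simple function whose target drifts halfway through the stream, the "student" is a two-parameter linear model, and the fixed `teacher_period` replaces the judicious schedule the excerpt mentions. It is a sketch of the control flow, not the paper's method.

```python
import math
from typing import List, Sequence, Tuple


def teacher(x: float, t: int) -> float:
    """Stand-in for a large, accurate model; its target drifts at t = 2000."""
    slope = 2.0 if t < 2000 else 3.0
    return slope * x + 1.0


class Student:
    """A tiny linear model y = w * x + b, updated by SGD on teacher outputs."""

    def __init__(self) -> None:
        self.w = 0.0
        self.b = 0.0

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def update(self, x: float, target: float, lr: float = 0.2) -> None:
        error = self.predict(x) - target
        self.w -= lr * error * x
        self.b -= lr * error


def run_online_distillation(stream: Sequence[float],
                            teacher_period: int = 4) -> Tuple[Student, int, List[float]]:
    """Serve every frame with the student; run the teacher only every
    `teacher_period` frames and use its output as the training target."""
    student = Student()
    teacher_calls = 0
    outputs = []
    for t, x in enumerate(stream):
        if t % teacher_period == 0:
            student.update(x, teacher(x, t))
            teacher_calls += 1
        outputs.append(student.predict(x))  # cheap inference on every frame
    return student, teacher_calls, outputs


stream = [math.sin(0.37 * t) for t in range(4000)]
student, teacher_calls, outputs = run_online_distillation(stream)
print(teacher_calls)  # 1000: the teacher ran on a quarter of the frames
print(round(student.w, 2), round(student.b, 2))  # close to the drifted target 3.0, 1.0
```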


▪ A good system provides valuable services to the user. So in these papers, who is the “user” (what is their goal, what is their skillset?) and what are the painful, hard, or tedious things that the systems are designed to do for the user?


▪ Let’s specifically contrast the abstractions of Ludwig with those of a lower-level ML system like TensorFlow. TensorFlow/MXNet/PyTorch largely abstract ML model definition as a DAG of N-D tensor operations. How is Ludwig different?

▪ Then let’s compare those abstractions to Overton.
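As a starting point for the Ludwig comparison, the sketch below contrasts a declarative, feature-typed model description with the operator graph a system might derive from it. The `input_features` / `output_features` keys with `name` and `type` entries mirror Ludwig's configuration style as I understand it; the column names, the default encoder and decoder names, and the `expand` function are hypothetical and do not reproduce Ludwig's implementation.

```python
from typing import Dict, List, Tuple

# Declarative description: the user states *what* the columns are, not how to wire layers.
declarative_spec = {
    "input_features": [
        {"name": "review_text", "type": "text"},
        {"name": "star_rating", "type": "numerical"},
    ],
    "output_features": [
        {"name": "sentiment", "type": "category"},
    ],
}

# Hypothetical per-type defaults that the system, not the user, chooses.
DEFAULT_ENCODER: Dict[str, str] = {"text": "text_encoder", "numerical": "dense_encoder"}
DEFAULT_DECODER: Dict[str, str] = {"category": "softmax_classifier", "numerical": "regressor"}

Node = Tuple[str, str, List[str]]  # (node name, operator, input node names)


def expand(spec: dict) -> List[Node]:
    """Derive an encoder -> combiner -> decoder operator graph from the spec."""
    nodes: List[Node] = []
    encoded: List[str] = []
    for feature in spec["input_features"]:
        node = f"{feature['name']}_encoded"
        nodes.append((node, DEFAULT_ENCODER[feature["type"]], [feature["name"]]))
        encoded.append(node)
    nodes.append(("combined", "concat_combiner", encoded))
    for feature in spec["output_features"]:
        nodes.append((f"{feature['name']}_prediction",
                      DEFAULT_DECODER[feature["type"]], ["combined"]))
    return nodes


for node in expand(declarative_spec):
    print(node)
```

In a DAG-level framework the user writes the equivalent of the expanded node list by hand; in the declarative style the user writes only the spec.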


▪ Comparison to Google’s AutoML?