
LCG Service Challenge Phase 4: Activity Plan and Impact on the Network Infrastructure

Tiziana Ferrari, INFN CNAF

CCR, Rome, 20-21 October 2005

Service Challenge 3

• Now in SC3 Service Phase (Sep - Dec 2005)
• ALICE, CMS and LHCb have all started their production
• ATLAS are preparing for a November 1st start
• Service Challenge 4 [May 2006 - Sep 2006]

SC3 INFN sites (1/2)

• CNAF
  - LCG File Catalogue (LFC)
  - File Transfer Service (FTS)
  - MyProxy server and BDII
  - Storage
    • CASTOR with SRM interface
    • Interim installations of software components from some of the experiments (not currently available from LCG): VObox

SC3 INFN sites (2/2)

• Torino (ALICE)
  - FTS, LFC, dCache (LCG 2.6.0)
  - Storage space: 2 TBy
• Milano (ATLAS)
  - FTS, LFC, DPM 1.3.7
  - Storage space: 5.29 TBy
• Pisa (ATLAS/CMS)
  - FTS, PhEDEx, POOL file catalogue, PubDB, LFC, DPM 1.3.5
  - Storage space: 5 TBy available, 5 TBy expected
• Legnaro (CMS)
  - FTS, PhEDEx, POOL file catalogue, PubDB, DPM 1.3.7 (1 pool, 80 GBy)
  - Storage space: 4 TBy
• Bari (ATLAS/CMS)
  - FTS, PhEDEx, POOL file catalogue, PubDB, LFC, dCache, DPM
  - Storage space: 5 TBy available
• LHCb
  - CNAF

INFN Tier-1: SC4 short-term plans (1/2)

SC4 storage and computing resources will be shared with production.

• Storage
  - Oct 2005: data disk
    • 50 TB (CASTOR front-end)
    • WAN-to-disk performance: 125 MB/s (demonstrated in SC3)
  - Oct 2005: tape
    • 200 TB (4 9940B + 6 LTO2 drives)
    • Drives shared with production
    • WAN-to-tape performance: mean sustained ~50 MB/s (SC3 throughput phase, July 2005)
• Computing
  - Oct 2005: min 1200 kSI2K, max 1550 kSI2K (as the farm is shared)
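To put the storage figures in perspective, the short sketch below estimates how long the quoted capacities would last if data arrived continuously at the rates demonstrated in SC3. It is an illustrative calculation only: it assumes decimal units (1 TB = 10^6 MB), a constant rate, and that the whole capacity is free for SC4, none of which is stated in the slides. The function name `days_to_fill` is hypothetical.

```python
SECONDS_PER_DAY = 24 * 3600


def days_to_fill(capacity_tb: float, rate_mb_s: float) -> float:
    """Days needed to write capacity_tb at a constant rate_mb_s (decimal units)."""
    capacity_mb = capacity_tb * 1_000_000  # assumption: 1 TB = 10^6 MB
    return capacity_mb / rate_mb_s / SECONDS_PER_DAY


# Figures quoted on the slide: 50 TB disk at 125 MB/s, 200 TB tape at ~50 MB/s.
disk_days = days_to_fill(50, 125)
tape_days = days_to_fill(200, 50)

print(f"disk: {disk_days:.1f} days, tape: {tape_days:.1f} days")
# prints "disk: 4.6 days, tape: 46.3 days"
```

Because the resources are shared with production, the real margins would be smaller than these figures suggest.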

INFN Tier-1: SC4 short-term plans (2/2)

• Network
  - Oct 2005
    • 2 x 1 Gigabit Ethernet links CNAF-GARR, dedicated to SC traffic to/from CERN
  - Future
    • Ongoing upgrade to 10 Gigabit Ethernet CNAF-GARR, dedicated to SC
    • Usage of policy routing at the GARR access point
    • Type of connectivity to INFN Tier-2 under discussion
    • Backup link Tier-1 to Tier-1 (Karlsruhe) under discussion
• Software
  - Oct 2005
    • SRM/CASTOR and FTS
    • Farm middleware: LCG 2.6
  - Future
    • dCache and StoRM under evaluation (for disk-only SRMs)
    • Possibility to upgrade to CASTOR v2 under evaluation (end of year 2005)

What target rates for Tier-2s?

• LHC Computing Grid Technical Design Report
  - Access link at 1 Gb/s by the time LHC starts
  - Traffic between one Tier-1 and one Tier-2 ~ 10% of the Tier-0 to Tier-1 traffic
  - Estimates of Tier-1 to Tier-2 traffic represent an upper limit
  - Tier-2 to Tier-2 replication will lower the load on the Tier-1

WSF: with safety factor

TDR bandwidth estimation

• LHC Computing Grid Technical Design Report (June 2005): http://lcg.web.cern.ch/LCG/tdr/

Expected rates in Mb/s (rough / with safety factor)

| Experiment | IN, real data | IN, MC   | Total IN   | OUT, MC    |
|------------|---------------|----------|------------|------------|
| ALICE      | 319 / 958     | 75 / 225 | 394 / 1183 | 37 / 112   |
| ATLAS      | 329 / 986     | 49 / 147 | 378 / 1133 | 34 / 102   |
| CMS        | 685 / 2055    | 0 / 0    | 685 / 2055 | 363 / 1089 |
| LHCb       | 0 / 0         | 0 / 0    | 0 / 0      | 51 / 153   |
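The figures in the rate table can be cross-checked with a few lines of arithmetic. The sketch below re-enters the table as (rough, with safety factor) pairs, verifies that Total IN equals real data plus Monte Carlo, and computes the ratio between the two values of each pair. The dictionary layout and function names are illustrative choices, not part of the original material; the slides do not state the safety factor explicitly, so the ratio printed here is only what the numbers imply.

```python
# Expected Tier-2 rates in Mb/s, copied from the table as
# (rough, with safety factor) pairs.
RATES = {
    "ALICE": {"in_real": (319, 958), "in_mc": (75, 225),
              "in_total": (394, 1183), "out_mc": (37, 112)},
    "ATLAS": {"in_real": (329, 986), "in_mc": (49, 147),
              "in_total": (378, 1133), "out_mc": (34, 102)},
    "CMS": {"in_real": (685, 2055), "in_mc": (0, 0),
            "in_total": (685, 2055), "out_mc": (363, 1089)},
    "LHCb": {"in_real": (0, 0), "in_mc": (0, 0),
             "in_total": (0, 0), "out_mc": (51, 153)},
}


def check_totals(rates):
    """Return the experiments whose Total IN differs from real data + MC."""
    bad = []
    for name, row in rates.items():
        for i in (0, 1):
            if row["in_real"][i] + row["in_mc"][i] != row["in_total"][i]:
                bad.append(name)
                break
    return bad


def implied_factors(rates):
    """Ratio (with safety factor) / rough for every non-zero table entry."""
    return [safe / rough
            for row in rates.values()
            for rough, safe in row.values()
            if rough]


mismatches = check_totals(RATES)
factors = implied_factors(RATES)

print(mismatches)                                    # prints []
print(round(min(factors), 2), round(max(factors), 2))  # prints 3.0 3.03
```

The totals are internally consistent, and every non-zero pair differs by a factor of about 3.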

Needs vs Tier-2 specifications

| Site     | Experiment  | 2006 BGA/BEA in (Mb/s)                 | 2006 BGA/BEA out (Mb/s) | Total needs IN / OUT (Mb/s) | With GigEth (Oct 2005) |
|----------|-------------|----------------------------------------|-------------------------|-----------------------------|------------------------|
| Bari     | ALICE - CMS | 500 / 1000                             |                         | 3188 / 1191                 | No                     |
| Catania  | ALICE       | 0 / 1000                               | =                       | 1183 / 112                  | Yes                    |
| Frascati | ATLAS       | 100 / 200                              | =                       | 1133 / 102                  | No                     |
| Legnaro  | ALICE - CMS | 450 / 1000                             | 400 / 1000              | 3188 / 1191                 | Yes                    |
| Milano   | ATLAS       | 100 / 1000                             | =                       | 1133 / 102                  | Yes                    |
| Napoli   | ATLAS       | 1000 / 1000                            | =                       | 1133 / 102                  | No                     |
| Pisa     | CMS         | Not specified                          | Not specified           | 2055 / 1089                 | Yes                    |
| Roma1    | ATLAS - CMS | 100 / 2500 (ATLAS), 200 / 1000 (CMS)   | =                       | 3188 / 1191                 | Yes                    |
| Torino   | ALICE       | 70 / 1000                              | =                       | 1183 / 112                  | Yes                    |
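One way to read the per-site table is to compare each site's total needs with the 1 Gb/s access link that the TDR foresees for Tier-2s. The sketch below does this under stated assumptions: the totals are the upper-limit values including the safety factor, the link is taken as exactly 1000 Mb/s of usable capacity, and the helper `links_needed` is a hypothetical name introduced only for illustration.

```python
import math

ACCESS_LINK_MBPS = 1000  # assumption: 1 Gb/s access link, fully usable

# (site, total IN need, total OUT need) in Mb/s, copied from the table.
NEEDS = [
    ("Bari", 3188, 1191), ("Catania", 1183, 112), ("Frascati", 1133, 102),
    ("Legnaro", 3188, 1191), ("Milano", 1133, 102), ("Napoli", 1133, 102),
    ("Pisa", 2055, 1089), ("Roma1", 3188, 1191), ("Torino", 1183, 112),
]


def links_needed(need_mbps: int, link_mbps: int = ACCESS_LINK_MBPS) -> int:
    """Number of access links of link_mbps required to carry need_mbps."""
    return math.ceil(need_mbps / link_mbps)


over_in = [site for site, need_in, _ in NEEDS if need_in > ACCESS_LINK_MBPS]
over_out = [site for site, _, need_out in NEEDS if need_out > ACCESS_LINK_MBPS]

for site, need_in, need_out in NEEDS:
    print(f"{site:9s} IN {need_in:5d} Mb/s -> {links_needed(need_in)} link(s), "
          f"OUT {need_out:5d} Mb/s -> {links_needed(need_out)} link(s)")
```

With these inputs every site's inbound upper limit exceeds a single 1 Gb/s link, while outbound needs exceed it only at Bari, Legnaro, Pisa and Roma1. Since the totals are upper limits with a safety factor, this marks the worst case rather than the expected load.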

The Worldwide LHC Computing Grid: Level 1 Service Milestones

• 31 Dec 05 - Tier-0/1 high-performance network operational at CERN and 3 Tier-1s
• 31 Dec 05 - 750 MB/s data recording demonstration at CERN: data generator → disk → tape, sustaining 750 MB/s for one week using the CASTOR 2 mass storage system
• Jan 06 - Feb 06 - Throughput tests
• SC4a, 28 Feb 06 - All required software for baseline services deployed and operational at all Tier-1s and at least 20 Tier-2 sites
• Mar 06 - Tier-0/1 high-performance network operational at CERN and 6 Tier-1s, at least 3 via GEANT
• SC4b, 30 Apr 06 - Service Challenge 4 set-up: set-up complete and basic service demonstrated, capable of running experiment-supplied packaged test jobs; data distribution tested
• 30 Apr 06 - 1.0 GB/s data recording demonstration at CERN: data generator → disk → tape, sustaining 1.0 GB/s for one week using the CASTOR 2 mass storage system and the new tape equipment
• SC4, 31 May 06 - Service Challenge 4: start of stable service phase, including all Tier-1s and 40 Tier-2 sites

The Worldwide LHC Computing Grid: Level 1 Service Milestones

• The service must be able to support the full computing model of each experiment, including simulation and end-user batch analysis at Tier-2 sites
• Criteria for successful completion of SC4, by end of service phase (end September 2006):
  1. 8 Tier-1s and 20 Tier-2s must have demonstrated availability better than 90% of the levels specified in the WLCG MoU [adjusted for sites that do not provide a 24-hour service]
  2. Success rate of standard application test jobs greater than 90% (excluding failures due to the applications environment and non-availability of sites)
  3. Performance and throughput tests complete. The performance goal for each Tier-1 is:
     - the nominal data rate that the centre must sustain during LHC operation (200 MB/s for CNAF)
     - CERN disk → network → Tier-1 tape
     - The throughput test goal is to maintain for one week an average throughput of 1.6 GB/s from disk at CERN to tape at the Tier-1 sites. All Tier-1 sites must participate.
• 30 Sept 06 - 1.6 GB/s data recording demonstration at CERN: data generator → disk → tape, sustaining 1.6 GB/s for one week using the CASTOR mass storage system
• 30 Sept 06 - Initial LHC Service in operation, capable of handling the full nominal data rate between CERN and Tier-1s. The service will be used for extended testing of the computing systems of the four experiments, for simulation and for processing of cosmic-ray data. During the following six months each site will build up to the full throughput needed for LHC operation, which is twice the nominal data rate.
• 24-hour operational coverage is required at all Tier-1 centres from January 2007

Milestones in brief

• Jan 2006: throughput tests (rate targets not specified)
• May 2006: high-speed network infrastructure operational
• By Sep 2006: average throughput of 1.6 GB/s from disk at CERN to tape at the Tier-1 sites (nominal rate for LHC operation: 200 MB/s for CNAF)
• Oct 2006 - Mar 2007: average throughput up to twice the nominal rate
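The milestone rates are quoted in bytes per second while link capacities are quoted in bits per second, so a unit conversion helps relate them. The sketch below converts the CNAF nominal rate and its doubling to Gb/s and compares both with the current (2 x 1 Gigabit Ethernet) and planned (10 Gigabit Ethernet) CNAF-GARR capacity. It assumes decimal units and ignores protocol overhead and competing traffic. It also takes the aggregate CERN target as 1.6 GB/s; the function names are hypothetical.

```python
def mb_s_to_gbit_s(rate_mb_s: float) -> float:
    """Convert megabytes per second to gigabits per second (decimal units)."""
    return rate_mb_s * 8 / 1000


def fits(rate_gbit_s: float, capacity_gbit_s: float) -> bool:
    """True if the rate does not exceed the raw link capacity."""
    return rate_gbit_s <= capacity_gbit_s


NOMINAL_CNAF_MB_S = 200        # nominal rate for CNAF, from the slides
CURRENT_LINK_GBIT_S = 2 * 1    # 2 x 1 Gigabit Ethernet (Oct 2005)
PLANNED_LINK_GBIT_S = 10       # planned 10 Gigabit Ethernet upgrade

nominal_gbit = mb_s_to_gbit_s(NOMINAL_CNAF_MB_S)      # 1.6 Gb/s
double_gbit = mb_s_to_gbit_s(2 * NOMINAL_CNAF_MB_S)   # 3.2 Gb/s

print(nominal_gbit, fits(nominal_gbit, CURRENT_LINK_GBIT_S))   # prints 1.6 True
print(double_gbit, fits(double_gbit, CURRENT_LINK_GBIT_S))     # prints 3.2 False
print(double_gbit, fits(double_gbit, PLANNED_LINK_GBIT_S))     # prints 3.2 True

# Volume moved in one week at the aggregate target of 1.6 GB/s (all Tier-1s).
AGGREGATE_GB_S = 1.6
week_tb = AGGREGATE_GB_S * 7 * 24 * 3600 / 1000
print(f"{week_tb:.1f} TB in one week")  # prints "967.7 TB in one week"
```

Under these assumptions the nominal CNAF rate (1.6 Gb/s) fits within the 2 Gb/s available in October 2005 with little headroom, while twice the nominal rate does not, which is consistent with the planned 10 Gigabit Ethernet upgrade. Note that the 1.6 Gb/s CNAF figure and the 1.6 GB/s aggregate target are different quantities that happen to share a number.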

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 14

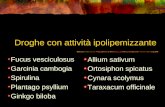

LCG Target (nominal) data rates for Tier-1 sites and CERN in SC4

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 2

LCG

Service Challenge 3

bull Now in SC3 Service Phase (Sep ndash Dec 2005)

bull ALICE CMS and LHCb have all started their production

bull ATLAS are preparing for November 1st start

bull Service Challenge 4 [May 2006 Sep 2006]

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 3

LCG

SC3 INFN Sites (12)

bull CNAFndash LCG File Catalogue (LFC)ndash File Transport Service (FTS)ndash Myproxy server and BDIIndash Storage

bull CASTOR with SRM interface

bull Interim installations of sw components from some of the experiments (not currently available from LCG) - VObox

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 4

LCG

SC3 INFN sites (22)

bull Torino (ALICE) ndash FTS LFC dCache (LCG 260)ndash Storage Space 2 TBy

bull Milano (ATLAS)ndash FTS LFC DPM 137ndash Storage space 529 TBy

bull Pisa (ATLASCMS)ndash FTS PhEDEx POOL file cat PubDB LFC DPM 135ndash Storage space 5 TBy available 5 TBy expected

bull Legnaro (CMS)ndash FTS PhEDEx Pool file cat PubDB DPM 137 (1 pool 80 Gby)ndash Storage space 4 TBy

bull Bari (ATLASCMS)ndash FTS PhEDEx POOL file cat PubDB LFC dCache DPMndash Storage space 5 TBy available

bull LHCbndash CNAF

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 5

LCG INFN Tier-1 SC4 short-term plans (12)

SC4 storage and computing resources will be shared with production

bull Storage ndash Oct 2005 Data disk

bull 50 TB (Castor front-end)bull WANDisk performance 125 MBs (demonstrated SC3)

ndash Oct 2005 Tapebull 200 TB (4 9940B + 6 LTO2 drives)bull Drives shared with productionbull WANTape performance mean sustained ~ 50 MBs (SC3

throughput phase July 2005)

bull Computingndash Oct 2005 min 1200 kSI2K max1550 kSI2K (as the farm is

shared)

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 6

LCG

INFN Tier-1 SC4 short-term plans (22)

bull Networkndash Oct 2005

bull 2 x 1 GEthernet links CNAF GARR dedicated to SC traffic tofrom CERN

ndash Futurebull ongoing upgrade to 10 GEthernet CNAF GARR dedicated to SCbull Usage of policy routing at the GARR access pointbull Type of connectivity to INFN Tier-2 under discussionbull Backup link Tier-1 Tier-1 (Karlsruhe) under discussion

bull Software ndash Oct 2005

bull SRMCastor and FTSbull farm middleware LCG 26

ndash Futurebull dCache and StoRM under evaluation (for disk-only SRMs)bull Possibility to upgrade to CASTOR v2 under evaluation (end of year 2005)

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 7

LCG

What target rates for Tier-2rsquos

bull LHC Computing Grid Technical Design Reportndash Access link at 1 Gbs by the time LHC startsndash Traffic 1 Tier-1 1 Tier-2 ~ 10 Traffic Tier-0 Tier-1ndash Estimations of traffic Tier-1 Tier-2 represent an upper limitndash Tier-2 Tier-2 replications will lower loan on the Tier-1

WSF with safety factor

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 8

LCG

TDR bandwidth estimationbull LHC Computing Grid Technical Design Report (giugno 2005)

httplcgwebcernchLCGtdrbull

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 9

LCG

IN

(real data Mbs)

IN

(MC Mbs)

Total IN

(Mbs)

OUT

(MC Mbs)

Alice 319 958 75 225 394 1183 37 112

Atlas 329 986 49 147 378 1133 34 102

CMS 685 2055 0 0 685 2055 363 1089

LHCb 0 0 0 0 0 0 51 153

Expected rates (rough with_safety-factor)

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 10

LCG

Necessitarsquo vs specifiche Tier-2Esperimento 2006 (Mbs)

BGABEA in

2006 (Mbs)

BGABEA out

Necessitagrave totali

IN OUT (Mbsi)

Bari Alice ndash CMS 500 1000 3188 1191

Catania Alice 0 1000 = 1183 112

Frascati Atlas 100 200 = 1133 102

Legnaro Alice ndash CMS 450 1000 400 1000 3188 1191

Milano Atlas 100 1000 = 1133 102

Napoli Atlas 1000 1000 = 1133 102

Pisa CMS Non specificato Non specificato 2055 1089

Roma1 Atlas ndash CMS 100 2500 atlas

200 1000 cms

= 3188 1191

Torino Alice 70 1000 = 1183 112

With GigEth

(Oct 2005)

No

Yes

no

Yes

Yes

No

Yes

Yes

Yes

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 11

LCG The Worldwide LHC Computing GridLevel 1 Service Milestones

bull 31 Dec 05ndash Tier-01 high-performance network operational at CERN and 3 Tier-1s

bull 31 Dec 05 ndash 750 MBs data recording demonstration at CERN Data generator disk tape

sustaining 750 MBs for one week using the CASTOR 2 mass storage systembull Jan 06 ndash Feb 06

ndash Throughput testsbull SC4a 28 Feb 06

ndash All required software for baseline services deployed and operational at all Tier-1s and at least 20 Tier-2 sites

bull Mar 06 ndash Tier-01 high-performance network operational at CERN and 6 Tier-1s at least 3 via

GEANTbull SC4b 30 Apr 06

ndash Service Challenge 4 Set-up Set-up complete and basic service demonstrated capable of running experiment-supplied packaged test jobs data distribution tested

bull 30 Apr 06 ndash 10 GBs data recording demonstration at CERN Data generator disk tape

sustaining 10 GBs for one week using the CASTOR 2 mass storage system and the new tape equipment

bull SC4 31 May 06 Service Challenge 4 ndash Start of stable service phase including all Tier-1s and 40 Tier-2 sites

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 12

LCG The Worldwide LHC Computing GridLevel 1 Service Milestones

bull The service must be able to support the full computing model of each experiment including simulation and end-user batch analysis at Tier-2 sites

bull Criteria for successful completion of SC4 By end of service phase (end September 2006)

1 8 Tier-1s and 20 Tier-2s must have demonstrated availability better than 90 of the levels specified the WLCG MoU [adjusted for sites that do not provide a 24 hour service]

2 Success rate of standard application test jobs greater than 90 (excluding failures due to the applications environment and non-availability of sites)

3 Performance and throughput tests complete Performance goal for each Tier-1 is ndash the nominal data rate that the centre must sustain during LHC operation (200 MBs for CNAF) ndash CERN-disk network Tier-1-tape ndash Throughput test goal is to maintain for one week an average throughput of 16 GBs from disk at CERN to

tape at the Tier-1 sites All Tier-1 sites must participate

bull 30 Sept 06 ndash 16 GBs data recording demonstration at CERN Data generator1048774 disk tape sustaining 16

GBs for one week using the CASTOR mass storage systembull 30 Sept 06

ndash Initial LHC Service in operation Capable of handling the full nominal data rate between CERN and Tier-1s The service will be used for extended testing of the computing systems of the four experiments for simulation and for processing of cosmic-ray data During the following six months each site will build up to the full throughput needed for LHC operation which is twice the nominal data rate

bull 24 hour operational coverage is required at all Tier-1 centres from January 2007

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 13

LCG

Milestones in brief

bull Jan 2006 ndash throughput tests (rate targets not specified)

bull May 2006 ndash high-speed network infrastructure operational

bull By Sep 2006 ndash avg throughput of 16 GBs from disk at CERN to tape at the Tier-1

sites (nominal rate for LHC operation 200 MBs for CNAF)

bull Oct 2006 ndash Mar 2007 ndash avg throughput up to twice the nominal rate

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 14

LCG Target (nominal) data rates for Tier-1 sites and CERN in SC4

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 3

LCG

SC3 INFN Sites (12)

bull CNAFndash LCG File Catalogue (LFC)ndash File Transport Service (FTS)ndash Myproxy server and BDIIndash Storage

bull CASTOR with SRM interface

bull Interim installations of sw components from some of the experiments (not currently available from LCG) - VObox

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 4

LCG

SC3 INFN sites (22)

bull Torino (ALICE) ndash FTS LFC dCache (LCG 260)ndash Storage Space 2 TBy

bull Milano (ATLAS)ndash FTS LFC DPM 137ndash Storage space 529 TBy

bull Pisa (ATLASCMS)ndash FTS PhEDEx POOL file cat PubDB LFC DPM 135ndash Storage space 5 TBy available 5 TBy expected

bull Legnaro (CMS)ndash FTS PhEDEx Pool file cat PubDB DPM 137 (1 pool 80 Gby)ndash Storage space 4 TBy

bull Bari (ATLASCMS)ndash FTS PhEDEx POOL file cat PubDB LFC dCache DPMndash Storage space 5 TBy available

bull LHCbndash CNAF

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 5

LCG INFN Tier-1 SC4 short-term plans (12)

SC4 storage and computing resources will be shared with production

bull Storage ndash Oct 2005 Data disk

bull 50 TB (Castor front-end)bull WANDisk performance 125 MBs (demonstrated SC3)

ndash Oct 2005 Tapebull 200 TB (4 9940B + 6 LTO2 drives)bull Drives shared with productionbull WANTape performance mean sustained ~ 50 MBs (SC3

throughput phase July 2005)

bull Computingndash Oct 2005 min 1200 kSI2K max1550 kSI2K (as the farm is

shared)

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 6

LCG

INFN Tier-1 SC4 short-term plans (22)

bull Networkndash Oct 2005

bull 2 x 1 GEthernet links CNAF GARR dedicated to SC traffic tofrom CERN

ndash Futurebull ongoing upgrade to 10 GEthernet CNAF GARR dedicated to SCbull Usage of policy routing at the GARR access pointbull Type of connectivity to INFN Tier-2 under discussionbull Backup link Tier-1 Tier-1 (Karlsruhe) under discussion

bull Software ndash Oct 2005

bull SRMCastor and FTSbull farm middleware LCG 26

ndash Futurebull dCache and StoRM under evaluation (for disk-only SRMs)bull Possibility to upgrade to CASTOR v2 under evaluation (end of year 2005)

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 7

LCG

What target rates for Tier-2rsquos

bull LHC Computing Grid Technical Design Reportndash Access link at 1 Gbs by the time LHC startsndash Traffic 1 Tier-1 1 Tier-2 ~ 10 Traffic Tier-0 Tier-1ndash Estimations of traffic Tier-1 Tier-2 represent an upper limitndash Tier-2 Tier-2 replications will lower loan on the Tier-1

WSF with safety factor

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 8

LCG

TDR bandwidth estimationbull LHC Computing Grid Technical Design Report (giugno 2005)

httplcgwebcernchLCGtdrbull

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 9

LCG

IN

(real data Mbs)

IN

(MC Mbs)

Total IN

(Mbs)

OUT

(MC Mbs)

Alice 319 958 75 225 394 1183 37 112

Atlas 329 986 49 147 378 1133 34 102

CMS 685 2055 0 0 685 2055 363 1089

LHCb 0 0 0 0 0 0 51 153

Expected rates (rough with_safety-factor)

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 10

LCG

Necessitarsquo vs specifiche Tier-2Esperimento 2006 (Mbs)

BGABEA in

2006 (Mbs)

BGABEA out

Necessitagrave totali

IN OUT (Mbsi)

Bari Alice ndash CMS 500 1000 3188 1191

Catania Alice 0 1000 = 1183 112

Frascati Atlas 100 200 = 1133 102

Legnaro Alice ndash CMS 450 1000 400 1000 3188 1191

Milano Atlas 100 1000 = 1133 102

Napoli Atlas 1000 1000 = 1133 102

Pisa CMS Non specificato Non specificato 2055 1089

Roma1 Atlas ndash CMS 100 2500 atlas

200 1000 cms

= 3188 1191

Torino Alice 70 1000 = 1183 112

With GigEth

(Oct 2005)

No

Yes

no

Yes

Yes

No

Yes

Yes

Yes

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 11

LCG The Worldwide LHC Computing GridLevel 1 Service Milestones

bull 31 Dec 05ndash Tier-01 high-performance network operational at CERN and 3 Tier-1s

bull 31 Dec 05 ndash 750 MBs data recording demonstration at CERN Data generator disk tape

sustaining 750 MBs for one week using the CASTOR 2 mass storage systembull Jan 06 ndash Feb 06

ndash Throughput testsbull SC4a 28 Feb 06

ndash All required software for baseline services deployed and operational at all Tier-1s and at least 20 Tier-2 sites

bull Mar 06 ndash Tier-01 high-performance network operational at CERN and 6 Tier-1s at least 3 via

GEANTbull SC4b 30 Apr 06

ndash Service Challenge 4 Set-up Set-up complete and basic service demonstrated capable of running experiment-supplied packaged test jobs data distribution tested

bull 30 Apr 06 ndash 10 GBs data recording demonstration at CERN Data generator disk tape

sustaining 10 GBs for one week using the CASTOR 2 mass storage system and the new tape equipment

bull SC4 31 May 06 Service Challenge 4 ndash Start of stable service phase including all Tier-1s and 40 Tier-2 sites

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 12

LCG The Worldwide LHC Computing GridLevel 1 Service Milestones

bull The service must be able to support the full computing model of each experiment including simulation and end-user batch analysis at Tier-2 sites

bull Criteria for successful completion of SC4 By end of service phase (end September 2006)

1 8 Tier-1s and 20 Tier-2s must have demonstrated availability better than 90 of the levels specified the WLCG MoU [adjusted for sites that do not provide a 24 hour service]

2 Success rate of standard application test jobs greater than 90 (excluding failures due to the applications environment and non-availability of sites)

3 Performance and throughput tests complete Performance goal for each Tier-1 is ndash the nominal data rate that the centre must sustain during LHC operation (200 MBs for CNAF) ndash CERN-disk network Tier-1-tape ndash Throughput test goal is to maintain for one week an average throughput of 16 GBs from disk at CERN to

tape at the Tier-1 sites All Tier-1 sites must participate

bull 30 Sept 06 ndash 16 GBs data recording demonstration at CERN Data generator1048774 disk tape sustaining 16

GBs for one week using the CASTOR mass storage systembull 30 Sept 06

ndash Initial LHC Service in operation Capable of handling the full nominal data rate between CERN and Tier-1s The service will be used for extended testing of the computing systems of the four experiments for simulation and for processing of cosmic-ray data During the following six months each site will build up to the full throughput needed for LHC operation which is twice the nominal data rate

bull 24 hour operational coverage is required at all Tier-1 centres from January 2007

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 13

LCG

Milestones in brief

bull Jan 2006 ndash throughput tests (rate targets not specified)

bull May 2006 ndash high-speed network infrastructure operational

bull By Sep 2006 ndash avg throughput of 16 GBs from disk at CERN to tape at the Tier-1

sites (nominal rate for LHC operation 200 MBs for CNAF)

bull Oct 2006 ndash Mar 2007 ndash avg throughput up to twice the nominal rate

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 14

LCG Target (nominal) data rates for Tier-1 sites and CERN in SC4

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 4

LCG

SC3 INFN sites (22)

bull Torino (ALICE) ndash FTS LFC dCache (LCG 260)ndash Storage Space 2 TBy

bull Milano (ATLAS)ndash FTS LFC DPM 137ndash Storage space 529 TBy

bull Pisa (ATLASCMS)ndash FTS PhEDEx POOL file cat PubDB LFC DPM 135ndash Storage space 5 TBy available 5 TBy expected

bull Legnaro (CMS)ndash FTS PhEDEx Pool file cat PubDB DPM 137 (1 pool 80 Gby)ndash Storage space 4 TBy

bull Bari (ATLASCMS)ndash FTS PhEDEx POOL file cat PubDB LFC dCache DPMndash Storage space 5 TBy available

bull LHCbndash CNAF

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 5

LCG INFN Tier-1 SC4 short-term plans (12)

SC4 storage and computing resources will be shared with production

bull Storage ndash Oct 2005 Data disk

bull 50 TB (Castor front-end)bull WANDisk performance 125 MBs (demonstrated SC3)

ndash Oct 2005 Tapebull 200 TB (4 9940B + 6 LTO2 drives)bull Drives shared with productionbull WANTape performance mean sustained ~ 50 MBs (SC3

throughput phase July 2005)

bull Computingndash Oct 2005 min 1200 kSI2K max1550 kSI2K (as the farm is

shared)

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 6

LCG

INFN Tier-1 SC4 short-term plans (22)

bull Networkndash Oct 2005

bull 2 x 1 GEthernet links CNAF GARR dedicated to SC traffic tofrom CERN

ndash Futurebull ongoing upgrade to 10 GEthernet CNAF GARR dedicated to SCbull Usage of policy routing at the GARR access pointbull Type of connectivity to INFN Tier-2 under discussionbull Backup link Tier-1 Tier-1 (Karlsruhe) under discussion

bull Software ndash Oct 2005

bull SRMCastor and FTSbull farm middleware LCG 26

ndash Futurebull dCache and StoRM under evaluation (for disk-only SRMs)bull Possibility to upgrade to CASTOR v2 under evaluation (end of year 2005)

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 7

LCG

What target rates for Tier-2rsquos

bull LHC Computing Grid Technical Design Reportndash Access link at 1 Gbs by the time LHC startsndash Traffic 1 Tier-1 1 Tier-2 ~ 10 Traffic Tier-0 Tier-1ndash Estimations of traffic Tier-1 Tier-2 represent an upper limitndash Tier-2 Tier-2 replications will lower loan on the Tier-1

WSF with safety factor

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 8

LCG

TDR bandwidth estimationbull LHC Computing Grid Technical Design Report (giugno 2005)

httplcgwebcernchLCGtdrbull

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 9

LCG

IN

(real data Mbs)

IN

(MC Mbs)

Total IN

(Mbs)

OUT

(MC Mbs)

Alice 319 958 75 225 394 1183 37 112

Atlas 329 986 49 147 378 1133 34 102

CMS 685 2055 0 0 685 2055 363 1089

LHCb 0 0 0 0 0 0 51 153

Expected rates (rough with_safety-factor)

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 10

LCG

Necessitarsquo vs specifiche Tier-2Esperimento 2006 (Mbs)

BGABEA in

2006 (Mbs)

BGABEA out

Necessitagrave totali

IN OUT (Mbsi)

Bari Alice ndash CMS 500 1000 3188 1191

Catania Alice 0 1000 = 1183 112

Frascati Atlas 100 200 = 1133 102

Legnaro Alice ndash CMS 450 1000 400 1000 3188 1191

Milano Atlas 100 1000 = 1133 102

Napoli Atlas 1000 1000 = 1133 102

Pisa CMS Non specificato Non specificato 2055 1089

Roma1 Atlas ndash CMS 100 2500 atlas

200 1000 cms

= 3188 1191

Torino Alice 70 1000 = 1183 112

With GigEth

(Oct 2005)

No

Yes

no

Yes

Yes

No

Yes

Yes

Yes

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 11

LCG The Worldwide LHC Computing GridLevel 1 Service Milestones

bull 31 Dec 05ndash Tier-01 high-performance network operational at CERN and 3 Tier-1s

bull 31 Dec 05 ndash 750 MBs data recording demonstration at CERN Data generator disk tape

sustaining 750 MBs for one week using the CASTOR 2 mass storage systembull Jan 06 ndash Feb 06

ndash Throughput testsbull SC4a 28 Feb 06

ndash All required software for baseline services deployed and operational at all Tier-1s and at least 20 Tier-2 sites

bull Mar 06 ndash Tier-01 high-performance network operational at CERN and 6 Tier-1s at least 3 via

GEANTbull SC4b 30 Apr 06

ndash Service Challenge 4 Set-up Set-up complete and basic service demonstrated capable of running experiment-supplied packaged test jobs data distribution tested

bull 30 Apr 06 ndash 10 GBs data recording demonstration at CERN Data generator disk tape

sustaining 10 GBs for one week using the CASTOR 2 mass storage system and the new tape equipment

bull SC4 31 May 06 Service Challenge 4 ndash Start of stable service phase including all Tier-1s and 40 Tier-2 sites

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 12

LCG The Worldwide LHC Computing GridLevel 1 Service Milestones

bull The service must be able to support the full computing model of each experiment including simulation and end-user batch analysis at Tier-2 sites

bull Criteria for successful completion of SC4 By end of service phase (end September 2006)

1 8 Tier-1s and 20 Tier-2s must have demonstrated availability better than 90 of the levels specified the WLCG MoU [adjusted for sites that do not provide a 24 hour service]

2 Success rate of standard application test jobs greater than 90 (excluding failures due to the applications environment and non-availability of sites)

3 Performance and throughput tests complete Performance goal for each Tier-1 is ndash the nominal data rate that the centre must sustain during LHC operation (200 MBs for CNAF) ndash CERN-disk network Tier-1-tape ndash Throughput test goal is to maintain for one week an average throughput of 16 GBs from disk at CERN to

tape at the Tier-1 sites All Tier-1 sites must participate

bull 30 Sept 06 ndash 16 GBs data recording demonstration at CERN Data generator1048774 disk tape sustaining 16

GBs for one week using the CASTOR mass storage systembull 30 Sept 06

ndash Initial LHC Service in operation Capable of handling the full nominal data rate between CERN and Tier-1s The service will be used for extended testing of the computing systems of the four experiments for simulation and for processing of cosmic-ray data During the following six months each site will build up to the full throughput needed for LHC operation which is twice the nominal data rate

bull 24 hour operational coverage is required at all Tier-1 centres from January 2007

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 13

LCG

Milestones in brief

bull Jan 2006 ndash throughput tests (rate targets not specified)

bull May 2006 ndash high-speed network infrastructure operational

bull By Sep 2006 ndash avg throughput of 16 GBs from disk at CERN to tape at the Tier-1

sites (nominal rate for LHC operation 200 MBs for CNAF)

bull Oct 2006 ndash Mar 2007 ndash avg throughput up to twice the nominal rate

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 14

LCG Target (nominal) data rates for Tier-1 sites and CERN in SC4

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 5

LCG INFN Tier-1 SC4 short-term plans (12)

SC4 storage and computing resources will be shared with production

bull Storage ndash Oct 2005 Data disk

bull 50 TB (Castor front-end)bull WANDisk performance 125 MBs (demonstrated SC3)

ndash Oct 2005 Tapebull 200 TB (4 9940B + 6 LTO2 drives)bull Drives shared with productionbull WANTape performance mean sustained ~ 50 MBs (SC3

throughput phase July 2005)

bull Computingndash Oct 2005 min 1200 kSI2K max1550 kSI2K (as the farm is

shared)

INFN Tier-1: SC4 short-term plans (2/2)

• Network
  – Oct 2005
    • 2 × 1 Gigabit Ethernet links between CNAF and GARR, dedicated to SC traffic to/from CERN
  – Future
    • Ongoing upgrade to 10 Gigabit Ethernet between CNAF and GARR, dedicated to SC
    • Usage of policy routing at the GARR access point
    • Type of connectivity to INFN Tier-2s under discussion
    • Backup link Tier-1 to Tier-1 (Karlsruhe) under discussion

• Software
  – Oct 2005
    • SRM/Castor and FTS
    • Farm middleware: LCG 2.6
  – Future
    • dCache and StoRM under evaluation (for disk-only SRMs)
    • Possibility to upgrade to CASTOR v2 under evaluation (end of year 2005)
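As a rough sanity check on the link sizes above, the following sketch converts the link capacities to MB/s and compares them with the 200 MB/s nominal rate quoted for CNAF in the milestone slides, and with twice that rate. It assumes decimal units (1 Gb/s = 125 MB/s) and ignores protocol overhead. The function and variable names are illustrative only.

```python
# Rough headroom check: link capacity vs. required throughput.
# Assumptions (not from the slides): decimal units, no protocol overhead.

def gbps_to_mbytes_per_s(gbps: float) -> float:
    """Convert a link speed in Gb/s to MB/s (1 Gb/s = 1000 Mb/s = 125 MB/s)."""
    return gbps * 1000 / 8


def headroom(capacity_mb_s: float, required_mb_s: float) -> float:
    """Fraction of capacity left unused at the required rate (negative if the link is too small)."""
    return 1 - required_mb_s / capacity_mb_s


NOMINAL_CNAF_MB_S = 200  # nominal rate for CNAF, as quoted in the milestones

links_gbps = {
    "2 x 1 GbE (Oct 2005)": 2 * 1,
    "10 GbE (planned upgrade)": 10,
}

for name, gbps in links_gbps.items():
    capacity = gbps_to_mbytes_per_s(gbps)
    for label, rate in (("nominal", NOMINAL_CNAF_MB_S),
                        ("2 x nominal", 2 * NOMINAL_CNAF_MB_S)):
        print(f"{name}: capacity {capacity:.0f} MB/s, "
              f"{label} {rate} MB/s, headroom {headroom(capacity, rate):+.0%}")
```

Under these assumptions the two 1 Gigabit Ethernet links cover the nominal rate with about 20% to spare but not twice the nominal rate, while the 10 Gigabit Ethernet upgrade covers both.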

What target rates for Tier-2s?

• LHC Computing Grid Technical Design Report
  – Access link at 1 Gb/s by the time LHC starts
  – Traffic between 1 Tier-1 and 1 Tier-2 ~ 10% of the traffic between Tier-0 and Tier-1
  – Estimations of traffic between Tier-1 and Tier-2 represent an upper limit
  – Tier-2 to Tier-2 replications will lower the load on the Tier-1

WSF: with safety factor

TDR bandwidth estimation

• LHC Computing Grid Technical Design Report (June 2005)
  http://lcg.web.cern.ch/LCG/tdr/

Expected rates in Mb/s (rough / with safety factor)

Experiment   IN (real data)   IN (MC)       Total IN        OUT (MC)
Alice        31.9 / 95.8      7.5 / 22.5    39.4 / 118.3    3.7 / 11.2
Atlas        32.9 / 98.6      4.9 / 14.7    37.8 / 113.3    3.4 / 10.2
CMS          68.5 / 205.5     0 / 0         68.5 / 205.5    36.3 / 108.9
LHCb         0 / 0            0 / 0         0 / 0           5.1 / 15.3
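The figures in this table can be cross-checked with a few lines of arithmetic. The sketch below reads the flattened numbers with one decimal place (an assumption about the original slide), verifies that Total IN is the sum of real-data IN and MC IN, and prints the ratio between the with-safety-factor and rough values. That ratio is read off the numbers and is not stated on the slide. All names are illustrative.

```python
# Consistency check on the Tier-2 bandwidth estimates (values in Mb/s).
# Decimal places are an assumption about the original slide.
# Each entry is a (rough, with_safety_factor) pair.
rates = {
    "Alice": {"in_real": (31.9, 95.8), "in_mc": (7.5, 22.5),
              "in_total": (39.4, 118.3), "out_mc": (3.7, 11.2)},
    "Atlas": {"in_real": (32.9, 98.6), "in_mc": (4.9, 14.7),
              "in_total": (37.8, 113.3), "out_mc": (3.4, 10.2)},
    "CMS": {"in_real": (68.5, 205.5), "in_mc": (0.0, 0.0),
            "in_total": (68.5, 205.5), "out_mc": (36.3, 108.9)},
    "LHCb": {"in_real": (0.0, 0.0), "in_mc": (0.0, 0.0),
             "in_total": (0.0, 0.0), "out_mc": (5.1, 15.3)},
}


def total_in_matches(row, tol=0.05):
    """True if Total IN equals real-data IN plus MC IN for both figures of the pair."""
    return all(
        abs(row["in_real"][i] + row["in_mc"][i] - row["in_total"][i]) <= tol
        for i in (0, 1)
    )


def safety_ratios(row):
    """Ratio with_safety_factor / rough for every non-zero entry of a row."""
    return [wsf / rough for rough, wsf in row.values() if rough > 0]


for experiment, row in rates.items():
    ratios = [round(r, 2) for r in safety_ratios(row)]
    print(experiment, "total IN consistent:", total_in_matches(row), "ratios:", ratios)
```

With these values every row is internally consistent, and each with-safety-factor figure is about three times the rough one.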

Needs vs. specifications for Tier-2s

Site      Experiment    BGA/BEA in, 2006 (Mb/s)                BGA/BEA out, 2006 (Mb/s)   Total needs IN / OUT (Mb/s)   With GigEth (Oct 2005)
Bari      Alice – CMS   500 / 1000                                                        318.8 / 119.1                 No
Catania   Alice         0 / 1000                               =                          118.3 / 11.2                  Yes
Frascati  Atlas         100 / 200                              =                          113.3 / 10.2                  No
Legnaro   Alice – CMS   450 / 1000                             400 / 1000                 318.8 / 119.1                 Yes
Milano    Atlas         100 / 1000                             =                          113.3 / 10.2                  Yes
Napoli    Atlas         1000 / 1000                            =                          113.3 / 10.2                  No
Pisa      CMS           Not specified                          Not specified              205.5 / 108.9                 Yes
Roma1     Atlas – CMS   100 / 2500 (Atlas), 200 / 1000 (CMS)   =                          318.8 / 119.1                 Yes
Torino    Alice         70 / 1000                              =                          118.3 / 11.2                  Yes
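The total needs per site appear to be the sum of the with-safety-factor estimates of the experiments a site hosts. The sketch below makes that assumption explicit, reading the figures with one decimal place (also an assumption). For single-experiment sites and for Roma1 (Atlas and CMS) the sums reproduce the listed 118.3 / 11.2, 113.3 / 10.2, 205.5 / 108.9 and 318.8 / 119.1 Mb/s. For the Alice and CMS sites the same rule gives 323.8 / 120.1 Mb/s, slightly above the 318.8 / 119.1 listed for Bari and Legnaro. The slide does not explain the difference.

```python
# Per-experiment needs with safety factor, as (IN, OUT) in Mb/s.
# Decimal places are an assumption about the original slide.
WSF_NEEDS = {
    "Alice": (118.3, 11.2),
    "Atlas": (113.3, 10.2),
    "CMS": (205.5, 108.9),
}


def site_needs(experiments):
    """Sum the (IN, OUT) needs of the experiments hosted at a site, rounded to 0.1 Mb/s.

    Assumes needs simply add up across experiments (not stated on the slide).
    """
    total_in = sum(WSF_NEEDS[e][0] for e in experiments)
    total_out = sum(WSF_NEEDS[e][1] for e in experiments)
    return round(total_in, 1), round(total_out, 1)


for site, experiments in {
    "Torino": ["Alice"],
    "Roma1": ["Atlas", "CMS"],
    "Bari": ["Alice", "CMS"],
}.items():
    needs_in, needs_out = site_needs(experiments)
    print(f"{site}: IN {needs_in} Mb/s, OUT {needs_out} Mb/s")
```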

The Worldwide LHC Computing Grid: Level 1 Service Milestones

• 31 Dec 05
  – Tier-0/1 high-performance network operational at CERN and 3 Tier-1s
• 31 Dec 05
  – 750 MB/s data recording demonstration at CERN: data generator → disk → tape, sustaining 750 MB/s for one week using the CASTOR 2 mass storage system
• Jan 06 – Feb 06
  – Throughput tests
• SC4a, 28 Feb 06
  – All required software for baseline services deployed and operational at all Tier-1s and at least 20 Tier-2 sites
• Mar 06
  – Tier-0/1 high-performance network operational at CERN and 6 Tier-1s, at least 3 via GEANT
• SC4b, 30 Apr 06
  – Service Challenge 4 set-up: set-up complete and basic service demonstrated, capable of running experiment-supplied packaged test jobs; data distribution tested
• 30 Apr 06
  – 1.0 GB/s data recording demonstration at CERN: data generator → disk → tape, sustaining 1.0 GB/s for one week using the CASTOR 2 mass storage system and the new tape equipment
• SC4, 31 May 06
  – Service Challenge 4: start of stable service phase, including all Tier-1s and 40 Tier-2 sites

The Worldwide LHC Computing Grid: Level 1 Service Milestones

• The service must be able to support the full computing model of each experiment, including simulation and end-user batch analysis at Tier-2 sites

• Criteria for successful completion of SC4, by end of service phase (end September 2006):
  1. 8 Tier-1s and 20 Tier-2s must have demonstrated availability better than 90% of the levels specified in the WLCG MoU [adjusted for sites that do not provide a 24-hour service]
  2. Success rate of standard application test jobs greater than 90% (excluding failures due to the applications environment and non-availability of sites)
  3. Performance and throughput tests complete
     – Performance goal for each Tier-1 is the nominal data rate that the centre must sustain during LHC operation (200 MB/s for CNAF): CERN disk → network → Tier-1 tape
     – Throughput test goal is to maintain for one week an average throughput of 1.6 GB/s from disk at CERN to tape at the Tier-1 sites. All Tier-1 sites must participate

• 30 Sept 06
  – 1.6 GB/s data recording demonstration at CERN: data generator → disk → tape, sustaining 1.6 GB/s for one week using the CASTOR mass storage system
• 30 Sept 06
  – Initial LHC Service in operation: capable of handling the full nominal data rate between CERN and Tier-1s. The service will be used for extended testing of the computing systems of the four experiments, for simulation and for processing of cosmic-ray data. During the following six months each site will build up to the full throughput needed for LHC operation, which is twice the nominal data rate

• 24-hour operational coverage is required at all Tier-1 centres from January 2007

Milestones in brief

• Jan 2006: throughput tests (rate targets not specified)
• May 2006: high-speed network infrastructure operational
• By Sep 2006: avg throughput of 1.6 GB/s from disk at CERN to tape at the Tier-1 sites (nominal rate for LHC operation: 200 MB/s for CNAF)
• Oct 2006 – Mar 2007: avg throughput up to twice the nominal rate
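To give a feel for what these sustained rates mean in data volume, the sketch below computes how much data is moved when a rate is held for one week. It assumes decimal units (1 TB = 10^6 MB) and reads the throughput goal as 1.6 GB/s and the CNAF nominal rate as 200 MB/s. The names are illustrative only.

```python
# Data volume moved when a rate is sustained for a given period.
# Assumption (not from the slides): decimal units, 1 TB = 1e6 MB.

SECONDS_PER_DAY = 86_400


def volume_tb(rate_mb_s: float, days: float) -> float:
    """Volume in TB transferred at rate_mb_s (MB/s) sustained for the given number of days."""
    return rate_mb_s * SECONDS_PER_DAY * days / 1e6


targets_mb_s = {
    "CERN disk to Tier-1 tape, all sites (throughput goal)": 1600,
    "CNAF nominal": 200,
    "CNAF twice nominal": 400,
}

for name, rate in targets_mb_s.items():
    print(f"{name}: {rate} MB/s for 7 days = {volume_tb(rate, 7):.0f} TB")
```

Under these assumptions, one week at the 200 MB/s nominal rate corresponds to roughly 121 TB arriving at CNAF, which is more than half of the 200 TB of tape listed for October 2005.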

Target (nominal) data rates for Tier-1 sites and CERN in SC4






Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 11

LCG The Worldwide LHC Computing GridLevel 1 Service Milestones

bull 31 Dec 05ndash Tier-01 high-performance network operational at CERN and 3 Tier-1s

bull 31 Dec 05 ndash 750 MBs data recording demonstration at CERN Data generator disk tape

sustaining 750 MBs for one week using the CASTOR 2 mass storage systembull Jan 06 ndash Feb 06

ndash Throughput testsbull SC4a 28 Feb 06

ndash All required software for baseline services deployed and operational at all Tier-1s and at least 20 Tier-2 sites

bull Mar 06 ndash Tier-01 high-performance network operational at CERN and 6 Tier-1s at least 3 via

GEANTbull SC4b 30 Apr 06

ndash Service Challenge 4 Set-up Set-up complete and basic service demonstrated capable of running experiment-supplied packaged test jobs data distribution tested

bull 30 Apr 06 ndash 10 GBs data recording demonstration at CERN Data generator disk tape

sustaining 10 GBs for one week using the CASTOR 2 mass storage system and the new tape equipment

bull SC4 31 May 06 Service Challenge 4 ndash Start of stable service phase including all Tier-1s and 40 Tier-2 sites

Service Challenge Phase 4 Piano di attivitagrave e impatto sulla infrastruttura di rete 12

LCG The Worldwide LHC Computing GridLevel 1 Service Milestones

• The service must be able to support the full computing model of each experiment, including simulation and end-user batch analysis at Tier-2 sites

• Criteria for successful completion of SC4, by end of service phase (end September 2006):

1. 8 Tier-1s and 20 Tier-2s must have demonstrated availability better than 90% of the levels specified in the WLCG MoU [adjusted for sites that do not provide a 24 hour service]

2. Success rate of standard application test jobs greater than 90% (excluding failures due to the applications environment and non-availability of sites)

3. Performance and throughput tests complete. The performance goal for each Tier-1 is the nominal data rate that the centre must sustain during LHC operation (200 MB/s for CNAF), measured along the path CERN disk → network → Tier-1 tape. The throughput test goal is to maintain for one week an average throughput of 1.6 GB/s from disk at CERN to tape at the Tier-1 sites. All Tier-1 sites must participate.

• 30 Sept 06: 1.6 GB/s data recording demonstration at CERN (data generator → disk → tape), sustaining 1.6 GB/s for one week using the CASTOR mass storage system

• 30 Sept 06: Initial LHC Service in operation, capable of handling the full nominal data rate between CERN and Tier-1s. The service will be used for extended testing of the computing systems of the four experiments, for simulation, and for processing of cosmic-ray data. During the following six months each site will build up to the full throughput needed for LHC operation, which is twice the nominal data rate

• 24 hour operational coverage is required at all Tier-1 centres from January 2007
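The first two completion criteria above are simple threshold checks, and can be sketched as follows. This is only an illustration: it reads both thresholds as 90%, the `SiteResult` type and `sc4_criteria_met` function are hypothetical names, and the sample sites are made-up inputs rather than measured results. Criterion 3 (the throughput tests) is not modelled.

```python
# Sketch of SC4 completion criteria 1 and 2 as threshold checks.
# All names and sample values are hypothetical, for illustration only.
from dataclasses import dataclass
from typing import Iterable


@dataclass
class SiteResult:
    name: str
    tier: int            # 1 or 2
    availability: float  # measured availability as a fraction of the MoU level


def sc4_criteria_met(
    sites: Iterable[SiteResult],
    job_success_rate: float,
    min_tier1: int = 8,
    min_tier2: int = 20,
    threshold: float = 0.90,
) -> bool:
    """Check criterion 1 (site availability) and criterion 2 (job success rate)."""
    sites = list(sites)
    tier1_ok = sum(1 for s in sites if s.tier == 1 and s.availability > threshold)
    tier2_ok = sum(1 for s in sites if s.tier == 2 and s.availability > threshold)
    return (
        tier1_ok >= min_tier1
        and tier2_ok >= min_tier2
        and job_success_rate > threshold
    )


# Made-up example: 8 Tier-1s and 20 Tier-2s, all at 95% of the MoU level.
example_sites = [SiteResult(f"T1-{i}", 1, 0.95) for i in range(8)]
example_sites += [SiteResult(f"T2-{i}", 2, 0.95) for i in range(20)]

passes = sc4_criteria_met(example_sites, job_success_rate=0.93)
fails_on_jobs = sc4_criteria_met(example_sites, job_success_rate=0.85)
```

With the made-up inputs, the first call satisfies both criteria and the second fails only on the job success rate.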
