
JOURNAL OF LATEX CLASS FILES, VOL. 14, NO. 8, AUGUST 2015 1

Parallware Trainer: Interactive Tool for Experiential Learning of Parallel Programming using OpenMP and OpenACC

Manuel Arenaz, Sergio Ortega, Ernesto Guerrero, and Fernanda Foertter

Abstract: STEM education plays a key role in the sustained growth and stability of the US economy and of economies worldwide. There is currently a shortage of skilled STEM workers, and that gap is expected to widen considerably over the next decade. It is therefore key to broaden the audience of STEM people trained in parallel programming for parallel architectures such as Intel Xeon, IBM Power, NVIDIA GPU and Intel Xeon Phi. In this regard, the OpenMP 4.5 and OpenACC 2.5 standards offer pragma-based parallel programming paradigms that promise performance portability and higher productivity.

This paper presents Parallware Trainer, a new interactive tool for high-productivity STEM education and training in parallel programming using OpenMP 4.5 and OpenACC 2.5. It enables experiential learning by providing an interactive, real-time GUI with editor capabilities to assist in the design, implementation and benchmarking of OpenMP/OpenACC-enabled parallel code. We envision Parallware Trainer as a key enabler for STEM education, from the undergraduate to the PhD level, in computer science, mathematics, physics and other disciplines. This paper also describes a success story resulting from a GPU Hackathon organized at the Supercomputing Center of Galicia (CESGA). We present the progress of a two-person team from the EDANYA group learning how to parallelize a tsunami prediction simulation code used by the National Oceanic and Atmospheric Administration (NOAA).

Index Terms: STEM education, experiential learning, parallel programming, OpenMP 4.5, OpenACC 2.5, Parallware Trainer.

I. INTRODUCTION

HPC education and training is organized today mostly around courses, workshops and hackathons. As shown in the pyramid of Fig. 1, in courses the participants passively listen to a lecture or presentation, and apply parallel programming concepts to simple example codes in hands-on sessions. Workshops increase learning retention through interactive training activities among the participants. Hackathons are events where people come together to solve problems, working in groups of about 2-5 individuals who take out their laptops and dive into their own problems. Hackathons enable experiential learning, as participants work with a coach and learn through immediate practice in the optimization and parallelization of their own code. However, hackathons are small, expensive events that train only a few people each year.

M. Arenaz is with the Department of Computer Engineering at the University of A Coruña, and with Appentra Solutions, Spain.

S. Ortega and E. Guerrero are with the EDANYA group, University of Malaga, Spain.

F. Foertter is with Oak Ridge National Laboratory (ORNL).

Received September 8, 2017; revised September 8, 2017.

Fig. 1. The HPC education and training pyramid.

The view of the parallel computing landscape from Berkeley [5] addresses the so-called parallel challenge, described as "Writing programs that scale with increasing numbers of cores should be as easy as writing programs for sequential computers". There, a Parallel Bridge illustrates that Software is the main problem in bridging the gap between user Applications and the parallel Hardware industry. Today, the HPC field still widely recognizes software as its number one pain point. This paper addresses this problem by extending the parallel bridge as shown in Fig. 2. The new tower, Code, highlights that the features of the code written by the programmer directly impact the productivity of the parallelization process. For this reason, best practices in parallel programming typically recommend, for example, using stride-1 memory accesses and preferring structures-of-arrays to arrays-of-structures.
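As a minimal illustration of these two recommendations, the sketch below sums one field of a set of particles stored either as an array of structures (AoS) or as a structure of arrays (SoA). The particle fields and function names are hypothetical and are not taken from Parallware Trainer. In the AoS loop, consecutive x values are one whole structure apart (typically 32 bytes here); in the SoA loop they are adjacent, so the access is stride-1 and easier for compilers to vectorize.

```c
#include <stddef.h>

/* Hypothetical particle layouts, used only for illustration. */

/* Array-of-structures (AoS): the fields of one particle are contiguous. */
struct particle_aos {
    double x, y, z, mass;
};

/* Structure-of-arrays (SoA): each field is its own contiguous array. */
struct particles_soa {
    double *x, *y, *z, *mass;
    size_t n;
};

/* AoS: consecutive p[i].x are sizeof(struct particle_aos) bytes apart (non-unit stride). */
double sum_x_aos(const struct particle_aos *p, size_t n) {
    double s = 0.0;
    for (size_t i = 0; i < n; i++)
        s += p[i].x;
    return s;
}

/* SoA: consecutive x[i] are adjacent in memory (stride-1 access). */
double sum_x_soa(const struct particles_soa *p) {
    double s = 0.0;
    for (size_t i = 0; i < p->n; i++)
        s += p->x[i];
    return s;
}
```

Both functions compute the same value; only the memory access pattern differs.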

The main contribution of this paper is Parallware Trainer [1], a new commercial software tool that aims to bring the benefits of workshops and hackathons to a broader audience of STEM people. It is a new interactive, real-time GUI for high-productivity HPC education and training that enables self-learning of best practices in parallel programming with OpenMP 4.5 and OpenACC 2.5. Powered by the hierarchical classification engine of Parallware technology [4], it discovers parallel patterns in sequential code, provides a ranking of parallelization strategies, and generates pragma-based parallel source code using the OpenMP 4.5 and OpenACC 2.5 standards. The tool also comes pre-loaded with sample codes that cover the most important parallel patterns found in the CORAL Benchmarks [6] and the NAS Parallel Benchmarks [7]. As shown in Fig. 2, programmers interact with compilers through Parallware's parallel patterns, which hide the dependence-analysis terminology that scientists and engineers find difficult to understand. Overall, Parallware Trainer reduces the costs of HPC training and education, the main advantages being: (1) reduced learning effort and increased learning retention through learn-by-doing, (2) 24x7 availability, and (3) a broader audience of STEM people who are not located near HPC training sites.

Fig. 2. An extension of the parallel bridge of Berkeley's view of the parallel computing landscape. The new tower Code, inserted between Applications and parallel Hardware, highlights the impact of the program code on the productivity of the parallelization process.

The rest of the paper is organized as follows. Section II discusses related work. Section III presents the new tool Parallware Trainer, describing its GUI layout and technical features, and sketches the technological roadmap currently under development to find the product-market fit. Section IV describes how staff of the EDANYA group used Parallware Trainer to learn how to parallelize a simulation code that helps NOAA predict tsunamis. Finally, Section V presents conclusions and future work.

II. RELATED WORK

Few tools are dedicated to training in parallel programming with OpenMP and OpenACC. HPC centers organize courses and workshops [2] that typically teach the most relevant parallel programming concepts using step-by-step instructions and hands-on labs. Production-level compilers (e.g., Intel ICC, GNU GCC, NVIDIA PGI) are of little practical help in understanding the technical reasons behind the success or failure of the parallelization of a code. Compiler messages are written using the notation and terminology of classical dependence analysis theory, a mathematical approach to discovering parallelism in sequential codes. Thus, compiler messages typically report failures to discover parallelism by pointing to source code instructions that may introduce true, output or anti-dependences during parallel execution. In contrast, Parallware uses a new computational approach that consists of a hierarchical classification scheme for dependence analysis. Parallware Trainer reports the algorithmic features found in the code in terms of parallel patterns [4], such as fully parallel loops, parallel scalar reductions and parallel sparse reductions. In addition, it provides a ranking of several parallelization strategies applicable to the code, and allows the user to generate, study and run implementations of those strategies. These features are not typically available in production-level compilers.
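The three pattern names can be made concrete with minimal C loops. The sketch below is our own illustration: the function names are hypothetical, and the pragmas are hand-written OpenMP rather than Parallware output. A fully parallel loop (Parallel Forall) writes a distinct element per iteration; a Parallel Scalar Reduction combines all iterations into one scalar with an associative operator; and a Parallel Sparse Reduction updates array elements selected through an index array, so different iterations may hit the same element. Compiled without OpenMP, the pragmas are ignored and the loops run sequentially.

```c
#include <stddef.h>

/* Illustrative kernels with hypothetical names; not generated by Parallware. */

/* Parallel Forall: iteration i writes only y[i]; no cross-iteration dependences. */
void scale_forall(size_t n, double a, const double *x, double *y) {
    #pragma omp parallel for
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i];
}

/* Parallel Scalar Reduction: every iteration updates the same scalar with '+'. */
double sum_scalar_reduction(size_t n, const double *x) {
    double s = 0.0;
    #pragma omp parallel for reduction(+ : s)
    for (size_t i = 0; i < n; i++)
        s += x[i];
    return s;
}

/* Parallel Sparse Reduction: the updated element depends on bin[i], so two
   iterations may update the same count[] entry; each update is made atomic. */
void histogram_sparse_reduction(size_t n, const int *bin, int *count) {
    #pragma omp parallel for
    for (size_t i = 0; i < n; i++) {
        #pragma omp atomic update
        count[bin[i]] += 1;
    }
}
```

A dependence-based compiler report would describe the last loop in terms of a possible output/true dependence on count; the pattern view names it directly as a sparse reduction.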

Web-based HPC training [3] typically includes webinar series, video tutorials and code samples. However, these HPC training environments do not enable experiential learning because they provide no feedback about the problems encountered when the concepts are applied to the developer's own code. Parallware Trainer is a step forward in this respect, and it could also be integrated into third-party web-based training environments.

III. PARALLWARE TRAINER

Parallware Trainer [1] is a new interactive commercial tool for high-productivity HPC education and training using OpenMP 4.5 and OpenACC 2.5. It enables experiential learning by providing an interactive, real-time GUI with editor capabilities to assist in the design and implementation of parallel code. Powered by the hierarchical classification engine of Parallware technology, it discovers parallelism using parallel patterns, and implements those patterns using the OpenMP 4.5 and OpenACC 2.5 standards (see the video tutorials How to use Parallware Trainer, available at www.parallware.com).

JOURNAL OF LATEX CLASS FILES, VOL. 14, NO. 8, AUGUST 2015 3

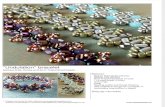

Fig. 3. Main screen of the GUI of Parallware Trainer. Layout composed of five panels: 1) Project manager, 2) Source code editor, 3) Parallel code editor, 4) Execution console, and 5) Parallware console. When clicking on a gutter attached to a scope (e.g., function, loop), the GUI shows a window that enables the user to provide additional hints to control the behaviour of the Parallware core technology.

A. Graphical User Interface (GUI)

The layout of the GUI of Parallware Trainer is shown in Fig. 3. It provides an environment for editing, compiling and executing sequential and OpenMP/OpenACC-enabled parallel code. The panels of the main screen are described in detail below.

1) Project Manager: Handle multiple Parallware Trainer projects, and drag-and-drop the C/C++/Fortran source code files that compose your projects. A Parallware Trainer project consists of a directory with the tree structure shown in Fig. 4a. It separates the source code (src), object files (obj), executables (bin), external libraries (lib), external header files (inc), and other resources such as input data files (res). The project directory also contains an automatically generated Makefile (see file ATMUX.make in Fig. 4a), which provides targets to clean, build and run the code of the project (see an excerpt of ATMUX.make in Fig. 4b). Note that the project directory is self-contained, and all the targets can also be executed from a Linux terminal, outside the Parallware Trainer GUI.

2) Source Code Editor: Edit multiple source code files of the active project. The GUI provides syntax highlighting for C/C++/Fortran. Each scope of the source code (e.g., functions, loops) has an attached gutter that enables the user to supply hints for controlling the analyses performed by Parallware core technology. As shown in Fig. 3, the user can select the parallel programming standard (OpenMP or OpenACC; default OpenMP), the device (CPU, GPU or PHI; default CPU), and the parallel programming paradigm (Loop, Offload, Task or SIMD; default Loop). The GUI also allows the user to select among three implementations of parallel reductions widely used in the CORAL benchmarks and the NAS Parallel Benchmarks (NPB): Atomic access, Built-in reduction and Variable privatization (see details in [4]). Note that unsupported combinations of hints are conveniently reported to the user.
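To give a feel for what the four paradigm hints mean, the sketch below writes the same loop (a hypothetical saxpy kernel, y = a*x + y) with one OpenMP 4.5 construct per paradigm. This paradigm-to-construct mapping is our own assumption for illustration; the code Parallware Trainer actually generates for each hint may differ. Compilers without OpenMP ignore the pragmas, and a target region with no offload device available falls back to the host.

```c
#include <stddef.h>

/* Hypothetical saxpy kernels; the paradigm-to-construct mapping is an assumption. */

/* Loop paradigm: worksharing loop across a team of CPU threads. */
void saxpy_loop(size_t n, float a, const float *x, float *y) {
    #pragma omp parallel for
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];
}

/* Offload paradigm: map the data to an accelerator and run the loop there. */
void saxpy_offload(size_t n, float a, const float *x, float *y) {
    #pragma omp target teams distribute parallel for map(to: x[0:n]) map(tofrom: y[0:n])
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];
}

/* Task paradigm: one thread splits the iteration space into tasks (taskloop). */
void saxpy_task(size_t n, float a, const float *x, float *y) {
    #pragma omp parallel
    #pragma omp single
    #pragma omp taskloop
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];
}

/* SIMD paradigm: ask the compiler to vectorize the loop within one thread. */
void saxpy_simd(size_t n, float a, const float *x, float *y) {
    #pragma omp simd
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];
}
```

All four variants compute the same result; they differ in where and how the iterations are distributed.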

3) Parallel Code Editor: Handle multiple parallel versions of the source code in the active tab of the source code editor (see the version pw_atmux.c of the sequential code atmux.c in Fig. 3). By default, Parallware Trainer creates a parallel version automatically generated by Parallware core technology (see Fig. 3, version named pw_atmux.c). The user can create additional parallel versions of the same sequential code (see Fig. 3, version named atmux_synchopt_manual.c). Such versions can be edited to fine-tune the OpenMP/OpenACC pragmas and clauses as needed to improve the performance of the parallel code. Finally, note that the GUI requests additional information from the user as needed (see the checklist at the bottom of the editor of file pw_atmux.c).

4) Execution Console: The GUI provides two execution profiles (see Fig. 3): Sequential, to build and run the code using one thread; and Parallel, to enable OpenMP/OpenACC capabilities and run the code using multiple threads. The user can choose the preferred compiler suite (e.g., GCC, ICC, PGCC) and specify the flags needed to build and run the code.

5) Parallware Console: The user can browse the messages reported by Parallware core technology after analyzing the source code in the active tab of the source code editor. As shown in Fig. 3, Parallware reports the parallel patterns found in each loop, giving details about the source code instructions, variables and operators involved in the pattern (see Fig. 3, loop at line 9, message Parallel sparse reduction on variable 'y'). It also reports a ranking of the applicable parallelization strategies, the top-ranked strategy being the one automatically selected and implemented (see the sparse accumulation at line 16, protected with an OpenMP atomic pragma). The resulting OpenMP/OpenACC pragmas inserted by Parallware can be studied in the parallel code editor.

Fig. 4. Internals of Parallware Trainer projects: (a) tree view (project ATMUX); (b) Makefile (excerpt of ATMUX.make).
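The following is a hand-written sketch of a loop with the shape described for the ATMUX sample, assuming (as in the SPARSKIT routine of the same name) that ATMUX computes y = A^T x for a sparse matrix A stored in CSR format. The function name and signature are hypothetical, and the code shipped with Parallware Trainer may differ. Each row i scatters its contributions into y through the column indices, so different rows can update the same element of y: a Parallel Sparse Reduction on y, here protected with an atomic update, as in the top-ranked strategy mentioned above.

```c
/* Sketch assuming SPARSKIT-style ATMUX semantics (hypothetical signature):
   y = A^T * x, with A an nrows x ncols matrix in CSR format
   (row_ptr[nrows + 1], col_idx[nnz], val[nnz]). */
void atmux_sketch(int nrows, int ncols, const int *row_ptr, const int *col_idx,
                  const double *val, const double *x, double *y) {
    for (int j = 0; j < ncols; j++)
        y[j] = 0.0;

    #pragma omp parallel for schedule(static)
    for (int i = 0; i < nrows; i++) {
        for (int k = row_ptr[i]; k < row_ptr[i + 1]; k++) {
            /* Different rows may share a column index: sparse reduction on y. */
            #pragma omp atomic update
            y[col_idx[k]] += val[k] * x[i];
        }
    }
}
```

For example, for the 2x2 matrix A = [[1, 2], [0, 3]] (row_ptr = {0, 2, 3}, col_idx = {0, 1, 1}, val = {1, 2, 3}) and x = {1, 1}, the call yields y = {1, 5}, i.e., the column sums of A.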

We close the description of the GUI of Parallware Trainer by mentioning that the tool ships with a tutorial and several self-learning examples of increasing technical complexity. The snapshots shown in Fig. 5 present four well-known sample codes with very different technical features (e.g., parallel pattern, unbalanced workload, arithmetic intensity). For the sake of clarity, a list of exercises proposed to the student for the sample code ATMUX is also presented.

B. Technological Roadmap for 2017-2018

Early prototypes of Parallware Trainer have been tested in PRACE PATC courses at Barcelona Supercomputing Center (BSC) since 2015. Since February 2017 we have been conducting an early access program of Parallware Trainer to find the product-market fit. At the time of writing, the program has 50 participants from 7 supercomputing centers (OLCF, NERSC, BSC, CESGA, LRZ, EPCC, TACC), 26 international universities and 7 companies or other organizations. In addition, key opinion leaders¹ are already supporting the rolling launch of Parallware Trainer at SC17. The feedback received from the HPC community reinforces our vision that Parallware Trainer may become a key enabler in the STEM field for self-learning of parallel programming in computer science, mathematics, physics and other disciplines, at both undergraduate and PhD levels.

The technological roadmap of Parallware Trainer is guided by best practices in parallel programming with OpenMP and OpenACC. We have manually analyzed the OpenMP/OpenACC implementations of well-known benchmark suites [4], namely the CORAL Benchmarks [6], the NAS Parallel Benchmarks [7] and ORNL's XRayTrace miniapp [8]. As a result, Parallware Trainer currently supports:

• The most popular parallel patterns, namely Parallel Forall, Parallel Scalar Reduction and Parallel Sparse Reduction (see details in [4]).

• The parallel programming paradigms Loop and Offload for modern CPU devices (e.g., Intel Xeon, IBM Power) and NVIDIA GPUs.

• OpenMP and OpenACC implementations of parallel scalar/sparse reductions using the Atomic access, Built-in reduction and Variable privatization approaches (see more details in [4]).
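The three reduction approaches listed above can be sketched in C with OpenMP 4.5 for a generic sparse reduction out[idx[i]] += w[i]. This is our own minimal illustration with hypothetical names, not the code Parallware Trainer generates: Atomic access protects each individual update; Built-in reduction relies on the OpenMP 4.5 array-section reduction clause; and Variable privatization gives every thread its own zero-initialized copy of the output, which is merged into out after all threads finish accumulating.

```c
#include <stdlib.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Hypothetical kernels illustrating the three reduction approaches. */

/* 1) Atomic access: shared output, each colliding update is made atomic. */
void sparse_red_atomic(int n, const int *idx, const double *w, double *out) {
    #pragma omp parallel for schedule(static)
    for (int i = 0; i < n; i++) {
        #pragma omp atomic update
        out[idx[i]] += w[i];
    }
}

/* 2) Built-in reduction: OpenMP 4.5 array-section reduction over out[0:m]. */
void sparse_red_builtin(int n, const int *idx, const double *w, int m, double *out) {
    #pragma omp parallel for schedule(static) reduction(+ : out[0:m])
    for (int i = 0; i < n; i++)
        out[idx[i]] += w[i];
}

/* 3) Variable privatization: per-thread copies, then an explicit merge.
      Returns -1 if the private copies cannot be allocated, 0 otherwise. */
int sparse_red_private(int n, const int *idx, const double *w, int m, double *out) {
    int nt = 1;
#ifdef _OPENMP
    nt = omp_get_max_threads();
#endif
    /* One zero-initialized copy of the output per thread. */
    double *priv = calloc((size_t)nt * (size_t)m, sizeof *priv);
    if (priv == NULL)
        return -1;

    #pragma omp parallel num_threads(nt)
    {
        int tid = 0;
#ifdef _OPENMP
        tid = omp_get_thread_num();
#endif
        double *mine = priv + (size_t)tid * (size_t)m;

        /* Phase 1: each thread updates only its own copy; no synchronization. */
        #pragma omp for schedule(static)
        for (int i = 0; i < n; i++)
            mine[idx[i]] += w[i];
        /* Implicit barrier: all private copies are complete here. */

        /* Phase 2: merge; each out[j] is written by exactly one thread. */
        #pragma omp for schedule(static)
        for (int j = 0; j < m; j++)
            for (int t = 0; t < nt; t++)
                out[j] += priv[(size_t)t * (size_t)m + j];
    }
    free(priv);
    return 0;
}
```

The trade-off in this sketch: Atomic access needs no extra memory but pays for every update with synchronization, while the other two approaches typically use extra memory proportional to the number of threads times m in exchange for synchronization-free accumulation.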

The HPC race to build (pre-)exascale supercomputers by 2024 is leading to the design of increasingly complex hardware and software stacks. From a hardware perspective, the technological roadmap of Parallware Trainer is aligned with the US Exascale Computing Project (ECP) [9] and will also support the Intel Xeon Phi accelerator. From a software perspective, we will add support for the Task and SIMD parallel programming paradigms, which are expected to play an increasingly important role in future (pre-)exascale supercomputers. Note that all of these options already appear in the snapshot of Parallware Trainer shown in Fig. 3.

¹ (1) Fernanda Foertter (ORNL), recently elected SIG HPC Education Vice Chair, co-designer of the technical features and the GUI of Parallware Trainer; (2) Xavier Martorell (BSC), promoter of the early use of Parallware Trainer in PRACE PATC courses at BSC; and (3) Dirk Pleiter (JSC), helping to increase awareness of Parallware Trainer at SC17.

Fig. 5. Tutorial and sample examples shipped with Parallware Trainer: (a) sample codes; (b) exercises proposed for ATMUX.

IV. CASE STUDY: EDANYA GROUP

As part of the early access program of Parallware Trainer, we organized a GPU Hackathon at the Supercomputing Center of Galicia (CESGA) to collect direct feedback on the use of the tool for self-learning of parallel programming with OpenMP and OpenACC (see http://www.appentra.com/cesgahack/). Hereafter, we present the self-learning experience of a two-person team from the EDANYA group of the University of Malaga (Spain).

The goal of the team was to learn how to parallelize a family of models for the simulation of geophysical flows. Among them, the code Tsunami-HySEA [10] has become very popular in the tsunami modeling community. Tsunami-HySEA is the numerical model of the HySEA family specifically designed for earthquake-generated tsunami simulations. It combines robustness, reliability and good accuracy in a GPU implementation that runs faster than real time. The Tsunami-HySEA model implements, within a single code, the three stages of an earthquake-generated tsunami: generation, propagation and coastal inundation. In the generation stage, Okada's fault deformation model is used to predict the initial bottom deformation, which is transmitted instantaneously to the sea surface, generating the tsunami wave. Propagation and coastal inundation are modeled by the well-known shallow-water PDE system, which is discretized by means of a second-order finite volume scheme. Tsunami-HySEA has been adopted as the official code for the Spanish and Italian tsunami early warning systems and, recently, has been successfully evaluated for the National Tsunami Hazard Mitigation Program (U.S.) and tested at the National Center for Tsunami Research (NCTR) of the National Oceanic and Atmospheric Administration (NOAA).

A. Preparation of the GPU Hackathon

The GPU Hackathon at CESGA was aimed at R&D groups responsible for developing simulation codes written in C/C++/Fortran. The EDANYA group presented Tsunami-HySEA, a C++ code that approximates the shallow-water PDE system using a second-order finite volume method. An interview with Prof. Manuel Castro, member of the EDANYA team and responsible for the tsunami modeling project, established the main goal of the team: develop an OpenACC version and compare its performance with the existing CUDA version.

The conversations also revealed that the Tsunami-HySEA code is too complex for a two-person team to parallelize in a 3-day GPU Hackathon. As a result, the EDANYA team was asked to write a miniapp containing a simplified version of Tsunami-HySEA. As shown in Table I, the miniapp is a C code that focuses on the propagation of the tsunami in a 2D domain. It was designed to retain both the physics and the complexity of Tsunami-HySEA. The miniapp reduces the dimension of the problem by one, allowing more resolution in the vertical domain while keeping running times reasonably low. Both codes implement the same hydrostatic shallow water model but use different bathymetry input data. The speedups obtained with the miniapp are therefore expected to be representative of those achievable with Tsunami-HySEA.

TABLE I
COMPARISON OF THE MINIAPP USED BY THE EDANYA TEAM WITH RESPECT TO THE REAL CODE TSUNAMI-HYSEA.

| | Tsunami-HySEA | Miniapp |
|---|---|---|
| Model | Hydrostatic shallow water | Hydrostatic shallow water |
| Tsunami features | Generation, propagation, inundation | Propagation |
| Domain | 3D | 2D |
| No. layers | 1 | 2 |
| Numerical scheme | Finite volumes | Finite volumes |
| No. volumes | 10·10⁶ to 60·10⁶ | 10·10³ to 60·10⁴ |
| Bathymetry input | Real world | Simple math function |
| Programming language | C++ | C |

B. 1-Day Workshop: The Hardware-Software HPC Landscape

The GPU Hackathon was aimed at C/C++/Fortran developers of scientific codes. As no prior knowledge of parallel programming concepts was required, a 1-day workshop was organized to give an overview of the HPC field from a hardware and software perspective. The talks introduced the basics of the CPU+GPU parallel architecture before describing the pragma-based parallel programming standards OpenMP 4.5 and OpenACC 2.5 (see PDFs of the talks at http://www.appentra.com/cesgahack/). At the end of the day, the teams were asked to present their projects, which proved to be a good way to foster collaboration between the participants and the mentors.

C. 3-Day GPU Hackathon

The EDANYA team consisted of two people: (1) a PhD-level developer with experience in GPU programming with CUDA, and (2) a PhD student without prior knowledge of parallel programming. The activities carried out by the team during the 3 days were as follows:

1) Day 1: Set up the development environment at CESGA, with an introduction to the use of the FinisTerrae II supercomputer by CESGA staff. Following suggestions by the organizers of the GPU Hackathon at Jülich Supercomputing Centre (JSC), CESGA staff also gave an introduction to profiling scientific applications with GNU gprof. The EDANYA group also showed high interest in learning how to use the Git version control system for collaborative development. As a result, the activity of the team during day 1 focused mainly on creating a Git repository on bitbucket.org and on profiling the miniapp with gprof to identify the hotspots (see Fig. 6). For the sake of clarity, pseudocode of the miniapp is shown in Listing 1. The function main() contains the time-iteration loop, so the team identified calcula_iteracion() as the hotspot to work on. Note that calcula_Dmp() and reconstruccion_o2() are called during each invocation of calcula_iteracion().

Fig. 6. Profiling of the miniapp of the EDANYA team using gprof.

Listing 1. Pseudocode of the OpenMP-enabled EDANYA miniapp.

     1  void main() {
     2    #pragma omp parallel shared(var0, var1, varn, x, reconstructions, npar, ncapas, dx, dt, tiempo_actual, heps)
     3    {
     4      do {
     5        var1 = calcula_iteracion(var0, dt);
     6        dt = calcula_dt(var0);
     7        #pragma omp barrier
     8      } while (tiempo_actual < tiempo_final);
     9    }
    10  }
    11
    12  void calcula_iteracion(var0) {
    13    for (int l = 0; l < order; l++) {
    14      var1 = 0;
    15      reconstructions, var1 = reconstruye(var0);
    16
    17      // Pattern: Parallel Sparse Reduction
    18      // (variable 'var1')
    19      #pragma omp for schedule(static)
    20      for (int i = 0; i < npar; i++) {
    21        im = f(i);
    22        ip = g(i);
    23        fluxesm, fluxesp = calcula_Dmp(reconstructions, ncapas, dt, heps);
    24        #pragma omp atomic update
    25        var1[im] -= fluxesm;
    26        #pragma omp atomic update
    27        var1[ip] -= fluxesp;
    28      }
    29
    30      // Pattern: Parallel Forall (variable 'var1')
    31      #pragma omp for schedule(static)
    32      for (int i = 0; i < npar; i++) {
    33        var1 = rk(var0, var1, varn);  // Runge-Kutta
    34      }
    35
    36      #pragma omp barrier
    37    }
    38  }

2) Day 2: The Appentra staff gave an introduction to the parallel patterns used in Parallware Trainer. The most widely used patterns were described: Parallel Forall, Parallel Scalar Reduction and Parallel Sparse Reduction (see details in [4]). The team was asked to describe the behaviour of the miniapp in terms of these parallel patterns. The annotations to calcula_iteracion() in Listing 1 exhibit one parallel sparse reduction on variable var1 (see loop for i, lines 20-28) and one parallel forall on variable var1 (see loop for i, lines 32-34).

Next, the EDANYA team was instructed to use Parallware Trainer to learn how to parallelize parallel sparse reductions with OpenMP 4.5, using as a guide the sample code ATMUX shown in the source code editor of Fig. 3. By clicking on the gutter attached to the loop for i (Fig. 3, lines 9-16), the team selected OpenMP for CPU using the loop paradigm (see #pragma pw, Fig. 3, line 8). Finally, the appropriate OpenMP pragmas were added to the miniapp (see Listing 1, pragma for at line 19, and pragmas atomic at lines 24 and 26).

TABLE II
EXECUTION TIME FOR DIFFERENT NUMBERS OF FINITE VOLUMES. RESULTS FOR OPENMP AND OPENACC.

Each cell gives the execution time followed, in parentheses, by the speedup with respect to the 1-thread OpenMP run; column headers give the problem size (number of finite volumes).

| Configuration | 10000 | 20000 | 50000 | 100000 |
|---|---|---|---|---|
| OpenMP 3.1 (multicore), 1 thread | 65.28 (–) | 260.03 (–) | 1839.99 (–) | 7276.63 (–) |
| OpenMP 3.1 (multicore), 2 threads | 42.07 (1.55×) | 163.56 (1.59×) | 1043.50 (1.76×) | 4420.28 (1.65×) |
| OpenMP 3.1 (multicore), 4 threads | 19.22 (3.40×) | 75.11 (3.46×) | 486.22 (3.78×) | 2093.91 (3.48×) |
| OpenMP 3.1 (multicore), 6 threads | 13.15 (4.96×) | 51.50 (5.05×) | 321.65 (5.72×) | 1418.75 (5.13×) |
| OpenMP 3.1 (multicore), 8 threads | 10.03 (6.51×) | 39.07 (6.66×) | 243.21 (7.57×) | 1072.03 (6.79×) |
| OpenACC 2.5 (GPU) | 26.20 (2.49×) | 97.67 (2.66×) | 597.96 (3.08×) | 1280.22 (5.68×) |

To complete the OpenMP implementation, the team proceeded as follows: (1) parallelization of the parallel forall pattern (Listing 1, pragma for at line 31); and (2) minimization of the parallelization overhead, in particular the creation and destruction of threads (Listing 1, pragma parallel at line 2, pragmas barrier at lines 7 and 36). The final OpenMP parallel source code is represented by the pseudocode of Listing 1.
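The overhead-minimization step can be sketched as follows. This is a simplified, runnable stand-in for Listing 1, not the EDANYA code: the 1D flux, the array names and the fixed step count (used instead of the tiempo_actual < tiempo_final test) are assumptions. A single parallel region encloses the whole time loop, so the thread team is created once; the worksharing loops inside the helper are orphaned and bind to that region, and their implicit barriers order the phases of each step, playing the role of the explicit barriers in Listing 1.

```c
#include <stdlib.h>

/* One time step (hypothetical 1D model): sparse reduction over edges, then a
   forall update of cells. The 'omp for' constructs are orphaned: they bind to
   the caller's parallel region, or run serially if there is none. */
static void step_sketch(int ncells, double *u, double *acc, double dt) {
    #pragma omp for schedule(static)
    for (int c = 0; c < ncells; c++)
        acc[c] = 0.0;

    /* Pattern: Parallel Sparse Reduction on acc (edge e touches cells e and e+1). */
    #pragma omp for schedule(static)
    for (int e = 0; e < ncells - 1; e++) {
        double flux = 0.5 * (u[e] - u[e + 1]);   /* hypothetical flux */
        #pragma omp atomic update
        acc[e] -= flux;
        #pragma omp atomic update
        acc[e + 1] += flux;
    }

    /* Pattern: Parallel Forall on u (explicit Euler update). */
    #pragma omp for schedule(static)
    for (int c = 0; c < ncells; c++)
        u[c] += dt * acc[c];
}

/* Returns -1 if the work array cannot be allocated, 0 otherwise. */
int simulate_sketch(int ncells, double *u, int nsteps, double dt) {
    double *acc = malloc((size_t)ncells * sizeof *acc);
    if (acc == NULL)
        return -1;

    /* Threads are created once for the whole time loop. */
    #pragma omp parallel
    {
        /* 'step' is declared inside the region, so each thread has its own copy
           and all threads encounter the same sequence of worksharing loops. */
        for (int step = 0; step < nsteps; step++)
            step_sketch(ncells, u, acc, dt);
    }

    free(acc);
    return 0;
}
```

Because every thread executes the same number of steps, all threads reach each worksharing loop in the same order, which OpenMP requires.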

The execution times and speedups of Table II were measured on the Atlantico cluster of the EDANYA group at the University of Malaga (Spain). The hardware setup is an Intel Xeon E5-2620 v2 CPU @ 2.10GHz and NVIDIA GeForce GTX Titan Black X GPUs. The compiler flags for GCC 6.3 are -O3 -march=native -fopenmp. The experimental results for OpenMP 3.1 on the multicore processor showed that the Atomic access implementation is easy to understand, easy to apply to the miniapp and easy to maintain, and that it scales well at every problem size tested (the speedup on 8 threads is at least 6.51× for 10000 up to 100000 finite volumes). Given the good performance of the OpenMP-enabled Atomic access implementation, the EDANYA team decided to defer the study of the alternative OpenMP implementation, Variable privatization, until they were back at their office. Finally, the team moved on to OpenACC during the last day of the hackathon.
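The speedups in Table II follow the usual definition, with the 1-thread OpenMP run as the baseline (T_1) and T_p the time on p threads. The parallel efficiency E_p below is a quantity we derive from the table for illustration; it is not reported in the original experiments. For 8 threads at the smallest and largest problem sizes:

```latex
\begin{aligned}
S_p &= \frac{T_1}{T_p}, & E_p &= \frac{S_p}{p},\\
S_8 &= \frac{65.28}{10.03} \approx 6.51, & E_8 &\approx \frac{6.51}{8} \approx 0.81 \quad (10000\ \text{volumes}),\\
S_8 &= \frac{7276.63}{1072.03} \approx 6.79, & E_8 &\approx \frac{6.79}{8} \approx 0.85 \quad (100000\ \text{volumes}).
\end{aligned}
```

The GPU speedups use the same baseline, e.g. 7276.63 / 1280.22 ≈ 5.68 for 100000 volumes.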

3) Day 3: The team successfully studied the OpenACC implementation of parallel sparse reductions using Parallware Trainer and the sample code ATMUX, but had no time left to implement and test an OpenACC-enabled version of the miniapp. The last day finished with a session where the EDANYA team, and all the other teams, described their progress during the hackathon. The EDANYA team presented a clear roadmap towards developing an OpenACC version of their miniapp after the hackathon.

D. Ongoing work after the GPU Hackathon

Since the hackathon, the EDANYA team has continued working on an OpenACC parallel implementation of the simulation code. Listing 2 shows the OpenACC-enabled miniapp developed so far. The hotspot of the program is a Parallel Sparse Reduction, where an Atomic access implementation has been applied using the pragmas parallel loop (see line 21) and atomic (lines 26 and 28). Data transfers have been optimized so that the arrays reconstructions and var1 are copied to GPU memory at the beginning of the program (see pragma data copyin, line 2). Later, each execution of the parallel sparse reduction is guarded by update pragmas that copy the latest values of the arrays to GPU memory (see update device, line 20) and the new values of array var1 back to host memory (see update host, line 31).

Listing 2. Pseudocode of the OpenACC-enabled EDANYA miniapp.

     1  void main() {
     2    #pragma acc data copyin(reconstructions, var1)
     3    {
     4      do {
     5        var1 = calcula_iteracion(var0, dt);
     6        dt = calcula_dt(var0);
     7      } while (tiempo_actual < tiempo_final);
     8    }
     9    #pragma acc routine(calcula_Dmp) seq
    10    #pragma acc routine(reconstruye) seq
    11  }
    12
    13  void calcula_iteracion(var0) {
    14    for (int l = 0; l < order; l++) {
    15      var1 = 0;
    16      reconstructions, var1 = reconstruye(var0);
    17
    18      // Pattern: Parallel Sparse Reduction
    19      // (variable 'var1')
    20      #pragma acc update device(reconstructions, var1)
    21      #pragma acc parallel loop
    22      for (int i = 0; i < npar; i++) {
    23        im = f(i);
    24        ip = g(i);
    25        fluxesm, fluxesp = calcula_Dmp(reconstructions, ncapas, dt, heps);
    26        #pragma acc atomic update
    27        var1[im] -= fluxesm;
    28        #pragma acc atomic update
    29        var1[ip] -= fluxesp;
    30      }
    31      #pragma acc update host(var1)
    32
    33      for (int i = 0; i < npar; i++) {
    34        var1 = rk(var0, var1, varn);  // Runge-Kutta
    35      }
    36    }
    37  }
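The data-management scheme of Listing 2 can be sketched in runnable C as below. The array names, the hypothetical per-edge fluxes derived from recon[i], and the fixed step count are assumptions for illustration, not the EDANYA code. The data region allocates and copies the arrays to the device once; inside the time loop, update device pushes host-side changes, the parallel loop performs the sparse reduction with atomic updates on arrays already present on the device, and update host brings var1 back for the host-side Runge-Kutta step. Compilers without OpenACC support ignore the pragmas, so the same code also runs sequentially.

```c
/* Edge i connects cells im[i] and ip[i]; the fluxes computed from recon[i]
   stand in for what calcula_Dmp() would return (hypothetical). */
void acc_sparse_steps(int nsteps, int npar, int ncells, double *recon,
                      double *var1, const int *im, const int *ip) {
    /* Allocate and copy the arrays to the device once, before the time loop. */
    #pragma acc data copyin(recon[0:npar], var1[0:ncells], im[0:npar], ip[0:npar])
    {
        for (int step = 0; step < nsteps; step++) {
            /* Host-side work (stand-in for reconstruye()): reset var1. */
            for (int c = 0; c < ncells; c++)
                var1[c] = 0.0;

            /* Push the latest host values to the device copies. */
            #pragma acc update device(recon[0:npar], var1[0:ncells])

            /* Pattern: Parallel Sparse Reduction on var1 (Atomic access). */
            #pragma acc parallel loop present(recon[0:npar], var1[0:ncells], im[0:npar], ip[0:npar])
            for (int i = 0; i < npar; i++) {
                double fluxesm = recon[i];
                double fluxesp = -recon[i];
                #pragma acc atomic update
                var1[im[i]] -= fluxesm;
                #pragma acc atomic update
                var1[ip[i]] -= fluxesp;
            }

            /* Bring the reduced values back for the host-side Runge-Kutta step. */
            #pragma acc update host(var1[0:ncells])
        }
    }
}
```

With two edges (0,1) and (1,2) and recon = {1, 2}, var1 ends as {-1, -1, 2}; the contributions sum to zero, as expected for a conservative flux.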

The execution times and speedups of Table II show that, for the largest problem size (100000 finite volumes), this OpenACC-enabled miniapp outperforms the OpenMP version running on 6 threads (GPU speedup of 5.68× versus CPU speedup of 5.13×), although the 8-thread run (6.79×) is still faster; for the smaller problem sizes, the GPU version is slower than the OpenMP version on 4 or more threads. This performance motivates further improvements to the OpenACC code. First, stencil computations need to be optimized by removing complex control flow (e.g., if-then statements inside loops) used to avoid out-of-bounds memory accesses. Second, memory management needs to be optimized by allocating private variables (especially arrays) directly in GPU memory, thus avoiding CPU-GPU data transfers in each iteration of the time-iteration loop. The EDANYA team is currently addressing these issues to achieve a fully parallel version of the code with minimal data transfer. Overall, the collaboration with the EDANYA group has been very successful, and both parties have agreed to continue it during 2017-2018.
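One common way to remove boundary if-then statements from stencil loops is to pad the array with ghost cells, so that every update uses the same branch-free stencil. The sketch below uses a hypothetical 1D three-point average with zero values outside the domain and shows both versions; it illustrates a generic technique and is not necessarily the change the EDANYA team will make.

```c
/* Branchy version: checks the domain bounds on every iteration. */
void stencil_branchy(int n, const double *u, double *out) {
    for (int i = 0; i < n; i++) {
        double left = (i > 0) ? u[i - 1] : 0.0;
        double right = (i < n - 1) ? u[i + 1] : 0.0;
        out[i] = (left + u[i] + right) / 3.0;
    }
}

/* Ghost-cell version: upad has n + 2 entries; upad[0] and upad[n + 1] hold the
   boundary values (here 0.0) and upad[1..n] hold the data. The loop body has
   no branches, which suits GPU execution. */
void stencil_ghost(int n, const double *upad, double *out) {
    #pragma acc parallel loop copyin(upad[0:n + 2]) copyout(out[0:n])
    for (int i = 0; i < n; i++)
        out[i] = (upad[i] + upad[i + 1] + upad[i + 2]) / 3.0;
}
```

The padded layout costs two extra elements per array (per dimension, in 2D) but lets all threads follow the same instruction stream.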


V. CONCLUSIONS AND FUTURE WORK

This paper presents evidence that Parallware Trainer has the potential to become an effective tool for experiential learning of parallel programming using OpenMP 4.5 and OpenACC 2.5. Powered by Parallware core technology, the tool eases the discovery of the most popular parallel patterns used in OpenMP/OpenACC-enabled scientific applications, namely Parallel Forall, Parallel Scalar Reduction and Parallel Sparse Reduction. The GUI supports implementing, building, running and benchmarking parallel patterns on multicore CPUs (e.g., Intel Xeon, IBM Power) and accelerator devices (e.g., NVIDIA GPU, Intel Xeon Phi).

The experience of the EDANYA team at the GPU Hackathon at CESGA showed that our methodology based on parallel patterns is: (1) simple, as STEM people without prior knowledge of parallel programming with OpenMP/OpenACC succeeded in discovering parallelism in sparse codes; and (2) powerful, as the parallel patterns describe how to rewrite sequential code into efficient OpenMP/OpenACC-enabled parallel code. Starting from scratch and in only 3 days, the EDANYA team implemented a scalable OpenMP parallel version of their miniapp, and defined the roadmap for the development of an OpenACC version for NVIDIA GPUs.

As future work, we plan to continue validating Parallware Trainer and its pattern-based methodology through more hackathons and control groups. We will improve the GUI for self-training, providing basic, intermediate and advanced-level courses as well as progress metrics to enable self-evaluation. To widen our target audience, we will add support for Fortran, for SIMD execution, and for tasking with asynchronous execution, which are expected to be key in the upcoming (pre-)exascale supercomputers. Finally, we also plan to conduct proof-of-concept experiments using a new SaaS product based on Parallware Trainer.

ACKNOWLEDGMENT

This research has been partially supported by the Spanish Government and FEDER through Research project MTM2015-70490-C2-1-R and Andalusian Government Research project P11-FQM-8179. We also thank the Supercomputing Centre of Galicia (CESGA) for supporting the organization of the GPU Hackathon and for providing access to the FinisTerrae supercomputer.

REFERENCES

[1] Parallware Trainer, http://www.parallware.com/, Sep. 2017.

[2] Swiss National Supercomputing Center (CSCS), Directive Based GPU Programming: OpenACC and OpenMP, http://www.cscs.ch/-/events/event_detail/index.html?tx_seminars_pi1%5BshowUid%5D=161, Sep. 2017.

[3] NVIDIA, Accelerate Applications on GPUs with OpenACC Directives, https://developer.nvidia.com/how-to-openacc, Sep. 2017.

[4] Manuel Arenaz, Oscar Hernandez, and Dirk Pleiter, The Technological Roadmap of Parallware and its Alignment with the OpenPOWER Ecosystem, International Workshop on OpenPOWER for HPC (IWOPH17), co-located with ISC17, 2017.

[5] Krste Asanovic, Rastislav Bodik, James Demmel, Tony Keaveny, Kurt Keutzer, John Kubiatowicz, Nelson Morgan, David Patterson, Koushik Sen, John Wawrzynek, David Wessel, and Katherine Yelick, A view of the parallel computing landscape, Commun. ACM 52, 10 (October 2009), 56–67, DOI: https://doi.org/10.1145/1562764.1562783.

[6] Department of Energy (DoE), CORAL Benchmark Codes, https://asc.llnl.gov/CORAL-benchmarks/, 2014.

[7] D. H. Bailey, E. Barszcz, J. T. Barton, D. S. Browning, R. L. Carter, L. Dagum, R. A. Fatoohi, P. O. Frederickson, T. A. Lasinski, R. S. Schreiber, H. D. Simon, V. Venkatakrishnan, and S. K. Weeratunga, The NAS Parallel Benchmarks: Summary and Preliminary Results, Proceedings of the 1991 ACM/IEEE Conference on Supercomputing (Supercomputing '91), ACM, 1991, 158–165, DOI: 10.1145/125826.125925, https://www.nas.nasa.gov/publications/npb.html.

[8] Mark Berrill, XRayTrace miniapp, https://code.ornl.gov/mbt/RayTrace-miniapp, 2017.

[9] Exascale Computing Project (ECP), Messina Update: The US Path to Exascale in 16 slides. See Programming Models, Development Environment and Tools (Intel Xeon, IBM Power, Intel Xeon Phi, NVIDIA GPU, OpenMP, OpenACC, task-based, LLVM, FLANG), https://www.hpcwire.com/2017/04/26/messina-update-u-s-path-exascale-15-slides/, last checked July 2017.

[10] M. de la Asuncion, M. J. Castro, E. D. Fernandez-Nieto, J. M. Mantas, S. Ortega, and J. M. Gonzalez-Vida, Efficient GPU implementation of a two waves TVD-WAF method for the two-dimensional one layer shallow water system on structured meshes, Computers & Fluids 80:441–452, 2013.

Manuel Arenaz is CEO at Appentra Solutions and professor at the University of A Coruña (Spain). He holds a PhD in Computer Science from the University of A Coruña (2003) on advanced compiler techniques for automatic parallelization of scientific codes. Recently, he co-founded Appentra Solutions to commercialize products and services that take advantage of Parallware, a new technology for semantic analysis of scientific HPC codes.

Sergio Ortega obtained a Degree in Mathematics at the University of Malaga (2005), a Master's degree from the Department of Mathematical Analysis within the program of Physics and Mathematics (FISYMAT) (2007), and his PhD, "High order finite volume schemes: GPU implementation and its application to the simulation of geophysical flows" (2016). Since 2007 he has worked in the EDANYA group of the University of Malaga.

Ernesto Guerrero-Fernández studied Industrial Engineering at the University of Malaga (2015), followed by a master's degree in Industrial Mathematics at the University of Santiago de Compostela (2017). He is currently pursuing his PhD in applied mathematics with the EDANYA group at the University of Malaga under the supervision of Manuel J. Castro, where he is studying shallow water models and robust numerical algorithms for hyperbolic equations.

Fernanda Foertter is an HPC User Support Specialist and Programmer at the US Department of Energy's (DOE's) Oak Ridge Leadership Computing Facility (OLCF). She is a computer geek interested in quantum chemistry, application development, parallelization and clusters. She was recently elected SIGHPC Education Committee Vice Chair, where she will support the goals of SIGHPC Education to promote increased knowledge and greater interest in the educational and scientific aspects of HPC.