Interpreting the unculturable majority

Transcript of Interpreting the unculturable majority

NATURE METHODS | VOL.4 NO.6 | JUNE 2007 | 479

NEWS AND VIEWS

Interpreting the unculturable majorityLior Pachter

New methods are necessary for the analysis and interpretation of massive amounts of metagenomic data.

Shotgun sequencing for the sampling of genomic fragments from microbial com-munities is yielding unprecedented insights into the genetic basis of interactions between microbes and their environments. The field of genomics is thereby being transformed into metagenomics, where the genomes of many interacting individuals and species are

considered as a whole. Mavromatis and col-leagues1 evaluate the performance of exist-ing genomics tools that have been adapted for the processing of metagenomic data, and highlight problems where new ideas are nec-essary to move the field forward. Such work is essential for realizing the full potential of metagenomics studies2.

The main object of study in metagenom-ics is the metagenome, which consists of the genomic content of a microbial community. Ideally, this includes the complete genome sequences of all the species in a community, details about the genetic variation of individu-als and annotations of genes together with a description of their function. Such a complete genomic view of a microbial community is the basis for quantifying diversity, and for under-standing the complex interactions between communities and their environmental or biological hosts. But because the majority of microbes are unculturable, classical methods for cloning and sequencing the entire genome of every species in a population are unsuitable. In contrast, existing metagenomics tech-nologies relying on environmental shotgun sequencing usually only offer the possibility of sampling from a community. The analysis of genomic samples from multiple (unknown) individuals and species is a challenging prob-lem that requires new computational biology methods and software tools.

The bioinformatics of metagenomics3 is similar to that of comparative genomics, but even though many of the computational techniques of comparative genomics are relevant, they are not always sufficient. The paper by Mavromatis et al.1, provides an assessment of existing software that has been adapted for use in metagenome analysis, and makes a strong case for the development of new methods, benchmarks and standards.

One of the useful aspects of the article is the construction of simulated data sets for three types of communities. Each faux com-munity consists of a subset of species from 113 sequenced microbes. The communities range from the simple (with few species, one of which is dominant) to more complex com-munities. Reads from the existing distinct isolate genome sequences of the microbes are sampled at random, and are then pro-cessed and compared to the initial assembled genomes. Simulated data sets of this type are useful for benchmarking existing tools, and also for calibrating and validating new methods.

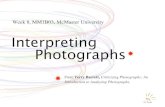

The main steps in the (initial) processing of metagenomic data are summarized in Figure 1. First steps include the assembly of

Lior Pachter is in the Departments of Mathematics and Computer Science, University of California-Berkeley, Berkeley, California 94720, USA.e-mail: [email protected]

Data

Geneprediction

Functionalannotation

AssemblyBinningTETRA

MetaClust

BLAST

PhyloPythia

Phrap

JAZZ

Celera

Glimmer

FGENESB Orpheus

Critica

Arachne

KEGG COGPfam

Figure 1 | The main steps in the processing of metagenomic data. Some of the available software packages and databases are shown. The development of a ‘processing pipeline’ requires making many choices about which steps to perform and which programs to use.

©20

07 N

atur

e P

ublis

hing

Gro

up

http

://w

ww

.nat

ure.

com

/nat

urem

eth

od

s

480 | VOL.4 NO.6 | JUNE 2007 | NATURE METHODS

NEWS AND VIEWS

reads and/or the clustering of reads based on their sequence composition (also known as binning). The choice of strategy depends on the type of community being considered and the amount of strain variation. Mavromatis et al.1 show that although the assemblers they tested were developed for whole genome shotgun assembly of single species, they are generally effective in assembling contigs that are comprised of reads belonging to individ-ual isolate genomes. They also provide guid-ance on the limits of existing assemblers.

Binning can be used either before or after assembly to group together sequences from the same species. The key is to identify genomic signatures, which can be measure-ments of quantities such as dinucleotide fre-quencies4 or combinations of predictors as identified through techniques such as prin-cipal-components analysis5.

The genes of microbes that comprise a community determine the functions of indi-vidual members. It is therefore essential to be able to accurately identify genes and their function from sequence fragments. There is a large amount of software dedicated to micro-bial gene finding, and present strategies for metagenomics gene identification use exist-ing software in combination with searches in databases of functional hierarchies. The results are useful but leave much room for improvement1. What is needed is a statisti-cally sound framework for gene finding that incorporates information about uncertainty in base calls, and integrates the procedures of binning and functional assignment. Gene predictions can then be combined with sta-tistical estimates of diversity6 and evolution-ary parameters7 to yield a complete picture of the community being studied.

The study of microbial systems through environmental shotgun sequencing is only beginning8. In a recent report of the National Research Council9, a group of leading scientists wrote that “The science of metagenomics, only a few years old, will make it possible to investigate microbes in their natural environments, the complex communities in which they normally live. It will bring about a transformation in biology, biomedicine, ecology and biotechnology as profound as that initiated by the invention of the microscope and as important to daily life as the pioneering work of Anton van Leeuwenhoek, Louis Pasteur, James Watson and Francis Crick”.

The massive amount of new metage-nomic data is exciting, and it is tempting to rush to analysis. We believe it is impor-

tant to benchmark existing bioinformat-ics tools, which are being used on a scale and in ways they were not designed for. It is also important to develop new sta-tistically sound analysis methods spe-cifically designed for metagenomic data. Additionally, it is imperative that ‘model metagenomics systems’ be developed (in analogy with model organisms for genet-ics). These should be simple custom-designed communities that are amenable to genetic and environmental manipula-tion. They will be invaluable as test beds for metagenomics tool development and biological discovery.

COMPETING INTERESTS STATEMENTThe author declares no competing financial interests.

1 Mavromatis, K. et al. Nat. Methods 4, 495–500 (2007).

2. Tress, M.L. et al. BMC Bioinformatics 7, 213 (2006).

3. Chen, K. & Pachter, L. PLoS Comp. Biol. 1, e24 (2005).

4. Karlin, S. & Burge, C. Trends Genet. 11, 283–290 (1995).

5. Turnbaugh, P.J. et al. Nature 444, 1027–1131 (2006).

6. Angly, F. et al. BMC Bioinformatics 6, 41 (2005).7. Johnson, P.L.F. & Slatkin, M. Genome Res. 16,

1320–1327 (2006).8. Eisen, J. PLoS Biol. 5, e82 (2007).9. Committee on Metagenomics. (National

Academies Press, 2007).

Deciphering the combinatorial histone codeJoshua M Gilmore & Michael P Washburn

A new mass spectrometry (MS) approach has been developed, allowing combinatorial analysis of histone H3.2 post-translational modifications that may provide the key to unlocking the histone code.

Regulation of eukaryotic gene expression is highly dependent on chromatin structure. The fundamental repeating subunit of chro-matin, the nucleosome, is composed of two pairs of each core histone (H2A, H2B, H3 and H4) and 147 base pairs of DNA wrapped around the histone octamer. Regulation of chromatin structure, and therefore transcrip-tion, is driven by post-translational modifica-tions (PTMs) of the core histones. Histones are modified primarily on their N-terminal tails, and are subject to several modifica-tions including methylation, acetylation, phosphorylation, sumoylation and ubiqui-tination. The combination of these modi-fications appearing on different histones at different times, to regulate cellular processes, is referred to as the ‘histone code’1.

In this issue, Garcia and colleagues from the Kelleher group describe a new approach that permits combinatorial analysis of PTMs within the N-terminal tail of human histone H3.2 using an MS approach combined with hydrophilic interaction chromatography (HILIC)2. Their analysis provides insight

into the most abundant patterns of combi-natorial modifications within the N-termi-nal tail of histone H3.2.

Conventional MS approaches used for PTM analysis to date require digesting the proteins into peptides before analysis. A technique known as collision-induced dissociation is then used to fragment the peptides into smaller fragments for MS analysis. This overall strategy is known as ‘bottom-up’ analysis. As with any approach, bottom-up analysis has its limitations. By digesting an entire population of histone proteins with trypsin, for example, smaller fragments are analyzed, and the diversity of differentially modified whole protein forms is lost (Fig. 1a). The origin of smaller pep-tides from differentially modified forms of a particular protein is challenging to recon-struct from a bottom-up approach.

A more recent approach has been devel-oped for looking at PTMs, in which an intact protein or long portions of proteins, rather than peptides, are introduced into the mass spectrometer. Techniques such as electron

Joshua M. Gilmore and Michael P. Washburn are at the Stowers Institute for Medical Research, 1000 E. 50th St., Kansas City, Missouri 64110, USA.e-mail: [email protected]

©20

07 N

atur

e P

ublis

hing

Gro

up

http

://w

ww

.nat

ure.

com

/nat

urem

eth

od

s